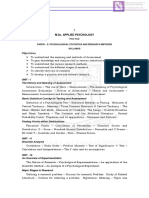

Professional Documents

Culture Documents

Statistics in Psychology

Uploaded by

Kim AnhOriginal Description:

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Statistics in Psychology

Uploaded by

Kim AnhCopyright:

Available Formats

Statistics in Psychology

An Historical Perspective

Second Edition


Michael Cowles

York University, Toronto

LAWRENCE ERLBAUM ASSOCIATES, PUBLISHERS

2001 Mahwah, New Jersey London

Copyright 2001 by Lawrence Erlbaum Associates, Inc.

All rights reserved. No part of this book may be reproduced in any form, by photostat, microform, retrieval system, or any other means, without the prior written permission of the publisher.

Lawrence Erlbaum Associates, Inc., Publishers
10 Industrial Avenue
Mahwah, NJ 07430

Cover design by Kathryn Houghtaling Lacey

Library of Congress Cataloging-in-Publication Data

Cowles, Michael, 1936-
Statistics in psychology: an historical perspective / Michael Cowles.-- 2nd ed.
p. cm.
Includes bibliographical references (p. ) and index.
ISBN 0-8058-3509-1 (c: alk. paper)-ISBN 0-8058-3510-5 (p: alk. paper)
1. Psychology--Statistical methods--History. 2. Social sciences--Statistical methods--History. I. Title.
BF39.C67 2000
150'.7'27--dc21 00-035369

The final camera copy for this work was prepared by the author, and therefore the publisher takes no responsibility for consistency or correctness of typographical style. However, this arrangement helps to make publication of this kind of scholarship possible.

Books published by Lawrence Erlbaum Associates are printed on acid-free paper, and their bindings are chosen for strength and durability.

Printed in the United States of America
10 9 8 7 6 5 4 3 2 1

Contents

Preface
Acknowledgments

1 The Development of Statistics
Evolution, Biometrics, and Eugenics
The Definition of Statistics
Probability
The Normal Distribution
Biometrics
Statistical Criticism

2 Science, Psychology, and Statistics
Determinism
Probabilistic and Deterministic Models
Science and Induction
Inference
Statistics in Psychology

3 Measurement
In Respect of Measurement
Some Fundamentals
Error in Measurement

4 The Organization of Data
The Early Inventories
Political Arithmetic
Vital Statistics
Graphical Methods

5 Probability
The Early Beginnings
The Beginnings
The Meaning of Probability
Formal Probability Theory

6 Distributions
The Binomial Distribution
The Poisson Distribution
The Normal Distribution

7 Practical Inference
Inverse Probability and the Foundations of Inference
Fisherian Inference
Bayes or p < .05?

8 Sampling and Estimation
Randomness and Random Numbers
Combining Observations
Sampling in Theory and in Practice
The Theory of Estimation
The Battle for Randomization

9 Sampling Distributions
The Chi-Square Distribution
The t Distribution
The F Distribution
The Central Limit Theorem

10 Comparisons, Correlations, and Predictions
Comparing Measurements
Galton's Discovery of Regression
Galton's Measure of Co-relation
The Coefficient of Correlation
Correlation - Controversies and Character

11 Factor Analysis
Factors
The Beginnings
Rewriting the Beginnings
The Practitioners

12 The Design of Experiments
The Problem of Control
Methods of Inquiry
The Concept of Statistical Control
The Linear Model
The Design of Experiments

13 Assessing Differences and Having Confidence
Fisherian Statistics
The Analysis of Variance
Multiple Comparison Procedures
Confidence Intervals and Significance Tests
A Note on 'One-Tail' and 'Two-Tail' Tests

14 Treatments and Effects: The Rise of ANOVA
The Beginnings
The Experimental Texts
The Journals and the Papers
The Statistical Texts
Expected Mean Squares

15 The Statistical Hotpot
Times of Change
Neyman and Pearson
Statistics and Invective
Fisher versus Neyman and Pearson
Practical Statistics

References
Author Index
Subject Index

Preface

In this second edition I have made some corrections to errors in algebraic expressions that I missed in the first edition and I have briefly expanded on some sections of the original where I thought such expansion would make the narrative clearer or more useful. The main change is the inclusion of two new chapters: one on factor analysis and one on the rise of the use of ANOVA in psychological research. I am still of the opinion that factor analysis deserves its own historical account, but I am persuaded that the audience for such a work would be limited were the early mathematical contortions to be fully explored. I have tried to provide a brief non-mathematical background to its arrival on the statistical scene.

I realized that my account of ANOVA in the first edition did not do justice to the story of its adoption by psychology, and largely due to my re-reading of the work of Sandy Lovie (of the University of Liverpool, England) and Pat Lovie (of Keele University, England), who always write papers that I wish I had written, I decided to try again. I hope that the Lovies will not be too disappointed by my attempt to summarize their sterling contributions to the history of both factor analysis and ANOVA.

As before, any errors and misinterpretations are my responsibility alone. I would welcome correspondence that points to alternative views.

I would like to give special thanks to the reviewers of the first edition for their kind comments and all those who have helped to bring about the revival of this work. In particular Professor Niels Waller of Vanderbilt University must be acknowledged for his insistent and encouraging remarks. I hope that I have deserved them. My colleagues and many of my students at York University, Toronto, have been very supportive. Those students, both undergraduate and graduate, who have expressed their appreciation for my inclusion of some historical background in my classes on statistics and method have given me enormous satisfaction. This relatively short account is mainly for them, and I hope it will encourage some of them to explore some of these matters further.

There seems to be a slow realization among statistical consumers in psychology that there is more to the enterprise than null hypothesis significance testing, and other controversies to exercise us. It is still my firm belief that just a little more mathematical sophistication and just a little more historical knowledge would do a great deal for the way we carry on our research business in psychology.

The editors and production people at Lawrence Erlbaum, ever sharp and efficient, get on with the job and bring their expertise and sensible advice to the project and I very much appreciate their efforts.

My wife has sacrificed a great deal of her time and given me considerable help with the final stages of this revision and she and my family, even yet, put up with it all. Mere thanks are not sufficient.

Michael Cowles

Acknowledgments

I wish to express my appreciation to a number of individuals, institutions, and publishers for granting permission to reproduce material that appears in this book:

Excerpts from Fisher Box, J. (c) 1978, R. A. Fisher, the life of a scientist, from Scheffé, H. (1959) The analysis of variance, and from Lovie, A. D., & Lovie, P. Charles Spearman, Cyril Burt, and the origins of factor analysis. Journal of the History of the Behavioral Sciences, 29, 308-321. Reprinted by permission of John Wiley & Sons Inc., the copyright holders.

Excerpts from a number of papers by R. A. Fisher. Reprinted by permission of Professor J. H. Bennett on behalf of the copyright holders, the University of Adelaide.

Excerpts, figures and tables by permission of Hafner Press, a division of Macmillan Publishing Company from Statistical methods for research workers by R. A. Fisher. Copyright (c) 1970 by University of Adelaide.

Excerpts from volumes of Biometrika. Reprinted by permission of the Biometrika Trustees and from Oxford University Press.

Excerpts from MacKenzie, D. A. (1981). Statistics in Britain 1865-1930. Reprinted by permission of the Edinburgh University Press.

Two plates from Galton, F. (1885a). Regression towards mediocrity in hereditary stature. Journal of the Anthropological Institute of Great Britain and Ireland, 15, 246-263. Reprinted by permission of the Royal Anthropological Institute of Great Britain and Ireland.

Professor W. H. Kruskal, Professor F. Mosteller, and the International Statistical Institute for permission to reprint a quotation from Kruskal, W., & Mosteller, F. (1979). Representative sampling, IV: The history of the concept in statistics, 1895-1939. International Statistical Review, 47, 169-195.

Excerpts from Hogben, L. (1957). Statistical theory. London: Allen and Unwin; Russell, B. (1931). The scientific outlook. London: Allen and Unwin; Russell, B. (1946). History of western philosophy and its connection with political and social circumstances from the earliest times to the present day. London: Allen and Unwin; von Mises, R. (1957). Probability, statistics and truth. (Second revised English Edition prepared by Hilda Geiringer) London: Allen and Unwin. Reprinted by permission of Unwin Hyman, the copyright holders.

Excerpts from Clark, R. W. (1971). Einstein, the life and times. New York: World. Reprinted by permission of Peters, Fraser and Dunlop, Literary Agents.

Dr D. C. Yalden-Thomson for permission to reprint a passage from Hume, D. (1748). An enquiry concerning human understanding. (In D. C. Yalden-Thomson (Ed.). (1951). Hume, Theory of Knowledge. Edinburgh: Thomas Nelson).

Excerpts from various volumes of the Journal of the American Statistical Association. Reprinted by permission of the Board of Directors of the American Statistical Association.

An excerpt reprinted from Probability, statistics, and data analysis by O. Kempthorne and L. Folks, (c) 1971 Iowa State University Press, Ames, Iowa 50010.

Excerpts from De Moivre, A. (1756). The doctrine of chances: or, A method of calculating the probabilities of events in play. (3rd ed.), London: A. Millar. Reprinted from the edition published by the Chelsea Publishing Co., New York, (c) 1967 with permission and Kolmogorov, A. N. (1956). Foundations of the theory of probability. (N. Morrison, Trans.). Reprinted by permission of the Chelsea Publishing Co.

An excerpt reprinted with permission of Macmillan Publishing Company from An introduction to the study of experimental medicine by C. Bernard (H. C. Greene, Trans.), (c) 1927 (Original work published in 1865) and from Science and human behavior by B. F. Skinner (c) 1953 by Macmillan Publishing Company, renewed 1981 by B. F. Skinner.

Excerpts from Galton, F. (1908). Memories of my life. Reprinted by permission of Methuen and Co.

An excerpt from Chang, W-C. (1976). Sampling theories and sampling practice. In D. B. Owen (Ed.), On the history of statistics and probability (pp. 299-315). Reprinted by permission of Marcel Dekker, Inc. New York.

An excerpt from Jacobs, J. (1885). Review of Ebbinghaus's Ueber das Gedächtnis. Mind, 10, 454-459 and from Hacking, I. (1971). Jacques Bernoulli's Art of Conjecturing. British Journal for the Philosophy of Science, 22, 209-229. Reprinted by permission of Oxford University Press and Professor Ian Hacking.

Excerpts from Lovie, A. D. (1979). The analysis of variance in experimental psychology: 1934-1945. British Journal of Mathematical and Statistical Psychology, 32, 151-178 and Yule, G. U. (1921). Review of W. Brown and G. H. Thomson, The essentials of mental measurement. British Journal of Psychology, 2, 100-107 and Thomson, G. H. (1939) The factorial analysis of human ability. I. The present position and the problems confronting us. British Journal of Psychology, 30, 105-108. Reprinted by permission of the British Psychological Society.

Excerpts from Edgeworth, F. Y. (1887). Observations and statistics: An essay on the theory of errors of observation and the first principles of statistics. Transactions of the Cambridge Philosophical Society, 14, 138-169 and Neyman, J., & Pearson, E. S. (1933b). The testing of statistical hypotheses in relation to probabilities a priori. Proceedings of the Cambridge Philosophical Society, 29, 492-510. Reprinted by permission of the Cambridge University Press.

Excerpts from Cochrane, W. G. (1980). Fisher and the analysis of variance. In S. E. Fienberg, & D. V. Hinckley (Eds.), R. A. Fisher: An Appreciation (pp. 17-34) and from Reid, C. (1982). Neyman - from life. Reprinted by permission of Springer-Verlag, New York.

Excerpts and plates from Philosophical Transactions of the Royal Society and Proceedings of the Royal Society of London. Reprinted by permission of the Royal Society.

Excerpts from Laplace P. S. de (1820). A philosophical essay on probabilities. (F. W. Truscott, & F. L. Emory, Trans.). Reprinted by permission of Dover Publications, New York.

Excerpts from Galton, F. (1889). Natural inheritance, Thomson, W. (Lord Kelvin). (1891). Popular lectures and addresses, and Todhunter, I. (1865). A history of the mathematical theory of probability from the time of Pascal to that of Laplace. Reprinted by permission of Macmillan and Co., London.

Excerpts from various volumes of the Journal of the Royal Statistical Society, reprinted by permission of the Royal Statistical Society.

An excerpt from Boring, E. G. (1957). When is human behavior predetermined? The Scientific Monthly, 84, 189-196. Reprinted by permission of the American Association for the Advancement of Science.

Data obtained from Rutherford, E., & Geiger, H. (1910). The probability variations in the distribution of α particles. Philosophical Magazine, 20, 698-707. Material used by permission of Taylor and Francis, Publishers, London.

Excerpts from various volumes of Nature reprinted by permission of Macmillan Magazines Ltd.

An excerpt from Forrest, D. W. (1974). Francis Galton: The life and work of a Victorian genius. London: Elek. Reprinted by permission of Grafton Books, a division of the Collins Publishing Group.

Excerpts from Fisher, R. A. (1935, 1966, 8th ed.). The design of experiments. Edinburgh: Oliver and Boyd. Reprinted by permission of the Longman Group, UK, Limited.

Excerpts from Pearson, E. S. (1966). The Neyman-Pearson story: 1926-34. Historical sidelights on an episode in Anglo-Polish collaboration. In F. N. David (Ed.). Festschrift for J. Neyman. London: Wiley. Reprinted by permission of John Wiley and Sons Ltd., Chichester.

An excerpt from Beloff, J. (1993) Parapsychology: a concise history. London: Athlone Press. Reprinted with permission.

Excerpts from Thomson, G. H. (1946) The factorial analysis of human ability. London: University of London Press. Reprinted by permission of The Athlone Press.

An excerpt from Spearman, C. (1927) The abilities of man. New York: Macmillan Company. Reprinted by permission of Simon & Schuster, the copyright holders.

An excerpt from Kelley, T. L. (1928) Crossroads in the mind of man: a study of differentiable mental abilities. Reprinted with the permission of Stanford University Press.

An excerpt from Gould, S. J. (1981) The mismeasure of man. Reprinted with the permission of W. W. Norton & Company.

An excerpt from Boring, E. G. (1957) When is human behavior predetermined? Scientific Monthly, 84, 189-196. And from Wilson, E. B. (1929) Review of The abilities of man. Science, 67, 244-248. Reprinted with permission from the American Association for the Advancement of Science.

An excerpt from Sidman, M. (1960/1988) Tactics of scientific research: Evaluating experimental data in psychology. New York: Basic Books. Boston: Authors Cooperative (reprinted). Reprinted with the permission of Dr Sidman.

An excerpt from Carroll, J. B. (1953) An analytical solution for approximating simple structure in factor analysis. Psychometrika, 18, 23-38. Reprinted with the permission of Psychometrika.

Excerpts from Garrett H. E. & Zubin, J. (1943) The analysis of variance in psychological research. Psychological Bulletin, 40, 233-267 and from Grant, D. A. (1944) On "The analysis of variance in psychological research." Psychological Bulletin, 41, 158-166. Reprinted with the permission of the American Psychological Association.

An excerpt from Wilson, E. B. (1929) Review of Crossroads in the mind of man. Journal of General Psychology, 2, 153-169. Reprinted with the permission of the Helen Dwight Reid Educational Foundation. Published by Heldref Publications, 1319 18th St. N.W. Washington, D.C. 20036-1802.


1

The Development of Statistics

EVOLUTION, BIOMETRICS, AND EUGENICS

The central concern of the life sciences is the study of variation. To what extent does this individual or group of individuals differ from another? What are the reasons for the variability? Can the variability be controlled or manipulated? Do the similarities that exist spring from a common root? What are the effects of the variation on the life of the organisms? These are questions asked by biologists and psychologists alike.

The life-science disciplines are defined by the different emphases placed on observed variation, by the nature of the particular variables of interest, and by the ways in which the different variables contribute to the life and behavior of the subject matter. Change and diversity in nature rest on an organizing principle, the formulation of which has been said to be the single most influential scientific achievement of the 19th century: the theory of evolution by means of natural selection. The explication of the theory is attributed, rightly, to Charles Darwin (1809-1882). His book The Origin of Species was published in 1859, but a number of other scientists had written on the principle, in whole or in part, and these men were acknowledged by Darwin in later editions of his work.

Natural selection is possible because there is variation in living matter. The struggle for survival within and across species then ruthlessly favors the individuals that possess a combination of traits and characters, behavioral and physical, that allows them to cope with the total environment, exist, survive, and reproduce.

Not all sources of variability are biological. Many organisms to a greater or lesser extent reshape their environment, their experience, and therefore their behavior through learning. In human beings this reshaping of the environment has reached its most sophisticated form in what has come to be called cultural evolution. A fundamental feature of the human condition, of human nature, is our ability to process a very great deal of information. Human beings have originality and creative powers that continually expand the boundaries of knowledge. And, perhaps most important of all, our language skills, verbal and written, allow for the accumulation of knowledge and its transmission from generation to generation. The rich diversity of human civilization stems from cultural, as well as genetic, diversity.

Curiosity about diversity and variability leads to attempts to classify and to measure. The ordering of diversity and the assessment of variation have spurred the development of measurement in the biological and social sciences, and the application of statistics is one strategy for handling the numerical data obtained.

As science has progressed, it has become increasingly concerned with quantification as a means of describing events. It is felt that precise and economical descriptions of events and the relationships among them are best achieved by measurement. Measurement is the link between mathematics and science, and the apparent (at any rate to mathematicians!) clarity and order of mathematics foster the scientist's urge to measure. The central importance of measurement was vigorously expounded by Francis Galton (1822-1911): "Until the phenomena of any branch of knowledge have been submitted to measurement and number it cannot assume the status and dignity of a Science." These words formed part of the letterhead of the Department of Applied Statistics of University College, London, an institution that received much intellectual and financial support from Galton. And it is with Galton, who first formulated the method of correlation, that the common statistical procedures of modern social science began.

The nature of variation and the nature of inheritance in organisms were much-discussed and much-confused topics in the second half of the 19th century. Galton was concerned to make the study of heredity mathematical and to bring order into the chaos.

Francis Galton was Charles Darwin's cousin. Galton's mother was the daughter of Erasmus Darwin (1731-1802) by his second wife, and Darwin's father was Erasmus's son by his first. Darwin, who was 13 years Galton's senior, had returned home from a 5-year voyage as the naturalist on board H.M.S. Beagle (an Admiralty expeditionary ship) in 1836 and by 1838 had conceived of the principle of natural selection to account for some of the observations he had made on the expedition. The careers and personalities of Galton and Darwin were quite different. Darwin painstakingly marshaled evidence and single-mindedly buttressed his theory, but remained diffident about it, apparently uncertain of its acceptance. In fact, it was only the inevitability of the announcement of the independent discovery of the principle by Alfred Russel Wallace (1823-1913) that forced Darwin to publish, some 20 years after he had formed the idea. Galton, on the other hand, though a staid and formal Victorian, was not without vanity, enjoying the fame and recognition brought to him by his many publications on a bewildering variety of topics. The steady stream of lectures, papers and books continued unabated from 1850 until shortly before his death.

The notion of correlated variation was discussed by the new biologists. Darwin observes in The Origin of Species:

Many laws regulate variation, some few of which can be dimly seen, . . . I will here only allude to what may be called correlated variation. Important changes in the embryo or larva will probably entail changes in the mature animal . . . Breeders believe that long limbs are almost always accompanied by an elongated head . . . cats which are entirely white and have blue eyes are generally deaf . . . it appears that white sheep and pigs are injured by certain plants whilst dark-coloured individuals escape . . . (Darwin, 1859/1958, p. 34)

Of course, at this time, the hereditary mechanism was unknown, and, partly in an attempt to elucidate it, Galton began, in the mid-1870s, to breed sweet peas.¹

The results of his study of the size of sweet pea seeds over two generations were published in 1877. When a fixed size of parent seed was compared with the mean size of the offspring seeds, Galton observed the tendency that he called first reversion and later regression to the mean. The mean offspring size is not as extreme as the parental size. Large parent seeds of a particular size produce seeds that have a mean size that is larger than average, but not as large as the parent size. The offspring of small parent seeds of a fixed size have a mean size that is smaller than average, but this mean size is not as small as that of the fixed parent size. This phenomenon is discussed later in more detail. For the moment, suffice it to say that it is an arithmetical artifact arising from the fact that offspring sizes do not match parental sizes absolutely uniformly. In other words, the correlation is imperfect.
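The arithmetical character of this effect can be illustrated with a small simulation. The sketch below is only an illustration under stated assumptions: the sizes are standardized artificial numbers, the parent-offspring correlation of 0.5 is chosen arbitrarily, and the function names are hypothetical. None of it reproduces Galton's sweet pea data.

```python
import random
import statistics


def simulate_pairs(r=0.5, n=20000, seed=1):
    """Return n (parent, offspring) sizes in standard units.

    Assumption: both sizes are normal with mean 0 and SD 1, and the
    correlation between them is r (an arbitrary illustrative value).
    """
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        parent = rng.gauss(0.0, 1.0)
        # Offspring shares a fraction r of the parental deviation; the
        # independent noise term keeps the offspring SD equal to 1.
        noise = rng.gauss(0.0, 1.0)
        offspring = r * parent + (1 - r ** 2) ** 0.5 * noise
        pairs.append((parent, offspring))
    return pairs


def mean_offspring(pairs, low, high):
    """Mean offspring size for parents whose size lies in [low, high)."""
    return statistics.fmean(o for p, o in pairs if low <= p < high)


pairs = simulate_pairs()
large = mean_offspring(pairs, 1.5, 2.5)    # offspring of large parents
small = mean_offspring(pairs, -2.5, -1.5)  # offspring of small parents
print(f"large parents -> mean offspring {large:.2f}")
print(f"small parents -> mean offspring {small:.2f}")
```

Under these assumptions the offspring of large parents average above the population mean of 0 but below the parental range, and the offspring of small parents show the mirror-image pattern, even though the spread of the offspring generation as a whole is the same as that of the parents.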

Galton misinterpreted this statistical phenomenon as a real trend toward a reduction in population variability. Paradoxically, however, it led to the formation of the Biometric School of heredity and thus encouraged the development of a great many statistical methods.

¹ Mendel had already carried out his work with edible peas and thus begun the science of genetics. The results of his work were published in a rather obscure journal in 1866 and the wider scientific world remained oblivious of them until 1900.

Over the next several years Galton collected data on inherited human characteristics by the simple expedient of offering cash prizes for family records. From these data he arrived at the regression lines for hereditary stature. Figures showing these lines appear in Chapter 10.

A common theme in Galton's work, and later that of Karl Pearson (1857-1936), was a particular social philosophy. Ronald Fisher (1890-1962) also subscribed to it, although, it must be admitted, it was not, as such, a direct influence on his work. These three men are the founders of what are now called classical statistics and all were eugenists. They believed that the most relevant and important variables in human affairs are inherited. One's ancestors rather than one's environmental experiences are the overriding determinants of intellectual capacity and personality as well as physical attributes. Human well-being, human personality, indeed human society, could therefore, they argued, be improved by encouraging the most able to have more children than the least able. MacKenzie (1981) and Cowan (1972, 1977) have argued that much of the early work in statistics and the controversies that arose among biologists and statisticians reflect the commitment of the founders of biometry, Pearson being the leader, to the eugenics movement.

In 1884, Galton financed and operated an anthropometric laboratory at the International Health Exhibition. For a charge of threepence, members of the public were measured. Visual and auditory acuity, weight, height, limb span, strength, and a number of other variables were recorded. Over 9,000 data sets were obtained, and, at the close of the exhibition, the equipment was transferred to the South Kensington Museum where data collection continued. Francis Galton was an avid measurer.

Karl Pearson (1930) relates that Galton's first forays into the problem of correlation involved ranking techniques, although he was aware that ranking methods could be cumbersome. How could one compare different measures of anthropometric variables? In a flash of illumination, Galton realized that characteristics measured on scales based on their own variability (we would now say standard score units) could be directly compared. This inspiration is certainly one of the most important in the early years of statistics. He recalls the occasion in Memories of my Life, published in 1908:

As these lines are being written, the circumstances under which I first clearly grasped the important generalisation that the laws of heredity were solely concerned with deviations expressed in statistical units are vividly recalled to my memory. It was in the grounds of Naworth Castle, where an invitation had been given to ramble freely. A temporary shower drove me to seek refuge in a reddish recess in the rock by the side of the pathway. There the idea flashed across me and I forgot everything else for a moment in my great delight. (Galton, 1908, p. 300)²

This incident apparently took place in 1888, and before the year was out, Co-relations and Their Measurement Chiefly From Anthropometric Data had been presented to the Royal Society. In this paper Galton defines co-relation: "Two variable organs are said to be co-related when the variation of one is accompanied on the average by more or less variation of the other, and in the same direction" (Galton, 1888, p. 135).

The last five words of the quotation indicate that the notion of negative correlation had not then been conceived, but this brief but important paper shows that Galton fully understood the importance of his statistical approach. Shortly thereafter, mathematicians entered the picture with encouragement from some, but by no means all, biologists.

Much of the basic mathematics of correlation had, in fact, already been developed by the time of Galton's paper, but the utility of the procedure itself in this context had apparently eluded everyone. It was Karl Pearson, Galton's disciple and biographer, who, in 1896, set the concept on a sound mathematical foundation and presented statistics with the solution to the problem of representing covariation by means of a numerical index, the coefficient of correlation.
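The link between standard score units and a single numerical index of covariation can be sketched in a few lines. What follows is a minimal illustration, assuming the modern textbook form of the product-moment coefficient (the mean product of paired standard scores); it is not a reconstruction of Pearson's 1896 derivation, and the heights and arm spans are invented numbers used only to show that variables recorded in different units become directly comparable.

```python
import math


def standard_scores(values):
    """Express each value as a deviation from the mean in SD units."""
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    return [(v - mean) / sd for v in values]


def correlation(x, y):
    """Product-moment correlation: mean product of paired standard scores."""
    zx, zy = standard_scores(x), standard_scores(y)
    return sum(a * b for a, b in zip(zx, zy)) / len(x)


# Hypothetical measurements in different units (centimetres and inches).
heights_cm = [160.0, 165.0, 170.0, 175.0, 180.0]
spans_in = [62.0, 66.0, 67.0, 70.0, 71.0]

r = correlation(heights_cm, spans_in)
r_reversed = correlation(heights_cm, [-s for s in spans_in])
print(f"r = {r:.3f}, with one scale reversed r = {r_reversed:.3f}")
```

Reversing one of the scales changes only the sign of the index, which is how the modern coefficient accommodates the negative correlation that Galton's 1888 definition left out.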

From these beginnings spring the whole corpus of present-day statistical techniques. George Udny Yule (1871-1951), an influential statistician who was not a eugenist, and Pearson himself elaborated the concepts of multiple and partial correlation. The general psychology of individual differences and research into the structure of human abilities and intelligence relied heavily on correlational techniques. The first third of the 20th century saw the introduction of factor analysis through the work of Charles Spearman (1863-1945), Sir Godfrey Thomson (1881-1955), Sir Cyril Burt (1883-1971), and Louis L. Thurstone (1887-1955).

A further prolific and fundamentally important stream of development arises from the work of Sir Ronald Fisher. The technique of analysis of variance was developed directly from the method of intra-class correlation, an index of the extent to which measurements in the same category or family are related, relative to other categories or families.
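The connection between the two techniques can be seen in the way an intra-class correlation is commonly computed today, from the between-family and within-family mean squares of a one-way analysis of variance. The sketch below assumes that modern estimator, with equal family sizes; it is not presented as the form Fisher himself worked with, and the family heights are invented for illustration.

```python
def intraclass_correlation(groups):
    """One-way ANOVA estimate of the intra-class correlation.

    Assumptions: every group (family) has the same number of members k,
    and the estimator is (MSB - MSW) / (MSB + (k - 1) * MSW).
    """
    n_groups = len(groups)
    k = len(groups[0])
    grand_mean = sum(sum(g) for g in groups) / (n_groups * k)
    group_means = [sum(g) / k for g in groups]

    # Between-family mean square: how far family means lie from the grand mean.
    ms_between = k * sum((m - grand_mean) ** 2 for m in group_means) / (n_groups - 1)
    # Within-family mean square: how far members lie from their own family mean.
    ms_within = sum(
        (value - m) ** 2 for g, m in zip(groups, group_means) for value in g
    ) / (n_groups * (k - 1))

    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


# Hypothetical heights (cm) of three siblings in each of three families.
families = [
    [170.0, 172.0, 171.0],
    [160.0, 161.0, 159.0],
    [180.0, 179.0, 181.0],
]
icc = intraclass_correlation(families)
print(f"intra-class correlation = {icc:.3f}")
```

When members of a family resemble one another far more than they resemble members of other families, the between-family mean square dominates and the index approaches 1; when family membership carries no information, the two mean squares are similar and the index is near 0.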

² Karl Pearson (1914-1930), in the volume published in 1924, suggested that this spot deserves a commemorative plaque. Unfortunately, it looks as though the inspiration can never be so marked, for Kenna (1973), investigating the episode, reports that: "In the grounds of Naworth Castle there are not any rocks, reddish or otherwise, which could provide a recess, ..." (p. 229), and he suggests that the location of the incident might have been Corby Castle.

Fisher studied mathematics at Cambridge but also pursued interests in biology and genetics. In 1913 he spent the summer working on a farm in Canada. He worked for a while with a City investment company and then found himself declared unfit for military service because of his extremely poor eyesight. He turned to school-teaching for which he had no talent and which he hated. In 1919 he had the opportunity of a post at University College with Karl Pearson, then head of the Department of Applied Statistics, but chose instead to develop a statistical laboratory at the Rothamsted Experimental Station near Harpenden in England, where he developed experimental methods for agricultural research.

Over the next several years, relations between Pearson and Fisher became increasingly strained. They clashed on a variety of issues. Some of their disagreements helped, and some hindered, the development of statistics. Had they been collaborators and friends, rather than adversaries and enemies, statistics might have had a quite different history. In 1933 Fisher became Galton Professor of Eugenics at University College and in 1943 moved to Cambridge, where he was Professor of Genetics. Analysis of variance, which has had such far-reaching effects on experimentation in the behavioral sciences, was developed through attempts to tackle problems posed at Rothamsted.

It may be fairly said that the majority of texts on methodology and statistics in the social sciences are the offspring (diversity and selection notwithstanding!) of Fisher's books, Statistical Methods for Research Workers,³ first published in 1925(a), and The Design of Experiments, first published in 1935(a).

In succeeding chapters these statistical concepts are examined in more detail and their development elaborated, but first the use of the term statistics is explored a little further.

THE DEFINITION OF STATISTICS

In an everyday sense when we think of statistics we think of facts and figures, of numerical descriptions of political and economic states (from which the word is derived), and of inventories of the various aspects of our social organization. The history of statistical procedures in this sense goes back to the beginnings of human civilization. When trade and commerce began, when governments imposed taxes, numerical records were kept. The counting of people, goods, and chattels was regularly carried out in the Roman Empire, the Domesday Book attempted to describe the state of England for the Norman conquerors, and government agencies the world over expend a great deal of money and energy in collecting and tabulating such information in the present day. Statistics are used to describe and summarize, in numerical terms, a wide variety of situations.

³ Maurice Kendall (1963) says of this work, "It is not an easy book. Somebody once said that no student should attempt to read it unless he had read it before" (p. 2).

But there is another more recently-developed activity subsumed under the term statistics: the practice of not only collecting and collating numerical facts, but also the process of reasoning about them. Going beyond the data, making inferences and drawing conclusions with greater or lesser degrees of certainty in an orderly and consistent fashion is the aim of modern applied statistics. In this sense statistical reasoning did not begin until fairly late in the 17th century and then only in a quite limited way. The sophisticated models now employed, backed by theoretical formulations that are often complex, are all less than 100 years old. Westergaard (1932) points to the confusions that sometimes arise because the word statistics is used to signify both collections of measurements and reasoning about them, and that in former times it referred merely to descriptions of states in both numerical and non-numerical terms.

In adopting the statistical inferential strategy the experimentalist in the life sciences is accepting the intrinsic variability of the subject matter. In recognizing a range of possibilities, the scientist comes four-square against the problem of deciding whether or not the particular set of observations he or she has collected can reasonably be expected to reflect the characteristics of the total range. This is the problem of parameter estimation, the task of estimating population values (parameters) from a consideration of the measurements made on a particular population subset - the sample statistics. A second task for inferential statistics is hypothesis testing, the process of judging whether or not a particular statistical outcome is likely or unlikely to be due to chance. The statistical inferential strategy depends on a knowledge of probabilities.

This aspect of statistics has grown out of three activities that, at first glance, appear to be quite different but in fact have some close links. They are actuarial prediction, gambling, and error assessment. Each addresses the problems of making decisions, evaluating outcomes, and testing predictions in the face of uncertainty, and each has contributed to the development of probability theory.

PROBABILITY

Statistical operations are often thought of as practical applications of previously developed probability theory. The fact is, however, that almost all our present-day statistical techniques have arisen from attempts to answer real-life problems of prediction and error assessment, and theoretical developments have not always paralleled technical accomplishments. Box (1984) has reviewed the scientific context of a range of statistical advances and shown that the fundamental methods evolved from the work of practising scientists.


John Graunt, a London haberdasher, born in 1620, is credited with the first attempt to predict and explain a number of social phenomena from a consideration of actuarial tables. He compiled his tables from Bills of Mortality, the parish accounts of deaths that were regularly, if somewhat crudely, recorded from the beginning of the 17th century.

Graunt recognizes that the question might be asked: "To what purpose tends all this laborious buzzling, and groping? To know, 1. the number of the People? 2. How many Males, and Females? 3. How many Married, and single?" (Graunt, 1662/1975, p. 77), and says: "To this I might answer in general by saying, that those, who cannot apprehend the reason of these Enquiries, are unfit to trouble themselves to ask them." (p. 77).

Graunt reassured readers of this quite remarkable work:

The Lunaticks are also but few, viz. 158 in 229250 though I fear many more than are set down in our Bills ...

So that, this Casualty being so uncertain, I shall not force my self to make any inference from the numbers, and proportions we finde in our Bills concerning it: onely I dare ensure any man at this present, well in his Wits, for one in the thousand, that he shall not die a Lunatick in Bedlam, within these seven years, because I finde not above one in about one thousand five hundred have done so. (pp. 35-36)

Here is an inference based on numerical data and couched in terms not so very far removed from those in reports in the modern literature. Graunt's work was immediately recognized as being of great importance, and the King himself (Charles II) supported his election to the recently incorporated Royal Society.

A few years earlier the seeds of modern probability theory were being sown in France.⁴ At this time gambling was a popular habit in fashionable society and a range of games of chance was being played. For experienced players the odds applicable to various situations must have been appreciated, but no formal methods for calculating the chances of various outcomes were available. Antoine Gombauld, the Chevalier de Méré, a "man-about-town" and gambler with a scientific and mathematical turn of mind, consulted his friend, Blaise Pascal (1623-1662), a philosopher, scientist, and mathematician, hoping that he would be able to resolve questions on calculation of expected (probable) frequency of gains and losses, as well as on the fair division of the stakes in games that were interrupted. Consideration of these questions led to correspondence between Pascal and his fellow mathematician Pierre Fermat (1601-1665). No doubt their advice aided de Méré's game.⁵ More significantly, it was from this exchange that some of the foundations of probability theory and combinatorial algebra were laid.

⁴ But note that there are hints of probability concepts in mathematics going back at least as far as the 12th century and that Girolamo Cardano wrote Liber de Ludo Aleae (The Book on Games of Chance) a century before it was published in 1663 (see Ore, 1953). There is also no doubt that quite early in human civilization, there was an appreciation of long-run relative frequencies, randomness, and degrees of likelihood in gaming, and some quite formal concepts are to be found in Greek and Roman writings.

Christian Huygens (1629-1695) published, in 1657, a tract On Reasoning With Games of Dice (1657/1970), which was partly based on the Pascal-Fermat correspondence, and in 1713, Jacques Bernoulli's (1654-1705) book The Art of Conjecture developed a theory of games of chance.

Pascal had connected the study of probability with the arithmetic triangle (Fig. 1.1), for which he discovered new properties, although the triangle was known in China at least five hundred years earlier. Proofs of the triangle's properties were obtained by mathematical induction or reasoning by recurrence.

FIG. 1.1 Pascal's Triangle

⁵ Poisson (1781-1840), writing of this episode in 1837 says, "A problem concerning games of chance, proposed by a man of the world to an austere Jansenist, was the origin of the calculus of probabilities" (quoted by Struik, 1954, p. 145). De Méré was certainly "a man of the world" and Pascal did become austere and religious, but at the time of de Méré's questions Pascal was in his so-called "worldly period" (1652-1654). I am indebted to my father-in-law, the late Professor F.T.H. Fletcher, for many insights into the life of Pascal.


Pascal's triangle, as it is known in the West, is a tabulation of the binomial coefficients that may be obtained from the expansion of $(P + Q)^n$ where $P = Q = \frac{1}{2}$. The expansion was developed by Sir Isaac Newton (1642-1727), and, independently, by the Scottish mathematician, James Gregory (1638-1675). Gregory discovered the rule about 1670. Newton communicated it to the Royal Society in 1676, although later that year he explained that he had first formulated it in 1664 while he was a Cambridge undergraduate. The example shown in Fig. 1.1 demonstrates that the expansion of $(\frac{1}{2} + \frac{1}{2})^4$ generates, in the numerators of the expression, the numbers in the fifth row of Pascal's triangle. The terms of this expression also give us the five expected frequencies of outcome (0, 1, 2, 3, or 4 heads) or probabilities when a fair coin is tossed four times. Simple experiment will demonstrate that the actual outcomes in the "real world" of coin tossing closely approximate the distribution that has been calculated from a mathematical abstraction.
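The link between the expansion and the triangle can be checked numerically. What follows is a minimal sketch in Python and is not part of the original text: the function names `binomial_terms` and `simulate_tosses` are illustrative assumptions, and the seeded simulation merely stands in for the "simple experiment" of tossing four coins many times.

```python
from fractions import Fraction
from math import comb
import random


def binomial_terms(n, p=Fraction(1, 2)):
    """Return the n + 1 terms of the expansion of (P + Q)^n, with Q = 1 - P."""
    q = 1 - p
    return [comb(n, k) * p**k * q**(n - k) for k in range(n + 1)]


def simulate_tosses(n_coins, n_trials, seed=0):
    """Toss n_coins fair coins n_trials times.

    Returns the relative frequency of each possible number of heads.
    """
    rng = random.Random(seed)
    counts = [0] * (n_coins + 1)
    for _ in range(n_trials):
        heads = sum(rng.random() < 0.5 for _ in range(n_coins))
        counts[heads] += 1
    return [c / n_trials for c in counts]


# Fifth row of Pascal's triangle: the binomial coefficients for n = 4.
coefficients = [comb(4, k) for k in range(5)]   # [1, 4, 6, 4, 1]

# Terms of (1/2 + 1/2)^4; multiplying each by 16 recovers the numerators.
terms = binomial_terms(4)
numerators = [int(t * 16) for t in terms]       # [1, 4, 6, 4, 1]

if __name__ == "__main__":
    observed = simulate_tosses(n_coins=4, n_trials=10_000)
    for heads, (expected, seen) in enumerate(zip(terms, observed)):
        print(f"{heads} heads: expected {float(expected):.4f}, observed {seen:.4f}")
```

Exact fractions are used for the expected values so that the numerators over 16 can be read off directly; the simulated frequencies will vary with the seed and the number of trials, approaching the expected values only in the long run.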

During the 18th century the theory of probability attracted the interest of many brilliant minds. Among them was a friend and admirer of Newton, Abraham De Moivre (1667-1754). De Moivre, a French Huguenot, was interned in 1685 after the revocation by Louis XIV of the Edict of Nantes, an edict which had guaranteed toleration to French Protestants. He was released in 1688, fled to England, and spent the remainder of his life in London. De Moivre published what might be described as a gambler's manual, entitled The Doctrine of Chances or a Method of Calculating the Probabilities of Events in Play. In the second edition of this work, published in 1738, and in a revised third edition published posthumously in 1756, De Moivre (1756/1967) demonstrated a method, which he had first devised in 1733, of approximating the sum of a very large number of binomial terms when n in $(P + Q)^n$ is very large (an immensely laborious computation from the basic expansion).

It may be appreciated that as n grows larger, the number of terms in the expansion also grows larger. The graph of the distribution begins to resemble a smooth curve (Fig. 1.2), a bell-shaped symmetrical distribution that held great interest in mathematical terms but little practical utility outside of gaming.
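The resemblance between the binomial terms and the smooth curve can be illustrated for the case n = 12 used in Fig. 1.2. The sketch below is an assumption-laden illustration in Python, not De Moivre's own derivation: it uses the modern textbook form of the normal approximation, with mean np, standard deviation sqrt(npq), and a continuity correction of 0.5, and all function names are hypothetical.

```python
from math import comb, erf, exp, pi, sqrt


def binomial_pmf(k, n, p=0.5):
    """Exact probability of k successes in n trials: one term of (P + Q)^n."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def normal_pdf(x, mean, sd):
    """Height of the normal curve at x."""
    return exp(-((x - mean) ** 2) / (2 * sd**2)) / (sd * sqrt(2 * pi))


def normal_cdf(x, mean, sd):
    """Area under the normal curve to the left of x."""
    return 0.5 * (1 + erf((x - mean) / (sd * sqrt(2))))


def binomial_sum(lo, hi, n, p=0.5):
    """Exact sum of the binomial terms for lo <= k <= hi."""
    return sum(binomial_pmf(k, n, p) for k in range(lo, hi + 1))


def normal_approx_sum(lo, hi, n, p=0.5):
    """Normal approximation to the same sum, with a continuity correction."""
    mean, sd = n * p, sqrt(n * p * (1 - p))
    return normal_cdf(hi + 0.5, mean, sd) - normal_cdf(lo - 0.5, mean, sd)


if __name__ == "__main__":
    n = 12
    mean, sd = n * 0.5, sqrt(n * 0.25)
    for k in range(n + 1):
        print(f"k={k:2d}  binomial={binomial_pmf(k, n):.4f}  "
              f"normal={normal_pdf(k, mean, sd):.4f}")
    print("P(4 <= k <= 8), exact: ", round(binomial_sum(4, 8, n), 4))
    print("P(4 <= k <= 8), approx:", round(normal_approx_sum(4, 8, n), 4))
```

Even at n = 12 the two columns agree to within about one percentage point, which suggests why summing a handful of normal-curve areas was so attractive compared with adding up a very large number of binomial terms by hand.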

It is safe to say that no other theoretical mathematical abstraction has had such an important influence on psychology and the social sciences as that bell-shaped curve now commonly known by the name that Karl Pearson decided on - the normal distribution - although he was not the first to use the term. Pierre Laplace (1749-1827) independently derived the function and brought together much of the earlier work on probability in Théorie Analytique des Probabilités, published in 1812. It was his work, as well as contributions by many others, that interpreted the curve as the Law of Error and showed that it could be applied to variable results obtained in multiple observations. One of the first applications of the distribution outside of gaming was in the assessment of errors in astronomical observations. Later the utility of the "law" in error assessment was extended to land surveying and even to range estimation problems in artillery fire. Indeed, between 1800 and 1820 the foundations of the theory of error distribution were laid.

FIG. 1.2 The Binomial Distribution for N = 12 and the Normal Distribution

Carl Friedrich Gauss (1777-1855), perhaps the greatest mathematician of all time, also made important contributions to work in this area. He was a consultant to the governments of Hanover and of Denmark when they undertook geodetic surveys. The function that helped to rationalize the combination of observations is sometimes called the Laplace-Gaussian distribution.

Following the work of Laplace and Gauss, the development of mathematical probability theory slowed somewhat and not a great deal of progress was made until the present century. But it was during the 19th century, through the development of life insurance companies and through the growth of statistical approaches in the social and biological sciences, that the applications of probability theory burgeoned. Augustus De Morgan (1806-1871), for example, attempted to reduce the constructs of probability to straightforward rules of thumb. His work An Essay on Probabilities and on Their Application to Life Contingencies and Insurance Offices, published in 1838, is full of practical advice and is commented on by Walker (1929).

THE NORMAL DISTRIBUTION

The normal distribution was so named because many biological variables when measured in large groups of individuals, and plotted as frequency distributions, do show close approximations to the curve. It is partly for this reason that the mathematics of the distribution are used in data assessment in the social sciences and in biology. The responsibility, as well as the credit, for this extension of the use of calculations designed to estimate error or gambling expectancies into the examination of human characteristics rests with Lambert Adolphe Quetelet (1796-1874), a Belgian astronomer.

In 1835 Quetelet described his concept of the average man - l'homme moyen. L'homme moyen is Nature's ideal, an ideal that corresponds with a middle, measured value. But Nature makes errors, and in, as it were, missing the target, produces the variability observed in human traits and physical characters. More importantly, the extent and frequency of these errors often conform to the law of frequency of error - the normal distribution.

John Venn (1834-1923), the English logician, objected to the use of the word error in this context: "When Nature presents us with a group of objects of every kind, it is using rather a bold metaphor to speak in this case also of a law of error" (Venn, 1888, p. 42), but the analogy was attractive to some.

Quetelet examined the distribution of the measurements of the chest girths of 5,738 Scottish soldiers, these data having been extracted from the 13th volume of the Edinburgh Medical Journal. There is no doubt that the measurements closely approximate to a normal curve. In another attempt to exemplify the law, Quetelet examined the heights of 100,000 French conscripts. Here he noticed a discrepancy between observed and predicted values:

The official documents would make it appear that, of the 100,000 men, 28,620 are of less height than 5 feet 2 inches: calculation gives only 26,345. Is it not a fair presumption, that the 2,275 men who constitute the difference of these numbers have been fraudulently rejected? We can readily understand that it is an easy matter to reduce one's height a half-inch, or an inch, when so great an interest is at stake as that of being rejected. (Quetelet, 1835/1849, p. 98)

Whether or not the allegation stated here - that short (but not too short) Frenchmen have stooped so low as to avoid military service - is true is no longer an issue. A more important point is noted by Boring (1920):

While admitting the dependence of the law on experience, Quetelet proceeds in numerous cases to analyze experience by means of it. Such a double-edged sword is a peculiarly effective weapon, and it is no wonder that subsequent investigators were tempted to use it in spite of the necessary rules of scientific warfare. (Boring, 1920, p. 11)

The use of the normal curve in statistics is not, however, based solely on the fact that it can be used to describe the frequency distribution of many observed characteristics. It has a much more fundamental significance in inferential statistics, as will be seen, and the distribution and its properties appear in many parts of this book.

Galton first became aware of the distribution from his friend William Spottiswoode, who in 1862 became Secretary of the Royal Geographical Society, but it was the work of Quetelet that greatly impressed him. Many of the data sets he collected approximated to the law and he seemed, on occasion, to be almost mystically impressed with it.

I know of scarcely anything so apt to impress the imagination as the wonderful form of cosmic order expressed by the "Law of Frequency of Error." The law would have been personified by the Greeks and deified, if they had known of it. It reigns with serenity and in complete self-effacement amidst the wildest confusion. The huger the mob and the greater the apparent anarchy, the more perfect is its sway. It is the supreme law of Unreason. Whenever a large sample of chaotic elements are taken in hand and marshalled in the order of their magnitude, an unsuspected and most beautiful form of regularity proves to have been latent all along. (Galton, 1889, p. 66)


This rather theological attitude toward the distribution echoes De Moivre, who, over a century before, proclaimed in The Doctrine of Chances:

Altho' chance produces irregularities, still the Odds will be infinitely great, that in the process of Time, those irregularities will bear no proportion to the recurrency of that Order which naturally results from ORIGINAL DESIGN ... Such Laws, as well as the original Design and Purpose of their Establishment, must all be from without ... if we blind not ourselves with metaphysical dust, we shall be led, by a short and obvious way, to the acknowledgement of the great MAKER and GOVERNOUR of all; Himself all-wise, all-powerful and good. (De Moivre, 1756/1967, pp. 251-252)

The ready acceptance of the normal distribution as a law of nature encouraged its wide application and also produced consternation when exceptions were observed. Quetelet himself admitted the possibility of the existence of asymmetric distributions, and Galton was at times less lyrical, for critics had objected to the use of the distribution, not as a practical tool to be used with caution where it seemed appropriate, but as a sort of divine rule:

It has been objected to some of my former work, especially in Hereditary Genius, that I pushed the application of the Law of Frequency of Error somewhat too far.

I may have done so, rather by incautious phrases than in reality; ... I am satisfied to claim the Normal Law is a fair average representation of the observed Curves during nine-tenths of their course; ... (Galton, 1889, p. 56)⁶

BIOMETRICS

In 1890, Walter F. R. Weldon (1860-1906) was appointed to the Chair of Zoology at University College, London. He was greatly impressed and much influenced by Galton's Natural Inheritance. Not only did the book show him how the frequency of the deviations from a "type" might be measured, it opened up for him, and for other zoologists, a host of biometric problems. In two papers published in 1890 and 1892, Weldon showed that various measurements on shrimps might be assessed using the normal distribution. He also demonstrated interrelationships (correlations) between two variables within the individuals. But the critical factor in Weldon's contribution to the development of statistics was his professorial appointment, for this brought him into contact with Karl Pearson, then Professor of Applied Mathematics and Mechanics, a post Pearson had held since 1884. Weldon was attempting to remedy his weakness in mathematics so that he could extend his research, and he approached Pearson for help. His enthusiasm for the biometric approach drew Pearson away from more orthodox work.

⁶ Note that this quotation and the previous one from Galton are 10 pages apart in the same work!

A second important link was with Galton, who had reviewed Weldon's first paper on variation in shrimps. Galton supported and encouraged the work of these two younger men until his death, and, under the terms of his will, left £45,000 to endow a Chair of Eugenics at the University of London, together with the wish that the post might be offered first to Karl Pearson. The offer was made and accepted.

In 1904, Galton had offered the University of London £500 to establish the study of national eugenics. Pearson was a member of the Committee that the University set up, and the outcome was a decision to appoint the Galton Research Fellow at what was to be named the Eugenics Record Office. This became the Galton Laboratory for National Eugenics in 1906, and yet more financial assistance was provided by Galton. Pearson, still Professor of Applied Mathematics, was its Director as well as Head of the Biometrics Laboratory. This latter received much of its funding over many years from grants from the Worshipful Company of Drapers, which first gave money to the University in 1903.

Pearson's appointment to the Galton Chair brought applied statistics, biometrics, and eugenics together under his direction at University College. It cannot however be claimed absolutely that the day-to-day work of these units was driven by a common theme. Applied statistics and biometrics were primarily concerned with the development and application of statistical techniques to a variety of problems, including anthropometric investigations; the Eugenics Laboratory collected extensive family pedigrees and examined actuarial death rates. Of course Pearson coordinated all the work, and there was interchange and exchange among the staff that worked with him, but Magnello (1998, 1999) has argued that there was not a single unifying purpose in Pearson's research. Others, notably MacKenzie (1981), Kevles (1985), and Porter (1986), have promoted the view that eugenics was the driving force behind Pearson's statistical endeavors.

Pearson was not a formal member of the eugenics movement. He did not join the Eugenics Education Society, and apparently he tried to keep the two laboratories administratively separate, maintaining separate financial accounts, for example, but it has to be recognized that his personal views of the human condition and its future included the conviction that eugenics was of critical importance. There was an obvious and persistent intermingling of statistical results and eugenics in his pronouncements. For example, in his Huxley Lecture in 1903 (published in Biometrika in 1903 and 1904), on topics that were clearly biometric, having to do with his researches on relationships between moral and intellectual variables, he ended with a plea, if not a rallying cry, for eugenics:

The mentally better stock in the nation is not reproducing itself at the same rate as it did of old; the less able, and the less energetic, are more fertile than the better stocks. ... The only remedy, if one be possible at all, is to alter the relative fertility of the good and the bad stocks in the community. ... intelligence can be aided and be trained, but no training or education can create it. You must breed it, that is the broad result for statecraft which flows from the equality in inheritance of the psychical and the physical characters in man. (Pearson, 1904a, pp. 179-180)

Pearson's contribution was monumental, for in less than 8 years, between 1893 and 1901, he published over 30 papers on statistical methods. The first was written as a result of Weldon's discovery that the distribution of one set of measurements of the characteristics of crabs, collected at the zoological station at Naples in 1892, was "double-humped." The distribution was reduced to the sum of two normal curves. Pearson (1894) proceeded to investigate the general problem of fitting observed distributions to theoretical curves. This work was to lead directly to the formulation of the $\chi^2$ test of "goodness of fit" in 1900, one of the most important developments in the history of statistics.

Weldon approached the problem of discrepancies between theory and observation in a much more empirical way, tossing coins and dice and comparing the outcomes with the binomial model. These data helped to produce another line of development.

In a letter to Galton, written in 1894, Weldon asks for a comment on the results of 7,000 tossings of 12 dice collected for him by a clerk at University College:

A day or two ago Pearson wanted some records of the kind in a hurry, in order to illustrate a lecture, and I gave him the record of the clerk's 7000 tosses ... on examination he rejects them, because he thinks the deviation from the theoretically most probable result is so great as to make the record intrinsically incredible. (quoted by E. S. Pearson, 1965, p. 11)

This incident set off a good deal of correspondence between Karl Pearson, F. Y. Edgeworth (1845-1926), an economist and statistician, and Weldon, the details of which are now only of minor importance. But, as Karl Pearson remarked, "Probabilities are very slippery things" (quoted by E. S. Pearson, 1965, p. 14), and the search for criteria by which to assess the differences between observed and theoretical frequencies, and whether or not they could be reasonably attributed to chance sampling fluctuations, began. Statistical research rapidly expanded into careful examination of distributions other than the normal curve and eventually into the properties of sampling distributions, particularly through the seminal work of Ronald Fisher.

In developing his research into the properties of the probability distributions of statistics, Fisher investigated the basis of hypothesis testing and the foundations of all the well-known tests of statistical significance. Fisher's assertion that p = .05 (1 in 20) is the probability that is convenient for judging whether or not a deviation is to be considered significant (i.e. unlikely to be due to chance), has profoundly affected research in the social sciences, although it should be noted that he was not the originator of the convention (Cowles & Davis, 1982a).

Of course, the development of statistical methods does not end here, nor have all the threads been drawn together. Discussion of the important contribution of W. S. Gosset ("Student," 1876-1937) to small sample work and the refinements introduced into hypothesis testing by Karl Pearson's son, Egon S. Pearson (1895-1980), and Jerzy Neyman (1894-1981) will be found in later chapters, when the earlier details have been elaborated.

Biometrics and Genetics

The early years of the biometric school were surrounded by controversy. Pearson and Weldon held fast to the view that evolution took place by the continuous selections of variations that were favorable to organisms in their environment. The rediscovery of Mendel's work in 1900 supported the concept that heredity depends on self-reproducing particles (what we now call genes), and that inherited variation is discontinuous and saltatory. The source of the development of higher types was occasional genetic jumps or mutations. Curiously enough, this was the view of evolution that Galton had supported. His misinterpretation of the purely statistical phenomenon of regression led him to the notion that a distinction had to be made between variations from the mean that regress and what he called "sports" (a breeder's term for an animal or plant variety that appears apparently spontaneously) that will not.

A champion of the position that mutations were of critical importance in the evolutionary process was William Bateson (1861-1926) and a prolonged and bitter argument with the biometricians ensued. The Evolution Committee of the Royal Society broke down over the dispute. Biometrika was founded by Pearson and Weldon, with Galton's financial support, in 1900, after the Royal Society had allowed Bateson to publish a detailed criticism of a paper submitted by Pearson before the paper itself had been issued. Britain's important scientific journal, Nature, took the biometricians' side and would not print letters from Bateson. Pearson replied to Bateson's criticisms in Biometrika but refused to accept Bateson's rejoinders, whereupon Bateson had them privately printed by the Cambridge University Press in the format of Biometrika!

At the British Association meeting in Cambridge in 1904, Bateson, then President of the Zoological Section, took the opportunity to deliver a bitter attack on the biometric school. Dramatically waving aloft the published volumes of Biometrika, he pronounced them worthless and he described Pearson's correlation tables as: "a Procrustean bed into which the biometrician fits his unanalysed data." (quoted by Julian Huxley, 1949).

It is even said that Pearson and Bateson refused to shake hands at Weldon's funeral. Nevertheless, after Weldon's death the controversy cooled. Pearson's work became more concerned with the theory of statistics, although the influence of his eugenic philosophy was still in evidence, and by 1910, when Bateson became Director of the John Innes Horticultural Institute, the argument had died. However, some statistical aspects of this contentious debate predated the evolution dispute, and echoes of them - indeed, marked reverberations from them - are still around today, although of course Mendelian and Darwinian thinking are completely reconciled.

STATISTICAL CRITICISM

Statistics has been called the "science of averages," and this definition is not meant in a kindly way. The great physiologist Claude Bernard (1813-1878) maintained that the use of averages in physiology could not be countenanced:

because the true relations of phenomena disappear in the average; when dealing with complex and variable experiments, we must study their various circumstances, and then present our most perfect experiment as a type, which, however, still stands for true facts.

... averages must therefore be rejected, because they confuse while aiming to unify, and distort while aiming to simplify. (Bernard, 1865/1927, p. 135)

Now it is, of course, true that lumping measurements together may not give us anything more than a picture of the lumping together, and the average value may not be anything like any one individual measurement at all, but Bernard's ideal type fails to acknowledge the reality of individual differences. A rather memorable example of a very real confusion is given by Bernard (1865/1927):

A startling instance of this kind was invented by a physiologist who took urine from a railway station urinal where people of all nations passed, and who believed that he could thus present an analysis of average European urine! (pp. 134-135).

A less memorable, but just as telling, example is that of the social psychologist who solemnly reports "mean social class."

Pearson (1906) notes that:

One of the blows to Weldon, which resulted from his biometric view of life was that his biological friends could not appreciate his new enthusiasms. They could not understand how the Museum "specimen" was in the future to be replaced by the "sample" of 500 to 1000 individuals. (p. 37)

The view is still not wholly appreciated. Many psychologists subscribe to the position that the most pressing problems of the discipline, and certainly the ones of most practical interest, are problems of individual behavior. A major criticism of the effect of the use of the statistical approach in psychological research is the failure to differentiate adequately between general propositions that apply to most, if not all, members of a particular group and statistical propositions that apply to some aggregated measure of the members of the group. The latter approach discounts the exceptions to the statistical aggregate, which not only may be the most interesting but may, on occasion, constitute a large proportion of the group.

Controversy abounds in the field of measurement, probability, and statistics, and the methods employed are open to criticism, revision, and downright rejection. On the other hand, measurement and statistics play a leading role in psychological research, and the greatest danger seems to lie in a nonawareness of the limitations of the statistical approach and the bases of their development, as well as the use of techniques, assisted by the high-speed computer, as recipes for data manipulation.

Miller (1963) observed of Fisher, "Few psychologists have educated us as rapidly, or have influenced our work as pervasively, as did this fervent, clear-headed statistician." (p. 157).

Hogben (1957) certainly agrees that Fisher has been enormously influential but he objects to Fisher's confidence in his own intuitions:

This intrepid belief in what he disarmingly calls common sense ... has led Fisher ... to advance a battery of concepts for the semantic credentials of which neither he nor his disciples offer any justification en rapport with the generally accepted tenets of the classical theory of probability. (Hogben, 1957, p. 504)

Hogben also expresses a thought often shared by natural scientists when they review psychological research, that:

Acceptability of a statistically significant result of an experiment on animal behaviour in contradistinction to a result which the investigator can repeat before a critical audience naturally promotes a high output of publication. Hence the argument that the techniques work has a tempting appeal to young biologists. (Hogben, 1957, p. 27)

Experimental psychologists may well agree that the tightly controlled experiment

is the apotheosis of classical scientific method, but they are not so

arrogant as to suppose that their subject matter will necessarily submit to this

form of analysis, and they turn, almost inevitably, to statistical, as opposed to

experimental, control. This is not a muddle-headed notion, but it does present

dangers if it is accepted without caution.

A balanced, but not uncritical, view of the utility of statistics can be arrived

at from a consideration of the forces that shaped the discipline and an examination

of its development. Whether or not this is an assertion that anyone, let

alone the author of this book, can justify remains to be seen.

Yet there are Writers, of a Class indeed very different from that of James Bernoulli,

who insinuate as if the Doctrine of Probabilities could have no place in any serious

Enquiry; and that Studies of this kind, trivial and easy as they be, rather disqualify

a man for reasoning on any other subject. Let the Reader chuse. (De Moivre,

1756/1967, p. 254)

2

Science, Psychology, and Statistics

DETERMINISM

It is a popular notion that if psychology is to be considered a science, then it

most certainly is not an exact science. The propositions of psychology are

considered to be inexact because no psychologist on earth would venture a

statement such as this: "All stable extraverts will, when asked, volunteer to

participate in psychological experiments."¹ The propositions of the natural

sciences are considered to be exact because all physicists would be prepared to

attest (with some few cautionary qualifications) that, "fire burns" or, more

pretentiously, that "e = mc²." In short, it is felt that the order in the universe,

which nearly everyone (though for different reasons) is sure must be there, has

been more obviously demonstrated by the natural rather than the social scientists.

Order in the universe implies determinism, a most useful and a most vexing

term, for it brings those who wonder about such things into contact with the

philosophical underpinnings of the rather everyday concept of causality. No one

has stated the situation more clearly than Laplace in his Essai:

Present events are connected with preceding ones by a tie based upon the evident

principle that a thing cannot occur without a cause which produces it. This axiom

known by the name of the principle of sufficient reason, extends even to actions

which are considered indifferent; the freest will is unable without a determinative

motive to give them birth; ...

We ought then to regard the present state of the universe as the effect of its anterior

state and as the cause of the one that is to follow. Given for one instant an intelligence

which could comprehend all the forces by which nature is animated and the

respective situations of the beings who compose it - an intelligence sufficiently vast

to submit these data to analysis - it would embrace in the same formula the

movements of the greatest bodies of the universe and those of the lightest atom; for

it nothing would be uncertain and the future, as the past, would be present to its eyes.

(Laplace, 1820/1951, pp. 3-4)

¹ Not wishing to make pronouncements on the probabilistic nature of the work of others, the writer

is making an oblique reference to work in which he and a colleague (Cowles & Davis, 1987) found that

there is an 80% chance that stable extraverts will volunteer to participate in psychological research.

The assumption of determinism is simply the apparently reasonable notion

that events are caused. Science discovers regularities in nature, formulates

descriptions of these regularities, and provides explanations, that is to say,

discovers causes. Knowledge of the past enables the future to be predicted.

This is the popular view of science, and it is also a sort of working rule of thumb

for those engaged in the scientific enterprise. Determinism is the central feature

of the development of modern science up to the first quarter of the 20th century.

The successes of the natural sciences, particularly the success of Newtonian

mechanics, urged and influenced some of the giants of psychology, particularly

in North America, to adopt a similar mechanistic approach to the study of

behavior. The rise of behaviorism promoted the view that psychology could

be a science, "like other sciences." Formulae could be devised that would allow

behavior to be predicted, and a technology could be achieved that would enable

environmental conditions to be so manipulated that behavior could be controlled.

The variability in living things was to be brought under experimental

control, a program that leads quite naturally to the notion of the stimulus control

of behavior. It follows that concepts such as will or choice or freedom of action

could be rejected by behavioral science.

In 1913, John B. Watson (1878-1958) published a paper that became the

behaviorists' manifesto. It begins, "Psychology as the behaviorist views it is a

purely objective experimental branch of natural science. Its theoretical goal is

the prediction and control of behavior" (Watson, 1913, p. 158).

Oddly enough, this pronouncement coincided with work that began to

question the assumption of determinism in physics, the undoubted leader of the

natural sciences. In 1913, a laboratory experiment in Cambridge, England,

provided spectroscopic proof of what is known as the Rutherford-Bohr model

of the atom. Ernest Rutherford (later Lord Rutherford, 1871-1937) had proposed

that the atom was like a miniature solar system with electrons orbiting a

central nucleus. Niels Bohr (1885-1962), a Danish physicist, explained that the

electrons moved from one orbit to another, emitting or absorbing energy as they

moved toward or away from the nucleus. The jumping of an electron from orbit

to orbit appeared to be unpredictable. The totality of exchanges could only be

predicted in a statistical, probabilistic fashion. That giant of modern physicists,

Albert Einstein (1879-1955), whose work had helped to start the revolution in

physics, was loath to abandon the concept of a completely causal universe and

indeed never did entirely abandon it. In the 1920s, Einstein made the statement

that has often been paraphrased as, "God does not play dice with the world."

Nevertheless, he recognized the problem. In a lecture given in 1928, Einstein

said:

Today faith in unbroken causality is threatened precisely by those whose path it had

illumined as their chief and unrestricted leader at the front, namely by the representatives

of physics ... All natural laws are therefore claimed to be, "in principle,"

of a statistical variety and our imperfect observation practices alone have cheated us

into a belief in strict causality. (quoted by Clark, 1971, pp. 347-348)

But Einstein never really accepted this proposition, believing to the end that

indeterminacy was to be equated with ignorance. Einstein may be right in

subscribing ultimately to the inflexibility of Laplace's all-seeing demon, but

another approach to indeterminacy was advanced by Werner Heisenberg

(1901-1976), a German physicist who, in 1927, formulated his famous uncertainty

principle. He examined not merely the practical limits of measurement

but the theoretical limits, and showed that the act of observation of the position

and velocity of a subatomic particle interfered with it so as to inevitably produce

errors in the measurement of one or the other. This assertion has been taken to

mean that, ultimately, the forces in our universe are random and therefore

indeterminate. Bertrand Russell (1931) disagrees:

Space and time were invented by the Greeks, and served their purpose admirably

until the present century. Einstein replaced them by a kind of centaur which he called

"space-time," and this did well enough for a couple of decades, but modern quantum

mechanics has made it evident that a more fundamental reconstruction is necessary.

The Principle of Indeterminacy is merely an illustration of this necessity, not of the

failure of physical laws to determine the course of nature. (pp. 108-109)

The important point to be aware of is that Heisenberg's principle refers to

the observer and the act of observation and not directly to the phenomena that

are being observed. This implies that the phenomena have an existence outside

their observation and description, a contention that, by itself, occupies philosophers.

Nowhere is the demonstration that technique and method shape the way

in which we conceptualize phenomena more apparent than in the physics of

light. The progress of events in physics that led to the view that light was both

wave and particle, a view that Einstein's work had promoted, began to dismay

him when it was used to suggest that physics would have to abandon strict

continuity and causality. Bohr responded to Einstein's dismay:

You, the man who introduced the idea of light as particles! If you are so concerned

with the situation in physics in which the nature of light allows for a dual interpretation,

then ask the German government to ban the use of photoelectric cells if you

think that light is waves, or the use of diffraction gratings if light is corpuscular.

(quoted by Clark, 1971, p. 253)

The parallels in experimental psychology are obvious. That eminent historian

of the discipline, Edwin Boring, describes a colloquium at Harvard when

his colleague, William McDougall, who: "believed in freedom for the human

mind - in at least a little residue of freedom - believed in it and hoped for as

much as he could save from the inroads of scientific determinism," and he, a

determinist, achieved, for Boring, an understanding:

McDougall's freedom was my variance. McDougall hoped that variance would

always be found in specifying the laws of behavior, for there freedom might still