
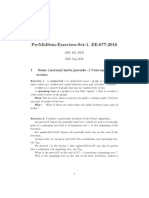

Random Forests

Random forests are an ensemble learning algorithm. The basic premise is that building a small decision tree with few features is computationally cheap. If we can build many small, weak decision trees in parallel, we can then combine them into a single, strong learner by averaging their predictions or taking the majority vote. In practice, random forests are often found to be among the most accurate learning algorithms. The pseudocode is given in Algorithm 1.

The algorithm works as follows: for each tree in the forest, we select a bootstrap sample from S, where S^(i) denotes the ith bootstrap sample. We then learn a decision tree using a modified decision-tree learning algorithm. The modification is this: at each node of the tree, instead of examining all possible feature splits, we randomly select a subset of the features f ⊆ F, where F is the set of features. The node then splits on the best feature in f rather than in F. In practice, f is much smaller than F. Deciding which feature to split on is often the most computationally expensive part of decision-tree learning. By narrowing the set of features, we drastically speed up the learning of each tree.
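The two sources of randomness described above can be sketched in a few lines of Python. This is a minimal illustration, not the author's implementation: the helper names `bootstrap_sample` and `random_feature_subset`, the toy data, and the subset size `k` are all assumptions introduced here.

```python
import random

def bootstrap_sample(S, rng):
    """Draw len(S) examples from S uniformly at random, with replacement."""
    return [rng.choice(S) for _ in range(len(S))]

def random_feature_subset(F, k, rng):
    """Pick k distinct features from F uniformly at random (the subset f)."""
    return rng.sample(F, k)

rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Hypothetical toy training set: (feature vector, label) pairs.
S = [((i, i % 3, i % 5), i % 2) for i in range(10)]
F = [0, 1, 2]  # features are identified by their index in the vector

S_i = bootstrap_sample(S, rng)        # same size as S, may contain repeats
f = random_feature_subset(F, 1, rng)  # features examined at one node
```

Because the bootstrap draws with replacement, some examples typically appear more than once in `S_i` and others not at all, which is what makes each tree see a slightly different training set.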

Algorithm 1 Random Forest

Precondition: A training set S := (x_1, y_1), ..., (x_n, y_n), features F, and number of trees in forest B.

    function RandomForest(S, F)
        H ← ∅
        for i ∈ 1, ..., B do
            S^(i) ← a bootstrap sample from S
            h_i ← RandomizedTreeLearn(S^(i), F)
            H ← H ∪ {h_i}
        end for
        return H
    end function

    function RandomizedTreeLearn(S, F)
        At each node:
            f ← very small subset of F
            Split on best feature in f
        return the learned tree
    end function
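Algorithm 1 could be turned into runnable code roughly as follows. This is a sketch under several assumptions that the pseudocode leaves open: it handles classification only, scores splits by Gini impurity, uses thresholds of the form `x[j] <= t`, caps tree depth with a hypothetical `max_depth` parameter, and takes the subset size `k` as an argument. All function names are illustrative.

```python
import random
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(S, f):
    """Best (feature, threshold) among features in f, or None if no split helps."""
    best, best_score = None, gini([y for _, y in S])
    for j in f:
        for t in sorted({x[j] for x, _ in S}):
            left = [y for x, y in S if x[j] <= t]
            right = [y for x, y in S if x[j] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(S)
            if score < best_score:  # keep only strict improvements
                best, best_score = (j, t), score
    return best

def randomized_tree_learn(S, F, k, rng, depth=0, max_depth=5):
    """Learn a tree that examines only k random features at each node."""
    labels = [y for _, y in S]
    leaf = {"label": Counter(labels).most_common(1)[0][0]}
    if depth == max_depth or len(set(labels)) == 1:
        return leaf
    f = rng.sample(F, k)            # f: small random subset of F
    split = best_split(S, f)        # split on the best feature in f
    if split is None:
        return leaf
    j, t = split
    left = [(x, y) for x, y in S if x[j] <= t]
    right = [(x, y) for x, y in S if x[j] > t]
    return {"feature": j, "threshold": t,
            "left": randomized_tree_learn(left, F, k, rng, depth + 1, max_depth),
            "right": randomized_tree_learn(right, F, k, rng, depth + 1, max_depth)}

def random_forest(S, F, B, k, seed=0):
    """Build B trees, each on its own bootstrap sample of S."""
    rng = random.Random(seed)
    H = []
    for _ in range(B):
        S_i = [rng.choice(S) for _ in range(len(S))]  # bootstrap sample
        H.append(randomized_tree_learn(S_i, F, k, rng))
    return H

def tree_predict(h, x):
    """Follow the splits from the root down to a leaf label."""
    while "label" not in h:
        h = h["left"] if x[h["feature"]] <= h["threshold"] else h["right"]
    return h["label"]

def forest_predict(H, x):
    """Combine the trees by majority vote."""
    return Counter(tree_predict(h, x) for h in H).most_common(1)[0][0]

# Hypothetical toy data: both features separate the two classes.
S = [((i, 2 * i), int(i >= 10)) for i in range(20)]
H = random_forest(S, F=[0, 1], B=25, k=1)
prediction = forest_predict(H, (19, 38))
```

For regression, the majority vote in `forest_predict` would be replaced by an average of the trees' outputs, as the text notes. The trees are built in a loop here for simplicity; since each one depends only on its own bootstrap sample, they could also be built in parallel.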

Why do random forests work?

The random forest algorithm uses bagging to build an ensemble of decision trees. Bagging is known to reduce the variance of the learned model. The natural question, however, is why the ensemble works better when each split is chosen from a random subset of features than when each tree is learned with the traditional algorithm. Recall that ensembles are more effective when the individual models that comprise them are uncorrelated. In traditional bagging with decision trees, the constituent trees may end up highly correlated, because the same features tend to be used repeatedly to split the bootstrap samples. By restricting each split test to a small, random sample of features, we can decrease the correlation between trees in the ensemble.
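The role of correlation can be made concrete with a standard variance calculation. As a simplifying assumption (the symbols here are not in the original text), suppose each tree's prediction $h_i(x)$ has the same variance $\sigma^2$ and every pair of trees has the same correlation $\rho$. The variance of the averaged prediction is then

$$
\operatorname{Var}\!\left(\frac{1}{B}\sum_{i=1}^{B} h_i(x)\right)
= \frac{1}{B^2}\left(B\sigma^2 + B(B-1)\rho\sigma^2\right)
= \rho\sigma^2 + \frac{1-\rho}{B}\,\sigma^2 .
$$

Adding more trees shrinks only the second term. The first term, $\rho\sigma^2$, remains no matter how large $B$ is, so under this assumption lowering the correlation between trees is what lowers the variance that averaging cannot remove.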

Furthermore, by restricting the features considered at each node, we can learn each tree much faster and therefore learn more decision trees in a given amount of time. Thus, not only can we build many more trees using the randomized tree-learning algorithm, but these trees will also be less correlated. For these reasons, random forests tend to perform very well.
