Queuing Theory

Uploaded by

peteronyangoh

Given the importance of response time (and throughput), we need a means of computing values for these metrics.

Our black box model:
o Let's assume our system is in steady state (input rate = output rate).
o Inside the black box, I/O requests "depart" by being completed by the server.

Queuing theory: elements of a queuing system

Request and arrival rate
o A request is a single "request for service".
o The rate at which requests are generated is the arrival rate.

Server and service rate
o The server is the part of the system that services requests.
o The rate at which requests are serviced is called the service rate.

Queue
o This is where requests wait between the time they arrive and the time their processing starts in the server.

Queuing theory: useful statistics

Length queue, Time queue
o These are the average length of the queue and the average time a request spends waiting in the queue.

Length server, Time server
o These are the average number of tasks being serviced and the average time each task spends in the server.
o Note that a server may be able to serve more than one request at a time.

Time system, Length system
o Time system is the average time a request (also called a task) spends in the system.
o It is the sum of the time spent in the queue and the time spent in the server.
o Length system is just the average number of tasks anywhere in the system.

Little's Law
o The mean number of tasks in the system = arrival rate * mean response time.
o This is true only for systems in equilibrium.
o We must assume any system we study (for this class) is in such a state.

Server utilization
o This is just arrival rate / service rate (equivalently, arrival rate * Time server).
o This must be between 0 and 1.
o If it is larger than 1, the queue will grow infinitely long.
o This is also called traffic intensity.

Queuing theory: queue discipline

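o This is the order in which requests are delivered to the server.
o Common orders are FIFO, LIFO, and random.
o For FIFO, we can figure out how long a request waits in the queue by:

  Time queue = Length queue * Time server + mean time to complete service of the task in the server when the new request arrives

o The last parameter is the hardest to figure out. We can just use the formula:

  mean time to complete service = Server utilization * average residual service time
  average residual service time = 1/2 * Time server * (1 + C^2)

o C is the coefficient of variance (the standard deviation of the service time divided by its mean), whose derivation is in the book. (Don't worry about how to derive it - this isn't a class on queuing theory.)

Queuing theory: example

Given:
o Processor sends 10 disk I/O requests per second (with exponentially distributed interarrival times).
o Average disk service time is 20 ms.

On average, how utilized is the disk?
o Server utilization = arrival rate * Time server = 10 per second * 0.02 seconds = 0.2

What is the average time spent in the queue?
o When the service distribution is exponential, we can use a simplified formula for the average time spent waiting in line:

  Time queue = Time server * Server utilization / (1 - Server utilization) = 20 ms * 0.2 / 0.8 = 5 ms

What is the average response time for a disk request (including queuing time and disk service time)?
o Time system = Time queue + Time server = 5 ms + 20 ms = 25 ms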
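The disk example can be checked numerically. The following is a minimal sketch, assuming the standard relations for a single server with exponential arrivals and exponential service times (utilization = arrival rate * service time; time in queue = service time * utilization / (1 - utilization)). The function name `mm1_metrics` and the dictionary keys are illustrative, not taken from the notes.

```python
def mm1_metrics(arrival_rate, service_time):
    """Return utilization, waiting times and mean lengths for an M/M/1 queue.

    arrival_rate is in requests per second, service_time in seconds.
    """
    utilization = arrival_rate * service_time
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1) for a steady state")
    time_queue = service_time * utilization / (1 - utilization)
    time_system = time_queue + service_time
    return {
        "utilization": utilization,
        "time_queue": time_queue,
        "time_system": time_system,
        # Little's Law: mean number of tasks = arrival rate * mean time spent
        "length_queue": arrival_rate * time_queue,
        "length_system": arrival_rate * time_system,
    }


# The disk from the example: 10 requests per second, 20 ms service time.
disk = mm1_metrics(arrival_rate=10, service_time=0.020)
for name, value in disk.items():
    print(f"{name}: {value:.4f}")
```

For the disk in the example this reports a utilization of 0.2, 5 ms in the queue, and a 25 ms response time. Little's Law then gives an average of 0.25 requests in the system.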

Queuing theory: basic assumptions made about problems

o System is in equilibrium.
o Interarrival time (time between two successive requests arriving) is exponentially distributed.
o Infinite number of requests.
o Server does not need to delay between servicing requests.
o No limit to the length of the queue, and the queue is FIFO.
o All requests must be completed at some point.

This is called an M/G/1 queue:
o M = exponential arrival
o G = general service distribution (i.e., not necessarily exponential)
o 1 = server can serve 1 request at a time
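In an M/G/1 queue, the variability of the service time affects how long requests wait. The sketch below assumes the standard M/G/1 mean-wait result, Time queue = Time server * utilization * (1 + C^2) / (2 * (1 - utilization)), with C taken as the standard deviation of the service time divided by its mean. The function name `mg1_time_queue` and the chosen C values are illustrative only.

```python
def mg1_time_queue(arrival_rate, service_time, c):
    """Mean time in queue for an M/G/1 FIFO queue.

    arrival_rate is in requests per second, service_time in seconds,
    c is the service-time standard deviation divided by its mean.
    """
    utilization = arrival_rate * service_time
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1) for a steady state")
    return service_time * utilization * (1 + c ** 2) / (2 * (1 - utilization))


# Same load (10 requests/s, 20 ms service) under different service distributions.
for label, c in [("constant service time", 0.0),
                 ("exponential service time", 1.0),
                 ("highly variable service time", 2.0)]:
    wait_ms = 1000 * mg1_time_queue(10, 0.020, c)
    print(f"{label} (C = {c}): {wait_ms:.2f} ms in queue")
```

With C = 1 the expression reduces to the simplified formula for exponential service and gives 5 ms for this load. A constant service time (C = 0) halves the wait to 2.5 ms.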

The M/G/1 queue turns out to be a good model for computer science because many arrival processes turn out to be exponential. Service times, however, may follow any of a number of distributions.

Disk Performance Benchmarks

We use these formulas to predict the performance of storage subsystems. We also need to measure the performance of real systems to:
o Collect the values of parameters needed for prediction.
o Determine if the queuing theory assumptions hold (e.g., to determine if the queueing distribution model used is valid).
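One way to obtain those parameters from a measured trace is sketched below. It assumes the trace provides arrival timestamps and per-request service durations. The function name `estimate_parameters` and the sample numbers are hypothetical and made up for illustration. A coefficient of variation near 1 for the interarrival gaps is consistent with exponential arrivals, although a real check would need far more samples and a proper goodness-of-fit test.

```python
import statistics


def estimate_parameters(arrival_times, service_times):
    """Estimate queuing-model inputs from a measured trace.

    arrival_times: sorted request arrival timestamps in seconds.
    service_times: per-request service durations in seconds.
    """
    gaps = [b - a for a, b in zip(arrival_times, arrival_times[1:])]
    mean_gap = statistics.mean(gaps)
    mean_service = statistics.mean(service_times)
    return {
        "arrival_rate": 1 / mean_gap,
        "time_server": mean_service,
        # Standard deviation / mean; close to 1 for exponential data.
        "c_interarrival": statistics.pstdev(gaps) / mean_gap,
        "c_service": statistics.pstdev(service_times) / mean_service,
    }


# Hypothetical trace, invented for this sketch only.
arrivals = [0.00, 0.05, 0.21, 0.26, 0.48, 0.50, 0.71]
services = [0.018, 0.022, 0.020, 0.025, 0.015, 0.021, 0.019]
params = estimate_parameters(arrivals, services)
print(params)
```

The estimated arrival rate and mean service time can then be fed into the utilization and waiting-time formulas.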

Benchmarks: transaction processing
o The purpose of these benchmarks is to determine how many small (and usually random) requests a system can satisfy in a given period of time.
o This means the benchmark stresses I/O rate (number of disk accesses per second) rather than data rate (bytes of data per second).
o Banks, airlines, and other large customer service organizations are most interested in these systems, as they allow simultaneous updates to little pieces of data from many terminals.

Disk Performance Benchmarks: TPC-A and TPC-B
o These are benchmarks designed by the people who do transaction processing.
o They measure a system's ability to do random updates to small pieces of data on disk.
o As the number of transactions is increased, so must the number of requesters and the size of the account file. These restrictions are imposed to ensure that the benchmark really measures disk I/O. They prevent vendors from adding more main memory as a database cache, artificially inflating TPS rates.

Disk Performance Benchmarks: SPEC system-level file server (SFS)
o This benchmark was designed to evaluate systems running Sun Microsystems network file service, NFS.
o It was synthesized based on measurements of NFS systems to provide a reasonable mix of reads, writes and file operations.
o Similar to TPC-B, SFS scales the size of the file system according to the reported throughput, i.e., it requires that for every 100 NFS operations per second, the size of the disk must be increased by 1 GB. It also limits average response time to 50 ms.

Disk Performance Benchmarks: self-scaling I/O
o This method of I/O benchmarking uses a program that automatically scales several parameters that govern performance.

o Number of unique bytes touched. This parameter governs the total size of the data set. By making the value large, the effects of a cache can be counteracted.
o Percentage of reads.
o Average I/O request size. This is scalable since some systems may work better with large requests, and some with small.
o Percentage of sequential requests. The percentage of requests that sequentially follow (address-wise) the prior request. As with request size, some systems are better at sequential and some are better at random requests.
o Number of processes. This is varied to control concurrent requests, e.g., the number of tasks simultaneously issuing I/O requests.

The benchmark first chooses a nominal value for each of the five parameters (based on the system's performance). It then varies each parameter in turn while holding the others at their nominal value.

Performance can thus be graphed using any of five axes to show the effects of changing parameters on a system's performance.
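The "vary one parameter, hold the others at nominal" procedure can be sketched as a small generator of planned runs. This is only an illustration of the sweep structure. The parameter names, nominal values, and sweep ranges are hypothetical, and the sketch does not perform any I/O or choose nominal values from measured performance as the real benchmark does.

```python
def sweep_configs(nominal, sweeps):
    """Yield (parameter, value, config) with one parameter varied at a time.

    nominal: dict of parameter name -> nominal value.
    sweeps: dict of parameter name -> list of values to try for that parameter.
    """
    for name, values in sweeps.items():
        for value in values:
            config = dict(nominal)  # all other parameters stay at nominal
            config[name] = value
            yield name, value, config


# Hypothetical nominal values and sweep ranges, for illustration only.
nominal = {
    "unique_bytes": 64 * 2**20,
    "read_pct": 50,
    "request_size": 8 * 2**10,
    "sequential_pct": 50,
    "processes": 4,
}
sweeps = {
    "unique_bytes": [2**20, 64 * 2**20, 2**30],
    "read_pct": [0, 50, 100],
    "request_size": [2**10, 8 * 2**10, 64 * 2**10],
    "sequential_pct": [0, 50, 100],
    "processes": [1, 4, 16],
}

runs = list(sweep_configs(nominal, sweeps))
for name, value, config in runs:
    # A real benchmark would run the workload described by `config` here
    # and record its throughput; this sketch only lists the planned runs.
    print(f"vary {name} = {value}: {config}")
```

Each of the five parameters yields one series of runs, which corresponds to one of the five axes along which performance can be graphed.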

You might also like

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Fernandez ArmestoDocument10 pagesFernandez Armestosrodriguezlorenzo3288No ratings yet

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- Us Virgin Island WWWWDocument166 pagesUs Virgin Island WWWWErickvannNo ratings yet

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (894)

- Free Radical TheoryDocument2 pagesFree Radical TheoryMIA ALVAREZNo ratings yet

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Reg FeeDocument1 pageReg FeeSikder MizanNo ratings yet

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Oxford Digital Marketing Programme ProspectusDocument12 pagesOxford Digital Marketing Programme ProspectusLeonard AbellaNo ratings yet

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- Hindustan Motors Case StudyDocument50 pagesHindustan Motors Case Studyashitshekhar100% (4)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Neuropsychological Deficits in Disordered Screen Use Behaviours - A Systematic Review and Meta-AnalysisDocument32 pagesNeuropsychological Deficits in Disordered Screen Use Behaviours - A Systematic Review and Meta-AnalysisBang Pedro HattrickmerchNo ratings yet

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- 2-Port Antenna Frequency Range Dual Polarization HPBW Adjust. Electr. DTDocument5 pages2-Port Antenna Frequency Range Dual Polarization HPBW Adjust. Electr. DTIbrahim JaberNo ratings yet

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- ERP Complete Cycle of ERP From Order To DispatchDocument316 pagesERP Complete Cycle of ERP From Order To DispatchgynxNo ratings yet

- 5511Document29 pages5511Ckaal74No ratings yet

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Moor, The - Nature - Importance - and - Difficulty - of - Machine - EthicsDocument4 pagesMoor, The - Nature - Importance - and - Difficulty - of - Machine - EthicsIrene IturraldeNo ratings yet

- John Titor TIME MACHINEDocument21 pagesJohn Titor TIME MACHINEKevin Carey100% (1)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Evaluating Sources IB Style: Social 20ib Opvl NotesDocument7 pagesEvaluating Sources IB Style: Social 20ib Opvl NotesRobert ZhangNo ratings yet

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Yellowstone Food WebDocument4 pagesYellowstone Food WebAmsyidi AsmidaNo ratings yet

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- MODULE+4+ +Continuous+Probability+Distributions+2022+Document41 pagesMODULE+4+ +Continuous+Probability+Distributions+2022+Hemis ResdNo ratings yet

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Postgraduate Notes in OrthodonticsDocument257 pagesPostgraduate Notes in OrthodonticsSabrina Nitulescu100% (4)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Dole-Oshc Tower Crane Inspection ReportDocument6 pagesDole-Oshc Tower Crane Inspection ReportDaryl HernandezNo ratings yet

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- SCA ALKO Case Study ReportDocument4 pagesSCA ALKO Case Study ReportRavidas KRNo ratings yet

- 4 Wheel ThunderDocument9 pages4 Wheel ThunderOlga Lucia Zapata SavaresseNo ratings yet

- Annamalai International Journal of Business Studies and Research AijbsrDocument2 pagesAnnamalai International Journal of Business Studies and Research AijbsrNisha NishaNo ratings yet

- MID TERM Question Paper SETTLEMENT PLANNING - SEC CDocument1 pageMID TERM Question Paper SETTLEMENT PLANNING - SEC CSHASHWAT GUPTANo ratings yet

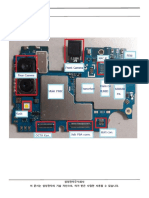

- Level 3 Repair PBA Parts LayoutDocument32 pagesLevel 3 Repair PBA Parts LayoutabivecueNo ratings yet

- Pub - Essentials of Nuclear Medicine Imaging 5th Edition PDFDocument584 pagesPub - Essentials of Nuclear Medicine Imaging 5th Edition PDFNick Lariccia100% (1)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Skuld List of CorrespondentDocument351 pagesSkuld List of CorrespondentKASHANNo ratings yet

- Portfolio Artifact Entry Form - Ostp Standard 3Document1 pagePortfolio Artifact Entry Form - Ostp Standard 3api-253007574No ratings yet

- Iso 9001 CRMDocument6 pagesIso 9001 CRMleovenceNo ratings yet

- Experiences from OJT ImmersionDocument3 pagesExperiences from OJT ImmersionTrisha Camille OrtegaNo ratings yet

- Panel Data Econometrics: Manuel ArellanoDocument5 pagesPanel Data Econometrics: Manuel Arellanoeliasem2014No ratings yet

- Attributes and DialogsDocument29 pagesAttributes and DialogsErdenegombo MunkhbaatarNo ratings yet

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)