International Journal Of Computational Engineering Research (ijceronline.com) Vol. 2 Issue.

A Data Throughput Prediction Using Scheduling And Assignment Technique

M. Rajarajeswari 1, P. R. Kandasamy 2, T. Ravichandran 3

1. Research Scholar, Dept. of Mathematics, Karpagam University, Coimbatore. 2. Professor and Head, Dept. of M.C.A., Hindusthan Institute of Technology, Coimbatore. 3. Principal, Hindusthan Institute of Technology, Coimbatore.

Abstract:

Task computing fills the gap between tasks (what the user wants done) and services (the functionality available to the user), and seeks to redefine how users interact with and use computing environments. A widely distributed many-task computing (MTC) environment aims to bridge the gap between two computing paradigms, high-throughput computing (HTC) and high-performance computing (HPC). In such an environment, data sharing between participating clusters may become a major performance bottleneck. In this project, we present the design and implementation of an application-layer data throughput prediction and optimization service for many-task computing in widely distributed environments, using operations research. The service uses multiple parallel TCP streams, and an assignment model is used to find the stream allocation that maximizes data distribution, which improves the end-to-end throughput of data transfers in the network. A novel mathematical (optimization) model is developed to determine the number of parallel streams required to achieve the best network performance. This model can predict the optimal number of parallel streams from as few as three prediction points (i.e., three switching points). We implement this new service in the Stork Data Scheduler, where the prediction points can be obtained using Iperf and GridFTP sampling. Our results show that, in most cases, the prediction cost plus the optimized transfer time is much less than the non-optimized transfer time. As a result, when Stork evaluates and transfers jobs through the optimization service, with the sampling rate and the number of tasks given as input, the jobs complete much earlier than non-optimized data transfer jobs.

Key words: Optimization, Assignment Technique, Stork Scheduling, Data Throughput.

Modules:

1) Construction of Grid Computing Architecture.
2) Applying Optimization Service.
3) Integration with Stork Data Scheduler.
4) Applying Quantity Control of Sampling Data.
5) Performance Comparison.

Existing System:

TCP is the most widely adopted transport protocol, but it has a major bottleneck. Different implementation techniques, at both the transport and application layers, have therefore been developed to overcome the inefficient network utilization of TCP. At the transport layer, different variations of TCP have been implemented to utilize high-speed networks more efficiently. At the application layer, other improvements are proposed on top of regular TCP, such as opening multiple parallel streams or tuning the buffer size. Parallel TCP streams achieve high network throughput by behaving like a single giant stream, which is the combination of n streams, and by getting an unfair share of the available bandwidth.

Disadvantage of System:

In a widely distributed many-task computing environment, data communication between participating clusters may become a major performance bottleneck. TCP is unable to fully utilize the available network bandwidth. This becomes a major bottleneck especially in wide-area high-speed networks, where both the bandwidth and the delay are large, which in turn results in a long delay before the bandwidth is fully saturated. The result is inefficient network utilization.
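The delay before a single TCP stream saturates a long, fast path can be illustrated with a short worked calculation. This is a minimal sketch under idealized assumptions that are not taken from the paper: the window doubles once per round trip with no loss, the segment size is 1460 bytes, the initial window is 10 segments, and the link figures (10 Gbit/s, 100 ms) are hypothetical.

```python
import math


def bandwidth_delay_product(bandwidth_bps: float, rtt_s: float) -> float:
    """Bytes that must be in flight to keep the path full."""
    return bandwidth_bps * rtt_s / 8


def doubling_rounds(target_bytes: float, mss_bytes: int = 1460,
                    initial_segments: int = 10) -> int:
    """Round trips of window doubling until the window covers target_bytes.

    Idealized: ignores loss, congestion events and receiver limits.
    """
    window = mss_bytes * initial_segments
    if window >= target_bytes:
        return 0
    return math.ceil(math.log2(target_bytes / window))


# Hypothetical wide-area link: 10 Gbit/s with a 100 ms round-trip time.
bdp = bandwidth_delay_product(10e9, 0.1)
single_stream_rounds = doubling_rounds(bdp)
# With 8 parallel streams, each stream only has to cover 1/8 of the pipe.
per_stream_rounds = doubling_rounds(bdp / 8)

print(f"BDP: {bdp / 1e6:.0f} MB")
print(f"rounds for 1 stream: {single_stream_rounds}")
print(f"rounds per stream with 8 streams: {per_stream_rounds}")
```

Under these assumptions a single stream has to grow its window to about 125 MB, while each of eight parallel streams needs only one eighth of that, which is one way to see why parallel streams fill the path sooner.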

Issn 2250-3005(online)

September| 2012

Page 1306

International Journal Of Computational Engineering Research (ijceronline.com) Vol. 2 Issue. 5

Proposed System:

We present the design and implementation of a service that provides the user with the optimal number of parallel TCP streams, together with the estimated time and throughput for a specific data transfer. A novel mathematical (optimization) model is developed to determine the number of parallel streams required to achieve the best network performance. This model can predict the optimal number of parallel streams from as few as three prediction points (i.e., three switching points). We implement this new service in the Stork Data Scheduler, where the prediction points can be obtained using Iperf and GridFTP sampling.

Advantage of System:

The advantages lie in the prediction models, the quantity control of sampling, and the algorithms applied using the mathematical models. We have improved an existing prediction model by using three prediction points and adopting either a full second-order equation or an equation whose order is determined dynamically. We have designed an exponentially increasing sampling strategy to obtain the data pairs for prediction. The algorithm that instantiates the throughput function with respect to the number of parallel streams avoids the ineffectiveness of the prediction models caused by unexpected sampling data pairs. We propose a solution that satisfies both the time limitation and the accuracy requirements: our approach doubles the number of parallel streams in every iteration of sampling and observes the corresponding throughput.
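The sampling and prediction steps can be sketched in Python as follows. The paper does not print its equation, so this sketch assumes a throughput curve of the form Th(n) = n / sqrt(a*n^2 + b*n + c), a second-order form in the number of streams n; that form, the function names, the choice of the first, middle and last samples as the three prediction points, and the synthetic `measure` function are all assumptions made for illustration. The stopping rules follow the ones given in the Mathematical Model section: stop when the slope between successive samples falls below a threshold, or when throughput decreases.

```python
from math import sqrt
from typing import Callable, List, Tuple


def sample_throughput(measure: Callable[[int], float],
                      slope_threshold: float,
                      max_streams: int = 64) -> List[Tuple[int, float]]:
    """Double the stream count each iteration until the gain flattens or drops."""
    samples = [(1, measure(1))]
    n = 2
    while n <= max_streams:
        th = measure(n)
        prev_n, prev_th = samples[-1]
        samples.append((n, th))
        if th < prev_th:                                   # throughput decreased
            break
        if (th - prev_th) / (n - prev_n) < slope_threshold:  # curve has flattened
            break
        n *= 2
    return samples


def fit_second_order(points: List[Tuple[int, float]]) -> Tuple[float, float, float]:
    """Solve n^2 / Th^2 = a*n^2 + b*n + c for (a, b, c) from three (n, Th) points."""
    rows = [[float(n * n), float(n), 1.0, (n / th) ** 2] for n, th in points]
    for col in range(3):                                   # Gauss-Jordan elimination
        pivot = max(range(col, 3), key=lambda r: abs(rows[r][col]))
        rows[col], rows[pivot] = rows[pivot], rows[col]
        for r in range(3):
            if r != col:
                factor = rows[r][col] / rows[col][col]
                rows[r] = [x - factor * y for x, y in zip(rows[r], rows[col])]
    a, b, c = (rows[i][3] / rows[i][i] for i in range(3))
    return a, b, c


def optimal_streams(b: float, c: float) -> float:
    """Stationary point of Th(n), n = -2c/b (a maximum when b < 0 and c > 0)."""
    return -2.0 * c / b


# Synthetic stand-in for an Iperf or GridFTP measurement (hypothetical values).
def measure(n: int) -> float:
    return n / sqrt(0.25 * n * n - 2.0 * n + 16.0)


samples = sample_throughput(measure, slope_threshold=0.05)
points = [samples[0], samples[len(samples) // 2], samples[-1]]
a, b, c = fit_second_order(points)
best = optimal_streams(b, c)
print([n for n, _ in samples], round(best, 3))
```

The stationary point follows from differentiating the assumed curve: the numerator of dTh/dn reduces to b*n/2 + c, which is zero at n = -2c/b. With real measurements the fitted curve only approximates the data, so the predicted value would normally be rounded and clamped to a sensible range.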

Implementation module:

In this project we have implemented the optimization service based on both Iperf and GridFTP. The design covers two scenarios, one for the GridFTP version and one for the Iperf version of the service. For the GridFTP version, the two sites between which data is transferred run GridFTP servers. For the Iperf version, these hosts run Iperf servers as well as a small remote module (TranServer) that makes requests to Iperf. The optimization server is the orchestrator host, designated to perform the optimization of TCP parameters and to store the resulting data. It also has to be recognized by the sites, since it performs the third-party sampling of throughput data. User/Client represents the host that sends the optimization request to the server. All of these hosts are connected via LAN.

When a user wants to transfer data between two sites, the user first sends a request consisting of the source and destination addresses, the file size, and an optional buffer size parameter to the optimization server, which processes the request and responds with the optimal number of parallel streams for the transfer. The buffer size parameter is optional; it is passed to the GridFTP protocol to set the buffer size to a value different from the system buffer size. At the same time, the optimization server estimates the optimal throughput that can be achieved and the time needed to finish the specified transfer between the two sites. This information is then returned to the User/Client that made the request.

Stork is a batch scheduler specialized in data placement and movement. In this implementation, Stork is extended to support both estimation and optimization tasks. A task is categorized as an estimation task if only the estimated information about the specific data movement is reported, without the actual transfer. A task is categorized as an optimization task if the specific data movement is performed according to the optimized estimation results.
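The request and response described above can be pictured as two small records. This is a minimal sketch, not the service's actual interface: the class names, field names, units and the example URLs are hypothetical, and the estimated time is simply the file size divided by the predicted throughput.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TransferRequest:
    source: str                               # source address
    destination: str                          # destination address
    file_size_bytes: int
    buffer_size_bytes: Optional[int] = None   # optional, overrides the system buffer size
    estimate_only: bool = False               # True: estimation task, False: optimization task


@dataclass
class TransferEstimate:
    parallel_streams: int     # optimal number of parallel streams
    throughput_mbps: float    # estimated throughput at that stream count
    transfer_time_s: float    # estimated time to finish the transfer


def build_estimate(request: TransferRequest, parallel_streams: int,
                   throughput_mbps: float) -> TransferEstimate:
    """Derive the estimated transfer time from file size and predicted throughput."""
    bits = request.file_size_bytes * 8
    seconds = bits / (throughput_mbps * 1e6)
    return TransferEstimate(parallel_streams, throughput_mbps, seconds)


# Hypothetical request: a 1 GB file, predicted 800 Mbit/s with 8 streams.
request = TransferRequest(
    source="gsiftp://site-a.example.org/data/input.txt",
    destination="gsiftp://site-b.example.org/data/input.txt",
    file_size_bytes=1_000_000_000,
    estimate_only=True,
)
estimate = build_estimate(request, parallel_streams=8, throughput_mbps=800.0)
print(estimate)
```

For an estimation task only the `TransferEstimate` would be returned; for an optimization task the same values would drive the actual transfer.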

Mathematical Model :

A novel mathematical (optimization) model is developed to determine the number of parallel streams required to achieve the best network performance. This model can predict the optimal number of parallel streams from as few as three prediction points (i.e., three switching points). We propose a solution that satisfies both the time limitation and the accuracy requirements. Our approach doubles the number of parallel streams in every iteration of sampling and observes the corresponding throughput. While the throughput is increasing, sampling stops if the slope of the curve between successive iterations falls below a threshold. The other stopping condition is that the throughput decreases compared to the previous iteration before that threshold is reached.

Assignment Problem:

Consider a matrix with n rows and n columns, in which the rows represent grids (gridlets) and the columns represent jobs, for example:


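| | Job 1 | Job 2 | ... | Job n |
|---|---|---|---|---|
| Grid 1 | Task 1 | Task 2 | ... | Task n |
| Grid 2 | Task 1 | Task 2 | ... | Task n |
| ... | ... | ... | ... | ... |
| Grid n | Task 1 | Task 2 | ... | Task n |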

Each grid may receive more than one job, so an assignment problem arises. We use a new mathematical model to solve it. Three cases occur.

1) Find the minimum value of each row and subtract it from every task value in that row, so that the least value becomes zero. If the zeros of the processed matrix then fall along a diagonal, the process stops and the jobs are assigned successfully.

| | Job 1 | Job 2 | Job n |
|---|---|---|---|
| Grid 1 | 10 | 3 | 0 |
| Grid 2 | 6 | 0 | 7 |
| ... | ... | ... | ... |
| Grid n | 0 | 6 | 7 |
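The row-reduction step of case 1 can be sketched in Python as follows. Row reduction is also the first step of the classical Hungarian method for the assignment problem; the paper describes only the reduction steps, so nothing beyond them is shown. The cost values are hypothetical, chosen so that row reduction gives the reduced values listed for case 1 when jobs are read as columns, and the function names are illustrative. The zero check is a brute-force search that is only practical for small matrices.

```python
from itertools import permutations
from typing import List, Optional


def reduce_rows(matrix: List[List[int]]) -> List[List[int]]:
    """Subtract each row's minimum from every entry of that row."""
    return [[value - min(row) for value in row] for row in matrix]


def zero_assignment(matrix: List[List[int]]) -> Optional[List[int]]:
    """Return one column per row, all distinct and all on zero entries, or None."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    for cols in permutations(range(n_cols), n_rows):
        if all(matrix[r][c] == 0 for r, c in enumerate(cols)):
            return list(cols)
    return None


# Hypothetical task costs: rows are grids, columns are jobs.
costs = [
    [12, 5, 2],
    [9, 3, 10],
    [4, 10, 11],
]
reduced = reduce_rows(costs)
assignment = zero_assignment(reduced)
print(reduced)      # every row now contains at least one zero
print(assignment)   # column (job) index chosen for each row (grid)
```

Here the zeros of the reduced matrix already sit in distinct columns, so every grid receives its own job after row reduction alone.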

2) Find the minimum value of each row and subtract it from every task value in that row, so that the least value becomes zero. If the zeros line up column-wise instead of diagonally, find the minimum value of each column and subtract it from every value in that column, so that the least value becomes zero. If the zeros of the processed matrix then fall along a diagonal, the process stops and the jobs are assigned successfully.

| | Job 1 | Job 2 | Job n |
|---|---|---|---|
| Grid 1 | 10 | 0 | 3 |
| Grid 2 | 6 | 0 | 7 |
| ... | ... | ... | ... |
| Grid n | 6 | 0 | 7 |
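The column-reduction step of case 2 can be sketched the same way. The matrix below uses the case 2 values read with jobs as columns, which is an assumption about the original layout; after row reduction all of its zeros lie in the Job 2 column, so no assignment is possible until the columns are reduced as well. Function names are illustrative and the zero check is again a brute-force search suitable only for small matrices.

```python
from itertools import permutations
from typing import List, Optional


def reduce_columns(matrix: List[List[int]]) -> List[List[int]]:
    """Subtract each column's minimum from every entry of that column."""
    col_mins = [min(col) for col in zip(*matrix)]
    return [[value - col_mins[c] for c, value in enumerate(row)] for row in matrix]


def zero_assignment(matrix: List[List[int]]) -> Optional[List[int]]:
    """Return one column per row, all distinct and all on zero entries, or None."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    for cols in permutations(range(n_cols), n_rows):
        if all(matrix[r][c] == 0 for r, c in enumerate(cols)):
            return list(cols)
    return None


# Row-reduced matrix of case 2: rows are grids, columns are Job 1, Job 2, Job n.
row_reduced = [
    [10, 0, 3],
    [6, 0, 7],
    [6, 0, 7],
]
before = zero_assignment(row_reduced)        # zeros share one column, so no assignment
col_reduced = reduce_columns(row_reduced)
after = zero_assignment(col_reduced)
print(before)
print(col_reduced)
print(after)
```

After column reduction the zeros spread across the columns and each grid can be given a distinct job.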

3) Find the minimum value of each row and subtract it from every task value in that row, so that the least value becomes zero. If the zeros line up column-wise instead of diagonally, find the minimum value of each column and subtract it from every value in that column, so that the least value becomes zero. If the zeros of the processed matrix then fall along a diagonal, the process stops and that job is discarded.


Job 1 Grid 1 Grid 2 . Grid n 10 6 6

Job 2 0 0 0

. . . . . 4 . 7 . . 7

Job n

3 7 7

Job 1 Grid 1 Grid 2 . Grid n

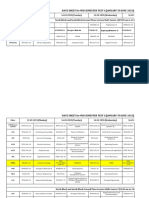

Experimental results:

Test Scenario Applying Optimization Service Pre-Condition Client send request to EOS server then that request will be transfer to Gridftp server If More than one Client make request to EOS server

Job 2 0 0 6

Job n

10 6 0

Test Case Check the client request will be Valid request or not(only txt format file can be download/Estimate from Gridftp server ) Stork data scheduler make schedule for the incoming request exceed than one request/ checking the is Estimation or optimization Of service

Expected Output Client Requested will be response to client with some information (number of stream taken to transfer the file and throughput achieve at the time of transfer file) Priority will be assigned to each user request(first come first process) / if estimation request will be give most first priority

Actual Output File received successfully

Result Pass

Integration with Stork Data Scheduler

According to priority the request process in Gridftp server and response given to client

Pass
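The priority rule in the second test scenario (estimation requests first, otherwise first come, first processed) can be sketched with a small priority queue. This is an illustrative stand-in and not Stork's actual scheduling interface: the `RequestQueue` class, its methods and the client names are hypothetical.

```python
import heapq
from itertools import count
from typing import List, Tuple


class RequestQueue:
    """Orders requests by kind (estimation first), then by arrival order."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str, str]] = []
        self._arrival = count()   # increasing sequence number breaks ties FIFO

    def submit(self, client: str, kind: str) -> None:
        rank = 0 if kind == "estimation" else 1
        heapq.heappush(self._heap, (rank, next(self._arrival), client, kind))

    def next_request(self) -> Tuple[str, str]:
        _rank, _seq, client, kind = heapq.heappop(self._heap)
        return client, kind

    def __len__(self) -> int:
        return len(self._heap)


queue = RequestQueue()
queue.submit("client-A", "optimization")
queue.submit("client-B", "estimation")
queue.submit("client-C", "optimization")
queue.submit("client-D", "estimation")

order = []
while len(queue):
    order.append(queue.next_request()[0])
print(order)
```

Estimation requests return quickly because no data is moved, which is one plausible reason for serving them ahead of full optimization transfers.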

Performance Comparison


Conclusion:

This study describes the design and implementation of a network throughput prediction and optimization service for many-task computing in widely distributed environments. This involves the selection of prediction models and mathematical models. We have improved an existing prediction model by using three prediction points and adopting either a full second-order equation or an equation whose order is determined dynamically. We have designed an exponentially increasing sampling strategy to obtain the data pairs for prediction. We implement this new service in the Stork Data Scheduler, where the prediction points can be obtained using Iperf and GridFTP sampling. The experimental results justify our improved models as well as the algorithms applied in the implementation. When used within the Stork Data Scheduler, the optimization service significantly decreases the total transfer time for a large number of data transfer jobs submitted to the scheduler, compared to non-optimized Stork transfers.


Issn 2250-3005(online)

September| 2012

Page 1310

You might also like

- Implementation of Dynamic Level Scheduling Algorithm Using Genetic OperatorsDocument5 pagesImplementation of Dynamic Level Scheduling Algorithm Using Genetic OperatorsInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Very High-Level Synthesis of Datapath and Control Structures For Reconfigurable Logic DevicesDocument5 pagesVery High-Level Synthesis of Datapath and Control Structures For Reconfigurable Logic Devices8148593856No ratings yet

- GA and TS Optimization of ATM NetworksDocument5 pagesGA and TS Optimization of ATM NetworksPrateek BahetiNo ratings yet

- Algorithms for Online Travelling Salesman ProblemsDocument30 pagesAlgorithms for Online Travelling Salesman ProblemsmichelangeloNo ratings yet

- Clustering Clickstream Data with a Three-Phase FrameworkDocument11 pagesClustering Clickstream Data with a Three-Phase FrameworkPPMPhilNo ratings yet

- A MPI Parallel Algorithm For The Maximum Flow ProblemDocument4 pagesA MPI Parallel Algorithm For The Maximum Flow ProblemGRUNDOPUNKNo ratings yet

- Simulating Performance of Parallel Database SystemsDocument6 pagesSimulating Performance of Parallel Database SystemsKhacNam NguyễnNo ratings yet

- The Performance of A Selection of Sorting Algorithms On A General Purpose Parallel ComputerDocument18 pagesThe Performance of A Selection of Sorting Algorithms On A General Purpose Parallel Computerron potterNo ratings yet

- Final Report: Delft University of Technology, EWI IN4342 Embedded Systems LaboratoryDocument24 pagesFinal Report: Delft University of Technology, EWI IN4342 Embedded Systems LaboratoryBurlyaev DmitryNo ratings yet

- TCSOM Clustering Transactions Using Self-Organizing MapDocument13 pagesTCSOM Clustering Transactions Using Self-Organizing MapAxo ZhangNo ratings yet

- The Data Locality of Work Stealing: Theory of Computing SystemsDocument27 pagesThe Data Locality of Work Stealing: Theory of Computing SystemsAnonymous RrGVQjNo ratings yet

- Techniques For Simulation of Realistic Infrastructure Wireless Network TrafficDocument10 pagesTechniques For Simulation of Realistic Infrastructure Wireless Network TrafficA.K.M.TOUHIDUR RAHMANNo ratings yet

- Optimal Circuits For Parallel Multipliers: Paul F. Stelling,,, Charles U. Martel, Vojin G. Oklobdzija,,, and R. RaviDocument13 pagesOptimal Circuits For Parallel Multipliers: Paul F. Stelling,,, Charles U. Martel, Vojin G. Oklobdzija,,, and R. RaviMathew GeorgeNo ratings yet

- Real-Time Calculus Scheduling AlgorithmDocument4 pagesReal-Time Calculus Scheduling AlgorithmChrisLaiNo ratings yet

- Requirements For Clustering Data Streams: Dbarbara@gmu - EduDocument5 pagesRequirements For Clustering Data Streams: Dbarbara@gmu - EduHolisson SoaresNo ratings yet

- Scalability of Parallel Algorithms For MDocument26 pagesScalability of Parallel Algorithms For MIsmael Abdulkarim AliNo ratings yet

- A Processor Mapping Strategy For Processor Utilization in A Heterogeneous Distributed SystemDocument8 pagesA Processor Mapping Strategy For Processor Utilization in A Heterogeneous Distributed SystemJournal of ComputingNo ratings yet

- A Hybrid Algorithm For Grid Task Scheduling ProblemDocument5 pagesA Hybrid Algorithm For Grid Task Scheduling ProblemInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Dmatm: Dual Modified Adaptive Technique Based MultiplierDocument6 pagesDmatm: Dual Modified Adaptive Technique Based MultiplierTJPRC PublicationsNo ratings yet

- Ijait 020602Document12 pagesIjait 020602ijaitjournalNo ratings yet

- Ambimorphic, Highly-Available Algorithms For 802.11B: Mous and AnonDocument7 pagesAmbimorphic, Highly-Available Algorithms For 802.11B: Mous and Anonmdp anonNo ratings yet

- Analysis of Performance Evaluation Techniques for Wireless Sensor NetworksDocument12 pagesAnalysis of Performance Evaluation Techniques for Wireless Sensor Networksenaam1977No ratings yet

- AutoScaling (ResearchPPT)Document34 pagesAutoScaling (ResearchPPT)Naveen JaiswalNo ratings yet

- Modeling of A Queuing System Based On Cen Network in GPSS World Software EnvironmentDocument5 pagesModeling of A Queuing System Based On Cen Network in GPSS World Software EnvironmentAlex HropatyiNo ratings yet

- WriteupDocument5 pagesWriteupmaria kNo ratings yet

- Vlsi Handbook ChapDocument34 pagesVlsi Handbook ChapJnaneswar BhoomannaNo ratings yet

- JaJa Parallel - Algorithms IntroDocument45 pagesJaJa Parallel - Algorithms IntroHernán Misæl50% (2)

- An Overview of Cell-Level ATM Network SimulationDocument13 pagesAn Overview of Cell-Level ATM Network SimulationSandeep Singh TomarNo ratings yet

- Student Member, Ieee, Ô Ômember, IeeeDocument3 pagesStudent Member, Ieee, Ô Ômember, IeeeKannan VijayNo ratings yet

- Data Mining Project 11Document18 pagesData Mining Project 11Abraham ZelekeNo ratings yet

- Simulation of Computer Network With Switch and Packet ReservationDocument6 pagesSimulation of Computer Network With Switch and Packet ReservationArthee PandiNo ratings yet

- Performance PDFDocument109 pagesPerformance PDFRafa SoriaNo ratings yet

- Weighting Techniques in Data Compression Theory and AlgoritmsDocument187 pagesWeighting Techniques in Data Compression Theory and AlgoritmsPhuong Minh NguyenNo ratings yet

- Concurrent Multipath Transfer Using SCTP MultihomingDocument9 pagesConcurrent Multipath Transfer Using SCTP MultihomingHrushikesh MohapatraNo ratings yet

- DSEP 01 Regpaper 2Document10 pagesDSEP 01 Regpaper 2Gonzalo Martinez RamirezNo ratings yet

- Multithreaded Algorithms For The Fast Fourier TransformDocument10 pagesMultithreaded Algorithms For The Fast Fourier Transformiv727No ratings yet

- 18bce0537 VL2020210104308 Pe003Document31 pages18bce0537 VL2020210104308 Pe003CHALASANI AKHIL CHOWDARY 19BCE2390No ratings yet

- 8-bit Microprocessor for Content MatchingDocument7 pages8-bit Microprocessor for Content MatchinggiangNo ratings yet

- Parallel Ray Tracing Using Mpi and Openmp: January 2008Document18 pagesParallel Ray Tracing Using Mpi and Openmp: January 2008Chandan SinghNo ratings yet

- Grid ComputingDocument10 pagesGrid ComputingNaveen KumarNo ratings yet

- PDCDocument14 pagesPDCzapperfatterNo ratings yet

- A Survey of Network Simulation Tools: Current Status and Future DevelopmentsDocument6 pagesA Survey of Network Simulation Tools: Current Status and Future DevelopmentsSebastián Ignacio Lorca DelgadoNo ratings yet

- Computer Generation of Streaming Sorting NetworksDocument9 pagesComputer Generation of Streaming Sorting Networksdang2327No ratings yet

- Distributed CountingDocument10 pagesDistributed CountingMandeep GillNo ratings yet

- An Efficient K-Means Clustering AlgorithmDocument6 pagesAn Efficient K-Means Clustering Algorithmgeorge_43No ratings yet

- Implementing Matrix Multiplication On An Mpi Cluster of WorkstationsDocument9 pagesImplementing Matrix Multiplication On An Mpi Cluster of WorkstationsDr-Firoz AliNo ratings yet

- Optimized Ant Colony System (Oacs) For Effective Load Balancing in Cloud ComputingDocument6 pagesOptimized Ant Colony System (Oacs) For Effective Load Balancing in Cloud ComputingInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Computer Network Performance Evaluation Based On Different Data Packet Size Using Omnet++ Simulation EnvironmentDocument5 pagesComputer Network Performance Evaluation Based On Different Data Packet Size Using Omnet++ Simulation Environmentmetaphysics18No ratings yet

- Explain Different Distributed Computing Model With DetailDocument25 pagesExplain Different Distributed Computing Model With DetailSushantNo ratings yet

- A Multiway Partitioning Algorithm For Parallel Gate Level Verilog SimulationDocument8 pagesA Multiway Partitioning Algorithm For Parallel Gate Level Verilog SimulationVivek GowdaNo ratings yet

- Simulation of Dynamic Grid Replication Strategies in OptorsimDocument15 pagesSimulation of Dynamic Grid Replication Strategies in OptorsimmcemceNo ratings yet

- Quick SortDocument5 pagesQuick SortdmaheshkNo ratings yet

- Parallel Performance Study of Monte Carlo Photon Transport Code On Shared-, Distributed-, and Distributed-Shared-Memory ArchitecturesDocument7 pagesParallel Performance Study of Monte Carlo Photon Transport Code On Shared-, Distributed-, and Distributed-Shared-Memory Architecturesvermashanu89No ratings yet

- Dynamic Approach To K-Means Clustering Algorithm-2Document16 pagesDynamic Approach To K-Means Clustering Algorithm-2IAEME PublicationNo ratings yet

- AbstractsDocument3 pagesAbstractsManju ScmNo ratings yet

- A SOM Based Approach For Visualization of GSM Network Performance DataDocument10 pagesA SOM Based Approach For Visualization of GSM Network Performance Datavatsalshah24No ratings yet

- V Models of Parallel Computers V. Models of Parallel Computers - After PRAM and Early ModelsDocument35 pagesV Models of Parallel Computers V. Models of Parallel Computers - After PRAM and Early Modelstt_aljobory3911No ratings yet

- A Synthesisable Quasi-Delay Insensitive Result Forwarding Unit For An Asynchronous ProcessorDocument8 pagesA Synthesisable Quasi-Delay Insensitive Result Forwarding Unit For An Asynchronous ProcessorHoogahNo ratings yet

- Building Wireless Sensor Networks: Application to Routing and Data DiffusionFrom EverandBuilding Wireless Sensor Networks: Application to Routing and Data DiffusionNo ratings yet

- Analysis of Metamaterial Based Microstrip Array AntennaDocument2 pagesAnalysis of Metamaterial Based Microstrip Array AntennaInternational Journal of computational Engineering research (IJCER)No ratings yet

- The Effect of Bottom Sediment Transport On Wave Set-UpDocument10 pagesThe Effect of Bottom Sediment Transport On Wave Set-UpInternational Journal of computational Engineering research (IJCER)No ratings yet

- Parametric Study On Analysis and Design of Permanently Anchored Secant Pile Wall For Earthquake LoadingDocument16 pagesParametric Study On Analysis and Design of Permanently Anchored Secant Pile Wall For Earthquake LoadingInternational Journal of computational Engineering research (IJCER)No ratings yet

- Parametric Study On Analysis and Design of Permanently Anchored Secant Pile Wall For Earthquake LoadingDocument16 pagesParametric Study On Analysis and Design of Permanently Anchored Secant Pile Wall For Earthquake LoadingInternational Journal of computational Engineering research (IJCER)No ratings yet

- An Analysis of The Implementation of Work Safety System in Underpass Development Projects of The Intersection of Mandai Makassar-IndonesiaDocument5 pagesAn Analysis of The Implementation of Work Safety System in Underpass Development Projects of The Intersection of Mandai Makassar-IndonesiaInternational Journal of computational Engineering research (IJCER)No ratings yet

- The Influence of Price Offers For Procurement of Goods and Services On The Quality of Road ConstructionsDocument7 pagesThe Influence of Price Offers For Procurement of Goods and Services On The Quality of Road ConstructionsInternational Journal of computational Engineering research (IJCER)No ratings yet

- Stiffness Analysis of Two Wheeler Tyre Using Air, Nitrogen and Argon As Inflating FluidsDocument8 pagesStiffness Analysis of Two Wheeler Tyre Using Air, Nitrogen and Argon As Inflating FluidsInternational Journal of computational Engineering research (IJCER)No ratings yet

- Wave-Current Interaction Model On An Exponential ProfileDocument10 pagesWave-Current Interaction Model On An Exponential ProfileInternational Journal of computational Engineering research (IJCER)No ratings yet

- Analysis of The Pedestrian System in Jayapura City (A Case Study of Pedestrian Line On Percetakan StreetDocument9 pagesAnalysis of The Pedestrian System in Jayapura City (A Case Study of Pedestrian Line On Percetakan StreetInternational Journal of computational Engineering research (IJCER)No ratings yet

- An Analysis of The Noise Level at The Residential Area As The Impact of Flight Operations at The International Airport of Sultan Hasanuddin Maros in South Sulawesi ProvinceDocument4 pagesAn Analysis of The Noise Level at The Residential Area As The Impact of Flight Operations at The International Airport of Sultan Hasanuddin Maros in South Sulawesi ProvinceInternational Journal of computational Engineering research (IJCER)No ratings yet

- The Influence of Price Offers For Procurement of Goods and Services On The Quality of Road ConstructionsDocument7 pagesThe Influence of Price Offers For Procurement of Goods and Services On The Quality of Road ConstructionsInternational Journal of computational Engineering research (IJCER)No ratings yet

- A Real Time Abandoned Object Detection and Addressing Using IoTDocument5 pagesA Real Time Abandoned Object Detection and Addressing Using IoTInternational Journal of computational Engineering research (IJCER)No ratings yet

- Drag Optimization of Bluff Bodies Using CFD For Aerodynamic ApplicationsDocument8 pagesDrag Optimization of Bluff Bodies Using CFD For Aerodynamic ApplicationsInternational Journal of computational Engineering research (IJCER)No ratings yet

- A Real Time Abandoned Object Detection and Addressing Using IoTDocument5 pagesA Real Time Abandoned Object Detection and Addressing Using IoTInternational Journal of computational Engineering research (IJCER)No ratings yet

- Effect of The Nipah Mall Development On The Performance Roads of Urip Sumohardjo in Makassar CityDocument5 pagesEffect of The Nipah Mall Development On The Performance Roads of Urip Sumohardjo in Makassar CityInternational Journal of computational Engineering research (IJCER)No ratings yet

- Modeling The Frictional Effect On The Rip Current On A Linear Depth ProfileDocument6 pagesModeling The Frictional Effect On The Rip Current On A Linear Depth ProfileInternational Journal of computational Engineering research (IJCER)No ratings yet

- Multi-Response Optimization of WEDM Process Parameters of Monel 400 Using Integrated RSM and GADocument8 pagesMulti-Response Optimization of WEDM Process Parameters of Monel 400 Using Integrated RSM and GAInternational Journal of computational Engineering research (IJCER)No ratings yet

- Comparison of Different Evapotranspiration Estimation Techniques For Mohanpur, Nadia District, West BengalDocument7 pagesComparison of Different Evapotranspiration Estimation Techniques For Mohanpur, Nadia District, West BengalInternational Journal of computational Engineering research (IJCER)No ratings yet
