
*E-mail: aworku@gibbinternational.com

Journal of EEA, Vol. 28, 2011

RECENT DEVELOPMENTS IN THE DEFINITION OF DESIGN EARTHQUAKE GROUND MOTIONS CALLING FOR A REVISION OF THE CURRENT ETHIOPIAN SEISMIC CODE - EBCS 8: 1995

Asrat Worku*

Department of Civil Engineering

Addis Ababa Institute of Technology, Addis Ababa University

ABSTRACT

Recent developments in the definition of design ground motions for the seismic analysis of structures are presented. A summary of the results of empirical and analytical site-effect studies is provided, and recent findings from empirical studies on instrumental records are compared against similar results from earlier studies.

Pertinent changes introduced in recent editions of international codes in response to this evidence are presented. Relevant provisions of EBCS 8: 1995 are compared with those in contemporary American, European and South African codes.

The paper presents compelling evidence that the amplification potential of site soils can, in general, be significantly larger at sites of low-amplitude rock-surface acceleration, up to 0.1g, than at sites of larger accelerations.

Noting the practical significance of this fact for the seismic design of structures in low to moderate seismic regions, to which many cities and towns of Ethiopia belong, changes to selected provisions of the local code are proposed.

KEY WORDS: Earthquake ground motion, return period, response spectra, seismic hazard, site amplification.

INTRODUCTION

The latest edition of the Ethiopian standard code for building design was issued in 1995 by the Ministry of Works and Urban Development. This document, known as the Ethiopian Building Code Standard (EBCS), has a separate volume, EBCS 8, specifically dedicated to the design and construction of buildings in seismic regions [1].

EBCS 8 covers a wide range of issues, from basic definitions to detailed requirements. The document stands out as an important reference whose purpose is to ensure the safety of human lives and to limit damage to buildings during earthquakes. It is widely referred to by design engineers not only in Ethiopia but also in the wider seismic-prone region of East Africa.

Nevertheless, as rightly stated in its Foreword, such standards are technical documents that require periodic updating through the incorporation of new knowledge and practice as they emerge. This is especially true of the seismic design of structures, for the obvious reason that the discipline is still growing and is refined as further data are acquired from new earthquakes.

EBCS 8 has been in use for the past 16 years without being updated. Meanwhile, a number of devastating earthquakes have struck many places around the world. In the past decade alone, several earthquakes of magnitudes up to 7.3 on the Richter scale have surprised Africa, a continent once regarded as an earthquake-free zone. Owing to the enlarged database, knowledge of earthquakes and their effects on human life has improved tremendously. As a result, the requirements of many design codes have been significantly refined: some basic provisions of older editions have been discarded, existing design approaches have been modified, and new ones have been introduced.

This paper addresses the basic issue of the definition of design ground motion in EBCS 8 vis-à-vis that in recent editions of selected major international codes. Two major aspects of design ground motion are dealt with: seismic-hazard definition and consideration of site effects. The documents selected for comparison include the post-1994 editions of the National Earthquake Hazard Reduction Program (NEHRP) of the USA [2-6], the 1994 and 2004 editions of the European Norm [7-9] and the 2010 edition of the South African National Standard [10,11].


The paper starts by briefly reviewing the historical development of empirical studies of ground-motion records, with emphasis on site-soil effects [12,13]. Obvious differences between the results of studies before and after the 1989 Loma Prieta earthquake are summarized [12-23], supplemented by basic theoretical evidence [16,17,24]. Developments in pertinent provisions of recent seismic codes are then summarized. Specifically, new definitions and methods of characterizing site soils are introduced, and the significantly improved amplification factors incorporated in contemporary seismic codes are presented [2-11].

Basic design spectra of EBCS 8 for different site-soil conditions are compared with the corresponding spectra specified by the selected codes [1-4,7,8,10,25]. It is demonstrated that the EBCS 8 spectra fail to ensure adequate safety for the majority of common buildings, ranging from multi-story residential houses through condominiums and school buildings to multi-purpose buildings with fundamental periods up to around 1 second, especially when the structures are founded on softer formations.

Moreover, it is pointed out that the 100-year return period adopted by EBCS 8 to define design ground motions is incompatible with the 475-year return period accepted worldwide and can significantly compromise safety [1,26]. This leads to the recommendation that the appropriate provisions of EBCS 8 be revised.
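The gap between a 100-year and a 475-year return period can be made concrete with a short calculation. The sketch below assumes that earthquake occurrences follow a Poisson (memoryless) process, the usual convention behind the correspondence between "10% in 50 years" and a 475-year return period; the function names are illustrative only.

```python
import math


def return_period(p_exceed: float, exposure_years: float) -> float:
    """Return period (years) giving probability p_exceed of at least one
    exceedance within exposure_years, assuming Poisson occurrence."""
    return -exposure_years / math.log(1.0 - p_exceed)


def exceedance_probability(return_period_years: float, exposure_years: float) -> float:
    """Probability of at least one exceedance within exposure_years."""
    return 1.0 - math.exp(-exposure_years / return_period_years)


if __name__ == "__main__":
    print(f"10% in 50 years -> {return_period(0.10, 50):.0f}-year return period")
    print(f"2% in 50 years  -> {return_period(0.02, 50):.0f}-year return period")
    print(f"100-year motion, 50-year life -> "
          f"{exceedance_probability(100, 50):.0%} chance of exceedance")
```

Under this assumption, a ground motion with a 100-year return period has roughly a 39% chance of being exceeded during a 50-year design life, about four times the 10% implied by the 475-year convention.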

BRIEF HISTORICAL DEVELOPMENT OF SITE EFFECT STUDIES

Results of Early Instrumental Studies

Even though the potential of site soils to amplify earthquake ground motions was recognized as early as the 1950s, it was only in the early 1970s that notable results of empirical studies on the subject started to emerge. The pioneering works were performed in Japan and the USA.

Hayashi et al (1971), as cited by Seed et al [12], were probably the first to present site-dependent average spectra, based on 61 accelerograms from 38 earthquakes in Japan. However, owing to the limited size and quality of their data, the authors themselves suggested that their spectral curves be regarded with caution.

A more detailed study was later reported by Seed et al [12]. Based on a total of 104 ground-motion records from sites of fairly well-known geotechnical conditions, the study considered four site groups. Most of the records in the first three site groups were obtained from sites in the western United States and were dominated by the 1971 San Fernando earthquake, whereas many records for the softest site-soil group were from the 1964 Niigata and 1968 Higashi-Matsuyama earthquakes in Japan.

The average spectra for the four site conditions are given in Fig. 1(a) for 5% damping. Significant differences are observed in the spectral shapes of the various site classes. For periods greater than 0.4 to 0.5 s, spectral amplifications are much higher for deep cohesionless soil deposits and soft to medium clay deposits than for stiff site conditions and rock. Seed et al [12] pointed out the inadequacy of their records for the fourth soil class and advised against using this particular spectral curve until further studies shed more light on it.

Mohraz [13], concurrently with Seed et al [12], conducted an independent study on almost the same ground-motion database and obtained similar results.

Based on these results, the Applied Technology Council Project (ATC-3) proposed in 1978 the simplified site-dependent design spectra shown in Fig. 1(b) for three site-soil groups: S1 (rock or shallow stiff soils), S2 (deep firm soils) and S3 (7 to 14 m deep soft soils). The spectral curves of S2 and S3 are obtained such that their respective ratios to S1, normally known as ratios of response spectra (RRS), in the velocity-sensitive region are 1.5 and 2.2, respectively. The less reliable fourth soil class was excluded, apparently heeding the advice of the researchers [12].

In general, the ATC-3 spectra are characterized by an ascending straight line for the very short-period range up to around 0.2 s, a constant acceleration for the acceleration-sensitive short-period range, and a descending curve for the velocity-sensitive intermediate-period range.

The ATC-3 spectra were incorporated in the successive editions of the National Earthquake Hazard Reduction Program (NEHRP) provisions until 1994 and in the Uniform Building Code (UBC) series until 1997. In 1988, a fourth soil type, S4, for deep soft clays was included to address the rather high amplification potential of soft soils evidenced by the 1985 Mexico City earthquake [5,16,17].


Figure 1. (a) Response spectra for different site conditions after Seed et al [12]; (b) the design spectra proposed by the ATC-3:1978 Project [16,17]

It is important to note that the site-dependent spectral values in Fig. 1 are normalized with respect to the peak ground acceleration, so the spectral curves are all anchored to unity at T = 0. This conceals the inherent amplifications in the short-period range, so that only amplifications in the intermediate, velocity-sensitive range are observed. This will become clearer in the subsequent sections.

Results of Recent Empirical Studies

During the magnitude 7.1 Loma Prieta earthquake of 1989, most of the damage linked to site-soil amplification and liquefaction took place in the bay area of San Francisco and Oakland, located about 100 km NW of the epicenter. Much of the recorded evidence was also obtained from this area [14-18]. This, together with evidence from laboratory and analytical studies, prompted a critical review of the single-factor amplification concept described above, which had prevailed until that time. A number of studies conducted on the enlarged database shed more light on site effects than ever before.

Idriss [14,15] studied the amplification of rock-surface accelerations using records from this earthquake and from the 1985 Mexico earthquake, both of which were associated with small rock-level accelerations. His main finding is that soil sites can amplify rock-surface accelerations of up to around 0.4g.

A more important outcome of post-Loma Prieta studies, especially for engineers, is the rather high amplification of response spectra by soft-soil sites. Average spectral accelerations of Loma Prieta ground-motion records from thick soil sites near the San Francisco bay area and Oakland are provided in Fig. 2 for 5% damping, in comparison with the corresponding average spectra of adjoining rock sites.

Figure 2 Comparison of average soil-site spectra in the Oakland and San Francisco areas with average rock-site spectra in the region during the 1989 Loma Prieta earthquake [16,17]

The figure shows that the rock-surface acceleration (the spectral value as T → 0) is 0.08 to 0.1g and is amplified two- to threefold by the soil. A similar degree of amplification is seen for periods up to about 0.2 s. The response spectra in the period range of 0.2 to 1.5 s are amplified to a much larger degree. Similar trends, but with a lesser degree of amplification, were observed for stiff soil sites, though they are not presented here [16,17].


Comparison of the spectral curves in Fig. 2 with those in Fig. 1 shows that the short-period amplifications were not revealed in the early studies, and that the amplifications in the velocity-sensitive region were underestimated. For this reason, the single-factor approach is no longer considered adequate to account for site-soil effects and has long been abandoned. This led to the introduction of new site-dependent design spectra in US seismic codes from 1994 onward and in other national and regional design codes.

BASIC THEORETICAL EVIDENCE IN DYNAMIC SITE RESPONSE

A simple one-dimensional model of a homogeneous soil layer overlying a rock formation and subjected to a vertically propagating sinusoidal shear wave can provide a basic understanding of the amplification potential of soft-soil sites [16,17,24].

Roesset [24] showed that the ratio of the amplitude of the sinusoidal accelerogram at the soil surface, a_A, to that at the rock, a_B, is a function of the soil shear-wave velocity, v_s, and the soil material damping ratio, ξ_s. Plots of the maximum value of this ratio, reached at resonance, as a function of v_s for selected values of ξ_s are presented in Fig. 3.

Figure 3 Ground amplification ratio at resonance versus shear-wave velocity for different damping ratios

Approximating RRS_max by this amplitude ratio (an assumption that has been found to be fairly reasonable for a preliminary estimate), this rudimentary model predicts RRS_max = 9 for the Mexico City soft-soil site, for which a shear-wave velocity of 80 m/s and ξ_s = 3% are employed. This compares well with the RRS_max of 8 to 20 actually recorded at the soil sites in Mexico City during the 1985 earthquake.

Similarly, the model predicts RRS_max = 4 for the San Francisco Bay area, corresponding to representative values of v_s = 150 m/s and ξ_s = 8% for the area. The model again predicts well the RRS_max of 3 to 6 observed at this site during the Loma Prieta earthquake [16,17].
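The resonance amplification behind Fig. 3 can be approximated in closed form. The sketch below uses the standard approximation for a uniform, viscously damped soil layer on elastic rock, 1/(α + πξ_s/2), where α is the soil-to-rock impedance ratio; the text does not state which expression Roesset [24] plotted, so this is a stand-in. The rock shear-wave velocity (1000 m/s) and the soil-to-rock density ratio (0.8) are assumptions, not values from the paper.

```python
import math


def resonant_amplification(vs_soil: float, xi_soil: float,
                           vs_rock: float = 1000.0,
                           density_ratio: float = 0.8) -> float:
    """Approximate surface-to-rock amplitude ratio at the fundamental
    resonance of a uniform damped soil layer on elastic rock.

    vs_soil, vs_rock : shear-wave velocities (m/s)
    xi_soil          : soil material damping ratio (e.g. 0.03 for 3%)
    density_ratio    : rho_soil / rho_rock (assumed value)
    """
    # Impedance ratio alpha = (rho_s * vs_s) / (rho_r * vs_r)
    alpha = density_ratio * vs_soil / vs_rock
    # Radiation damping into the rock (alpha) plus material damping (pi*xi/2)
    return 1.0 / (alpha + math.pi * xi_soil / 2.0)


if __name__ == "__main__":
    print(f"Mexico City   (vs=80 m/s, 3%):  {resonant_amplification(80, 0.03):.1f}")
    print(f"San Francisco (vs=150 m/s, 8%): {resonant_amplification(150, 0.08):.1f}")
```

With these assumed rock properties the sketch gives about 9.0 and 4.1, close to the values quoted above; different rock properties would shift both numbers, and setting α = 0 (rigid rock) gives the upper bound 2/(πξ_s).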

These results indicate that, for places where previous seismic records are not available, prior knowledge of the representative shear-wave velocity of the site and its damping behavior can give a good idea of its amplification potential. Such an exercise is particularly useful for Ethiopia, where few, if any, strong ground-motion records are available for statistical studies.

According to laboratory evidence, the material damping ratio, ξ_s, is a nonlinear function of the plasticity and the strain level of the soil. Highly plastic soils (PI > 50%) exhibit small damping and behave nearly linearly over a wide range of strains. For highly plastic clays, ξ_s can be less than 3% for strains up to 0.1%.

Soils of high PI are not uncommon in urbanized seismic regions of Ethiopia, a typical example being the dark and light grey expansive soils covering a large part of Addis Ababa and its environs. At some locations, this formation can be several tens of meters thick and in a rather soft state over a significant depth. The damping of such soils can be quite low and their amplification potential very high.

THE NEW APPROACH TO ACCOUNT FOR SITE EFFECT

Evaluation of Improved Site Coefficients

A more practical approach for evaluating site amplification factors in regions where sufficient earthquake records and geotechnical data are available is to calculate statistical averages of RRS for site soils grouped according to their dynamic behavior. A number of empirical studies conducted after the Loma Prieta earthquake suggested that the average amplification factors of soil sites are proportional to the mean shear-wave velocity, v_S, of the upper 30 m raised to a certain negative exponent, which depends on the period band and the intensity of the rock acceleration [16-23]. It was therefore considered important to classify site soils on the basis of this parameter.

The empirical study of Borcherdt [18] in particular suggested the following generic best-fit relations for the two amplification factors, denoted by F_a and F_v, as functions of v_S (in m/s) and the rock-surface shaking intensity:

$$F_a = \left(\frac{1050}{v_S}\right)^{m_a}; \qquad F_v = \left(\frac{1050}{v_S}\right)^{m_v} \qquad (1)$$

The factor F_a is applicable to the acceleration-sensitive short-period region (about 0.1 to 0.5 s) and F_v to the velocity-sensitive intermediate-period region (about 0.4 to 2 s). The values of the exponents, m_a and m_v, are provided in Table 1.
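Eq. 1 is simple to evaluate once the exponents are chosen. The sketch below hard-codes the exponents listed in Table 1 and, as an assumption not made in the text, interpolates them linearly between the tabulated rock accelerations and clamps them outside 0.1 to 0.4g.

```python
# Exponents (rock acceleration in g, m_a, m_v) from Table 1 (Borcherdt [18])
TABLE_1 = [
    (0.1, 0.35, 0.65),
    (0.2, 0.25, 0.60),
    (0.3, 0.10, 0.53),
    (0.4, 0.05, 0.45),
]
V_REF = 1050.0  # reference shear-wave velocity in Eq. 1 (m/s)


def exponents(rock_acc: float) -> tuple[float, float]:
    """(m_a, m_v) for a rock acceleration in g: linear interpolation
    between rows of Table 1, clamped outside 0.1-0.4 g (assumption)."""
    if rock_acc <= TABLE_1[0][0]:
        return TABLE_1[0][1], TABLE_1[0][2]
    if rock_acc >= TABLE_1[-1][0]:
        return TABLE_1[-1][1], TABLE_1[-1][2]
    for (a0, ma0, mv0), (a1, ma1, mv1) in zip(TABLE_1, TABLE_1[1:]):
        if a0 <= rock_acc <= a1:
            w = (rock_acc - a0) / (a1 - a0)
            return ma0 + w * (ma1 - ma0), mv0 + w * (mv1 - mv0)
    raise ValueError("rock acceleration must be a finite number")


def borcherdt_factors(vs: float, rock_acc: float) -> tuple[float, float]:
    """Short-period (F_a) and mid-period (F_v) amplification from Eq. 1."""
    if vs <= 0:
        raise ValueError("shear-wave velocity must be positive")
    m_a, m_v = exponents(rock_acc)
    ratio = V_REF / vs
    return ratio ** m_a, ratio ** m_v
```

For example, borcherdt_factors(270.0, 0.1), taking 270 m/s as the midpoint of the Class D range of Table 2, returns about (1.61, 2.42), in line with the Class D entries of Table 3 for a shaking intensity of 0.1.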

Table 1: Values of the exponents in Borcherdts

regression relations of Equation (2) [18]

The plots of Eq. 1 are given in Fig. 4 which shows

that F

v

is consistently larger than F

a

for v

s

up to

around 1000 m/s - v

S

of the reference rock site.

Both factors tend to unity with v

S

approaching 1000

m/s and decrease with increasing intensity of rock

shaking. Please note the similarity of these curves

to the theoretical curves of Fig. 3 demonstrating

that increasing rock-shaking intensity is associated

with increased damping.

Figure 4 Variation of spectral amplification factors

versus

S

v for short and long period ranges

and for a range of intensity of rock

shaking (Re-plotted after Borcherdt [18]

The New System of Soil Classification

For a generally stratified formation of n layers each

having a thickness of h

i

and a shear-wave velocity

of v

Si

within the upper 30 m thickness, v

S

can be

established using the following relationship [2-4, 8,

10, 16, and 17]:

( )

30

1

30 30

n

S i Si

i

v t h v

=

= =

(2)

The terms in the summation represent the time

taken for the shear wave to travel through each

individual layer. The shear-wave velocity

computed in this manner is based on the time, t

30

,

taken by the shear wave to travel from a depth of

30 m to the ground surface, and is thus not

computed as the mere arithmetic average.

This approach also allows for the use of more

readily measurable quantities such as the standard

penetration test blow count, N, for granular

deposits or undrained shear strength, S

u

, for

saturated cohesive soils though they are less

reliable due to the inherent double correlations. The

representative values are determined in a manner

similar to Eq. 2

Based on a landmark consensus reached by

geotechnical engineers and earth scientists in the

USA in the early 1990s, five distinct soil and rock

classes, A to E, are identified in accordance with

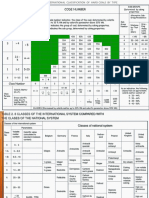

this approach and provided in Table 2.

Corresponding approximate soil classes as per

older methods are also provided in the first column

for comparison purposes. A sixth much softer site

class, F, is also defined that requires site-specific

studies. It is described in detail in NEHRP

documents [2, 3, and 4].

Rock acceleration (g) m

a

m

v

0.1 0.35 0.65

0.2 0.25 0.60

0.3 0.10 0.53

0.4 0.05 0.45
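Because Eq. 2 averages travel times rather than velocities, it weights slow layers more heavily than an arithmetic mean would. A minimal sketch, assuming the layer list is ordered from the ground surface downward and that any layer extending below 30 m is truncated at that depth; the profile used is hypothetical.

```python
def vs30(layers: list[tuple[float, float]]) -> float:
    """Travel-time-averaged shear-wave velocity of the upper 30 m (Eq. 2).

    layers: (thickness h_i in m, shear-wave velocity v_Si in m/s),
            ordered from the surface downward.
    """
    depth_left = 30.0
    t30 = 0.0  # vertical travel time through the upper 30 m (s)
    for h, v in layers:
        if depth_left <= 0.0:
            break
        h_used = min(h, depth_left)  # truncate the layer at 30 m depth
        t30 += h_used / v
        depth_left -= h_used
    if depth_left > 1e-9:
        raise ValueError("profile does not reach 30 m depth")
    return 30.0 / t30


if __name__ == "__main__":
    # Hypothetical profile: 10 m of soft clay over stiffer material
    profile = [(10.0, 150.0), (25.0, 400.0)]
    arithmetic = (10.0 * 150.0 + 20.0 * 400.0) / 30.0
    print(f"travel-time average v_S = {vs30(profile):.0f} m/s")          # 257
    print(f"thickness-weighted arithmetic mean = {arithmetic:.0f} m/s")  # 317
```

For this hypothetical profile the travel-time average (about 257 m/s) is well below the arithmetic mean (about 317 m/s). Both happen to fall in Class D of Table 2, but near a class boundary the difference can change the classification. The same function applies to the representative N or S_u if the layer values are substituted for v_Si.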


Table 2: Site soil classes as per the recent NEHRP editions [2, 3, 4]

| Pre-1994 site class (approximate) | New NEHRP site class | Description | v_S (m/s) | SPT blow count, N | S_u (kPa) |
|---|---|---|---|---|---|
| S1 | A | Hard rock | > 1500 | - | - |
| | B | Rock | 760-1500 | - | - |
| S1 and S2 | C | Soft rock/very dense soil | 360-760 | > 50 | > 100 |
| | D | Stiff soil | 180-360 | 15-50 | 50-100 |
| S3 and S4 | E | Soft soil | < 180 | < 15 | < 50 |
| | F | Soils requiring site-specific study | | | |

While this method of site classification is not entirely rigorous from a theoretical perspective, the general consensus is that the stiffness of the shallow soil, as measured by v_S, is the most reliable single site parameter for characterizing site amplification potential [16-20]. In addition, v_S is readily measured in the field.

The New Site Amplification Factors

Using the average v_S of each soil class given in Table 2, the site amplification factors can now be established by reading from Fig. 4 for the representative value of rock-motion intensity considered. The discrete values so obtained according to Borcherdt [18] and adopted by NEHRP [2, 3, 4] are given in Table 3. The effective peak acceleration, A_a, and the effective peak velocity-related acceleration, A_v, are rock-level seismic hazard parameters employed to characterize the seismicity of sites in the USA for a 90% probability of non-exceedance in 50 years (a 475-year return period) [2].

Table 3: Values of the site coefficients F_a and F_v according to NEHRP 1994 [2]

| Soil profile type | F_a, A_a = 0.1 | F_a, 0.2 | F_a, 0.3 | F_a, 0.4 | F_a, 0.5 | F_v, A_v = 0.1 | F_v, 0.2 | F_v, 0.3 | F_v, 0.4 | F_v, 0.5 |
|---|---|---|---|---|---|---|---|---|---|---|
| A | 0.8 | 0.8 | 0.8 | 0.8 | 0.8 | 0.8 | 0.8 | 0.8 | 0.8 | 0.8 |
| B | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 |
| C | 1.2 | 1.2 | 1.1 | 1.0 | 1.0 | 1.7 | 1.6 | 1.5 | 1.4 | 1.3 |
| D | 1.6 | 1.4 | 1.2 | 1.1 | 1.0 | 2.4 | 2.0 | 1.8 | 1.6 | 1.5 |
| E | 2.5 | 1.7 | 1.2 | 0.9 | b | 3.5 | 3.2 | 2.8 | 2.4 | SR |
| F | SR | SR | SR | SR | SR | SR | SR | SR | SR | SR |

SR = site-specific geotechnical studies and dynamic site-response analysis required.

The new amplification factors exhibit the following main features [5, 6, 25]:

1. The three (later four) site categories of earlier codes are replaced by six new categories, A to F. Soil classes C to E amplify the rock motion significantly, especially when the rock-shaking intensity is small.

2. Two seismicity-dependent site coefficients, F_a and F_v, replace the single site coefficient, S, of older codes. F_a applies to the acceleration-sensitive region and F_v to the velocity-sensitive region. Both factors decrease with increasing seismicity owing to increased damping, and F_v is larger than F_a for all soil sites (Classes C to E).

3. While the old factor, S, assumed values up to 1.5 (or 2.2), the new factors, F_a and F_v, take values of up to 2.5 and 3.5 for the short-period and intermediate-period bands, respectively. This results in much larger seismic design forces for many classes of structures on soft formations, especially in less seismic regions. For this reason, the seismic design of structures in less seismic regions has become much more stringent than before.

4. The older qualitative site classification method is replaced by a new, unambiguous and more rational method based on the representative shear-wave velocity of the upper 30 m of the geological formation. Alternatively, though less preferably, average SPT blow counts and/or undrained shear strengths can be used to classify sites (see Table 2).
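The classification of Table 2 and the coefficients of Table 3 can be combined in a small lookup. This is a sketch only: the treatment of values falling exactly on a class boundary, the linear interpolation between tabulated intensities and the clamping below 0.1 are assumptions rather than statements of the text. Class F, and the Class E entries at an intensity of 0.5 (including the "b" entry, assumed here to mean the same as SR), are reported as requiring a site-specific study.

```python
from typing import Optional

INTENSITIES = [0.1, 0.2, 0.3, 0.4, 0.5]  # A_a or A_v (g): columns of Table 3
SR = None  # sentinel: site-specific study required

F_A = {
    "A": [0.8, 0.8, 0.8, 0.8, 0.8],
    "B": [1.0, 1.0, 1.0, 1.0, 1.0],
    "C": [1.2, 1.2, 1.1, 1.0, 1.0],
    "D": [1.6, 1.4, 1.2, 1.1, 1.0],
    "E": [2.5, 1.7, 1.2, 0.9, SR],  # "b" entry treated as SR (assumption)
}
F_V = {
    "A": [0.8, 0.8, 0.8, 0.8, 0.8],
    "B": [1.0, 1.0, 1.0, 1.0, 1.0],
    "C": [1.7, 1.6, 1.5, 1.4, 1.3],
    "D": [2.4, 2.0, 1.8, 1.6, 1.5],
    "E": [3.5, 3.2, 2.8, 2.4, SR],
}


def site_class(vs30: float) -> str:
    """Site class A-E from the v_S ranges of Table 2 (boundary handling assumed)."""
    if vs30 > 1500.0:
        return "A"
    if vs30 > 760.0:
        return "B"
    if vs30 > 360.0:
        return "C"
    if vs30 >= 180.0:
        return "D"
    return "E"  # Class F cannot be identified from v_S alone


def _interpolate(row: list, a: float) -> float:
    """Linear interpolation along one row of Table 3; raises if SR applies."""
    a = min(max(a, INTENSITIES[0]), INTENSITIES[-1])  # clamp (assumption)
    for a0, a1, v0, v1 in zip(INTENSITIES, INTENSITIES[1:], row, row[1:]):
        if a0 <= a <= a1:
            if a == a0:
                value = v0
            elif a == a1:
                value = v1
            elif v0 is SR or v1 is SR:
                value = SR
            else:
                value = v0 + (a - a0) / (a1 - a0) * (v1 - v0)
            if value is SR:
                raise ValueError("site-specific study required (SR)")
            return value
    raise ValueError("intensity could not be located in Table 3")


def site_coefficients(cls: str, a_a: float, a_v: Optional[float] = None):
    """(F_a, F_v) for site class cls; a_v defaults to a_a."""
    if cls == "F":
        raise ValueError("Class F: site-specific study required (SR)")
    if a_v is None:
        a_v = a_a
    return _interpolate(F_A[cls], a_a), _interpolate(F_V[cls], a_v)
```

For example, site_class(257.0) returns "D", and site_coefficients("D", 0.1) returns (1.6, 2.4).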

It is important to note that later studies on an enlarged database, including records from more recent earthquakes such as the 1994 Northridge earthquake, have not suggested significant changes to the values of the above site amplification factors [4, 19, 20].

DESIGN SPECTRA IN SEISMIC CODES

The Design Spectra of NEHRP

As noted earlier, the ATC-3: 1978 spectra were replaced for the first time by new design spectra in a 1994 document issued through a long-term federal project of the US Government known as the National Earthquake Hazard Reduction Program (NEHRP), which was initiated in 1985 to take over the mission of ATC. NEHRP incorporated the new results based on the 1989 Loma Prieta earthquake. As presented above, these results clearly demonstrated that the site-dependent design spectra in use up to that time were inadequate [5,6,16,17]. Furthermore, NEHRP has since its inception consistently employed a 475-year return period in defining the design ground motion [2-4].

The basic elastic design spectrum of NEHRP 1994, which for the first time made use of the above amplification factors, is given by the following relationship [2]:

$$C_{se} = \frac{1.2\,C_v}{T^{2/3}} \le 2.5\,C_a; \qquad C_v = A_v F_v; \qquad C_a = A_a F_a \qquad (3)$$

Note that F_a is applied to the constant part of the spectrum, whereas F_v is applied to the descending segment. A plot of Eq. 3 normalized with respect to C_a against period is given in Fig. 5(a) for C_v/C_a = 1. This plot shows the shape of the basic elastic design spectral curve.

Figure 5 Elastic design spectra according to NEHRP 1994: (a) basic [2]; (b) for A_a = A_v = 0.1

Spectral curves corresponding to the five possible soil classes A to E can be plotted from Eq. 3 for a given earthquake shaking intensity. Such design spectra for a seismic region characterized by A_a = A_v = 0.1 are given in Fig. 5(b); similar curves can be prepared for other seismic regions. These should be compared with the three spectral curves of ATC-3 given in Fig. 1, where amplification occurs in the descending section only.
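Eq. 3 can be evaluated directly to reproduce curves like those of Fig. 5(b). The sketch below takes F_a and F_v from the 0.1 column of Table 3 and returns the plateau value 2.5 C_a at T = 0 (the limit of Eq. 3); it does not model any separate very-short-period branch that the original figure may show.

```python
# Site coefficients (F_a, F_v) at A_a = A_v = 0.1, from Table 3
TABLE_3_AT_0_1 = {"A": (0.8, 0.8), "B": (1.0, 1.0), "C": (1.2, 1.7),
                  "D": (1.6, 2.4), "E": (2.5, 3.5)}


def nehrp1994_cse(T: float, A_a: float, A_v: float, F_a: float, F_v: float) -> float:
    """Elastic seismic coefficient C_se of Eq. 3 (NEHRP 1994) at period T (s)."""
    if T < 0:
        raise ValueError("period must be non-negative")
    C_a = A_a * F_a  # scales the constant (acceleration-sensitive) plateau
    C_v = A_v * F_v  # scales the descending (velocity-sensitive) branch
    plateau = 2.5 * C_a
    if T == 0:
        return plateau  # limit of Eq. 3 as T -> 0
    return min(1.2 * C_v / T ** (2.0 / 3.0), plateau)


if __name__ == "__main__":
    for cls, (F_a, F_v) in TABLE_3_AT_0_1.items():
        row = [nehrp1994_cse(T, 0.1, 0.1, F_a, F_v) for T in (0.2, 0.5, 1.0, 2.0)]
        print(cls, " ".join(f"{c:.3f}" for c in row))
```

The plateau and the descending branch meet at T = (1.2 C_v / 2.5 C_a)^(3/2), about 0.33 s for C_v/C_a = 1; a larger F_v/F_a ratio, as for the soft classes, pushes this corner to longer periods.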

The basic design spectrum in NEHRP 1997 [3] underwent substantial changes, as shown in Fig. 6(a), in which two key spectral ordinates, S_DS and S_D1, are introduced as given by

$$S_{DS} = \frac{2}{3} S_{MS} = \frac{2}{3} F_a S_S; \qquad S_{D1} = \frac{2}{3} S_{M1} = \frac{2}{3} F_v S_1 \qquad (4)$$

Figure 6 The basic design spectral curves according to (a) NEHRP 1997 [3]; (b) NEHRP 2003 [4]

The transition periods in the figure are obtained from

$$T_0 = 0.2\,\frac{S_{D1}}{S_{DS}}; \qquad T_S = \frac{S_{D1}}{S_{DS}} \qquad (5)$$

S_S and S_1 are mapped spectral accelerations, in fractions of g, for the short- and intermediate-period regions, represented by 0.2 s and 1 s, respectively. These spectra correspond to the Maximum Considered Earthquake (MCE) and replace the effective accelerations, A_a and A_v, of the 1994 version in characterizing the seismic hazard. The MCE corresponds to a 2% probability of exceedance in 50 years (a return period of about 2500 years), to be adjusted later to the 475-year return period by multiplying by 2/3. S_MS and S_M1 are the corresponding spectral accelerations that account for site-soil effects [3]. The coefficients F_a and F_v are the same site-soil amplification factors of NEHRP 1994 given in Table 3. Note also that the descending right part now varies as T^-1 rather than T^-2/3. Similar to Fig. 5(b), a set of five curves can be plotted from Eqs. 4 and 5 for the five different soil groups in a given seismic region. The basic design spectral curve in Fig. 6(a) has remained the same in the subsequent editions of NEHRP since 2000, except for the introduction of a more steeply descending segment varying as T^-2 for the displacement-sensitive long-period region beyond T_L, as shown in Fig. 6(b) [4].
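Eqs. 4 and 5 chain together naturally: the site coefficients scale the mapped MCE ordinates, the factor 2/3 converts them to design level, and the two transition periods follow from the ratio S_D1/S_DS. A minimal sketch; the input values in the usage line are hypothetical, chosen only to give round numbers.

```python
def nehrp_design_parameters(S_S: float, S_1: float, F_a: float, F_v: float):
    """Design ordinates (Eq. 4) and transition periods (Eq. 5), NEHRP 1997 on.

    S_S, S_1 : mapped MCE spectral accelerations at 0.2 s and 1 s (g)
    F_a, F_v : site coefficients (Table 3)
    Returns (S_DS, S_D1, T_0, T_S): accelerations in g, periods in s.
    """
    S_MS = F_a * S_S          # site-adjusted short-period MCE ordinate
    S_M1 = F_v * S_1          # site-adjusted 1-s MCE ordinate
    S_DS = 2.0 / 3.0 * S_MS   # design level = 2/3 of the MCE level
    S_D1 = 2.0 / 3.0 * S_M1
    T_0 = 0.2 * S_D1 / S_DS
    T_S = S_D1 / S_DS
    return S_DS, S_D1, T_0, T_S


if __name__ == "__main__":
    # Hypothetical mapped values, for illustration only
    print(nehrp_design_parameters(S_S=1.5, S_1=0.6, F_a=1.0, F_v=1.5))
```

With these hypothetical inputs the sketch gives S_DS ≈ 1.0g, S_D1 ≈ 0.6g, T_0 ≈ 0.12 s and T_S ≈ 0.6 s. Because T_S = S_D1/S_DS, a site with F_v larger than F_a (the soft classes) also widens the constant-acceleration plateau.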

The Eurocode Design Spectra

The 1994 edition of the European seismic code (EC 8) employed three site classes, A, B and C, similar to those of ATC-3, 1978 [7]. However, while the ATC-3 spectra shown in Fig. 1 have a plateau common to all site classes, EC 8: 1994 paradoxically specifies a smaller maximum value and a smaller amplification factor over the entire period range for the softest site class, C, as shown in Fig. 7(a), in which the spectra are normalized with respect to the design ground acceleration. In light of the background material given above, such a representation of the dynamic behavior of soft formations is obviously flawed. Similar views have recently been expressed by Rey et al [9], who attribute this pitfall to the lack of sufficient ad hoc studies prior to publication. These spectra are no longer in use in Europe.


Figure 7 Normalized elastic response spectra: (a) EC 8: 1994 [7]; (b) EC 8: 2004 Type 1 (for M_S > 5.5; re-plotted after [8])

The more recent edition of EC 8, issued in 2004, has not only rectified this problem but has also introduced the new soil classes of NEHRP with some modifications [2,3,4,8] (see Fig. 7(b)). According to the new EC 8, all rock and rock-like geological formations with v_s > 800 m/s are categorized as Ground Type A, unlike the two distinct rock site classes, A and B, of the recent NEHRP editions. Each soil class in EC 8: 2004 is assigned a constant amplification factor for the entire period range. In general, these factors are lower than the corresponding NEHRP factors.

Two types of spectra, Type 1 and Type 2, are proposed by EC 8: 2004 for regions where the predominant earthquakes have surface-wave magnitudes larger than 5.5 and less than 5.5, respectively. Fig. 7(b) presents the Type 1 spectra for the five soil classes. A segment descending as T^-2 is included for periods longer than 2 s. The Type 2 spectra proposed for less seismic regions are similar in shape to the Type 1 spectra but have larger amplification factors and reduced control periods.
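The text does not reproduce the EC 8: 2004 formulas. For readers who want to regenerate curves like Fig. 7(b), the sketch below uses the piecewise horizontal elastic spectrum of EN 1998-1 with its recommended Type 1 parameters; these values are quoted from the standard rather than from this paper, and National Annexes may replace them. A damping correction factor η = 1 (5% damping) is assumed.

```python
# Recommended Type 1 parameters of EN 1998-1: ground type -> (S, T_B, T_C, T_D)
TYPE_1 = {
    "A": (1.00, 0.15, 0.4, 2.0),
    "B": (1.20, 0.15, 0.5, 2.0),
    "C": (1.15, 0.20, 0.6, 2.0),
    "D": (1.35, 0.20, 0.8, 2.0),
    "E": (1.40, 0.15, 0.5, 2.0),
}


def ec8_elastic_sa(T: float, a_g: float, ground: str, eta: float = 1.0) -> float:
    """Horizontal elastic spectral acceleration S_e(T), in the units of a_g.

    eta = 1.0 corresponds to 5% viscous damping.
    """
    S, T_B, T_C, T_D = TYPE_1[ground]
    if T < 0 or T > 4.0:
        raise ValueError("the EC 8 expressions cover 0 <= T <= 4 s")
    if T <= T_B:  # rising branch, equal to a_g * S at T = 0
        return a_g * S * (1.0 + T / T_B * (2.5 * eta - 1.0))
    if T <= T_C:  # constant-acceleration plateau
        return a_g * S * eta * 2.5
    if T <= T_D:  # constant-velocity branch, proportional to 1/T
        return a_g * S * eta * 2.5 * T_C / T
    return a_g * S * eta * 2.5 * T_C * T_D / T ** 2  # proportional to 1/T^2
```

T_D = 2.0 s for every Type 1 ground type, which is the origin of the T^-2 segment beyond 2 s mentioned above. Dividing the result by a_g gives normalized curves of the kind shown in Fig. 7(b); for a_g = 0.1g, for instance, the plateau for Ground Type D is 0.1 × 1.35 × 2.5 ≈ 0.34g.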

The Design Spectra of SANS 10160-4: 2010

This standard for seismic actions was published very recently (June 2010) and is one of the eight parts of the South African National Standard SANS 10160 series, Basis of structural design and actions for buildings and industrial structures [10]. It supersedes the older version, SABS 0160:1989 [10,11].

The code adopted the Type 1 basic spectrum of EC 8: 2004 with a slight modification of the left linear part. It also directly adopted Ground Types A to D of EC 8: 2004 and the corresponding amplification factors and control periods, omitting Ground Types E and F. Since the plots of the response spectra are similar to those in Fig. 7(b), they are not presented here.

The seismic hazard is represented in terms of the reference peak ground acceleration, a_g, for Ground Type 1 (rock site) and is given in the form of a seismic hazard map based on a 475-year return period. Notably, this return period was also used in the superseded 1989 edition [11]. Two major zones are distinguished: Zone I of natural seismic activity and Zone II of mining-induced and natural seismic activity. The majority of Zone I is assigned a_g = 0.1g, and sites with a_g values less than 0.05g are rare.

Given the relatively stable seismic nature of South Africa, the attention given to seismic design in that country is quite instructive for the more seismic nations of East Africa. It provides an additional perspective from which to critically evaluate the rather liberal seismic hazard definition of EBCS 8 and its provisions for site effects.

The Design Spectra of EBCS 8, 1995

The normalized elastic design spectra, S_d, of the Ethiopian Building Code Standard, EBCS 8 (1995), proposed for dynamic analysis are given in Fig. 8(a).


Figure 8 The design spectra of EBCS 8 (1995): (a) for dynamic analysis; (b) for static analysis [1,25]

Apart from some minor differences, the EBCS 8 spectra are practically identical to the now obsolete ATC-3 (1978) spectra given in Fig. 1. The design spectra proposed for pseudo-static analysis are also given in Fig. 8(b) for comparison. In this case, the left linear part is omitted, the right side descends as T^-2/3 instead of T^-1, and the amplification factors are reduced.

COMPARISON OF EBCS 8 DESIGN SPECTRA WITH THE REST

In this section, a comparative study of EBCS 8 spectra against those specified by NEHRP 2003, EC8: 2004 and SANS 2010 is presented.

EBCS 8 Versus NEHRP 2003

The basic design spectrum of NEHRP has remained almost unchanged in all editions since 2003. This spectrum, as it appears in NEHRP 2003 [4], is given in Fig. 6(b) and can be expressed as

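$$S_a = \begin{cases} S_{DS}\left(0.4 + 0.6\,\dfrac{T}{T_0}\right), & 0 \le T \le T_0 \\[4pt] S_{DS}, & T_0 < T \le T_S \\[4pt] \dfrac{S_{D1}}{T}, & T_S < T \le T_L \\[4pt] \dfrac{S_{D1}\,T_L}{T^2}, & T > T_L \end{cases} \qquad (6)$$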
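Eq. 6 translates into a short piecewise function. The sketch computes T_0 and T_S from Eq. 5; T_L must be supplied by the user, because its mapped value is not given here, so the 4 s used in the example below is an arbitrary illustration.

```python
def nehrp2003_sa(T: float, S_DS: float, S_D1: float, T_L: float) -> float:
    """Design spectral acceleration S_a(T) of Eq. 6, in g.

    T_0 and T_S follow Eq. 5; T_L is the long-period transition period (s).
    """
    if T < 0:
        raise ValueError("period must be non-negative")
    T_0 = 0.2 * S_D1 / S_DS
    T_S = S_D1 / S_DS
    if T <= T_0:
        return S_DS * (0.4 + 0.6 * T / T_0)  # rises linearly from 0.4 S_DS
    if T <= T_S:
        return S_DS                          # constant-acceleration plateau
    if T <= T_L:
        return S_D1 / T                      # velocity-sensitive, ~1/T
    return S_D1 * T_L / T ** 2               # displacement-sensitive, ~1/T^2
```

For example, with S_DS = 1.0g, S_D1 = 0.6g and T_L = 4 s, the function returns approximately 0.4, 1.0, 0.6 and 0.0375 at T = 0, 0.3, 1 and 8 s. The value 0.4 S_DS at T = 0 is the ordinate S_a0 used in the next step.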

For T = 0, Eq. 6 yields the design spectral ordinate for an ideally rigid structure undergoing the same motion as its foundation, which we denote by S_a0. Setting T = 0 in Eq. 6 gives S_a0 = 0.4 S_DS, and introducing Eq. 4 then yields:

$$S_{a0} = 0.4 \times \frac{2}{3}\,F_a S_S = \frac{4}{15}\,F_a S_S \approx 0.267\,F_a S_S \qquad (7)$$

For a rigid structure on the reference ground type, Class B, F_a takes the value of unity (see Table 3), and the design spectral ordinate S_a0 should then equal the peak ground acceleration (PGA) of the site for the design earthquake. This enables us to estimate the value of S_S from Eq. 7 for a known PGA of a site.

As per the existing seismic hazard map of Ethiopia, which is based on a return period of 100 years, the capital, Addis Ababa, located in Seismic Zone 2, is assigned a PGA of 0.05g. With this value inserted in Eq. 7 for $S_{a0}$, the corresponding maximum short-period spectral acceleration according to NEHRP 2003 is obtained as $S_S = 0.188$g.

The corresponding one-second spectral acceleration, $S_1$, can be extrapolated from Table 3 as 0.072g. With these values inserted in Eq. 4, the design spectral values $S_{DS}$ and $S_{D1}$ for Zone 2 are obtained as

$$S_{DS} = \tfrac{2}{3}\,F_a\,(0.188) = 0.125\,F_a, \qquad S_{D1} = \tfrac{2}{3}\,F_v\,(0.072) = 0.048\,F_v \qquad (8)$$

Similarly, the transition periods can be computed by substituting Eq. 8 back into Eq. 5. The values of $S_{DS}$, $S_{D1}$, $T_0$ and $T_S$ computed in this manner are substituted in Eq. 6, and the resulting expressions are plotted for the different site soils. These are given in Fig. 9 together with the EBCS 8 spectra for a PGA of 0.05g specified for Zone 2. Comparisons for other seismic zones can be made in a similar way.
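The Zone 2 calculation can be reproduced step by step in a short sketch. It assumes, as the text does, that $S_{a0}$ equals the PGA for Site Class B ($F_a = 1$) and that $S_1 = 0.072$g. It also assumes that Eq. 5 is the usual NEHRP definition ($T_S = S_{D1}/S_{DS}$, $T_0 = 0.2\,T_S$) and that Table 3 holds the standard NEHRP 2003 site coefficients for the lowest shaking level ($S_S \le 0.25$g, $S_1 \le 0.1$g). The table itself is not reproduced in this excerpt, and the names below are hypothetical.

```python
# (F_a, F_v) for S_S <= 0.25g and S_1 <= 0.1g, taken from the NEHRP 2003 tables;
# assumed here to coincide with Table 3 of the paper.
SITE_COEFFS = {"B": (1.0, 1.0), "C": (1.2, 1.7), "D": (1.6, 2.4), "E": (2.5, 3.5)}


def zone_parameters(pga: float, S1: float, site: str) -> dict:
    """Spectral parameters of Eq. 6 for a zone PGA (in g) and a NEHRP site class."""
    S_S = pga / (0.4 * 2.0 / 3.0)   # invert Eq. 7 with F_a = 1 (Class B reference)
    Fa, Fv = SITE_COEFFS[site]
    S_DS = 2.0 / 3.0 * Fa * S_S     # Eq. 4 / Eq. 8
    S_D1 = 2.0 / 3.0 * Fv * S1
    TS = S_D1 / S_DS                # assumed form of Eq. 5
    return {"S_S": S_S, "S_DS": S_DS, "S_D1": S_D1, "T0": 0.2 * TS, "TS": TS}


for site in SITE_COEFFS:
    params = zone_parameters(0.05, 0.072, site)
    print(site, {k: round(v, 4) for k, v in params.items()})
```

Under these assumptions the plateau value $S_{DS}$ rises from 0.125g on Class B to 0.3125g on Class E, which is the kind of soil-driven increase shown in Fig. 9.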


Figure 9 Comparison of EBCS 8 spectra and

NEHRP spectra adapted to a return period

of 100 years for Zone 2 (including Addis

Ababa)

The plots show that introducing the NEHRP 2003 site factors demands design forces up to 150 % in excess of those currently required by EBCS 8. The largest spectral discrepancies occur in a very important period range: buildings of small to moderate height, up to about 12 stories with fundamental periods of up to about 1 s, built on NEHRP Site Classes D and E. Such buildings are the most frequently built structures, including residential houses, condominiums, apartments, office flats, public offices, hotels, hospitals and many others. The practical implications of these results are therefore evident.

EBCS 8 Versus EC 8 and SANS 2010

Comparison of the EBCS 8 spectra with the European and South African spectra is more straightforward, as all of these documents use rock-level PGA to characterize seismicity.

The Type I spectra of EC 8: 2004 are compared with the EBCS 8 spectra in Fig. 10. The comparison shows that buildings in the short-period region designed in accordance with EBCS 8 could be under-designed by up to 40 %. A similar comparison with the Type II spectra of EC 8: 2004 indicates larger differences of up to 80 %. These discrepancies are smaller than those observed with the NEHRP spectra, because the NEHRP site amplification factors are consistently larger than the EC 8 amplification factors. Comparison of the EBCS 8 spectra with the SANS spectra gives results identical to those in Fig. 10, except that Site Class E is omitted.

Figure 10 Comparison of EBCS 8 spectra with

Type I EC 8: 2004 spectra adapted to

100-year return period

Note that the comparisons in Figs. 9 and 10 are made without considering the difference in the definition of the return period. This issue is treated in the next section.

Influence of Seismic Hazard Definition

Seismic Hazard Maps of Ethiopia

The seismic hazard map of Ethiopia as provided in EBCS 8, 1995 is presented in Fig. 11 [1]. This map is based on a 100-year return period, which corresponds to a probability of approximately 40 % of being exceeded in 50 years. According to this map, Seismic Zones 1 to 4 are assigned constant bedrock acceleration ratios, $\alpha_0$, of 0.03, 0.05, 0.07 and 0.1, respectively, whereas Zone 0 is considered free of seismicity. Addis Ababa belongs to Zone 2 with $\alpha_0 = 0.05$. Many cities and big towns, such as Mekele, Dese, Semera, Adama, Awasa and Arba Minch, some of which are capitals of federal states, belong to Zone 4 with $\alpha_0 = 0.1$.
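The link between return period and probability of exceedance can be checked with the usual Poisson-occurrence assumption, $P = 1 - e^{-t/T_R}$, for an exposure time $t$ and return period $T_R$. This is the standard hazard relation, not a formula quoted from EBCS 8, and the function name is illustrative.

```python
import math


def exceedance_probability(return_period: float, exposure_years: float = 50.0) -> float:
    """Probability that the design motion is exceeded at least once in exposure_years,
    assuming Poisson-distributed occurrences with mean rate 1/return_period."""
    return 1.0 - math.exp(-exposure_years / return_period)


for T_R in (100, 475):
    print(T_R, round(exceedance_probability(T_R), 3))
```

This gives roughly 39 % in 50 years for the 100-year event and roughly 10 % in 50 years for the 475-year event, the latter being the familiar definition of the design-level earthquake in modern codes.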


Figure 11 Seismic hazard map of Ethiopia for 100-year return period as per EBCS 8: 1995 [23]

A recent helpful compilation of worldwide

seismicity is provided by the Global Seismic

Hazard Assessment Program (GSHAP), which was

launched by the International Lithosphere Program

(ILP) with the support of the International Council

of Scientific Unions (ICSU), and endorsed as a

demonstration program in the framework of the

United Nations International Decade for Natural

Disaster Reduction (UN/IDNDR). It had the

objective of mitigating the risk associated with the

recurrence of earthquakes by promoting a

regionally coordinated, homogeneous approach to

seismic hazard evaluation. The project was

operational from 1992 to 1999 [26].

The major output of the GSHAP is the global seismic hazard map for a 475-year return period. As noted above, this level of hazard has been widely accepted worldwide as the design-level earthquake and has been incorporated in US codes for more than three decades. In contrast, EBCS 8, 1995 employs a return period of just 100 years. No reference documents could be found that provide a rational explanation for such a bold decision, which involves risks to the safety of life and property.

The GSHAP database is accessible to users [26]. A seismic hazard map of Ethiopia prepared by the author from the relevant data is given in Fig. 12, in which five distinct seismic regions are identified with different ranges of PGA values, as shown in the legend. Note that the ratio of the PGA to the gravitational acceleration, g, corresponds to $\alpha_0$, the bedrock acceleration ratio in EBCS 8.


Figure 12 The seismic hazard map of Ethiopia

based on the GSHAP data for a return

period of 475 years

Comparison of Fig. 11 with Fig. 12 shows that not only are corresponding seismic regions assigned much higher PGA values in the GSHAP map, but the size and extent of the individual seismic zones also change. According to the new map, the most seismic area of the country is concentrated in and around the Afar region, characterized by a PGA of 0.16g to 0.24g. Compared with the 0.1g of EBCS 8 Zone 4, this alone entails an increase in seismic force demand of 60 to 140 % in this region, without including site effects. The capital, Addis Ababa, belongs to the second most seismic zone, with PGA in the range of 0.1g to 0.16g. Compared with the 0.05g of EBCS 8 Zone 2, this implies an increase of 100 to 220 % in seismic hazard level, with an average increase of 160 %. Several rapidly growing towns, including Mekele, Dese, Debre Berhan, Ziway, Hawasa, Arba Minch and Dire Dawa, belong to this seismic zone, while Semera, the current capital of the Afar Region, is in the heart of the most seismic zone.
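The percentage increases quoted above follow from dividing the GSHAP PGA range by the EBCS 8 zone value (0.1g for Zone 4 and 0.05g for Addis Ababa in Zone 2). A short sketch of that arithmetic, with a hypothetical helper name:

```python
def percent_increase(new_pga: float, old_pga: float) -> float:
    """Increase of new_pga over old_pga, in percent."""
    return (new_pga / old_pga - 1.0) * 100.0


# Afar region: GSHAP 0.16g-0.24g versus EBCS 8 Zone 4 (0.1g)
afar = [percent_increase(p, 0.10) for p in (0.16, 0.24)]
# Addis Ababa: GSHAP 0.10g-0.16g versus EBCS 8 Zone 2 (0.05g)
addis = [percent_increase(p, 0.05) for p in (0.10, 0.16)]
print([round(v) for v in afar], [round(v) for v in addis], round(sum(addis) / 2))
```

The printed ranges are 60 to 140 % for the Afar region and 100 to 220 % for Addis Ababa, with the 160 % average being the mid-point of the latter range.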

Returning to the spectral comparison, the combined

influence of the new site classification system and

the new seismic hazard definition is studied next.

Taking 0.1g as the lowest-estimate PGA of the region to which Addis Ababa belongs, Eq. 7 yields a corresponding short-period spectral acceleration, $S_S$, of 0.45g. The one-second spectral acceleration, $S_1$, can be interpolated as 0.18g. With these values inserted in Eq. 4, the design spectral values $S_{DS}$ and $S_{D1}$ are obtained, and the transition periods are computed as before. These quantities are substituted in Eq. (6), and the resulting expressions are plotted for the different site soils. These are presented in Fig. 13 together with the EBCS 8 spectra for a PGA of 0.05g specified for Zone 2, including Addis Ababa.
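For the GSHAP-based case, the quoted values $S_S = 0.45$g and $S_1 = 0.18$g fall between tabulated columns, so the site coefficients have to be interpolated. The sketch below uses straight-line interpolation over the standard NEHRP 2003 $F_a$ and $F_v$ rows for Site Classes D and E. These rows are assumed to match Table 3, which is not reproduced in this excerpt, and the variable names are hypothetical.

```python
from bisect import bisect_right

# NEHRP 2003 site-coefficient rows (assumed to match Table 3 of the paper).
SS_GRID = [0.25, 0.50, 0.75, 1.00, 1.25]
S1_GRID = [0.10, 0.20, 0.30, 0.40, 0.50]
FA = {"D": [1.6, 1.4, 1.2, 1.1, 1.0], "E": [2.5, 1.7, 1.2, 0.9, 0.9]}
FV = {"D": [2.4, 2.0, 1.8, 1.6, 1.5], "E": [3.5, 3.2, 2.8, 2.4, 2.4]}


def interp(x: float, xs: list, ys: list) -> float:
    """Straight-line interpolation in a tabulated row, held constant beyond the end points."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    j = bisect_right(xs, x)                    # xs[j-1] <= x < xs[j]
    w = (x - xs[j - 1]) / (xs[j] - xs[j - 1])
    return ys[j - 1] + w * (ys[j] - ys[j - 1])


S_S, S_1 = 0.45, 0.18
for site in ("D", "E"):
    Fa, Fv = interp(S_S, SS_GRID, FA[site]), interp(S_1, S1_GRID, FV[site])
    S_DS, S_D1 = 2 / 3 * Fa * S_S, 2 / 3 * Fv * S_1   # Eq. 4
    print(site, round(Fa, 3), round(Fv, 3), round(S_DS, 3), round(S_D1, 3))
```

For Class D this gives $F_a = 1.44$ and $F_v = 2.08$; the remaining steps (transition periods and Eq. 6) are the same as for the 100-year case.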

Figure 13 Comparison of EBCS 8 spectra with

NEHRP spectra adapted to GSHAP

zoning of Ethiopia: Spectra for the

second most seismic region (including

Addis Ababa)

The plots show a very significant difference between the two sets of design spectra. The design base shear computed in accordance with the EBCS 8 spectra is only a fraction of the base shear demanded by the NEHRP requirements, in some cases as low as 24 %. Buildings in all period ranges and on all soil formations are affected by the inadequate provisions of EBCS 8. Similar comparisons made with the European and South African spectra confirm these discrepancies.

CONCLUSIONS AND RECOMMENDATIONS

Recent changes in the definition of design ground motions have been presented. Results of empirical site-effect studies, together with basic analytical evidence on site response, are provided. Differences between the results of empirical studies on recent instrumental records and those of earlier studies are highlighted. Changes introduced in recent editions of international codes as a result of this evidence are presented.

Comparisons of relevant provisions of EBCS 8, 1995 with those in contemporary American, European and South African codes demonstrate that the seismic loads of most buildings designed in accordance with EBCS 8 are significantly underestimated. This is especially the case when the site soil overlying the bedrock is medium stiff to soft and relatively thick. The most vulnerable buildings are those with fundamental periods of up to about 1 s, a range that encompasses the most commonly constructed buildings.

The two main causes of these shortcomings in EBCS 8 are its rather old and inadequate provisions for site amplification effects and the 100-year return period of its design-level earthquake.

The outcomes of the study strongly suggest that there is an urgent need to revise EBCS 8, 1995 with the objective of addressing these two major issues, among others. The post-Loma-Prieta studies on site effects provided sufficient evidence for the use of higher site amplification factors in all period ranges. This has already been addressed in contemporary major seismic codes worldwide, including in Africa. EBCS 8 should follow suit, especially in view of the current construction boom and relaxed quality control.

Furthermore, it is proposed that a new nation-wide seismic-hazard study be conducted based on an updated catalogue, employing state-of-the-art hazard analysis methods and appropriate attenuation relationships. The GSHAP study results can serve as a good benchmark for this purpose.

It is also strongly recommended that the rather risky 100-year return period currently in use be critically revisited in consultation with policy makers, property owners, financiers, insurers and other stakeholders.

ACKNOWLEDGMENT

This study was inspired by the significant recent developments in the discipline and by encouragement from my colleagues Dr. Samuel Kinde (San Diego State University), Mr. Samson Engida (formerly of CALTRANS) and Dr. Atalay Ayele (Addis Ababa University), in the hope of initiating the revision of EBCS 8. The author is grateful to all of them.

REFERENCES

[1] Ministry of Works and Urban

Development, Design of Structures for

Earthquake Resistance, Ethiopian

Building Code Standard (EBCS 8),

Addis Ababa, 1995.

[2] Building Seismic Safety Council

(BSSC), 1994 Edition NEHRP

Recommended Provisions for Seismic

Regulations for New Buildings, FEMA

222A and 223A (Provisions and

Commentary), Washington DC, 1995.

[3] Building Seismic Safety Council

(BSSC), 1997 Edition NEHRP

Recommended Provisions for Seismic

Regulations for New Buildings and

Other Structures, FEMA 302 and 303

(Provisions and Commentary),

Washington DC, 1998.

[4] Building Seismic Safety Council

(BSSC), 2003 Edition NEHRP

Recommended Provisions for Seismic

Regulations for New Buildings and

Other Structures, FEMA 450

(Provisions and Commentary),

Washington DC, 2004.

[5] Ghosh, S., Trends in the Seismic

Design Provisions of US Building

Codes, PCI Journal, Sept.-Oct. 2001,

pp. 98-102.

[6] Ghosh, S., Update on the NEHRP

Provisions: The Resource Document for

Seismic Design, PCI Journal, May-

June, 2004, pp. 96-102.

[7] European Committee for

Standardization, Eurocode 8, Design

Provisions for Earthquake Resistance

for Structures (ENV 1998), Brussels,

May 1994.

[8] European Committee for

Standardization, Eurocode 8, Design of

Structures for Earthquake Resistance

(EN 1998-1: 2004), Brussels, 2004.

[9] Rey, J., Faccioli, E. and Bommer, J.,

Derivation of Design Soil Coefficients

(S) and Response Spectral Shapes for

Eurocode 8 Using the European Strong-

Motion Database, Journal of

Seismology, Vol. 6, 2002, pp. 547-555.

[10] South African National Standard, SANS

10160-1, Basis of Structural Design

and Actions for Buildings and Industrial

Structures, SABS Standards Division,

Pretoria, 2010.


[11] Wium, J., Background to SANS 10160

(2009): Part 4 Seismic Loading,

Journal of the South African Institution

of Civil Engineering, Vol. 52, No. 1,

2010, pp. 20-27.

[12] Seed, H., Ugas, C. and Lysmer, J., Site

Dependent Spectra for Earthquake-

Resistant Design, Bulletin of the

Seismological Society of America, Vol.

66, No. 1, 1976, pp. 221-244.

[13] Mohraz, B., A study of Earthquake

Response Spectra for Different

Geological Conditions, Bulletin of the

Seismological Society of America, Vol.

66, No. 3, 1976, pp. 915-935.

[14] Idriss, I.M., "Response of soft soil sites

during earthquakes", Proceedings of

the Symposium to Honor Professor H.

B. Seed, Berkeley, May, 1990, pp. 273-

289.

[15] Idriss, I.M., "Earthquake ground

motions at soft soil sites", Proceedings

of the Second International Conference

on Recent Advances in Geotechnical

Earthquake Engineering and Soil

Dynamics, St. Louis, Missouri, Vol. III,

1991. pp. 2265-2273.

[16] Dobry, R., Borcherdt, R., Crouse, B.,

Idriss, I., Joyner, W., Martin G., Power,

M., Rinne, E. and Seed, R., New Site

Coefficients and Site Classification

System Used in Recent Building Seismic

Code Provisions, Earthquake Spectra,

Vol. 16, No. 1, February 2000, pp. 41-67.

[17] Dobry, R. and Susumu, I., Recent

Development in The Understanding of

Earthquake Site Response and

Associated Seismic Code

Implementation, in Proc. GeoEng2000,

An International Conference on

Geotechnical & Geological

Engineering: Melbourne, Australia,

2000, pp. 186-129.

[18] Borcherdt, R., Estimates of Site-

Dependent Spectra for Design

(Methodology and Justification),

Earthquake Spectra, Vol. 10, No. 4,

1994, pp. 617-653.

[19] Borcherdt, R. and Fumal, T., Empirical

Evidence from the Northridge

Earthquake for Site-Specific

Amplification Factors Used in US

Building Codes, in Proc. 12th World

Conference on Earthquake Engineering,

Auckland, NZ, 2000, pp. 1-6.

[20] Borcherdt, R., Empirical Evidence for

Site Coefficients in Building Code

Provisions, Earthquake Spectra, Vol.

18, No. 2, 2002, pp. 189-217.

[21] Crouse, C. and McGuire, J., Site

Response Studies for the Purpose of

Revising NEHRP Seismic Provisions,

Earthquake Spectra, Vol. 12, No. 2,

2002, pp. 407-439.

[22] Rodriguez-Marek, A., Bray, J. and

Abrahamson, N., Characterization of

Site Response General Categories,

PEER Report 1999/03, Pacific

Earthquake Engineering Research

Center, Berkeley, California, 1999.

[23] Stewart, J., Liu, A. and Choi, Y.,

"Amplification factors for spectral

acceleration in tectonically active

regions" Bull. Seism. Soc. Am., 93 (1),

2003, pp. 332-352.

[24] Roesset, J., Soil Amplification in

Earthquakes, in Numerical Methods in

Geotechnical Engineering, ed. pp. 639-682, McGraw Hill, New York, 1977.

[25] Worku, A., Comparison of Seismic

Provisions of EBCS 8 and Current

Major Building Codes Pertinent to the

Equivalent Static Force analysis, Zede,

Journal of the Ethiopian Engineers and

Architects, Vol. 18, 2001, pp. 11-25.

http://www.seismo.ethz.ch/static/gshap/Gshap98-stc.html

*Email:alemh29@gmail.com

Journal of EEA, Vol. 28, 2011

PERFORMANCE ANALYSIS OF CHAOTIC ENCRYPTION USING A SHARED

IMAGE AS A KEY

Alem Haddush Fitwi* and Sayed Nouh

Department of Electrical and Computer Engineering

Addis Ababa Institute of Technology, Addis Ababa University

ABSTRACT

Most of the secret-key encryption algorithms in use today are designed on either the Feistel structure or the substitution-permutation structure. This paper focuses on a data encryption technique that uses multi-scroll chaotic dynamics and a publicly shared image as a key.

A key is generated from the shared image using a full-period pseudo-random multiplicative linear congruential generator (LCG). Then, multi-scroll chaotic attractors are generated using a hysteresis-switched second-order linear system. The bits of the image of the chaotic attractors are mixed with the plaintext to obtain a ciphertext. The plaintext can be recovered from the ciphertext during deciphering only by mixing the ciphertext with chaos generated from the same secret key. As validated by functional, NIST randomness and Monte Carlo simulation tests, the ciphertext is highly diffused and not prone to statistical or chosen-ciphertext attacks.

In addition, the performance is measured and analyzed using metrics such as encryption time, encryption throughput and power consumption, and compared with existing encryption algorithms such as AES and RSA. The performance analysis and simulation results verify that the chaos-based data encryption algorithm is valid.

Key Words: Secret key encryption, shared image,

hysteresis switched second order system,

multiplicative LCG, chaotic attractors,

randomness.

INTRODUCTION

At present, when the Internet provides essential communication for tens of millions of people and is increasingly used as a tool for commerce, security has become a tremendously important issue. There are many aspects to security and many applications, ranging from secure commerce and payments to private communications and password protection. The fast expansion of computer connectivity necessitates protecting data and messages from unauthorized tampering or reading. US courts have even ruled that there is no legal expectation of privacy for email. It is thus up to users to ensure that communications expected to remain private actually do so. One of the techniques for ensuring the privacy of files and communications is cryptography [1].

In general, there are three types of cryptographic schemes: secret-key (or symmetric) cryptography, public-key (or asymmetric) cryptography, and hash functions. In the encryption schemes, the initial unencrypted data is referred to as plaintext; it is encrypted into ciphertext, which is in turn decrypted into usable plaintext [1-3].

The paper is organized as follows. First, related work and progress in cryptography and in chaos generation and its applications are examined. This is followed by the design, analysis and testing of the chaotic encryption algorithm. Performance measurements of the design and the corresponding results are then presented. Finally, the conclusions drawn from the investigation are given.

RELATED WORKS

Pertinent work and progress in cryptography and chaos are surveyed below.

The Data Encryption Standard (DES) is a Feistel-structure block cipher that was selected by the National Bureau of Standards as an official Federal Information Processing Standard (FIPS) for the United States in 1976, and which subsequently enjoyed widespread use internationally. DES is now considered insecure for many applications, chiefly because its 56-bit key size is too small. In January 1999, Distributed.net and the Electronic Frontier Foundation collaborated to publicly break a DES key in 22 hours and 15 minutes. Consequently, DES was superseded by the Advanced Encryption Standard (AES) on 26 May 2002 and has been withdrawn as a standard by the National Institute of Standards and Technology [1, 4-9].

Advanced Encryption Standard (AES) is an

encryption standard adopted by the US

Government. It was announced by the National

Institute of Standards and Technology (NIST) as

U.S. FIPS PUB 197 (FIPS 197) on November 26,


2001 after a 5-year standardization process. The

AES ciphers have been analyzed extensively and

are now used worldwide, as was the case with its

predecessor, DES. Until May 2009, the only

successful published attacks against the full AES

were side-channel attacks on some specific

implementations. The input and output for the AES

algorithm each consist of sequences of 128 bits.

The Cipher Key for the AES algorithm is a

sequence of 128, 192 or 256 bits. Other input,

output and Cipher Key lengths are not permitted by

this standard [1, 4, 10, 11].

RSA (which stands for Rivest, Shamir and

Adleman who first publicly described it) is an

algorithm for public-key cryptography. It is

believed to be secure given sufficiently long keys

and the use of up-to-date implementations. As of

2010, the largest (known) number factored by a

general-purpose factoring algorithm was 768

bits long, using a state-of-the-art distributed

implementation. RSA keys are typically 1024 to 2048 bits long. Some experts believe that 1024-bit keys may become breakable in the near term (though this is disputed); few see any way that 4096-bit keys could be broken in the foreseeable future. Therefore, it is generally presumed that RSA is secure if the modulus n, the product of two large random prime numbers, is sufficiently large. If n is 300 bits or shorter, it can be factored in a few hours on a personal computer using freely available software. As the key size increases, RSA becomes computationally more expensive [12, 13].
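To make the role of the modulus n concrete, the following is a textbook-sized RSA round trip with deliberately tiny primes. It only illustrates key generation, encryption and decryption; it is not secure, since practical RSA uses primes hundreds of digits long together with a padding scheme. The helper name is hypothetical.

```python
from math import gcd


def rsa_toy_keys(p: int, q: int, e: int = 17):
    """Textbook RSA key generation from two (small, insecure) primes p and q."""
    n = p * q                       # public modulus
    phi = (p - 1) * (q - 1)         # Euler's totient of n
    if gcd(e, phi) != 1:
        raise ValueError("e must be coprime to phi(n)")
    d = pow(e, -1, phi)             # private exponent, e*d = 1 (mod phi); Python 3.8+
    return (n, e), (n, d)


public, private = rsa_toy_keys(61, 53)
m = 65                              # message encoded as an integer, 0 <= m < n
c = pow(m, public[1], public[0])    # encryption: c = m^e mod n
assert pow(c, private[1], private[0]) == m   # decryption: m = c^d mod n
```

Recovering d from (n, e) requires phi(n), which in turn requires factoring n; that is why the security rests on n being large enough to resist factoring.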

Elliptic curve cryptography (ECC) is an approach to public-key cryptography based on the algebraic structure of elliptic curves over finite fields. ECC with a key length greater than 112 bits is said to be secure, but slow when used for bulk data encryption. As the key size increases, encryption using ECC becomes computationally more expensive [12-14].

"Chaos" means "a state of disorder", but the adjective "chaotic" is defined more precisely in chaos theory. For a dynamical system to be classified as chaotic, it must be sensitive to initial conditions and topologically mixing. Over the last two decades, chaotic oscillators have been found useful, with great potential in many technological disciplines such as information and computer sciences, biomedical engineering, power-system protection, encryption and communications. Recently, there has been increasing interest in exploiting chaotic dynamics for real-world engineering applications, with much attention focused on effectively generating chaos from simple systems using simple controllers. A number of techniques developed for generating chaotic attractors, and their applications, are surveyed in [15-19].
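Sensitivity to initial conditions can be illustrated with the logistic map $x_{k+1} = r\,x_k(1 - x_k)$ at $r = 4$. This is a standard textbook chaotic system, swapped in here for illustration; it is not the oscillator used in this paper. Two starting points that differ by $10^{-10}$ are iterated side by side.

```python
def logistic_orbit(x0: float, r: float = 4.0, steps: int = 60) -> list:
    """Iterate the logistic map x -> r*x*(1 - x) and return the whole orbit."""
    orbit = [x0]
    for _ in range(steps):
        orbit.append(r * orbit[-1] * (1.0 - orbit[-1]))
    return orbit


a = logistic_orbit(0.3)
b = logistic_orbit(0.3 + 1e-10)
for k in (0, 10, 20, 30, 40, 50):
    print(k, abs(a[k] - b[k]))
```

At r = 4 the separation roughly doubles per iteration on average, so the initially negligible difference grows to the size of the attractor itself within a few dozen steps. This is the property that a chaos-based cipher relies on for its dependence on the secret initial conditions.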

The motivation for designing and evaluating a chaos-based encryption algorithm is as follows. Cryptographic algorithms play a vital role in information security systems, and in recent years, as the importance and value of data exchanged over the Internet and other media have increased dramatically, there has been a search for the best solution to offer the necessary protection against attacks by data thieves. On the other hand, cryptographic algorithms consume a significant amount of computing resources such as CPU time, memory and battery power. As a consequence, there has been great interest in designing cryptographic algorithms that are secure (or reliable), fast and efficient, with no known method of attack.

DESIGN, ANALYSIS, AND TEST OF THE

CHAOTIC ENCRYPTION ALGORITHM

Design overview

In outline, the design of a chaos-based crypto-system comprises five major tasks, as delineated in Fig. 1: image processing, key generation, generation of chaotic attractors, the enciphering process, and the deciphering process. In addition, the design is tested using a sample plaintext to verify that it functions as designed and required, and it is validated using statistical randomness and Monte Carlo simulation tests. Finally, the techniques used to manage the secret key of the designed chaotic crypto-system, and to provide a digital fingerprint of the shared image to check its integrity, are presented.


Figure 1 Chaotic crypto-system

Shared Image

In this crypto-system, an image from which the secret key is extracted, rather than the secret key itself, is shared among all communicating (sending and receiving) parties. The image is shared publicly, like the public key in public-key encryption; only the information required to extract the key from the image is communicated secretly.

Figure 2 Grayscale image.

Keys with lengths less than the image size (width × height) are extracted from this shared image. The shared image used in this paper, from which a secret key is extracted, is the one portrayed in Fig. 2, but any other image that is not completely black or white can also be used as a key. The minimum key length allowed is 128 bits, as this is the minimum secure key length used in today's popular secret-key encryption algorithms. Above this, the key can be of any length as long as it is less than the size of the shared image.

The shared image is then processed to make it convenient to extract the secret key from its pixel values. The image processing here comprises reading the image, converting it to grayscale, and grabbing the pixel values of the grayscale image. If the image is RGB, it is first converted to grayscale, as portrayed in Fig. 2, using the method convertTogray(), and its pixel values, ranging from 0 to 255, are grabbed into a two-dimensional array. Then, attributes such as the width (w), height (h) and pixel values (ImagePixel) are accessed from the grayscale image in Fig. 2 as follows:

w = image.getWidth()                                (1)
h = image.getHeight()                               (2)
ImagePixel[w][h] = readGrayImagePixel(grayImage)    (3)
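The pixel-grabbing step can be sketched without any imaging library by holding an RGB image as nested lists. The conversion weights 0.299, 0.587 and 0.114 (the ITU-R BT.601 luma weights) are an assumption, since the internals of convertTogray() are not given. The two functions are hypothetical stand-ins for convertTogray() and the pixel-reading step of Eq. 3.

```python
def convert_to_gray(rgb):
    """rgb[y][x] = (r, g, b) with 0-255 channels -> gray[y][x] in 0..255 (BT.601 weights)."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row] for row in rgb]


def read_gray_pixels(gray):
    """Return (w, h, pixels) with pixels[x][y], mirroring ImagePixel[w][h] in Eq. 3."""
    h, w = len(gray), len(gray[0])
    pixels = [[gray[y][x] for y in range(h)] for x in range(w)]
    return w, h, pixels


# A 3 x 2 test image: red, green, blue on the first row; white, black, mid-gray on the second.
rgb = [[(255, 0, 0), (0, 255, 0), (0, 0, 255)],
       [(255, 255, 255), (0, 0, 0), (128, 128, 128)]]
w, h, pixels = read_gray_pixels(convert_to_gray(rgb))
```

Indexing the result as pixels[x][y] keeps the width-first convention of ImagePixel[w][h], so the indices produced later by the key-extraction loop can be used unchanged.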

Key Generation

Any secret key of length less than the size of the shared image can be extracted from the two-dimensional pixel values of the shared image stored in the 2D array ImagePixel[w][h] of Eq. 3:

key.length ≤ w × h                                  (4)

where w and h are obtained from Eqs. 1 and 2, respectively.

In this paper, the key is extracted from the 2D pixel values of the grayscale image using a full-period pseudo-random generator, a linear congruential generator (LCG) constructed over GF(m) with a period of m − 1. The extracted key, keyExtract, is then converted to binary values and finally substituted using seven-bit-input, five-bit-output S-boxes to obtain the final enciphering and deciphering key, keyFinal.

The pseudo-random generator used to extract a key from the grayscale image is given in Eq. 5, where 69,621 is the multiplier and $2^{31} - 1$ is the modulus; it is called a multiplicative LCG.

$$X_n = \left(69621\,X_{n-1}\right) \bmod \left(2^{31} - 1\right) \qquad (5)$$

The random numbers generated using this algorithm [20] are used as indices into the 2D array of pixels, ImagePixel[][], to extract a key from the pixel values of the grayscale image as follows, where X0 and X1 are seed values, each advanced by Eq. 5 at every step:

for i = 1 to key.length do
    X0 = (69621 * X0) mod (2^31 - 1)
    X1 = (69621 * X1) mod (2^31 - 1)
    idx1 = X0 mod w
    idx2 = X1 mod h
    keyExtract[i] = ImagePixel[idx1][idx2]
end
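A minimal sketch of the key-extraction loop follows. It assumes that each of the two index streams is advanced once per key element, the usual way an LCG is iterated, and that keyExtract holds raw 8-bit pixel values that are then expanded to keyBinary most-significant bit first. The seeds, helper names and tiny test image are hypothetical.

```python
M = 2**31 - 1    # prime modulus of Eq. 5
A = 69621        # multiplier of Eq. 5


def lcg(seed: int):
    """Multiplicative LCG X_n = A * X_{n-1} mod M; the seed must lie in 1..M-1."""
    x = seed
    while True:
        x = (A * x) % M
        yield x


def extract_key(pixels, w: int, h: int, length: int, seed0: int, seed1: int) -> list:
    """Pick `length` pixel values at LCG-generated positions pixels[idx1][idx2]."""
    g0, g1 = lcg(seed0), lcg(seed1)
    return [pixels[next(g0) % w][next(g1) % h] for _ in range(length)]


def to_bits(values) -> list:
    """Expand 8-bit pixel values into a flat bit list (MSB first), i.e. keyBinary."""
    return [(v >> k) & 1 for v in values for k in range(7, -1, -1)]


px = [[10, 20], [30, 40], [50, 60]]             # pixels[x][y] for w = 3, h = 2
key = extract_key(px, 3, 2, 16, 12345, 67890)   # 16 pixels -> 128 key bits
key_bits = to_bits(key)
```

Sixteen 8-bit pixels give the 128-bit minimum key length mentioned above. Because the modulus is prime and the generator is full-period, the seeds must be non-zero; a zero seed would stay at zero forever.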

The binary form of the extracted key, keyBinary[], is divided into 49-bit blocks, and each block is in turn divided into seven 7-bit pieces before being processed by the substitution boxes. Each of the seven S-boxes replaces its seven input bits with five output bits according to a non-linear transformation provided in the form of a look-up table. The S-boxes strengthen the security of the key: substituted bits are used instead of the actual bits randomly extracted from the shared image, thereby increasing the effort required of cryptanalysts who try to infer the key by brute-force analysis or chosen-ciphertext attacks.

The S-boxes in this algorithm serve more or less the same purpose as the S-boxes used in DES and AES, but they are different from those used in DES and AES. Seven S-boxes are used here. Each of them is constructed using a defined transformation of values in GF($2^5$) and comprises 4 unique rows and 32 columns. Each row comprises the 32 elements 0 to 31 in a thoroughly random sequence, and the rows are numbered 0 through 3.

The input bits are used as addresses in the S-box tables, each group of seven bits giving an address in a different S-box. The first and last bits of the 7-bit input indicate the row number, and the other five bits give the column number. The 5-bit number located at that address replaces the original seven bits. The net result is that the seven groups of 7 bits are transformed by the seven S-boxes into seven groups of 5 bits, 35 bits in total, to obtain keyFinal[] = S-Boxes(keyExt).
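The row/column addressing of the 7-bit-to-5-bit S-boxes can be sketched as below. The paper's actual tables are not given, so each hypothetical S-box here consists of four random permutations of 0..31 drawn from a fixed seed. Only the addressing rule (outer bits select the row, inner five bits the column) follows the text.

```python
import random


def make_sboxes(seed: int = 2011, count: int = 7) -> list:
    """Build `count` hypothetical S-boxes: 4 rows x 32 columns, each row a permutation of 0..31."""
    rng = random.Random(seed)
    return [[rng.sample(range(32), 32) for _ in range(4)] for _ in range(count)]


def sbox_lookup(box, bits7) -> list:
    """Map a 7-bit input (list of 0/1) to 5 output bits."""
    row = (bits7[0] << 1) | bits7[6]               # first and last bits -> row 0..3
    col = int("".join(map(str, bits7[1:6])), 2)    # middle five bits -> column 0..31
    value = box[row][col]
    return [(value >> k) & 1 for k in range(4, -1, -1)]


def substitute_block(boxes, block49) -> list:
    """Split a 49-bit block into seven 7-bit pieces; piece j goes through S-box j (35 bits out)."""
    out = []
    for j in range(7):
        out += sbox_lookup(boxes[j], block49[7 * j: 7 * j + 7])
    return out


boxes = make_sboxes()
key_final_block = substitute_block(boxes, [1, 0] * 24 + [1])
```

Since every row is a permutation, each S-box row is a bijection on 5-bit values. The compression from 7 to 5 bits comes from the two row-selection bits, which choose the table but are not copied to the output.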

Generation of Chaotic Attractors

In this paper, the required chaotic attractors are

generated using a hysteresis switched second order

linear system. The generation process comprises

calculation of initial conditions from keys

generated earlier, and solving the second order

linear system using the concept of second order

homogeneous differential equations.

Hysteresis Switched Second Order Linear

System

There are many techniques for generating chaos; in this paper a hysteresis-switched second-order linear system is used. It is a chaotic oscillator triggered only by its initial conditions: it has no inputs other than the initial conditions $X_0$ and $Y_0$. Once triggered by these values, it keeps oscillating and generating chaotic attractors for a time t, moving from one scroll to another through the feedback hysteresis series, with the number of scrolls set by n, as depicted in Fig. 3.

Figure 3 Chaotic oscillator [15].

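The mathematical description of the hysteresis-switched system in Fig. 3 is given by:

$$\begin{cases} \dot{x} = y \\ \dot{y} = -x + 2\delta y + H(x, n) \end{cases} \qquad (6)$$

where $X_0$ and $Y_0$ are the initial conditions, $\delta$ is a positive constant, $x$ and $y$ are state variables, $H(x, n)$ is a hysteresis series described in Eqs. 7 and 8, and $n$ is the number of scrolls.

$$H(x, n) = \sum_{i=1}^{n} h_i(x) \qquad (7)$$

and

$$h_i(x) = \begin{cases} 1, & x > i - 1 \\ 0, & x < i \end{cases} \qquad (8)$$

As usual for a hysteresis element, the two branches overlap for $i - 1 < x < i$, where $h_i$ keeps its previous value: it switches from 0 to 1 when $x$ rises to $i$ and from 1 back to 0 when $x$ falls to $i - 1$.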
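The hysteresis series of Eqs. 7 and 8 has memory, so it must be simulated as a state that is updated along the trajectory. The sketch below reads Eq. 8 in the usual hysteresis sense ($h_i$ switches up when $x$ reaches $i$ and down when $x$ falls to $i - 1$). It integrates system (6) with a fixed-step fourth-order Runge-Kutta scheme, holding $H$ constant during each step. The step size, run length and function names are illustrative choices, not values from the paper, and no claim is made that these settings reproduce Fig. 4.

```python
def update_hysteresis(states: list, x: float) -> list:
    """Return the new branch states h_1..h_n of Eq. 8 for the current value of x."""
    new_states = []
    for i, h in enumerate(states, start=1):
        if h == 0 and x >= i:          # lower branch is left at the upper threshold i
            h = 1
        elif h == 1 and x <= i - 1:    # upper branch is left at the lower threshold i - 1
            h = 0
        new_states.append(h)           # inside the band i-1 < x < i the old value is kept
    return new_states


def rhs(x: float, y: float, delta: float, H: int):
    """Right-hand side of system (6): x' = y, y' = -x + 2*delta*y + H."""
    return y, -x + 2.0 * delta * y + H


def simulate(x0, y0, delta, n, dt=0.01, steps=20000):
    """Integrate system (6) with fixed-step RK4, updating the hysteresis after each step."""
    states = update_hysteresis([0] * n, x0)
    x, y = x0, y0
    path = [(x, y)]
    for _ in range(steps):
        H = sum(states)                               # Eq. 7, frozen during the step
        k1 = rhs(x, y, delta, H)
        k2 = rhs(x + 0.5 * dt * k1[0], y + 0.5 * dt * k1[1], delta, H)
        k3 = rhs(x + 0.5 * dt * k2[0], y + 0.5 * dt * k2[1], delta, H)
        k4 = rhs(x + dt * k3[0], y + dt * k3[1], delta, H)
        x += dt / 6.0 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        y += dt / 6.0 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        states = update_hysteresis(states, x)
        path.append((x, y))
    return path
```

Between switching instants, H is a constant integer, so each segment is an outward spiral (for $\delta > 0$) about the equilibrium $x = H$. The hysteresis decides when the centre moves to a neighbouring scroll.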

Solution of Second Order Linear System

Differentiating both sides of the first equation of system (6) produces the following:

$$\frac{d^2x}{dt^2} = \frac{dy}{dt} \qquad (9)$$


Then dy/dt in Eq. 9 is replaced by the equivalent expression given in the second equation of system (6). Hence, Eq. 9 becomes:

$$\frac{d^2x}{dt^2} = \frac{dy}{dt} = -x + 2\delta\frac{dx}{dt} + H(x, n) \qquad (10)$$

Rearranging Eq. 10 gives an equation of the form

$$a\frac{d^2x}{dt^2} + b\frac{dx}{dt} + cx = f(x) \qquad (11)$$

Taking f(x) = 0 yields the homogeneous equation, which is solved by letting $x = e^{mt}$, so that $dx/dt = m e^{mt}$ and $d^2x/dt^2 = m^2 e^{mt}$:

$$e^{mt}\left(am^2 + bm + c\right) = 0 \qquad (12)$$

Then, if $e^{mt}$ is to be a solution,

$$am^2 + bm + c = 0 \;\Rightarrow\; m = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a} \qquad (13)$$

Using the values of $m_1$ and $m_2$ obtained from Eq. 13, the solutions are $x_1 = e^{m_1 t}$ and $x_2 = e^{m_2 t}$. Combining both solutions gives the general solution

$$x = c_1 x_1 + c_2 x_2 = c_1 e^{m_1 t} + c_2 e^{m_2 t} \qquad (14)$$

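If the system is to generate chaos, the roots of Eq. 13 must be complex, so that the solution oscillates. From Eq. 10, $a = 1$, $b = -2\alpha$ and $c = 1$, and for $\alpha^2 < 1$ the roots take the form $m = \alpha \pm i\beta$:

$$m = \alpha \pm \sqrt{\alpha^2 - 1} = \alpha \pm i\beta \qquad (15)$$

Hence, $\beta = \sqrt{1 - \alpha^2}$, obtained by solving Eq. 13.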
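The root computation of Eqs. 13 and 15 can be checked numerically. This sketch uses Python's `cmath.sqrt`, which returns a complex value for a negative discriminant, so the complex pair comes out directly; the function name `characteristic_roots` is illustrative, and the value of $\alpha$ is the one reported for Fig. 4.

```python
import cmath
import math


def characteristic_roots(a, b, c):
    """Return the roots m1, m2 of a*m**2 + b*m + c = 0 (Eq. 13).

    cmath.sqrt handles a negative discriminant, giving complex roots.
    """
    root_disc = cmath.sqrt(b * b - 4 * a * c)
    return (-b + root_disc) / (2 * a), (-b - root_disc) / (2 * a)


alpha = 0.0049                                         # value reported for Fig. 4
m1, m2 = characteristic_roots(1.0, -2.0 * alpha, 1.0)  # a = 1, b = -2*alpha, c = 1
beta = math.sqrt(1.0 - alpha ** 2)                     # Eq. 15, requires alpha**2 < 1

print(m1, m2)  # approximately alpha + i*beta and alpha - i*beta
```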

Euler's formulae:

$$e^{i\theta} = \cos\theta + i\sin\theta, \qquad e^{-i\theta} = \cos\theta - i\sin\theta \qquad (16)$$

If $m_1 = \alpha + i\beta$ and $m_2 = \alpha - i\beta$, then, using Euler's formulae in Eq. 16, the general solution in Eq. 14 becomes:

$$x(t) = e^{\alpha t}\left[A\cos\beta t + B\sin\beta t\right] \qquad (17)$$
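The step from Eq. 14 to Eq. 17 can be written out explicitly. Substituting Eq. 16 into Eq. 14 and collecting terms gives the following; the identification of $A$ and $B$ is the standard one for real-valued solutions.

```latex
x(t) = c_1 e^{(\alpha + i\beta)t} + c_2 e^{(\alpha - i\beta)t}
     = e^{\alpha t}\left[c_1(\cos\beta t + i\sin\beta t) + c_2(\cos\beta t - i\sin\beta t)\right]
     = e^{\alpha t}\left[(c_1 + c_2)\cos\beta t + i(c_1 - c_2)\sin\beta t\right],
\qquad A = c_1 + c_2, \quad B = i(c_1 - c_2).
```

For $x(t)$ to be real, $c_1$ and $c_2$ must be complex conjugates, which makes $A$ and $B$ real.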

The solution for the state variable y is therefore obtained as follows:

$$y(t) = \frac{dx}{dt} = \frac{d}{dt}\left(e^{\alpha t}\left[A\cos\beta t + B\sin\beta t\right]\right) \qquad (18)$$

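Applying the initial conditions $x(0) = X_0$ and $y(0) = Y_0$ to Eqs. 17 and 18 gives $A = X_0$ and $B = (Y_0 - \alpha X_0)/\beta$, which produces

$$\begin{aligned} x(t) &= e^{\alpha t}\left[X_0\cos\beta t + \frac{Y_0 - \alpha X_0}{\beta}\sin\beta t\right] \\ y(t) &= e^{\alpha t}\left[Y_0\cos\beta t + \frac{\alpha Y_0 - X_0}{\beta}\sin\beta t\right] \end{aligned} \qquad (19)$$

where the sine coefficient of $y(t)$, $\alpha(Y_0 - \alpha X_0)/\beta - \beta X_0$, has been simplified using $\alpha^2 + \beta^2 = 1$.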
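Eq. 19 can be sanity-checked numerically. The sketch below evaluates the closed form and uses central finite differences to confirm that it satisfies $\dot{x} = y$ and $\dot{y} = -x + 2\alpha y$ (the homogeneous part of system 6, i.e. with $H(x, n) = 0$) and that it reproduces the initial conditions. The helper names `closed_form` and `residuals`, the step `h`, and the sample values are illustrative.

```python
import math


def closed_form(t, x0, y0, alpha):
    """Evaluate Eq. 19 for |alpha| < 1, with beta = sqrt(1 - alpha**2)."""
    beta = math.sqrt(1.0 - alpha ** 2)
    growth = math.exp(alpha * t)
    c, s = math.cos(beta * t), math.sin(beta * t)
    x = growth * (x0 * c + (y0 - alpha * x0) / beta * s)
    y = growth * (y0 * c + (alpha * y0 - x0) / beta * s)
    return x, y


def residuals(t, x0, y0, alpha, h=1e-5):
    """Central-difference residuals of x' = y and y' = -x + 2*alpha*y."""
    xp, yp = closed_form(t + h, x0, y0, alpha)
    xm, ym = closed_form(t - h, x0, y0, alpha)
    x, y = closed_form(t, x0, y0, alpha)
    dx = (xp - xm) / (2 * h)
    dy = (yp - ym) / (2 * h)
    return dx - y, dy - (-x + 2 * alpha * y)


x0, y0, alpha = 0.3, -0.2, 0.0049
print(closed_form(0.0, x0, y0, alpha))  # (x0, y0) up to rounding
print(residuals(2.5, x0, y0, alpha))    # both residuals close to zero
```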

Calculation of Initial Conditions

In this paper, the initial conditions of system 6 are calculated from the bit values of the keyFinal array, which is the output of the S-Boxes, as follows:

$$X_0 = \text{var} + \text{keyFinalX}, \qquad Y_0 = \text{var} + \text{keyFinalY} \qquad (20)$$

where var is a user-defined value kept secret as part of the key, and keyFinalX and keyFinalY are two different keys generated using different seed values for the LCG.
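How the keyFinal bit arrays are turned into numbers, and which LCG parameters are used, is not specified here. The sketch below therefore assumes, purely for illustration, that each bit array is read as a binary fraction and that the bits come from a textbook 32-bit LCG (multiplier 1664525, increment 1013904223, top bit of each state) standing in for the LCG and S-box pipeline. `lcg_bits`, `bits_to_fraction`, `initial_conditions`, the seeds, and these constants are all hypothetical. A double-precision float keeps only 53 significant bits, so under this mapping only about the first 53 bits of each array affect $X_0$ and $Y_0$.

```python
def lcg_bits(seed, nbits, a=1664525, c=1013904223, m=2 ** 32):
    """HYPOTHETICAL bit source: one bit (the top bit of the 32-bit state)
    per LCG step. Stands in for the paper's LCG + S-box pipeline."""
    bits = []
    state = seed % m
    for _ in range(nbits):
        state = (a * state + c) % m
        bits.append(state >> 31)
    return bits


def bits_to_fraction(bits):
    """ASSUMED mapping: read [b1, b2, ...] as the binary fraction 0.b1b2...
    (the result lies in [0, 1])."""
    value = 0.0
    for k, bit in enumerate(bits, start=1):
        value += bit * 2.0 ** -k
    return value


def initial_conditions(var, key_final_x, key_final_y):
    """Eq. 20: X0 = var + keyFinalX and Y0 = var + keyFinalY."""
    return var + bits_to_fraction(key_final_x), var + bits_to_fraction(key_final_y)


# Two different seeds give the two key arrays, as described for the LCG.
key_x = lcg_bits(seed=12345, nbits=64)
key_y = lcg_bits(seed=67890, nbits=64)
X0, Y0 = initial_conditions(0.25, key_x, key_y)  # var = 0.25 is an arbitrary example
```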

Figure 4 Five-scroll chaotic attractors

Alem Haddush Fitwi and Dr. Sayed Nouh

22 Journal of EEA, Vol. 28, 2011

Figure 4 portrays the 5-scroll chaotic attractors generated using the solutions of system 6 given in Eq. 19. The chaos is generated using a key of length 136 bits, $\alpha = 0.0049$, and $1 - \alpha^2 = 0.999976$. The chaos is sensitive to these values, and a secure range of values is determined using an algorithm based on the monobit test, described later.

Enciphering Process

During the enciphering process, the plaintext is mixed with the generated chaos using the bitwise XOR operator, as depicted in Fig. 5.

Figure 5 Enciphering process

During chaos processing, three equal sections, namely upper, middle, and lower, are cropped from the overall balanced chaos shown in Fig. 4, converted to grayscale as depicted in Figs. 6(a), (b), and (c), and XORed together, as in Fig. 6(d), to further improve the balance of 1s and 0s by avoiding localized imbalances. The combination of the three crops is then resized to the size of the input plaintext, as portrayed in Fig. 7. Through this process, the security properties of one-way encryption, semantic security, and indistinguishability are achieved.
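The cropping, mixing, and resizing steps above can be sketched as follows, assuming the balanced chaos of Fig. 4 is already available as a grayscale image stored as a list of equal-length rows of 0-255 integers. The three crops are taken as equal horizontal bands, and "resizing" is approximated by nearest-neighbour resampling of the flattened mixed image to the plaintext length. Both choices, and the names `crop_and_mix`, `resize_to`, and `encipher`, are illustrative assumptions rather than the authors' implementation.

```python
def crop_and_mix(chaos):
    """XOR the upper, middle and lower thirds of a grayscale chaos image
    (a list of equal-length rows of 0-255 ints), as in Fig. 6(d).
    Needs at least 3 rows; leftover rows beyond 3 * (rows // 3) are dropped."""
    h = len(chaos) // 3
    upper, middle, lower = chaos[:h], chaos[h:2 * h], chaos[2 * h:3 * h]
    return [
        [a ^ b ^ c for a, b, c in zip(row_u, row_m, row_l)]
        for row_u, row_m, row_l in zip(upper, middle, lower)
    ]


def resize_to(mixed, length):
    """Nearest-neighbour resampling of the flattened mixed chaos to `length`
    values (an assumed stand-in for the image resize of Fig. 7)."""
    flat = [p for row in mixed for p in row]
    return [flat[(k * len(flat)) // length] for k in range(length)]


def encipher(plaintext: bytes, chaos) -> bytes:
    """XOR each plaintext byte with the resized, mixed chaos (Fig. 5)."""
    keystream = resize_to(crop_and_mix(chaos), len(plaintext))
    return bytes(p ^ k for p, k in zip(plaintext, keystream))
```

Because the keystream depends only on the chaos image, applying `encipher` to the ciphertext with the same image returns the plaintext.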

(a) Upper crop

(b) Middle crop

(c) Lower crop


(d) Mixed = a XOR b XOR c

Figure 6 Chaos cropping and mixing.

Figure 7 Resized balanced chaos.

Deciphering Process

Deciphering in this chaotic algorithm is essentially the same as enciphering. The same key used during enciphering is used during deciphering, but the ciphertext, rather than the plaintext, is the input to the chaotic algorithm. The ciphertext, which is the output of the enciphering process, is XORed with the appropriately sized chaos regenerated from the same key. This recovers the plaintext because XOR is its own inverse: $(P \oplus K) \oplus K = P$ for plaintext $P$ and chaos $K$.
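The deciphering rule relies only on XOR being its own inverse. A minimal sketch follows, with an arbitrary placeholder keystream standing in for the resized chaos; the keystream formula and the name `xor_bytes` are hypothetical.

```python
def xor_bytes(data: bytes, keystream: bytes) -> bytes:
    """XOR data with a keystream that is at least as long as the data."""
    if len(keystream) < len(data):
        raise ValueError("keystream must be at least as long as the data")
    return bytes(d ^ k for d, k in zip(data, keystream))


plaintext = b"How do we protect our most valuable assets?"
# Placeholder keystream; in the paper this role is played by the resized chaos.
keystream = bytes((37 * i + 11) % 256 for i in range(len(plaintext)))

ciphertext = xor_bytes(plaintext, keystream)  # enciphering
recovered = xor_bytes(ciphertext, keystream)  # deciphering: same key, same operation
print(recovered == plaintext)                 # True
```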

Design Test

To verify that the designed chaotic algorithm functions as required, a sample plaintext was enciphered and then deciphered. The plaintext is loaded through the GUI depicted in Fig. 8 and enciphered into an unintelligible form. The ciphertext is then deciphered to check whether the chaotic deciphering process fully recovers the plaintext. Portions of the plaintext, ciphertext, and decrypted text, copied from the text areas of the GUI in Fig. 8, are shown below; the plaintext and the decrypted text are identical, verifying that the chaotic algorithm works as designed.

Plaintext = How do we protect our most valuable assets?

Ciphertext = :"u1:u"0u%':!06!u:'u8:&!u#494790u4&&0!&ju;

Deciphered text = How do we protect our most valuable assets?

In addition, a number of NIST statistical tests and a Monte Carlo simulation test were performed on the chaotic sequence to compare it with a truly random sequence; the NIST results are summarized in Table 1. Every P-value in Table 1 exceeds the 0.01 decision threshold, so the sequence passes each listed test, which validates the algorithm.

Figure 8 Chaotic crypto system


Table 1: Summary of test results

NIST test         | Objective                     | P-value
Monobit           | Proportion of 1s and 0s       | 0.9491
Block Frequency   | Proportion of 1s and 0s       | 0.9998
Runs              | Oscillation between 1 and 0   | 0.9542
Spectral          | Reveal periodicities          | 0.0695
Linear Complexity | Length of LFSR*               | 0.8030

Decision rule: a test is passed if its P-value is at least 0.01.

*LFSR = Linear Feedback Shift Register
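The Monobit and Runs entries in Table 1 correspond to tests defined in NIST SP 800-22. The sketch below implements those two tests from the published definitions. It is not the authors' code, and the other three tests in the table are omitted; the function names are illustrative. A sequence passes a test when its P-value is at least 0.01.

```python
import math


def monobit_p_value(bits):
    """NIST SP 800-22 frequency (monobit) test on a list of 0/1 ints."""
    n = len(bits)
    s_n = sum(2 * b - 1 for b in bits)      # +1 for each 1, -1 for each 0
    s_obs = abs(s_n) / math.sqrt(n)
    return math.erfc(s_obs / math.sqrt(2))


def runs_p_value(bits):
    """NIST SP 800-22 runs test on a list of 0/1 ints."""
    n = len(bits)
    pi = sum(bits) / n                      # proportion of ones
    if pi in (0.0, 1.0) or abs(pi - 0.5) >= 2 / math.sqrt(n):
        return 0.0                          # prerequisite frequency check failed
    v_obs = 1 + sum(bits[k] != bits[k + 1] for k in range(n - 1))  # number of runs
    num = abs(v_obs - 2 * n * pi * (1 - pi))
    den = 2 * math.sqrt(2 * n) * pi * (1 - pi)
    return math.erfc(num / den)


def passes(p_value, significance=0.01):
    """Decision rule of Table 1."""
    return p_value >= significance
```

The worked examples in NIST SP 800-22 can serve as a check: the sequence 1011010101 gives a monobit P-value of about 0.527089, and 1001101011 gives a runs P-value of about 0.147232.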

Key Exchange