Improved Closed-Form Prediction Intervals for Binomial and Poisson Distributions

K. Krishnamoorthy^a and Jie Peng^b

^a Department of Mathematics, University of Louisiana at Lafayette, Lafayette, LA 70504

^b Department of Finance, Economics and Decision Science, St. Ambrose University, Davenport, Iowa 52803

The problems of constructing prediction intervals for the binomial and Poisson distributions are considered. Available approximate, exact and conditional methods for both distributions are reviewed and compared. Simple approximate prediction intervals based on the joint distribution of the past samples and the future sample are proposed. Exact coverage studies and expected widths of prediction intervals show that the new prediction intervals are comparable to or better than the available ones in most cases. The methods are illustrated using two practical examples.

Key Words: Clopper-Pearson approach; Conditional approach; Coverage probability; Hypergeometric distribution.

^a Corresponding author. Tel.: +1 337 482 5283; fax: +1 337 482 5346. E-mail address: krishna@louisiana.edu (K. Krishnamoorthy).

1. Introduction

In many practical situations, one needs to predict the values of a future random variable based on the past and currently available samples. Extensive literature is available for constructing prediction intervals (PIs) for various continuous probability distributions and other continuous models such as linear regression and one-way random models. Applications of PIs based on continuous distributions are well known; for example, the prediction intervals based on gamma distributions are often used in environmental monitoring (Gibbons, 1987), and normal-based PIs are used in monitoring and control problems (Davis and McNichols, 1987). Other applications and examples can be found in the book by Aitchison and Dunsmore (1980). Compared to continuous distributions, results on constructing PIs for discrete distributions are very limited. Prediction intervals for a discrete distribution are used to predict the number of events that may occur in the future. For example, a manufacturer may be interested in assessing the number of defective items in a future production process based on available samples. Faulkenberry (1973) provided an illustrative example in which the number of breakdowns of a system in a year follows a Poisson distribution, and the objective is to predict the number of breakdowns in a future year based on available samples. Bain and Patel (1993) have noted a situation where it is desired to construct binomial prediction intervals (see Section 4).

The prediction problem that we will address concerns two independent binomial samples with the same success probability $p$. Given that $X$ successes are observed in $n$ independent Bernoulli trials, we would like to predict the number of successes $Y$ in another $m$ independent Bernoulli trials. In particular, we would like to find a prediction interval $[L(X; n, m, \alpha), U(X; n, m, \alpha)]$ so that

$$P_{X,Y}\left(L(X; n, m, \alpha) \le Y \le U(X; n, m, \alpha)\right) \ge 1 - 2\alpha.$$

As the conditional distribution of one of the variables given the sum $X + Y$ does not depend on the parameter $p$, prediction intervals can be constructed based on the conditional distribution. Thatcher (1964) noted that a PI for $Y$ can be obtained from the conditional distribution of $X$ given $X + Y = s$, which is hypergeometric (with sample size $s$, number of defects $n$, and the lot size $n + m$) and does not depend on $p$. Thatcher's method is similar in nature to Clopper and Pearson's (1934) fiducial approach for constructing exact confidence intervals for a binomial success probability. Knüsel (1994) has pointed out that the acceptance regions of two-sample tests (one-sided tests for comparing success probabilities of $X$ and $Y$) are exact PIs for $Y$. We found that the PIs for the binomial case given in Knüsel (1994), and the one described in Aitchison and Dunsmore (1980, Section 5.5), are indeed the same as the exact PIs that can be obtained by Thatcher's (1964) method. Faulkenberry (1973) provided a general method of constructing a PI on the basis of the conditional distribution given a complete sufficient statistic. He illustrated his general approach by constructing PIs for the exponential and Poisson distributions based on the conditional distribution of $Y$ (the variable to be predicted) given the sum $X + Y$. Bain and Patel (1993) have applied Faulkenberry's approach to construct PIs for the binomial, negative binomial and hypergeometric distributions. Dunsmore (1976) has shown that Faulkenberry's approach is not quite as general as claimed, and provided a counterexample. Knüsel (1994, Section 3) noted that Faulkenberry's approach is correct for the continuous case, but it is not exact for the Poisson case.

Apart from the conditional methods given in the preceding paragraph, there is an asymptotic approach proposed in Nelson (1982), which is also reviewed in Hahn and Meeker (1991). Nelson's PI is based on the asymptotic normality of a standard pivotal statistic, and it is easier to compute than the ones based on the conditional approaches. However, Wang's (2008) coverage studies and our studies (see Figures 1b and 3) indicate that Nelson's PIs have poor coverage probabilities even for large samples. Wang considered a modified Nelson's PI in which a factor has to be determined so that the minimum coverage probability is close to the nominal confidence level. Wang has provided a numerical approach to find the factor. A reviewer has brought to our attention that Wang (2010) has proposed another closed-form PI for a binomial distribution. It should be noted that the exact and conditional PIs were not included in Wang's (2008, 2010) coverage studies.

All the methods for the binomial case are also applicable for the Poisson case as shown in the sequel.

In this article, we propose closed-form approximate PIs based on the joint sampling approach, which is similar to the one used to find a confidence interval in a calibration problem (e.g., see Brown, 1982, Section 1.2). The proposed PIs are simple to compute, and they are comparable to or better than the available PIs in most cases. Furthermore, we show that the conditional PIs are narrower than the corresponding exact PIs except for the extreme cases where the conditional PIs are not defined. We also show that the new PI is included in or identical to Wang's (2010) PI.

The rest of the article is organized as follows. In the following section, we review the conditional methods, Nelson's PIs and Wang's (2010) PIs, and present the new PIs based on the joint sampling approach for the binomial case. The exact coverage probabilities and expected widths of the PIs are evaluated. The results for the binomial case are extended to the Poisson distribution in Section 3. Construction of the binomial and Poisson PIs is illustrated using two examples in Section 4. Some concluding remarks are given in Section 5.

2. Binomial Distribution

Let $X \sim \text{binomial}(n, p)$ independently of $Y \sim \text{binomial}(m, p)$. The problem is to find a $1 - 2\alpha$ prediction interval for $Y$ based on $X$. The conditional distribution of $X$ given the sum $X + Y = s$ is hypergeometric with the sample size $s$, number of non-defects $n$, and the lot size $n + m$. The conditional probability mass function is given by

$$P(X = x \mid X + Y = s, n, n + m) = \frac{\binom{n}{x}\binom{m}{s-x}}{\binom{m+n}{s}}, \quad \max\{0, s - m\} \le x \le \min\{n, s\}.$$

Let us denote the cumulative distribution function (cdf) of $X$ given $X + Y = s$ by $H(t; s, n, n + m)$. That is,

$$H(t; s, n, n + m) = P(X \le t \mid s, n, n + m) = \sum_{i=0}^{t} \frac{\binom{n}{i}\binom{m}{s-i}}{\binom{m+n}{s}}. \qquad (1)$$

Note that the conditional cdf of $Y$ given $X + Y = s$ is given by $H(t; s, m, n + m)$.

2.1 Binomial Prediction Intervals

2.1.1 The Exact Prediction Interval

Thatcher (1964) developed the following exact PI on the basis of the conditional distribution of $X$ given $X + Y$. Let $x$ be an observed value of $X$. The $1 - \alpha$ lower prediction limit $L$ is the smallest integer for which

$$P(X \ge x \mid x + L, n, n + m) = 1 - H(x - 1; x + L, n, n + m) > \alpha. \qquad (2)$$

The $1 - \alpha$ upper prediction limit $U$ is the largest integer for which

$$H(x; x + U, n, n + m) > \alpha. \qquad (3)$$

Furthermore, $[L, U]$ is a $1 - 2\alpha$ two-sided PI for $Y$. Thatcher (1964) has noted that, for a fixed $(x, n, m)$, the probability in (3) is a decreasing function of $U$, and so a backward search, starting from $m$, can be used to find the largest integer $U$ for which the probability in (3) is just greater than $\alpha$. Similarly, we see that the probability in (2) is an increasing function of $L$, and so a forward search, starting from a small value, can be used to find the smallest integer $L$ for which this probability is just greater than $\alpha$.

The exact PIs for extreme values of $X$ are defined as follows. When $x = 0$, the lower prediction limit for $Y$ is 0, and the upper one is determined by (3); when $x = n$, the upper prediction limit is $m$, and the lower prediction limit is determined by (2).
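The forward and backward searches described above can be sketched in a few lines. This is a minimal illustration, not the authors' code: the function names `hyper_cdf` and `exact_pi` are hypothetical, and the hypergeometric cdf is evaluated directly from binomial coefficients with Python's standard library.

```python
from math import comb


def hyper_cdf(t, s, k, lot):
    """H(t; s, k, lot): P(X <= t) when s items are drawn without replacement
    from a lot of size `lot` containing k items of the counted type."""
    lo = max(0, s - (lot - k))          # smallest possible count
    hi = min(t, k, s)                   # largest count not exceeding t
    if hi < lo:
        return 0.0
    num = sum(comb(k, i) * comb(lot - k, s - i) for i in range(lo, hi + 1))
    return num / comb(lot, s)


def exact_pi(x, n, m, alpha):
    """Two-sided 1 - 2*alpha exact PI [L, U] for Y from conditions (2) and (3)."""
    lot = n + m
    # (2): forward search for the smallest L with 1 - H(x-1; x+L, n, n+m) > alpha
    lower = next(L for L in range(m + 1)
                 if 1.0 - hyper_cdf(x - 1, x + L, n, lot) > alpha)
    # (3): backward search from m for the largest U with H(x; x+U, n, n+m) > alpha
    upper = next(U for U in range(m, -1, -1)
                 if hyper_cdf(x, x + U, n, lot) > alpha)
    return lower, upper


# Data of the binomial example in Section 4.1 (n = 400, x = 20, m = 100, 90% PI)
print(exact_pi(20, 400, 100, 0.05))  # (1, 10)
```

The extreme cases need no special handling in this sketch: for x = 0 the probability in (2) equals 1 at L = 0, and for x = n the probability in (3) equals 1 at U = m.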

2.1.2 The Conditional Prediction Interval

Bain and Patel (1993) used Faulkenberry's (1973) conditional approach to develop a PI for $Y$. As noted earlier, this PI is based on the conditional distribution of $Y$ given $X + Y$, and is described as follows. Recall that the conditional cdf of $Y$ given $X + Y = s$ is given by $P(Y \le t \mid X + Y = s) = H(t; s, m, n + m)$. Let $x$ be an observed value of $X$. The $1 - \alpha$ lower prediction limit $L$ for $Y$ is the largest integer so that

$$H(L - 1; x + L, m, n + m) \le \alpha, \qquad (4)$$

and the $1 - \alpha$ upper prediction limit $U$ is the smallest integer for which

$$H(U; x + U, m, n + m) \ge 1 - \alpha. \qquad (5)$$

Furthermore, $[L, U]$ is the $1 - 2\alpha$ two-sided PI for $Y$. As noted by Dunsmore (1976), Faulkenberry's method, in general, is not exact. We also note that the above prediction interval is not defined at the extreme cases $X = 0$ and $X = n$.
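Conditions (4) and (5) translate into two short scans over the candidate limits. The sketch below is illustrative only: `hyper_cdf` and `conditional_pi` are hypothetical names, and raising an error at X = 0 or X = n is one possible way to reflect that the interval is not defined there.

```python
from math import comb


def hyper_cdf(t, s, k, lot):
    """H(t; s, k, lot): P(count <= t) when s items are drawn without
    replacement from a lot of size `lot` containing k counted items."""
    lo = max(0, s - (lot - k))
    hi = min(t, k, s)
    if hi < lo:
        return 0.0
    num = sum(comb(k, i) * comb(lot - k, s - i) for i in range(lo, hi + 1))
    return num / comb(lot, s)


def conditional_pi(x, n, m, alpha):
    """Two-sided 1 - 2*alpha conditional PI [L, U] for Y from (4) and (5)."""
    if x <= 0 or x >= n:
        raise ValueError("the conditional PI is not defined for X = 0 or X = n")
    lot = n + m
    # (4): largest L with H(L-1; x+L, m, n+m) <= alpha (L = 0 always qualifies)
    lower = max(L for L in range(m + 1)
                if hyper_cdf(L - 1, x + L, m, lot) <= alpha)
    # (5): smallest U with H(U; x+U, m, n+m) >= 1 - alpha
    upper = next(U for U in range(m + 1)
                 if hyper_cdf(U, x + U, m, lot) >= 1.0 - alpha)
    return lower, upper


# Data of the binomial example in Section 4.1
print(conditional_pi(20, 400, 100, 0.05))  # (2, 9)
```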

2.1.3 The Nelson Prediction Interval

Let $\hat{p} = X/n$, $\hat{q} = 1 - \hat{p}$ and $\hat{Y} = m\hat{p}$. Using the variance estimate $\widehat{\mathrm{var}}(\hat{Y} - Y) = m\hat{p}\hat{q}(1 + m/n)$, Nelson (1982) proposed an approximate PI based on the asymptotic result that

$$\frac{\hat{Y} - Y}{\sqrt{\widehat{\mathrm{var}}(\hat{Y} - Y)}} = \frac{\hat{Y} - Y}{\sqrt{m\hat{p}\hat{q}(1 + m/n)}} \sim N(0, 1). \qquad (6)$$

The resulting $1 - 2\alpha$ PI is given by $\hat{Y} \pm z_{1-\alpha}\sqrt{m\hat{p}\hat{q}\left(1 + \frac{m}{n}\right)}$, where $z_{\alpha}$ is the $100\alpha$ percentile of the standard normal distribution. Notice that the above PI is not defined when $X = 0$ or $n$. As $X$ assumes these values with positive probabilities, the coverage probabilities of the above PI are expected to be much smaller than the nominal level when $p$ is at the boundary (Wang, 2008). To overcome the poor coverage probabilities at the boundary, we can define the sample proportion as $\hat{p} = 0.5/n$ if $X = 0$ and $\hat{p} = (n - 0.5)/n$ if $X = n$. This type of adjustment is commonly used to handle extreme cases while estimating the odds ratio or the relative risk involving two binomial distributions (e.g., see Agresti, 1999). Since we are predicting a discrete random variable, the PI is formed by the set of integer values of $Y$ satisfying $\frac{|\hat{Y} - Y|}{\sqrt{m\hat{p}\hat{q}(1 + m/n)}} < z_{1-\alpha}$, and is given by

$$\left[\left\lceil \hat{Y} - z_{1-\alpha}\sqrt{\hat{Y}(m - \hat{Y})\left(\frac{1}{m} + \frac{1}{n}\right)} \right\rceil, \; \left\lfloor \hat{Y} + z_{1-\alpha}\sqrt{\hat{Y}(m - \hat{Y})\left(\frac{1}{m} + \frac{1}{n}\right)} \right\rfloor\right], \qquad (7)$$

where $\lceil x \rceil$ is the smallest integer greater than or equal to $x$, and $\lfloor x \rfloor$ is the largest integer less than or equal to $x$.
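As a quick illustration, the interval in (7), together with the boundary adjustment for X = 0 and X = n, can be computed as follows. The function name `nelson_binomial_pi` is hypothetical; the normal percentile comes from `statistics.NormalDist` in the Python standard library, and no truncation to [0, m] is applied because (7) does not state one.

```python
from math import ceil, floor, sqrt
from statistics import NormalDist


def nelson_binomial_pi(x, n, m, alpha):
    """Nelson's 1 - 2*alpha PI (7) for Y ~ binomial(m, p), given X = x out of n."""
    # Boundary adjustment from the text: 0.5 replaces X = 0, n - 0.5 replaces X = n.
    x_adj = 0.5 if x == 0 else (n - 0.5 if x == n else x)
    z = NormalDist().inv_cdf(1.0 - alpha)      # z_{1-alpha}
    y_hat = m * x_adj / n                      # predicted value m * p_hat
    half = z * sqrt(y_hat * (m - y_hat) * (1.0 / m + 1.0 / n))
    return ceil(y_hat - half), floor(y_hat + half)


# Data of the binomial example in Section 4.1 (90% PI, so alpha = 0.05)
print(nelson_binomial_pi(20, 400, 100, 0.05))  # (1, 9)
```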

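2.1.4 Wang's Prediction Interval

Wang (2010) proposed a PI by employing an approach similar to the construction of the Wilson score CI for the binomial proportion $p$. Wang's PI is based on the result that

$$W(m, n, X, Y) = \frac{Y - m\hat{p}}{\left[\left(\frac{X + Y + c/2}{m + n + c}\right)\left(1 - \frac{X + Y + c/2}{m + n + c}\right)\frac{m(m + n)}{n}\right]^{1/2}} \sim N(0, 1), \text{ approximately}, \qquad (8)$$

where $c = z^2_{1-\alpha}$. The $1 - 2\alpha$ PI is the set of integer values of $Y$ that satisfy $|W(m, n, X, Y)| < z_{1-\alpha}$. To write the PI explicitly, let

$$A = mn\left[2Xz^2_{1-\alpha}(n + m + z^2_{1-\alpha}) + (2X + z^2_{1-\alpha})(m + n)^2\right],$$

$$B = mn(m + n)z^2_{1-\alpha}(m + n + z^2_{1-\alpha})^2\left\{2(n - X)\left[n^2(2X + z^2_{1-\alpha}) + 4mnX + 2m^2X\right] + nz^2_{1-\alpha}\left[n(2X + z^2_{1-\alpha}) + 3mn + m^2\right]\right\},$$

$$C = 2n\left[(n + z^2_{1-\alpha})\left(m^2 + n(n + z^2_{1-\alpha})\right) + mn(2n + 3z^2_{1-\alpha})\right].$$

In terms of the above notation, Wang's PI is given by $[\lceil L \rceil, \lfloor U \rfloor]$, where

$$(L, U) = \frac{A}{C} \pm \frac{\sqrt{B}}{C}. \qquad (9)$$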
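The closed form (9) is easy to mistype, so a short numerical sketch may help when checking an implementation. The function name `wang_pi` is hypothetical, and the groupings of terms in A, B and C follow the reading of (9) given here; treat the output as a check of that reading rather than as reference code.

```python
from math import ceil, floor, sqrt
from statistics import NormalDist


def wang_pi(x, n, m, alpha):
    """Wang's 1 - 2*alpha PI from the closed form (9), with c = z_{1-alpha}^2."""
    c = NormalDist().inv_cdf(1.0 - alpha) ** 2
    a = m * n * (2 * x * c * (n + m + c) + (2 * x + c) * (m + n) ** 2)
    b = (m * n * (m + n) * c * (m + n + c) ** 2
         * (2 * (n - x) * (n**2 * (2 * x + c) + 4 * m * n * x + 2 * m**2 * x)
            + n * c * (n * (2 * x + c) + 3 * m * n + m**2)))
    cc = 2 * n * ((n + c) * (m**2 + n * (n + c)) + m * n * (2 * n + 3 * c))
    lower = (a - sqrt(b)) / cc
    upper = (a + sqrt(b)) / cc
    return ceil(lower), floor(upper)


# 90% PI for the data of Section 4.1, and a 95% PI for (n, m) = (40, 20), X = 16
print(wang_pi(20, 400, 100, 0.05))   # (2, 9)
print(wang_pi(16, 40, 20, 0.025))    # (3, 13)
```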

2.1.5 The Prediction Interval based on the Joint Sampling Approach

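The PI that we propose is based on the complete sufficient statistic $X + Y$ for the binomial$(n + m, p)$ distribution. Define $\hat{p}_{xy} = \frac{X + Y}{n + m}$, and $\widehat{\mathrm{var}}(m\hat{p}_{xy} - Y) = mn\hat{p}_{xy}\hat{q}_{xy}/(n + m)$, where $\hat{q}_{xy} = 1 - \hat{p}_{xy}$. We can find a PI for $Y$ based on the asymptotic joint sampling distribution that

$$\frac{m\hat{p}_{xy} - Y}{\sqrt{\widehat{\mathrm{var}}(m\hat{p}_{xy} - Y)}} = \frac{mX - nY}{\sqrt{mn\hat{p}_{xy}\hat{q}_{xy}(n + m)}} \sim N(0, 1). \qquad (10)$$

To handle the extreme cases of $X$, let us define $X$ to be 0.5 when $X = 0$, and to be $n - 0.5$ when $X = n$. The $1 - 2\alpha$ prediction interval is the set of integer values of $Y$ satisfying

$$\frac{(mX - nY)^2}{mn\hat{p}_{xy}\hat{q}_{xy}(n + m)} < z^2_{1-\alpha}. \qquad (11)$$

Treating $Y$ as if it were continuous, we can find the roots of the quadratic equation $\frac{(mX - nY)^2}{mn\hat{p}_{xy}\hat{q}_{xy}(n + m)} = z^2_{1-\alpha}$ as

$$\frac{\hat{Y}\left(1 - \frac{z^2_{1-\alpha}}{m + n}\right) + \frac{mz^2_{1-\alpha}}{2n} \pm z_{1-\alpha}\sqrt{\hat{Y}(m - \hat{Y})\left(\frac{1}{m} + \frac{1}{n}\right) + \frac{m^2z^2_{1-\alpha}}{4n^2}}}{1 + \frac{mz^2_{1-\alpha}}{n(m + n)}} = (L, U), \text{ say}, \qquad (12)$$

where $\hat{Y} = mX/n$ for $X = 1, ..., n - 1$; it is $.5m/n$ when $X = 0$ and $(n - .5)m/n$ when $X = n$. The $1 - 2\alpha$ PI, based on the roots in (12), is given by $[\lceil L \rceil, \lfloor U \rfloor]$. We refer to this PI as the joint sampling prediction interval (JS-PI).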
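Because the JS-PI is available in closed form, it takes only a few lines to compute. The sketch below evaluates the two roots in (12) and rounds them inward; the function name `js_binomial_pi` is hypothetical and only the Python standard library is assumed.

```python
from math import ceil, floor, sqrt
from statistics import NormalDist


def js_binomial_pi(x, n, m, alpha):
    """Joint sampling 1 - 2*alpha PI for Y from the roots in (12)."""
    # Extreme cases: X = 0 is replaced by 0.5 and X = n by n - 0.5.
    x_adj = 0.5 if x == 0 else (n - 0.5 if x == n else x)
    z = NormalDist().inv_cdf(1.0 - alpha)
    z2 = z * z
    y_hat = m * x_adj / n
    center = y_hat * (1.0 - z2 / (m + n)) + m * z2 / (2.0 * n)
    half = z * sqrt(y_hat * (m - y_hat) * (1.0 / m + 1.0 / n)
                    + m * m * z2 / (4.0 * n * n))
    denom = 1.0 + m * z2 / (n * (m + n))
    return ceil((center - half) / denom), floor((center + half) / denom)


# 90% PI for the data of Section 4.1, and a 95% PI for (n, m) = (40, 20), X = 16
print(js_binomial_pi(20, 400, 100, 0.05))   # (2, 9)
print(js_binomial_pi(16, 40, 20, 0.025))    # (4, 13)
```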

2.2 Comparison Studies and Expected Widths of Binomial Prediction Intervals

The conditional PI is either included in or identical to the corresponding exact PI except for the extreme cases where the former PI is not defined (see Appendix A for a proof). In order to study the coverage probabilities and expected widths of the conditional PI, we take it to be the exact PI when $X = 0$ or $n$. Specifically, the conditional PI is determined by (4) and (5) when $X = 1, ..., n - 1$, and by (2) or (3) when $X = n$ or 0. Between our JS-PI and Wang's (2010) PI, the former is included in or identical to Wang's (2010) PI (see Appendix B for a proof). There are several cases where our JS-PI is strictly shorter than the Wang PI, as shown in the following table.

95% PIs
(n, m)         X      Wang's PI     JS-PI
(40, 20)       16     [3, 13]       [4, 13]
(40, 20)       33     [12, 20]      [12, 19]
(100, 50)      10     [1, 11]       [1, 10]
(300, 100)     18     [1, 12]       [2, 11]
(1000, 300)    278    [67, 101]     [67, 100]
(3000, 100)    212    [2, 12]       [3, 12]

Note that for all the cases considered in the above table, an endpoint of our JS-PI differs from the corresponding endpoint of Wang's PI by only one unit.

To compare PIs on the basis of coverage probabilities, we note that, for a given $(n, m, p, \alpha)$, the exact coverage probability of a PI $[L(x, n, m, \alpha), U(x, n, m, \alpha)]$ can be evaluated using the expression

$$\sum_{x=0}^{n}\sum_{y=0}^{m}\binom{n}{x}\binom{m}{y}p^{x+y}(1 - p)^{m+n-(x+y)}I_{[L(x,n,m,\alpha),\,U(x,n,m,\alpha)]}(y), \qquad (13)$$

where $I_A(\cdot)$ is the indicator function. For a good PI, its coverage probabilities should be close to the nominal level.
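Expression (13) is a finite double sum, so exact coverage can be evaluated without simulation. The sketch below does this for any rule that maps (x, n, m, alpha) to a pair of integer limits; the names `coverage` and `js_binomial_pi` are hypothetical, and the JS-PI is included only so that the block runs on its own.

```python
from math import ceil, comb, floor, sqrt
from statistics import NormalDist


def js_binomial_pi(x, n, m, alpha):
    """Joint sampling PI from (12), used here as an example PI rule."""
    x_adj = 0.5 if x == 0 else (n - 0.5 if x == n else x)
    z = NormalDist().inv_cdf(1.0 - alpha)
    z2 = z * z
    y_hat = m * x_adj / n
    center = y_hat * (1.0 - z2 / (m + n)) + m * z2 / (2.0 * n)
    half = z * sqrt(y_hat * (m - y_hat) * (1.0 / m + 1.0 / n)
                    + m * m * z2 / (4.0 * n * n))
    denom = 1.0 + m * z2 / (n * (m + n))
    return ceil((center - half) / denom), floor((center + half) / denom)


def coverage(pi_rule, n, m, p, alpha):
    """Exact coverage probability (13) of `pi_rule` at success probability p."""
    pmf_x = [comb(n, x) * p**x * (1 - p) ** (n - x) for x in range(n + 1)]
    pmf_y = [comb(m, y) * p**y * (1 - p) ** (m - y) for y in range(m + 1)]
    total = 0.0
    for x in range(n + 1):
        lo, hi = pi_rule(x, n, m, alpha)
        lo, hi = max(lo, 0), min(hi, m)     # Y only takes values 0, ..., m
        if hi >= lo:
            total += pmf_x[x] * sum(pmf_y[lo:hi + 1])
    return total


# Coverage of the 95% JS-PI for n = m = 15 on a small grid of p values
for p in (0.1, 0.3, 0.5):
    print(p, round(coverage(js_binomial_pi, 15, 15, p, 0.025), 4))
```

Passing a different rule, such as an exact or conditional PI function with the same signature, gives the corresponding coverage curve.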

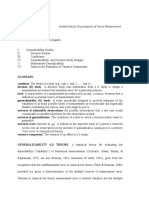

We evaluated the coverage probabilities of all five PIs using (13) for some sample sizes and confidence coefficient 0.95. In order to display the plots clearly, we plotted the coverage probabilities of Wang's (2010) PIs in (9) and those of the JS-PIs in (12) in Figure 1a. Even though we knew that the JS-PI is contained in Wang's PI, the coverage probabilities of these two PIs should be evaluated because both are approximate. It is clear from these plots that both PIs could be liberal, but their coverage probabilities are barely below the nominal level 0.95. As expected, for all sample size configurations considered, the coverage probabilities of Wang's PIs are greater than or equal to those of the JS-PIs. Thus, Wang's PIs are more conservative than the JS-PIs over some parts of the parameter space, especially when $p$ is away from 0.5. As a result, Wang's PIs are expected to be wider than the JS-PIs. In view of these comparison results, we will not include Wang's PIs in the further comparisons that follow.

Figure 1a. Coverage probabilities of 95% binomial PIs as a function of p (panels: n = m = 15; n = 20, m = 10; n = 50, m = 50; n = 50, m = 20; n = 100, m = 50; n = 100, m = 20; curves: Wang, JS)

The coverage probabilities of the PIs based on the exact, conditional, Nelson and joint sampling methods are plotted in Figure 1b. We first observe from these plots that all the PIs, except the Nelson PI, are conservative when $p$ is close to zero or one. The exact PIs are too conservative even for large samples; see the coverage plots for n = m = 50, n = 50, m = 20 and n = 100, m = 20. As expected, the conditional PIs are less conservative than the exact PIs for all the cases considered. Furthermore, the coverage probabilities of the conditional PIs seldom fall below the nominal level 0.95; see the plots for n = m = 15 and n = m = 50. Nelson's PIs are in general liberal, especially when the values of the parameter are at the boundary. Overall, the exact PIs are too conservative, and Nelson's PIs are liberal even for large samples. The conditional PI and the new one based on the joint sampling approach (JS-PI) are comparable in some situations (see the plots for n = m = 15 and n = m = 50), and the latter exhibits better performance than the former in other cases. In particular, in the most common case where the past and current samples are larger than the future sample, the new PIs are better than the corresponding conditional PIs (see the plots for the cases n = 50, m = 20 and n = 100, m = 20).

As the conditional PI and the new PI are the ones that control the coverage probabilities satisfactorily, we compare only these PIs with respect to expected widths. We evaluate the expected widths of these PIs for the sample size configurations for which coverage probabilities were computed earlier. The plots of the expected widths are given in Figure 2. These plots indicate that the expected widths of the new PIs are comparable to or smaller than those of the conditional PIs for all the cases considered. In general, the new prediction interval is preferable to the conditional PI with respect to coverage properties and expected widths.

3. Poisson Distribution

Let $X$ be the total counts in a sample of size $n$ from a Poisson distribution with mean $\lambda$. Note that $X \sim \text{Poisson}(n\lambda)$. Let $Y$ denote the future total counts that can be observed in a sample of size $m$ from the same Poisson distribution so that $Y \sim \text{Poisson}(m\lambda)$. Note that the conditional distribution of $X$ given $X + Y = s$ is binomial with number of trials $s$ and success probability $n/(n + m)$, that is, binomial$(s, n/(m + n))$, and the conditional distribution of $Y$ given the sum $X + Y$ is binomial$(s, m/(m + n))$. Let us denote the cumulative distribution function of a binomial random variable with the number of trials $N$ and success probability $\pi$ by $B(x; N, \pi)$.

3.1 The Exact Prediction Interval

As noted earlier, the exact PI is based on the conditional distribution of $X$ given $X + Y$. Let $x$ be an observed value of $X$. The smallest integer $L$ that satisfies

$$1 - B(x - 1; x + L, n/(n + m)) > \alpha \qquad (14)$$

is the $1 - \alpha$ lower prediction limit for $Y$. The $1 - \alpha$ upper prediction limit $U$ is the largest integer for which

$$B(x; x + U, n/(n + m)) > \alpha. \qquad (15)$$

For $X = 0$, the lower prediction limit is defined to be zero, and the upper prediction limit is determined by (15).
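The searches implied by (14) and (15) can be sketched as two simple loops. The names `binom_cdf` and `poisson_exact_pi` are hypothetical, and the binomial cdf is summed directly with the Python standard library; this is an illustration under those assumptions rather than the authors' implementation.

```python
from math import comb


def binom_cdf(t, trials, prob):
    """B(t; trials, prob): P(V <= t) for V ~ binomial(trials, prob)."""
    if t < 0:
        return 0.0
    return sum(comb(trials, i) * prob**i * (1.0 - prob) ** (trials - i)
               for i in range(min(t, trials) + 1))


def poisson_exact_pi(x, n, m, alpha):
    """Two-sided 1 - 2*alpha exact PI [L, U] for Y from (14) and (15)."""
    prob = n / (n + m)
    # (14): smallest L with 1 - B(x-1; x+L, n/(n+m)) > alpha
    lower = 0
    while 1.0 - binom_cdf(x - 1, x + lower, prob) <= alpha:
        lower += 1
    # (15): largest U with B(x; x+U, n/(n+m)) > alpha; the probability
    # decreases in U, so step forward until the next value fails.
    upper = 0
    while binom_cdf(x, x + upper + 1, prob) > alpha:
        upper += 1
    return lower, upper


# Data of the Poisson example in Section 4.2 (n = 5, x = 24, m = 1, 90% PI)
print(poisson_exact_pi(24, 5, 1, 0.05))  # (1, 9)
```

For x = 0 the first loop stops immediately at L = 0, which matches the convention stated above.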

Figure 1b. Coverage probabilities of 95% binomial PIs as a function of p (panels: n = m = 15; n = 20, m = 10; n = m = 50; n = 50, m = 20; n = 100, m = 50; n = 100, m = 20; curves: exact, cond., Nelson, JS)

Figure 2. Expected widths of 95% binomial prediction intervals as a function of p (panels: n = m = 15; n = 20, m = 10; n = m = 50; n = 50, m = 20; n = 100, m = 50; n = 100, m = 20; curves: cond., JS)

3.2 The Conditional Prediction Interval

The conditional approach is based on the conditional distribution of $Y$ given the sum $X + Y$. The $1 - \alpha$ lower prediction limit is the largest integer $L$ for which

$$B(L - 1; x + L, m/(m + n)) \le \alpha, \qquad (16)$$

where $x$ is an observed value of $X$. The $1 - \alpha$ upper prediction limit $U$ is the smallest integer for which

$$B(U; x + U, m/(m + n)) \ge 1 - \alpha. \qquad (17)$$
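A minimal sketch of the searches in (16) and (17) follows. The names `binom_cdf` and `poisson_conditional_pi` are hypothetical, and the function rejects x = 0 as an assumption of this sketch, since the conditional PI is taken to be undefined there.

```python
from math import comb


def binom_cdf(t, trials, prob):
    """B(t; trials, prob): P(V <= t) for V ~ binomial(trials, prob)."""
    if t < 0:
        return 0.0
    return sum(comb(trials, i) * prob**i * (1.0 - prob) ** (trials - i)
               for i in range(min(t, trials) + 1))


def poisson_conditional_pi(x, n, m, alpha):
    """Two-sided 1 - 2*alpha conditional PI [L, U] for Y from (16) and (17)."""
    if x <= 0:
        raise ValueError("the conditional PI is not defined for X = 0")
    prob = m / (m + n)
    # (16): largest L with B(L-1; x+L, m/(m+n)) <= alpha.
    # L = 0 always qualifies; move up while the next candidate still qualifies.
    lower = 0
    while binom_cdf(lower, x + lower + 1, prob) <= alpha:
        lower += 1
    # (17): smallest U with B(U; x+U, m/(m+n)) >= 1 - alpha
    upper = 0
    while binom_cdf(upper, x + upper, prob) < 1.0 - alpha:
        upper += 1
    return lower, upper


# Data of the Poisson example in Section 4.2
print(poisson_conditional_pi(24, 5, 1, 0.05))  # (1, 9)
```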

3.3 The Nelson Prediction Interval

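Let $\hat{\lambda} = X/n$, and $\widehat{\mathrm{var}}(m\hat{\lambda} - Y) = m^2\hat{\lambda}(1/n + 1/m)$. Nelson's approximate PI is based on the asymptotic result that $(m\hat{\lambda} - Y)/\sqrt{\widehat{\mathrm{var}}(m\hat{\lambda} - Y)} \sim N(0, 1)$, and is given by

$$[\lceil L \rceil, \lfloor U \rfloor], \text{ with } [L, U] = \hat{Y} \pm z_{1-\alpha}\sqrt{m\hat{Y}\left(\frac{1}{m} + \frac{1}{n}\right)}, \qquad (18)$$

where $\hat{Y} = m\frac{X}{n}$ for $X = 1, 2, ...$, and is $\frac{.5m}{n}$ when $X = 0$.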

3.4 The Prediction Interval Based on the Joint Sampling Approach

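Let $\hat{\lambda}_{xy} = \frac{X + Y}{m + n}$. As in the binomial case, using the variance estimate $\widehat{\mathrm{var}}(m\hat{\lambda}_{xy} - Y) = \frac{mn\hat{\lambda}_{xy}}{m + n}$, we consider the quantity

$$\frac{m\hat{\lambda}_{xy} - Y}{\sqrt{\widehat{\mathrm{var}}(m\hat{\lambda}_{xy} - Y)}} = \frac{mX - nY}{\sqrt{mn(X + Y)}},$$

whose asymptotic joint distribution is the standard normal. In order to handle the zero count, we take $X$ to be 0.5 when it is zero. The $1 - 2\alpha$ PI is determined by the roots (with respect to $Y$) of the quadratic equation $\frac{(m\hat{\lambda} - Y)^2}{\hat{\lambda}_{xy}m(1 + m/n)} = z^2_{1-\alpha}$. Based on these roots, the $1 - 2\alpha$ PI is given by

$$[\lceil L \rceil, \lfloor U \rfloor], \text{ with } [L, U] = \hat{Y} + \frac{mz^2_{1-\alpha}}{2n} \pm z_{1-\alpha}\sqrt{m\hat{Y}\left(\frac{1}{m} + \frac{1}{n}\right) + \frac{m^2z^2_{1-\alpha}}{4n^2}}, \qquad (19)$$

where $\hat{Y} = m\frac{X}{n}$ for $X = 1, 2, ...$, and is $\frac{.5m}{n}$ for $X = 0$.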
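Both closed-form Poisson intervals, Nelson's in (18) and the joint sampling one in (19), can be computed side by side. In this sketch the function names are hypothetical, and the term under each square root is written as m * Y_hat * (1/m + 1/n), which is the form implied by the variance estimate m^2 * lambda_hat * (1/n + 1/m).

```python
from math import ceil, floor, sqrt
from statistics import NormalDist


def _y_hat(x, n, m):
    """Predicted count m*X/n, with X = 0 replaced by 0.5 as in the text."""
    return m * (x if x > 0 else 0.5) / n


def poisson_nelson_pi(x, n, m, alpha):
    """Nelson's 1 - 2*alpha Poisson PI from (18)."""
    z = NormalDist().inv_cdf(1.0 - alpha)
    y_hat = _y_hat(x, n, m)
    half = z * sqrt(m * y_hat * (1.0 / m + 1.0 / n))
    return ceil(y_hat - half), floor(y_hat + half)


def poisson_js_pi(x, n, m, alpha):
    """Joint sampling 1 - 2*alpha Poisson PI from (19)."""
    z = NormalDist().inv_cdf(1.0 - alpha)
    y_hat = _y_hat(x, n, m)
    center = y_hat + m * z * z / (2.0 * n)
    half = z * sqrt(m * y_hat * (1.0 / m + 1.0 / n) + (m * z / (2.0 * n)) ** 2)
    return ceil(center - half), floor(center + half)


# Data of the Poisson example in Section 4.2 (n = 5, x = 24, m = 1, 90% PIs)
print(poisson_nelson_pi(24, 5, 1, 0.05))  # (1, 8)
print(poisson_js_pi(24, 5, 1, 0.05))      # (2, 9)
```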

3.5 Coverage Probabilities and Expected Widths of Poisson Prediction Intervals

As in the binomial case, it can be shown that the conditional PIs are either included in or identical to the corresponding exact PIs except for the case of $X = 0$, where the former PIs are not defined. This can be proved along the lines for the binomial case given in Appendix A, and so the proof is omitted. In order to study the unconditional coverage probabilities and expected widths of the conditional PIs, we take them to be the exact PIs when $X = 0$.

For a given $(n, m, \lambda, \alpha)$, the exact coverage probability of a Poisson PI $[L(x, n, m, \alpha), U(x, n, m, \alpha)]$ can be evaluated using the expression

$$\sum_{x=0}^{\infty}\sum_{y=0}^{\infty}\frac{e^{-\lambda(n+m)}(n\lambda)^x(m\lambda)^y}{x!\,y!}I_{[L(x,n,m,\alpha),\,U(x,n,m,\alpha)]}(y).$$
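In practice the infinite double sum has to be truncated. The sketch below cuts both sums at the mean plus ten standard deviations plus a fixed margin, which is an assumption of this illustration and not a rule from the paper; the names `poisson_coverage`, `poisson_pmf` and `poisson_js_pi` are likewise hypothetical, and the JS-PI is included only to make the block self-contained.

```python
from math import ceil, exp, floor, sqrt
from statistics import NormalDist


def poisson_js_pi(x, n, m, alpha):
    """Joint sampling Poisson PI from (19), used here as an example PI rule."""
    z = NormalDist().inv_cdf(1.0 - alpha)
    y_hat = m * (x if x > 0 else 0.5) / n
    center = y_hat + m * z * z / (2.0 * n)
    half = z * sqrt(m * y_hat * (1.0 / m + 1.0 / n) + (m * z / (2.0 * n)) ** 2)
    return ceil(center - half), floor(center + half)


def poisson_pmf(mean, upto):
    """List of Poisson(mean) probabilities for 0, 1, ..., upto."""
    probs = [exp(-mean)]
    for k in range(1, upto + 1):
        probs.append(probs[-1] * mean / k)
    return probs


def poisson_coverage(pi_rule, n, m, lam, alpha):
    """Coverage probability of `pi_rule` at mean lam, with truncated sums."""
    # Truncation points: an assumption of this sketch, far into both tails.
    x_max = int(n * lam + 10.0 * sqrt(n * lam) + 30)
    y_max = int(m * lam + 10.0 * sqrt(m * lam) + 30)
    pmf_x = poisson_pmf(n * lam, x_max)
    pmf_y = poisson_pmf(m * lam, y_max)
    total = 0.0
    for x in range(x_max + 1):
        lo, hi = pi_rule(x, n, m, alpha)
        lo, hi = max(lo, 0), min(hi, y_max)
        if hi >= lo:
            total += pmf_x[x] * sum(pmf_y[lo:hi + 1])
    return total


# Coverage of the 95% JS-PI for n = m = 4 at a few values of the mean
for lam in (1.0, 3.0, 6.0):
    print(lam, round(poisson_coverage(poisson_js_pi, 4, 4, lam, 0.025), 4))
```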

The coverage probabilities of all PIs, except the exact one, are plotted in Figure 3 for some values of $(n, m)$. The exact PI is not included in the plots because it exhibited similar performance (excessively conservative) as in the binomial case, and it is inferior to the conditional PI in terms of coverage probabilities and expected widths. Regarding the other PIs, we see from the six plots in Figure 3 that Nelson's PIs are often liberal. The conditional PI and the new JS-PI behave similarly when $n = m$, and the former is more conservative than the latter when $n > m$ (see the plots for (n = 5, m = 2) and (n = 10, m = 1)). Both PIs could be occasionally liberal, especially when $\lambda$ is very small.

As the conditional PI and the new one based on the joint sampling approach are the ones that satisfactorily control the coverage probabilities, we further compare them with respect to expected widths. The plots of expected widths are given in Figure 4. These plots clearly indicate that the expected widths of these two PIs are not appreciably different in the situations where they have similar coverage probabilities. On the other hand, for the cases where the conditional PIs are conservative, the new PIs have shorter expected widths; see the plots of (n = 5, m = 2) and (n = 10, m = 1).

We also evaluated coverage probabilities and expected widths of PIs with confidence coefficients 0.90 and 0.99. The results of comparison are similar to those of 95% PIs, and so they are not reported here. On an overall basis, the new PI is preferable to other PIs in terms of coverage probabilities and precision.

Figure 3. Coverage probabilities of 95% prediction intervals for Poisson distributions as a function of λ (panels: n = m = 1; n = m = 4; n = 5, m = 2; n = 2, m = 5; n = 10, m = 1; n = 1, m = 10; curves: Nelson-PI, cond. PI, JS-PI)

Figure 4. Expected widths of 95% Poisson prediction intervals as a function of λ (panels: n = m = 1; n = m = 4; n = 5, m = 2; n = 10, m = 1; curves: cond. PI, JS-PI)

4. Examples

4.1 An Example for Binomial Prediction Intervals

This example is adapted from Bain and Patel (1993). Suppose that, for a random sample of $n = 400$ devices tested, $x = 20$ devices are unacceptable. A 90% prediction interval is desired for the number of unacceptable devices in a future sample of $m = 100$ such devices. The sample proportion of unacceptable devices is $\hat{p} = 20/400 = .05$ and $\hat{Y} = m\hat{p} = 5$. The normal critical value required to compute Nelson's PI and the new PI is $z_{.95} = 1.645$. Nelson's PI given in (7) is [1, 9]. The new JS-PI in (12) and Wang's PI in (9) are [2, 9]. To compute the conditional PI, we can use the new prediction limits just obtained as initial guess values. In particular, using $L = 2$ as the initial guess value, we compute the probability in (4) as $H(L - 1 = 1; x + L = 22, 100, 500) = 0.0446$ at $L = 2$, and $H(L - 1 = 2; x + L = 23, 100, 500) = 0.1272 > .05$ at $L = 3$. So the lower endpoint is 2. Similarly, we can find the upper endpoint as 9. Thus, the conditional PI is also [2, 9]. To compute the exact PI, we note that the probability in (2) at $L = 2$ is $1 - H(19; 22, 400, 500) = .1485$, and at $L = 1$ is $1 - H(19; 21, 400, 500) = .0540$. Thus, the lower endpoint of the exact PI is 1. The upper endpoint can be similarly obtained as 10. Thus, the exact PI is [1, 10], which is the widest among all PIs.

4.2 An Example for Poisson Prediction Intervals

We shall use the example given in Hahn and Chandra (1981) to illustrate the construction of PIs for a Poisson distribution. The number of unscheduled shutdowns per year in a large population of systems follows a Poisson distribution with mean $\lambda$. Suppose there were 24 shutdowns over a period of 5 years, and we would like to find 90% PIs for the number of unscheduled shutdowns over the next year. Here $n = 5$, $x = 24$ and $m = 1$. The maximum likelihood estimate of $\lambda$ is given by $\hat{\lambda} = \frac{24}{5} = 4.8$. Nelson's PI in (18) is computed as $[\lceil 4.8 - 3.95 \rceil, \lfloor 4.8 + 3.95 \rfloor] = [1, 8]$. The new PI based on the joint sampling approach given in (19) is [2, 9]. To compute the conditional PI, we note that $m/(m + n) = .1667$ and

$$B(8; 24 + 8, .1667) = 0.9271 \text{ and } B(9; 24 + 9, .1667) = 0.9621.$$

So 9 is the upper endpoint of the 90% conditional PI. Furthermore, $B(a - 1; 24 + a, .1667) = 0.0541$ at $a = 2$ and $B(a - 1; 24 + a, .1667) = 0.0105$ at $a = 1$, and so the lower endpoint of the 90% PI is 1. Thus, the conditional PI is [1, 9]. To find the lower endpoint of the exact PI using (14), we note that $n/(n + m) = 5/6 = .8333$, and $1 - B(23; 24 + 1, .8333) = .0628$. It can be verified that $L = 1$ is the smallest integer for which $1 - B(23; 24 + L, .8333) > .05$. Similarly, using (15), we can find the upper endpoint of the 90% exact PI as 9. Thus, the exact PI and the conditional PI are the same, [1, 9], whereas the new PI is [2, 9].

5. Concluding Remarks

It is now well known that the classical exact methods for discrete distributions are often too conservative, producing confidence intervals that are unnecessarily wide or tests that are less powerful. Examples include the Clopper-Pearson (1934) confidence interval for a binomial proportion, Fisher's exact test for comparing two proportions, Przyborowski and Wilenski's (1940) conditional test for the ratio of two Poisson means, and Thomas and Gart's (1977) method for estimating the odds ratio involving two binomial distributions; see Agresti and Coull (1998), Agresti (1999) and Brown, Cai and Das Gupta (2001). Many authors have recommended alternative approximate approaches for constructing confidence intervals with satisfactory coverage probabilities and good precision. In this article, we have established similar results for constructing PIs for a binomial or Poisson distribution. Furthermore, we showed that the conditional PIs are narrower than the exact PIs, and so the former PIs have shorter expected width. The joint sampling approach produces PIs for both binomial and Poisson distributions with good coverage properties and precision. In terms of simplicity and accuracy, the new PIs are preferable to the others, and can be recommended for applications.

Acknowledgements The authors are grateful to a reviewer for providing useful comments and suggestions.

References

Aitchison, J., Dunsmore, I. R. 1980. Statistical Prediction Analysis. Cambridge University Press, Cambridge, UK.

Agresti, A. 1999. On logit confidence intervals for the odds ratio with small samples. Biometrics 55, 597-602.

Agresti, A., Coull, B. A. 1998. Approximate is better than exact for interval estimation of binomial proportion. The American Statistician 52, 119-125.

Bain, L. J., Patel, J. K. 1993. Prediction intervals based on partial observations for some discrete distributions. IEEE Transactions on Reliability 42, 459-463.

Brown, P. J. 1982. Multivariate calibration. Journal of the Royal Statistical Society, Ser. B 44, 287-321.

Brown, L. D., Cai, T., Das Gupta, A. 2001. Interval estimation for a binomial proportion (with discussion). Statistical Science 16, 101-133.

Clopper, C. J., Pearson, E. S. 1934. The use of confidence or fiducial limits illustrated in the case of the binomial. Biometrika 26, 404-413.

Davis, C. B., McNichols, R. J. 1987. One-sided intervals for at least p of m observations from a normal population on each of r future occasions. Technometrics 29, 359-370.

Dunsmore, I. R. 1976. A note on Faulkenberry's method of obtaining prediction intervals. Journal of the American Statistical Association 71, 193-194.

Faulkenberry, G. D. 1973. A method of obtaining prediction intervals. Journal of the American Statistical Association 68, 433-435.

Gibbons, R. D. 1987. Statistical prediction intervals for the evaluation of ground-water quality. Ground Water 25, 455-465.

Hahn, G. J., Meeker, W. Q. 1991. Statistical Intervals: A Guide for Practitioners. Wiley, New York.

Hahn, G. J., Chandra, R. 1981. Tolerance intervals for Poisson and binomial variables. Journal of Quality Technology 13, 100-110.

Knüsel, L. 1994. The prediction problem as the dual form of the two-sample problem with applications to the Poisson and the binomial distribution. The American Statistician 48, 214-219.

Nelson, W. 1982. Applied Life Data Analysis. Wiley, New York.

Przyborowski, J., Wilenski, H. 1940. Homogeneity of results in testing samples from Poisson series. Biometrika 31, 313-323.

Thatcher, A. R. 1964. Relationships between Bayesian and confidence limits for prediction. Journal of the Royal Statistical Society, Ser. B 26, 176-192.

Thomas, D. G., Gart, J. J. 1977. A table of exact confidence limits for differences and ratios of two proportions and their odds ratios. Journal of the American Statistical Association 72, 73-76.

Wang, H. 2008. Coverage probability of prediction intervals for discrete random variables. Computational Statistics and Data Analysis 53, 17-26.

Wang, H. 2010. Closed form prediction intervals applied for disease counts. The American Statistician 64, 250-256.

Appendix A

We shall now show that, for the binomial case, the conditional PI is always included in or equal to the exact PI. Let $L_c$ and $L_e$ be the lower endpoints of the $1 - 2\alpha$ conditional PI and exact PI, respectively. To show that $L_c$ is always greater than or equal to $L_e$, we recall that $L_c$ is the largest integer so that $P(Y \leq L_c - 1 \mid x + L_c, m, n + m) \leq \alpha$. This is the probability of observing $L_c - 1$ or fewer defects from a sample of size $x + L_c$ from a lot of size $n + m$ that contains $m$ defects. This is the same as the probability of observing at least $x + 1$ non-defects in a sample of size $x + L_c$ from a lot of size $n + m$ that contains $n$ non-defects. That is, $P(Y \leq L_c - 1 \mid x + L_c, m, n + m) = P(X \geq x + 1 \mid x + L_c, n, n + m) \leq \alpha$. Note that $x$ must be the smallest integer for which the above inequality holds. Therefore,

$$P(X \geq x + 1 \mid x + L_c, n, n + m) \leq \alpha \quad \text{and} \quad P(X \geq x \mid x + L_c, n, n + m) > \alpha.$$

Thus, $L_c$ satisfies the condition in (2), and so it is greater than or equal to $L_e$. To show that $L_c > L_e$ for some sample sizes, recall that $L_e$ is the smallest integer for which $P(X \geq x \mid x + L_e, n, n + m) > \alpha$. Arguing as before, it is easy to see that $P(X \geq x \mid x + L_e, n, n + m) = P(Y \leq L_e \mid x + L_e, m, n + m) > \alpha$. Since $L_e$ is the smallest integer for which this inequality holds, $P(Y \leq L_e - 1 \mid x + L_e - 1, m, n + m) \leq \alpha$. As noted in Thatcher (1964), the hypergeometric cdf is a decreasing function of the sample size while other quantities are fixed, and so

$$P(Y \leq L_e - 1 \mid x + L_e, m, n + m) < \alpha.$$

However, $L_e$ is not necessarily the largest integer satisfying the above inequality, and so it could be smaller than $L_c$. In fact, there are many situations where $L_c > L_e$. For example, when $n = 40$, $m = 32$ and $x = 22$, the 95% exact and conditional PIs are [10, 25] and [11, 24], respectively; when $(n, m, x) = (36, 22, 18)$, they are [5, 17] and [6, 16], respectively.
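The defect/non-defect identity and the ordering of the two lower endpoints can be checked numerically. The sketch below is illustrative only and uses hypothetical helper names (`hyper_cdf`, `lower_conditional`, `lower_exact`). It assumes the endpoint rules exactly as stated in this appendix: $L_c$ is the largest $L$ with $P(Y \leq L - 1 \mid x + L, m, n + m) \leq \alpha$, and $L_e$ is the smallest $L$ with $P(X \geq x \mid x + L, n, n + m) > \alpha$. It uses $\alpha = .025$ for the 95% intervals, and its printed endpoints can be compared with the lower endpoints reported in the two examples.

```python
from math import comb


def hyper_cdf(k, sample, marked, lot):
    """P(K <= k), where K counts marked items in a sample of size `sample`
    drawn without replacement from `lot` items, `marked` of which are marked."""
    total = comb(lot, sample)
    low = max(0, sample - (lot - marked))   # smallest attainable count
    high = min(k, sample, marked)           # largest count included in the cdf
    hits = sum(comb(marked, j) * comb(lot - marked, sample - j)
               for j in range(low, high + 1))
    return hits / total


def lower_conditional(n, m, x, alpha):
    """Largest L with P(Y <= L - 1 | x + L, m, n + m) <= alpha."""
    return max(L for L in range(m + 1)
               if hyper_cdf(L - 1, x + L, m, n + m) <= alpha)


def lower_exact(n, m, x, alpha):
    """Smallest L with P(X >= x | x + L, n, n + m) > alpha."""
    return min(L for L in range(m + 1)
               if 1 - hyper_cdf(x - 1, x + L, n, n + m) > alpha)


alpha = 0.025  # each tail of a 95% PI
for n, m, x in [(40, 32, 22), (36, 22, 18)]:
    N = n + m
    for L in range(m + 1):
        lhs = hyper_cdf(L - 1, x + L, m, N)   # P(Y <= L - 1 | x + L, m, N)
        rhs = 1 - hyper_cdf(x, x + L, n, N)   # P(X >= x + 1 | x + L, n, N)
        assert abs(lhs - rhs) < 1e-9          # the two descriptions agree
    print((n, m, x), lower_exact(n, m, x, alpha), lower_conditional(n, m, x, alpha))
```

The counts are accumulated as exact integers and divided once, so the identity check is limited only by the final floating-point division.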

We shall now show that the upper endpoint $U_c$ of a $1 - 2\alpha$ conditional PI is included in the corresponding exact PI. Recall that $U_c$ is the smallest integer for which

$$P(Y \leq U_c \mid x + U_c, m, n + m) \geq 1 - \alpha. \tag{20}$$

As argued earlier, the above probability is the same as the probability of observing at least $x$ non-defects in a sample of size $x + U_c$ when the number of non-defects is $n$. That is, $P(Y \leq U_c \mid x + U_c, m, n + m) = P(X \geq x \mid x + U_c, n, n + m)$. Because $U_c$ is the smallest integer satisfying (20), $x$ must be the largest integer satisfying $P(X \geq x \mid x + U_c, n, n + m) \geq 1 - \alpha$. Thus, if $U_c$ satisfies (20), then

$$P(X \geq x \mid x + U_c, n, n + m) \geq 1 - \alpha \iff P(X \leq x - 1 \mid x + U_c, n, n + m) \leq \alpha, \tag{21}$$

which, because $x$ is the largest such integer, implies that $P(X \leq x \mid x + U_c, n, n + m) > \alpha$. In other words, $U_c$ satisfies (3), and so it is included in the exact PI. Furthermore, using an argument similar to the one given for the lower endpoint, it can be shown that there are situations where $U_c$ could be strictly less than the upper endpoint of the corresponding exact PI.
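The conclusion of this argument can be spot-checked on the two examples quoted earlier in this appendix. The sketch below is an illustration under stated assumptions, with hypothetical helper names (`hyper_cdf`, `upper_conditional`). It takes $U_c$ as the smallest $U$ satisfying (20) with $\alpha = .025$, then confirms that $P(X \leq x \mid x + U_c, n, n + m) > \alpha$, which is the property this appendix says places $U_c$ inside the exact PI.

```python
from math import comb


def hyper_cdf(k, sample, marked, lot):
    """P(K <= k) for the number K of marked items in a sample of size
    `sample` drawn without replacement from `lot` items (`marked` marked)."""
    low = max(0, sample - (lot - marked))
    high = min(k, sample, marked)
    hits = sum(comb(marked, j) * comb(lot - marked, sample - j)
               for j in range(low, high + 1))
    return hits / comb(lot, sample)


def upper_conditional(n, m, x, alpha):
    """Smallest U with P(Y <= U | x + U, m, n + m) >= 1 - alpha, as in (20)."""
    return min(U for U in range(m + 1)
               if hyper_cdf(U, x + U, m, n + m) >= 1 - alpha)


alpha = 0.025  # each tail of a 95% PI
for n, m, x in [(40, 32, 22), (36, 22, 18)]:
    N = n + m
    u_c = upper_conditional(n, m, x, alpha)
    # Same event described through non-defects: P(X >= x | x + U_c, n, N).
    via_non_defects = 1 - hyper_cdf(x - 1, x + u_c, n, N)
    assert abs(hyper_cdf(u_c, x + u_c, m, N) - via_non_defects) < 1e-9
    # Property derived in the text: P(X <= x | x + U_c, n, N) > alpha.
    print((n, m, x), u_c, hyper_cdf(x, x + u_c, n, N) > alpha)
```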

Appendix B

We here show that the JS-PI in (12) is included in or identical to Wang's PI determined by (8). For a given $(n, m, X)$, let $Y_0$ be a value that is in the JS-PI. To show that $Y_0$ is included in Wang's PI, it is enough to show that if $Y_0$ satisfies (12) then it also satisfies $|W(n, m, X, Y_0)| < z_{1-\alpha}$, where $W(n, m, X, Y)$ is defined in (8). Equivalently, we need to show that

$$\frac{X + Y_0}{m + n}\left(1 - \frac{X + Y_0}{m + n}\right) \leq \frac{X + Y_0 + c/2}{m + n + c}\left(1 - \frac{X + Y_0 + c/2}{m + n + c}\right),$$

where $c = z_{1-\alpha}^2$. Let $d = X + Y_0$ and $N = m + n$. It is easy to check that the above inequality holds if and only if

$$d^2 - dN + \frac{N^2}{4} \geq 0.$$

The above quadratic function of $d$ attains its minimum at $d = N/2$, and the minimum value is zero. Thus, the JS-PI is included in or identical to Wang's PI.
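The equivalence used in this argument can be verified in a few lines. The derivation below is a sketch added for illustration; the shorthand $\hat{p} = d/N$ and $\tilde{p} = (d + c/2)/(N + c)$ is introduced here and is not notation from the original argument.

```latex
\begin{align*}
p(1 - p) &= \tfrac{1}{4} - \left(p - \tfrac{1}{2}\right)^2
  \quad \text{for any } p, \\
\hat{p} - \tfrac{1}{2} &= \frac{d - N/2}{N}, \qquad
\tilde{p} - \tfrac{1}{2} = \frac{d + c/2}{N + c} - \frac{1}{2}
  = \frac{d - N/2}{N + c}, \\
\tilde{p}(1 - \tilde{p}) - \hat{p}(1 - \hat{p})
  &= \left(\hat{p} - \tfrac{1}{2}\right)^2 - \left(\tilde{p} - \tfrac{1}{2}\right)^2
  = \left(d - \tfrac{N}{2}\right)^2
    \left[\frac{1}{N^2} - \frac{1}{(N + c)^2}\right].
\end{align*}
```

Because $c > 0$, the bracketed factor is positive, so the required inequality holds exactly when $(d - N/2)^2 = d^2 - dN + N^2/4 \geq 0$, which is always true.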
