Isom CH 14

Uploaded by totally_fatal7848_56


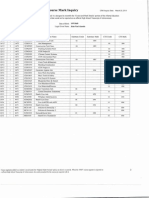

Multiple Regression Analysis

General descriptive form of a multiple linear regression equation:

$$\hat{Y} = a + b_1X_1 + b_2X_2 + \cdots + b_kX_k$$

where k is the number of independent variables (k can be any positive integer), and:

- a is the intercept, the value of $\hat{Y}$ when all the Xs are zero.
- $b_j$ is the amount by which $\hat{Y}$ changes when that particular $X_j$ increases by one unit, with the values of all other independent variables held constant.
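A minimal sketch of evaluating the equation and checking the interpretation of $b_j$. The function name `predict` and the coefficient values are hypothetical, chosen only for illustration; a negative coefficient is included to show an inverse relationship.

```python
def predict(a, b, x):
    """Return Y-hat = a + b1*X1 + ... + bk*Xk for one observation.

    a: intercept; b: list of k regression coefficients; x: list of k X values.
    """
    if len(b) != len(x):
        raise ValueError("need one X value per regression coefficient")
    return a + sum(bj * xj for bj, xj in zip(b, x))


# Hypothetical coefficients: b1 = 2.5 (direct), b2 = -1.2 (inverse relationship).
a, b = 10.0, [2.5, -1.2]
base = predict(a, b, [4.0, 3.0])
# Raise X1 by one unit while holding X2 constant.
bumped = predict(a, b, [5.0, 3.0])
print(bumped - base)  # close to 2.5 (that is, b1), up to floating-point rounding
```

Raising $X_2$ by one unit instead would lower $\hat{Y}$ by 1.2, because $b_2$ is negative.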

When there are two independent variables, the regression equation is:

$$\hat{Y} = a + b_1X_1 + b_2X_2$$

If a multiple regression analysis contains more than two independent variables, we cannot use a graph to illustrate it, since graphs are limited to three dimensions.

- a is where the regression equation (a plane, in the two-variable case) intersects the Y-axis; it is called the regression intercept, or constant.
- $b_1$ is the first regression coefficient: for each one-unit increase in $X_1$, $\hat{Y}$ changes by $b_1$. Pay attention to negative signs when substituting values for the variables.
- $\hat{Y}$ is used to estimate the value of Y.
- To use the regression equation, you need to know the values of the regression coefficients, $b_j$.
- If a regression coefficient is negative, the relationship is negative (inverse).

Evaluating a Multiple Regression Analysis

The ANOVA Table

The statistical analysis of a multiple regression equation is summarized in an ANOVA table. The total variation of the dependent variable, Y, is divided into two components:

- Regression: the variation of Y explained by all the independent variables.
- Error or residual: the unexplained variation of Y, that is, the differences between the actual and estimated values.

These two sources are identified in the first column of the ANOVA table.

- Total degrees of freedom are $n - 1$.
- Regression degrees of freedom equal the number of independent variables in the multiple regression equation: regression df = k.
- Degrees of freedom associated with the error term equal the total df minus the regression df.

In multiple regression, the error degrees of freedom are $n - (k + 1)$.

- Sum of squares (SS) is the amount of variation attributed to each source:
  - SS total = total sum of squares
  - SSR = regression sum of squares
  - SSE = residual, or error, sum of squares
- MS is the mean square for regression and for residual, calculated as SS/df.

A smaller multiple standard error of estimate, $s_{Y \cdot 12 \cdots k} = \sqrt{SSE/[n - (k + 1)]}$, indicates a better, or more effective, prediction equation.

Coefficient of Multiple Determination

The coefficient of multiple determination, $R^2$, is the percent of the variation in the dependent variable, Y, explained by the variation in the set of independent variables, $X_1, X_2, \ldots, X_k$.

- It can range from 0 to 1.
- It cannot assume negative values.
- $R^2 = \dfrac{SSR}{SS\ total}$

Adjusted Coefficient of Determination

The coefficient of determination tends to increase as more independent variables are added to a multiple regression model. Each new independent variable makes SSE smaller (or at least no larger) and SSR correspondingly larger, so $R^2$ can increase simply because the number of independent variables increased. If k is as large as $n - 1$ (so the equation has as many coefficients as there are observations), the coefficient of determination is 1.0. The adjusted coefficient of determination corrects for this by dividing SSE and the total sum of squares by their respective degrees of freedom:

$$R^2_{adj} = 1 - \frac{SSE/[n - (k + 1)]}{SS\ total/(n - 1)}$$

Inferences in Multiple Linear Regression

When you construct confidence intervals or perform hypothesis tests, you are viewing the data as a random sample from a population. In multiple regression, we assume there is an unknown population regression equation that relates the dependent variable to the k independent variables. This is called a model of the relationship:

$$\hat{Y} = \alpha + \beta_1X_1 + \beta_2X_2 + \cdots + \beta_kX_k$$

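The Greek letters are population parameters, while a and $b_j$ are sample statistics. For example, the sample regression coefficient $b_2$ is a point estimate of $\beta_2$. The sampling distributions of these point estimates follow the normal probability distribution, and the means of the sampling distributions are equal to the parameter values to be estimated.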
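The ANOVA quantities, multiple standard error, $R^2$ and adjusted $R^2$ defined earlier can all be computed from a least-squares fit. Below is a minimal sketch using NumPy, assuming the model includes an intercept. The function name `anova_summary` and the data are hypothetical; the data are constructed so that Y depends on $X_1$ only, which makes the sums of squares easy to check by hand.

```python
import numpy as np


def anova_summary(X, y):
    """Least-squares fit of Y-hat = a + b1*X1 + ... + bk*Xk, returning the
    ANOVA-table quantities plus the multiple standard error, R^2 and adjusted R^2.

    X: (n, k) array of independent variables; y: length-n array of Y values.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, k = X.shape
    design = np.column_stack([np.ones(n), X])      # leading 1s estimate the intercept a
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    y_hat = design @ coef

    ssr = float(np.sum((y_hat - y.mean()) ** 2))   # variation explained by the Xs
    sse = float(np.sum((y - y_hat) ** 2))          # unexplained (residual) variation
    ss_total = float(np.sum((y - y.mean()) ** 2))
    df_reg, df_err, df_total = k, n - (k + 1), n - 1
    mse = sse / df_err
    return {
        "coef": coef,                              # [a, b1, ..., bk]
        "df": (df_reg, df_err, df_total),
        "SSR": ssr, "SSE": sse, "SS total": ss_total,
        "MSR": ssr / df_reg, "MSE": mse,
        "std_error": mse ** 0.5,                   # multiple standard error of estimate
        "R2": ssr / ss_total,
        "adj_R2": 1 - (sse / df_err) / (ss_total / df_total),
    }


# Hypothetical data, constructed so that Y depends on X1 only.
x1 = np.arange(1.0, 9.0)
x2 = np.array([1, -1, -1, 1, 1, -1, -1, 1], dtype=float)
noise = np.array([1, -1, -1, 1, -1, 1, 1, -1], dtype=float)
y = 2 + 3 * x1 + 0.5 * noise
summary = anova_summary(np.column_stack([x1, x2]), y)
# For these data SSR = 378, SSE = 2 and SS total = 380 (up to rounding),
# so R^2 = 378/380 ≈ 0.9947 and adjusted R^2 ≈ 0.9926.
```

Because the regression includes an intercept, SS total equals SSR plus SSE, and adjusted $R^2$ comes out slightly below $R^2$.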

Global Test: Testing the Multiple Regression Model

The global test investigates whether it is possible that all the independent variables have zero regression coefficients.

To test whether the regression coefficients in the population are all zero, the hypotheses are:

- Null hypothesis: $H_0: \beta_1 = \beta_2 = \cdots = \beta_k = 0$ (none of the independent variables can be used to estimate the dependent variable).
- Alternate hypothesis: $H_1$: Not all the $\beta_j$s are 0.

To test the null hypothesis, we employ the F distribution, which has these characteristics:

- There is a family of F distributions.
- F cannot be negative.
- It is continuous.
- It is positively skewed.
- As F increases, the curve approaches the horizontal axis but never touches it.

The test statistic is

$$F = \frac{SSR/k}{SSE/[n - (k + 1)]}$$

The numerator is the regression sum of squares divided by its degrees of freedom, k; the denominator is the residual sum of squares divided by its degrees of freedom, $n - (k + 1)$. The F test checks the basic null hypothesis that two mean squares are equal. When we reject the null hypothesis, F is large, falling far in the right tail of the F distribution, and the p-value is small (less than the significance level). The critical value of F is found in Appendix B.4.

The decision can also be based on the p-value, defined as the probability of observing an F value as large as or larger than the computed F if the null hypothesis is true. If the p-value is less than the significance level, reject the null hypothesis. An advantage of the p-value is that it reports the smallest significance level, that is, the smallest Type I error risk, at which the null hypothesis would be rejected.

Evaluating Regression Coefficients

If $\beta_i$ equals 0, the corresponding independent variable is of no value in explaining any variation in the dependent variable. We therefore test the independent variables individually, $H_0: \beta_i = 0$ versus $H_1: \beta_i \neq 0$, to determine whether the net regression coefficients differ from zero. If there are coefficients for which the null hypothesis cannot be rejected, we may want to eliminate those variables from the regression equation.

- The test statistic follows the t distribution with $n - (k + 1)$ degrees of freedom; critical values of t are in Appendix B.2.
- The standard error estimates the variability of each regression coefficient: $b_i$ refers to any regression coefficient, and $s_{b_i}$ refers to the standard deviation of the sampling distribution of that regression coefficient.
- p-values can also be used to test an individual regression coefficient.

$$t = \frac{b_i - 0}{s_{b_i}}$$
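A minimal sketch of the global F test and the individual t tests, assuming NumPy and a model with an intercept. The function `regression_tests` and the data are hypothetical (constructed so that $X_2$ has no effect on Y), and the critical values stand in for lookups in Appendices B.4 and B.2 at the 0.05 level.

```python
import numpy as np


def regression_tests(X, y):
    """Fit Y-hat = a + b1*X1 + ... + bk*Xk and return the global F statistic,
    the statistic t = (b_i - 0) / s_bi for each slope, and the error df.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, k = X.shape
    design = np.column_stack([np.ones(n), X])    # leading 1s for the intercept a
    xtx_inv = np.linalg.inv(design.T @ design)
    coef = xtx_inv @ design.T @ y                # least-squares (normal-equation) solution
    y_hat = design @ coef

    ssr = np.sum((y_hat - y.mean()) ** 2)
    sse = np.sum((y - y_hat) ** 2)
    df_err = n - (k + 1)
    mse = sse / df_err
    f_stat = (ssr / k) / mse                     # MSR / MSE with (k, n - (k + 1)) df

    s_b = np.sqrt(mse * np.diag(xtx_inv))        # standard error of each coefficient
    t_stats = coef[1:] / s_b[1:]                 # skip the intercept
    return float(f_stat), t_stats, df_err


# Hypothetical data: Y depends on X1 only.
x1 = np.arange(1.0, 9.0)
x2 = np.array([1, -1, -1, 1, 1, -1, -1, 1], dtype=float)
noise = np.array([1, -1, -1, 1, -1, 1, 1, -1], dtype=float)
y = 2 + 3 * x1 + 0.5 * noise
X = np.column_stack([x1, x2])

F, t, df_err = regression_tests(X, y)
F_CRIT = 5.79    # F table, alpha = 0.05, 2 and 5 df
T_CRIT = 2.571   # t table, two-tailed alpha = 0.05, 5 df
print(F > F_CRIT)            # True: reject H0 that all the betas are 0
print(np.abs(t) > T_CRIT)    # X1: True, X2: False (b2 does not differ from 0)
```

Inverting the normal equations with `np.linalg.inv` is fine for a small, well-conditioned example like this; in practice a least-squares solver is numerically safer. If SciPy is available, `scipy.stats.f.sf(F, k, df_err)` and `2 * scipy.stats.t.sf(abs(t), df_err)` give the corresponding p-values instead of a table lookup.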

Strategy for deleting independent variables

1. Determine the regression equation.
2. Conduct the global test: determine the degrees of freedom, compute the value of F, and compare it with the critical value from Appendix B.4 at the chosen significance level to reach a decision.
3. Conduct a test of the regression coefficients individually; we want to determine whether one or both regression coefficients are different from 0. Variables whose coefficients do not differ from 0 are candidates for deletion, after which the equation is recomputed.
4. This whole process can be automated.

Evaluating the Assumptions of Multiple Regression

The validity of the statistical and global tests rests on these assumptions:

1. There is a linear relationship between the dependent variable and the independent variables.
2. The variation in the residuals is the same for both large and small values of $\hat{Y}$.
3. The residuals follow the normal distribution.
4. The independent variables should not be correlated.
5. The residuals are independent.
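One common way to automate the deletion strategy is backward elimination: fit the full equation, drop the variable whose coefficient has the smallest $|t|$ if it is below the critical value, refit, and repeat. The sketch below assumes NumPy; the function names, the data, and the small critical-value lookup (standing in for Appendix B.2) are hypothetical, and whether this matches the exact procedure of a given statistics package is an assumption.

```python
import numpy as np


def slope_t_stats(X, y):
    """t = (b_i - 0) / s_bi for each slope of Y-hat = a + b1*X1 + ... + bk*Xk."""
    n, k = X.shape
    design = np.column_stack([np.ones(n), X])
    xtx_inv = np.linalg.inv(design.T @ design)
    coef = xtx_inv @ design.T @ y
    resid = y - design @ coef
    mse = resid @ resid / (n - (k + 1))
    return (coef / np.sqrt(mse * np.diag(xtx_inv)))[1:]


def backward_eliminate(X, y, names, t_critical):
    """Drop the variable with the smallest |t| while it is below the critical
    value, refitting the equation after each deletion.

    t_critical(df) is a hypothetical helper returning the two-tailed critical t
    for df = n - (k + 1), e.g. read from a t table.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    names = list(names)
    while names:
        n, k = X.shape
        abs_t = np.abs(slope_t_stats(X, y))
        weakest = int(np.argmin(abs_t))
        if abs_t[weakest] >= t_critical(n - (k + 1)):
            break                                  # every remaining b_i differs from 0
        X = np.delete(X, weakest, axis=1)          # remove that column, then refit
        del names[weakest]
    return names


# Hypothetical data, constructed so that Y depends on X1 only.
x1 = np.arange(1.0, 9.0)
x2 = np.array([1, -1, -1, 1, 1, -1, -1, 1], dtype=float)
noise = np.array([1, -1, -1, 1, -1, 1, 1, -1], dtype=float)
y = 2 + 3 * x1 + 0.5 * noise
T_TABLE = {5: 2.571, 6: 2.447}                     # two-tailed alpha = 0.05
kept = backward_eliminate(np.column_stack([x1, x2]), y, ["X1", "X2"],
                          lambda df: T_TABLE[df])
print(kept)  # ['X1']
```

The critical value is looked up again after every deletion because the error degrees of freedom, $n - (k + 1)$, grow by one each time a variable is removed.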
