An Overview of Pattern Recognition

Elie SALIBA elie.saliba@upazahle.net University of Burgundy, Antonine University

Abstract. Pattern recognition has grown steadily in popularity and importance, and it now attracts attention from a widening range of fields. This paper discusses the general processing steps of pattern recognition: preprocessing, then feature extraction, and finally classification. Several methods are used at each step, such as segmentation and noise removal in preprocessing, the Gabor wavelet transform for feature extraction, and Support Vector Machines (SVM) for classification. Some pattern recognition methods are presented together with their applications. The objective of this paper is to summarize and compare some methods for pattern recognition and to outline the open research issues that need further investigation, along with new trends and ideas.

Keywords: pattern recognition, preprocessing, feature extraction, classification.

1 Introduction

Pattern recognition can be seen as a classification process. Its ultimate goal is to extract patterns optimally under given conditions and to separate one class from the others. Applications of pattern recognition can be found everywhere. Examples include disease categorization, prediction of survival rates for patients with a specific disease, fingerprint verification, face recognition, iris discrimination, chromosome shape discrimination, optical character recognition, texture discrimination, and speech recognition. The design of a pattern recognition system should take the application domain into account, since no universally best pattern recognition system exists. The basic components of a pattern recognition system are preprocessing, feature extraction, and classification. Image preprocessing is a desirable step in every pattern recognition system because it improves performance. Its role is to segment the pattern of interest from the background of a given image and to apply noise filtering, smoothing, and normalization that correct the image for errors such as strong variations in lighting direction and intensity. Many methods exist for image preprocessing, such as the Fermi energy-based segmentation method [9]. Feature extraction is a crucial step in invariant pattern recognition. In general, good features must satisfy the following requirements. First, the intraclass variance must be small, which means that features derived from different samples of the same class should be close. Second, the interclass separation should be large, i.e., features derived from samples of different classes should differ significantly. A further major problem in pattern recognition is the so-called curse of dimensionality. There are two reasons why the dimension of the feature vector cannot be too large: first, the computational complexity would become too high; second, increasing the dimension ultimately degrades performance.
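The two requirements on good features (small intraclass variance, large interclass separation) can be illustrated with a simple per-feature score. The sketch below uses a Fisher-style ratio, which is one common way to quantify this trade-off and is not a method taken from the paper; the function name `fisher_ratio` and the toy data are hypothetical.

```python
import numpy as np

def fisher_ratio(class_a, class_b):
    """Per-feature ratio of between-class separation to within-class variance.

    Assumes every feature has non-zero variance in at least one class.
    """
    a = np.asarray(class_a, dtype=float)
    b = np.asarray(class_b, dtype=float)
    between = (a.mean(axis=0) - b.mean(axis=0)) ** 2   # interclass separation
    within = a.var(axis=0) + b.var(axis=0)             # intraclass variance
    return between / within

# Hypothetical toy data: rows are samples, columns are two candidate features.
class_a = np.array([[1.0, 5.0], [1.2, 9.0], [0.8, 1.0]])
class_b = np.array([[3.0, 4.0], [3.2, 8.0], [2.8, 2.0]])

scores = fisher_ratio(class_a, class_b)
print(scores)  # the first feature scores far higher than the second
```

In this toy example the first feature is tightly clustered within each class and well separated between classes, so it receives a much larger score than the second feature, whose values overlap heavily.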
For the reduction of the feature space dimensionality, two dierent approaches exist. One is to discard certain elements of the feature vector and keep the most representative ones. This type of reduction is feature selection. Another one is the feature extraction, in which, the original feature vector is converted to a new one by a special transform and the new features have a much lower dimensions. Furthermore, in a robust pattern recognition system, features should be independent of the size, orientation, and location of the pattern. This independence can be achieved by extracting features that are translation-, rotation-, and scale-invariant. Choosing discriminative and independent features is the key to any pattern recognition method being successful in classication. Examples of features 1

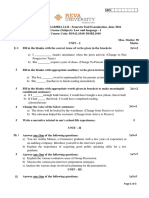

Figure 1: Pattern recognition process. that can be used: color, shape, size, texture, position, etc. Many methods exist for this step, such as nonlinear principal components analysis (NLPCA)[6], Radon transform [3], dual-tree complex wavelet transform [3][4], Fourier transform [3], Gabor wavelets [10], Curvelet transform [6], skeletonisation [9], rough set theory (RST) [9], Fuzzy invariant vector[7], etc. Some of these methods are reviewed in this paper. After the feature extraction step, the classication could be done. This step enables us to recognize an object or a pattern by using some characteristics (features) derived from the previous steps. It is the step which attempts to assign each input value of the feature vector to one of a given set of classes. For example, when determining whether a given image contains a face or not, the problem will be a face/non-face classication problem. Classes, or categories, are groups of patterns having feature values similar according to a given metric. Pattern recognition is generally categorized according to the type of learning used to generate the output value in this step. Supervised learning assumes that a set of training data (training set) has been provided, consisting of a set of instances that have been properly labeled by hand with the correct output. It generates a model that attempts to meet two sometimes conicting objectives: Perform as well as possible on the training data, and generalize as well as possible to new data. Unsupervised learning, on the other hand, assumes training data that has not been hand-labeled, and attempts to nd inherent patterns in the data that can then be used to determine the correct output value for new data instances. 
Classification learning techniques include the Support Vector Machine (SVM) [4][10][6][2], multi-class SVM [12], neural networks [5], the logical combinatorial approach [11], Markov random field (MRF) models [1], Fuzzy ART [7], CLAss-Featuring Information Compressing (CLAFIC) [8], K-nearest neighbor [3], and rule generation [9]. The most important methods are reviewed later in this paper. The paper is organized as follows. Section 2 reviews the pattern recognition process. Section 3 compares some existing classification methods for pattern recognition. Section 4 gives a brief overview of the application of pattern recognition in agriculture. Finally, Section 5 draws the conclusions and outlines future work.

2 Pattern recognition process

Pattern recognition has been under constant development for many years. It comprises many methods that have driven the development of numerous applications in different fields. The basic components of pattern recognition are preprocessing, feature extraction, and classification. Once the dataset is acquired, it is preprocessed so that it becomes suitable for the subsequent sub-processes. The next step is feature extraction, in which the dataset is converted into a set of feature vectors that are supposed to be representative of the original data. These features are used in the classification step to separate the data points into different classes according to the problem. The design of a pattern recognition system is shown in Figure 1.

2.1 Preprocessing

As mentioned above, image preprocessing is a desirable step in every pattern recognition system because it improves performance. It is used to reduce variations and produce a more consistent set of data. Preprocessing should include noise filtering, smoothing, and normalization to correct the image for errors such as strong variations in lighting direction and intensity. Image segmentation can also be done in this step; it is typically used to locate objects and boundaries (lines, curves, etc.) in images, and it changes the representation of the given image into something more meaningful and easier to analyze. In some applications, segmenting the pattern of interest from the background of a given image is very important. For example, disease detection in agriculture requires segmenting the infected region in images of the diseased plant. In this example, color image segmentation can extract the spot region of the leaf, since the color of the spots is quite different from that of a green leaf, as Fig. 2(a) shows. Many methods can be used for image segmentation. The Fermi energy-based segmentation method makes it possible to identify special regions using the color components of the image; it is designed to capture an object that exhibits high image gradients and a shape compatible with the statistical model. On this basis, the energy at each pixel position is calculated and compared with a threshold value to perform the segmentation. The results of this method and of two other segmentation methods (Otsu and k-means) are shown in Fig. 2(b)-(d) respectively, while Fig. 2(a) is the original image before segmentation.

Figure 2: (a) Image infected by sheath rot disease, segmented by (b) the Fermi energy-based algorithm, (c) Otsu's method, and (d) the k-means algorithm.
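As a rough illustration of threshold-based segmentation, the sketch below implements Otsu's method, one of the two baselines shown in Fig. 2, on a grayscale image. It is not the Fermi energy-based method, whose details are not given here. The 8-bit grayscale input and the synthetic "leaf with a bright spot" image are assumptions made for the example.

```python
import numpy as np

def otsu_threshold(gray):
    """Return the gray level t that maximizes the between-class variance.

    `gray` is assumed to be a uint8 image; pixels <= t form one class.
    """
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    levels = np.arange(256)
    w0 = np.cumsum(prob)                  # weight of the class "<= t"
    mu_cum = np.cumsum(prob * levels)     # cumulative first moment
    mu_total = mu_cum[-1]
    w1 = 1.0 - w0                         # weight of the class "> t"
    valid = (w0 > 0) & (w1 > 0)           # skip thresholds with an empty class
    sigma_b = np.zeros(256)
    sigma_b[valid] = (mu_total * w0[valid] - mu_cum[valid]) ** 2 / (w0[valid] * w1[valid])
    return int(np.argmax(sigma_b))

# Hypothetical image: uniform background (60) with a 3x3 brighter spot (200).
image = np.full((8, 8), 60, dtype=np.uint8)
image[2:5, 3:6] = 200

t = otsu_threshold(image)
spot_mask = image > t                     # True where the spot was segmented
print(t, int(spot_mask.sum()))
```

On real leaf images the same idea would typically be applied to a single color channel or a derived index, since the spots differ from the healthy leaf mainly in color.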

2.2 Feature extraction

As mentioned before, feature extraction is used to overcome the problem of the high dimensionality of the input set in pattern recognition. The input data is therefore transformed into a reduced representation set of features, also called a feature vector. Only the relevant information should be extracted from the input data, so that the desired task can be performed using this reduced representation instead of the full-size input. The extracted features should be easy to compute, robust, rotationally invariant, and insensitive to various distortions and variations in the images. The optimal feature subset that achieves the highest accuracy should then be selected from the input space. Many methods for feature extraction exist in the literature; some of them are discussed in this section.

2.2.1 Fourier transform

The Fourier transform analyzes a signal for its frequency content. A shift of a 1D or 2D function does not affect the magnitude of its Fourier coefficients (translation property), and a rotation of a function rotates its Fourier transform by the same angle (rotation property). Taking the spectrum magnitude of the Fourier coefficients therefore eliminates the circular shift effect in the resulting feature domain, so that a rotation-invariant feature vector can be extracted.

2.2.2 Radon transform

The Radon transform maps a function from the Cartesian coordinates (x, y) to a distance and an angle that together identify a line through the image, a parameterization akin to polar coordinates. Applying the Radon transform to an image f(x, y) for a given set of angles can be thought of as computing the projection of the image along those angles. Each projection value is the sum of the intensities of the pixels along the corresponding direction. This transform can effectively capture the directional features of the pattern image by projecting the pattern onto different orientation slices. The Radon transform can also be computed in the Fourier domain.
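The projection view of the Radon transform can be sketched with a crude discrete approximation: every pixel adds its intensity to the bin of its signed distance rho = x cos(theta) + y sin(theta) from the image center. The nearest-bin accumulation, the centering convention, and the function name `radon_projection` are assumptions chosen for brevity; production code would normally interpolate.

```python
import numpy as np

def radon_projection(image, theta_deg):
    """Approximate projection of `image` for one angle (nearest-bin accumulation)."""
    img = np.asarray(image, dtype=float)
    rows, cols = img.shape
    y, x = np.mgrid[0:rows, 0:cols]
    xc = x - (cols - 1) / 2.0              # coordinates relative to the image center
    yc = y - (rows - 1) / 2.0
    theta = np.deg2rad(theta_deg)
    rho = xc * np.cos(theta) + yc * np.sin(theta)
    half = int(np.ceil(np.hypot(rows, cols) / 2.0))   # largest possible |rho|
    bins = np.rint(rho).astype(int) + half
    return np.bincount(bins.ravel(), weights=img.ravel(), minlength=2 * half + 1)

# Hypothetical pattern: a vertical bar in a 5x5 image.
bar = np.zeros((5, 5))
bar[:, 2] = 1.0

proj_0 = radon_projection(bar, 0)     # sums over columns: one sharp peak
proj_90 = radon_projection(bar, 90)   # sums over rows: a flat profile
print(proj_0)
print(proj_90)
```

The sharp peak at 0 degrees and the flat profile at 90 degrees show how the projections encode the orientation of the bar, which is the directional information the text refers to.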

2.2.3 Gabor wavelet transform

Gabor wavelets, a wavelet-based transform, can be used for feature extraction. They provide optimal joint resolution in the time and frequency domains for time-frequency analysis, and they form an optimal basis for extracting local features for pattern recognition. Their use has three motivations: biological, mathematical, and empirical. Because of their biological similarity to the human vision system, Gabor wavelets have been widely used in object recognition applications. Given a set of Gabor wavelets obtained through an initial parameter selection, a common feature extraction approach is to construct a feature vector by concatenating the inner products of an image with each wavelet. Rather than seeking the set of Gabor wavelets that best approximates an image, we should seek Gabor wavelets that are tuned to discriminate one object from another; this reduces the computation and memory cost. Boosting algorithms can be used to select only the relevant Gabor wavelets; their objective is to select a number of very simple weak classifiers and combine them linearly into a single strong classifier.

2.2.4 Fuzzy invariant vector

Once an invariant feature vector has been extracted, converting it into a fuzzy invariant vector can increase discrimination and reduce the impact of low-frequency noise. The fuzzy invariant vector is computed using fuzzy numbers. In general, the power spectrum of an input pattern calculated with the Fourier transform has only a few major frequencies, which dominate the distinction between patterns. In a fuzzy invariant vector, every harmonic of an input pattern has a similar distribution, a characteristic that gives better discrimination than the original invariant vector. In addition, when low-frequency noise is added to an image, some harmonics show values higher or lower than the normal range in the power spectrum of the pattern. With a fuzzy membership function, the power of each harmonic of an input pattern is mapped in the same way into fuzzy numbers, and these out-of-range values are mapped to 1 or 0. The impact of low-frequency noise is therefore reduced.
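The invariant vector discussed above starts from the Fourier power spectrum, which relies on the translation property from Section 2.2.1. The short check below verifies that property numerically for a 1D signal; the random test signal and the shift of 5 samples are arbitrary choices, and the fuzzy membership step itself is not reproduced because its exact form is not given here.

```python
import numpy as np

def power_spectrum(signal):
    """Squared magnitude of the discrete Fourier coefficients."""
    return np.abs(np.fft.fft(signal)) ** 2

rng = np.random.default_rng(0)        # fixed seed, arbitrary toy signal
pattern = rng.random(16)
shifted = np.roll(pattern, 5)         # circular shift by 5 samples

# The power spectrum is unchanged by the shift ...
same_spectrum = np.allclose(power_spectrum(pattern), power_spectrum(shifted))
# ... while the complex coefficients themselves differ by a phase factor.
same_coefficients = np.allclose(np.fft.fft(pattern), np.fft.fft(shifted))
print(same_spectrum, same_coefficients)
```

The first check holds and the second does not, which is why the magnitude (or power) of the coefficients, rather than the coefficients themselves, is used as the shift-invariant feature.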

2.3 Classification

During the classification task, the system uses the features extracted from each pattern in the previous stage to recognize the patterns and to associate each one with its appropriate class. Two types of learning procedure are found in the literature. In supervised learning, the classifiers contain the knowledge of each pattern category as well as the criterion or metric used to discriminate among pattern classes. In unsupervised learning, the system parameters are adapted using only the information in the input, constrained by prespecified internal rules; the system attempts to find inherent patterns in the data that can then be used to determine the correct output value for new data instances. Some classification methods are discussed and reviewed in this section.

2.3.1 Fuzzy ART

Fuzzy ART neural networks can be used as unsupervised vector classifiers. Adaptive Resonance Theory (ART) is compatible with the way the human brain processes information: it can learn and memorize a large number of new concepts in a manner that does not necessarily cause existing ones to be forgotten. ART is able to classify input vectors that resemble each other according to the stored patterns. It can also adaptively create a new category corresponding to an input pattern if that pattern is not similar to any existing category. ART1 was the first ART model; it can stably learn to categorize binary input patterns presented in an arbitrary order. Fuzzy set theory, in turn, can imitate the human thinking process widely and deeply. The Fuzzy ART model, which incorporates computations from fuzzy set theory into the ART1 neural network, is therefore capable of rapid, stable learning of recognition categories in response to arbitrary sequences of analog or binary input patterns. Vigilance is a parameter that affects the performance of Fuzzy ART, which is evaluated by the recognition rate. The maximal vigilance parameter enables Fuzzy ART to classify input patterns with the highest recognition rate. The combined effect of Fuzzy ART and the fuzzy invariant vector (FIV) described above yields robustness.
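The role of the vigilance parameter can be made concrete with a compact sketch of Fuzzy ART. It follows the commonly published formulation (complement coding, a choice function, a vigilance test, and a fuzzy-AND learning rule), which is not spelled out in this paper; the class name and the parameter values `vigilance=0.75`, `alpha=0.001`, and `beta=1.0` are illustrative assumptions.

```python
import numpy as np

class FuzzyART:
    """Minimal Fuzzy ART sketch for inputs scaled to [0, 1]."""

    def __init__(self, vigilance=0.75, alpha=0.001, beta=1.0):
        self.rho = vigilance      # vigilance: how similar an input must be to a category
        self.alpha = alpha        # small choice parameter
        self.beta = beta          # learning rate (1.0 = fast learning)
        self.weights = []         # one weight vector per learned category

    def train_one(self, a):
        """Present one input and return the index of the category it is assigned to."""
        a = np.asarray(a, dtype=float)
        i = np.concatenate([a, 1.0 - a])              # complement coding
        # Choice function: rank existing categories by fuzzy overlap with the input.
        scores = [np.minimum(i, w).sum() / (self.alpha + w.sum()) for w in self.weights]
        for j in np.argsort(scores)[::-1]:
            w = self.weights[j]
            match = np.minimum(i, w).sum() / i.sum()
            if match >= self.rho:                     # vigilance test passed: resonance
                self.weights[j] = self.beta * np.minimum(i, w) + (1.0 - self.beta) * w
                return int(j)
        self.weights.append(i.copy())                 # no category fits: create a new one
        return len(self.weights) - 1

# Hypothetical analog inputs forming two obvious groups.
model = FuzzyART(vigilance=0.75)
samples = [[0.10, 0.10], [0.12, 0.08], [0.90, 0.90], [0.88, 0.92]]
labels = [model.train_one(s) for s in samples]
print(labels)
```

Raising the vigilance makes the match test stricter, so more and finer categories are created; lowering it merges more inputs into the same category.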

2.3.2 Neural networks

The neural approach applies biological concepts to machines in order to recognize patterns. It is a promising and powerful tool for achieving high performance in pattern recognition. The outcome of this effort is the invention of artificial neural networks, which draw on knowledge of the physiology of the human brain. Neural networks are composed of a series of distinct, associated units and act as a mapping device between an input set and an output set. Since the classification problem is a mapping from the feature space to a set of output classes, a neural network, and in particular a two-layer neural network, can be formalized as a classifier. While the usual scheme chooses the single best network from among a set of candidate networks, a better approach is to keep multiple networks and run them all with an appropriate collective decision strategy. Multiple neural networks can be combined to obtain a higher recognition rate. The basic idea is to develop N independently trained neural networks with relevant features, and to classify a given input pattern by using combination methods to reach a collective decision. A genetic algorithm is a hybrid method that can be used to combine neural network classifiers. It provides an effective vehicle for determining the optimal weight parameters by which the network outputs are multiplied, and it takes the difference in performance of each network into account when combining them. The output neuron with the maximum value is selected as the corresponding class. There are two general approaches for combining multiple neural networks: the fusion technique, in which the classification of an input is based on a set of real-valued measurements, and the voting technique, which treats the result of each network as an expert judgment.

2.3.3 Markov random field

Markov random field (MRF) models are multi-dimensional in nature; for pattern recognition, they combine statistical and structural information. States are used to model the statistical information, and the relationships between states represent the structural information. Only the best set of states should be considered. The global likelihood energy function can be rewritten in two parts: one models the structural information described by the relationships among states, and the other models the statistical information, since it is the output probability for a given observation and state. Recognition then consists of minimizing the likelihood energy function, which is the sum of the clique functions. In theory, neighborhood systems can have any shape and size, but the design of neighborhood systems and cliques is based on connectedness and distance. Connectedness is of limited use when patterns are represented by feature points, because many feature points are not directly connected to others. Therefore, a neighborhood system consisting of the sites within a certain distance is adopted. Neighborhood systems of this kind are particularly suitable for recognizing patterns or retrieving images by critical-point or feature-point matching, because critical points are not connected.

2.3.4 Support Vector Machine

The Support Vector Machine (SVM) classifier has proved very successful in many applications. The strength of the SVM is its capacity to handle not only linearly separable data but also non-linearly separable data, by using kernel functions. The kernel function maps the training examples from the input space into a feature space in which the mapped training examples are linearly separable. Frequently used SVM kernels are the polynomial, Gaussian radial basis function, exponential radial basis function, spline, wavelet, and autocorrelation wavelet kernels. In theory, features of any dimension can be fed into an SVM for training; in practice, high-dimensional features incur computation and memory costs in the SVM training and classification process. Feature extraction and selection is therefore a crucial step before SVM classification.

2.3.5 Multi-class SVM

A multi-classifier system based on SVM aims to obtain higher classification accuracy than the individual classifiers that make it up. A combination of classifiers is able to compensate for the errors made by the individual classifiers on different parts of the input space. The combination strategy is based on stacked generalization and has a two-level structure: the base level is a module of N SVM-based classifiers trained on N feature sets, and the meta level is a single SVM-based decision classifier trained on a meta-feature set generated through a data fusion mechanism. The main idea of stacking is to combine classifiers from different learners; once the classifiers have been generated, they must be combined. For multi-class classification, many methods can be used. One of them is the one-against-all method, which can serve as a multi-class SVM: when the number of classes is N, this method constructs N SVM classifiers, each of which separates one positive class (+1) from the N-1 negative classes (-1). Multi-class SVM can be chosen as the meta-level learner because of its good generalization performance while avoiding over-fitting.

| Classification method | Characteristics |
|---|---|
| Fuzzy ART | Capability of rapid stable learning of recognition categories in response to arbitrary sequences of analog or binary input patterns; strong resistance to noise; need to find the optimal vigilance level |
| Neural networks | Ability to form complex decision regions; strong discriminative power; capability to learn and represent implicit knowledge; capability to combine multiple networks |
| Markov random field | Multi-dimensional in nature; statistical and structural information are combined |
| SVM | Handling of non-linearly separable data; possibility of dealing efficiently with a very large number of features; any local solution is also a global optimum; flexible |
| Multi-class SVM | Good generalization performance while avoiding over-fitting; higher classification accuracy than individual classifiers; complementing the errors made by the individual classifiers |

Table 1: Comparison between the classification methods.
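The one-against-all scheme described in Section 2.3.5 can be sketched as follows: one binary classifier is trained per class, with that class labeled +1 and all others -1, and a new sample is assigned to the class whose classifier gives the highest score. To keep the example self-contained, each binary classifier is a linear SVM trained by plain subgradient descent on the regularized hinge loss, which is an assumption of this sketch and not the kernel SVM or the stacked system used in [12]; the hyperparameters and toy data are likewise hypothetical.

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Binary linear SVM (labels +1/-1) via batch subgradient descent on the hinge loss."""
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1                      # samples inside or beyond the margin
        grad_w = lam * w - (y[active, None] * X[active]).sum(axis=0) / n
        grad_b = -y[active].sum() / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def train_one_vs_all(X, labels):
    """Train one binary SVM per class: that class is +1, the other N-1 classes are -1."""
    classes = np.unique(labels)
    models = [train_linear_svm(X, np.where(labels == c, 1.0, -1.0)) for c in classes]
    return classes, models

def predict(X, classes, models):
    """Assign each sample to the class whose binary SVM returns the largest score."""
    scores = np.column_stack([X @ w + b for w, b in models])
    return classes[np.argmax(scores, axis=1)]

# Hypothetical 2D feature vectors: three well-separated clusters of four points each.
offsets = np.array([[0.0, 0.0], [0.3, 0.2], [-0.2, 0.3], [0.2, -0.3]])
centers = np.array([[-3.0, -2.0], [3.0, -2.0], [0.0, 3.0]])
X = np.vstack([c + offsets for c in centers])
labels = np.repeat(np.arange(3), len(offsets))

classes, models = train_one_vs_all(X, labels)
new_points = np.array([[-2.8, -2.1], [2.9, -1.8], [0.1, 2.7]])
print(predict(new_points, classes, models))
```

Taking the argmax of the raw scores is one simple way to resolve cases where several binary classifiers, or none of them, claim a sample.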

3 Comparison between the classification methods

This section compares the classification methods described in this paper. Table 1 summarizes the characteristics of Fuzzy ART, neural networks, Markov random field, SVM, and multi-class SVM. As the table shows, each method has its own characteristics and advantages. For each application, the classification method should be efficient and its characteristics should fit the problem at hand.

4 Pattern recognition application related to agriculture

Pattern recognition is used more and more in agriculture. Applications can be developed to detect plant diseases, minimize losses, and achieve intelligent farming. Plant diseases are among the most important causes of the destruction of plants and crops. Detecting those diseases at an early stage makes it possible to treat them appropriately and overcome them. The development of automated systems that use pattern recognition to classify the diseases of infected plants is a growing research area in precision agriculture. To identify the visual symptoms of plant diseases, the texture, color, shape, and position of the infected leaf area can be used as discriminating features. Diseased regions such as spots, stains, or streaks should be identified, segmented, and preprocessed, and a set of features should be extracted from each region. For the classification analysis, this set of features is the input to the classifier, which can be a multi-classifier system such as the multi-class SVM described above.

5 Conclusion

In this paper we have given a broad account of pattern recognition, including its definition, its composition, its methods, a comparison of the classification methods, and its application in agriculture. Pattern recognition is developing very fast, and its related fields and applications are becoming wider and wider. It aims to classify a pattern into one of a number of classes, and it appears in various fields such as computer vision, agriculture, robotics, and biometrics. In this context, one challenge is to find suitable descriptive features, since the pattern to be classified must commonly be represented by a set of features that characterize it. These features must have discriminative properties: efficient features must be insensitive to affine transformations, and they must be robust against noise and against elastic deformations. The feature extraction method and the classifier should depend on the application itself. Future work will consist of searching for the right methods to develop a pattern recognition system for the automatic detection of diseases in infected plants.

Acknowledgements

I would like to thank my tutor, Prof. Albert DIPANDA, for supporting and helping me and for clarifying some doubts. I am also thankful to Miss Nathalie VASSILEFF for her follow-up work.

References

[1] Jinhai Cai and Zhi-Qiang Liu. Pattern recognition using Markov random field models. Pattern Recognition, 35(3):725-733, 2002.

[2] A. Camargo and J.S. Smith. Image pattern classification for the identification of disease causing agents in plants. Computers and Electronics in Agriculture, 66(2):121-125, 2009.

[3] Guangyi Chen, Tien D. Bui, and Adam Krzyzak. Invariant pattern recognition using Radon, dual-tree complex wavelet and Fourier transforms. Pattern Recognition, 42(9):2013-2019, 2009.

[4] Guangyi Chen and W. F. Xie. Pattern recognition with SVM and dual-tree complex wavelets. Image Vision Comput., 25(6):960-966, 2007.

[5] Sung-Bae Cho. Pattern recognition with neural networks combined by genetic algorithm. Fuzzy Sets and Systems, 103(2):339-347, 1999. Soft Computing for Pattern Recognition.

[6] Kun Feng, Zhinong Jiang, Wei He, and Bo Ma. A recognition and novelty detection approach based on curvelet transform, nonlinear PCA and SVM with application to indicator diagram diagnosis. Expert Syst. Appl., 38(10):12721-12729, 2011.

[7] Mun-Hwa Kim, Dong-Sik Jang, and Young-Kyu Yang. A robust-invariant pattern recognition model using Fuzzy ART. Pattern Recognition, 34(8):1685-1696, 2001.

[8] D. Liu, Yukihiko Yamashita, and Hidemitsu Ogawa. Pattern recognition in the presence of noise. Pattern Recognition, 28(7):989-995, 1995.

[9] Santanu Phadikar, Jaya Sil, and Asit Kumar Das. Rice diseases classification using feature selection and rule generation techniques. Computers and Electronics in Agriculture, 90:76-85, 2013.

[10] Lin-Lin Shen and Zhen Ji. Gabor wavelet selection and SVM classification for object recognition. Acta Automatica Sinica, 35(4):350-355, 2009.

[11] José Francisco Martínez Trinidad and Adolfo Guzmán-Arenas. The logical combinatorial approach to pattern recognition, an overview through selected works. Pattern Recognition, 34(4):741-751, 2001.

[12] Schenglian Lu, Yuan Tian, Chunjiang Zhao, and Xinyu Guo. Multiple classifier combination for recognition of wheat leaf diseases. Intell Autom Soft Co, 17(5):519-529, 2011.


- Pattern Recognition - A Statistical ApproachDocument6 pagesPattern Recognition - A Statistical ApproachSimmi JoshiNo ratings yet

- Conclusion and Future Scopes 5.1 ConclusionDocument3 pagesConclusion and Future Scopes 5.1 Conclusionshikhar guptaNo ratings yet

- Ieee Paper 2Document16 pagesIeee Paper 2manosekhar8562No ratings yet

- Ijettcs 2013 08 15 088Document5 pagesIjettcs 2013 08 15 088International Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- C. Cifarelli Et Al - Incremental Classification With Generalized EigenvaluesDocument25 pagesC. Cifarelli Et Al - Incremental Classification With Generalized EigenvaluesAsvcxvNo ratings yet

- Victim Detection With Infrared Camera in A "Rescue Robot": Saeed MoradiDocument7 pagesVictim Detection With Infrared Camera in A "Rescue Robot": Saeed MoradiKucIng HijAuNo ratings yet

- Msc. Research Proposal Master ProgramDocument8 pagesMsc. Research Proposal Master ProgramYassir SaaedNo ratings yet

- DATA MINING and MACHINE LEARNING: CLUSTER ANALYSIS and kNN CLASSIFIERS. Examples with MATLABFrom EverandDATA MINING and MACHINE LEARNING: CLUSTER ANALYSIS and kNN CLASSIFIERS. Examples with MATLABNo ratings yet

- R Programming Unit-2Document29 pagesR Programming Unit-2padmaNo ratings yet

- Subject: Computer Science & ApplicationDocument12 pagesSubject: Computer Science & ApplicationSandeep Kumar TiwariNo ratings yet
