Journal of the Indian Academy of Applied Psychology, January - July 2005, Vol. 31, No. 1-2, 83-91.

Effect of Training on Workload in Flight Simulation Task Performance

Indramani L. Singh, Hari Om Sharma and Anju L. Singh Banaras Hindu University, Varanasi

The present study examined the effect of training on workload in flight simulation task performance. A 2 (training) x 2 (session) x 3 (block) mixed factorial design was used, with training as a between-subjects factor and sessions and blocks as within-subjects factors. A revised version of the multi-attribute task battery was used. Thirty undergraduate students of the Banaras Hindu University served as subjects. Each subject was required to detect automation malfunctions within a stipulated time of 10 sec and to reset errors, if any, by pressing a designated key. Performance was recorded in terms of accuracy of target detection (hit rates), incorrect detection (false alarms) and reaction time. The NASA-TLX (Hart & Staveland, 1988), which has six bipolar dimensions (mental demand, physical demand, temporal demand, effort, frustration and own performance), was used to assess workload in the 30-min (short) and 60-min (long) manual training conditions, once after training and once after the automated task. Mean detection performance showed higher hit rates after long training than after short training, but the difference between the two training conditions was not significant. The results thus suggest that the amount of training did not affect subjects' system-monitoring performance under the automated mode. The main effect of session showed a significant decrement in the detection of automation failures over sessions. Subjects in the short training condition reported significantly higher temporal workload before than after the automated task, and subjects in the long training condition reported significantly higher frustration before than after the automated task.

Automation has pervaded almost every domain of human life, and in many systems it has replaced the human component as a key element. It is well established that automation can perform functions more efficiently, reliably, or accurately than the human operator. Monitoring of highly automated systems is therefore a major concern for human performance efficiency and system safety in a wide variety of human-machine systems. Human operators using modern human-machine systems face increased demands on monitoring and supervisory control because of greater levels of automation (Parasuraman, 1987; Wiener, 1988). In general, human operators perform well in diverse working environments such as air traffic control, surveillance operations, power plants, intensive care units, and quality control in manufacturing. This does not mean, however, that errors do not occur. A study conducted by the US Air Transport Association on personnel who conducted x-ray screening for weapons at airport security checkpoints recorded their monitoring
performance. The detection rate for weapons and explosives was typically good but not perfect. There could be several reasons for imperfect performance, such as an unhealthy working environment (noisy and distracting), uncomfortable seating in adverse visual conditions, lack of training, low wages, or lack of opportunities for career advancement. Technological advances in automation have also been associated with certain behavioural problems, such as loss of situation awareness and deregulation of mental workload (Parasuraman, 1987). A further potential cost of automation is automation-induced complacency (Parasuraman, Molloy, & Singh, 1993). Parasuraman et al. (1993) empirically validated the concept and found complacency in a multi-task environment rather than in a single-task situation. In a follow-up study, Singh, Parasuraman, Deaton, and Molloy (1993) also reported automation-induced complacency in U.S. pilots. Furthermore, Singh, Molloy, and Parasuraman (1993) developed the complacency potential rating scale (CPRS), which has trust, confidence, and reliance as its main factors, and suggested that a pilot's trust, confidence, and reliance in a system might affect his or her use of automation. Automation has been designed with the objective of reducing operators' mental workload; however, results suggest that automation does not necessarily reduce workload (Parasuraman & Riley, 1997; Singh & Parasuraman, 2001). Parasuraman (1999) suggested that automation could reduce a human operator's workload to an optimal level if it is suitably designed, whereas workload reduction may not occur if automation is implemented in a clumsy manner (Wiener, 1988). The first evidence that workload may not necessarily be reduced came from surveys of commercial pilots and their attitudes toward advanced cockpit automation (Madigan & Tsang, 1990; Wiener, 1988). Two reasons are likely. First, automation may change the pattern of workload across work segments. Second, the demands of monitoring can be considerable (Parasuraman, Mouloua, Molloy, & Hilburn, 1996). Workload can be characterized as the interaction between the components of the machine and task on the one hand and the operator's resource capabilities, motivation, and state of mind on the other (Gopher & Donchin, 1986; Moray, 1988; Wickens & Kramer, 1985). More precisely, workload has been defined as the cost a human operator incurs to complete an assigned task (Kramer, 1991). Automation, if properly designed, can reduce a human operator's workload to a manageable level under peak loading conditions. Braby, Harris, and Muir (1993) reported that high levels of workload could lead to errors and system failures, whereas low workload could lead to complacency. This may be a reason for using automation in the first place: to reduce high demands on the operator and thereby reduce human error. Findings have further shown that high automation often redistributes rather than reduces workload within the system (Lee & Moray, 1992; Parasuraman & Mouloua, 1996; Singh & Parasuraman, 2001; Wiener, 1988), because automation sometimes imposes demands that are difficult to manage and that require more attention. Some studies have examined the effects of automation and workload on monitoring and tracking performance. Wickens and Kessel (1981) studied the effect of workload on active and passive control of a tracking and monitoring system. Results showed significantly poorer detection of failures in the automated than in the manual condition. Similar results were obtained by
Johannsen, Pfendler, and Stein (1976), wherein subjects were required to detect autopilot failures during a landing approach with simulated vertical gusts, and in a study where pilots had to respond to unexpected wind-shear bursts while using an automated latitude hold. In contrast, Liu, Fuld, and Wickens (1993) found that error detection was superior in the automated than in the manual condition. Hilburn, Jorna, and Parasuraman (1995) also found benefits of automation for monitoring performance. However, no researcher has so far examined the effect of extended manual training on workload and automated monitoring performance. Some studies have reported association or dissociation for brief performance periods after a small amount of practice. Several practical considerations thus arise for future research on man-machine interaction. The present study attempts to examine whether automation reduces workload in a multi-task environment with an increased amount of manual training.

Hypotheses

The following hypotheses were framed for testing in this study:
1) The amount of training would reduce automated complacency.
2) Increased training would reduce mental workload.
3) Automated complacency would be progressively higher across time periods.

Method

Thirty graduate students of the Banaras Hindu University were randomly selected for this study. Each subject had normal (20/20) or corrected-to-normal visual acuity, and their ages ranged from 19 to 22 years. None of the subjects had prior experience of the flight simulation task.

Flight Simulation Task

A revised version of the Multi-Attribute Task Battery (MAT; Comstock & Arnegard, 1992) was used in the present study. It comprised system-monitoring, tracking, and fuel resource management tasks. Of these three tasks, only the system-monitoring task was automated; the remaining two, tracking and fuel management, were performed manually. The tasks were displayed in separate windows on a 14" SVGA colour monitor.

System-Monitoring Task

The system-monitoring task, which had four vertical gauges with moving pointers and green OK and red warning lights, was presented in the upper left window of the monitor. The gauge scales were marked for the temperature and pressure of two aircraft engines. In the normal condition, the green OK light remained on and the pointers fluctuated .25" from the centre of the gauges. Occasionally, a system malfunction occurred on one of the four gauges and the pointer went off limits. Under the automation mode, these malfunctions were detected and corrected automatically by the system. From time to time, however, the automation failed to detect a malfunction, and the subject was required to reset it by pressing the corresponding function key on the keyboard. Participants were required to detect malfunctions within 10 sec; otherwise the trial was recorded as a miss. If the subject responded correctly within the required time, the trial was recorded as a hit. The performance measures were thus correct detection (hit rate), incorrect detection (false alarms), and reaction time (RT).

Tracking Compensatory Task

A two-dimensional compensatory tracking task with joystick control was presented in the central window of the monitor. A circular target symbol representing the deviation of aircraft
from its course fluctuated within the window. The subject's task was to keep the target symbol within the central rectangular area by applying the appropriate joystick control inputs. Root mean square (RMS) error was computed as the tracking performance score.

Fuel Resource Management Task

This task comprised six fuel tanks linked by eight pumps. The amount, rate, and direction of fuel flow were displayed on the monitor. Subjects were instructed to maintain the fuel level at 2500 gallons in each of tank A and tank B, which consumed fuel at the rate of 800 gallons per minute. Subjects could draw the required fuel from the lower supply tanks by activating the appropriate pump. The pumps had different pumping capacities and could be turned on or off by pressing the corresponding numbered keys, 1 to 8, on the keyboard. RMS error was computed as the performance measure in this task.

Assessment of Workload

The NASA Task Load Index (NASA-TLX; Hart & Staveland, 1988) was used for the assessment of workload. The NASA-TLX is considered one of the most effective measures of perceived workload (Nygren, 1991). The scale has high reliability (r = .83) and six sources of workload. Three of these reflect the demands that experimental tasks place on the operator (mental, physical, and temporal demand), whereas the remaining three characterize the interaction between the operator and the task (effort, frustration, and performance). The scale provides an overall workload score based on a weighted average of ratings.

Design

A 2 (training) x 2 (session) x 3 (block) mixed factorial design was used in the present study.
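The dependent measures defined in the task and workload subsections above can be illustrated with a minimal Python sketch. It is not the MAT battery's own scoring code. The function names, the rule that any key press outside a 10-sec window counts as a false alarm, and the requirement that the NASA-TLX weights sum to 15 (the usual pairwise-comparison procedure, which the paper does not spell out) are assumptions made for illustration. For simplicity the sketch also ignores which gauge a key press refers to.

```python
import math

DETECTION_WINDOW_S = 10.0  # response deadline stated in the method


def score_monitoring(failure_onsets, response_times, window=DETECTION_WINDOW_S):
    """Score hits, misses, false alarms and mean RT for the monitoring task.

    failure_onsets: onset times (s) of automation failures.
    response_times: times (s) of the subject's reset key presses.
    Assumption: a press that falls in no failure window is a false alarm.
    """
    responses = sorted(response_times)
    matched = set()   # indices of responses already credited as hits
    hit_rts = []
    for onset in sorted(failure_onsets):
        for i, t in enumerate(responses):
            if i not in matched and onset <= t <= onset + window:
                matched.add(i)
                hit_rts.append(t - onset)
                break
    n_failures = len(failure_onsets)
    hits = len(hit_rts)
    return {
        "hits": hits,
        "misses": n_failures - hits,
        "false_alarms": len(responses) - len(matched),
        "hit_rate_pct": 100.0 * hits / n_failures if n_failures else 0.0,
        "mean_rt_s": sum(hit_rts) / hits if hits else None,
    }


def rms_error(deviations):
    """Root mean square of sampled deviations from the target value."""
    return math.sqrt(sum(d * d for d in deviations) / len(deviations))


TLX_DIMENSIONS = ("mental", "physical", "temporal",
                  "effort", "frustration", "performance")


def tlx_overall(ratings, weights):
    """Weighted average of the six NASA-TLX ratings.

    Assumption: weights come from 15 pairwise comparisons, so they sum to 15.
    """
    total_weight = sum(weights[d] for d in TLX_DIMENSIONS)
    if total_weight != 15:
        raise ValueError("NASA-TLX weights are expected to sum to 15")
    weighted = sum(ratings[d] * weights[d] for d in TLX_DIMENSIONS)
    return weighted / total_weight


# Hypothetical data, for illustration only.
example = score_monitoring([10, 50, 100, 150], [13, 30, 105, 165])
print(example)
print(rms_error([2, -2, 2, -2]))
```

In this sketch the same `rms_error` function would serve both the tracking task (deviation of the target from centre) and the fuel task (deviation of tank level from 2500 gallons), since the paper reports RMS error for each.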

Training was treated as a between-subjects factor, whereas sessions and blocks were treated as within-subjects factors. Subjects were assigned randomly to either the short or the long training group under a static automation reliability condition (87.5%) that remained constant from block to block.

Procedure

All subjects individually completed a consent form for participating in the experiment. They also completed a biographical questionnaire with questions about their age, education, socio-economic status, knowledge of computers, and frequency of computer use. Subjects were also tested for normal vision on a Snellen vision chart in the lab. All thirty subjects received a common 10-min practice on all three subtasks of the flight simulation task, i.e., system-monitoring, tracking, and fuel management. At the end of practice, feedback on the dependent measures was given to each participant. Thirty subjects, who scored about 60% or above on the system-monitoring task in the practice session, were selected for the final test sessions. Of these 30 subjects, 15 were given short manual training (30 min) and 15 were given long manual training (60 min) on the flight simulation task. Feedback on performance in all three tasks was given to the subjects. After short or long training, all subjects performed the final test, which comprised two 30-min sessions. The NASA Task Load Index was administered individually to all subjects twice, once after the training session and once after the automated task was completed.

Results and Discussion

The means and standard deviations for hit rate, false alarms, reaction time, and root mean square error were computed separately for the common 10-min practice, short manual and long manual
training and final six 10-min automated test sessions.

Practice Performance

Results indicated that the mean correct detection of malfunctions varied from 64% to 67% between the short and long manual training conditions. However, the mean difference in hit rate was not significant between short and long training. Similar results were obtained for the false alarm (FA) and reaction time (RT) measures. The RMS errors for tracking and fuel resource management also did not differ significantly between the short and long training conditions. These results indicate that initial performance levels were equivalent in the two training conditions.

Training Performance

Comparison of the five dependent measures after 30-min or 60-min manual training showed that mean correct detection (hit rate) was 65% after short training and 70% after long training. However, the difference was not significant. The same pattern held for the remaining measures, i.e., false alarms, reaction time, and RMS errors. The results thus suggest that extended training did not improve performance.

Automation Task Performance

Mean correct detection was marginally higher in the long training condition (M = 41.67, SD = 5.51) than in the short training condition (M = 37.82, SD = 5.51). However, this difference was not significant. The data were then subjected to a 2 (training) x 2 (session) x 3 (block) analysis of variance with repeated measures on the last two factors, a summary of which is presented in Table 1.

Table 1: Summary of Analysis of Variance for Correct Detection of Malfunctions in Short and Long Training Conditions.

| Source | Sum of Squares | df | Mean Squares | F-values |
|---|---|---|---|---|
| Training | 665.08 | 1 | 665.08 | 0.243 |
| Error | 76767.49 | 28 | 2741.69 | |
| Session | 6432.08 | 1 | 6432.08 | 4.96* |
| Session x Training | 3.2 | 1 | 3.2 | 0.002 |
| Error | 36272.38 | 28 | 1295.44 | |
| Block | 4886.04 | 2 | 2443.02 | 2.13 |
| Block x Training | 2099.37 | 2 | 1049.68 | 0.91 |
| Error | 64209.24 | 56 | 1146.59 | |
| Session x Block | 387.37 | 2 | 193.68 | 0.221 |
| Session x Block x Training | 422.93 | 2 | 211.46 | 0.241 |
| Error | 49051.02 | 56 | 875.91 | |
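As a reading aid for Table 1, the short Python sketch below recomputes each F-value from the printed sums of squares and degrees of freedom, using F = MS(effect) / MS(error) with MS = SS / df. The pairing of each effect with the error term listed directly beneath it is an assumption based on the table layout, and the variable and function names are illustrative only.

```python
# Sums of squares and degrees of freedom as printed in Table 1.
# Assumption: each effect is tested against the error row beneath it.
TABLE_1 = [
    # (effect, SS_effect, df_effect, SS_error, df_error)
    ("Training", 665.08, 1, 76767.49, 28),
    ("Session", 6432.08, 1, 36272.38, 28),
    ("Session x Training", 3.2, 1, 36272.38, 28),
    ("Block", 4886.04, 2, 64209.24, 56),
    ("Block x Training", 2099.37, 2, 64209.24, 56),
    ("Session x Block", 387.37, 2, 49051.02, 56),
    ("Session x Block x Training", 422.93, 2, 49051.02, 56),
]


def f_ratio(ss_effect, df_effect, ss_error, df_error):
    """Return F = MS_effect / MS_error, where MS = SS / df."""
    return (ss_effect / df_effect) / (ss_error / df_error)


f_values = {name: f_ratio(*rest) for name, *rest in TABLE_1}

for name, f in f_values.items():
    print(f"{name:<28} F = {f:.3f}")
```

The recomputed ratios agree with the printed F-values to within rounding, which supports the row and column assignment used in the table.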

The ANOVA results showed that the main effect of training was not significant, F(1, 28) = 0.24, p > .62, which indicates that the amount of manual training given prior to the detection of automation failures had no impact on automated complacency. The interactions were also not significant. Only 15 subjects were assigned to each training group in this experiment, so the small sample size could be a reason for the non-significant main effect of training. Thus, the result does not support the first
hypothesis that the amount of training would reduce automated complacency. The significant main effect of session, F(1, 28) = 4.96, p < .05, showed a significant decrement in the detection of automation failures from session to session. This result supported the third hypothesis, that detection of automation failures would progressively decline over sessions. The findings are consistent with those of other researchers (Parasuraman et al., 1993; Singh et al., 1997), who also reported automated complacency over sessions in a multi-task environment.

Automation and Perceived Workload

The mean scores on the perceived workload dimensions indicated that the pre-test mental, temporal, effort, and frustration indices were higher than the corresponding post-test indices in both the short and the long training conditions. The obtained mean scores are presented graphically in Figures 1 and 2 for the short and long training conditions, respectively.

Figure 1. Pre and Post Mean Workload Scores in Short Training Condition. [Bar chart: pre-workload and post-workload scores on a 0-100 scale for the Mental, Physical, Temporal, Effort, Frustration, and Performance dimensions.]

Figure 2. Pre and Post Mean Workload Scores in Long Training Condition. [Bar chart: pre-workload and post-workload scores on a 0-100 scale for the Mental, Physical, Temporal, Effort, Frustration, and Performance dimensions.]

These mean differences were further compared using paired-sample t-tests, since the workload scores were obtained at two points in time, i.e., before and after automated monitoring task performance, in both the short and long training groups. The obtained t-values for short and long training are presented separately in Table 2.

Table 2. Summary of the Paired Sample t-test Values Between Pre- and Post-Test Sessions after Short and Long Training Conditions (N = 15).

| Workload dimension | Short training t-value | Long training t-value |
|---|---|---|
| Pre-Mental Demand vs Post-Mental Demand | 1.20 | 1.59 |
| Pre-Physical Demand vs Post-Physical Demand | 1.26 | 1.17 |
| Pre-Temporal Demand vs Post-Temporal Demand | 3.23** | 1.22 |
| Pre-Effort vs Post-Effort | 0.01 | 1.3 |
| Pre-Frustration vs Post-Frustration | 0.72 | 2.07* |
| Pre-Own Performance vs Post-Own Performance | 0.70 | 1.08 |

** p<0.01; * p<0.05

Results suggested that workload on all dimensions decreased in the post-test session after the automated monitoring task, but significant differences emerged only for temporal workload in the short training group and frustration workload in the long training group. Paired t-tests were also computed on the mean pre- and post-test workload of all 30 participants, irrespective of the amount of manual training. These findings (see Table 3) demonstrated that automation significantly reduced mental (t = 2.02; p<.05), temporal (t = 2.70; p<.01) and frustration workload (t = 2.11; p<.04). The other workload dimensions, i.e., physical, effort, and performance, did not show significant differences, which could be due to the small sample size of 30 subjects.

Table 3. Paired Sample t-test on the Total Sample (N=30)

| Workload dimension | t-value | df |
|---|---|---|
| Pre-Mental Demand vs Post-Mental Demand | 2.02* | 29 |
| Pre-Physical Demand vs Post-Physical Demand | 0.50 | 29 |
| Pre-Temporal Demand vs Post-Temporal Demand | 2.70** | 29 |
| Pre-Effort vs Post-Effort | 0.927 | 29 |
| Pre-Frustration vs Post-Frustration | 2.11* | 29 |
| Pre-Own Performance vs Post-Own Performance | 1.29 | 29 |

** p<0.01; * p<0.05
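The paired-sample t statistic reported in Tables 2 and 3 can be sketched in Python as follows. The paper does not publish individual pre- and post-test ratings, so the scores below are hypothetical and serve only to show the arithmetic: t is the mean of the pre-minus-post differences divided by its standard error, with n - 1 degrees of freedom (14 for each training group, 29 for the total sample). The function name is illustrative.

```python
import math
from statistics import mean, stdev


def paired_t(pre, post):
    """Return (t, df) for a paired-sample t-test on pre vs post scores.

    t = mean(d) / (sd(d) / sqrt(n)), where d = pre - post for each subject
    and sd is the sample standard deviation of the differences.
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must have one score per subject")
    diffs = [a - b for a, b in zip(pre, post)]
    n = len(diffs)
    standard_error = stdev(diffs) / math.sqrt(n)
    return mean(diffs) / standard_error, n - 1


# Hypothetical workload ratings for three subjects, for illustration only.
pre_scores = [5, 6, 7]
post_scores = [4, 4, 4]
t_value, df = paired_t(pre_scores, post_scores)
print(f"t({df}) = {t_value:.3f}")
```

A positive t in this convention means that the pre-test rating was higher than the post-test rating, which is the direction the paper reports for the significant dimensions.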

The obtained results partially support our assumption that automation would reduce mental workload. The high reliability of automated systems used in the modern cockpit may reduce some of the pilot's workload, but it may actually increase other components of workload (Masalonis, Duley, & Parasuraman, 1999). However, this trend may not hold in all cases. There is behavioural and subjective evidence that automation can increase workload, probably because the operator has to monitor whether the automation is functioning correctly (Parasuraman & Riley, 1997; Wickens, Mavor, & McGee, 1997). The results of the present study are thus relevant to the debate on technology-centered versus human-centered approaches to the design of automation. The dominant tendency of the technology-centered approach has been to implement automation wherever possible in order to reduce pilot workload and to reap economic benefits such as fuel efficiency and reduced training cost.

References

Braby, C. D., Harris, D., & Muir, H. C. (1993). A psychophysiological approach to the assessment of work underload. Ergonomics, 36, 1035-1042.

Comstock, J. R., & Arnegard, R. J. (1992). The multi-attribute task battery for human operator workload and strategic behaviour research (Tech. Memorandum No. 104174). Hampton, VA: NASA Langley Research Center.

Gopher, D., & Donchin, E. (1986). Workload: An examination of the concept. In K. R. Boff, L. Kaufman, & J. P. Thomas (eds.), Handbook of perception and human performance: Cognitive processes and performance, Vol. II (pp. 41/1-41/44). New York: Wiley.

Hart, S. G., & Staveland, L. E. (1988). Development of the NASA-Task Load Index (NASA-TLX): Results of empirical and theoretical research. In P. A. Hancock & N. Meshkati (eds.), Human mental workload (pp. 139-183). Amsterdam: Elsevier.

Hilburn, B., Jorna, P. G. A. M., & Parasuraman, R. (1995). The effect of advanced ATC automation on mental workload and monitoring performance: An empirical investigation in Dutch airspace. Proceedings of the 8th International Symposium on Aviation Psychology. Columbus, OH: The Ohio State University.

Johannsen, G., Pfendler, C., & Stein, W. (1976). Human performance and workload in simulated landing-approaches with autopilot failure. In T. B. Sheridan & G. Johannsen (eds.), Monitoring behaviour and supervisory control (pp. 83-93). New York: Plenum.

Kramer, A. F. (1991). Physiological metrics of mental workload: A review of recent progress. In D. L. Damos (ed.), Multiple-task performance (pp. 279-328). London: Taylor & Francis.

Lee, J. D., & Moray, N. (1992). Trust, control strategies, and allocation of function in human machine systems. Ergonomics, 35, 1243-1270.

Liu, Y. R., Fuld, R., & Wickens, C. D. (1993). Monitoring behaviour in manual and automated scheduling systems. International Journal of Man-Machine Studies, 39, 1015-1029.

Madigan Jr., E. F., & Tsang, P. S. (1990). A survey of pilot attitudes toward cockpit automation. Proceedings of the 5th Mid Central Ergonomics/Human Factors Conference, Norfolk, VA.

Masalonis, A. J., Duley, J. A., & Parasuraman, R. (1999). Effects of manual and autopilot control on mental workload and vigilance during simulated general aviation flight. Transportation Human Factors, 1, 187-200.

Moray, N. (1988). Mental workload since 1979. In D. Oborne (ed.), International Review of Ergonomics, 2, 123-150.

Nygren, T. E. (1991). Psychometric properties of subjective workload measurement techniques: Implications for their use in the assessment of perceived mental workload. Human Factors, 33, 17-33.

Parasuraman, R. (1987). Human-computer monitoring. Human Factors, 29, 695-706.

Parasuraman, R. (1999). Automation and human performance. In W. Karwowski (ed.), International encyclopedia of ergonomics and human factors. New York: Taylor & Francis.

Parasuraman, R., & Mouloua, M. (1996). Automation and human performance: Theory and applications. Hillsdale, NJ: Lawrence Erlbaum Associates.

Parasuraman, R., & Riley, V. (1997). Humans and automation: Use, misuse, disuse, abuse. Human Factors, 39, 230-253.

Parasuraman, R., Molloy, R., & Singh, I. L. (1993). Performance consequences of automation-induced complacency. International Journal of Aviation Psychology, 3, 1-23.

Parasuraman, R., Mouloua, M., Molloy, R., & Hilburn, B. (1996). Monitoring of automated systems. In R. Parasuraman & M. Mouloua (eds.), Automation and human performance: Theory and applications (pp. 91-115). Hillsdale, NJ: Lawrence Erlbaum Associates.

Singh, I. L., & Parasuraman, R. (2001). Human performance in automated systems. Indian Psychological Abstract and Reviews, 8, 235-276.

Singh, I. L., Molloy, R., & Parasuraman, R. (1993). Automation-induced complacency: Development of the complacency potential rating scale. International Journal of Aviation Psychology, 3, 111-122.

Singh, I. L., Molloy, R., & Parasuraman, R. (1997). Automation-induced monitoring inefficiency: Role of display location. International Journal of Human Computer Studies, 46, 17-46.

Singh, I. L., Parasuraman, R., Deaton, J., & Molloy, R. (1993, November). Adaptive function allocation enhances pilot monitoring performance. Proceedings of the Annual Meeting of the Psychonomic Society, 34th Annual Conference, Washington, DC, USA.

Wickens, C. D., & Kessel, C. (1981). Failure detection in dynamic system. In J. Rasmussen & W. B. Rouse (eds.), Human detection and diagnosis of system failures. New York: Plenum.

Wickens, C. D., & Kramer, A. F. (1985). Engineering psychology. Annual Review of Psychology. New York: Annual Review Inc.

Wickens, C. D., Mavor, A. S., & McGee, J. P. (1997). Flight to the future: Human factors in air traffic control. Washington, DC: National Academy Press.

Wiener, E. L. (1988). Cockpit automation. In E. L. Wiener & D. C. Nagel (eds.), Human factors in aviation (pp. 433-461). San Diego: Academic

Indramani L. Singh, PhD, Reader, Department of Psychology, Banaras Hindu University, Varanasi.
Hari Om Sharma, Department of Psychology, Banaras Hindu University, Varanasi.
Anju L. Singh, Department of Psychology, Banaras Hindu University, Varanasi.

With Best Wishes from

Prasad Psycho Corporation

J-1/58, Dara Nagar, Varanasi - 221 001 (India)

B-39, Gurunanakpura, Laxmi Nagar, New Delhi - 110 092 (India)

Phone: 011- 32903349, Fax: 011-41765277, Mobile: 09810782203

You might also like

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- Strategies ForDocument6 pagesStrategies ForMirza SinanovicNo ratings yet

- G Code 111Document1 pageG Code 111Mirza SinanovicNo ratings yet

- G Code 11Document1 pageG Code 11Mirza SinanovicNo ratings yet

- Strategies For MassDocument7 pagesStrategies For MassMirza SinanovicNo ratings yet

- G Code 111111Document1 pageG Code 111111Mirza SinanovicNo ratings yet

- StrategiesDocument5 pagesStrategiesMirza SinanovicNo ratings yet

- G Code 1111Document2 pagesG Code 1111Mirza SinanovicNo ratings yet

- G Code 1111Document1 pageG Code 1111Mirza SinanovicNo ratings yet

- G Code 111Document1 pageG Code 111Mirza SinanovicNo ratings yet

- Dvodimenzionalni Nizovi - MatriceDocument8 pagesDvodimenzionalni Nizovi - MatriceMirza SinanovicNo ratings yet

- G Code 11Document1 pageG Code 11Mirza SinanovicNo ratings yet

- G Code 111111Document1 pageG Code 111111Mirza SinanovicNo ratings yet

- New Doc 2017-09-19 PDFDocument1 pageNew Doc 2017-09-19 PDFMirza SinanovicNo ratings yet

- New Doc 2017-09-20 PDFDocument57 pagesNew Doc 2017-09-20 PDFMirza SinanovicNo ratings yet

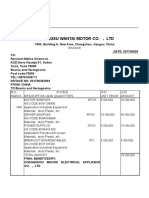

- Jiangsu Wantai Motor Co LTDDocument1 pageJiangsu Wantai Motor Co LTDMirza SinanovicNo ratings yet

- Disp ModulDocument8 pagesDisp ModulMirza SinanovicNo ratings yet

- INVOICE FlattenedDocument1 pageINVOICE FlattenedMirza SinanovicNo ratings yet

- FL038XBH15NCJLTDocument3 pagesFL038XBH15NCJLTDušan JovanovićNo ratings yet

- 14cfr60 11Document1 page14cfr60 11Mirza SinanovicNo ratings yet

- Letová Příručka KluzákuDocument31 pagesLetová Příručka KluzákuMirza SinanovicNo ratings yet

- Planos Router CNCDocument53 pagesPlanos Router CNCMiguel JaquetNo ratings yet

- Jiangsu Wantai Motor Co LTDDocument1 pageJiangsu Wantai Motor Co LTDMirza SinanovicNo ratings yet

- Jiangsu Wantai Motor Co LTDDocument1 pageJiangsu Wantai Motor Co LTDMirza SinanovicNo ratings yet

- Glider Cross Country OutlandingDocument2 pagesGlider Cross Country OutlandingMirza SinanovicNo ratings yet

- Federal Aviation Administration, DOT 60.15Document1 pageFederal Aviation Administration, DOT 60.15Mirza SinanovicNo ratings yet

- Sustainable DevelopmentDocument26 pagesSustainable DevelopmentDimas Haryo Adi PrakosoNo ratings yet

- Flight Simulator Kvalifikacijski StandardiDocument1 pageFlight Simulator Kvalifikacijski StandardiMirza SinanovicNo ratings yet

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)
