
LECT - 2. Source Coding

Uploaded by

biruke6

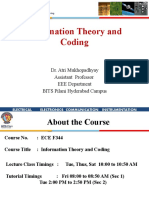

Graduate Program

Department of Electrical and Computer Engineering

Chapter 2: Source Coding

Sem. I, 2012/13

Overview

Source coding and mathematical models

Logarithmic measure of information

Average mutual information

Digital Communications Chapter 2: Source Coding


Source Coding

Information sources may be analog or discrete (digital)

Analog sources: audio or video

Discrete sources: computers and storage devices such as magnetic or optical devices

Whatever the nature of the information source, digital communication systems are designed to transmit information in digital form

Thus the output of the source must be converted into a format that can be transmitted digitally


This conversion is generally performed by the source encoder, whose output may generally be assumed to be a sequence of binary digits

Encoding is based on mathematical models of information sources and on a quantitative measure of the information emitted by a source

We shall first develop mathematical models for information sources


Mathematical Models for Information Sources

Any information source produces an output that is random and that can be characterized in statistical terms

A simple discrete source emits a sequence of letters from a finite alphabet

Example: A binary source produces a binary sequence such as 1011000101100; alphabet: {0, 1}

Generally, a discrete information source with an alphabet of $L$ possible letters produces a sequence of letters selected from this alphabet

Assume that each letter $x_k$ of the alphabet has a probability of occurrence $p_k$ such that

$$p_k = P\{X = x_k\}, \quad 1 \le k \le L, \qquad \text{and} \qquad \sum_{k=1}^{L} p_k = 1$$
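The letter-probability model above can be illustrated with a minimal Python sketch. It assumes that successive letters are drawn independently, which the slide itself does not state at this point, and the function name `emit_source_letters`, the three-letter alphabet and the seed are hypothetical choices made only for illustration.

```python
import random
from collections import Counter


def emit_source_letters(alphabet, probs, length, seed=0):
    """Draw `length` letters from `alphabet`, letter k having probability probs[k].

    Assumption: letters are drawn independently of each other.
    """
    if len(alphabet) != len(probs):
        raise ValueError("one probability is needed per letter")
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("letter probabilities must sum to 1")
    rng = random.Random(seed)
    return rng.choices(alphabet, weights=probs, k=length)


# A binary source with alphabet {0, 1}, as in the slide's example.
print("".join(emit_source_letters(["0", "1"], [0.5, 0.5], 13)))

# A hypothetical three-letter source: relative frequencies approach p_k.
sample = emit_source_letters(["a", "b", "c"], [0.5, 0.3, 0.2], 10_000)
counts = Counter(sample)
for letter in "abc":
    print(letter, counts[letter] / len(sample))
```

For long sequences the printed relative frequencies should lie close to the assumed $p_k$, which is what the statistical characterization of the source means in practice.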


Two Source Models

1. Discrete sources

Discrete memoryless source (DMS): the output letters of the source are statistically independent

The current output does not depend on any of the past outputs

Stationary source: the discrete source outputs are statistically dependent but statistically stationary

That is, two sequences of length $n$, $(a_1, a_2, \ldots, a_n)$ and $(a_{1+m}, a_{2+m}, \ldots, a_{n+m})$, have identical joint probabilities for all $n \ge 1$ and for all shifts $m$

2. Analog sources

An analog source has an output waveform $x(t)$ that is a sample function of a stochastic process $X(t)$, where we assume $X(t)$ is a stationary process with autocorrelation $R_{xx}(\tau)$ and power spectral density $\Phi_{xx}(f)$


Mathematical Models

When $X(t)$ is a bandlimited stochastic process such that $\Phi_{xx}(f) = 0$ for $|f| > W$, the sampling theorem gives

$$X(t) = \sum_{n=-\infty}^{\infty} X\!\left(\frac{n}{2W}\right) \frac{\sin\left[2\pi W\left(t - \frac{n}{2W}\right)\right]}{2\pi W\left(t - \frac{n}{2W}\right)}$$

where $\{X(n/2W)\}$ denotes the samples of the process taken at the sampling rate (Nyquist rate) of $f_s = 2W$ samples/s

Thus, by applying the sampling theorem, we may convert the output of an analog source into an equivalent discrete-time source
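The sampling-theorem reconstruction can be tried numerically. The sketch below is only an illustration under stated assumptions: the bandwidth `W`, the test tone `f0`, and the truncation of the infinite sum to 801 samples are hypothetical choices, and a deterministic cosine stands in for a sample function of the process.

```python
import math


def sinc_reconstruct(samples, n_start, W, t):
    """Approximate X(t) from the samples X(n/2W), n = n_start, n_start + 1, ...

    The infinite sum of the sampling theorem is truncated to the samples given.
    """
    total = 0.0
    for offset, x_n in enumerate(samples):
        n = n_start + offset
        arg = 2 * math.pi * W * (t - n / (2 * W))
        # sin(arg)/arg tends to 1 as arg tends to 0
        kernel = 1.0 if arg == 0.0 else math.sin(arg) / arg
        total += x_n * kernel
    return total


W = 4.0    # assumed bandwidth in Hz, so the sampling rate is 2W = 8 samples/s
f0 = 1.0   # assumed test tone, strictly inside the band |f| < W
n_start = -400
samples = [math.cos(2 * math.pi * f0 * n / (2 * W)) for n in range(n_start, 401)]

t = 0.3    # a time instant that falls between two sampling instants
approx = sinc_reconstruct(samples, n_start, W, t)
exact = math.cos(2 * math.pi * f0 * t)
print(approx, exact)
```

The two printed values agree only approximately because the sum is truncated; using more samples on each side of `t` reduces the gap.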


The output is statistically characterized by the joint pdf $p(x_1, x_2, \ldots, x_m)$ for all $m \ge 1$, where $x_n = X(n/2W)$, $1 \le n \le m$, are the random variables corresponding to the samples of $X(t)$

Note that the samples $\{X(n/2W)\}$ are in general continuous-valued and cannot be represented digitally without loss of precision

Quantization may be used so that each sample takes a discrete value, but it introduces distortion (more on this later)
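As a rough illustration of the distortion that quantization introduces, the following sketch rounds each sample to the nearest multiple of a step size. The uniform rounding rule, the step size and the sample values are assumptions made for the example; the slides do not specify a particular quantizer here.

```python
def quantize_uniform(x, step):
    """Round x to the nearest multiple of `step` (an assumed uniform quantizer)."""
    return step * round(x / step)


samples = [0.12, -0.57, 0.93, 0.40]   # hypothetical continuous-valued samples
step = 0.25                           # assumed quantization step

quantized = [quantize_uniform(x, step) for x in samples]
errors = [x - q for x, q in zip(samples, quantized)]

for x, q, e in zip(samples, quantized, errors):
    print(f"sample {x:+.2f} -> level {q:+.2f}, error {e:+.3f}")
```

Each error is bounded in magnitude by half the step, so a smaller step lowers the distortion at the cost of more levels to encode.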


Logarithmic Measure of Information

Consider two discrete random variables $X$ and $Y$ with possible outcomes $X \in \{x_1, x_2, \ldots, x_n\}$ and $Y \in \{y_1, y_2, \ldots, y_m\}$

Suppose we observe $Y = y_j$ and wish to determine, quantitatively, the amount of information that $Y = y_j$ provides about the event $X = x_i$, $i = 1, 2, \ldots, n$

Note that if $X$ and $Y$ are statistically independent, $Y = y_j$ provides no information about the occurrence of $X = x_i$

If they are fully dependent, $Y = y_j$ determines the occurrence of $X = x_i$

In that case the information content is simply that provided by the event $X = x_i$


A suitable measure that satisfies these conditions is the logarithm of the ratio of the conditional probability $P\{X = x_i \mid Y = y_j\} = p(x_i \mid y_j)$ to the probability $P\{X = x_i\} = p(x_i)$

The information content provided by the occurrence of $Y = y_j$ about the event $X = x_i$ is defined as

$$I(x_i; y_j) = \log \frac{p(x_i \mid y_j)}{p(x_i)}$$

$I(x_i; y_j)$ is called the mutual information between $x_i$ and $y_j$

The unit of the information measure is the nat if the natural logarithm is used and the bit if base 2 is used

Note that $\ln a = \ln 2 \cdot \log_2 a = 0.69315 \log_2 a$


If $X$ and $Y$ are independent, $p(x_i \mid y_j) = p(x_i)$ and $I(x_i; y_j) = 0$

If the occurrence of $Y = y_j$ uniquely determines the occurrence of $X = x_i$, then $p(x_i \mid y_j) = 1$ and

$$I(x_i; y_j) = \log \frac{1}{p(x_i)}$$

i.e., $I(x_i; y_j) = -\log p(x_i) = I(x_i)$, the self-information of the event $X = x_i$

Note that a high-probability event conveys less information than a low-probability event

For a single event $x$ with $p(x) = 1$, $I(x) = 0$: the occurrence of a sure event does not convey any information


Example

1. A binary source emits either 0 or 1 every $\tau_s$ seconds with equal probability. The information content of each output is then given by

$$I(x_i) = -\log_2 p(x_i) = -\log_2 \tfrac{1}{2} = 1 \text{ bit}, \quad x_i = 0, 1$$

2. Suppose successive outputs are statistically independent (DMS) and consider a block of $k$ binary digits from the source in a time interval $k\tau_s$

The number of possible $k$-bit blocks is $2^k = M$, each of which is equally probable with probability $1/M = 2^{-k}$

$$I(x_i) = -\log_2 2^{-k} = k \text{ bits in } k\tau_s \text{ seconds}$$

(Note the additive property: the $k$ independent outputs contribute 1 bit each)
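The two example calculations can be checked with a few lines of Python. This is a minimal sketch; the function names `self_information_bits` and `bits_to_nats` and the block length `k = 8` are illustrative choices, not part of the lecture.

```python
import math


def self_information_bits(p):
    """Self-information -log2(p), in bits, of an event with probability p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must lie in (0, 1]")
    return math.log2(1.0 / p)


def bits_to_nats(bits):
    """Convert bits to nats using ln a = ln 2 * log2 a."""
    return bits * math.log(2.0)


# 1. One output of the equiprobable binary source.
print(self_information_bits(0.5))

# 2. One block of k independent equiprobable binary digits.
k = 8
print(self_information_bits(2.0 ** -k))

# Additive property: k outputs of 1 bit each give the same total.
print(sum(self_information_bits(0.5) for _ in range(k)))

# The same single-output information expressed in nats.
print(bits_to_nats(self_information_bits(0.5)))
```

A sure event, `self_information_bits(1.0)`, evaluates to zero, in line with the remark that a certain outcome conveys no information.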


Now consider the following relationships

$$I(x_i; y_j) = \log \frac{p(x_i \mid y_j)}{p(x_i)} = \log \frac{p(x_i \mid y_j)\, p(y_j)}{p(x_i)\, p(y_j)} = \log \frac{p(x_i, y_j)}{p(x_i)\, p(y_j)}$$

and since

$$p(y_j \mid x_i) = \frac{p(x_i, y_j)}{p(x_i)}$$

we obtain

$$I(x_i; y_j) = \log \frac{p(y_j \mid x_i)}{p(y_j)} = I(y_j; x_i)$$

The information provided by $y_j$ about $x_i$ is the same as that provided by $x_i$ about $Y = y_j$


Using the same procedure as before, we can also define the conditional self-information as

$$I(x_i \mid y_j) = \log_2 \frac{1}{p(x_i \mid y_j)} = -\log_2 p(x_i \mid y_j)$$

Now consider the following relationship

$$\log_2 p(x_i \mid y_j) = \log_2 \frac{p(x_i \mid y_j)\, p(x_i)}{p(x_i)} = I(x_i; y_j) - I(x_i)$$

which leads to

$$I(x_i; y_j) = I(x_i) - I(x_i \mid y_j)$$

Note that $I(x_i \mid y_j)$ is the self-information about $X = x_i$ after having observed the event $Y = y_j$


Further, note that

$$I(x_i) \ge 0 \quad \text{and} \quad I(x_i \mid y_j) \ge 0$$

and thus

$$I(x_i; y_j) > 0 \ \text{when} \ I(x_i) > I(x_i \mid y_j), \qquad I(x_i; y_j) < 0 \ \text{when} \ I(x_i) < I(x_i \mid y_j)$$

indicating that the mutual information between two events can be either positive or negative
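A small numerical check may make the sign behaviour concrete. The sketch below assumes a hypothetical 2 x 2 joint pmf stored as a nested list `joint[i][j]` standing for $p(x_i, y_j)$; the function names are illustrative, and all logarithms are taken to base 2.

```python
import math


def self_info(joint, i):
    """I(x_i) = -log2 p(x_i), with p(x_i) obtained by summing row i."""
    return -math.log2(sum(joint[i]))


def conditional_self_info(joint, i, j):
    """I(x_i | y_j) = -log2 p(x_i | y_j)."""
    p_y = sum(row[j] for row in joint)
    return -math.log2(joint[i][j] / p_y)


def pointwise_mi(joint, i, j):
    """I(x_i; y_j) = log2 [ p(x_i, y_j) / (p(x_i) p(y_j)) ]."""
    p_x = sum(joint[i])
    p_y = sum(row[j] for row in joint)
    return math.log2(joint[i][j] / (p_x * p_y))


# Hypothetical joint pmf: X and Y agree with probability 0.8.
joint = [[0.4, 0.1],
         [0.1, 0.4]]

for i in range(2):
    for j in range(2):
        mi = pointwise_mi(joint, i, j)
        diff = self_info(joint, i) - conditional_self_info(joint, i, j)
        print(f"I(x{i}; y{j}) = {mi:+.4f} bits, I(x) - I(x|y) = {diff:+.4f} bits")
```

For this pmf the matching pairs give a positive value and the mismatched pairs a negative one, because observing the mismatched $y_j$ makes $x_i$ less likely than it was a priori.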


Average Mutual Information

The average mutual information between $X$ and $Y$ is given by

$$I(X; Y) = \sum_{i=1}^{n} \sum_{j=1}^{m} p(x_i, y_j)\, I(x_i; y_j) = \sum_{i=1}^{n} \sum_{j=1}^{m} p(x_i, y_j) \log_2 \frac{p(x_i, y_j)}{p(x_i)\, p(y_j)} \ge 0$$

Similarly, the average self-information is given by

$$H(X) = \sum_{i=1}^{n} p(x_i)\, I(x_i) = -\sum_{i=1}^{n} p(x_i) \log_2 p(x_i)$$

where $X$ represents the alphabet of possible output letters from a source

$H(X)$ represents the average self-information per source letter and is called the entropy of the source
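Both averages are straightforward to compute from a probability table. The following is a minimal sketch, assuming the joint pmf is a nested list `joint[i][j]` for $p(x_i, y_j)$ and adopting the usual convention that terms with zero probability contribute nothing; the example tables are hypothetical.

```python
import math


def entropy(pmf):
    """H(X) = -sum p(x_i) log2 p(x_i), in bits; zero-probability terms are skipped."""
    return -sum(p * math.log2(p) for p in pmf if p > 0)


def mutual_information(joint):
    """I(X; Y) in bits from joint[i][j] = p(x_i, y_j)."""
    p_x = [sum(row) for row in joint]
    p_y = [sum(col) for col in zip(*joint)]
    total = 0.0
    for i, row in enumerate(joint):
        for j, p_xy in enumerate(row):
            if p_xy > 0:
                total += p_xy * math.log2(p_xy / (p_x[i] * p_y[j]))
    return total


print(entropy([0.5, 0.5]))            # equiprobable binary source
print(entropy([0.7, 0.2, 0.1]))       # a hypothetical skewed three-letter source

independent = [[0.25, 0.25], [0.25, 0.25]]
dependent = [[0.5, 0.0], [0.0, 0.5]]
print(mutual_information(independent))
print(mutual_information(dependent))
```

The independent table gives zero average mutual information, while the fully dependent table gives the full entropy of $X$.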


If $p(x_i) = 1/n$ for all $i$, then the entropy of the source becomes

$$H(X) = -\sum_{i=1}^{n} \frac{1}{n} \log_2 \frac{1}{n} = \log_2 n$$

In general, $H(X) \le \log_2 n$ for any given set of source letter probabilities

Thus, the entropy of a discrete source is maximum when the output letters are all equally probable


Consider a binary source where the letters {0, 1} are independent with probabilities $p(x_0 = 0) = q$ and $p(x_1 = 1) = 1 - q$

The entropy of the source is given by

$$H(X) = -q \log_2 q - (1 - q) \log_2 (1 - q) \equiv H(q)$$

whose plot as a function of $q$ is the binary entropy function

[Figure: Binary Entropy Function, $H(q)$ versus $q$]
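The shape of the binary entropy function can be recovered by tabulating a few values. This is a minimal sketch; the function name `binary_entropy` and the chosen grid of $q$ values are illustrative, and $0 \log_2 0$ is taken as 0 at the end points.

```python
import math


def binary_entropy(q):
    """H(q) = -q log2 q - (1 - q) log2 (1 - q), in bits, with H(0) = H(1) = 0."""
    if q < 0.0 or q > 1.0:
        raise ValueError("q must lie in [0, 1]")
    if q == 0.0 or q == 1.0:
        return 0.0
    return -q * math.log2(q) - (1.0 - q) * math.log2(1.0 - q)


for q in [0.0, 0.1, 0.25, 0.5, 0.75, 0.9, 1.0]:
    print(f"q = {q:4.2f}   H(q) = {binary_entropy(q):.4f} bits")
```

The table is symmetric about $q = 1/2$, where the function reaches its maximum of 1 bit, which agrees with the statement that entropy is largest for equally probable letters.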


In a similar manner as above, we can define the average conditional self-information, or conditional entropy, as follows

$$H(X \mid Y) = \sum_{i=1}^{n} \sum_{j=1}^{m} p(x_i, y_j) \log_2 \frac{1}{p(x_i \mid y_j)}$$

Conditional entropy is the information or uncertainty in $X$ after $Y$ is observed

From the definition of average mutual information one can show that

$$I(X; Y) = \sum_{i=1}^{n} \sum_{j=1}^{m} p(x_i, y_j) \left[ \log_2 p(x_i \mid y_j) - \log_2 p(x_i) \right] = -H(X \mid Y) + H(X)$$


Thus $I(X; Y) = H(X) - H(X \mid Y)$ and, since $I(X; Y) \ge 0$, $H(X) \ge H(X \mid Y)$, with equality when $X$ and $Y$ are independent

$H(X \mid Y)$ is called the equivocation

It is interpreted as the average uncertainty remaining in $X$ after observation of $Y$

$H(X)$: average uncertainty prior to observation

$I(X; Y)$: average information provided about the set $X$ by the observation of the set $Y$
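The relation between entropy, equivocation and average mutual information can be verified numerically. The sketch below assumes a hypothetical joint pmf in which $Y$ differs from an equiprobable binary $X$ with probability 0.1; the table and the function names are illustrative only.

```python
import math


def entropy(pmf):
    """H(X) in bits; zero-probability terms are skipped."""
    return -sum(p * math.log2(p) for p in pmf if p > 0)


def conditional_entropy(joint):
    """H(X | Y) in bits from joint[i][j] = p(x_i, y_j)."""
    p_y = [sum(col) for col in zip(*joint)]
    total = 0.0
    for row in joint:
        for j, p_xy in enumerate(row):
            if p_xy > 0:
                total -= p_xy * math.log2(p_xy / p_y[j])   # p(x_i | y_j) = p_xy / p(y_j)
    return total


def mutual_information(joint):
    """I(X; Y) in bits, computed directly from the double sum."""
    p_x = [sum(row) for row in joint]
    p_y = [sum(col) for col in zip(*joint)]
    return sum(
        p_xy * math.log2(p_xy / (p_x[i] * p_y[j]))
        for i, row in enumerate(joint)
        for j, p_xy in enumerate(row)
        if p_xy > 0
    )


joint = [[0.45, 0.05],
         [0.05, 0.45]]
h_x = entropy([sum(row) for row in joint])
h_x_given_y = conditional_entropy(joint)

print("H(X)          =", h_x)
print("H(X|Y)        =", h_x_given_y)
print("H(X) - H(X|Y) =", h_x - h_x_given_y)
print("I(X;Y)        =", mutual_information(joint))
```

The last two printed values coincide up to rounding, and the equivocation is smaller than $H(X)$ because the observation of $Y$ removes part of the uncertainty about $X$.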


The above results can be generalized to more than two random variables

Suppose we have a block of $k$ random variables $X_1, X_2, \ldots, X_k$ with joint probability $p(x_1, x_2, \ldots, x_k)$, where $X_i$ takes one of $n_i$ possible values; the entropy of the block is then given by

$$H(X_1 X_2 \cdots X_k) = -\sum_{j_1=1}^{n_1} \sum_{j_2=1}^{n_2} \cdots \sum_{j_k=1}^{n_k} p(x_{j_1}, x_{j_2}, \ldots, x_{j_k}) \log_2 p(x_{j_1}, x_{j_2}, \ldots, x_{j_k})$$
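The block-entropy sum can be evaluated by brute force for small blocks. The sketch below makes a simplifying assumption that the general formula does not require: the $k$ letters are independent and identically distributed (a DMS), so the joint probability of a block is the product of the letter probabilities. The pmf and block length are hypothetical.

```python
import itertools
import math


def entropy(pmf):
    """Per-letter entropy H(X) in bits."""
    return -sum(p * math.log2(p) for p in pmf if p > 0)


def block_entropy_dms(pmf, k):
    """Entropy in bits of a block of k letters from a memoryless source.

    Assumption: letters are independent, so p(block) is a product of letter
    probabilities. The sum runs over all len(pmf)**k possible blocks.
    """
    total = 0.0
    for block in itertools.product(pmf, repeat=k):
        p_block = math.prod(block)
        if p_block > 0:
            total -= p_block * math.log2(p_block)
    return total


pmf = [0.5, 0.3, 0.2]   # hypothetical letter probabilities
k = 3
print(block_entropy_dms(pmf, k))
print(k * entropy(pmf))
```

Under the independence assumption the two printed values agree, that is, the block entropy equals $k$ times the per-letter entropy; for a source with memory the block entropy would be smaller.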


Continuous Random Variables - Information Measure

If $X$ and $Y$ are continuous random variables with joint pdf $f(x, y)$ and marginal pdfs $f(x)$ and $f(y)$, the average mutual information between $X$ and $Y$ is defined as

$$I(X; Y) = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} f(x)\, f(y \mid x) \log \frac{f(y \mid x)\, f(x)}{f(x)\, f(y)} \, dx \, dy$$

The concept of self-information does not carry over exactly to continuous random variables, since a continuous random variable would require an infinite number of binary digits to represent it exactly, which makes its entropy infinite


We can, however, define the differential entropy of a continuous random variable as

$$H(X) = -\int_{-\infty}^{\infty} f(x) \log f(x) \, dx$$

and the average conditional entropy as

$$H(X \mid Y) = -\int_{-\infty}^{\infty} \int_{-\infty}^{\infty} f(x, y) \log f(x \mid y) \, dx \, dy$$

The average mutual information is then given by $I(X; Y) = H(X) - H(X \mid Y)$ or $I(X; Y) = H(Y) - H(Y \mid X)$
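Differential entropy can be estimated by numerical integration. The sketch below is an illustration under stated assumptions: a zero-mean Gaussian pdf is chosen as the example density (the slides do not single out a distribution), the integral is truncated to ten standard deviations on each side, and a simple midpoint rule is used. The known closed form for a Gaussian, $\tfrac{1}{2} \log_2 (2\pi e \sigma^2)$, serves as a reference.

```python
import math


def gaussian_pdf(x, sigma):
    """Zero-mean Gaussian density with standard deviation sigma."""
    return math.exp(-x * x / (2.0 * sigma * sigma)) / (sigma * math.sqrt(2.0 * math.pi))


def differential_entropy_bits(pdf, lo, hi, steps=20000):
    """Midpoint-rule estimate of -integral f(x) log2 f(x) dx over [lo, hi]."""
    dx = (hi - lo) / steps
    total = 0.0
    for n in range(steps):
        f = pdf(lo + (n + 0.5) * dx)
        if f > 0:
            total -= f * math.log2(f) * dx
    return total


sigma = 0.1   # assumed standard deviation
numeric = differential_entropy_bits(lambda x: gaussian_pdf(x, sigma), -10 * sigma, 10 * sigma)
closed_form = 0.5 * math.log2(2.0 * math.pi * math.e * sigma ** 2)
print(numeric, closed_form)
```

For this small $\sigma$ the result is negative, which highlights that differential entropy is not an absolute measure of information in the way discrete entropy is.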


If $X$ is discrete and $Y$ is continuous, the density of $Y$ is expressed as

$$f(y) = \sum_{i=1}^{n} f(y \mid x_i)\, p(x_i)$$

The mutual information about $X = x_i$ provided by the occurrence of the event $Y = y$ is given by

$$I(x_i; y) = \log \frac{f(y \mid x_i)\, p(x_i)}{f(y)\, p(x_i)} = \log \frac{f(y \mid x_i)}{f(y)}$$

The average mutual information between $X$ and $Y$ is

$$I(X; Y) = \sum_{i=1}^{n} \int_{-\infty}^{\infty} f(y \mid x_i)\, p(x_i) \log \frac{f(y \mid x_i)}{f(y)} \, dy$$
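The mixed discrete/continuous formula can be evaluated numerically for a concrete case. The sketch below assumes, purely for illustration, that $X$ takes the two levels $-1$ and $+1$ with equal probability and that $Y$ is $X$ plus zero-mean Gaussian noise of standard deviation `sigma`; neither the levels nor the noise model come from the slides, and the integral is truncated and approximated by a midpoint rule.

```python
import math


def gaussian_pdf(y, mean, sigma):
    """Gaussian density, used here as the assumed conditional pdf f(y | x_i)."""
    return math.exp(-((y - mean) ** 2) / (2.0 * sigma * sigma)) / (sigma * math.sqrt(2.0 * math.pi))


def mutual_information_bits(levels, priors, sigma, steps=20000):
    """I(X; Y) = sum_i integral f(y|x_i) p(x_i) log2[f(y|x_i) / f(y)] dy."""
    lo = min(levels) - 10.0 * sigma
    hi = max(levels) + 10.0 * sigma
    dy = (hi - lo) / steps
    total = 0.0
    for n in range(steps):
        y = lo + (n + 0.5) * dy
        cond = [gaussian_pdf(y, x, sigma) for x in levels]    # f(y | x_i)
        f_y = sum(c * p for c, p in zip(cond, priors))        # f(y) = sum_i f(y | x_i) p(x_i)
        for c, p in zip(cond, priors):
            if c > 0 and p > 0:
                total += c * p * math.log2(c / f_y) * dy
    return total


levels = [-1.0, 1.0]
priors = [0.5, 0.5]
for sigma in [0.1, 0.5, 1.0, 5.0]:
    print(f"sigma = {sigma:3.1f}   I(X;Y) = {mutual_information_bits(levels, priors, sigma):.4f} bits")
```

With little noise the observation almost determines $X$ and the result approaches $H(X) = 1$ bit; with heavy noise it falls towards zero.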
