Tugas Fismat

Uploaded by

Sudrajat Saputra

9. MAXIMUM AND MINIMUM PROBLEMS WITH CONSTRAINTS; LAGRANGE MULTIPLIERS

Example 1. A wire is bent to fit the curve $y = 1 - x^2$ (Figure 9.1). A string is stretched from the origin to a point $(x, y)$ on the curve. Find $(x, y)$ to minimize the length of the string.

[Figure 9.1: the wire curve in the $xy$-plane, with the string running from the origin to the point $(x, y)$.]

We want to minimize the distance $d = \sqrt{x^2 + y^2}$ from the origin to the point $(x, y)$; this is equivalent to minimizing $f = d^2 = x^2 + y^2$. But $x$ and $y$ are not independent: they are related by the equation of the curve. This extra relation between the variables is what we mean by a constraint. Problems involving constraints occur frequently in applications. There are several ways to do a problem like this: (a) elimination, (b) implicit differentiation, (c) Lagrange multipliers.

(c) Lagrange Multipliers

However, methods (a) and (b) can involve an enormous amount of algebra. We can shortcut the algebra by a process known as the method of Lagrange multipliers or undetermined multipliers. We want to consider a problem like the one in (a) or (b). In general, we want to find the maximum or minimum of a function $f(x, y)$, where $x$ and $y$ are related by an equation $\phi(x, y) = \text{const.}$ Then $f$ is really a function of one variable (say $x$). To find the maximum or minimum points of $f$, we set $df/dx = 0$ or $df = 0$ as in (9.1). Since $\phi(x, y) = \text{const.}$, we get $d\phi = 0$:

$$
df = \frac{\partial f}{\partial x}\,dx + \frac{\partial f}{\partial y}\,dy = 0, \qquad
d\phi = \frac{\partial \phi}{\partial x}\,dx + \frac{\partial \phi}{\partial y}\,dy = 0. \tag{9.2}
$$

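Multiply the $d\phi$ equation by $\lambda$ (this is the undetermined multiplier; we shall find its value later) and add it to the $df$ equation; then we have

$$
\left(\frac{\partial f}{\partial x} + \lambda\,\frac{\partial \phi}{\partial x}\right) dx
+ \left(\frac{\partial f}{\partial y} + \lambda\,\frac{\partial \phi}{\partial y}\right) dy = 0. \tag{9.3}
$$

We now pick $\lambda$ so that

$$
\frac{\partial f}{\partial y} + \lambda\,\frac{\partial \phi}{\partial y} = 0. \tag{9.4}
$$

Then from (9.3) and (9.4) we have

$$
\frac{\partial f}{\partial x} + \lambda\,\frac{\partial \phi}{\partial x} = 0. \tag{9.5}
$$

Note that equations (9.4) and (9.5) are exactly the equations we would write if we had a function

$$
F(x, y) = f(x, y) + \lambda\,\phi(x, y) \tag{9.6}
$$

of two independent variables $x$ and $y$ and wanted to find its maximum and minimum values.

Thus we can state the method of Lagrange multipliers in the following way:

(9.7) To find the maximum or minimum values of $f(x, y)$ when $x$ and $y$ are related by the equation $\phi(x, y) = \text{const.}$, form the function $F(x, y)$ as in (9.6) and set the two partial derivatives of $F$ equal to zero [equations (9.4) and (9.5)]. Then solve these two equations and the equation $\phi(x, y) = \text{const.}$ for the three unknowns $x$, $y$, and $\lambda$.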

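As a simple illustration of the method we shall do the problem of Example 1 by Lagrange multipliers. Here

$$
f(x, y) = x^2 + y^2, \qquad \phi(x, y) = y + x^2 = 1, \qquad
F(x, y) = f + \lambda\phi = x^2 + y^2 + \lambda\,(y + x^2),
$$

and we write the equations to minimize $F$, namely

$$
\frac{\partial F}{\partial x} = 2x + \lambda \cdot 2x = 0, \qquad
\frac{\partial F}{\partial y} = 2y + \lambda = 0. \tag{9.8}
$$

We solve these simultaneously with the $\phi$ equation $y + x^2 = 1$. From the first equation in (9.8), either $x = 0$ or $\lambda = -1$. If $x = 0$, then $y = 1$ from the $\phi$ equation (and $\lambda = -2$). If $\lambda = -1$, the second equation gives $y = \tfrac{1}{2}$, and then the $\phi$ equation gives $x^2 = \tfrac{1}{2}$. These are the same values we had before. The method offers nothing new in testing whether we have found a maximum or a minimum, so we shall not repeat that work; if it is possible to see from the geometry or the physics what we have found, we don't bother to test.

The same method applies with more variables. To find the maximum and minimum values of $f(x, y, z)$ if $\phi(x, y, z) = \text{const.}$, we form the function $F = f + \lambda\phi$ and set the three partial derivatives of $F$ equal to zero. We solve these equations and the equation $\phi = \text{const.}$ for $x$, $y$, $z$, and $\lambda$. (For a problem with still more variables there are more equations, but no change in method.)

To find the maximum or minimum of $f$ subject to the conditions $\phi_1 = \text{const.}$ and $\phi_2 = \text{const.}$, define $F = f + \lambda_1\phi_1 + \lambda_2\phi_2$ and set each of the partial derivatives of $F$ equal to zero. Solve these equations and the $\phi$ equations for the variables and the $\lambda$'s.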
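The solution of Example 1 can be checked numerically. The sketch below assumes the curve is $y = 1 - x^2$ (consistent with the values $x = 0$, $y = 1$ quoted in the text) and uses only the Python standard library. The function names `lagrange_residuals` and `scan_minimum` are illustrative choices, not part of the text. It confirms that each candidate point satisfies the Lagrange conditions, then cross-checks the minimum by plain elimination on a grid.

```python
import math

def f(x, y):
    """Squared distance from the origin (the quantity to minimize)."""
    return x * x + y * y

def phi(x, y):
    """Constraint function; the assumed curve is phi(x, y) = 1."""
    return y + x * x

def lagrange_residuals(x, y, lam):
    """Left-hand sides of dF/dx = 0, dF/dy = 0, phi - 1 = 0 for F = f + lam*phi."""
    dF_dx = 2 * x + lam * 2 * x
    dF_dy = 2 * y + lam
    return (dF_dx, dF_dy, phi(x, y) - 1.0)

# Candidates from the text: x = 0 with lambda = -2, or lambda = -1 with y = 1/2.
candidates = [
    (0.0, 1.0, -2.0),
    (math.sqrt(0.5), 0.5, -1.0),
    (-math.sqrt(0.5), 0.5, -1.0),
]

def scan_minimum(n=20001, lo=-2.0, hi=2.0):
    """Method (a), elimination: scan f(x, 1 - x^2) on a uniform grid in x."""
    best_x, best_val = lo, f(lo, 1 - lo * lo)
    for i in range(n):
        x = lo + (hi - lo) * i / (n - 1)
        val = f(x, 1 - x * x)
        if val < best_val:
            best_x, best_val = x, val
    return best_x, best_val

if __name__ == "__main__":
    for x, y, lam in candidates:
        res = lagrange_residuals(x, y, lam)
        print(f"x={x:+.4f} y={y:.4f} lambda={lam:+.1f} residuals={res} f={f(x, y):.4f}")
    print("grid minimum:", scan_minimum())
```

The residuals vanish (to rounding) at all three candidates, and comparing the values of $f$ shows which candidate is the minimum: the Lagrange conditions alone do not distinguish maxima from minima.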

10. ENDPOINT OR BOUNDARY POINT PROBLEMS

So far we have been assuming that if there is a maximum or minimum point, calculus will find it. Some simple examples (see Figures 10.1 to 10.4) show that this may not be true. Suppose, in a given problem, $x$ can have values only between 0 and 1; this sort of restriction occurs frequently in applications. For example, if $x = |\cos\theta|$, the graph of a function $f(x)$ may exist for all real $x$, but it has no meaning if $x = |\cos\theta|$ except for $x$ between 0 and 1. As another example, suppose $x$ is the length of a rectangle whose perimeter is 2; then $x < 0$ is meaningless in this problem since $x$ is a length, and $x > 1$ is impossible because the perimeter is 2.

Let us ask for the largest and smallest values of each of the functions in Figures 10.1 to 10.4 for $0 \le x \le 1$. In Figure 10.1, calculus will give us the minimum point, but the maximum of $f(x)$ for $x$ between 0 and 1 occurs at $x = 1$ and cannot be obtained by calculus, since $f'(x) \ne 0$ there. In Figure 10.2, both the maximum and the minimum of $f(x)$ are at endpoints, the maximum at $x = 0$ and the minimum at $x = 1$. In Figure 10.3 a relative maximum at P and a relative minimum at Q are given by calculus, but the absolute minimum between 0 and 1 occurs at $x = 0$, and the absolute maximum at $x = 1$. Here is a practical example of this sort of function. It is said that geographers used to give the top of a certain hill as the highest point; then it was found that the highest point is on the Alabama border! Figure 10.4 illustrates another way in which calculus may fail to give us a desired maximum or minimum point; here the derivative is discontinuous at the maximum point.

[Figures 10.1 to 10.4: four graphs of $y$ against $x$ on the interval from 0 to 1; Figure 10.3 marks the relative maximum P and the relative minimum Q.]

These are difficulties we must watch out for whenever there is any restriction on the values any of the variables may take (or any discontinuity in the functions or their derivatives). These restrictions are not usually stated in so many words; you have to see them for yourself. For example, if $x^2 + y^2 = 25$, $x$ and $y$ are both between $-5$ and $+5$. If $y^2 = x^2 - 1$, then $|x|$ must be greater than or equal to 1. If $x = \csc\theta$, where $\theta$ is a first-quadrant angle, then $x \ge 1$. If $y = \sqrt{x}$, $y'$ is discontinuous at the origin.
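The practical consequence is that on a restricted interval the endpoints must be compared with the points where the derivative vanishes. A minimal sketch of that bookkeeping follows, using only the Python standard library. The helper name `extrema_on_interval` and the two test functions are hypothetical choices for illustration, not taken from the text. The sketch also assumes a smooth $f$, so it does not handle the discontinuous-derivative case of Figure 10.4.

```python
def extrema_on_interval(f, a, b, n=10000, h=1e-6):
    """Return ((x_min, f_min), (x_max, f_max)) for f on the closed interval [a, b].

    Candidates are the two endpoints plus interior points where a
    central-difference estimate of f'(x) changes sign (refined by bisection).
    """
    def dfdx(x):
        return (f(x + h) - f(x - h)) / (2 * h)

    candidates = [a, b]  # endpoints are always candidates
    xs = [a + (b - a) * i / n for i in range(1, n)]  # interior grid points
    for left, right in zip(xs, xs[1:]):
        dl, dr = dfdx(left), dfdx(right)
        if dl * dr <= 0:  # sign change (or zero) of f' inside [left, right]
            for _ in range(60):
                mid = 0.5 * (left + right)
                dm = dfdx(mid)
                if dl * dm <= 0:
                    right = mid
                else:
                    left, dl = mid, dm
            candidates.append(0.5 * (left + right))

    x_min = min(candidates, key=f)
    x_max = max(candidates, key=f)
    return (x_min, f(x_min)), (x_max, f(x_max))

if __name__ == "__main__":
    # Like Figure 10.1: interior minimum, maximum at the endpoint x = 1.
    print(extrema_on_interval(lambda x: (x - 0.25) ** 2, 0.0, 1.0))
    # Like Figure 10.2: both extremes at endpoints.
    print(extrema_on_interval(lambda x: 1.0 - x, 0.0, 1.0))
```

For the first function, setting $f'(x) = 0$ finds only the minimum near $x = 0.25$; the maximum is picked up solely because $x = 1$ is included as a candidate.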

11. CHANGE OF VARIABLES

One important use of partial differentiation is in making changes of variables (for example, from rectangular to polar coordinates). This may give a simpler expression or a simpler differential equation or one more suited to the physical problem one is doing. For example, if you are working with the vibration of a circular membrane, or the flow of heat in a circular cylinder, polar coordinates are better. Consider the following problems.

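12. DIFFERENTIATION OF INTEGRALS; LEIBNIZ' RULE

According to the definition of an integral as an antiderivative, if

$$
f(x) = \frac{dF(x)}{dx}, \tag{12.1}
$$

then

$$
\int_a^x f(t)\,dt = F(t)\Big|_a^x = F(x) - F(a), \tag{12.2}
$$

where $a$ is a constant. If we differentiate (12.2) with respect to $x$, we have

$$
\frac{d}{dx}\int_a^x f(t)\,dt = \frac{d}{dx}\,[F(x) - F(a)] = \frac{dF(x)}{dx} = f(x) \tag{12.3}
$$

by (12.1). Similarly, $\int_x^a f(t)\,dt = F(a) - F(x)$, so

$$
\frac{d}{dx}\int_x^a f(t)\,dt = -\frac{dF(x)}{dx} = -f(x). \tag{12.4}
$$

By replacing $x$ in (12.3) by $v$, and replacing $x$ in (12.4) by $u$, we can then write

$$
\frac{d}{dv}\int_a^v f(t)\,dt = f(v) \tag{12.5}
$$

and

$$
\frac{d}{du}\int_u^b f(t)\,dt = -f(u). \tag{12.6}
$$

Suppose $u$ and $v$ are functions of $x$ and we want $dI/dx$, where

$$
I = \int_u^v f(t)\,dt.
$$

When the integral is evaluated, the answer depends on the limits $u$ and $v$. Finding $dI/dx$ is then a partial differentiation problem; $I$ is a function of $u$ and $v$, which are functions of $x$. We can write

$$
\frac{dI}{dx} = \frac{\partial I}{\partial u}\frac{du}{dx} + \frac{\partial I}{\partial v}\frac{dv}{dx}. \tag{12.7}
$$

But $\partial I/\partial v$ means to differentiate $I$ with respect to $v$ when $u$ is constant; this is just (12.5), so $\partial I/\partial v = f(v)$. Similarly, $\partial I/\partial u$ means that $v$ is constant and we can use (12.6) to get $\partial I/\partial u = -f(u)$. Then we have

$$
\frac{d}{dx}\int_{u(x)}^{v(x)} f(t)\,dt = f(v)\,\frac{dv}{dx} - f(u)\,\frac{du}{dx}. \tag{12.8}
$$

Finally we may want to find $dI/dx$ when $I = \int_a^b f(x, t)\,dt$, where $a$ and $b$ are constants. Under not too restrictive conditions,

$$
\frac{d}{dx}\int_a^b f(x, t)\,dt = \int_a^b \frac{\partial f(x, t)}{\partial x}\,dt. \tag{12.9}
$$

It is convenient to collect formulas (12.8) and (12.9) into one formula known as Leibniz' rule:

$$
\frac{d}{dx}\int_{u(x)}^{v(x)} f(x, t)\,dt
= f(x, v)\,\frac{dv}{dx} - f(x, u)\,\frac{du}{dx} + \int_u^v \frac{\partial f}{\partial x}\,dt. \tag{12.10}
$$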
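Leibniz' rule is easy to sanity-check numerically. The sketch below is an illustration under stated assumptions, not part of the original text. The integrand $f(x, t) = e^{xt}$ and the limits $u(x) = x$, $v(x) = x^2$ are hypothetical choices. The integral is approximated with a hand-written composite Simpson rule and $dI/dx$ with a central difference. The code compares a direct numerical derivative of the integral with the right-hand side of the rule: the two boundary terms plus the integral of $\partial f/\partial x$.

```python
import math

def simpson(g, a, b, n=2000):
    """Composite Simpson approximation of the integral of g from a to b (n even)."""
    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3

# Hypothetical example (an assumption for illustration): f(x, t) = exp(x t),
# with limits u(x) = x and v(x) = x^2.
def f(x, t):
    return math.exp(x * t)

def df_dx(x, t):
    return t * math.exp(x * t)

def u(x):
    return x

def v(x):
    return x * x

def du_dx(x):
    return 1.0

def dv_dx(x):
    return 2.0 * x

def I(x):
    """I(x) = integral of f(x, t) dt from u(x) to v(x)."""
    return simpson(lambda t: f(x, t), u(x), v(x))

def leibniz_rhs(x):
    """Right-hand side of Leibniz' rule: boundary terms plus integral of df/dx."""
    boundary = f(x, v(x)) * dv_dx(x) - f(x, u(x)) * du_dx(x)
    interior = simpson(lambda t: df_dx(x, t), u(x), v(x))
    return boundary + interior

def numeric_derivative(x, h=1e-5):
    """Central-difference estimate of dI/dx."""
    return (I(x + h) - I(x - h)) / (2 * h)

if __name__ == "__main__":
    x0 = 1.5
    print("finite difference:", numeric_derivative(x0))
    print("Leibniz' rule    :", leibniz_rhs(x0))
```

The two printed values should agree to several significant figures. Dropping either the boundary terms or the $\partial f/\partial x$ integral from `leibniz_rhs` breaks the agreement, which shows that both parts of the rule are needed when the limits and the integrand both depend on $x$.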

You might also like

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (890)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- DNV Os C104 2014Document40 pagesDNV Os C104 2014Moe LattNo ratings yet

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- Refrigerador Haier Service Manual Mother ModelDocument32 pagesRefrigerador Haier Service Manual Mother Modelnibble1974100% (1)

- Module 4Document24 pagesModule 4MARIE ANN DIAMANo ratings yet

- The Nature of Philosophy and Its ObjectsDocument9 pagesThe Nature of Philosophy and Its Objectsaugustine abellanaNo ratings yet

- ATR 72 - Flight ManualDocument490 pagesATR 72 - Flight Manualmuya78100% (1)

- Homework Labs Lecture01Document9 pagesHomework Labs Lecture01Episode UnlockerNo ratings yet

- CG-SR SL Mechanical Drawing Ver1.40Document28 pagesCG-SR SL Mechanical Drawing Ver1.40Jose Cardoso100% (1)

- Timetable Saturday 31 Dec 2022Document1 pageTimetable Saturday 31 Dec 2022Khan AadiNo ratings yet

- SUDOKU DocumentDocument37 pagesSUDOKU DocumentAmbika Sharma33% (3)

- PSAB Product ManualDocument4 pagesPSAB Product ManualArnold StevenNo ratings yet

- Echotrac Mkiii: Operator'S ManualDocument48 pagesEchotrac Mkiii: Operator'S ManualKhắc PhongNo ratings yet

- Design of Bulk CarrierDocument7 pagesDesign of Bulk CarrierhoangductuanNo ratings yet

- Control Charts For Lognormal DataDocument7 pagesControl Charts For Lognormal Dataanjo0225No ratings yet

- Materials and Techniques Used For The "Vienna Moamin": Multianalytical Investigation of A Book About Hunting With Falcons From The Thirteenth CenturyDocument17 pagesMaterials and Techniques Used For The "Vienna Moamin": Multianalytical Investigation of A Book About Hunting With Falcons From The Thirteenth CenturyAirish FNo ratings yet

- DesignScript Summary User ManualDocument20 pagesDesignScript Summary User ManualEsper AshkarNo ratings yet

- SVCE Seminar Report Format (FINAL)Document6 pagesSVCE Seminar Report Format (FINAL)Vinod KumarNo ratings yet

- Straight Line MotionDocument12 pagesStraight Line MotionMZWAANo ratings yet

- Reliability Prediction Studies On Electrical Insulation Navy Summary Report NAVALDocument142 pagesReliability Prediction Studies On Electrical Insulation Navy Summary Report NAVALdennisroldanNo ratings yet

- Full Text 01Document110 pagesFull Text 01GumbuzaNo ratings yet

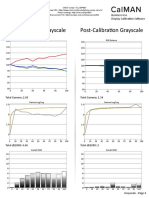

- TCL 55P607 CNET Review Calibration ResultsDocument3 pagesTCL 55P607 CNET Review Calibration ResultsDavid KatzmaierNo ratings yet

- Columns and preconditions reportDocument2 pagesColumns and preconditions reportIndradeep ChatterjeeNo ratings yet

- Manual X-C EFC Diversey Namthip - ENDocument37 pagesManual X-C EFC Diversey Namthip - ENthouche007No ratings yet

- Lab 2 ECADocument7 pagesLab 2 ECAAizan AhmedNo ratings yet

- CM-4G-GPS Quick Guide: Short Guide How To Start Using CM-GPRS ModuleDocument4 pagesCM-4G-GPS Quick Guide: Short Guide How To Start Using CM-GPRS Modulezakki ahmadNo ratings yet

- Unitplan2 Chi-SquareDocument11 pagesUnitplan2 Chi-Squareapi-285549920No ratings yet

- 20-SDMS-02 Overhead Line Accessories PDFDocument102 pages20-SDMS-02 Overhead Line Accessories PDFMehdi SalahNo ratings yet

- Libro de FLOTACIÓN-101-150 PDFDocument50 pagesLibro de FLOTACIÓN-101-150 PDFIsaias Viscarra HuizaNo ratings yet

- Section 1Document28 pagesSection 1Sonia KaurNo ratings yet

- A Short Guide To Arrows in ChemistryDocument1 pageA Short Guide To Arrows in ChemistryJefferson RibeiroNo ratings yet