Page Simulation

TERM PAPER

TOPIC:- Simulation of page replacement algorithm

Submitted by:-

NAME:- AJAY KUMAR ROLL NO:- RK2005B36 SEC:- RK2005 Sub:- cse211

Submitted to:-

Prabhneet Nayyar

Acknowledgement: I take this opportunity to offer my vote of thanks to all those guides who acted as guiding lights throughout this project and whose help led to the successful and satisfactory completion of this study.

Index: 1. Introduction 2. Types 3. Work 4. Application 5. Result 6. Reference

Introduction

Page replacement algorithms decide which page to swap out of memory in order to make room for a new page. A page fault happens when a referenced page is not in memory and needs to be brought into memory. The idea is to find an algorithm that reduces the number of page faults, which limits the time it takes to swap pages in and out.

Types of page replacement algorithm

1. The theoretically optimal page replacement algorithm

The theoretically optimal page replacement algorithm (also known as OPT, clairvoyant replacement algorithm, or Belady's optimal page replacement policy) is an algorithm that works as follows: when a page needs to be swapped in, the operating system swaps out the page whose next use will occur farthest in the future. For example, a page that is not going to be used for the next 6 seconds will be swapped out over a page that is going to be used within the next 0.4 seconds. This algorithm cannot be implemented in a general-purpose operating system because it is impossible to compute reliably how long it will be before a page is going to be used, except when all software that will run on a system is either known beforehand and amenable to static analysis of its memory reference patterns, or belongs to a class of applications allowing run-time analysis. Despite this limitation, algorithms exist that can offer near-optimal performance: the operating system keeps track of all pages referenced by the program, and it uses those data to decide which pages to swap in and out on subsequent runs. This algorithm can offer near-optimal performance, but not on the first run of a program, and only if the program's memory reference pattern is relatively consistent each time it runs. Analysis of the paging problem has also been done in the field of online algorithms. Efficiency of randomized online algorithms for the paging problem is measured using amortized analysis.

The not recently used (NRU) page replacement algorithm is an algorithm that favours keeping pages in memory that have been recently used. This algorithm works on the following principle: when a page is referenced, a referenced bit is set for that page, marking it as referenced. Similarly, when a page is modified (written to), a modified bit is set. The setting of the bits is usually done by the hardware, although it is possible to do so at the software level as well.

At a certain fixed time interval, the clock interrupt triggers and clears the referenced bit of all the pages, so only pages referenced within the current clock interval are marked with a referenced bit. When a page needs to be replaced, the operating system divides the pages into four classes:

Class 0: not referenced, not modified
Class 1: not referenced, modified
Class 2: referenced, not modified
Class 3: referenced, modified

Although it does not seem possible for a page to be modified yet not referenced, this happens when a modified page has its referenced bit cleared by the clock interrupt. The NRU algorithm picks a random page from the lowest non-empty class for removal. Note that this algorithm implies that a page that was modified but not referenced within the clock interval is less important than an unmodified page that is intensely referenced.
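The class-based selection described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the page table is modelled as a plain dictionary mapping a page name to its (referenced, modified) bits, and the function names `nru_class`, `nru_victim` and `clear_referenced` are hypothetical, not taken from any real operating system.

```python
import random

def nru_class(referenced, modified):
    # Class number 0..3: the referenced bit weighs more than the modified bit.
    return 2 * int(referenced) + int(modified)

def nru_victim(pages, rng=random):
    # pages: dict mapping page -> (referenced, modified); assumed layout.
    lowest = min(nru_class(r, m) for r, m in pages.values())
    candidates = [p for p, (r, m) in pages.items() if nru_class(r, m) == lowest]
    # NRU removes a random page from the lowest non-empty class.
    return rng.choice(candidates)

def clear_referenced(pages):
    # What the periodic clock interrupt does: reset every referenced bit.
    return {p: (False, m) for p, (r, m) in pages.items()}

pages = {"A": (True, True), "B": (False, True), "C": (True, False)}
print(nru_victim(pages))                    # B is the only class 1 page
print(nru_victim(clear_referenced(pages)))  # C drops to class 0 after the tick
```

Page B shows how a modified but unreferenced page arises: it was written to in an earlier interval and its referenced bit has since been cleared.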

2. First-in, first-out

The simplest page replacement algorithm is the first-in, first-out (FIFO) algorithm, a low-overhead algorithm that requires little bookkeeping on the part of the operating system. The idea is obvious from the name: the operating system keeps track of all the pages in memory in a queue, with the most recent arrival at the back and the earliest arrival at the front. When a page needs to be replaced, the page at the front of the queue (the oldest page) is selected. While FIFO is cheap and intuitive, it performs poorly in practical application. Thus, it is rarely used in its unmodified form. This algorithm experiences Belady's anomaly: for some reference strings, giving it more page frames produces more page faults. The FIFO page replacement algorithm is used by the VAX/VMS operating system, with some modifications. Partial second chance is provided by skipping a limited number of entries with valid translation table references, [6] and additionally, pages are displaced from the process working set to a system-wide pool from which they can be recovered if not already reused.
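A minimal simulation of the FIFO queue makes the behaviour concrete. The sketch below is an illustration only: the function name `fifo_faults` and the reference string are assumptions chosen for the example (the string is the one commonly used to demonstrate Belady's anomaly), not data from this study.

```python
from collections import deque

def fifo_faults(refs, frames):
    queue = deque()    # resident pages in arrival order, oldest at the left
    resident = set()   # same pages, kept for fast membership tests
    faults = 0
    for page in refs:
        if page in resident:
            continue                           # hit: FIFO does no bookkeeping
        faults += 1
        if len(queue) >= frames:
            resident.discard(queue.popleft())  # evict the oldest arrival
        queue.append(page)
        resident.add(page)
    return faults

refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(fifo_faults(refs, 3))  # 9
print(fifo_faults(refs, 4))  # 10
```

With this reference string, four frames cause more faults than three, which is exactly Belady's anomaly.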

As its name suggests, the second-chance algorithm gives every page a "second chance": an old page that has been referenced is probably in use, and should not be swapped out over a new page that has not been referenced.
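One common way to realise this idea is a FIFO queue extended with a referenced bit per page. The sketch below follows that approach; it is an assumption about the mechanism, since the text above gives only the intuition. The name `second_chance_faults` is hypothetical, and a newly loaded page is assumed to start with its referenced bit cleared.

```python
from collections import deque

def second_chance_faults(refs, frames):
    queue = deque()    # resident pages, oldest at the left
    referenced = {}    # page -> referenced bit, for resident pages only
    faults = 0
    for page in refs:
        if page in referenced:
            referenced[page] = True        # hit: mark the page as in use
            continue
        faults += 1
        if len(queue) >= frames:
            while True:
                oldest = queue.popleft()
                if referenced[oldest]:
                    # Old but referenced: clear the bit, move to the back.
                    referenced[oldest] = False
                    queue.append(oldest)
                else:
                    del referenced[oldest]  # old and unreferenced: evict
                    break
        queue.append(page)
        referenced[page] = False           # assumption: bit starts cleared
    return faults

print(second_chance_faults([1, 2, 3, 1, 4, 1], 3))  # 4
```

On this short string plain FIFO would evict page 1 when page 4 arrives and then fault on it again, for 5 faults in total; the referenced bit saves page 1 here.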

3. Least recently used

The least recently used (LRU) page replacement algorithm, though similar in name to NRU, differs in that LRU keeps track of page usage over a short period of time, while NRU just looks at the usage in the last clock interval. LRU works on the idea that pages that have been most heavily used in the past few instructions are most likely to be used heavily in the next few instructions too. While LRU can provide near-optimal performance in theory (almost as good as Adaptive Replacement Cache), it is rather expensive to implement in practice. There are a few implementation methods for this algorithm that try to reduce the cost yet keep as much of the performance as possible.

The most expensive method is the linked list method, which uses a linked list containing all the pages in memory. At the back of this list is the least recently used page, and at the front is the most recently used page. The cost of this implementation lies in the fact that items in the list have to be moved on every memory reference, which is a very time-consuming process.

Another method, which requires hardware support, is as follows: suppose the hardware has a 64-bit counter that is incremented at every instruction. Whenever a page is accessed, it is stamped with the value of the counter at the time of the access. Whenever a page needs to be replaced, the operating system selects the page with the lowest counter value and swaps it out. With present hardware, this is not feasible because the OS needs to examine the counter for every page in memory.

Because of implementation costs, one may consider algorithms (like those that follow) that are similar to LRU, but which offer cheaper implementations.

One important advantage of the LRU algorithm is that it is amenable to full statistical analysis. It has been proven, for example, that LRU can never result in more than N times more page faults than the OPT algorithm, where N is proportional to the number of pages in the managed pool.

On the other hand, LRU's weakness is that its performance tends to degenerate under many quite common reference patterns. For example, if there are N pages in the LRU pool, an application executing a loop over an array of N + 1 pages will cause a page fault on each and every access. As loops over large arrays are common, much effort has been put into modifying LRU to work better in such situations. Many of the proposed LRU modifications try to detect looping reference patterns and to switch to a more suitable replacement algorithm, like Most Recently Used (MRU).
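The recency ordering and the looping weakness can both be seen in a short simulation. The sketch below uses Python's `OrderedDict` in place of the linked list described above; the function name `lru_faults` and the reference strings are assumptions made for illustration.

```python
from collections import OrderedDict

def lru_faults(refs, frames):
    memory = OrderedDict()   # least recently used page first, most recent last
    faults = 0
    for page in refs:
        if page in memory:
            memory.move_to_end(page)        # hit: page becomes most recent
            continue
        faults += 1
        if len(memory) >= frames:
            memory.popitem(last=False)      # evict the least recently used
        memory[page] = True
    return faults

print(lru_faults([1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5], 3))  # 10

# A loop over N + 1 = 4 pages with N = 3 frames faults on every access.
loop = [0, 1, 2, 3] * 3
print(lru_faults(loop, 3), len(loop))  # 12 12
```

In the loop, each page is evicted just before it is needed again, which is why every one of the 12 references faults.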

4. Random

The random replacement algorithm replaces a random page in memory. This eliminates the overhead cost of tracking page references. Usually it fares better than FIFO, and for looping memory references it is better than LRU, although generally LRU performs better in practice. OS/390 uses global LRU approximation.

With the aging algorithm, the recorded usage history is sufficient for making a good decision as to which page to swap out. Thus, aging can offer near-optimal performance for a moderate price.

... one page, increasing efficiency. These pages can then be placed on the Free Page List. The sequence of pages that works its way to the head of the Free Page List resembles the results of an LRU or NRU mechanism, and the overall effect has similarities to the second-chance algorithm described earlier.
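Returning to the random replacement policy that opens this section, a simulation needs only a random number generator and no per-page bookkeeping. The sketch below is illustrative: the name `random_faults` is hypothetical, and the fixed `seed` parameter is an assumption added so that runs are repeatable.

```python
import random

def random_faults(refs, frames, seed=0):
    rng = random.Random(seed)   # seeded so that a run can be repeated
    memory = []                 # resident pages, in no particular order
    faults = 0
    for page in refs:
        if page in memory:
            continue            # hit: nothing to record
        faults += 1
        if len(memory) >= frames:
            # Overwrite a frame chosen uniformly at random.
            memory[rng.randrange(frames)] = page
        else:
            memory.append(page)
    return faults

loop = [0, 1, 2, 3] * 25        # looping pattern, one page more than frames
print(random_faults(loop, 3), "faults out of", len(loop))
```

On a looping pattern like this one LRU faults on every reference, whereas a random choice sometimes keeps the page that is needed next. The exact count depends on the seed.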

5. Optimal

This is in theory the best algorithm to use for page replacement. The algorithm looks ahead to see how soon each value in the frame array is going to come up again. A value that does not come up soon is the one swapped out when a new page needs to be allocated.
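Because a simulation has the whole reference string in advance, the look-ahead is easy to carry out there even though a real operating system cannot do it. The sketch below is an assumption-labelled illustration: `opt_faults` is a hypothetical name, `refs` is assumed to be a Python list, and a page that is never used again is treated as having its next use infinitely far away.

```python
def opt_faults(refs, frames):
    memory = set()
    faults = 0
    for i, page in enumerate(refs):
        if page in memory:
            continue
        faults += 1
        if len(memory) >= frames:
            def next_use(p):
                # Position of the next reference to p after position i.
                try:
                    return refs.index(p, i + 1)
                except ValueError:
                    return float("inf")   # never referenced again
            # Evict the page whose next use lies farthest in the future.
            memory.remove(max(memory, key=next_use))
        memory.add(page)
    return faults

refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(opt_faults(refs, 3))  # 7
```

The result serves as a lower bound against which the fault counts of the other algorithms on the same reference string can be compared.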

Application of Page Reference Behavior

We can plot space-time graphs of references from the traces described above. For each execution interval (a point on the x-axis) we plot a point for each page referenced in that interval. The y-axis values are relative page locations within the program's address space (since the application's address space is usually sparse and contains many unused regions, we leave out the address space holes and number the used pages from low addresses to high addresses on the y-axis). Due to space constraints we cannot include all space-time graphs. The following pages contain four representative samples of the variety of the memory reference behavior for the sixteen applications. (... different from the rest. We refer interested readers to ... for all the space-time plots.)

Observing the space-time graphs, we found that the applications fall into three categories. The first includes memory-intensive applications in which large numbers of pages are accessed during each execution interval. There is no clearly visible pattern.

Simulation Results

Since our traces contain only IN and OUT records, we cannot simulate SEQ accurately under all circumstances. Instead, we conduct a slightly conservative simulation. That is, if a chosen-for-replacement page is IDLE (i.e. it is not accessed until its next IN record), the page is simply replaced; if the page is ACTIVE (i.e. it is between an IN record and an OUT record, which means it is accessed actively during this interval), we replace the page and then immediately simulate a fault on the page to bring it back into memory. This results in a simulation that slightly under-estimates the actual performance of SEQ, because in reality the page fault would occur sometime later in the current or the next interval.

SEQ performs significantly better than LRU, and quite close to optimal, for the applications with clear access patterns (for example, gnuplot and turb3d). For other applications SEQ's performance is quite similar to LRU.

We have varied the three SEQ parameters (L, M, and N) and observed the resultant performance changes. Intuitively, the larger the value of L, the more conservative the algorithm will be, because it is less likely that a run of faults will be long enough to be considered a sequence. Reducing L has the opposite effect. Similarly, the parameter M is set to guard against the case when pages in a sequence are reaccessed in a short time period. If the pages in the sequence are accessed only once, then M should be set to 1; however, if there is reuse of pages near the head of the sequence, then M should be larger to avoid replacing in-use pages.

References:

1. http://www.wikipedia.com

2. L. A. Belady. A study of replacement algorithms

3. M. Morris Mano

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- Case Study On: Submitted ByDocument9 pagesCase Study On: Submitted ByAjay KumarNo ratings yet

- Wireless & Comunication NetworkDocument27 pagesWireless & Comunication NetworkAjay KumarNo ratings yet

- Project On Computr Graphics: Submitted ByDocument7 pagesProject On Computr Graphics: Submitted ByAjay KumarNo ratings yet

- Software Engineerinng CSE314Document34 pagesSoftware Engineerinng CSE314Ajay KumarNo ratings yet

- Linked List GraphicsDocument39 pagesLinked List GraphicsAjay Kumar100% (3)

- Railway ReservationDocument17 pagesRailway ReservationAjay KumarNo ratings yet

- Acknowledgement: Term Paper ON "Hostel Management"Document22 pagesAcknowledgement: Term Paper ON "Hostel Management"Ajay KumarNo ratings yet

- OF (Show The Day of Birth From Date of Birth)Document13 pagesOF (Show The Day of Birth From Date of Birth)Ajay KumarNo ratings yet

- Acknowledgement: Term Paper ON "Hostel Management"Document22 pagesAcknowledgement: Term Paper ON "Hostel Management"Ajay KumarNo ratings yet

- CSE-151 Term Paper: Topic: CalculatorDocument17 pagesCSE-151 Term Paper: Topic: CalculatorAjay KumarNo ratings yet

- Library Manage SystemDocument28 pagesLibrary Manage SystemAjay KumarNo ratings yet

- Term Paper Object Oriented Programming CSE202 Topic-Mobile Service Provider DatabaseDocument23 pagesTerm Paper Object Oriented Programming CSE202 Topic-Mobile Service Provider DatabaseAjay KumarNo ratings yet

- Calendar Program C CodeDocument4 pagesCalendar Program C CodethenewwriterNo ratings yet

- Design Problem On Text Editor: Submitted ByDocument17 pagesDesign Problem On Text Editor: Submitted ByAjay KumarNo ratings yet

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- W3 Lesson 3 - Systems of Linear Equation - ModuleDocument11 pagesW3 Lesson 3 - Systems of Linear Equation - ModuleJitlee PapaNo ratings yet

- ANUM 2012 Bracketing MethodsDocument31 pagesANUM 2012 Bracketing MethodsKukuh KurniadiNo ratings yet

- 32 0 360 TC x1 x2 LHS Sign RHS 4360Document2 pages32 0 360 TC x1 x2 LHS Sign RHS 4360jemalNo ratings yet

- Communication II Lab (EELE 4170)Document6 pagesCommunication II Lab (EELE 4170)Fahim MunawarNo ratings yet

- AI SudokuSolverDocument3 pagesAI SudokuSolverJitendra GarhwalNo ratings yet

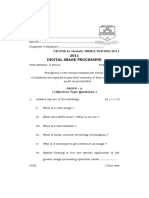

- Pgdgi 202 Digital Image Processing 2011Document3 pagesPgdgi 202 Digital Image Processing 2011cNo ratings yet

- Dispersive Delay Lines: Matched Filters For Signal ProcessingDocument7 pagesDispersive Delay Lines: Matched Filters For Signal ProcessingMini KnowledgeNo ratings yet

- Edge Detection PDFDocument9 pagesEdge Detection PDFRutvi DhameliyaNo ratings yet

- Questions For Codechef ContestDocument4 pagesQuestions For Codechef ContestVsno SnghNo ratings yet

- Table of Window Function Details - VRUDocument29 pagesTable of Window Function Details - VRUVivek HanchateNo ratings yet

- Components.: B e A F H GDocument31 pagesComponents.: B e A F H GAkbarNo ratings yet

- Coursera - Week 8 QuestionDocument9 pagesCoursera - Week 8 QuestionKapildev Kumar5% (20)

- Lecture 2: One Dimensional Problems: APL705 Finite Element MethodDocument5 pagesLecture 2: One Dimensional Problems: APL705 Finite Element MethodZafar AlamNo ratings yet

- Contents:: CS-201 Lab Manual Lab 4: Sorting: Insertion SortDocument5 pagesContents:: CS-201 Lab Manual Lab 4: Sorting: Insertion Sortirfan_chand_mianNo ratings yet

- Group Rings For Communications: Ted HurleyDocument32 pagesGroup Rings For Communications: Ted HurleyTing Sie KimNo ratings yet

- Deep Learning - IIT RoparDocument2 pagesDeep Learning - IIT RoparSelva KumarNo ratings yet

- LectureO09 PDFDocument40 pagesLectureO09 PDFAbdul RahimNo ratings yet

- Header Linked ListDocument13 pagesHeader Linked ListAnkit RajputNo ratings yet

- Optim NotesDocument19 pagesOptim NotesDipteemaya BiswalNo ratings yet

- Module 3Document79 pagesModule 3sabharish varshan410100% (1)

- Class 10 Number Systems Assignment 1Document5 pagesClass 10 Number Systems Assignment 1manojmathewNo ratings yet

- Assignment 2: EEL 709 Deepali Jain 2012ee10082Document9 pagesAssignment 2: EEL 709 Deepali Jain 2012ee10082AashishNo ratings yet

- BC0043 Computer Oriented Numerical Methods Paper 1Document13 pagesBC0043 Computer Oriented Numerical Methods Paper 1SeekEducationNo ratings yet

- PDFDocument349 pagesPDFOki NurpatriaNo ratings yet

- Laboratory Record: Experiment No: 08Document9 pagesLaboratory Record: Experiment No: 08Subhashree DashNo ratings yet

- 3 Error Detection and CorrectionDocument33 pages3 Error Detection and CorrectionNaveen ThotapalliNo ratings yet

- CSC508 TEST2 12july2023Document4 pagesCSC508 TEST2 12july20232021886362No ratings yet

- Data Structure MCQDocument16 pagesData Structure MCQMaaz AdhoniNo ratings yet

- L. PCM DM and DPCMDocument27 pagesL. PCM DM and DPCMMojtaba Falah alaliNo ratings yet

- Moving Average FilterDocument5 pagesMoving Average FilterMhbackup 001No ratings yet