Anova

Uploaded by Nguyen Be

1 Single-Factor Anova

1.1 Single-Factor Anova

Introduction

The analysis of variance, or ANOVA for short, refers to a collection of statistical procedures for the analysis of quantitative responses. Typical applications:

- An experiment to study the effects of five different brands of gasoline on automobile engine operating efficiency (mpg)
- An experiment to study the effects of four different sugar solutions (glucose, sucrose, fructose, and a mixture of the three) on bacterial growth
- An experiment to investigate whether hardwood concentration in pulp (%) has an effect on tensile strength of bags made from the pulp
- An experiment to decide whether the color density of fabric specimens depends on the amount of dye used

ANOVA

- Extends the independent-samples t test
- Compares the means of groups of independent observations
  - Don't be fooled by the name: ANOVA does not compare variances.
- Can compare more than two groups

Notation

Single-factor ANOVA focuses on a comparison of two or more populations. Let

- $I$ = the number of treatments being compared
- $\mu_1$ = the mean of population 1 (or the true average response when treatment 1 is applied)
- $\vdots$
- $\mu_I$ = the mean of population $I$ (or the true average response when treatment $I$ is applied)

Then the hypotheses of interest are

$$H_0: \mu_1 = \mu_2 = \cdots = \mu_I \quad \text{versus} \quad H_1: \text{at least two of the } \mu_i\text{'s are different}$$

Example 1.1 If $I = 4$, then $H_0$ is true only if all four $\mu_i$'s are identical.

Here we'll focus on the case of equal sample sizes, denoted by $J$. Let

- $X_{ij}$ = the random variable (rv) denoting the $j$th measurement from the $i$th population
- $x_{ij}$ = the observed value of $X_{ij}$ when the experiment is performed

In this notation,

- The first subscript identifies the sample number, corresponding to the population or treatment being sampled.
- The second subscript denotes the position of the observation within that sample.

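The individual sample means will be denoted by $\bar{X}_{1\cdot}, \ldots, \bar{X}_{I\cdot}$:

$$\bar{X}_{i\cdot} = \frac{\sum_{j=1}^{J} X_{ij}}{J}$$

The average of all $IJ$ observations, called the grand mean, is

$$\bar{X}_{\cdot\cdot} = \frac{\sum_{i=1}^{I} \sum_{j=1}^{J} X_{ij}}{IJ}$$

Additionally, let $S_1^2, S_2^2, \ldots, S_I^2$ denote the sample variances:

$$S_i^2 = \frac{\sum_{j=1}^{J} (X_{ij} - \bar{X}_{i\cdot})^2}{J - 1}$$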

Assumptions

The $I$ population or treatment distributions are all normal with the same variance $\sigma^2$. That is, each $X_{ij}$ is normally distributed with

$$E(X_{ij}) = \mu_i, \qquad \mathrm{Var}(X_{ij}) = \sigma^2$$

Remark 1.2 (The Same Variance?)

- The $I$ sample standard deviations will generally differ somewhat even when the corresponding $\sigma$'s are identical.
- A rough rule of thumb is that if the largest $s$ is not much more than two times the smallest, it is reasonable to assume equal $\sigma^2$'s.
- A formal check is Levene's test.
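The rule of thumb is easy to check numerically. The following is a minimal sketch rather than part of the original notes: it borrows the fabric-soiling data of Example 1.14, and the names `samples`, `sds` and `sd_ratio` are invented for the illustration.

```python
from statistics import stdev

# Degree-of-soiling data for the three mixtures (taken from Example 1.14).
samples = {
    1: [0.56, 1.12, 0.90, 1.07, 0.94],
    2: [0.72, 0.69, 0.87, 0.78, 0.91],
    3: [0.62, 1.08, 1.07, 0.99, 0.93],
}

# Sample standard deviation s_i of each sample (divisor J - 1).
sds = {i: stdev(x) for i, x in samples.items()}

# Rule of thumb: compare the largest s with the smallest s.
sd_ratio = max(sds.values()) / min(sds.values())

for i, s in sds.items():
    print(f"s_{i} = {s:.3f}")
print(f"largest s / smallest s = {sd_ratio:.2f}")
```

For these data the ratio comes out a little above 2, a borderline case for the rule of thumb and the kind of situation where a formal check such as Levene's test is useful.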

Checking Normality

Definition 1.3 A normal probability plot is a plot of the $n$ pairs

$$\big([100(i - 0.5)/n]\text{th } z \text{ percentile},\ i\text{th smallest observation}\big), \quad i = 1, \ldots, n$$

- A straight-line pattern supports the normality assumption.
- The individual sample sizes in ANOVA are typically too small for $I$ separate plots to be informative.
- A single plot can be constructed by subtracting $\bar{x}_{1\cdot}$ from each observation in the first sample, $\bar{x}_{2\cdot}$ from each observation in the second, and so on, and then plotting these $IJ$ deviations against the $z$ percentiles.
- A straight pattern in this combined plot supports the normality assumption.
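The construction of the single combined plot can be sketched in a few lines. This is an illustration under stated assumptions, not the notes' own code: it reuses the soiling data of Example 1.14 and only computes the plotting coordinates (no graphics); `pairs` is a hypothetical name.

```python
from statistics import NormalDist, mean

# Degree-of-soiling data, one inner list per mixture (from Example 1.14).
samples = [
    [0.56, 1.12, 0.90, 1.07, 0.94],
    [0.72, 0.69, 0.87, 0.78, 0.91],
    [0.62, 1.08, 1.07, 0.99, 0.93],
]

# Subtract each sample's own mean, then pool and sort all IJ deviations.
deviations = sorted(x - mean(s) for s in samples for x in s)
n = len(deviations)

# Pair the i-th smallest deviation with the [100(i - 0.5)/n]th z percentile.
z = NormalDist()  # standard normal distribution
pairs = [(z.inv_cdf((i - 0.5) / n), d) for i, d in enumerate(deviations, start=1)]

for z_pct, d in pairs:
    print(f"{z_pct:7.3f}  {d:7.3f}")
```

Plotting the second coordinate against the first with any plotting tool gives the normal probability plot of the pooled deviations.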

Sums of Squares and Mean Squares

Definition 1.4 The treatment sum of squares SSTr is given by

$$\mathrm{SSTr} = J \sum_{i} (\bar{X}_{i\cdot} - \bar{X}_{\cdot\cdot})^2$$

The error sum of squares SSE is

$$\mathrm{SSE} = \sum_{i,j} (X_{ij} - \bar{X}_{i\cdot})^2 = (J - 1) \sum_{i} S_i^2$$

Remark 1.5 $(J - 1) S_i^2 / \sigma^2 \sim \chi^2_{J-1}$

Theorem 1.6 If the basic assumptions of this section are satisfied, then

- $\mathrm{SSE}/\sigma^2 \sim \chi^2_{I(J-1)}$.
- When $H_0$ is true, $\mathrm{SSTr}/\sigma^2 \sim \chi^2_{I-1}$.
- SSE and SSTr are independent random variables.

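Proof. Let $Y_i = \bar{X}_{i\cdot}$. Then $Y_1, \ldots, Y_I$ are independent and normally distributed with the same mean under $H_0$ and with variance $\sigma^2/J$. Writing $S_Y^2$ for the sample variance of the $Y_i$'s,

$$\frac{\mathrm{SSTr}}{\sigma^2} = \frac{J \sum_{i} (\bar{X}_{i\cdot} - \bar{X}_{\cdot\cdot})^2}{\sigma^2} = \frac{(I - 1) S_Y^2}{\sigma^2/J} \sim \chi^2_{I-1}$$

On the other hand, $\bar{X}_{i\cdot}$ is independent of $S_i$ for every $i$, and the $I$ samples are independent of one another, so SSTr and SSE are independent.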

Definition 1.7

- The mean square for treatments: $\mathrm{MSTr} = \mathrm{SSTr}/(I - 1)$
- The mean square for error: $\mathrm{MSE} = \mathrm{SSE}/[I(J - 1)]$

Proposition 1.8

- $E(\mathrm{MSE}) = \sigma^2$.
- When $H_0$ is true: $E(\mathrm{MSTr}) = \sigma^2$.
- When $H_0$ is false: $E(\mathrm{MSTr}) > \sigma^2$.

Remark 1.9 Both statistics are unbiased for estimating the common population variance $\sigma^2$ when $H_0$ is true, but MSTr tends to overestimate $\sigma^2$ when $H_0$ is false, since the $\bar{X}_{i\cdot}$'s tend to spread out more when $H_0$ is false than when it is true.

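Proof of the Proposition

Since a chi-squared rv has mean equal to its number of degrees of freedom,

$$E(\mathrm{SSE}/\sigma^2) = I(J - 1) \implies E(\mathrm{MSE}) = \sigma^2$$

The statements about MSTr are left as an exercise. In single-factor ANOVA with $I$ treatments and $J$ observations per treatment, let $\mu = \sum_{i} \mu_i / I$.

1. Express $E(\bar{X}_{\cdot\cdot})$ in terms of $\mu$.
2. Compute $E(\bar{X}_{i\cdot}^2)$. [Hint: $E(\bar{X}_{i\cdot}^2) = \mathrm{Var}(\bar{X}_{i\cdot}) + [E(\bar{X}_{i\cdot})]^2$.]
3. Compute $E(\bar{X}_{\cdot\cdot}^2)$.
4. Compute $E(\mathrm{SSTr})$ and then show that
   $$E(\mathrm{MSTr}) = \sigma^2 + \frac{J}{I - 1} \sum_{i} (\mu_i - \mu)^2$$
5. Prove that $E(\mathrm{MSTr}) = \sigma^2$ when $H_0$ is true and $E(\mathrm{MSTr}) > \sigma^2$ when $H_0$ is false.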
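One possible route through the exercise is sketched below. It is offered as a worked outline under the assumptions of this section (independent normal observations with $E(X_{ij}) = \mu_i$ and common variance $\sigma^2$), not as the notes' official solution.

```latex
\begin{align*}
E(\bar{X}_{\cdot\cdot}) &= \frac{1}{IJ}\sum_{i,j}\mu_i = \mu, \\
E(\bar{X}_{i\cdot}^2) &= \mathrm{Var}(\bar{X}_{i\cdot}) + [E(\bar{X}_{i\cdot})]^2
  = \frac{\sigma^2}{J} + \mu_i^2, \\
E(\bar{X}_{\cdot\cdot}^2) &= \mathrm{Var}(\bar{X}_{\cdot\cdot}) + [E(\bar{X}_{\cdot\cdot})]^2
  = \frac{\sigma^2}{IJ} + \mu^2, \\
\mathrm{SSTr} &= J\sum_i (\bar{X}_{i\cdot} - \bar{X}_{\cdot\cdot})^2
  = J\sum_i \bar{X}_{i\cdot}^2 - IJ\,\bar{X}_{\cdot\cdot}^2, \\
E(\mathrm{SSTr}) &= J\sum_i\Big(\frac{\sigma^2}{J} + \mu_i^2\Big)
  - IJ\Big(\frac{\sigma^2}{IJ} + \mu^2\Big)
  = (I-1)\sigma^2 + J\sum_i(\mu_i - \mu)^2, \\
E(\mathrm{MSTr}) &= \frac{E(\mathrm{SSTr})}{I-1}
  = \sigma^2 + \frac{J}{I-1}\sum_i(\mu_i - \mu)^2 .
\end{align*}
```

The last step of the fifth line uses $\sum_i \mu_i^2 - I\mu^2 = \sum_i (\mu_i - \mu)^2$. Under $H_0$ every $\mu_i$ equals $\mu$, so the sum vanishes; otherwise at least one term is strictly positive.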


Theorem 1.10 Consider the test statistic

$$F = \frac{\mathrm{MSTr}}{\mathrm{MSE}} = \frac{(\mathrm{SSTr}/\sigma^2)/(I - 1)}{(\mathrm{SSE}/\sigma^2)/[I(J - 1)]}$$

If $H_0$ is true, then $F \sim F_{I-1,\,I(J-1)}$.

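Let $x_{\cdot\cdot}$ be the grand total, $x_{\cdot\cdot} = \sum_{i,j} x_{ij}$, and let $x_{i\cdot} = \sum_{j} x_{ij}$ be the total of the $i$th sample.

| Sum of Squares | df | Definition | Computing Formula |
|---|---|---|---|
| Total = SST | $IJ - 1$ | $\sum_{i,j} (x_{ij} - \bar{x}_{\cdot\cdot})^2$ | $\sum_{i,j} x_{ij}^2 - \dfrac{x_{\cdot\cdot}^2}{IJ}$ |
| Treatment = SSTr | $I - 1$ | $\sum_{i,j} (\bar{x}_{i\cdot} - \bar{x}_{\cdot\cdot})^2 = J \sum_{i} (\bar{x}_{i\cdot} - \bar{x}_{\cdot\cdot})^2$ | $\dfrac{1}{J} \sum_{i} x_{i\cdot}^2 - \dfrac{x_{\cdot\cdot}^2}{IJ}$ |
| Error = SSE | $I(J - 1)$ | $\sum_{i,j} (x_{ij} - \bar{x}_{i\cdot})^2$ | $\mathrm{SST} - \mathrm{SSTr}$ |

The test statistic is $F = \mathrm{MSTr}/\mathrm{MSE}$, where $\mathrm{MSTr} = \mathrm{SSTr}/(I - 1)$ and $\mathrm{MSE} = \mathrm{SSE}/[I(J - 1)]$.

The quantity $x_{\cdot\cdot}^2/(IJ)$ is called the correction factor, or the correction for the mean.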

Theorem 1.11 (The Fundamental ANOVA Identity)

$$\mathrm{SST} = \mathrm{SSTr} + \mathrm{SSE}$$
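The notes state the identity without proof. A short derivation, given here as a sketch using the dot notation for sample means ($\bar{x}_{i\cdot}$ for the $i$th sample mean, $\bar{x}_{\cdot\cdot}$ for the grand mean), splits each deviation from the grand mean into a within-sample part and a between-sample part:

```latex
\begin{align*}
\sum_{i,j} (x_{ij} - \bar{x}_{\cdot\cdot})^2
  &= \sum_{i,j} \big[(x_{ij} - \bar{x}_{i\cdot}) + (\bar{x}_{i\cdot} - \bar{x}_{\cdot\cdot})\big]^2 \\
  &= \sum_{i,j} (x_{ij} - \bar{x}_{i\cdot})^2
   + J \sum_{i} (\bar{x}_{i\cdot} - \bar{x}_{\cdot\cdot})^2
   + 2 \sum_{i} (\bar{x}_{i\cdot} - \bar{x}_{\cdot\cdot}) \sum_{j} (x_{ij} - \bar{x}_{i\cdot}) \\
  &= \mathrm{SSE} + \mathrm{SSTr} + 0 .
\end{align*}
```

The cross term vanishes because the deviations of each sample about its own mean sum to zero: $\sum_{j} (x_{ij} - \bar{x}_{i\cdot}) = 0$ for every $i$.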

Remark 1.12

1. Once any two of the SS's have been calculated, the remaining one is easily obtained by addition or subtraction.
2. The decomposition of SST gives rise to the name ANOVA.
   - SST measures the total variation in the data: the sum of all squared deviations about the grand mean.
   - SSE measures variation that would be present (within samples) even if $H_0$ were true: the part of total variation that is unexplained by the status of $H_0$ (true or false).
   - SSTr is the part of total variation (between samples) that can be explained by possible differences in the $\mu_i$'s.
   - If explained variation is large relative to unexplained variation, then $H_0$ is rejected in favor of $H_1$.

An ANOVA Table

| Source of Variation | df | Sum of Squares | Mean Square | F |
|---|---|---|---|---|
| Treatments | $I - 1$ | SSTr | $\mathrm{MSTr} = \mathrm{SSTr}/(I - 1)$ | MSTr/MSE |
| Error | $I(J - 1)$ | SSE | $\mathrm{MSE} = \mathrm{SSE}/[I(J - 1)]$ | |
| Total | $IJ - 1$ | SST | | |

Remark 1.13 When the F test causes $H_0$ to be rejected, the experimenter will often be interested in further analysis to decide which $\mu_i$'s differ from which others, using multiple comparison procedures.

Example 1.14 The accompanying data resulted from an experiment comparing the degree of soiling for fabric copolymerized with three different mixtures of methacrylic acid.

| Mixture | Degree of Soiling | $x_{i\cdot}$ | $\bar{x}_{i\cdot}$ |
|---|---|---|---|
| 1 | .56 1.12 .90 1.07 .94 | 4.59 | .918 |
| 2 | .72 .69 .87 .78 .91 | 3.97 | .794 |
| 3 | .62 1.08 1.07 .99 .93 | 4.69 | .938 |
| | | $x_{\cdot\cdot} = 13.25$ | |

Solution. The null hypothesis is $H_0: \mu_1 = \mu_2 = \mu_3$. The F critical value for the rejection region is $F_{.01,2,12} = 6.93$.

| Source of Variation | df | Sum of Squares | Mean Square | F |
|---|---|---|---|---|
| Treatments | 2 | .0608 | .0304 | .99 |
| Error | 12 | .3701 | .0308 | |
| Total | 14 | .4309 | | |

Because $F = .99 < F_{.01,2,12} = 6.93$, $H_0$ is not rejected at significance level .01.
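The ANOVA table of this example can be reproduced with a few lines of standard-library Python. The sketch below is illustrative only: the variable names are made up, and the critical value is simply the table value quoted in the example rather than something the code computes.

```python
from statistics import mean

# Degree-of-soiling data, one inner list per mixture (Example 1.14).
samples = [
    [0.56, 1.12, 0.90, 1.07, 0.94],
    [0.72, 0.69, 0.87, 0.78, 0.91],
    [0.62, 1.08, 1.07, 0.99, 0.93],
]
n_treatments = len(samples)   # I
n_per = len(samples[0])       # J (equal sample sizes assumed)

grand_mean = mean(x for s in samples for x in s)
sample_means = [mean(s) for s in samples]

# Sums of squares straight from their definitions.
sstr = n_per * sum((m - grand_mean) ** 2 for m in sample_means)
sse = sum((x - m) ** 2 for s, m in zip(samples, sample_means) for x in s)
sst = sum((x - grand_mean) ** 2 for s in samples for x in s)

# Mean squares and the F ratio.
mstr = sstr / (n_treatments - 1)
mse = sse / (n_treatments * (n_per - 1))
f_stat = mstr / mse

f_crit = 6.93  # F_{.01,2,12}, the table value quoted in the example

print(f"SSTr = {sstr:.4f}, SSE = {sse:.4f}, SST = {sst:.4f}")
print(f"MSTr = {mstr:.4f}, MSE = {mse:.4f}, F = {f_stat:.2f}")
print("reject H0" if f_stat >= f_crit else "do not reject H0")
```

Computing SST independently of SSTr and SSE also gives a numerical check of the fundamental identity SST = SSTr + SSE.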

Testing for the Assumption of Equal Variances

Introduction

- One of the two assumptions for ANOVA is that the populations have equal variances.
- Bartlett's test applies the likelihood ratio principle to testing for equal variances for normal data. It is a generalization of the F test and is very sensitive to the normality assumption.
- Levene's test is much less sensitive to the assumption of normality.

The Levene Test

The Main Idea

- The idea is to use the absolute residuals $\lvert x_{ij} - \bar{x}_{i\cdot} \rvert$ to compare the variability of the samples.
- The Levene test performs an ANOVA F test using the absolute residuals $\lvert x_{ij} - \bar{x}_{i\cdot} \rvert$ in place of $x_{ij}$.

Remark 1.15

- The ANOVA F test is robust to the assumption of normality, so the assumption can be relaxed somewhat.
- We can therefore do an ANOVA on the absolute residuals, although they are not normally distributed.
- The Levene test works even though the absolute residuals violate the normality assumption.

Example 1.16 Consider again the soiling data of Example 1.14, from an experiment comparing the degree of soiling for fabric copolymerized with three different mixtures of methacrylic acid. Check the validity of the assumption of equality of variances.

| Mixture | Quantity | 1 | 2 | 3 | 4 | 5 | Summary |
|---|---|---|---|---|---|---|---|
| 1 | $x_{1j}$ | .56 | 1.12 | .90 | 1.07 | .94 | $\bar{x}_{1\cdot} = .918$ |
| | $\lvert x_{1j} - \bar{x}_{1\cdot} \rvert$ | .358 | .202 | .018 | .152 | .022 | total .752 |
| 2 | $x_{2j}$ | .72 | .69 | .87 | .78 | .91 | $\bar{x}_{2\cdot} = .794$ |
| | $\lvert x_{2j} - \bar{x}_{2\cdot} \rvert$ | .074 | .104 | .076 | .014 | .116 | total .384 |
| 3 | $x_{3j}$ | .62 | 1.08 | 1.07 | .99 | .93 | $\bar{x}_{3\cdot} = .938$ |
| | $\lvert x_{3j} - \bar{x}_{3\cdot} \rvert$ | .318 | .142 | .132 | .052 | .008 | total .652 |
| | | | | | | | grand total 1.788 |

Now apply ANOVA to the absolute residuals. The sum of their squares equals the SSE of the original data, .3701, so

$$\mathrm{SST} = .3701 - 1.788^2/15 = .3701 - .2131 = .1570$$

$$\mathrm{SSTr} = [.752^2 + .384^2 + .652^2]/5 - .2131 = .2276 - .2131 = .0145$$

$$\mathrm{SSE} = .1570 - .0145 = .1425, \qquad F = \frac{.0145/2}{.1425/12} = .61$$

Because $.61 < F_{.10,2,12} = 2.81$, there is no reason to doubt that the variances are equal.
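The Levene computation is just a one-way ANOVA applied to transformed data, which the following sketch makes explicit. It is a minimal illustration of the mean-centered version described in these notes; the helper `one_way_f` is a hypothetical name, and the critical value is the table value quoted in the example.

```python
from statistics import mean


def one_way_f(samples):
    """Single-factor ANOVA F statistic for samples of equal size."""
    n_groups = len(samples)
    n_per = len(samples[0])
    grand = mean(x for s in samples for x in s)
    means = [mean(s) for s in samples]
    sstr = n_per * sum((m - grand) ** 2 for m in means)
    sse = sum((x - m) ** 2 for s, m in zip(samples, means) for x in s)
    return (sstr / (n_groups - 1)) / (sse / (n_groups * (n_per - 1)))


# Degree-of-soiling data, one inner list per mixture (Example 1.14).
samples = [
    [0.56, 1.12, 0.90, 1.07, 0.94],
    [0.72, 0.69, 0.87, 0.78, 0.91],
    [0.62, 1.08, 1.07, 0.99, 0.93],
]

# Replace every observation by its absolute residual |x_ij - mean of sample i|.
abs_resid = [[abs(x - mean(s)) for x in s] for s in samples]

# Levene's statistic is the ordinary ANOVA F computed on those residuals.
levene_f = one_way_f(abs_resid)

f_crit = 2.81  # F_{.10,2,12}, the table value quoted in the example
print(f"Levene F = {levene_f:.2f}")
print("variances look unequal" if levene_f >= f_crit else "no evidence of unequal variances")
```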


1.2 Multiple Comparisons in Anova

Goal

If $H_0$ is rejected after doing ANOVA on the data, we will usually want to know which of the $\mu_i$'s are different from each other. A method for carrying out this further analysis is called a multiple comparisons procedure.

Central Idea

- Calculate a confidence interval for each pairwise difference $\mu_i - \mu_j$ with $i < j$.
- Judge for each pair of $\mu$'s whether or not they differ significantly from each other (they do when the interval does not include 0).
- The procedures based on this idea differ in the method used to calculate the various CIs.

Tukey's Procedure

Definition 1.17 Let $Z_1, \ldots, Z_m$ be $m$ independent standard normal rvs and let $W \sim \chi^2_{\nu}$ be independent of the $Z_i$'s. Then the distribution of

$$Q = \frac{\max_{i,j} \lvert Z_i - Z_j \rvert}{\sqrt{W/\nu}} = \frac{\max(Z_1, \ldots, Z_m) - \min(Z_1, \ldots, Z_m)}{\sqrt{W/\nu}}$$

is called the studentized range distribution. We denote the critical value that captures upper-tail area $\alpha$ under the density curve of $Q$ by $Q_{\alpha,m,\nu}$.

Remark 1.18

- The numerator of $Q$ is indeed the range of the $Z_i$'s.
- $Z_i / \sqrt{W/\nu} \sim t_{\nu}$, so $Q$ is actually the range of $m$ variables that have the $t$ distribution.

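The Distribution of Q

First of all, take

$$Z_i = \frac{\bar{X}_{i\cdot} - \mu_i}{\sigma/\sqrt{J}}, \qquad m = I$$

and

$$W = \frac{\mathrm{SSE}}{\sigma^2} = \frac{I(J - 1)\,\mathrm{MSE}}{\sigma^2}, \qquad \nu = I(J - 1)$$

Then

$$Q = \frac{\max_{i,j} \lvert \bar{X}_{i\cdot} - \bar{X}_{j\cdot} - (\mu_i - \mu_j) \rvert}{\sqrt{\mathrm{MSE}/J}}$$

Therefore, writing $Q_\alpha = Q_{\alpha,I,I(J-1)}$,

$$1 - \alpha = P\left( \frac{\max_{i,j} \lvert \bar{X}_{i\cdot} - \bar{X}_{j\cdot} - (\mu_i - \mu_j) \rvert}{\sqrt{\mathrm{MSE}/J}} \le Q_\alpha \right)$$

$$= P\left( \bar{X}_{i\cdot} - \bar{X}_{j\cdot} - Q_\alpha \sqrt{\mathrm{MSE}/J} \le \mu_i - \mu_j \le \bar{X}_{i\cdot} - \bar{X}_{j\cdot} + Q_\alpha \sqrt{\mathrm{MSE}/J} \ \text{ for all } i, j \right)$$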

Proposition 1.19 For $i < j$, form the interval (there are $I(I - 1)/2$ such intervals)

$$\bar{x}_{i\cdot} - \bar{x}_{j\cdot} \pm Q_{\alpha,I,I(J-1)} \sqrt{\mathrm{MSE}/J}$$

The simultaneous confidence level that every interval includes the corresponding value of $\mu_i - \mu_j$ is $100(1 - \alpha)\%$.

Tukey's Procedure for Identifying Significantly Different $\mu_i$'s

1. Select $\alpha$, find $Q_{\alpha,I,I(J-1)}$ and calculate Tukey's honestly significant difference (HSD): $w = Q_{\alpha,I,I(J-1)} \sqrt{\mathrm{MSE}/J}$.
2. List the sample means in increasing order and underline those pairs that differ by less than $w$.

Any pair of sample means not underscored by the same line corresponds to a pair of population or treatment means that are judged significantly different.

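Example 1.20 Suppose, for example, that $I = 5$ and that

$$\bar{x}_{2\cdot} < \bar{x}_{5\cdot} < \bar{x}_{4\cdot} < \bar{x}_{1\cdot} < \bar{x}_{3\cdot}$$

Then start from $\bar{x}_{2\cdot}$.

1. If $\bar{x}_{5\cdot} - \bar{x}_{2\cdot} \ge w$, move to $\bar{x}_{5\cdot}$ and repeat the process.
2. If $\bar{x}_{5\cdot} - \bar{x}_{2\cdot} < w$, connect $\bar{x}_{2\cdot}$ and $\bar{x}_{5\cdot}$ with a line segment and extend this line segment further to the right to the largest $\bar{x}_{i\cdot}$ that differs from $\bar{x}_{2\cdot}$ by less than $w$ (so the line may connect two, three, or even more means). Then move to the next mean in the ordered list and repeat the process, drawing a new line only if it reaches beyond the end of the previous one.
3. Keep the process going until the penultimate mean is reached.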

Example 1.21 An experiment was carried out to compare five different brands of automobile oil filters with respect to their ability to capture foreign material. Let $\mu_i$ denote the true average amount of material captured by brand $i$ filters under controlled conditions. A sample of nine filters of each brand was used, resulting in the following sample mean amounts:

$$\bar{x}_{1\cdot} = 14.5, \quad \bar{x}_{2\cdot} = 13.8, \quad \bar{x}_{3\cdot} = 13.3, \quad \bar{x}_{4\cdot} = 14.3, \quad \bar{x}_{5\cdot} = 13.1$$

1. Show that $H_0$ is rejected at level .05.
2. Use Tukey's procedure to identify significantly different means.

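Solution. ANOVA table:

| Source of Variation | df | Sum of Squares | Mean Square | F |
|---|---|---|---|---|
| Treatments | 4 | 13.32 | 3.33 | 37.84 |
| Error | 40 | 3.53 | .088 | |
| Total | 44 | 16.85 | | |

Since $F = 37.84 > F_{.05,4,40} = 2.61$, $H_0$ is rejected at level .05.

Tukey's procedure: $Q_{.05,5,40} = 4.04$, so $w = 4.04 \sqrt{.088/9} = .4$. The ordered means are

| $\bar{x}_{5\cdot}$ | $\bar{x}_{3\cdot}$ | $\bar{x}_{2\cdot}$ | $\bar{x}_{4\cdot}$ | $\bar{x}_{1\cdot}$ |
|---|---|---|---|---|
| 13.1 | 13.3 | 13.8 | 14.3 | 14.5 |

Only two pairs differ by less than $w$: one line joins $\bar{x}_{5\cdot}$ and $\bar{x}_{3\cdot}$, and another joins $\bar{x}_{4\cdot}$ and $\bar{x}_{1\cdot}$. Brand 2 is therefore judged significantly different from every other brand, while brands 5 and 3, and likewise brands 4 and 1, are not distinguished from each other.

If $\bar{x}_{2\cdot} = 14.15$ rather than 13.8, then

| $\bar{x}_{5\cdot}$ | $\bar{x}_{3\cdot}$ | $\bar{x}_{2\cdot}$ | $\bar{x}_{4\cdot}$ | $\bar{x}_{1\cdot}$ |
|---|---|---|---|---|
| 13.1 | 13.3 | 14.15 | 14.3 | 14.5 |

and the lines join $\bar{x}_{5\cdot}$ with $\bar{x}_{3\cdot}$, and $\bar{x}_{2\cdot}$ with $\bar{x}_{4\cdot}$ and $\bar{x}_{1\cdot}$.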
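The underlining step of Tukey's procedure can be sketched as a small routine. This is an illustrative implementation, not code from the notes: `tukey_groups` is a hypothetical helper that returns the maximal runs of ordered means whose spread is below $w$ (a mean in a run by itself corresponds to a mean with no underline), and $Q_{.05,5,40} = 4.04$ is taken from the example rather than computed.

```python
from math import sqrt


def tukey_groups(means, w):
    """Return the maximal runs of ordered sample means that differ by less than w.

    `means` maps a treatment label to its sample mean. Two treatments are
    judged significantly different when no returned run contains both.
    """
    order = sorted(means, key=means.get)  # labels in increasing order of mean
    groups, last_end = [], -1
    for start in range(len(order)):
        end = start
        # Extend to the right while the mean is within w of the starting mean.
        while end + 1 < len(order) and means[order[end + 1]] - means[order[start]] < w:
            end += 1
        # Keep the run only if it reaches beyond the previous one.
        if end > last_end:
            groups.append(order[start:end + 1])
            last_end = end
    return groups


q_crit = 4.04          # Q_{.05,5,40}, table value quoted in Example 1.21
mse, n_per = 0.088, 9  # MSE from the ANOVA table and J = 9 filters per brand
w = q_crit * sqrt(mse / n_per)

means = {1: 14.5, 2: 13.8, 3: 13.3, 4: 14.3, 5: 13.1}
groups = tukey_groups(means, w)
groups_alt = tukey_groups({**means, 2: 14.15}, w)  # the "what if" variant

print(f"w = {w:.3f}")
print("original means:", groups)
print("with x2 = 14.15:", groups_alt)
```

Returning runs of labels rather than drawing lines keeps the sketch independent of any plotting or formatting library; each run of two or more labels corresponds to one underline.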

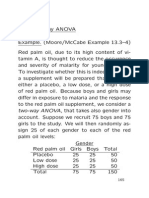

2 Two-Factor Anova

2.1 Two-Factor Anova with $K_{ij} = 1$

Notation

- When factor A consists of $I$ levels and factor B consists of $J$ levels, there are $IJ$ different combinations (pairs) of levels of the two factors, each called a treatment.
- $K_{ij}$ = the number of observations on the treatment consisting of factor A at level $i$ and factor B at level $j$.
- $X_{ij}$ = the rv denoting the measurement when factor A is held at level $i$ and factor B is held at level $j$.
- Denote the averages of data obtained when one factor is held fixed, and the grand mean, by

$$\bar{X}_{i\cdot} = \frac{1}{J} \sum_{j=1}^{J} X_{ij}, \qquad \bar{X}_{\cdot j} = \frac{1}{I} \sum_{i=1}^{I} X_{ij}, \qquad \bar{X}_{\cdot\cdot} = \frac{1}{IJ} \sum_{i=1}^{I} \sum_{j=1}^{J} X_{ij}$$

- Lowercase x's ($x_{ij}$, $\bar{x}_{i\cdot}$, $\bar{x}_{\cdot j}$, $\bar{x}_{\cdot\cdot}$) are used for observed values.
- Totals rather than averages are denoted by omitting the bar.

The Fixed Effects Model

Assume the existence of $I$ parameters $\alpha_1, \ldots, \alpha_I$ and $J$ parameters $\beta_1, \ldots, \beta_J$, such that

$$X_{ij} = \mu + \alpha_i + \beta_j + \epsilon_{ij}$$

where $\sum_{i=1}^{I} \alpha_i = 0$, $\sum_{j=1}^{J} \beta_j = 0$, and the $\epsilon_{ij} \sim N(0, \sigma^2)$ are independent.

The Interpretation of the Parameters

- $\mu$ is the true grand mean (over all levels of both factors).
- $\alpha_i$ is the effect of factor A at level $i$ (a deviation from $\mu$).
- $\beta_j$ is the effect of factor B at level $j$ (a deviation from $\mu$).
- $\mu_{ij} = \mu + \alpha_i + \beta_j$ is the mean response.

Remark 2.1

- The previous model is called an additive model.
- Given the $\mu_{ij}$, the parameters $\mu$, $\alpha_i$, $\beta_j$ are uniquely determined.
- The difference in mean responses for two levels of one of the factors is the same for all levels of the other factor:

$$\mu_{ij} - \mu_{i'j} = (\mu + \alpha_i + \beta_j) - (\mu + \alpha_{i'} + \beta_j) = \alpha_i - \alpha_{i'},$$

which is independent of the level $j$ of the second factor.

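Estimators for Parameters

Unbiased (and maximum likelihood) estimators for the parameters:

$$\hat{\mu} = \bar{X}_{\cdot\cdot}, \qquad \hat{\alpha}_i = \bar{X}_{i\cdot} - \bar{X}_{\cdot\cdot}, \qquad \hat{\beta}_j = \bar{X}_{\cdot j} - \bar{X}_{\cdot\cdot}$$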
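As a concrete illustration of these estimators, the sketch below evaluates them on the pen-and-wash data that appear in Example 2.5 (rows are brands of pen, factor A; columns are washing treatments, factor B). The variable names are invented for the illustration, and the code assumes one observation per cell.

```python
from statistics import mean

# Color-change data from Example 2.5: rows = brand of pen, columns = washing treatment.
x = [
    [0.97, 0.48, 0.48, 0.46],
    [0.77, 0.14, 0.22, 0.25],
    [0.67, 0.39, 0.57, 0.19],
]
n_a, n_b = len(x), len(x[0])  # I levels of factor A, J levels of factor B

# Estimated grand mean, factor A effects and factor B effects.
mu_hat = mean(v for row in x for v in row)
alpha_hat = [mean(row) - mu_hat for row in x]
beta_hat = [mean(x[i][j] for i in range(n_a)) - mu_hat for j in range(n_b)]

# Fitted cell means under the additive model: mu_hat + alpha_hat_i + beta_hat_j.
fitted = [[mu_hat + a + b for b in beta_hat] for a in alpha_hat]

print(f"mu_hat = {mu_hat:.4f}")
print("alpha_hat =", [round(a, 4) for a in alpha_hat])
print("beta_hat  =", [round(b, 4) for b in beta_hat])
```

The estimated effects sum to zero within each factor, mirroring the constraints on the $\alpha_i$ and $\beta_j$, and the difference between two rows of `fitted` is the same in every column, which is the additivity property of the model.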

Hypotheses

- $H_{0A}: \alpha_1 = \cdots = \alpha_I = 0$ versus $H_{1A}$: at least one $\alpha_i \ne 0$.
- $H_{0B}: \beta_1 = \cdots = \beta_J = 0$ versus $H_{1B}$: at least one $\beta_j \ne 0$.

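Sums of Squares

| Sum | df | Definition | Computing Formula |
|---|---|---|---|
| SST | $IJ - 1$ | $\sum_{i,j} (X_{ij} - \bar{X}_{\cdot\cdot})^2$ | $\sum_{i,j} X_{ij}^2 - \dfrac{X_{\cdot\cdot}^2}{IJ}$ |
| SSA | $I - 1$ | $\sum_{i,j} (\bar{X}_{i\cdot} - \bar{X}_{\cdot\cdot})^2$ | $\dfrac{1}{J} \sum_{i} X_{i\cdot}^2 - \dfrac{X_{\cdot\cdot}^2}{IJ}$ |
| SSB | $J - 1$ | $\sum_{i,j} (\bar{X}_{\cdot j} - \bar{X}_{\cdot\cdot})^2$ | $\dfrac{1}{I} \sum_{j} X_{\cdot j}^2 - \dfrac{X_{\cdot\cdot}^2}{IJ}$ |
| SSE | $(I - 1)(J - 1)$ | $\sum_{i,j} (X_{ij} - \bar{X}_{i\cdot} - \bar{X}_{\cdot j} + \bar{X}_{\cdot\cdot})^2$ | $\mathrm{SST} - \mathrm{SSA} - \mathrm{SSB}$ |

Theorem 2.2 (The Fundamental ANOVA Identity)

$$\mathrm{SST} = \mathrm{SSA} + \mathrm{SSB} + \mathrm{SSE}$$

Remark 2.3

- The expression for SSE results from replacing $\mu$, $\alpha_i$, $\beta_j$ in $\sum_{i,j} [X_{ij} - (\mu + \alpha_i + \beta_j)]^2$ by their respective estimators.
- Error df is $IJ - [1 + (I - 1) + (J - 1)] = (I - 1)(J - 1)$.
- Total variation is split into
  - SSE, a part that is not explained by either the truth or the falsity of $H_{0A}$ or $H_{0B}$;
  - SSA and SSB, two parts that can be explained by possible falsity of $H_{0A}$ and $H_{0B}$.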

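Test Procedures

ANOVA Table

| Source of Variation | df | Sum of Squares | Mean Square | F |
|---|---|---|---|---|
| Factor A | $I - 1$ | SSA | $\mathrm{MSA} = \mathrm{SSA}/(I - 1)$ | $F_A = \mathrm{MSA}/\mathrm{MSE}$ |
| Factor B | $J - 1$ | SSB | $\mathrm{MSB} = \mathrm{SSB}/(J - 1)$ | $F_B = \mathrm{MSB}/\mathrm{MSE}$ |
| Error | $(I - 1)(J - 1)$ | SSE | $\mathrm{MSE} = \mathrm{SSE}/[(I - 1)(J - 1)]$ | |
| Total | $IJ - 1$ | SST | | |

Expected Mean Squares

$$E(\mathrm{MSE}) = \sigma^2 \quad \text{(if the model is additive)}$$

$$E(\mathrm{MSA}) = \sigma^2 + \frac{J}{I - 1} \sum_{i=1}^{I} \alpha_i^2$$

$$E(\mathrm{MSB}) = \sigma^2 + \frac{I}{J - 1} \sum_{j=1}^{J} \beta_j^2$$

Remark 2.4

- When $H_{0A}$ is true, MSA is an unbiased estimator of $\sigma^2$, so $F_A$ is a ratio of two unbiased estimators of $\sigma^2$.
- When $H_{0A}$ is false, MSA tends to overestimate $\sigma^2$, so $H_{0A}$ should be rejected when the ratio $F_A$ is too large.
- Similar comments apply to MSB and $H_{0B}$.

Decision Rule

| Hypotheses | Test Statistic Value | Rejection Region |
|---|---|---|
| $H_{0A}$ versus $H_{1A}$ | $F_A = \mathrm{MSA}/\mathrm{MSE}$ | $F_A \ge F_{\alpha,\,I-1,\,(I-1)(J-1)}$ |
| $H_{0B}$ versus $H_{1B}$ | $F_B = \mathrm{MSB}/\mathrm{MSE}$ | $F_B \ge F_{\alpha,\,J-1,\,(I-1)(J-1)}$ |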

Example 2.5 Is it really as easy to remove marks on fabrics from erasable pens as the word erasable might imply? Consider the following data from an experiment to compare three different brands of pens and four different wash treatments with respect to their ability to remove marks on a particular type of fabric. The response variable is a quantitative indicator of overall specimen color change; the lower this value, the more marks were removed.

| Brand of Pen | Washing treatment 1 | 2 | 3 | 4 | Total |
|---|---|---|---|---|---|
| 1 | .97 | .48 | .48 | .46 | 2.39 |
| 2 | .77 | .14 | .22 | .25 | 1.38 |
| 3 | .67 | .39 | .57 | .19 | 1.82 |
| Total | 2.41 | 1.01 | 1.27 | .90 | 5.59 |

Is there any difference in the true average amount of color change due either to the different brands of pen or to the different washing treatments? Assume that the level of significance is .05.

Solution. First,

$$\sum_{i,j} x_{ij}^2 = 3.2987, \qquad x_{\cdot\cdot}^2/(IJ) = 5.59^2/12 = 2.6040$$

The sums of squares are then

$$\mathrm{SST} = 3.2987 - 2.6040 = .6947$$

$$\mathrm{SSA} = [2.39^2 + 1.38^2 + 1.82^2]/4 - 2.6040 = .1282$$

$$\mathrm{SSB} = [2.41^2 + 1.01^2 + 1.27^2 + .90^2]/3 - 2.6040 = .4797$$

$$\mathrm{SSE} = .6947 - (.1282 + .4797) = .0868$$

Here is the ANOVA table:

| Source of Variation | df | Sum of Squares | Mean Square | F |
|---|---|---|---|---|
| Factor A | 2 | SSA = .1282 | MSA = .0641 | $F_A = 4.43$ |
| Factor B | 3 | SSB = .4797 | MSB = .1599 | $F_B = 11.05$ |
| Error | 6 | SSE = .0868 | MSE = .01447 | |
| Total | 11 | SST = .6947 | | |

Because $F_{.05,2,6} = 5.14 > F_A = 4.43$, $H_{0A}$ cannot be rejected at significance level .05. Thus, we cannot conclude that true average color change depends on brand of pen.

Because $F_{.05,3,6} = 4.76 < F_B = 11.05$, $H_{0B}$ is rejected at significance level .05 in favor of the assertion that color change varies with washing treatment.
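The arithmetic of this example follows the computing formulas for the two-factor sums of squares and can be checked with a short script. The sketch below is illustrative: variable names are made up, one observation per cell is assumed, and the two critical values are the table values quoted in the solution rather than computed ones.

```python
# Color-change data: rows = brand of pen (factor A), columns = washing treatment (factor B).
x = [
    [0.97, 0.48, 0.48, 0.46],
    [0.77, 0.14, 0.22, 0.25],
    [0.67, 0.39, 0.57, 0.19],
]
n_a, n_b = len(x), len(x[0])  # I = 3 brands, J = 4 treatments

row_totals = [sum(row) for row in x]
col_totals = [sum(col) for col in zip(*x)]
grand_total = sum(row_totals)
cf = grand_total ** 2 / (n_a * n_b)  # correction factor

# Computing formulas for the sums of squares.
sst = sum(v * v for row in x for v in row) - cf
ssa = sum(t * t for t in row_totals) / n_b - cf
ssb = sum(t * t for t in col_totals) / n_a - cf
sse = sst - ssa - ssb

# Mean squares and F ratios.
msa = ssa / (n_a - 1)
msb = ssb / (n_b - 1)
mse = sse / ((n_a - 1) * (n_b - 1))
f_a, f_b = msa / mse, msb / mse

crit_a, crit_b = 5.14, 4.76  # F_{.05,2,6} and F_{.05,3,6}, as quoted in the solution
reject_a, reject_b = f_a >= crit_a, f_b >= crit_b

print(f"SSA = {ssa:.4f}, SSB = {ssb:.4f}, SSE = {sse:.4f}, SST = {sst:.4f}")
print(f"F_A = {f_a:.2f} (reject H0A: {reject_a}), F_B = {f_b:.2f} (reject H0B: {reject_b})")
```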
