Uploaded by sajeer

The Uniform Distribution

A continuous random variable X with probability density function

$$f(x) = \frac{1}{b-a} \quad \text{for } a \le x \le b \qquad (1)$$

(and f(x) = 0 if x is not between a and b) follows a uniform distribution with parameters a and b. We write X ~ U(a, b).

Remember that the area under the graph of the probability density function must be equal to 1 (see continuous random variables).

Expectation and Variance

If X ~ U(a, b), then:

$$E(X) = \frac{1}{2}(a + b)$$

$$\mathrm{Var}(X) = \frac{1}{12}(b - a)^2$$
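As a rough numerical check of the formulas E(X) = (a + b)/2 and Var(X) = (b - a)²/12, the sketch below draws samples from U(a, b) with Python's standard `random` module and compares the sample moments with the theoretical ones. The function names, the values a = 2 and b = 5, the seed and the sample size are illustrative assumptions, not part of the original notes.

```python
import random
import statistics

def uniform_mean(a, b):
    """Theoretical mean of U(a, b)."""
    return (a + b) / 2

def uniform_variance(a, b):
    """Theoretical variance of U(a, b)."""
    return (b - a) ** 2 / 12

def sample_moments(a, b, n=100_000, seed=0):
    """Draw n samples from U(a, b); return (sample mean, sample variance)."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    samples = [rng.uniform(a, b) for _ in range(n)]
    return statistics.fmean(samples), statistics.pvariance(samples)

if __name__ == "__main__":
    a, b = 2.0, 5.0  # illustrative parameters
    mean, var = sample_moments(a, b)
    print("sample mean:", mean, "theory:", uniform_mean(a, b))
    print("sample variance:", var, "theory:", uniform_variance(a, b))
```

The sample values should agree with the theoretical ones up to Monte Carlo noise, which shrinks as the sample size grows.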

Proof of Expectation

$$E(X) = \int_a^b \frac{x}{b-a}\,dx = \left[\frac{x^2}{2(b-a)}\right]_a^b = \frac{b^2 - a^2}{2(b-a)} = \frac{b + a}{2}$$

Cumulative Distribution Function

The cumulative distribution function can be found by integrating the p.d.f. between a and t:

$$F(t) = \int_a^t \frac{1}{b-a}\,dx = \frac{t-a}{b-a}, \qquad a \le t \le b$$

with F(t) = 0 for t < a and F(t) = 1 for t > b.
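The integral of the density from a to t can be checked numerically. The following sketch, with hypothetical helper names and an illustrative choice of a = 2 and b = 6, compares a midpoint-rule integral of the density with the closed form (t - a)/(b - a).

```python
def uniform_pdf(x, a, b):
    """Density of U(a, b): 1/(b - a) on [a, b], zero elsewhere."""
    return 1.0 / (b - a) if a <= x <= b else 0.0

def uniform_cdf(t, a, b):
    """Closed-form cdf of U(a, b)."""
    if t <= a:
        return 0.0
    if t >= b:
        return 1.0
    return (t - a) / (b - a)

def integrate_pdf(t, a, b, steps=10_000):
    """Midpoint-rule approximation of the integral of the pdf over [a, t]."""
    width = (t - a) / steps
    total = sum(uniform_pdf(a + (i + 0.5) * width, a, b) for i in range(steps))
    return total * width

if __name__ == "__main__":
    a, b = 2.0, 6.0  # illustrative parameters
    for t in (2.0, 3.0, 4.5, 6.0):
        print(t, integrate_pdf(t, a, b), uniform_cdf(t, a, b))
```

Integrating all the way to t = b gives the total area under the density, which should come out as 1.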

Methods for generating random numbers from the uniform distribution:

First: Inverse Transform method

Suppose we can generate a uniform random number r on [0, 1]. How can we generate numbers x with a given pdf P(x)?

To warm up, let's first think about something else. Suppose we generate a uniform random number 0 < r < 1 and square it, so that $x = r^2$. Clearly we also have 0 < x < 1. What is the pdf P(x) for x? A wild guess might be that it is just the square of the pdf for r, so x would also be uniform. It is, however, easy to see that this can't be true.

Consider the probability p that x < 1/2. If x were uniform on [0, 1] we would get p = 1/2. In order to get an x < 1/2 we must have generated an $r < \sqrt{1/2}$. The probability for this is $p = \sqrt{1/2} \approx 0.707$, not p = 1/2. So P(x) can't be uniform.
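The claim that squaring a uniform number does not give a uniform number is easy to test by simulation. This minimal sketch (the function name, seed and sample size are assumptions chosen for illustration) estimates the probability that r² falls below 1/2 and prints it next to the value √(1/2) derived above.

```python
import math
import random

def prob_square_below(threshold, n=200_000, seed=1):
    """Estimate P(r**2 < threshold) for r uniform on [0, 1)."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n) if rng.random() ** 2 < threshold)
    return hits / n

if __name__ == "__main__":
    estimate = prob_square_below(0.5)
    print("estimated P(x < 1/2):", estimate)
    print("sqrt(1/2):           ", math.sqrt(0.5))
```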

To figure out what P(x) is, consider the probability p that x falls in the interval $[x, x + \Delta x]$. In the limit $\Delta x \to 0$ we have, according to the definition of a pdf, $p = P(x)\,\Delta x$. So if we can figure out p, we can compute P(x).

For x to fall in $[x, x + \Delta x]$, we must generate r in the range $[\sqrt{x}, \sqrt{x + \Delta x}]$. Since r is uniform, the probability for this (which is also p) is just the length of this interval. So we get

$$p = \sqrt{x + \Delta x} - \sqrt{x}$$

and we now use $p = P(x)\,\Delta x$ and solve for P(x), obtaining

$$P(x) = \frac{\sqrt{x + \Delta x} - \sqrt{x}}{\Delta x}$$

Taking the limit $\Delta x \to 0$ we thus obtain

$$P(x) = \lim_{\Delta x \to 0} \frac{\sqrt{x + \Delta x} - \sqrt{x}}{\Delta x} = \frac{d}{dx}\sqrt{x} = \frac{1}{2\sqrt{x}}$$

With this result, we now have an instance of the inverse transform method: to generate random numbers with pdf $1/(2\sqrt{x})$, generate a uniform random number r and square it.
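To see the density 1/(2√x) emerge from squared uniform numbers, one can count how many samples land in a narrow bin and divide by the bin width. The sketch below does this for two bins; the helper names, bin edges, seed and sample size are illustrative assumptions.

```python
import math
import random

def target_density(x):
    """The pdf derived above for x = r**2: 1 / (2 * sqrt(x))."""
    return 1.0 / (2.0 * math.sqrt(x))

def empirical_density(lo, hi, n=200_000, seed=2):
    """Fraction of samples r**2 landing in [lo, hi), divided by the bin width."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n) if lo <= rng.random() ** 2 < hi)
    return hits / (n * (hi - lo))

if __name__ == "__main__":
    for lo, hi in ((0.25, 0.30), (0.60, 0.70)):
        mid = 0.5 * (lo + hi)
        print(f"bin [{lo}, {hi}): empirical {empirical_density(lo, hi):.4f}, "
              f"1/(2*sqrt(x)) at midpoint {target_density(mid):.4f}")
```

The comparison uses the density at the bin midpoint, so a small discrepancy from the bin's finite width is expected on top of the sampling noise.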

The general form of the inverse transform method is obtained by computing the pdf of x = g(r) for some function g, and then trying to find a function g such that the desired pdf is obtained.

Let us assume that g(r) is invertible (and increasing) with inverse $g^{-1}(x)$. The chance that x lies in the interval $[x, x + dx]$ is P(x)dx, for infinitesimal dx. What values of r should we have gotten to get this? (Remember we are generating values x by calling a uniform random number generator to get r and then setting x = g(r).) We should have gotten an r in the interval $[r, r + dr]$, with $r = g^{-1}(x)$ and $r + dr = g^{-1}(x + dx)$. Expanding the last formula to first order in dx gives

$$r + dr = g^{-1}(x) + (g^{-1})'(x)\,dx,$$

where ' denotes the derivative. Using $r = g^{-1}(x)$ we can simplify this to

$$dr = (g^{-1})'(x)\,dx.$$

The probability for the r value to be in the interval $[r, r + dr]$ is just dr. This is also the probability for x to be in $[x, x + dx]$, which is P(x)dx. Using the expression for dr above, we get

$$P(x)\,dx = (g^{-1})'(x)\,dx,$$

thus

$$P(x) = (g^{-1})'(x).$$

Integrating both sides and remembering that $F(x) = \int_{-\infty}^{x} P(y)\,dy$ gives us

$$F(x) = g^{-1}(x),$$

or

$$g(r) = F^{-1}(r),$$

provided F(x) has an inverse.

In summary, to generate a number x with pdf P(x) using the inverse transform method, we first figure out the cdf F(x) from P(x). We then invert that by solving r = F(x) for x, which gives the function $F^{-1}(r)$. We then generate a uniform random number 0 < r < 1 and compute $x = F^{-1}(r)$.
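The recipe in this summary can be sketched in a few lines of Python. The helper names below are hypothetical. The two inverse cdfs are worked out by hand: for U(a, b), solving r = (x - a)/(b - a) gives x = a + (b - a)r, and for the exponential distribution with rate λ (an example not taken from the notes above), solving r = 1 - exp(-λx) gives x = -ln(1 - r)/λ.

```python
import math
import random

def inverse_transform_sample(inverse_cdf, rng):
    """Draw r uniform on [0, 1) and return x = F^{-1}(r)."""
    r = rng.random()
    return inverse_cdf(r)

def uniform_inverse_cdf(a, b):
    """Inverse cdf of U(a, b): F^{-1}(r) = a + (b - a) * r."""
    return lambda r: a + (b - a) * r

def exponential_inverse_cdf(rate):
    """Inverse cdf of the exponential distribution (illustrative example):
    F(x) = 1 - exp(-rate * x), so F^{-1}(r) = -ln(1 - r) / rate."""
    return lambda r: -math.log(1.0 - r) / rate

if __name__ == "__main__":
    rng = random.Random(3)
    n = 50_000
    xs = [inverse_transform_sample(exponential_inverse_cdf(2.0), rng) for _ in range(n)]
    print("exponential sample mean:", sum(xs) / n, "theory:", 1 / 2.0)
    us = [inverse_transform_sample(uniform_inverse_cdf(2.0, 5.0), rng) for _ in range(n)]
    print("uniform sample mean:", sum(us) / n, "theory:", 3.5)
```

Passing the inverse cdf in as a function keeps the sampler itself independent of the target distribution, so only the inversion step has to be redone for each new pdf.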

Second: Acceptance and rejection method

Let u and v be two independent random numbers with uniform distribution in the intervals $0 < u \le 1$ and $-1 \le v \le 1$. Do the transformation $x = sv/u + a$, $y = u^2$. The rectangle in the (u, v) plane is transformed into a hat function y = h(x) in the (x, y) plane. All (x, y) points will fall under the hat curve y = h(x), which is uniform in the center and falls like $x^{-2}$ in the tails. h(x) is a useful hat function for the rejection method. The acceptance condition is y < f(x)/k. s and a are chosen so that $f(x) \le k\,h(x)$ for all x, where

$$h(x) = \begin{cases} 1 & \text{for } a - s \le x \le a + s \\ \dfrac{s^2}{(x-a)^2} & \text{elsewhere} \end{cases}$$

The advantage of this method is that the calculations are simple and fast, and the rejection rate is reasonable. Quick acceptance and quick rejection areas can be applied. For discrete distributions, f(x) is replaced by $f(\lfloor x \rfloor)$.
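The transformation and acceptance test can be sketched as follows, with x = sv/u + a, y = u² and acceptance when y < f(x)/k. As an illustration only, the target here is the unnormalised standard normal density f(x) = exp(-x²/2), which is not one of the distributions discussed in the text. For this f one can take k = f(0) = 1, a = 0 and s = √(2/e), because x²·exp(-x²/2) never exceeds 2/e. The function names are hypothetical.

```python
import math
import random

def hat(x, a, s):
    """Hat function h(x): 1 in the centre, s^2 / (x - a)^2 in the tails."""
    if a - s <= x <= a + s:
        return 1.0
    return s * s / ((x - a) ** 2)

def hat_rejection_sample(f, k, a, s, rng):
    """Draw one x with density proportional to f, assuming f(x) <= k * h(x)."""
    while True:
        u = 1.0 - rng.random()        # uniform on (0, 1], avoids division by zero
        v = 2.0 * rng.random() - 1.0  # uniform on [-1, 1)
        x = s * v / u + a
        y = u * u
        if y < f(x) / k:              # acceptance condition
            return x

def unnormalised_normal(x):
    """Illustrative target: exp(-x^2 / 2), maximum value 1 at x = 0."""
    return math.exp(-0.5 * x * x)

NORMAL_S = math.sqrt(2.0 / math.e)    # smallest s with f(x) <= h(x) for this f

if __name__ == "__main__":
    rng = random.Random(4)
    xs = [hat_rejection_sample(unnormalised_normal, 1.0, 0.0, NORMAL_S, rng)
          for _ in range(20_000)]
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)
    print("sample mean:", mean, "sample variance:", var)
```

Only the ratio f(x)/k enters the acceptance test, so f does not need to be normalised.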

The following values are used for the hat parameters for the Poisson, binomial and hypergeometric distributions:

$$a = \mu + \frac{1}{2}, \qquad s = \sqrt{\frac{2}{e}\left(\sigma^2 + \frac{1}{2}\right)} + \frac{3}{2} - \sqrt{\frac{3}{e}}$$

where $\mu$ is the mean and $\sigma^2$ is the variance of the distribution (Ahrens & Dieter 1989). These values are reasonably close to the optimal values. It is possible to calculate the optimal values for a and s (Stadlober 1989, 1990), but this adds to the set-up time with only a marginal improvement in execution time. The optimal value of k is of course the maximum of f(x): k = f(M), where M is the mode.

This method is used for the Poisson, binomial and hypergeometric distributions in StochasticLib.
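As a hedged illustration of how these hat parameters might be used for a discrete distribution, the sketch below samples a Poisson variate with a = μ + 1/2, s = √((2/e)(σ² + 1/2)) + 3/2 - √(3/e) and k = f(M), taking σ² = μ and M = ⌊μ⌋ for the Poisson case. It is not the StochasticLib code: the function names are hypothetical, the quick acceptance and rejection steps are omitted, and it has only been thought through for moderately large means such as μ = 30.

```python
import math
import random

def poisson_log_pmf(n, mu):
    """Natural log of the Poisson probability f(n) = mu^n * exp(-mu) / n!."""
    return n * math.log(mu) - mu - math.lgamma(n + 1)

def poisson_hat_rejection(mu, rng):
    """Draw one Poisson(mu) variate by rejection under the hat function."""
    a = mu + 0.5                                   # hat centre
    s = (math.sqrt((2.0 / math.e) * (mu + 0.5))    # hat half-width, with
         + 1.5 - math.sqrt(3.0 / math.e))          # sigma^2 = mu for Poisson
    mode = math.floor(mu)
    log_k = poisson_log_pmf(mode, mu)              # k = f(M), M = mode
    while True:
        u = 1.0 - rng.random()                     # uniform on (0, 1]
        v = 2.0 * rng.random() - 1.0               # uniform on [-1, 1)
        x = s * v / u + a
        if x < 0.0:
            continue                               # f is zero for negative x
        n = math.floor(x)                          # f(x) replaced by f(floor(x))
        # Accept when y = u^2 < f(n) / k, compared in the log domain.
        if 2.0 * math.log(u) < poisson_log_pmf(n, mu) - log_k:
            return n

if __name__ == "__main__":
    rng = random.Random(6)
    mu = 30.0  # illustrative mean
    xs = [poisson_hat_rejection(mu, rng) for _ in range(20_000)]
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)
    print("sample mean:", mean, "sample variance:", var, "theory:", mu)
```

Working with logarithms avoids overflow in n! and underflow in the pmf far out in the tails.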

Third: Convolution method

Convolution of Uniform Distributions

Consider a sum X of independent and uniformly distributed random variables $X_i \sim U[a_i, b_i]$, $i = 1, \dots, n$:

$$X = X_1 + \dots + X_n$$

Then the following is true. The sum X is symmetrically distributed around

$$\frac{(a_1 + \dots + a_n) + (b_1 + \dots + b_n)}{2}$$

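If

$$\sum_{i=1}^{n} (b_i - a_i)^2 \to \infty \quad \text{for } n \to \infty \qquad (6)$$

then by the central limit theorem the distribution of

$$\frac{X - \sum_{i=1}^{n} E[X_i]}{\sqrt{\sum_{i=1}^{n} V[X_i]}}$$

tends for $n \to \infty$ to the standard normal distribution.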

Hence in case (6) and for sufficiently large n the distribution of X can be approximated by the normal distribution $N\left(\sum_{i=1}^{n} E[X_i], \sum_{i=1}^{n} V[X_i]\right)$. However, it turns out that in spite of the symmetry relation, convergence to the normal distribution may be rather slow if the lengths $b_i - a_i$ are very different, implying that the normal approximation might be bad even for large values of n. Therefore, the exact distribution of X would be desirable.
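A small simulation can illustrate how the quality of the normal approximation depends on the interval lengths. The sketch below (helper names, intervals, seed and sample size are illustrative assumptions) estimates the probability that the sum lies within one standard deviation of its mean. A normal distribution gives about 0.683, while a single uniform variable gives 1/√3 ≈ 0.577.

```python
import math
import random

def sum_moments(intervals):
    """Mean and variance of a sum of independent U[a_i, b_i] variables."""
    mean = sum((a + b) / 2 for a, b in intervals)
    var = sum((b - a) ** 2 / 12 for a, b in intervals)
    return mean, var

def fraction_within_one_sd(intervals, n=50_000, seed=5):
    """Monte Carlo estimate of P(|X - E[X]| <= sd) for the sum X."""
    rng = random.Random(seed)
    mean, var = sum_moments(intervals)
    sd = math.sqrt(var)
    hits = 0
    for _ in range(n):
        x = sum(rng.uniform(a, b) for a, b in intervals)
        if abs(x - mean) <= sd:
            hits += 1
    return hits / n

# Value of the same probability for an exactly normal distribution.
NORMAL_WITHIN_ONE_SD = math.erf(1 / math.sqrt(2))

if __name__ == "__main__":
    equal = [(0.0, 1.0)] * 21                        # 21 identical intervals
    unequal = [(0.0, 10.0)] + [(0.0, 0.1)] * 20      # one long, twenty short
    print("normal value:     ", NORMAL_WITHIN_ONE_SD)
    print("equal lengths:    ", fraction_within_one_sd(equal))
    print("unequal lengths:  ", fraction_within_one_sd(unequal))
```

With one dominant interval the sum behaves almost like a single uniform variable, so n = 21 terms are not enough for the normal approximation to be good in this example.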

Convolution of Identical Uniform Distributions

Historically, the case of independent and identically uniformly distributed $X_i$ played an important role, i.e.

$$X = X_1 + \dots + X_n \quad \text{with } X_i \sim U[a, b],\ i = 1, \dots, n \qquad (8)$$

The distribution of X was first studied by N. I. Lobatchewski in 1842. He wanted to use it to evaluate the error of astronomical measurements, in order to decide whether the Euclidean or the non-Euclidean geometry is valid in the universe.

The density function of X is

$$f_n(x) = \begin{cases} \dfrac{1}{(n-1)!\,(b-a)^n} \displaystyle\sum_{i=0}^{\tilde{n}(n,x)} (-1)^i \binom{n}{i} \left(x - na - i(b-a)\right)^{n-1} & \text{if } na \le x \le nb \\ 0 & \text{otherwise} \end{cases}$$

with $\tilde{n}(n, x) := \left[\dfrac{x - na}{b - a}\right]$, the largest integer less than $\dfrac{x - na}{b - a}$.

It is well known that the speed of convergence to the normal distribution is extremely fast in the identically distributed case given by (8). Already for n = 4 the difference between the normal approximation and the exact distribution is often negligible.
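The exact density of a sum of n identical U[a, b] variables can be evaluated directly and set against the normal density with the same mean n(a + b)/2 and variance n(b - a)²/12. The sketch below uses hypothetical function names and assumes n ≥ 2. It takes the floor of (x - na)/(b - a) as the upper summation limit, which gives the same value as the "largest integer less than" convention because the extra term is zero.

```python
import math

def identical_uniform_sum_pdf(x, n, a=0.0, b=1.0):
    """Exact density of X_1 + ... + X_n with X_i ~ U[a, b], for n >= 2."""
    if n < 2:
        raise ValueError("this sketch assumes n >= 2")
    if not (n * a <= x <= n * b):
        return 0.0
    width = b - a
    upper = min(math.floor((x - n * a) / width), n)  # upper summation limit
    total = 0.0
    for i in range(upper + 1):
        total += (-1) ** i * math.comb(n, i) * (x - n * a - i * width) ** (n - 1)
    return total / (math.factorial(n - 1) * width ** n)

def normal_pdf(x, mean, var):
    """Density of the normal distribution N(mean, var)."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def max_abs_difference(n, a=0.0, b=1.0, steps=1000):
    """Largest gap between exact and normal densities on a grid over [na, nb]."""
    mean = n * (a + b) / 2
    var = n * (b - a) ** 2 / 12
    worst = 0.0
    for j in range(steps + 1):
        x = n * a + j * n * (b - a) / steps
        gap = abs(identical_uniform_sum_pdf(x, n, a, b) - normal_pdf(x, mean, var))
        worst = max(worst, gap)
    return worst

if __name__ == "__main__":
    for n in (2, 4, 8):
        print("n =", n, "max |exact - normal| =", max_abs_difference(n))
```

For n = 2 the exact density is the familiar triangle, which makes a convenient sanity check of the formula.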

If the single distributions are not identical, but have a common length of their support, $b_i - a_i = b - a$ for $i = 1, \dots, n$, then it is possible to reduce the problem of deriving the distribution of X to the identically distributed case by suitable transformations. However, allowing arbitrary uniform distributions requires a different approach for determining the distribution function of X.

Convolution of Arbitrary Uniform Distributions

The convolution of arbitrary uniform distributions can be obtained by a result given in [1], which refers to the distribution function of a linear combination of independent U[0, 1]-distributed random variables. However, the given formula is rather unsuited for practical applications and, therefore, a different representation of the distribution function is derived here.

You might also like

- Chapter 4Document36 pagesChapter 4Sumedh KakdeNo ratings yet

- 5 Continuous Random VariablesDocument11 pages5 Continuous Random VariablesAaron LeãoNo ratings yet

- HMWK 4Document5 pagesHMWK 4Jasmine NguyenNo ratings yet

- 1 Linearisation & DifferentialsDocument6 pages1 Linearisation & DifferentialsmrtfkhangNo ratings yet

- Probability Distributions: Discrete and Continuous Univariate Probability Distributions. Let S Be A Sample Space With A ProbDocument7 pagesProbability Distributions: Discrete and Continuous Univariate Probability Distributions. Let S Be A Sample Space With A ProbmustafaNo ratings yet

- Maximum Entropy Models and Minimum Relative Entropy PrinciplesDocument20 pagesMaximum Entropy Models and Minimum Relative Entropy PrinciplesMatthew HagenNo ratings yet

- Lecture Notes Week 1Document10 pagesLecture Notes Week 1tarik BenseddikNo ratings yet

- Class6 Prep ADocument7 pagesClass6 Prep AMariaTintashNo ratings yet

- Differential Entropy ExplainedDocument29 pagesDifferential Entropy ExplainedGaneshkumarmuthurajNo ratings yet

- 104 Inverse TrigsdfsdDocument6 pages104 Inverse TrigsdfsdJinnat AdamNo ratings yet

- Review SolDocument8 pagesReview SolAnuj YadavNo ratings yet

- KorovkinDocument10 pagesKorovkinMurali KNo ratings yet

- Chapter 2: Continuous Probability DistributionsDocument4 pagesChapter 2: Continuous Probability DistributionsRUHDRANo ratings yet

- Schoner TDocument12 pagesSchoner TEpic WinNo ratings yet

- Extending Continuous Real-Valued Functions on Closed SubsetsDocument3 pagesExtending Continuous Real-Valued Functions on Closed Subsetsdavid burrellNo ratings yet

- TMP 5 E24Document6 pagesTMP 5 E24FrontiersNo ratings yet

- Lecture 09Document15 pagesLecture 09nandish mehtaNo ratings yet

- A. Approximations: 1. The Linear Approximation LinearizationsDocument7 pagesA. Approximations: 1. The Linear Approximation LinearizationsbesillysillyNo ratings yet

- Siggraph03Document24 pagesSiggraph03Thiago NobreNo ratings yet

- EEC 126 Discussion 4 SolutionsDocument4 pagesEEC 126 Discussion 4 SolutionsHoward100% (1)

- Diet of Random VariablesDocument8 pagesDiet of Random VariablesvirbyteNo ratings yet

- Stationary Points Minima and Maxima Gradient MethodDocument8 pagesStationary Points Minima and Maxima Gradient MethodmanojituuuNo ratings yet

- Approximating Continuous FunctionsDocument19 pagesApproximating Continuous Functionsap021No ratings yet

- On the difference π (x) − li (x) : Christine LeeDocument41 pagesOn the difference π (x) − li (x) : Christine LeeKhokon GayenNo ratings yet

- Propagation of Error or Uncertainty: Marcel Oliver December 4, 2015Document8 pagesPropagation of Error or Uncertainty: Marcel Oliver December 4, 20154r73m154No ratings yet

- Practice Quiz 2 SolutionsDocument4 pagesPractice Quiz 2 SolutionsGeorge TreacyNo ratings yet

- Uniform continuity of the sinc functionDocument8 pagesUniform continuity of the sinc functionOmaar Mustaine RattleheadNo ratings yet

- Construction MaxmonotoneDocument19 pagesConstruction MaxmonotoneOmaguNo ratings yet

- Solution Set 2Document10 pagesSolution Set 2TomicaTomicatomicaNo ratings yet

- Practice Final Exam Solutions: 2 SN CF N N N N 2 N N NDocument7 pagesPractice Final Exam Solutions: 2 SN CF N N N N 2 N N NGilberth Barrera OrtegaNo ratings yet

- Basis ApproachesDocument9 pagesBasis ApproachesAgustin FloresNo ratings yet

- Chapter 2Document25 pagesChapter 2McNemarNo ratings yet

- Continuous RvsDocument34 pagesContinuous RvsArchiev KumarNo ratings yet

- Lesson 2: Probability Density Functions (Of Continuous Random Variables)Document7 pagesLesson 2: Probability Density Functions (Of Continuous Random Variables)Isabella MondragonNo ratings yet

- Contraction Mapping Theorem - General SenseDocument19 pagesContraction Mapping Theorem - General SenseNishanthNo ratings yet

- Christian Remling: N N N J J P 1/pDocument11 pagesChristian Remling: N N N J J P 1/pJuan David TorresNo ratings yet

- Lecture 6. Order Statistics: 6.1 The Multinomial FormulaDocument19 pagesLecture 6. Order Statistics: 6.1 The Multinomial FormulaLya Ayu PramestiNo ratings yet

- Multivariate DistributionsDocument8 pagesMultivariate DistributionsArima AckermanNo ratings yet

- Part 2 - Probability Distribution FunctionsDocument31 pagesPart 2 - Probability Distribution FunctionsSabrinaFuschettoNo ratings yet

- Chapter 5E-CRV - W0 PDFDocument31 pagesChapter 5E-CRV - W0 PDFaltwirqiNo ratings yet

- Important InequalitiesDocument7 pagesImportant InequalitiesBCIV101MOHAMMADIRFAN YATTOONo ratings yet

- CH 6Document54 pagesCH 6eduardoguidoNo ratings yet

- Fall 2009 Final SolutionDocument8 pagesFall 2009 Final SolutionAndrew ZellerNo ratings yet

- Stable Manifold TheoremDocument7 pagesStable Manifold TheoremRicardo Miranda MartinsNo ratings yet

- Probability Density FunctionsDocument8 pagesProbability Density FunctionsThilini NadeeshaNo ratings yet

- Indicator functions and expected values explainedDocument10 pagesIndicator functions and expected values explainedPatricio Antonio VegaNo ratings yet

- DifferentiationDocument9 pagesDifferentiationsalviano81No ratings yet

- Handout2 BasicsOf Random VariablesDocument3 pagesHandout2 BasicsOf Random Variablesozo1996No ratings yet

- Metric Spaces1Document33 pagesMetric Spaces1zongdaNo ratings yet

- Handout For Chapters 1-3 of Bouchaud: 1 DenitionsDocument10 pagesHandout For Chapters 1-3 of Bouchaud: 1 DenitionsStefano DucaNo ratings yet

- Probability Distributions: 4.1. Some Special Discrete Random Variables 4.1.1. The Bernoulli and Binomial Random VariablesDocument12 pagesProbability Distributions: 4.1. Some Special Discrete Random Variables 4.1.1. The Bernoulli and Binomial Random VariablesYasso ArbidoNo ratings yet

- Lecture11 (Week 12) UpdatedDocument34 pagesLecture11 (Week 12) UpdatedBrian LiNo ratings yet

- Handout 03 Continuous Random VariablesDocument18 pagesHandout 03 Continuous Random Variablesmuhammad ali100% (1)

- 2.1 Probability Spaces: I I I IDocument10 pages2.1 Probability Spaces: I I I INCT DreamNo ratings yet

- Numerical AnalysisDocument12 pagesNumerical AnalysiskartikeyNo ratings yet

- Mathematical FinanceDocument13 pagesMathematical FinanceAlpsNo ratings yet

- Analysis Distribution TH LecturesDocument79 pagesAnalysis Distribution TH Lecturespublicacc71No ratings yet

- A-level Maths Revision: Cheeky Revision ShortcutsFrom EverandA-level Maths Revision: Cheeky Revision ShortcutsRating: 3.5 out of 5 stars3.5/5 (8)

- Green's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)From EverandGreen's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)No ratings yet

- ATOM Install Notes ReadmeDocument2 pagesATOM Install Notes ReadmeRahulNo ratings yet

- Quick Start Guide for SanDisk SecureAccessDocument21 pagesQuick Start Guide for SanDisk SecureAccessbijan034567No ratings yet

- ThashahudDocument1 pageThashahudsajeerNo ratings yet

- Red Hat Enterprise Linux-7-Virtualization Security Guide-En-USDocument18 pagesRed Hat Enterprise Linux-7-Virtualization Security Guide-En-USsajeerNo ratings yet

- Perf Monitoring of The Apache Web ServerDocument77 pagesPerf Monitoring of The Apache Web ServersajeerNo ratings yet

- RHEL7 - Red Hat Enterprise Linux Technology Capabilities and LimitsDocument2 pagesRHEL7 - Red Hat Enterprise Linux Technology Capabilities and LimitssajeerNo ratings yet

- RHEL7 - 2.2.making Installation USB MediaDocument3 pagesRHEL7 - 2.2.making Installation USB MediasajeerNo ratings yet

- Red Hat Enterprise Linux-7-Power Management Guide-En-USDocument44 pagesRed Hat Enterprise Linux-7-Power Management Guide-En-USsajeerNo ratings yet

- Red Hat Enterprise Linux-7-7.0 Release Notes-ru-RUDocument60 pagesRed Hat Enterprise Linux-7-7.0 Release Notes-ru-RUsajeerNo ratings yet

- Managing Veritas Cluster From Command Line For OnlinesbiDocument6 pagesManaging Veritas Cluster From Command Line For OnlinesbisajeerNo ratings yet

- Practical Apache Web Server Administration with Jon PeckDocument2 pagesPractical Apache Web Server Administration with Jon PecksajeerNo ratings yet

- Perf Monitoring of The Apache Web ServerDocument77 pagesPerf Monitoring of The Apache Web ServersajeerNo ratings yet

- Kannur University: The Following Grades Are Awarded To Sri/Smt - KRISHNA KISHORE K.B at The Bachelor ofDocument1 pageKannur University: The Following Grades Are Awarded To Sri/Smt - KRISHNA KISHORE K.B at The Bachelor ofsajeerNo ratings yet

- AnsiDocument9 pagesAnsisajeerNo ratings yet

- MovelvfromvgtovgDocument3 pagesMovelvfromvgtovgsajeerNo ratings yet

- C++ and DatastructerDocument2 pagesC++ and DatastructersajeerNo ratings yet

- Normal Distribution and Probability: Mate14 - Design and Analysis of Experiments in Materials EngineeringDocument23 pagesNormal Distribution and Probability: Mate14 - Design and Analysis of Experiments in Materials EngineeringsajeerNo ratings yet

- Java FrameDocument2 pagesJava FramesajeerNo ratings yet

- Java Lab ManualDocument51 pagesJava Lab ManualsajeerNo ratings yet

- Calculatro in JavaDocument2 pagesCalculatro in JavasajeerNo ratings yet

- Significance of Searching Class PathDocument12 pagesSignificance of Searching Class PathsajeerNo ratings yet

- Kannur University Revised Exam TimetablesDocument8 pagesKannur University Revised Exam TimetablessajeerNo ratings yet

- Basic Statistics For LmsDocument23 pagesBasic Statistics For Lmshaffa0% (1)

- Third Quarter Exam in Stats and ProbabilityDocument3 pagesThird Quarter Exam in Stats and ProbabilityPing Homigop JamioNo ratings yet

- Probability and Statistics Symbols GuideDocument3 pagesProbability and Statistics Symbols GuideChindieyciiEy Lebaiey CwekZrdoghNo ratings yet

- Jasmine C. Santos DEEE Office, 9818500 loc 3300 jasmine.santos@eee.upd.edu.phDocument4 pagesJasmine C. Santos DEEE Office, 9818500 loc 3300 jasmine.santos@eee.upd.edu.phEmman Joshua BustoNo ratings yet

- Probability Via ExpectationDocument6 pagesProbability Via ExpectationMichael YuNo ratings yet

- stat-36700 homework 4 solutionsDocument14 pagesstat-36700 homework 4 solutionsDRizky Aziz SyaifudinNo ratings yet

- Niki Q4 S1 Exam Full MarksDocument2 pagesNiki Q4 S1 Exam Full MarksNazmun AliNo ratings yet

- Topic 03Document46 pagesTopic 03Solanum tuberosumNo ratings yet

- Billingsley CLTDocument5 pagesBillingsley CLTjiyaoNo ratings yet

- Module 3 - Discrete Probability DistributionsDocument38 pagesModule 3 - Discrete Probability DistributionsKhiel YumulNo ratings yet

- 4 Continuous Probability Distribution.9188.1578362393.1974Document47 pages4 Continuous Probability Distribution.9188.1578362393.1974Pun AditepNo ratings yet

- Chapter 4 Expected ValuesDocument13 pagesChapter 4 Expected ValuesJoneMark Cubelo RuayanaNo ratings yet

- 410Hw02 PDFDocument3 pages410Hw02 PDFdNo ratings yet

- Random Variable and Joint Probability DistributionsDocument33 pagesRandom Variable and Joint Probability DistributionsAndrew TanNo ratings yet

- An Introduction To Stochastic CalculusDocument102 pagesAn Introduction To Stochastic Calculusfani100% (1)

- Probability L2 PTDocument14 pagesProbability L2 PTpreticool_2003No ratings yet

- Exercise # 1: Statistics and ProbabilityDocument6 pagesExercise # 1: Statistics and ProbabilityAszyla ArzaNo ratings yet

- BS Unit 2Document24 pagesBS Unit 2Sherona ReidNo ratings yet

- Minimum Variance Portfolio WeightsDocument4 pagesMinimum Variance Portfolio WeightsDaniel Dunlap100% (1)

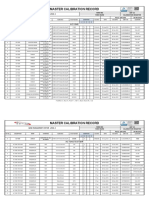

- Calibration Equipment ListDocument25 pagesCalibration Equipment ListArun SidharthNo ratings yet

- MGFDocument3 pagesMGFJuhi Taqwa Famala IINo ratings yet

- Discrete Distributions: Dr. Hanan HammouriDocument26 pagesDiscrete Distributions: Dr. Hanan HammouriAmal Al-AbedNo ratings yet

- Durbin Watson TablesDocument1 pageDurbin Watson TablesSUKEERTINo ratings yet

- Tutorial Letter 103/3/2013: Distribution Theory IDocument44 pagesTutorial Letter 103/3/2013: Distribution Theory Isal27adamNo ratings yet

- Types of Data and TabulationDocument2 pagesTypes of Data and TabulationKeshav karkiNo ratings yet

- A Basket Contains 10 Ripe Bananas and 4 Unripe BanDocument4 pagesA Basket Contains 10 Ripe Bananas and 4 Unripe BanAlexis Nicole Madahan100% (1)

- ECON4150 - Introductory Econometrics Lecture 1: Introduction and Review of StatisticsDocument41 pagesECON4150 - Introductory Econometrics Lecture 1: Introduction and Review of StatisticsMwimbaNo ratings yet

- Basic Probability ReviewDocument77 pagesBasic Probability Reviewcoolapple123No ratings yet

- Ramacivil India Construction Private LimitedDocument3 pagesRamacivil India Construction Private LimitedHimanshu ChaudharyNo ratings yet

- Measure and IntegralDocument5 pagesMeasure and Integralkbains7No ratings yet