The Economics of Censorship Resistance


George Danezis and Ross Anderson

University of Cambridge, Computer Laboratory, 15 JJ Thomson Avenue, Cambridge CB3 0FD, United Kingdom. (George.Danezis, Ross.Anderson)@cl.cam.ac.uk

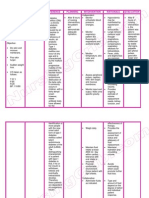

Abstract. We propose the first economic model of censorship resistance. Early peer-to-peer systems, such as the Eternity Service, sought to achieve censorship resistance by distributing content randomly over the whole Internet. An alternative approach is to encourage nodes to serve resources they are interested in. Both architectures have been implemented, but so far there has been no quantitative analysis of the protection they provide. We develop a model inspired by economics and conflict theory to analyse these systems. Under our assumptions, resource distribution according to nodes' individual preferences provides better stability and resistance to censorship. Our results may have wider application too.

Introduction

Peer-to-peer designs have evolved in part as a response to technical censorship of early remailer systems such as penet [13], and early file distribution systems such as Napster [5]. By distributing functionality across all network nodes, we can avoid an obvious single point of failure where a simple attack could incapacitate the network. Although maintaining the availability of the files and censorship resistance is the raison d'être of such systems, only heuristic security arguments have been presented so far to assess how well they fulfil their role. Other network design issues have often overshadowed this basic security goal.

Two main paradigms have emerged in the last few years in peer-to-peer systems. The first is to scatter resources randomly across all nodes, hoping that this will increase the opponent's censorship costs (we will call this the random model). The theory is that censorship would inconvenience everyone in the network, including nodes that are not interested in the censored material, and more support would be gathered against censorship. Anderson's Eternity Service [2, 4] follows this strategy, followed by Freenet [6] and Mojo Nation [25]. Structured peer-to-peer systems, including distributed hash table-based systems [21, 18] and others [24], scatter files around in a deterministic way on random nodes, which achieves a similar effect.

The second paradigm allows peer nodes to serve content they have downloaded for their personal use, without burdening them with random files (we will call this the discretionary model). The popular Gnutella [17] and Kazaa [15] are real-world examples of such systems. Users choose to serve files they are interested in and have downloaded. Other theoretical designs have assessed how such systems scale, by performing distributed information retrieval [7, 22].

The comparison between these two paradigms has so far concentrated on their efficiency in systems and network engineering terms: the cost of search, retrieval, communications and storage. In this paper we compare the two paradigms' ability to resist censorship, as per the original intention of peer-to-peer systems. We present a model that incorporates the heterogeneous interests of the peer nodes, inspired by conflict theory [14] and economic analysis. We will use this to estimate the cost of defending networks against censorship using the two different paradigms.

The red-blue utility model

We consider a network of $N$ peer nodes. Each node $n_i$ has a different set of interests from the other nodes: it may prefer news articles to political philosophy essays, or prefer nuclear physics to cryptology. Furthermore, each node might even have different political views from other nodes. We model this by considering two types of resources: red and blue¹. We assign to each node $n_i$ a preference for red $r_i \in [0, 1]$ and a preference for blue $b_i = 1 - r_i$ (note that $r_i + b_i = 1$). Each node likes having and serving resources, but it prefers to have or serve a balance of resources according to its preferences $r_i$ and $b_i$. For this reason we consider that the utility function of a node holding $T$ resources, out of which $R$ are red resources and $B$ are blue resources (with $T = R + B$), is:

$$U_i(R, B) = -T \left(\frac{R}{T} - r_i - 1\right)\left(\frac{R}{T} - r_i + 1\right) \tag{1}$$

This is a quadratic function (as shown in figure 1) with its maximum at $R = r_i T$, scaled by the overall number of resources $T$ that the node $n_i$ holds. Expanding the product gives the equivalent form $U_i(R, B) = T\,(1 - (R/T - r_i)^2)$. This utility function increases as the total number of resources does, but is also maximal when the balance between red and blue resources matches the preferences of the node. Other unimodal functions with their maxima at $r_i T$, such as a normal distribution, give broadly similar results.

This model diverges from the traditional economic analysis of peer-to-peer networks, under which peers have ex-ante no incentives to share [11]. This assumption might be true for copied music, but does not hold for other resources such as news, political opinions, or scientific papers. For example, a node with left-wing views might prefer to read and redistribute articles that come 80% from The Guardian and 20% from The Telegraph ($r_i = 0.8$, $b_i = 0.2$), while a node in the middle of the political spectrum might prefer to read and redistribute them equally ($r_i = 0.5$, $b_i = 0.5$), and a node on the right might prefer 80% from The Telegraph and 20% from The Guardian ($r_i = 0.2$, $b_i = 0.8$). Furthermore, their respective utility increases the more they are able to distribute this material in volumes that are in line with their political preferences.
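As an illustration of equation (1), the following minimal Python sketch evaluates the utility at the three points marked in Fig. 1. The function name `utility` and the choice $T = 100$, $r_i = 0.7$ are assumptions read off the figure labels, not values stated in the text.

```python
def utility(red: float, blue: float, r_pref: float) -> float:
    """Equation (1): U_i(R, B) = -T (R/T - r_i - 1)(R/T - r_i + 1), with T = R + B."""
    total = red + blue
    if total == 0:
        return 0.0  # a node holding nothing gets no utility
    share = red / total
    return -total * (share - r_pref - 1.0) * (share - r_pref + 1.0)


# Assumed reading of Fig. 1: T = 100 resources and a node preference r_i = 0.7.
T = 100
for red in (70, 50, 10):  # preferred mix, system mix, censor's mix
    print(f"R={red:3d}  U={utility(red, T - red, 0.7):.2f}")
```

The utility peaks when the red share equals the node's preference and falls off quadratically as the held mix moves away from it.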

¹ We follow the economics tradition of only considering two goods. Real-life preferences have finer granularity, and our results also apply to n goods.

Random or Discretionary Resource Distribution?

[Figure 1: plot titled "Utility for each distribution of resources", showing utility (vertical axis) against the number of red resources (horizontal axis, 0 to 100). Three points are marked: $U_i$ at 70 (maximum), $U_s$ at 50 (system) and $U_c$ at 10 (censor).]

Fig. 1. Example utility model for discretionary, random and censored distribution.

The utility of discretionary and random distribution

We will first examine the utility of the network nodes when they can choose which files they store and help to serve (the discretionary model). This corresponds to the Gnutella or Kazaa philosophy. Assuming that a node has the ability to serve $T$ files in total, its utility is maximised for a distribution of red and blue resources that perfectly matches its preferences: $R = r_i T$ and $B = b_i T$. Our proposed utility function $U_i$ is indeed maximal for $U_i(r_i T, b_i T)$ at each node $n_i$.

On the other hand, architectures such as Eternity and DHTs scatter the red and blue resources randomly across all nodes $n_i$. What is the average or expected utility of each node $n_i$? If there exist in the system in total $R$ red resources and $B$ blue resources, we can define a system-wide distribution of resources $(r_s, b_s)$ that each node in the system will on average hold, with:

$$r_s = \frac{R}{R+B}, \qquad b_s = \frac{B}{R+B} \tag{2}$$

Each node $n_i$ will have on average a utility equal to $U_i(r_s T, b_s T)$. It is worth noting early on that the utility each node will attain in the random case is always lower than or equal to the utility a node has under the discretionary model:

$$U_i(r_i T, b_i T) \geq U_i(r_s T, b_s T) \tag{3}$$

For this reason the discretionary peer-to-peer paradigm will be preferred, given the choice and in the absence of other mechanisms.
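The comparison in (2) and (3) can be checked numerically. The sketch below is only an illustration: the network totals (600 red, 400 blue), the per-node capacity and the three preference values are hypothetical, and the function names are not from the paper.

```python
def utility(red: float, blue: float, r_pref: float) -> float:
    """Equation (1), written in the equivalent form T * (1 - (R/T - r_i)^2)."""
    total = red + blue
    if total == 0:
        return 0.0
    return total * (1.0 - (red / total - r_pref) ** 2)


def system_red_share(total_red: float, total_blue: float) -> float:
    """Equation (2): r_s = R / (R + B); b_s is then 1 - r_s."""
    return total_red / (total_red + total_blue)


T = 100                             # per-node capacity (illustrative)
r_s = system_red_share(600, 400)    # hypothetical network-wide totals
preferences = [0.8, 0.5, 0.2]       # hypothetical r_i values

comparison = []
for r_i in preferences:
    discretionary = utility(r_i * T, (1 - r_i) * T, r_i)   # node holds its preferred mix
    random_model = utility(r_s * T, (1 - r_s) * T, r_i)    # node holds the system-wide mix
    comparison.append((r_i, discretionary, random_model))
    print(f"r_i={r_i:.1f}  discretionary={discretionary:.2f}  random={random_model:.2f}")
```

In this example every node does at least as well under the discretionary model, and the gap grows with the distance between $r_i$ and $r_s$.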

It is worth exploring in more detail the implications of the lower utility provided by the random distribution model. The equality $U_i(r_i T, b_i T) = U_i(r_s T, b_s T)$ is true when $r_s = r_i$ and $b_s = b_i$, in other words when the distribution of resources in the whole system aligns with the preferences of the particular node. The first observation is that this cannot hold true for all nodes, unless they share the same preferences. Secondly, it is in the self-interest of all nodes to try to tip the balance of $R$ and $B$ towards their preferences. With a utility function slightly more biased towards serving (which we might call an evangelism utility), this could mean flooding the network with red or blue files according to their preferences. An alternative is subversion. Red-loving nodes who consider the network overly biased toward blue could just as easily try to deny service of blue files, and in extremis they might try to deny service generally. Systems such as Freenet and distributed hash tables can be quite prone to flooding, whether of the evangelism or service-denial variety.

A number of systems, including FreeHaven [8], Mojo Nation [25] and Eternity [2, 4], recognised that where the utility function places more value on consumption than on service, nodes have incentives to free-ride. Eternity proposed, and Mojo Nation tried to implement, a payment mechanism to align the incentives that nodes have to store and serve files. By storing and serving files, nodes acquire mojo, a notional currency that allows them to get service from other nodes. Due to implementation failures, poor modelling and inflation [25], Mojo Nation provided sub-standard service and did not take off. FreeHaven took the route of a reputation system, whereby peers rate the quality of service that they provide each other and prioritise service to good providers. This is an active area of research.

Perhaps such a system could be used to rate nodes using some collaborative mechanism that established $r_s$ and $b_s$ through voting, and then rated peers in accordance with their closeness to this social norm. This is not trivial; voting theory, also known as social choice theory, tells us that it is hard to create a voting system that is both efficient and equitable [20, 19]. The additional constraints of peer-to-peer networks (nodes frequently joining and leaving, transient identities, and decentralisation) make a democratic system a non-trivial problem.

Some systems attempt to hide from the nodes which resources they are storing or serving, by encrypting them or dispersing them. This is thought to protect the nodes by providing plausible deniability against censors, but also to prevent nodes deleting resources they do not like. In our framework these techniques amount to hiding from the nodes the actual distribution of red and blue resources they hold, and can even go as far as hiding the overall distribution $r_s$, $b_s$ of the system. Effectively hiding this information makes these systems very expensive. The effects of the participating nodes' state of uncertainty on their incentives to participate honestly in such a network should be the subject of further study.

In what follows, we will for simplicity start off by considering the attackers to be exogenous, that is, external to our system.

Censorship

So far we have compared the utility of nodes in the random model versus the discretionary model, and have found the latter to always provide as good or higher utility for all nodes in the absence of censorship. We will now examine how nodes react to censorship.

We model censorship as an external entity's attempt to impose on a set of nodes a particular distribution of files $r_c$ and $b_c$. The effect of the censor is not fixed, but depends on the amount of resistance offered by the affected nodes. Assume that a node that is not receiving attention from the censor can store up to $T$ resources. A node under censorship can choose to store fewer resources $(T - t)$ and invest an effort level of $t$ to resist censorship. We define the probability that a node will successfully fight censorship (and re-establish its previous distribution of resources) as $P(t)$. With probability $1 - P(t)$ the censor will prevail and impose the distribution $r_c, b_c$.

We first consider the discretionary case, where nodes select the content they serve. Knowing the node's preferences $r_i, b_i$, the censor's distribution $r_c, b_c$, the total resource bound $T$ and the probability $P(t)$ that it defeats the censor, we can calculate the optimal amount of resources a node will invest in resisting censorship. The expected utility of a node under censorship which invests $t$ in resisting the censor is the probability of success times the utility in that case, plus the probability of failure times the utility in that case:

$$U = P(t)\,U_i(r_i(T-t), b_i(T-t)) + (1 - P(t))\,U_i(r_c(T-t), b_c(T-t)) \tag{4}$$

Our utility functions $U_i$ are unimodal and smooth, so assuming that the functions $P(t)$ are sufficiently well-behaved, there will be an optimal investment in resistance $t$ in $[0, T]$ which we can find by setting $\frac{dU}{dt} = 0$.

We will start with the simplest example, namely where the probability $P(t)$ of resisting censorship is linear in the defence budget $t$. Assume that if a node invests all its resources in defence it will prevail for sure, but will have nothing left to actually serve any files. At the other extreme, if it spends nothing on lawyers (or whatever the relevant mode of combat) then the censor will prevail for sure. Therefore we define $P(t)$ as:

$$P(t) = \frac{t}{T} \tag{5}$$

By maximising (4) with $P(t)$ defined as in (5), we find that the optimal defence budget $t_d$ will be:

$$t_d = \frac{T}{2} \cdot \frac{2U_i(r_c, b_c) - U_i(r_i, b_i)}{U_i(r_c, b_c) - U_i(r_i, b_i)} \tag{6}$$
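The step from (4) and (5) to (6) can be spelled out as follows. This is a sketch of one way to obtain the closed form, using the fact that the utility (1) is linear in the total held, so that $U_i(r(T-t), b(T-t)) = (T-t)\,U_i(r, b)$; the shorthand $u_i$ and $u_c$ is introduced here for convenience and is not notation from the paper.

```latex
% Shorthand (assumed notation): per-unit utilities
%   u_i = U_i(r_i, b_i),   u_c = U_i(r_c, b_c)
\begin{align*}
U(t) &= (T - t)\left[\frac{t}{T}\,u_i + \left(1 - \frac{t}{T}\right)u_c\right] \\
\frac{dU}{dt} &= -\left[u_c + \frac{t}{T}(u_i - u_c)\right] + \frac{T - t}{T}\,(u_i - u_c)
              = u_i - 2u_c - \frac{2t}{T}\,(u_i - u_c) \\
\frac{dU}{dt} = 0 \;&\Longrightarrow\;
t_d = \frac{T}{2}\cdot\frac{u_i - 2u_c}{u_i - u_c}
    = \frac{T}{2}\cdot\frac{2u_c - u_i}{u_c - u_i} \\
\frac{d^2U}{dt^2} &= -\frac{2}{T}\,(u_i - u_c) < 0 \quad \text{whenever } u_i > u_c
\end{align*}
```

The negative second derivative confirms that the stationary point is a maximum whenever censorship lowers the node's per-unit utility.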

The node will divert $t_d$ resources from file service to resisting censorship. We will also assume, for now, that the cost of the attack for the censor is equal to the node's defence budget $t$.

So much for the case of a discretionary peer-to-peer network like Kazaa or Gnutella, where nodes choose the resources they distribute. We now turn to the case of Eternity or DHTs, where resources are scattered randomly around the network and each node expects to hold a mixture $r_s, b_s$ of files. As in the previous example, the utility of a node under censorship will depend on its defence budget $t$ and the censor's choice of $r_c, b_c$, but also on the system's distribution of files $r_s, b_s$:

$$U = P(t)\,U_i(r_s(T-t), b_s(T-t)) + (1 - P(t))\,U_i(r_c(T-t), b_c(T-t)) \tag{7}$$

A similar approach to the one above yields the optimal defence budget $t_s$ for each node:

$$t_s = \frac{T}{2} \cdot \frac{2U_i(r_c, b_c) - U_i(r_s, b_s)}{U_i(r_c, b_c) - U_i(r_s, b_s)} \tag{8}$$

However, not all nodes will be motivated to resist the censor! Some of them will find that $U_i(r_s T, b_s T) \leq U_i(r_c T, b_c T)$, i.e. their utility under censorship increases. This is not an improbable situation: in a network where half the resources are red and half are blue ($r_s = 0.5$, $b_s = 0.5$), a censor that shifts the balance to $r_c = 0$ will benefit the blue-loving nodes, and if they are free to set their own defence budgets then they will select $t = 0$.
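The half-red network with an all-blue censor can be worked through numerically. The sketch below applies the closed form (8), clamped to the feasible interval, to a handful of hypothetical node preferences; the helper names and the preference values are assumptions made for illustration.

```python
def unit_utility(r: float, r_pref: float) -> float:
    """U_i(r, 1 - r): equation (1) evaluated for a single unit of resource (T = 1)."""
    return 1.0 - (r - r_pref) ** 2


def defence_budget(u_keep: float, u_censor: float, T: float) -> float:
    """Closed form (6)/(8), clamped to the feasible range [0, T]."""
    if u_keep <= u_censor:
        return 0.0  # the censor's mix is no worse for this node: nothing to defend
    t = (T / 2.0) * (2.0 * u_censor - u_keep) / (u_censor - u_keep)
    return min(max(t, 0.0), T)


T, r_s, r_c = 100.0, 0.5, 0.0            # half-red network, all-blue censor (from the text)
budgets = {}
for r_i in (0.0, 0.1, 0.5, 0.9, 1.0):    # hypothetical node preferences
    budgets[r_i] = defence_budget(unit_utility(r_s, r_i), unit_utility(r_c, r_i), T)
    print(f"r_i={r_i:.1f}  t_s={budgets[r_i]:.2f}")
```

Under these assumptions the blue-loving nodes spend nothing, and only the strongly red-loving nodes commit a positive budget.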

Who fights censorship harder?

We have derived the defence budget $t_d$ of a node in a discretionary network, and the budget $t_s$ of a node in a random network. These also equal the censor's costs in the two types of network. We will now show that the aggregate defence budget, and thus the cost of censorship, is greater in the discretionary model than in the random one, except in the case where all the nodes have the same preferences (in which case equality holds).

The example in figure 2 illustrates the defence budgets of the random model versus the discretionary model. Note that for the maximum value of the defence budget $t$ to be positive in the interval $[0, T]$, the following condition must be true:

$$0 < \frac{T}{2} \cdot \frac{2U_i(r_c, b_c) - U_i(r_s, b_s)}{U_i(r_c, b_c) - U_i(r_s, b_s)} \tag{9}$$

in other words (given that censorship lowers the node's utility, so that the denominator is negative),

$$2U_i(r_c, b_c) < U_i(r_s, b_s) \tag{10}$$

When this is not true, a node maximises its utility by not fighting at all and choosing $t = 0$ (as illustrated in figure 3).

[Figure 2: plot titled "Defence budget and utility: Some defence", showing utility (vertical axis) against defence budget (horizontal axis, 0 to 100) for the discretionary model and the random model, with $T = 100$, $r_i = 0.95$, $r_s = 0.6$, $r_c = 0.1$. The maxima are at $t_d = 30.7958$ and $t_s = 26.875$.]

Fig. 2. Example defence budget of the two models.

[Figure 3: plot titled "Defence budget and utility: No defence", showing utility (vertical axis) against defence budget (horizontal axis, 0 to 100) for the discretionary model and the random model, with $T = 100$, $r_i = 0.8$, $r_s = 0.5$, $r_c = 0.4$. Annotation: no fighting, since $U_i = 1 < 2U_c = 2 \times 0.84 = 1.68$.]

Fig. 3. Example of zero defence budget in both models.
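The budgets annotated in Figs. 2 and 3 can be recovered without the closed form, by maximising the expected utility (4)/(7) directly over a grid of candidate budgets. The following is a sketch under the linear $P(t)$ of (5); the grid resolution and the function names are arbitrary choices made for this example.

```python
def unit_utility(r: float, r_pref: float) -> float:
    """U_i(r, 1 - r): equation (1) evaluated for a single unit of resource."""
    return 1.0 - (r - r_pref) ** 2


def expected_utility(t: float, T: float, u_keep: float, u_censor: float) -> float:
    """Equations (4)/(7) with P(t) = t / T, using U_i(r(T-t), b(T-t)) = (T-t) U_i(r, b)."""
    p = t / T
    return (T - t) * (p * u_keep + (1.0 - p) * u_censor)


def best_budget(T: float, u_keep: float, u_censor: float, steps: int = 100_000) -> float:
    """Brute-force search for the budget in [0, T] that maximises expected utility."""
    grid = (T * k / steps for k in range(steps + 1))
    return max(grid, key=lambda t: expected_utility(t, T, u_keep, u_censor))


def figure_budgets(T: float, r_i: float, r_s: float, r_c: float):
    """Return (t_d, t_s): discretionary and random-model budgets for one node."""
    u_c = unit_utility(r_c, r_i)
    t_d = best_budget(T, unit_utility(r_i, r_i), u_c)
    t_s = best_budget(T, unit_utility(r_s, r_i), u_c)
    return t_d, t_s


fig2 = figure_budgets(100.0, 0.95, 0.6, 0.1)   # parameters annotated in Fig. 2
fig3 = figure_budgets(100.0, 0.8, 0.5, 0.4)    # parameters annotated in Fig. 3
print("Fig. 2:", fig2)
print("Fig. 3:", fig3)
```

The Fig. 2 parameters should give budgets close to the annotated maxima, while the Fig. 3 parameters give zero in both models because condition (10) fails.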

Given the observations above it follows that:

$$\forall i \in S,\; t_d \geq t_s \;\Rightarrow\; \sum_{i \in S} t_d \geq \sum_{i \in S} t_s \tag{11}$$

Whatever the strategy of the attacker, it is at least as costly to attack a network architecture based on the discretionary model as one based on the random model. Equality holds when, for each node, $t_d = t_s$, which in turn means that $r_i = r_s$. This is the case of homogeneous preferences. In all other cases, the cost to censor a set of nodes will be maximised when resources are distributed according to their preferences rather than randomly.
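Inequality (11) can be illustrated by summing the budgets over a small population. In this sketch the preference lists are hypothetical, the censor imposes $r_c = 0$, and the system-wide share $r_s$ is assumed to equal the mean node preference; none of these choices come from the paper.

```python
def unit_utility(r: float, r_pref: float) -> float:
    """U_i(r, 1 - r): equation (1) evaluated for a single unit of resource."""
    return 1.0 - (r - r_pref) ** 2


def defence_budget(u_keep: float, u_censor: float, T: float) -> float:
    """Closed form (6)/(8), clamped to [0, T]; zero when censorship does not hurt."""
    if u_keep <= u_censor:
        return 0.0
    t = (T / 2.0) * (2.0 * u_censor - u_keep) / (u_censor - u_keep)
    return min(max(t, 0.0), T)


def aggregate_budgets(preferences, r_s: float, r_c: float, T: float = 100.0):
    """Return (sum of t_d, sum of t_s) over the given set of nodes."""
    total_d = total_s = 0.0
    for r_i in preferences:
        u_c = unit_utility(r_c, r_i)
        total_d += defence_budget(unit_utility(r_i, r_i), u_c, T)  # discretionary model
        total_s += defence_budget(unit_utility(r_s, r_i), u_c, T)  # random model
    return total_d, total_s


mixed = [0.05, 0.2, 0.5, 0.8, 0.95]   # hypothetical heterogeneous preferences
mixed_d, mixed_s = aggregate_budgets(mixed, r_s=sum(mixed) / len(mixed), r_c=0.0)

same = [0.8] * 5                       # hypothetical homogeneous preferences
same_d, same_s = aggregate_budgets(same, r_s=0.8, r_c=0.0)

print(f"heterogeneous: discretionary={mixed_d:.2f}  random={mixed_s:.2f}")
print(f"homogeneous:   discretionary={same_d:.2f}  random={same_s:.2f}")
```

With heterogeneous preferences the discretionary total is strictly larger, and with homogeneous preferences the two totals coincide, matching the equality case described above.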

Discussion

The above model is very simple but still gives some important initial insights into the economics of censorship resistance.

Censorship is an economic activity; whether a particular kind of material is repressed using the criminal or civil law, or using military force, there are costs for the censor. Defence expenditure by the target (whether on lawyers, lobbying, technical protection measures or even on armed conflict) can diminish the censor's prospect of success.

Until now, much work on censorship resistance has seen censorship as a binary matter; a document is either proscribed by a court or it is not, while a technical system is either vulnerable to attack or it is not. We believe such models are as unrealistic as the global adversary sometimes posited in cryptography: an opponent that can record or modify all messages on network links. We suspect that all-powerful opponents would make censorship uninteresting as a technical issue; resistance would be impossible. Similarly, assuming that no-one can censor any nodes would provide little intuition into real systems.

Technology changes can greatly affect the parameters. For example, the introduction of moveable type printing made it much harder to suppress books thought to be heretical or seditious, and this change in the underlying economics helped usher in the modern age. It is also possible that developments such as online publishing and trusted computing may make censorship easier once more, with effects we can only guess at. Therefore trying to analyse the cost of both censorship and resistance to censorship is important. Our work presents a first model and a framework for doing this.

6.1 Preferences and utility

Modelling the nodes' preferences also provides important insights. Simply assuming that all nodes will fight censorship for an abstract notion of freedom of speech restricts the model to a fraction of potential real-world users. To take real-life examples, there have been online tussles between Scientologists and people critical of their organisation, and about sado-masochistic material that is legal under Californian law but illegal in Tennessee. The average critic of Scientology may not care hugely about sexual freedom, while a collector of spanking pictures may be indifferent to religious disputes. While there are some individuals who would take a stand on freedom of speech on a broad range of issues, there may be many more who are prepared to defend it on a specific issue. On the other hand, assuming that nodes will not put up any resistance at all, and will meekly surrender any disputed documents or photographs, is also unfaithful to real-world experience.

Allowing nodes to express heterogeneous interests and preferences when it comes to material they want to promote and protect enables us to enhance the system's stability and security. It also enables us to defend against certain types of service-denial attack. For example, when an Eternity Service was first implemented and opened for public use [3], one of the first documents placed in it (by an anonymous poster) advocated sex between men and under-age boys. While some people would defend such speech, many would feel reluctant to use a system that expressed it. A discretionary peer-to-peer system can deal with such issues, much as ISPs currently decide which Usenet newsgroups to support depending on local laws and their clients' preferences. Thus objectionable content need not provide a universal attack tool.

Our model provides a first framework for thinking about such issues. In particular we have found that, in the presence of heterogeneous preferences, systems that distribute material randomly across all nodes are less efficient at resisting censorship than those which allow storage according to node preferences. As this inefficiency increases with heterogeneity, we expect random distribution to be more successful within groups with roughly homogeneous interests. When interests diverge, systems should allow users to choose their resources, or they will tend to be unstable: nodes will prefer to form alternative networks that better match their preferences. Our model thus explains why, while most initial peer-to-peer systems advocated random distribution of resources, the most successful ones implemented a discretionary approach.

Our model might be extended in a number of ways. Most obviously, we use red and blue resources as a simple example. It is imperative that nodes can express arbitrary and much finer-grained preferences, and the results we present can be generalised to unimodal multi-dimensional utility functions.

We chose to model node utility locally, and did not take into account how available a resource is globally through the network. Forming a global view of availability is hard in many peer-to-peer systems. We also ignore the costs associated with search and retrieval. Some systems, such as distributed hash tables, allow very efficient retrieval but at a high search cost; other systems are more balanced or less efficient overall. Then again, there are various specific attacks on peer-to-peer systems, whether in the literature or in the field; the model might be extended to deal with some of them.

Random distribution may also introduce social choice problems that discretionary distribution avoids. There may be a need for explicit mechanisms to determine $r_s$ and $b_s$, the relative number of red versus blue files that a typical node will be on average asked to serve. Since nodes have incentives to shift these towards their preferences, they may be tempted to manipulate whatever voting or reputation systems are implemented. Making these robust is a separate topic of research.

6.2 Censorship

Our model of censorship has been carefully chosen not to introduce additional social choice issues. The censor is targeting a set of individual nodes, and the success or not of his attack on a particular node depends only on the defence budget of that node. Of course, in the case where nodes are subject to legal action, a victory against one node may create a precedent that makes enforcement against other nodes cheaper in the future. Defence may thus take on some of the aspects of a public good, with the problems associated with free-riding and externalities. How it works out in detail will depend on whether the level of defence depends on the least effort, the greatest effort or the sum of efforts of all the nodes. For how to model these cases, see Varian [23].

Our model also assumes a censor that wishes to impose a certain selection of resources on nodes. This is appropriate to model censorship of news and the press, but might not be so applicable to the distribution of music online. There the strategy of the music industry is to increase the cost of censorship resistance to match the retail price of the music. In that context, our model suggests that it would be much harder for the industry to take on a diffuse constellation of autonomous fan clubs than it would be to take on a monolithic file-sharing system. In that case, there might be attack-budget constraints; some performers might be unwilling to alienate their fans by too-aggressive enforcement.

Our particular censorship model provides some further insights. For both random distribution and discretionary distribution, the censor will meet resistance from a node once his activities halve its utility. This is because, for the particular utility function we have chosen, a node will react to mild censorship by investing in other resources rather than engaging in combat. So mild censorship may attract little reaction, but at some point there will be nodes that start to fight back, starting with those nodes whose preferences are most different from the censor's. This is consistent with intuition and real-life experience.

We have not modelled the incentives of the censor, or tried to find his optimal strategy in attacking the network. Better attack models probably require more detail about the network architecture and operation.

Finally, our model may have wider implications. It is well known that rather than fighting against government regulation and for market freedom in the abstract, firms are more likely to invest effort, through trade associations, in fighting for the freedoms most relevant to their own particular trade. There is also the current debate about whether increasing social diversity will necessarily undermine social solidarity [1, 12, 16]. The relevance of our model to such issues of political economy is a matter for discussion elsewhere.
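The halving threshold discussed in this section follows from condition (10) and can be located numerically. The sketch below scans the censor's imposed red share downward from the mix a node currently holds and reports the first value that triggers resistance; the function names, the scan direction and the two example nodes are assumptions made for illustration.

```python
import math
from typing import Optional


def unit_utility(r: float, r_pref: float) -> float:
    """U_i(r, 1 - r): equation (1) evaluated for a single unit of resource."""
    return 1.0 - (r - r_pref) ** 2


def fights(u_keep: float, u_censor: float) -> bool:
    """Condition (10): resist only if censorship more than halves the node's utility."""
    return 2.0 * u_censor < u_keep


def first_resisted_share(r_pref: float, r_keep: float, steps: int = 10_000) -> Optional[float]:
    """Scan the imposed red share r_c from r_keep down to 0 and return the first
    value at which the node starts to fight, or None if it never does."""
    u_keep = unit_utility(r_keep, r_pref)
    for k in range(steps + 1):
        r_c = r_keep * (1.0 - k / steps)
        if fights(u_keep, unit_utility(r_c, r_pref)):
            return r_c
    return None


extreme = first_resisted_share(r_pref=1.0, r_keep=1.0)   # hypothetical all-red node
centrist = first_resisted_share(r_pref=0.5, r_keep=0.5)  # hypothetical centrist node
print("all-red node starts to fight near r_c =", extreme)
print("analytic threshold 1 - 1/sqrt(2) =", 1.0 - 1.0 / math.sqrt(2.0))
print("centrist node:", centrist)
```

Under this particular quadratic utility, a node holding its preferred mix only resists once the imposed share is more than about 0.707 away from its preference, so in this sketch a centrist node never fights a censor who only reduces the red share.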

Conclusions

We have presented a model of node preferences in peer-to-peer systems, and assessed how two different design philosophies (random and discretionary distribution of resources) resist censorship. Our main finding is that, under the assumptions of our model, discretionary distribution is better. The more heterogeneous the preferences are, the more it outperforms random distribution. Nodes will on average invest more in fighting censorship of resources they value. Our model of censorship resistance is simple, but can be extended to explore other situations.

In the discretionary model nodes do not have to collectively manage the overall content of the network, which gives them fewer incentives to subvert the control mechanisms. This in turn allows for simpler network designs that do not require election schemes, reputation systems or electronic cash, which can be cumbersome and difficult to implement.

Finally, the discretionary model also leads to a more stable network. Each node can better maximise its utility and is less likely to leave the network to seek a better deal somewhere else.

Acknowledgements. The authors would like to thank Rupert Gatti and Thierry Rayna for their valuable input on the appropriateness of different utility functions to the problem studied, and the anonymous reviewers for valuable comments. This work was done with the financial assistance of the Cambridge MIT Institute (CMI) as part of the project on "The design and implementation of third generation peer-to-peer systems".

References

1. David Aaronovitch. The joy of diversity. The Observer, February 29 2004.
2. Ross Anderson. The eternity service. In 1st International Conference on the Theory and Applications of Cryptology (Pragocrypt '96), pages 242–252, Prague, Czech Republic, September/October 1996. Czech Technical University Publishing House.
3. Adam Back. The eternity service. Phrack Magazine, 7:51, 1997. http://www.cypherspace.org/ adam/eternity/phrack.html.
4. Tonda Benes. The strong eternity service. In Ira S. Moskowitz, editor, Proceedings of Information Hiding Workshop (IH 2001). Springer-Verlag, LNCS 2137, April 2001.
5. Bengt Carlsson and Rune Gustavsson. The rise and fall of Napster: an evolutionary approach. In Jiming Liu, Pong Chi Yuen, Chun Hung Li, Joseph Kee-Yin Ng, and Toru Ishida, editors, AMT, volume 2252 of Lecture Notes in Computer Science, pages 347–354. Springer, 2001.
6. Ian Clarke, Oskar Sandberg, Brandon Wiley, and Theodore W. Hong. Freenet: A distributed anonymous information storage and retrieval system. In Federrath [10], pages 46–66.
7. Edith Cohen, Amos Fiat, and Haim Kaplan. Associative search in peer to peer networks: Harnessing latent semantics. In IEEE INFOCOM 2003, The 22nd Annual Joint Conference of the IEEE Computer and Communications Societies, San Francisco, CA, USA, March 30 - April 3 2003. IEEE, 2003.
8. Roger Dingledine, Michael J. Freedman, and David Molnar. The free haven project: Distributed anonymous storage service. In Federrath [10], pages 67–95.
9. Peter Druschel, M. Frans Kaashoek, and Antony I. T. Rowstron, editors. Peer-to-Peer Systems, First International Workshop, IPTPS 2002, Cambridge, MA, USA, March 7-8, 2002, Revised Papers, volume 2429 of Lecture Notes in Computer Science. Springer, 2002.
10. Hannes Federrath, editor. Designing Privacy Enhancing Technologies, International Workshop on Design Issues in Anonymity and Unobservability, Berkeley, CA, USA, July 25-26, 2000, Proceedings, volume 2009 of Lecture Notes in Computer Science. Springer, 2001.
11. Philippe Golle, Kevin Leyton-Brown, and Ilya Mironov. Incentives for sharing in peer-to-peer networks. In ACM Conference on Electronic Commerce, pages 264–267. ACM, 2001.
12. D Goodhart. Discomfort of strangers. The Guardian, February 2 2004. pp 24–25.
13. Johan Helsingius. Johan Helsingius closes his internet remailer. http://www.penet.fi/press-english.html, August 1996.
14. Jack Hirshleifer. The dark side of the force. Cambridge University Press, 2001.
15. Kazaa media desktop. Web site, 2004. http://www.kazaa.com/.
16. The kindness of strangers? The Economist, February 26 2004.
17. Qin Lv, Sylvia Ratnasamy, and Scott Shenker. Can heterogeneity make Gnutella scalable? In Druschel et al. [9], pages 94–103.
18. Antony I. T. Rowstron and Peter Druschel. Pastry: Scalable, decentralized object location, and routing for large-scale peer-to-peer systems. In Rachid Guerraoui, editor, Middleware, volume 2218 of Lecture Notes in Computer Science, pages 329–350. Springer, 2001.
19. A Sen. Social choice theory. In K Arrow and MD Intriligator, editors, Handbook of Mathematical Economics, volume 3, pages 1073–1181. Elsevier, 1986.
20. Andrei Serjantov and Ross Anderson. On dealing with adversaries fairly. In The Third Annual Workshop on Economics and Information Security (WEIS04), Minnesota, US, May 13–14 2004.
21. Ion Stoica, Robert Morris, David Karger, M. Frans Kaashoek, and Hari Balakrishnan. Chord: A scalable peer-to-peer lookup service for internet applications. In ACM SIGCOMM 2001 Conference on Applications, Technologies, Architectures, and Protocols for Computer Communication, pages 149–160, San Diego, CA, USA, August 27-31 2001. ACM.
22. Chunqiang Tang, Zhichen Xu, and Sandhya Dwarkadas. Peer-to-peer information retrieval using self-organising semantic overlay networks. In SIGCOMM '03, Karlsruhe, Germany, August 25-29 2003. ACM.
23. H Varian. System reliability and free riding. In Workshop on Economics and Information Security, University of California, Berkeley, May 2002. http://www.sims.berkeley.edu/resources/affiliates/workshops/econsecurity/econws/49.pdf.
24. Weatherspoon, Westley Weimer, Chris Wells, and Ben Y. Zhao. Oceanstore: An architecture for global-scale persistent storage. In ASPLOS-IX Proceedings of the 9th International Conference on Architectural Support for Programming Languages and Operating Systems, volume 28 of SIGARCH Computer Architecture News, pages 190–201, Cambridge, MA, USA, November 12-15 2000.
25. Bryce Wilcox-O'Hearn. Experiences deploying a large-scale emergent network. In Druschel et al. [9], pages 104–110.


- Community Structure in Social and Biological Networks: M. Girvan and M. E. J. NewmanDocument6 pagesCommunity Structure in Social and Biological Networks: M. Girvan and M. E. J. NewmanJohn Genricson Santiago MallariNo ratings yet

- Sampling Community StructureDocument10 pagesSampling Community StructureMai Ngoc LamNo ratings yet

- (2012) Regional Subgraph Discovery in Social NetworksDocument2 pages(2012) Regional Subgraph Discovery in Social NetworksHainah Kariza LeusNo ratings yet

- Ijdps 040301Document15 pagesIjdps 040301ijdpsNo ratings yet

- Community Detection Using Statistically Significant Subgraph MiningDocument10 pagesCommunity Detection Using Statistically Significant Subgraph MiningAnonymousNo ratings yet

- Economics and Computation 1Document15 pagesEconomics and Computation 1Zachry WangNo ratings yet

- Approaches From Statistical Physics To Model and Study Social NetworksDocument19 pagesApproaches From Statistical Physics To Model and Study Social NetworksAbhranil GuptaNo ratings yet

- How To Win Elections: Lecture Notes of The Institute For Computer Sciences July 2017Document11 pagesHow To Win Elections: Lecture Notes of The Institute For Computer Sciences July 2017Solomon Joyles-BradleyNo ratings yet

- Taxonomy in WikipediaDocument5 pagesTaxonomy in WikipediaAndrea LucarelliNo ratings yet

- Cooperation in Wireless Ad Hoc Networks: (Vikram, Pavan, Rao) @CWC - Ucsd.eduDocument10 pagesCooperation in Wireless Ad Hoc Networks: (Vikram, Pavan, Rao) @CWC - Ucsd.eduகாஸ்ட்ரோ சின்னாNo ratings yet

- Evolution of The Social Network of Scientific ColaborationDocument25 pagesEvolution of The Social Network of Scientific ColaborationcjmauraNo ratings yet

- Cooperative Game Theory Framework For Energy Efficient Policies in Wireless NetworksDocument8 pagesCooperative Game Theory Framework For Energy Efficient Policies in Wireless NetworksMarysol AyalaNo ratings yet

- Entropy: Identifying Communities in Dynamic Networks Using Information DynamicsDocument25 pagesEntropy: Identifying Communities in Dynamic Networks Using Information DynamicsMario IordanovNo ratings yet

- Overlapping Community Detection at Scale: A Nonnegative Matrix Factorization ApproachDocument10 pagesOverlapping Community Detection at Scale: A Nonnegative Matrix Factorization ApproachGary NgaraNo ratings yet

- How To Win Elections: Lecture Notes of The Institute For Computer Sciences July 2017Document11 pagesHow To Win Elections: Lecture Notes of The Institute For Computer Sciences July 2017Andre FerrazNo ratings yet

- Extraction and Classification of Dense Communities in The WebDocument10 pagesExtraction and Classification of Dense Communities in The WebrbhaktanNo ratings yet

- Clique Approach For Networks: Applications For Coauthorship NetworksDocument6 pagesClique Approach For Networks: Applications For Coauthorship NetworksAlejandro TorresNo ratings yet

- Social-Aware Stateless Forwarding in Pocket Switched NetworksDocument5 pagesSocial-Aware Stateless Forwarding in Pocket Switched NetworksSalvia EdwardNo ratings yet

- A Novel Collaborative Filtering Model Based On Combination of Correlation Method With Matrix Completion TechniqueDocument8 pagesA Novel Collaborative Filtering Model Based On Combination of Correlation Method With Matrix Completion TechniquessuthaaNo ratings yet

- A Cooperative Peer Clustering Scheme For Unstructured Peer-to-Peer SystemsDocument8 pagesA Cooperative Peer Clustering Scheme For Unstructured Peer-to-Peer SystemsAshley HowardNo ratings yet

- Degree of Diffusion in Real Complex NetworksDocument12 pagesDegree of Diffusion in Real Complex NetworksICDIPC2014No ratings yet

- Enhanced Search in Unstructured Peer-to-Peer Overlay NetworksDocument10 pagesEnhanced Search in Unstructured Peer-to-Peer Overlay NetworkskanjaiNo ratings yet

- International Journal of Engineering Research and DevelopmentDocument5 pagesInternational Journal of Engineering Research and DevelopmentIJERDNo ratings yet

- Starvation in Ad-Hoc and Mesh Networks: Modeling, Solutions, and MeasurementsDocument6 pagesStarvation in Ad-Hoc and Mesh Networks: Modeling, Solutions, and MeasurementsADSRNo ratings yet

- Entropy: Bipartite Structures in Social Networks: Traditional Versus Entropy-Driven AnalysesDocument17 pagesEntropy: Bipartite Structures in Social Networks: Traditional Versus Entropy-Driven AnalysesPudretexDNo ratings yet

- Distributed Subgradient Methods For Multi-Agent OptimizationDocument28 pagesDistributed Subgradient Methods For Multi-Agent OptimizationHugh OuyangNo ratings yet

- Distributed AlgorithemDocument2 pagesDistributed AlgorithemFiras RahimehNo ratings yet

- Co-Operative Adaptation Strategy To Progress Data Transfer in Opportunistic NetworkDocument6 pagesCo-Operative Adaptation Strategy To Progress Data Transfer in Opportunistic NetworkWARSE JournalsNo ratings yet

- A P2P Recommender System Based On Gossip Overlays (Prego)Document8 pagesA P2P Recommender System Based On Gossip Overlays (Prego)Laura RicciNo ratings yet

- Fair Coalitions For Power-Aware Routing in Wireless NetworksDocument4 pagesFair Coalitions For Power-Aware Routing in Wireless NetworksMallikarjunaswamy SwamyNo ratings yet

- Social Features of Online Networks: The Strength of Intermediary Ties in Online Social MediaDocument14 pagesSocial Features of Online Networks: The Strength of Intermediary Ties in Online Social MediaWang AoNo ratings yet

- The Value of A NetworkDocument1 pageThe Value of A NetworksinnombrepuesNo ratings yet

- Granular Modularity and Community Detection in Social NetworksDocument5 pagesGranular Modularity and Community Detection in Social NetworksSuman KunduNo ratings yet

- GEPHI Modularity Statistics0801.1647v4Document22 pagesGEPHI Modularity Statistics0801.1647v4Mark MarksNo ratings yet

- PVT DBDocument12 pagesPVT DBomsingh786No ratings yet

- Storing and Indexing Spatial Data in P2P Systems: Verena Kantere, Timos Sellis, Spiros SkiadopoulosDocument13 pagesStoring and Indexing Spatial Data in P2P Systems: Verena Kantere, Timos Sellis, Spiros SkiadopoulosKrishna KanthNo ratings yet

- A Comparative AnalysisDocument18 pagesA Comparative AnalysisRaffaele CostanzoNo ratings yet

- Sdip: Structured and Dynamic Information Push and Pull Protocols For Distributed Sensor NetworksDocument14 pagesSdip: Structured and Dynamic Information Push and Pull Protocols For Distributed Sensor NetworksMarjh RamirezNo ratings yet

- Informative Pattern Discovery Using Combined MiningDocument5 pagesInformative Pattern Discovery Using Combined MiningInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Birch: An Efficient Data Clustering Method For Very Large DatabasesDocument12 pagesBirch: An Efficient Data Clustering Method For Very Large Databasesiamme22021990No ratings yet

- On Social-Based Routing and Forwarding Protocols in OpportunisticDocument5 pagesOn Social-Based Routing and Forwarding Protocols in OpportunisticSatish NaiduNo ratings yet

- Smaller World My2e09Document9 pagesSmaller World My2e09mike_footy_crazy5675No ratings yet

- Item-Based Collaborative Filtering Recommendation AlgorithmsDocument11 pagesItem-Based Collaborative Filtering Recommendation AlgorithmsMirna MilicNo ratings yet

- A Robust and Scalable Peer-to-Peer Gossiping ProtocolDocument12 pagesA Robust and Scalable Peer-to-Peer Gossiping ProtocolPraveen KumarNo ratings yet

- Altman 06 SurveyDocument26 pagesAltman 06 SurveyLambourghiniNo ratings yet

- Negotiating Socially Optimal Allocations of ResourDocument45 pagesNegotiating Socially Optimal Allocations of ResourLioNo ratings yet

- Silva Widm2021Document44 pagesSilva Widm2021wowexo4683No ratings yet

- Siti TR 12 06Document109 pagesSiti TR 12 06Diogo SoaresNo ratings yet

- Complex NetworkDocument6 pagesComplex Networkdev414No ratings yet

- Community StructureDocument12 pagesCommunity Structurejohn949No ratings yet

- A MATLAB Toolbox For Large-Scale Networked SystemsDocument12 pagesA MATLAB Toolbox For Large-Scale Networked SystemsKawthar ZaidanNo ratings yet

- Distributed Implementations of Vickrey-Clarke-Groves MechanismsDocument9 pagesDistributed Implementations of Vickrey-Clarke-Groves MechanismsgkgjNo ratings yet

- 10 1016@j Inffus 2013 04 006Document31 pages10 1016@j Inffus 2013 04 006Zakaria SutomoNo ratings yet

- NSSL Discussion WhanaungatangaDocument16 pagesNSSL Discussion Whanaungatangasriram87No ratings yet

- Week 8 - Graph MiningDocument39 pagesWeek 8 - Graph Miningpchaparro15183No ratings yet

- Stumbl: Using Facebook To Collect Rich Datasets For Opportunistic Networking ResearchDocument6 pagesStumbl: Using Facebook To Collect Rich Datasets For Opportunistic Networking ResearchAjita GuptaNo ratings yet

- AAAI - 08 - Local Search For DCOPDocument6 pagesAAAI - 08 - Local Search For DCOPRejeev CvNo ratings yet

- Ucla Department of Computer Science Email: Ucla Department of Elecrical Engineering EmailDocument4 pagesUcla Department of Computer Science Email: Ucla Department of Elecrical Engineering EmailRejeev CvNo ratings yet

- New Covert Channels in HTTP: Adding Unwitting Web Browsers To Anonymity SetsDocument7 pagesNew Covert Channels in HTTP: Adding Unwitting Web Browsers To Anonymity SetsRejeev CvNo ratings yet

- Presentation On Using SPICE: by Shweta - KulkarniDocument24 pagesPresentation On Using SPICE: by Shweta - KulkarniRejeev CvNo ratings yet

- Presentation On Using SPICE: by Shweta - KulkarniDocument24 pagesPresentation On Using SPICE: by Shweta - KulkarniRejeev CvNo ratings yet

- Wave Length EstimatorDocument8 pagesWave Length EstimatorRejeev CvNo ratings yet

- 10 1 1 94 737Document16 pages10 1 1 94 737Gagan ChopraNo ratings yet

- Interdisciplinary Project 1Document11 pagesInterdisciplinary Project 1api-424250570No ratings yet

- I. Errors, Mistakes, Accuracy and Precision of Data Surveyed. A. ErrorsDocument53 pagesI. Errors, Mistakes, Accuracy and Precision of Data Surveyed. A. ErrorsJETT WAPNo ratings yet

- Solution Manual For Understanding Business 12th Edition William Nickels James Mchugh Susan MchughDocument36 pagesSolution Manual For Understanding Business 12th Edition William Nickels James Mchugh Susan Mchughquoterfurnace.1ots6r100% (51)

- 1) About The Pandemic COVID-19Document2 pages1) About The Pandemic COVID-19محسين اشيكNo ratings yet

- University Physics With Modern Physics 2nd Edition Bauer Test BankDocument24 pagesUniversity Physics With Modern Physics 2nd Edition Bauer Test BankJustinTaylorepga100% (42)

- Reading in MCJ 216Document4 pagesReading in MCJ 216Shela Lapeña EscalonaNo ratings yet

- PQS Catalogue 4 2Document143 pagesPQS Catalogue 4 2sagarNo ratings yet

- Chapter - 01 Geography The Earth in The Solar SystemDocument10 pagesChapter - 01 Geography The Earth in The Solar SystemKarsin ManochaNo ratings yet

- Unit 9 TelephoningDocument14 pagesUnit 9 TelephoningDaniela DanilovNo ratings yet

- KITZ - Cast Iron - 125FCL&125FCYDocument2 pagesKITZ - Cast Iron - 125FCL&125FCYdanang hadi saputroNo ratings yet

- Support of Roof and Side in Belowground Coal MinesDocument5 pagesSupport of Roof and Side in Belowground Coal MinesNavdeep MandalNo ratings yet

- Report Palazzetto Croci SpreadsDocument73 pagesReport Palazzetto Croci SpreadsUntaru EduardNo ratings yet

- Sabre V8Document16 pagesSabre V8stefan.vince536No ratings yet

- Lier Upper Secondary SchoolDocument8 pagesLier Upper Secondary SchoolIES Río CabeNo ratings yet

- Unit 2 Talents: Phrasal Verbs: TurnDocument5 pagesUnit 2 Talents: Phrasal Verbs: TurnwhysignupagainNo ratings yet

- 热虹吸管相变传热行为CFD模拟 王啸远Document7 pages热虹吸管相变传热行为CFD模拟 王啸远小黄包No ratings yet

- Emerging and Less Common Viral Encephalitides - Chapter 91Document34 pagesEmerging and Less Common Viral Encephalitides - Chapter 91Victro ChongNo ratings yet

- Tia Portal V16 OrderlistDocument7 pagesTia Portal V16 OrderlistJahidul IslamNo ratings yet

- Jazz - Installing LED DRLsDocument16 pagesJazz - Installing LED DRLsKrishnaNo ratings yet

- The Mutant Epoch Mature Adult Content Mutations v1Document4 pagesThe Mutant Epoch Mature Adult Content Mutations v1Joshua GibsonNo ratings yet