Assignment For EE5101 - Linear Systems

Sem I AY2010/2011

Linear State Feedback Controller Design

Phang Swee King

A0033585A

Email: king@nus.edu.sg

NGS/ECE Dept.

Faculty of Engineering

National University of Singapore

October 17, 2010

Abstract

This project describes the problems, solutions and findings of a study of a linear state-space system. In this report, a typical third-order single-input single-output plant is provided in the form of transfer functions. The state-space model and dynamic characteristics of the plant are investigated. In order to stabilize and gain full control of the plant, a state feedback controller is designed using the Linear Quadratic Regulator (LQR) method. Then a practical situation in which the state variables are not fully measurable is considered. As a solution, a state observer is designed based on the pole placement method. Lastly, servo control is formulated and implemented in the control loop to realize reference tracking and disturbance rejection. All the above investigations are carried out or simulated in MATLAB and Simulink.

Contents

1 Introduction

2 State-space Modelling and Analysis

2.1 State-space Modeling

2.2 Plant Dynamics Analysis and Simulation

3 State Feedback Design using Linear Quadratic Regulator (LQR)

3.1 State Feedback Controller Design

3.2 Change Weighting Factor R

3.3 Change Weighting Factor Q

4 State Observer Design using Pole Placement

4.1 Design of Observer by Pole Placement

4.2 Effects of Observer Pole Position

5 Servo Control

5.1 Servo Controller Design

5.2 Reduced Order Servo Controller

6 Conclusion

Chapter 1

Introduction

Classical Control Theory was first introduced in 1930-1940 and achieved great success in the 20th century. However, it has some disadvantages, as it is based on an input/output relationship called the transfer function. In computing the transfer function of a system, all initial conditions are set to zero. Also, it is difficult to design a controller using classical methods like frequency response and root locus. Due to these inherent shortcomings, Classical Control Theory was gradually replaced by Modern Control Theory.

Contrary to Classical Control Theory, Modern Control Theory uses the time-domain representation of the system known as the state-space representation. Thus it is able to provide a complete description of the system. In the late 20th century, many modern control ideas were developed upon the state-space framework, including feedback using pole placement, optimal control, decoupling control, servo control, state observers and many more. Furthermore, the state-space representation can handle problems with multiple inputs and multiple outputs, and it is not restricted to linear systems or to systems with zero initial conditions.

In our project, the performance of the plant is analyzed with a non-zero initial state. Since the plant is of 3rd order, it is not easy to design the controller using Classical Control Theory. Therefore, in this project, the controller is designed based on the state-space representation in the time domain.

Chapter 2

State-space Modelling and Analysis

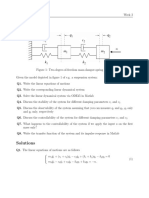

A plant shown in Fig. 2.1 is to be investigated, where

$$G_1(s) = \frac{1}{s^2 + 2as + 5a}, \qquad G_2(s) = \frac{2}{s}.$$

The state-space model of the plant needs to be deduced and analyzed.

Figure 2.1: A 3rd-Order Plant

2.1 State-space Modeling

Based on the last 4 digits of my matriculation number, the parameter $a$ is given by

$$a = 3.585 \tag{2.1}$$

Thus, in the simulations below, the transfer functions $G_1$ and $G_2$ are assigned as

$$G_1(s) = \frac{1}{s^2 + 7.17s + 17.925} \tag{2.2}$$

$$G_2(s) = \frac{2}{s} \tag{2.3}$$

To determine the overall state-space model, we consider $G_1(s)$ (of order two) and $G_2(s)$ (of order one) together; the overall order of the plant is then three. Thus, three state variables are needed to represent the full model, and their choice is not unique. We can arbitrarily choose three state variables for our own convenience. Hence, we assign the output of $G_2$ as the first state variable, $x_1$, and the output of $G_1$ as the second state variable, $x_2$. The last state variable, $x_3$, resides inside the $G_1$ block, and we choose it to be the derivative of $x_2$ for ease of calculation.

From the transfer function $G_1$, we have

$$(s^2 + 7.17s + 17.925)\,X_2(s) = U(s) \tag{2.4}$$

Taking the inverse Laplace transform of both sides and using $\dot{x}_2 = x_3$ and $\ddot{x}_2 = \dot{x}_3$, we have

$$
\begin{aligned}
\ddot{x}_2(t) + 7.17\,\dot{x}_2(t) + 17.925\,x_2(t) &= u(t)\\
\dot{x}_3(t) &= -7.17\,x_3(t) - 17.925\,x_2(t) + u(t)
\end{aligned}
\tag{2.5}
$$

For state $x_1$, we know

$$sX_1(s) = 2X_2(s) + 2D(s) \tag{2.6}$$

$$\dot{x}_1(t) = 2x_2(t) + 2d(t) \tag{2.7}$$

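Thus, by letting $x = \begin{bmatrix} x_1 & x_2 & x_3 \end{bmatrix}^T$, we can obtain the full state-space representation of the system

$$
\dot{x} = \begin{bmatrix} 0 & 2 & 0 \\ 0 & 0 & 1 \\ 0 & -17.925 & -7.17 \end{bmatrix} x + \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix} u + \begin{bmatrix} 2 \\ 0 \\ 0 \end{bmatrix} d, \qquad
y = \begin{bmatrix} 1 & 0 & 0 \end{bmatrix} x \tag{2.8}
$$

where $A = \begin{bmatrix} 0 & 2 & 0 \\ 0 & 0 & 1 \\ 0 & -17.925 & -7.17 \end{bmatrix}$, $B_u = \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix}$, $B_d = \begin{bmatrix} 2 \\ 0 \\ 0 \end{bmatrix}$, and $C = \begin{bmatrix} 1 & 0 & 0 \end{bmatrix}$.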

2.2 Plant Dynamics Analysis and Simulation

In this section, the state-space plant obtained above is analyzed and simulated with a non-zero initial state. The Bode plot and the zero-input response with the initial condition $x_1 = x_2 = x_3 = 1$ are shown in Fig. 2.2 and Fig. 2.3 respectively. From the zero-input response plot, we can see that the output does not return to zero, which tells us that the system is not asymptotically stable. The three system poles are at $0$ and $-3.585 \pm 2.2523i$. Two of them are stable, as their real parts are negative, but the pole at the origin might cause instability. However, since the zero eigenvalue (pole) has no Jordan block of order 2 or higher, the system is stable in the sense of Lyapunov.
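The pole locations quoted above can be cross-checked with a few lines of Python. This is only a sketch: it assumes the open-loop characteristic polynomial is $s(s^2 + 2as + 5a)$ with $a = 3.585$, and the function name `plant_poles` is introduced here purely for illustration.

```python
import cmath

# Assumed plant parameter from Eq. (2.1).
a = 3.585

def plant_poles(a):
    """Roots of s * (s^2 + 2*a*s + 5*a): the integrator pole plus the quadratic roots."""
    disc = cmath.sqrt((2 * a) ** 2 - 4 * 5 * a)   # square root of the discriminant
    return [0j, (-2 * a + disc) / 2, (-2 * a - disc) / 2]

poles = plant_poles(a)
for p in poles:
    print(f"{p.real:.4f} {p.imag:+.4f}i")
```

The single pole at the origin with the two remaining poles in the left half-plane is what makes the plant marginally stable rather than asymptotically stable.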

The output response to a step input and the output response to a constant disturbance are shown in Fig. 2.4 and Fig. 2.5. Both are unbounded as time goes to infinity. Thus, the system is not bounded-input bounded-output (BIBO) stable. In order to make the system stable and maneuverable, state feedback control is needed. In the next chapter, we investigate a state feedback control law based on the LQR method in order to make the overall closed-loop system stable and meet the design requirements.
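As a rough check on the unboundedness claim, the two responses can be examined in the Laplace domain. This is a sketch assuming zero initial conditions and the cascade $G(s) = G_1(s)G_2(s)$; the constant $c$ and the ramp slopes are derived here and are not read from the figures.

$$
\begin{aligned}
Y(s)\Big|_{U(s) = 1/s} &= \frac{2}{s^2\,(s^2 + 7.17s + 17.925)} = \frac{2/17.925}{s^2} + \frac{c}{s} + (\text{terms with poles at } -3.585 \pm 2.2523i),\\
Y(s)\Big|_{D(s) = 1/s} &= G_2(s)\,\frac{1}{s} = \frac{2}{s^2}.
\end{aligned}
$$

The $1/s^2$ terms correspond to ramps, so $y(t)$ eventually grows like $0.1116\,t$ for a unit step input and like $2t$ for a unit step disturbance. In both cases a bounded input produces an unbounded output.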


Figure 2.2: Bode plot of the original open-loop system

Figure 2.3: Zero input response with initial condition [1 1 1]

Figure 2.4: Output response to a constant input, $U(s) = \frac{1}{s}$

Figure 2.5: Output response to a constant disturbance, $D(s) = \frac{1}{s}$

Chapter 3

State Feedback Design using Linear Quadratic Regulator (LQR)

In this chapter, a state feedback controller is implemented using the LQR method. By adopting the LQR method, our target is to minimize the cost function

$$J = \frac{1}{2}\int_0^\infty \left(x^T Q x + u^T R u\right) dt \tag{3.1}$$

and

$$u = -Kx \tag{3.2}$$

Here, $K$ is called the state feedback gain. With a non-zero initial condition, the state variables are expected to go from their initial values to zero as time goes to infinity. However, to make sure that the optimal control system is stable and realizable, three criteria need to be met:

1. The original system, $(A, B)$, must be controllable;

2. The pair $(A, H)$ must be observable, where $H^T H = Q$;

3. The weighting matrices $Q$ and $R$ must both be symmetric, with $Q$ positive semi-definite and $R$ positive definite.

Then, the control law will be

$$u = -Kx = -R^{-1}B^T P x \tag{3.3}$$

where $P$ is the solution of the Riccati equation

$$A^T P + PA + Q - PBR^{-1}B^T P = 0 \tag{3.4}$$

3.1 State Feedback Controller Design

In order to implement the LQR state feedback controller, the original system must first be controllable. Thus, we check the controllability matrix,

$$\begin{bmatrix} B_u & AB_u & A^2B_u \end{bmatrix} = \begin{bmatrix} 0 & 0 & 2 \\ 0 & 1 & -7.17 \\ 1 & -7.17 & 33.4839 \end{bmatrix} \tag{3.5}$$

which is of full rank, so the system is controllable. Since $Q$ and $R$ are chosen by the designer, we just need to choose them wisely to fulfill the requirements. For this case, the simplest form of $Q$ is a diagonal matrix with positive entries, and $R$ is just a scalar constant. We set up the base case for this problem as

$$Q = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} \tag{3.6}$$

$$R = 1 \tag{3.7}$$
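The rank check on the controllability matrix in Eq. (3.5) can be reproduced with a short Python sketch. It assumes the plant matrices of Eq. (2.8), with the signs implied by Eq. (2.5); the helper names `matvec` and `det3` are introduced here for illustration only.

```python
# Plant matrices from Eq. (2.8), with the signs implied by Eq. (2.5).
A = [[0.0, 2.0, 0.0],
     [0.0, 0.0, 1.0],
     [0.0, -17.925, -7.17]]
B = [0.0, 0.0, 1.0]

def matvec(M, v):
    """Matrix-vector product for nested-list matrices."""
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]

def det3(M):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))

AB = matvec(A, B)
A2B = matvec(A, AB)
# The columns of the controllability matrix are B, AB and A^2 B.
Wc = [[B[i], AB[i], A2B[i]] for i in range(3)]
det_Wc = det3(Wc)
print(Wc, det_Wc)
```

A non-zero determinant means the 3x3 matrix has full rank, which is the controllability condition used in the text.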

By solving the Riccati equation shown above, or by passing $A$, $B_u$, $Q$ and $R$ to the MATLAB function lqr, we obtain the state feedback gain

$$K = \begin{bmatrix} 1 & 0.8291 & 0.183 \end{bmatrix} \tag{3.8}$$

In addition, we set the initial state to $x = \begin{bmatrix} 1 & 1 & 1 \end{bmatrix}^T$, so that we can examine how the three state variables decay to zero.
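For readers without MATLAB, the gain in Eq. (3.8) can be approximated with the standard library alone. The sketch below does not call lqr: it integrates the Riccati differential equation with fixed-step Euler from $P = 0$ until it settles at the solution of Eq. (3.4), which is a substitute technique chosen only to keep the example self-contained. The function names, the step size and the number of steps are assumptions made here, not part of the original design.

```python
def matmul(X, Y):
    """Product of two matrices stored as nested lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def lqr_gain(A, B, Q, R, dt=0.005, steps=20000):
    """Approximate single-input LQR gain K = R^-1 B^T P.

    P is found by Euler-integrating dP/dt = A^T P + P A + Q - P B R^-1 B^T P
    from P = 0 until it settles (an illustrative stand-in for MATLAB's lqr).
    """
    n = len(A)
    At = [list(col) for col in zip(*A)]               # transpose of A
    P = [[0.0] * n for _ in range(n)]
    for _ in range(steps):
        PB = [sum(P[i][k] * B[k] for k in range(n)) for i in range(n)]  # column P B
        AtP, PA = matmul(At, P), matmul(P, A)
        P = [[P[i][j] + dt * (AtP[i][j] + PA[i][j] + Q[i][j] - PB[i] * PB[j] / R)
              for j in range(n)] for i in range(n)]
    # K = R^-1 B^T P (a row vector).
    return [sum(B[k] * P[k][j] for k in range(n)) / R for j in range(n)]

A = [[0.0, 2.0, 0.0], [0.0, 0.0, 1.0], [0.0, -17.925, -7.17]]
B = [0.0, 0.0, 1.0]
Q = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
K = lqr_gain(A, B, Q, R=1.0)
print([round(k, 4) for k in K])
```

Calling `lqr_gain` again with a different `R` or a different diagonal `Q` gives a quick way to explore how the weights shape the gain, at the cost of a slower and less accurate computation than a dedicated Riccati solver.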

The simulation result is plotted in Fig. 3.1. As expected, all three state variables converge to zero at about $t = 30$ s. Thus, the closed-loop system is asymptotically stable, which meets the requirement. Note that the control signal, $u$, is also plotted for comparison with the later cases.

3.2 Change Weighting Factor R

In this section, two new cases with small adjustments to the original controller are tested. For the first controller, $R$ is decreased by a factor of 10 from its original value. For the second controller, $R$ is increased by a factor of 10 from its original value. Table 3.1 shows the computed feedback gains $K$ for the different values of $R$. The simulation results are shown in Fig. 3.2 and Fig. 3.3 respectively.

| Weighting factor, $R$ | Controller gains, $K$ |
|---|---|
| 0.1 | [3.1623 2.9330 1.0321] |
| 1 | [1.0000 0.8291 0.1830] |
| 10 | [0.3162 0.2555 0.0425] |

Table 3.1: Different control gains $K$ with different weighting factor $R$

Figure 3.1: State response for the base case

The simulation results show that when $R$ is much smaller than the original value, the states $x$ converge to zero faster. However, the control signal, $u$, is larger than in the original simulation.

This can be explained theoretically. $R$ is the weighting factor of the input $u$ in the cost function, so if $R$ is small, $u$ can be very large because it is no longer the major cost concern (see Fig. 3.2). On the other hand, if $R$ is large, $u$ becomes very costly and the controller will try to keep it small (see Fig. 3.3).

If we compare the feedback gains calculated for the above two cases, the former has a much larger gain than the latter. In general, a larger feedback gain gives a faster system response. However, non-ideal conditions such as noise, non-linearity and saturation will pose problems if the feedback gain is too large.
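The first entry of each gain in Table 3.1 can be cross-checked by hand from the (1,1) element of the Riccati equation. The following is a sketch that assumes the plant matrices of Eq. (2.8) (the first column of $A$ is zero and $B_u = [0\ 0\ 1]^T$) and a diagonal $Q$ with first entry $q_{11}$; the symbols $p_{ij}$ for the entries of $P$ and $k_1$ for the first gain are introduced here only for illustration.

$$
\begin{aligned}
\left(A^T P + PA\right)_{11} &= 0 \quad (\text{the first column of } A \text{ is zero}),\\
\left(P B_u R^{-1} B_u^T P\right)_{11} &= \frac{p_{13}^2}{R},\\
\Rightarrow\quad q_{11} - \frac{p_{13}^2}{R} &= 0, \qquad k_1 = \frac{p_{13}}{R} = \sqrt{\frac{q_{11}}{R}},
\end{aligned}
$$

taking the positive root, as required for a stabilizing gain. With $q_{11} = 1$ this gives $k_1 = 3.1623$, $1$ and $0.3162$ for $R = 0.1$, $1$ and $10$, matching the first entries of the gains in Table 3.1.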

Figure 3.2: State response when $R = 0.1$

Figure 3.3: State response when $R = 10$

3.3 Change Weighting Factor Q

The values on the diagonal of the matrix $Q$ represent the weighting factors of their corresponding state variables. For example, putting 50 times more weight on $Q_{11}$ means that the first state variable is 50 times more important than the other two state variables in the cost function.

To compare against the original controller, where $Q = I_3$ (see Fig. 3.1), three new controllers are designed, each with 100 times the weighting on one of the states. The controller gains corresponding to each controller are listed in Table 3.2.

| Weighting factor, $Q$ | Controller gains, $K$ |
|---|---|
| diag(100, 1, 1) | [10.000 7.5930 1.0516] |
| diag(1, 100, 1) | [1.0000 3.3365 0.5165] |
| diag(1, 1, 100) | [1.0000 1.3614 5.2450] |

Table 3.2: Different control gains $K$ with different weighting factor $Q$

Figure 3.4: State response when $Q = \mathrm{diag}(100, 1, 1)$

Based on the simulation results shown in Fig. 3.4, we see that by amplifying the weight of state $x_1$, the system states converge to zero more quickly than with the original controller. The control signal is larger, due to the faster system response.

Theoretically, if the disturbance $d(t)$ is ignored, the system output is equivalent to the first state variable, $x_1(t)$. Thus, giving the first state variable a higher cost weighting is indeed a good solution, since the output dynamic performance is our main concern. It is therefore reasonable and expected to see a better (faster) system response in the simulation.

Figure 3.5: State response when $Q = \mathrm{diag}(1, 100, 1)$

Figure 3.6: State response when $Q = \mathrm{diag}(1, 1, 100)$

According to Fig. 3.5 and Fig. 3.6, we see that for $Q = \mathrm{diag}(1, 100, 1)$ and $Q = \mathrm{diag}(1, 1, 100)$, the system responses are slower than with the previous controllers. This is due to the increased weighting of state $x_2$ or $x_3$, which in turn increases the damping of the system. The control efforts are correspondingly smaller, too.

Chapter 4

State Observer Design using Pole Placement

In the previous chapter, we assumed that the state variables $x_1$, $x_2$ and $x_3$ are completely measurable. However, in most practical applications, not all state variables can be measured directly. In this chapter, the idea of state estimation is implemented. A state observer is built to estimate the state variables based on our knowledge of the input, the output, and the plant model.

For ease of investigation, we first design a state feedback controller using the pole placement method. As the plant we are studying is a 3rd-order system, the desired closed-loop characteristic polynomial, $\alpha(s)$, must be of 3rd order, too. The poles can be chosen based on the 2nd-order dominant pole strategy: two stable complex-conjugate poles are selected first, then the third pole is arbitrarily chosen to be far away from the origin, so that it decays fast enough to be ignored.

We choose $\lambda_1 = -1 + j$, $\lambda_2 = -1 - j$, and $\lambda_3 = -10$. Thus, the desired closed-loop polynomial is

$$\alpha(s) = (s + 1 - j)(s + 1 + j)(s + 10) = s^3 + 12s^2 + 22s + 20$$

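Since the controllability matrix has already been calculated in the previous chapter, we can apply Ackermann's formula to obtain the feedback gain:

$$K = \begin{bmatrix} 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} 0 & 0 & 2 \\ 0 & 1 & -7.17 \\ 1 & -7.17 & 33.4839 \end{bmatrix}^{-1} \alpha(A) = \begin{bmatrix} 10 & 4.075 & 4.83 \end{bmatrix} \tag{4.1}$$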
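The gain in Eq. (4.1) can be cross-checked without Ackermann's formula by matching coefficients. The sketch below is not the formula used in the text; it relies on the fact that, for the plant of Eq. (2.8) with $u = -Kx$, the closed-loop characteristic polynomial works out to $s^3 + (2a + k_3)s^2 + (5a + k_2)s + 2k_1$. The function names `poly_from_roots` and `place_gain` are hypothetical.

```python
def poly_from_roots(roots):
    """Coefficients (highest power first) of the monic polynomial with the given roots."""
    coeffs = [1 + 0j]
    for r in roots:
        shifted = coeffs + [0]                    # current polynomial times s
        scaled = [0] + [r * c for c in coeffs]    # current polynomial times r
        coeffs = [x - y for x, y in zip(shifted, scaled)]
    return [c.real for c in coeffs]

def place_gain(desired, a=3.585):
    """Match s^3 + (2a + k3) s^2 + (5a + k2) s + 2 k1 against the desired polynomial."""
    _, c2, c1, c0 = desired
    return [c0 / 2, c1 - 5 * a, c2 - 2 * a]

desired = poly_from_roots([-1 + 1j, -1 - 1j, -10])
K = place_gain(desired)
print(desired, K)
```

Coefficient matching is only practical here because the plant is small and single-input; for larger systems a general routine such as Ackermann's formula is the usual route.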

4.1 Design of Observer by Pole Placement

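We consider a full-order observer:

$$\dot{\hat{x}}(t) = A\hat{x}(t) + B_u u(t) + L\,[y(t) - C\hat{x}(t)] \tag{4.2}$$

where $\hat{x}(t)$ denotes the estimate of $x(t)$ and $L$ is the observer feedback gain. Let the estimation error be $\tilde{x} = x - \hat{x}$. Then,

$$
\begin{aligned}
\dot{\tilde{x}} &= \dot{x} - \dot{\hat{x}}\\
&= Ax + B_u u - \{A\hat{x} + B_u u + L(y - C\hat{x})\}\\
&= A(x - \hat{x}) - L(Cx - C\hat{x})\\
&= A(x - \hat{x}) - LC(x - \hat{x})\\
&= A\tilde{x} - LC\tilde{x}
\end{aligned}
\tag{4.3}
$$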

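If we consider

$$
\begin{aligned}
\det[sI - (A - LC)] &= \det[sI - (A - LC)]^T\\
&= \det[sI - (A - LC)^T]\\
&= \det[sI - (A^T - C^T L^T)]\\
&= \det[sI - (\bar{A} - \bar{B}\bar{K})]
\end{aligned}
\tag{4.4}
$$

where $\bar{A} = A^T$, $\bar{B} = C^T$, and $\bar{K} = L^T$, the problem takes the same form as the normal state feedback pole placement problem, with the state $x$ replaced by the estimation error $\tilde{x}$.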

By the pole placement theorem, $L$ can be found for arbitrarily chosen roots of $\det[sI - (A - LC)] = 0$. The choice of observer poles is a trade-off between speed and the constraints imposed by noise, saturation and non-linearity. A simple guideline is to place the observer poles 3-5 times faster than the control poles. We adopt this guideline, and the observer closed-loop characteristic polynomial is chosen as follows:

$$\alpha(\lambda) = (\lambda + 3 + 3i)(\lambda + 3 - 3i)(\lambda + 30) = \lambda^3 + 36\lambda^2 + 198\lambda + 540 \tag{4.5}$$

Using Ackermann's formula, we obtain

$$L^T = \begin{bmatrix} 28.8300 & -13.3180 & 107.1015 \end{bmatrix} \tag{4.6}$$
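The observer gain can be cross-checked in the same coefficient-matching style. The sketch below is not Ackermann's formula; it assumes the plant of Eq. (2.8) with $C = [1\ 0\ 0]$, for which $\det[sI - (A - LC)]$ expands to $s^3 + (2a + l_1)s^2 + (5a + 2a\,l_1 + 2l_2)s + (5a\,l_1 + 4a\,l_2 + 2l_3)$. The function name `observer_gain` and the symbols $l_1, l_2, l_3$ are introduced here for illustration.

```python
def observer_gain(desired, a=3.585):
    """Solve for L = [l1, l2, l3] so that det[sI - (A - L C)] equals the desired cubic.

    `desired` holds the coefficients [1, c2, c1, c0], highest power first.
    """
    _, c2, c1, c0 = desired
    l1 = c2 - 2 * a
    l2 = (c1 - 5 * a - 2 * a * l1) / 2
    l3 = (c0 - 5 * a * l1 - 4 * a * l2) / 2
    return [l1, l2, l3]

# Desired observer polynomial from Eq. (4.5).
L = observer_gain([1.0, 36.0, 198.0, 540.0])
print([round(v, 4) for v in L])
```

The three equations are triangular in $l_1$, $l_2$, $l_3$, so they can be solved one after another without any matrix inversion.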

Using this observer gain with the initial conditions $x(0) = \begin{bmatrix} 1 & 1 & 1 \end{bmatrix}^T$ and $\tilde{x}(0) = \begin{bmatrix} 1 & 1 & 1 \end{bmatrix}^T$, the zero-input response, the state estimation error and the control effort of the system are plotted in Fig. 4.1. We can see that the estimation error for all three state variables converges to zero, and its dynamics are much faster than the output response.

Figure 4.1: Zero-input response, state estimation error and control effort of the system with observer

4.2 Effects of Observer Pole Position

Next, we investigate the effects of the observer pole position on the state estimation error and the closed-loop control performance. In this section, two different pole placements are simulated. First, we place the observer poles exactly at the closed-loop pole positions, $\lambda_1 = -1 + i$, $\lambda_2 = -1 - i$, and $\lambda_3 = -10$. Again using Ackermann's formula, we get

$$L_1^T = \begin{bmatrix} 4.8300 & -15.2781 & 76.2547 \end{bmatrix} \tag{4.7}$$

Next, we arbitrarily select fast poles, in this case 10 times faster than the exact closed-loop pole positions: $\lambda_1 = -10 + 10i$, $\lambda_2 = -10 - 10i$, and $\lambda_3 = -100$. Then we have

$$L_2^T = \begin{bmatrix} 112.8 & 686.5 & 4066.3 \end{bmatrix} \tag{4.8}$$

Both controllers, with observer feedback gains $L_1$ and $L_2$, are simulated, and the results are compared with those for the original observer gain $L$ in Fig. 4.2 and Fig. 4.3.
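The effect of the observer pole position on the error decay can be illustrated with a small simulation of the error dynamics $\dot{\tilde{x}} = (A - LC)\tilde{x}$ alone. This is only a sketch: it uses fixed-step Euler integration, assumes an initial estimation error of $[1\ 1\ 1]^T$, and takes the three gains with the signs that reproduce the stated pole locations. It is not the Simulink model used for the figures, and the function name `error_norm` is hypothetical.

```python
import math

# Plant matrix from Eq. (2.8); C = [1 0 0] is used implicitly below.
A = [[0.0, 2.0, 0.0], [0.0, 0.0, 1.0], [0.0, -17.925, -7.17]]

def error_norm(L, t_end=5.0, dt=2e-4):
    """Euclidean norm of the estimation error at t_end, starting from [1, 1, 1]."""
    e = [1.0, 1.0, 1.0]
    for _ in range(int(round(t_end / dt))):
        # Error dynamics e_dot = (A - L C) e; with C = [1 0 0], C e is simply e[0].
        de = [sum(A[i][j] * e[j] for j in range(3)) - L[i] * e[0] for i in range(3)]
        e = [e[i] + dt * de[i] for i in range(3)]
    return math.sqrt(sum(v * v for v in e))

gains = {
    "slow (L1)": [4.83, -15.2781, 76.2547],
    "design (L)": [28.83, -13.318, 107.1015],
    "fast (L2)": [112.8, 686.5, 4066.3],
}
norms = {name: error_norm(L) for name, L in gains.items()}
for name, value in norms.items():
    print(f"{name}: {value:.3e}")
```

The remaining error after five seconds shrinks as the observer poles move further into the left half-plane. The sketch ignores measurement noise, which is exactly what penalizes the large gains of the fastest observer in practice.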

According to Fig. 4.2, the zero-input response of the system with the observer poles at the exact closed-loop pole positions is very slow and has a large overshoot. However, as the observer poles move further from the origin (more negative poles), the performance of the system with the observer approaches that of the real system without an observer.

Figure 4.2: Zero-input response of the system for different observer poles

We notice from Fig. 4.3 that the error dynamics of the system perform better (the errors decay faster) when the observer poles are further away from the origin. Here we can deduce that the observer poles have to be fast enough for the control performance to be assured. It seems that the faster the observer poles, the better the performance.

In practical applications, however, the sensor or computer sampling frequency may not be fast enough for the designed dynamics, and noise and measurement errors are amplified by a large observer feedback gain, which can cause poor performance and instability. Thus, placing the observer poles 3-5 times faster than the control poles is good enough, based on many researchers' experience.

Figure 4.3: State estimation error and control effort of the system for different observer poles

Chapter 5

Servo Control

In the previous chapters, the transient performance of the system was adjusted by state feedback, designed by the LQR or pole placement method. However, none of this takes care of the steady-state performance of the system. In this chapter, our target is to make the output of the system, $y(t)$, track a step reference input $r(t)$ (asymptotic tracking) despite a step disturbance $d(t)$ (disturbance rejection).

5.1 Servo Controller Design

Based on the general single-input single-output servo control methodology, we need to determine the servo mechanism and then stabilize the system. In our simulation, the reference signal and the disturbance signal are both step functions. Thus, their Laplace transforms are of the form $\frac{1}{s}$. However, one challenge is that the disturbance $d(t)$ does not enter directly at the output in our case. The plant block diagram is therefore first converted into an equivalent, more familiar form, as shown below:

Figure 5.1: Servo control loop and blocks

Now, the equivalent disturbance $W(s) = G_2(s)\,\mathcal{L}\{d(t)\}$ is added directly at the output of the system, with a Laplace transform of $\frac{1}{s^2}$ type. The standard procedures for servo control can be applied. The plant transfer function is given by

$$G(s) = G_1(s)G_2(s) = \frac{2}{s^3 + 7.17s^2 + 17.925s} = \frac{N(s)}{D(s)} \tag{5.1}$$

The servo mechanism is

$$\frac{1}{Q(s)} = \frac{1}{s^2} \tag{5.2}$$

where $Q(s)$ is the least common denominator of the unstable modes of $R(s)$ and $W(s)$. We cascade the servo mechanism with the plant $G(s)$ to form the generalized plant, $\bar{G}(s)$:

$$\bar{G}(s) = \frac{1}{Q(s)}G(s) = \frac{N(s)}{Q(s)D(s)} = \frac{2}{s^5 + 7.17s^4 + 17.925s^3} \tag{5.3}$$

We can now design a proper compensator to stabilize the generalized plant, since $G(s)$ is strictly proper with $N(s)$ and $D(s)$ coprime, and no root of $Q(s)$ is a zero of the plant $G(s)$. Since the generalized plant is of order 5, the controller $\bar{K}(s)$ needs to be of order 4. Let (with $A(s)$ and $B(s)$ denoting polynomials in $s$, not the state-space matrices)

$$\bar{K}(s) = \frac{B(s)}{A(s)} = \frac{B_4s^4 + B_3s^3 + B_2s^2 + B_1s + B_0}{A_4s^4 + A_3s^3 + A_2s^2 + A_1s + A_0} \tag{5.4}$$

and the desired characteristic polynomial be

$$P_c(s) = (s + 1)^9 \tag{5.5}$$

Thus, the controller $\bar{K}(s)$ can be calculated from:

$$B(s)N(s) + A(s)D(s)Q(s) = P_c(s) \tag{5.6}$$

The solution of the above equation is

$$
\begin{aligned}
A(s) &= s^4 + 1.83s^3 + 4.9539s^2 + 15.6778s - 75.2084\\
B(s) &= 192.1099s^4 + 716.0552s^3 + 18s^2 + 4.5s + 0.5\\
\bar{K}(s) &= \frac{192.1099s^4 + 716.0552s^3 + 18s^2 + 4.5s + 0.5}{s^4 + 1.83s^3 + 4.9539s^2 + 15.6778s - 75.2084}
\end{aligned}
$$

Thus, the complete controller is

$$K(s) = \frac{\bar{K}(s)}{s^2} = \frac{192.1099s^4 + 716.0552s^3 + 18s^2 + 4.5s + 0.5}{s^6 + 1.83s^5 + 4.9539s^4 + 15.6778s^3 - 75.2084s^2} \tag{5.7}$$
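The controller coefficients can be reproduced with polynomial long division. This is a sketch under the assumption that the design equation has the form $A(s)\,Q(s)D(s) + B(s)\,N(s) = (s+1)^9$ with $N(s) = 2$ and $A(s)$ monic of degree 4: since $B(s)N(s)$ has degree at most 4, $A(s)$ is the quotient of $(s+1)^9$ divided by $Q(s)D(s)$, and $2B(s)$ is the remainder. The helper name `poly_divmod` is hypothetical.

```python
def poly_divmod(num, den):
    """Long division of polynomials given as coefficient lists, highest power first."""
    num = list(num)
    quot = []
    for i in range(len(num) - len(den) + 1):
        q = num[i] / den[0]
        quot.append(q)
        for j, d in enumerate(den):
            num[i + j] -= q * d          # subtract q * den aligned at position i
    return quot, num[len(quot):]          # quotient and remainder

a = 3.585
# Binomial coefficients of (s + 1)^9, highest power first.
desired = [1.0, 9.0, 36.0, 84.0, 126.0, 126.0, 84.0, 36.0, 9.0, 1.0]
# Q(s) D(s) = s^2 * (s^3 + 2a s^2 + 5a s) = s^5 + 2a s^4 + 5a s^3.
QD = [1.0, 2 * a, 5 * a, 0.0, 0.0, 0.0]

A_poly, remainder = poly_divmod(desired, QD)
B_poly = [r / 2.0 for r in remainder]     # divide by N(s) = 2

print([round(c, 4) for c in A_poly])
print([round(c, 4) for c in B_poly])
```

The constant term of $A(s)$ comes out negative, so the controller denominator has a root in the right half-plane.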

Substituting this $K(s)$ into Fig. 5.1 and applying a constant reference of magnitude 1 and a step disturbance of magnitude 0.1 at time $t = 50$ s, the system is simulated as shown in Fig. 5.2.

From the simulation, we see that the output settles precisely at the reference value of 1 in steady state, after both the step reference and the disturbance are applied. However, the dynamic response of the system is not satisfactory, as large overshoots occur. Theoretically, the large overshoots are due to the high-order controller design. Thus, in order to design a good servo controller, the dynamic performance can be improved by using an alternative design method, which leads to the following section.

Figure 5.2: System response to step reference and disturbance

5.2 Reduced Order Servo Controller

In some systems, we can find servo controllers of lower order than the one obtained by the general method shown in the previous section. Fortunately, for our system, since the transfer function $G(s)$ already has an integrator term, $\frac{1}{s}$, the controller only needs one more integrator, $\frac{1}{s}$, to realize the servo mechanism, $\frac{1}{s^2}$. Furthermore, $G(s)$ has two stable poles with characteristic polynomial $s^2 + 7.17s + 17.925$. We can therefore place the same polynomial in the numerator of the controller, so that they cancel each other and reduce the order of the system. Note that stable pole-zero cancellation does not affect system stability. Thus, we choose:

$$K(s) = \frac{B(s)}{A(s)} = \frac{(s^2 + 7.17s + 17.925)(B_1s + B_0)}{s\,(A_3s^3 + A_2s^2 + A_1s + A_0)} \tag{5.8}$$

This leads to the closed-loop characteristic polynomial

$$\alpha(s) = A_3s^5 + A_2s^4 + A_1s^3 + A_0s^2 + 2B_1s + 2B_0 \tag{5.9}$$

Let the desired characteristic polynomial be

$$(s + 1)^5 = s^5 + 5s^4 + 10s^3 + 10s^2 + 5s + 1 \tag{5.10}$$

By comparing coefficients, we have

$$A_3 = 1, \quad A_2 = 5, \quad A_1 = 10, \quad A_0 = 10, \quad B_1 = 2.5, \quad B_0 = 0.5$$

Thus, Eqn. 5.8 becomes:

$$K(s) = \frac{B(s)}{A(s)} = \frac{2.5s^3 + 18.425s^2 + 48.3975s + 8.9625}{s^4 + 5s^3 + 10s^2 + 10s} \tag{5.11}$$
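The reduced-order design can be verified by multiplying the polynomials out. The sketch below assumes a unity-feedback loop with the plant $G(s) = 2/(s^3 + 7.17s^2 + 17.925s)$ and the controller of Eq. (5.11); the helper names `poly_mul` and `poly_add` are introduced here for illustration.

```python
def poly_mul(p, q):
    """Product of two polynomials (coefficient lists, highest power first)."""
    out = [0.0] * (len(p) + len(q) - 1)
    for i, pi in enumerate(p):
        for j, qj in enumerate(q):
            out[i + j] += pi * qj
    return out

def poly_add(p, q):
    """Sum of two polynomials, padding the shorter one with leading zeros."""
    n = max(len(p), len(q))
    p = [0.0] * (n - len(p)) + list(p)
    q = [0.0] * (n - len(q)) + list(q)
    return [x + y for x, y in zip(p, q)]

quad = [1.0, 7.17, 17.925]                       # stable plant factor s^2 + 7.17 s + 17.925
plant_num = [2.0]
plant_den = poly_mul([1.0, 0.0], quad)           # s * (s^2 + 7.17 s + 17.925)

ctrl_num = poly_mul(quad, [2.5, 0.5])            # numerator of Eq. (5.11)
ctrl_den = poly_mul([1.0, 0.0], [1.0, 5.0, 10.0, 10.0])

# Full closed-loop characteristic polynomial, before any cancellation.
closed_loop = poly_add(poly_mul(ctrl_den, plant_den), poly_mul(ctrl_num, plant_num))
# Expected factorization: (s^2 + 7.17 s + 17.925) * (s + 1)^5.
expected = poly_mul(quad, [1.0, 5.0, 10.0, 10.0, 5.0, 1.0])

print([round(c, 4) for c in ctrl_num])
print([round(c, 4) for c in closed_loop])
```

The full characteristic polynomial factors into $(s+1)^5$ times the cancelled plant factor, so the cancelled stable poles are still modes of the closed loop even though they no longer appear in the reference-to-output transfer function.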

Substituting this $K(s)$ into Fig. 5.1 and applying a constant reference of magnitude 1 and a step disturbance of magnitude 0.1 at time $t = 50$ s, as in the previous simulation, the system is simulated as shown in Fig. 5.3.

Figure 5.3: System response to step reference and disturbance

Comparing Fig. 5.3 with Fig. 5.2, the system shows a better dynamic response, with smaller overshoots and a shorter settling time. This behavior is due to the stable pole-zero cancellation, which results in a lower-order closed-loop transfer function. Moreover, this method requires a minimal amount of computation, as the order of the controlled system is reduced, which makes it highly desirable.

Chapter 6

Conclusion

In this project, the given single-input single-output (SISO) plant is converted from a transfer function expression into the state-space representation. In Chapter 2, the plant's open-loop dynamics are analyzed and simulated. The simulation results show that it is neither asymptotically stable nor BIBO stable. Thus, state feedback is needed to stabilize the system. In Chapter 3, we assume that all state variables are measurable. State feedback control using the LQR method is implemented, and the effects of changing the weighting factors $Q$ and $R$ are investigated. In Chapter 4, we consider the case when the state variables are not measurable. A full-order state observer is designed based on the pole placement method. The relationship between the observer pole location and system performance is discussed. In the last chapter, servo control, which enables reference tracking and disturbance rejection, is formulated. A general solution and a special reduced-order solution are both proposed and simulated.

You might also like

- Generator swing equation block diagram analysisDocument5 pagesGenerator swing equation block diagram analysisRojan Bhattarai100% (1)

- ME3405 Mechatronics Lab Student Name: Iskander Otorbayev Student Number: 180015732Document12 pagesME3405 Mechatronics Lab Student Name: Iskander Otorbayev Student Number: 180015732Iskander OtorbayevNo ratings yet

- Control a stationary self-balancing two-wheeled vehicleDocument7 pagesControl a stationary self-balancing two-wheeled vehiclekhooteckkienNo ratings yet

- Week 9 Labview: "Numerical Solution of A Second-Order Linear Ode"Document5 pagesWeek 9 Labview: "Numerical Solution of A Second-Order Linear Ode"Michael LiNo ratings yet

- Double Inverted Pendulum On A Cart-R07Document25 pagesDouble Inverted Pendulum On A Cart-R07Dragan ErcegNo ratings yet

- Two-degree-of-freedom mass-damper-spring system linear dynamicsDocument2 pagesTwo-degree-of-freedom mass-damper-spring system linear dynamicsReinaldy MaslimNo ratings yet

- Lab#7 Block Diagram Reduction and Analysis and Design of Feedback SystemsDocument19 pagesLab#7 Block Diagram Reduction and Analysis and Design of Feedback SystemsHammad SattiNo ratings yet

- Density Operator and Applications in Quantum and Nonlinear OpticsDocument60 pagesDensity Operator and Applications in Quantum and Nonlinear OpticsMoawwiz IshaqNo ratings yet

- Flight Direction Cosine MatrixDocument11 pagesFlight Direction Cosine MatrixsazradNo ratings yet

- Design ProjectDocument22 pagesDesign ProjectRobert T KasumiNo ratings yet

- 1 Flexible Link ProjectDocument15 pages1 Flexible Link Projectprasaad08No ratings yet

- Sliding Mode Control: EE5104 Adaptive Control System AY2009/2010 CA2 Mini-ProjectDocument24 pagesSliding Mode Control: EE5104 Adaptive Control System AY2009/2010 CA2 Mini-ProjectasbbabaNo ratings yet

- Pole Placement1Document46 pagesPole Placement1masd100% (1)

- Linear Systems Project Report Analysis and Control DesignDocument22 pagesLinear Systems Project Report Analysis and Control DesignJie RongNo ratings yet

- MCT Question BankDocument5 pagesMCT Question BankKalamchety Ravikumar SrinivasaNo ratings yet

- State Space Model TutorialDocument5 pagesState Space Model Tutorialsf111No ratings yet

- State Space DesignDocument47 pagesState Space DesigneuticusNo ratings yet

- Solve Ex 2.0Document6 pagesSolve Ex 2.0Omar Abd El-RaoufNo ratings yet

- Experiment No.03: Mathematical Modeling of Physical System: ObjectiveDocument5 pagesExperiment No.03: Mathematical Modeling of Physical System: ObjectiveSao SavathNo ratings yet

- Control Sol GA PDFDocument342 pagesControl Sol GA PDFRishabh ShuklaNo ratings yet

- Unit-III-State Space Analysis in Discrete Time Control SystemDocument49 pagesUnit-III-State Space Analysis in Discrete Time Control Systemkrushnasamy subramaniyan100% (2)

- LAB1 Report FileDocument4 pagesLAB1 Report FiletawandaNo ratings yet

- Lecture-3 Modeling in Time DomainDocument24 pagesLecture-3 Modeling in Time DomainRayana ray0% (1)

- State Errors - Steady: Eman Ahmad KhalafDocument28 pagesState Errors - Steady: Eman Ahmad KhalafAhmed Mohammed khalfNo ratings yet

- Comparison Between Full Order and Minimum Order Observer Controller For DC MotorDocument6 pagesComparison Between Full Order and Minimum Order Observer Controller For DC MotorInternational Journal of Research and DiscoveryNo ratings yet

- Experiment No.1 - Introduction To MATLAB and Simulink Software ToolsDocument2 pagesExperiment No.1 - Introduction To MATLAB and Simulink Software ToolsKalin GordanovichNo ratings yet

- System Identification Methods for Discrete Data SystemsDocument12 pagesSystem Identification Methods for Discrete Data SystemslvrevathiNo ratings yet

- Wheeled Inverted Pendulum EquationsDocument10 pagesWheeled Inverted Pendulum EquationsMunzir ZafarNo ratings yet

- Generalized Predictive Control Part II. Extensions and InterpretationsDocument12 pagesGeneralized Predictive Control Part II. Extensions and Interpretationsfiregold2100% (1)

- Bond Graph Modeling For Rigid Body Dynamics-Lecture Notes, Joseph Anand VazDocument18 pagesBond Graph Modeling For Rigid Body Dynamics-Lecture Notes, Joseph Anand VazBhupinder Singh0% (1)

- Time-Domain Solution of LTI State Equations 1 Introduction 2 ... - MITDocument32 pagesTime-Domain Solution of LTI State Equations 1 Introduction 2 ... - MITAbdul KutaNo ratings yet

- Labview GuideDocument8 pagesLabview GuideRameezNo ratings yet

- Unit 8 Inverse Laplace TransformsDocument34 pagesUnit 8 Inverse Laplace TransformsRaghuNo ratings yet

- Mechanism Final ProjectDocument14 pagesMechanism Final Projectsundari_murali100% (2)

- Digital Control Systems: Stability Analysis of Discrete Time SystemsDocument33 pagesDigital Control Systems: Stability Analysis of Discrete Time SystemsRohan100% (1)

- Embedded Model Predictive Control For An ESP On A PLCDocument7 pagesEmbedded Model Predictive Control For An ESP On A PLCRhaclley AraújoNo ratings yet
