Computer Engineering and Intelligent Systems www.iiste.org ISSN 2222-1719 (Paper) ISSN 2222-2863 (Online) Vol.4, No.6, 2013 - Selected from International Conference on Recent Trends in Applied Sciences with Engineering Applications

A Survey on Face Recognition Techniques

Jay Prakash Maurya, M.Tech* (BIST), Bhopal (M.P.)
Mr. Akhilesh A. Waoo, Prof., BIST, Bhopal (M.P.)
Dr. P.S. Patheja, Prof., BIST, Bhopal (M.P.)
Dr. Sanjay Sharma, Prof., MANIT, Bhopal (M.P.)
jpeemaurya@gmail.com akhilesh_waoo@rediffmail pspatheja@gmail.com ssharma66@rediffmail.com

Abstract
Face detection is a computer technology that determines the locations and sizes of human faces in arbitrary (digital) images. It detects facial features and ignores anything else, such as buildings, trees and bodies. Face detection can be regarded as a specific case of object-class detection, in which the task is to find the locations and sizes of all objects in an image that belong to a given class; examples include upper torsos, pedestrians, and cars. Face detection can also be regarded as a more general case of face localization. Face detection is currently an active research area, and it is commonly performed using ANN, CBIR, LDA and PCA.
Keywords: ANN, CBIR, LDA, PCA

I. INTRODUCTION
Early face-detection algorithms focused on the detection of frontal human faces, whereas newer algorithms attempt to solve the more general and difficult problem of multi-view face detection, that is, the detection of faces that are either rotated along the axis from the face to the observer (in-plane rotation), or rotated along the vertical or left-right axis (out-of-plane rotation), or both. These algorithms take into account variations in the image or video caused by factors such as face appearance, lighting, and pose.

Many algorithms implement the face-detection task as a binary pattern-classification task. That is, the content of a given part of an image is transformed into features, after which a classifier trained on example faces decides whether that particular region of the image is a face or not. Often, a window-sliding technique is employed: the classifier is used to classify the (usually square or rectangular) portions of an image, at all locations and scales, as either faces or non-faces (background pattern).
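The window-sliding technique described above can be illustrated with a minimal sketch. The code below is an assumption-laden illustration, not a method taken from any cited system: the names sliding_windows, detect and toy_classifier are hypothetical, and the "classifier" is a stand-in brightness test rather than a model trained on example faces.

```python
from typing import Callable, Iterator, List, Tuple

Image = List[List[float]]        # grayscale image as rows of pixel values
Window = Tuple[int, int, int]    # (row, col, size) of a square window


def sliding_windows(height: int, width: int,
                    sizes: List[int], stride: int) -> Iterator[Window]:
    """Yield every square window position at every requested scale."""
    for size in sizes:
        for row in range(0, height - size + 1, stride):
            for col in range(0, width - size + 1, stride):
                yield (row, col, size)


def detect(image: Image, classify: Callable[[Image], bool],
           sizes: List[int], stride: int = 1) -> List[Window]:
    """Return the windows that the binary classifier labels as faces."""
    height, width = len(image), len(image[0])
    hits = []
    for row, col, size in sliding_windows(height, width, sizes, stride):
        patch = [r[col:col + size] for r in image[row:row + size]]
        if classify(patch):
            hits.append((row, col, size))
    return hits


def toy_classifier(patch: Image) -> bool:
    """Hypothetical stand-in for a trained classifier: bright patch = 'face'."""
    pixels = [p for r in patch for p in r]
    return sum(pixels) / len(pixels) > 0.9


# 4x4 toy image with a bright 2x2 block in the lower-right corner.
image = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
]
detections = detect(image, toy_classifier, sizes=[2, 3])
print(detections)  # only the window covering the bright block is reported
```

In a real detector the classifier would operate on extracted features rather than raw brightness, and overlapping detections at neighbouring positions and scales would normally be merged.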
Images with a plain or static background are easy to process: once the background is removed, only the faces are left, assuming the image contains only a frontal face. Using skin color to find face segments is a vulnerable technique. The database may not contain all possible skin colors, lighting can affect the results, and inanimate objects with the same color as skin can be picked up, since the technique relies on color segmentation. Its advantages are that it places no restriction on the orientation or size of faces, and that a good algorithm can handle complex backgrounds [1].

A face model can contain the appearance, shape, and motion of faces. There are several face shapes; some common ones are oval, rectangle, round, square, heart, and triangle. Motions include, but are not limited to, blinking, raised eyebrows, flared nostrils, a wrinkled forehead, and an opened mouth. Face models cannot represent every person making every expression, but the technique does achieve an acceptable degree of accuracy [3]. The models are passed over the image to find faces; however, this technique works better for face tracking. Once the face is detected, the model is laid over the face and the system is able to track face movements.

II. TECHNIQUES OF FACE DETECTION
Methods for detecting human faces in color videos or images combine several ways of detecting color, shape, and texture. First, a skin color model is used to single out objects of that color. Next, face models are used to eliminate false detections from the color model and to extract facial features such as the eyes, nose, and mouth.

LDA
Linear discriminant analysis (LDA) and the related Fisher's linear discriminant are methods used in statistics, pattern recognition and machine learning to find a linear combination of features which characterizes or separates two or more classes of objects or events.
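As an illustration of such a linear combination, the following sketch computes the two-class Fisher direction, proportional to S_W^{-1}(m_1 - m_0), for two-dimensional points. It is a minimal example under stated assumptions: the data are invented toy points, the helper names (class_mean, scatter, fisher_direction, classify) are hypothetical, and the midpoint threshold is one simple choice among several.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def class_mean(points: List[Point]) -> Point:
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def scatter(points: List[Point], m: Point) -> List[List[float]]:
    """2x2 scatter matrix: sum of outer products of the centered points."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for x, y in points:
        dx, dy = x - m[0], y - m[1]
        s[0][0] += dx * dx
        s[0][1] += dx * dy
        s[1][0] += dy * dx
        s[1][1] += dy * dy
    return s


def fisher_direction(class0: List[Point], class1: List[Point]) -> Point:
    """Direction proportional to S_W^{-1} (m1 - m0) for 2-D data."""
    m0, m1 = class_mean(class0), class_mean(class1)
    s0, s1 = scatter(class0, m0), scatter(class1, m1)
    # Within-class scatter S_W = S_0 + S_1, written out as [[a, b], [c, d]].
    a, b = s0[0][0] + s1[0][0], s0[0][1] + s1[0][1]
    c, d = s0[1][0] + s1[1][0], s0[1][1] + s1[1][1]
    det = a * d - b * c
    dmx, dmy = m1[0] - m0[0], m1[1] - m0[1]
    # Apply the closed-form inverse of a 2x2 matrix to the mean difference.
    return ((d * dmx - b * dmy) / det, (-c * dmx + a * dmy) / det)


# Invented toy data: both classes vary more along x than along y.
class0 = [(0.0, 0.0), (2.0, 0.0), (0.0, 1.0), (2.0, 1.0)]
class1 = [(3.0, 2.0), (5.0, 2.0), (3.0, 3.0), (5.0, 3.0)]

w = fisher_direction(class0, class1)


def project(p: Point) -> float:
    return w[0] * p[0] + w[1] * p[1]


# Threshold halfway between the projected class means.
threshold = (project(class_mean(class0)) + project(class_mean(class1))) / 2


def classify(p: Point) -> int:
    return 1 if project(p) > threshold else 0


print(w, [classify(p) for p in class0 + class1])
```

Although the class means differ more along x than along y, the computed direction weights y more heavily, because the within-class scatter is smaller along that axis.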
The resulting combination may be used as a linear classifier or, more commonly, for dimensionality reduction before later classification. LDA is closely related to ANOVA (analysis of variance) and regression analysis, which also attempt to express one dependent variable as a linear combination of other features or measurements [1][2]. In those two methods, however, the dependent variable is a numerical quantity, while for LDA it is a categorical variable (i.e. the class label). Logistic regression and probit regression are more similar to LDA, as they also explain a categorical variable. These other methods are preferable in applications where it is not reasonable to assume that the independent variables are normally distributed, which is a fundamental assumption of the LDA method.

LDA is also closely related to principal component analysis (PCA) and factor analysis in that they both look for linear combinations of variables which best explain the data [3]. LDA explicitly attempts to model the difference between the classes of data. PCA, on the other hand, does not take into account any difference in class, and factor analysis builds the feature combinations based on differences rather than similarities. Discriminant analysis also differs from factor analysis in that it is not an interdependence technique: a distinction between independent variables and dependent variables (also called criterion variables) must be made. LDA works when the measurements made on independent variables for each observation are continuous quantities. When dealing with categorical independent variables, the equivalent technique is discriminant correspondence analysis [4][5].

EIGENFACE
Eigenfaces are a set of eigenvectors used in the computer vision problem of human face recognition. The approach of using eigenfaces for recognition was developed by Sirovich and Kirby (1987) and used by Matthew Turk and Alex Pentland in face classification. It is often considered the first successful example of facial recognition technology. These eigenvectors are derived from the covariance matrix of the probability distribution of the high-dimensional vector space of possible faces of human beings.

Eigen Face Generation
A set of eigenfaces can be generated by performing a mathematical process called principal component analysis (PCA) on a large set of images depicting different human faces. Informally, eigenfaces can be considered a set of "standardized face ingredients", derived from statistical analysis of many pictures of faces. Any human face can be considered to be a combination of these standard faces.
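The idea of a face as a combination of standard faces can be sketched as follows. This is an illustration only: the four-pixel "images", the mean face and the two orthonormal eigenfaces are hand-picked hypothetical values, whereas a real system would obtain the mean and the eigenfaces by running PCA on a training set of face images.

```python
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def project(face: Vector, mean_face: Vector, eigenfaces: List[Vector]) -> Vector:
    """Weights of a face: dot product of the centered face with each eigenface."""
    centered = [f - m for f, m in zip(face, mean_face)]
    return [dot(centered, e) for e in eigenfaces]


def reconstruct(weights: Vector, mean_face: Vector, eigenfaces: List[Vector]) -> Vector:
    """Mean face plus the weighted sum of eigenfaces."""
    face = list(mean_face)
    for w, e in zip(weights, eigenfaces):
        face = [f + w * x for f, x in zip(face, e)]
    return face


# Hypothetical 4-pixel "images"; the eigenfaces are orthonormal by construction.
mean_face = [0.5, 0.5, 0.5, 0.5]
eigenfaces = [
    [0.5, 0.5, -0.5, -0.5],   # contrast between first and second half
    [0.5, -0.5, 0.5, -0.5],   # contrast between alternating pixels
]
face = [0.9, 0.7, 0.3, 0.1]

weights = project(face, mean_face, eigenfaces)        # compact representation
approx = reconstruct(weights, mean_face, eigenfaces)  # face rebuilt from weights
print([round(w, 3) for w in weights], [round(v, 3) for v in approx])
```

Only the list of weights needs to be stored per face, one value per eigenface. In this toy case the face lies exactly in the span of the two eigenfaces, so the reconstruction recovers it; in general the reconstruction is an approximation.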
For example, one's face might be composed of the average face plus 10% from eigenface 1, 55% from eigenface 2, and even -3% from eigenface 3. Remarkably, it does not take many eigenfaces combined together to achieve a fair approximation of most faces. Also, because a person's face is not recorded as a digital photograph, but instead as just a list of values (one value for each eigenface in the database used), much less space is taken for each person's face.

The eigenfaces that are created appear as light and dark areas arranged in a specific pattern. This pattern is how different features of a face are singled out to be evaluated and scored. For example, there may be a pattern that evaluates symmetry, the presence of any style of facial hair, the position of the hairline, or the size of the nose or mouth. Other eigenfaces have patterns that are less simple to identify, and the image of the eigenface may look very little like a face.

The technique used in creating eigenfaces and using them for recognition is also used outside of facial recognition, for example in handwriting analysis, lip reading, voice recognition, sign language and hand gesture interpretation, and medical imaging analysis. Therefore, some do not use the term eigenface, but prefer the term 'eigen image'.

IV. NEURAL NETWORK FACE DETECTION
Pattern recognition is a modern-day machine intelligence problem with numerous applications in a wide field, including face recognition, character recognition, speech recognition and other types of object recognition. The field of pattern recognition is still very much in its infancy, although in recent years some of the barriers that hampered automated pattern recognition systems have been lifted thanks to advances in computer hardware, which have provided machines capable of faster and more complex computation. Face recognition, although a trivial task for the human brain, has proved to be extremely difficult to imitate artificially.
Face recognition is commonly used in applications such as human-machine interfaces and automatic access control systems. It involves comparing an image with a database of stored faces in order to identify the individual in the input image. The related task of face detection has direct relevance to face recognition, because images must be analyzed and faces located before they can be recognized. Detecting faces in an image can also help to focus the computational resources of the face recognition system, optimizing the system's speed and performance.

Face detection involves separating image windows into two classes: one containing faces (targets), and one containing the background (clutter). It is difficult because, although commonalities exist between faces, they can vary considerably in terms of age, skin colour and facial expression. The problem is further complicated by differing lighting conditions, image qualities and geometries, as well as the possibility of partial occlusion and disguise. An ideal face detector would therefore be able to detect the presence of any face under any set of lighting conditions, upon any background. For basic pattern recognition systems, some of these effects can be avoided by assuming and ensuring a uniform background and fixed, uniform lighting conditions. This assumption is acceptable for some applications, such as the automated separation of nuts from screws on a production line, where lighting conditions can be controlled and the image background will be uniform. For many applications, however, it is unsuitable, and systems must be designed to accurately classify images subject to a variety of unpredictable conditions.

Each technique takes a slightly different approach to the face detection problem, and although most produce encouraging results, they are not without their limitations. The next section outlines the various approaches, and gives six examples of different face detection systems developed.

III. CBIR
Recent years have seen a rapid increase in the size of digital image collections. This ever-increasing amount of multimedia data creates a need for new, sophisticated methods to retrieve the information one is looking for. The classical approach alone can no longer keep up with the rapid growth of available data. Content-based image retrieval has therefore attracted many researchers from various fields, and many image retrieval systems now exist. Retrieval of images from an image archive using suitable features extracted from the image content is currently an active research area. The CBIR problem arises from the need to search huge image databases efficiently and effectively. For the purpose of content-based image retrieval (CBIR), an up-to-date comparison of state-of-the-art low-level color and texture feature extraction approaches is discussed. In this paper we propose a new approach for CBIR in which image classification is driven by interactive user feedback, using a suitable classifier.

Figure 1. Image Retrieval Using Relevance Feedback

This approach is applied to improve retrieval performance. Our aim is to select the most informative images with respect to the query image by ranking the retrieved images. The approach uses suitable feedback to repeatedly train a histogram intersection kernel based classifier. The proposed approach retrieves mostly informative and correlated images. Figure 1 shows the systematic retrieval process.

Color Feature
Color is one of the most widely used features in image retrieval and indexing. However, the same semantic content can be described inaccurately because of different color quantizations and/or the uncertainty of human perception, so it is important to capture this inaccuracy when defining the features. We apply fuzzy logic to the traditional color histogram to help capture this uncertainty in color indexing [3], [2]. In image retrieval systems the color histogram is the most commonly used feature. The main reason is that it is independent of image size and orientation. It is also one of the most straightforward features utilized by humans for visual recognition and discrimination. Statistically, it denotes the joint probability of the intensities of the three color channels. Once the image is segmented, the color histogram is extracted from each region. The major statistical data extracted are the histogram mean, standard deviation, and median for each color channel, i.e. red, green, and blue, so in total 3 × 3 = 9 features per segment are obtained. Not all segments need to be considered; restricting the computation to the dominant segments speeds up the calculation and may not significantly affect the end result.

Texture Feature
Texture is another important property of images. Various texture representations have been investigated in pattern recognition and computer vision. Basically, texture representation methods can be classified into two categories: structural and statistical. Structural methods, including morphological operators and adjacency graphs, describe texture by identifying structural primitives and their placement rules. They tend to be most effective when applied to textures that are very regular. There is no precise definition of texture. However, one can define texture as the visual patterns that have properties of homogeneity that do not result from the presence of only a single color or intensity. Texture determination is ideally suited for medical image retrieval. In this work, the gray level co-occurrence matrix is computed, and a number of statistical measures are derived from it.
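A gray level co-occurrence matrix and a few measures derived from it can be sketched as follows. The paper does not state which offset, normalization or measures were used, so the choices below (a single horizontal offset, ordered pixel pairs, and the commonly used contrast, energy and homogeneity measures) are assumptions, and the function names glcm and texture_measures are hypothetical.

```python
from typing import Dict, List


def glcm(image: List[List[int]], levels: int,
         dr: int = 0, dc: int = 1) -> List[List[float]]:
    """Normalized co-occurrence matrix for the pixel offset (dr, dc).

    Entry [i][j] is the fraction of ordered pixel pairs in which a pixel
    of gray level i has a neighbour of gray level j at that offset.
    """
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
    total = sum(sum(row) for row in counts)
    return [[n / total for n in row] for row in counts]


def texture_measures(p: List[List[float]]) -> Dict[str, float]:
    """Contrast, energy and homogeneity computed from a normalized GLCM."""
    levels = len(p)
    cells = [(i, j, p[i][j]) for i in range(levels) for j in range(levels)]
    return {
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "energy": sum(v * v for _, _, v in cells),
        "homogeneity": sum(v / (1 + abs(i - j)) for i, j, v in cells),
    }


# Toy image with two gray levels: a dark left half and a bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
p = glcm(image, levels=2)
measures = texture_measures(p)
print(p, measures)
```

Here the horizontal neighbour pairs (0,0), (0,1) and (1,1) each occur three times out of nine, so each of those entries of the matrix is 1/3. Practical implementations often make the matrix symmetric and average over several offsets and directions.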
The autocorrelation function of an image is used to quantify the regularity and the coarseness of a texture. This function is defined for an image I as: The notion of texture generally refers to properties such as homogeneity and directional information.

Shape Feature
Shape is used as another feature in image retrieval. However, retrieval by shape is useful only in very restricted environments which provide a good basis for segmentation (e.g. art items in front of a homogeneous background). Shape descriptors are diverse, e.g. turning angle functions, deformable templates, algebraic moments, and Fourier coefficients.

Combinations of Color, Texture, Shape Features
Similarity is based on visual characteristics such as dominant colors, shapes and textures. Many systems provide the possibility to combine or select between one or more models. In some systems, a combination of color, texture and contour features is used. Another approach extends the color histogram with textural information by weighting each pixel's contribution with its Laplacian. Other work provides several different techniques for image retrieval that use feedback from the user to automatically select or weight the different models, such that the requirements of the user are best fulfilled. Several systems perform segmentation and characterize each region by color, texture, and shape. These systems crucially depend on a good segmentation, which is not always available. This can produce retrieval results which seem to have nothing in common with the query. For example, among various flowers a system may return an image that does not display a star-shaped object but for which the segmentation delivered a star-like region as an artificial boundary within a textured region. We therefore consider segmentation-based methods reliable only for applications that allow for a precise segmentation.

Similarity Computation
Similarity measurement is a key to CBIR algorithms.
These algorithms search image database to find images similar to a given query, so, they should be able to evaluate the amount of similarities between images. Therefore, feature vectors, extracted from the database image and from the query, are often passed through the distance function d. The aim of any distance function (or similarity measure) is to calculate how close the feature vectors are to each other. There exist several common techniques for measuring the distance (dissimilarity) between two N-dimensional feature vector f and g. Each metric has some important characteristics related to an application. Image Classification It is processing techniques which apply quantitative methods to the values in a digital yield or remotely sensed scene to group pixels with similar digital number values into feature classes or categories Image classification and annotation are important problems in computer vision, but rarely considered together. Intuitively, annotations provide evidence for the class label, and the class label provides evidence for annotations. Image classification is conducted in three modes: supervised, unsupervised, and hybrid. General, a supervised classification requires the manual identification of known surface features within the imagery and then using a statistical package to determine the spectral signature of the identified feature. The spectral fingerprints of the identified features are then used to classify the rest of the image. An unsupervised classification scheme uses spatial statistics (e.g. the ISODATA algorithm) to classify the image into a predetermined number of categories 14

Computer Engineering and Intelligent Systems www.iiste.org ISSN 2222-1719 (Paper) ISSN 2222-2863 (Online) Vol.4, No.6, 2013 - Selected from International Conference on Recent Trends in Applied Sciences with Engineering Applications

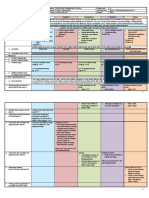

(classes). These classes are statistically significant within the imagery, but may not represent actual surface features of interest. Hybrid classification uses both techniques to make the process more efficient and accurate. We can use SVM (Support Vector Machine) for classification. Support Vector Machine, an important machine learning technique has been used efficiently for variety of classification purposes like object based image analysis, hand written digit recognition, and image segmentation among others. SVM can be used efficiently as a binary classifier as well as multi class classifier purpose. A Support Vector Machine (SVM) performs classification by constructing an N-dimensional hyper plane that optimally separates the data into two categories. SVM models are closely related to neural networks. In fact, a SVM model using a sigmoid kernel function is equivalent to a two-layer, perceptron neural network. Support Vector Machine (SVM) models are a close cousin to classical multilayer perceptron neural networks. Using a kernel function, SVMs are an alternative training method for polynomial, radial basis function and multi-layer perceptron classifiers in which the weights of the network are found by solving a quadratic programming problem with linear constraints, rather than by solving a non-convex, unconstrained minimization problem as in standard neural network training. Linear SVM is the simplest type of SVM classifier which separates the points into two classes using a linear hyper plane that will maximize the margin between the positive and negative set. The concept of non linearity comes when the data points cannot be classified into two different classes using a simple hyper plane, rather a nonlinear curve is required. In this case, the data points are mapped non-linearly to a higher dimensional space so that they become linearly separable. Table-1 given explain comparison between image classification techniques. 
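The maximum-margin idea behind a linear SVM can be illustrated without solving the quadratic program. The sketch below only compares a few hand-picked candidate hyperplanes by their geometric margin on invented toy data; it is not an SVM training algorithm, and the function name geometric_margin and the candidate labels A to D are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Hyperplane = Tuple[Tuple[float, float], float]   # (w, b) for w.x + b = 0


def geometric_margin(w: Tuple[float, float], b: float,
                     points: List[Point], labels: List[int]) -> float:
    """Smallest signed distance y * (w.x + b) / ||w|| over all points.

    The value is positive only if the hyperplane separates the classes.
    """
    norm = math.hypot(w[0], w[1])
    return min(y * (w[0] * x[0] + w[1] * x[1] + b) / norm
               for x, y in zip(points, labels))


# Invented toy data: the negative class lies at x = 0, the positive at x = 2.
points = [(0.0, 0.0), (0.0, 1.0), (2.0, 0.0), (2.0, 1.0)]
labels = [-1, -1, 1, 1]

# Hand-picked candidate hyperplanes (assumptions, not learned from data).
candidates: Dict[str, Hyperplane] = {
    "A": ((1.0, 0.0), -1.0),   # vertical line x = 1, midway between classes
    "B": ((1.0, 0.0), -0.5),   # vertical line x = 0.5, closer to the negatives
    "C": ((1.0, 1.0), -1.5),   # slanted line x + y = 1.5
    "D": ((0.0, 1.0), -0.5),   # horizontal line y = 0.5, does not separate
}

margins = {name: geometric_margin(w, b, points, labels)
           for name, (w, b) in candidates.items()}
best = max(margins, key=margins.get)
print(margins, best)
```

Candidates A, B and C all separate the two classes, but A leaves the largest distance to the nearest point, which is the criterion a linear SVM optimizes over all possible hyperplanes. Candidate D has a negative margin because it misclassifies some points.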
| Parameter | Artificial neural network | Support vector machine | Fuzzy logic | Genetic algorithm |
|---|---|---|---|---|
| Type of approach | Non parametric | Non parametric with binary classifier | Stochastic | Large time series data |
| Non linear decision boundaries | Efficient when the data have only few input variables | Efficient when the data have more input variables | Depends on prior knowledge for decision boundaries | Depends on the direction of decisions |
| Training speed | Network structure, momentum rate, learning rate, converging criteria | Training data size, kernel parameter | Iterative application of the fuzzy integral | Referring irrelevant and noise genes |
| Accuracy | Depends on number of input classes | Depends on selection of optimal hyper plane | Selection of cutting threshold | Selection of genes |
| General performance | Network structure | Kernel parameter | Fused fuzzy integral | Feature selection |

Table 1. Comparison of image classification techniques

CONCLUSION & FUTURE WORK
From a research point of view, no study is complete enough to be called perfect. This paper points to some areas of interest for further research. With crime a present concern, we should take care of our safety, and this field of research can help in that area. Two algorithms can be combined to obtain better results with respect to accuracy and broader classes.

REFERENCES
[1] Aamir Khan, Hasan Farooq, "Principal Component Analysis-Linear Discriminant Analysis Feature Extractor for Pattern Recognition," IJCSI International Journal of Computer Science Issues, Vol. 8, Issue 6, No. 2, Nov. 2011.
[2] Adam D. Gilbert, Ran Chang, and Xiaojun Qi, "A Retrieval Pattern Based Inter Query Learning Approach for Content Based Image Retrieval," Proceedings of 2010 IEEE 17th International Conference on Image Processing, September 26-29, 2010, Hong Kong.
[3] Amy Safrina Binti Mohd Ali, "A Real Time Face Detection System," 2010.
[4] Approach to ASR, 11th International Conference on Information Sciences, Signal Processing and their Applications, IEEE, 2012.
[5] Boštjan Vesnicer, Jerneja Žganec Gros, Nikola Pavešić, Vitomir Štruc, "Face Recognition using Simplified Probabilistic Linear Discriminant Analysis," International Journal of Advanced Robotic Systems, 2012.


[6] Chen Feng, Yu Song-nian, "Content-Based Image Retrieval by DTCWT Feature."
[7] Chih-Chin Lai, Ying-Chuan Chen, "A User-Oriented Image Retrieval System Based on Interactive Genetic Algorithm," IEEE Transactions on Instrumentation and Measurement, Vol. 60, No. 10, October 2011.
[8] Douglas R. Heisterkamp, "Building a Latent Semantic Index of an Image Database from Patterns of Relevance Feedback"; Dr. Fuhui Long, Dr. Hongjiang Zhang and Prof. David Dagan Feng, "Fundamentals of Content Retrieval."
[9] Feng Kang, Rong Jin, Joyce Y. Chai, "Regularizing Translation Models for Better Automatic Image Annotation," Thirteenth ACM International Conference on Information and Knowledge Management, 8-13 November 2004.
[10] G. Sasikala, R. Kowsalya, Dr. M. Punithavalli, "A Comparative Study of Dimension Reduction for Content Based Image Retrieval," The International Journal of Multimedia & Its Applications (IJMA), Vol. 2, No. 3, August 2010.
[11] Ghanshyam Raghuwanshi, Nishchol Mishra, Sanjeev Sharma, "Content Based Image Retrieval using Implicit and Explicit Feedback with Interactive Genetic Algorithm," International Journal of Computer Applications, Vol. 43, No. 16, April 2012.
[12] H. Hu, P. Zhang, F. De la Torre, "Face Recognition using Enhanced Linear Discriminant Analysis," IET Computer Vision, March 2009.
[13] Haitao Zhao and Pong Chi Yuen, "Incremental Linear Discriminant Analysis for Face Recognition," IEEE Transactions on Systems, Man, and Cybernetics, Part B: Cybernetics, Vol. 38, No. 1, Feb. 2008.
[14] Hao Ma, Jianke Zhu, M. R.-T. Lyu, I. King, "Bridging the Semantic Gap between Image Contents and Tags," IEEE Transactions on Multimedia, Vol. 12, Issue 5, Aug. 2010.
[15] Hazim Kemal Ekenel, Rainer Stiefelhagen, "Two-class Linear Discriminant Analysis for Face Recognition," Interactive Systems Labs, Computer Science Department, Universität Karlsruhe (TH).
[16] Hythem Ahmed, Jedra Mohamed, Zahid Noureddine, "Face Recognition Systems Using Relevance Weighted Two Dimensional Linear Discriminant Analysis Algorithm," Journal of Signal and Information Processing, 2012.
[17] Hui Hui Wang, Dzulkifli Mohamad, N. A. Ismail, "Image Retrieval: Techniques, Challenge and Trend," International Conference on Machine Vision, Image Processing and Pattern Analysis, Bangkok, Dec. 2009.
[18] Hythem Ahmed, Jedra Mohamed, Zahid Noureddine, "Face Recognition Systems Using Relevance Weighted Two Dimensional Linear Discriminant Analysis Algorithm," Journal of Signal and Information Processing, 2012, 3, 130-135, doi:10.4236/jsip.2012.31017, published online February 2012.
[19] J. Han, K. N. Ngan, M. Li, and H. J. Zhang, "A Memory Learning Framework for Effective Image Retrieval," IEEE Trans. Image Processing, Vol. 14, No. 4, pp. 511-524, 2005.
[20] Jerome Da Rugna, Gael Chareyron and Hubert Konik, "About Segmentation Step in Content-based Image Retrieval Systems."
[21] Jan-Ming Ho, Shu-Yu Lin, Chi-Wen Fann, Yu-Chun Wang, "A Novel Content Based Image Retrieval System using K-means with Feature Extraction," 2012 International Conference on Systems and Informatics (ICSAI 2012).


This academic article was published by The International Institute for Science, Technology and Education (IISTE). More information about the publisher can be found on the IISTE homepage: http://www.iiste.org


- Chapter 1Document20 pagesChapter 1AmanNo ratings yet

- Face Recognition: Identifying Facial Expressions Using Back PropagationDocument5 pagesFace Recognition: Identifying Facial Expressions Using Back PropagationInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Referensi Mas Surya PDFDocument8 pagesReferensi Mas Surya PDFJamil Al-idrusNo ratings yet

- Missing Person Detection Using AI-IJRASETDocument6 pagesMissing Person Detection Using AI-IJRASETIJRASETPublicationsNo ratings yet

- 10 Chapter2Document25 pages10 Chapter2quinnn tuckyNo ratings yet

- Final Report On Face RecognitionDocument22 pagesFinal Report On Face RecognitionSubhash Kumar67% (6)

- Emotion Based Music PlayerDocument3 pagesEmotion Based Music Playerpratiksha jadhavNo ratings yet

- Real Time Face Detection and Tracking Using Opencv: Mamata S.KalasDocument4 pagesReal Time Face Detection and Tracking Using Opencv: Mamata S.KalasClevin CkNo ratings yet

- A Survey On Emotion Based Music Player Through Face Recognition SystemDocument6 pagesA Survey On Emotion Based Music Player Through Face Recognition SystemIJRASETPublicationsNo ratings yet

- Face RecognitionDocument27 pagesFace RecognitionManendra PatwaNo ratings yet

- Face Recognition: A Literature Review: A. S. Tolba, A.H. El-Baz, and A.A. El-HarbyDocument16 pagesFace Recognition: A Literature Review: A. S. Tolba, A.H. El-Baz, and A.A. El-HarbyMarta FloresNo ratings yet

- Face DetectionDocument21 pagesFace Detectionعلم الدين خالد مجيد علوانNo ratings yet

- A Literature Survey On Face Recognition TechniquesDocument6 pagesA Literature Survey On Face Recognition TechniquesseventhsensegroupNo ratings yet

- Face Recognition Literature ReviewDocument7 pagesFace Recognition Literature Reviewaflsqrbnq100% (1)

- B2Document44 pagesB2orsangobirukNo ratings yet

- 2D Face Detection and Recognition MSC (I.T)Document61 pages2D Face Detection and Recognition MSC (I.T)Santosh Kodte100% (1)

- Detecting Faces in Images: A SurveyDocument25 pagesDetecting Faces in Images: A SurveySetyo Nugroho100% (17)

- Face RecognitionDocument23 pagesFace RecognitionAbdelfattah Al ZaqqaNo ratings yet

- Robust Decision Making System Based On Facial Emotional ExpressionsDocument6 pagesRobust Decision Making System Based On Facial Emotional ExpressionsInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Face Recognization: A Review: International Journal of Application or Innovation in Engineering & Management (IJAIEM)Document3 pagesFace Recognization: A Review: International Journal of Application or Innovation in Engineering & Management (IJAIEM)International Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Face Recognition Using Feature Extraction Based On Descriptive Statistics of A Face ImageDocument5 pagesFace Recognition Using Feature Extraction Based On Descriptive Statistics of A Face ImageAnahi Fuentes RodriguezNo ratings yet

- A New Approach For Mood Detection Via Using Principal Component Analysis and Fisherface Algorithm 88 92Document5 pagesA New Approach For Mood Detection Via Using Principal Component Analysis and Fisherface Algorithm 88 92yokazukaNo ratings yet

- SVM Baysian PatilDocument7 pagesSVM Baysian PatilVSNo ratings yet
