Enriching Experience

Bio: Dr George Ghinea is a Reader in Computing at Brunel University.

His interests lie in perceptual multimedia communications and he leads a group of six researchers exploring ways of furthering our understanding of interacting with computers.

The idea of computer-generated smell, known as olfactory data, is fast becoming a reality. It's an area of multimedia research that is generating interest right across the industry, as its possibilities seem endless. The idea that, through the use of multiple media objects, consumers could be reached on a multi-sensory level is a tantalising one. Traditionally, media objects have engaged just two of our senses, namely sight and hearing. Not for much longer, it seems.

Olfaction, or smell, is one of the last challenges that multimedia applications have to conquer. The demand for increasingly sophisticated multimodal systems and applications that are highly interactive and multi-sensory in nature has led to the use of new media and new user interface devices in computing. Olfactory data is one such media object currently generating a lot of interest. When several media objects are combined in this way, information may be conveyed by the relationship between them as well as by the individual media objects. Benefits of multimodal systems include synergy, redundancy and an increased bandwidth of information transfer.

Using olfactory data in computing is not without its challenges, however. One of these is the fact that olfactory data is virtual: unlike other data objects, it cannot be stored in a computer data format. As such, the scents have to be stored in an external peripheral device attached to a computer, and they are emitted when the computer triggers the device's smell output. Nonetheless, areas of computing that have experienced significant usage of olfactory data over the years include virtual reality, multimodal displays and alerting systems, and media and entertainment systems.

Sensory overload

If we develop an effective way to involve scent in the user's experience, it will mean that perception of a multimedia presentation or application is enriched in ways previously unimaginable. A benefit of having such multimodal information is that it appeals to all our senses at the same time, making the user experience even more complete and absorbing. Our visual and auditory senses are already overburdened with the visual and auditory cues we are required to respond to in computer information systems. Our attention spans are short and new techniques are needed to grab our awareness.

Applications used to gain the user's attention, more popularly known as notification or alerting systems, represent one of the areas in which olfactory data output has been found to be quite beneficial. Research now distinguishes between odour output to convey information, where the smell released is related to the information to be conveyed (olfactory icons), and smell output to provide an abstract relationship with the data it expresses (also known as smicons).

Another potential problem encountered when trying to develop and produce olfactory display devices is that, whilst it is usually easy to combine other data formats (sound and video) to produce a desired output, it is extremely difficult to do the same with odour, as a standard additive process or model for combining different scents does not exist. Consequently, more often than not, smell-generating devices are limited to producing the specific smells that have been loaded into their individual storage systems; the technology has its limits at the moment. Nonetheless, there has been some olfactory data usage in the computing field over the years, with a number of researchers building their own computer-controlled smell-generating systems and others relying on the few that are commercially available. More recently, researchers in Japan [3] have developed a smell-generating device which works by combining chemicals to produce the desired scents as and when required, but this device is still at the development stage and is not yet commercially available.

Smell of success

Researchers in Scotland [1] have also used olfactory data for multimedia content searching, browsing and retrieval, more specifically to aid in the search of digital photo collections. In their experiment, they compared the effects of text-based tagging and smell-based tagging when users search for and retrieve photos from a digital library. To achieve this, they developed an olfactory photo browsing and searching tool, which they called Olfoto. Smell and text tags were created from participants' descriptions of their own personal photographs, and participants then had to use these tags to tag their photos.

Fragra, on the other hand, is a visual-olfactory virtual reality game [2] that enables players to explore the interactive relationship between olfaction and vision. The objective of the game is for the player to identify whether the visual cues experienced correspond to the olfactory cues presented at the same time. In the game, there is a mysterious tree that bears many kinds of food. Players can catch these food items by moving their right hands, and when they catch an item and move it in front of their nose, they smell something that does not necessarily correspond to the food item they are holding. A similar interactive computer game, Cooking Game, has also been created at the Tokyo Institute of Technology [3].

There is real potential in this area, and the use of olfaction in the multimedia experience is likely to grow and become more sophisticated. Whilst there are undoubtedly challenges that need to be overcome, it is precisely these that spur on research scientists worldwide in the quest to create ever more complex and exciting multimedia and multimodal applications for our use and enjoyment. The complete sensory experience is within our grasp.

(We don't usually cite references as it's an article, not an academic piece.)

REFERENCES

1. Brewster, S.A., McGookin, D.K. & Miller, C.A. 2006, "Olfoto: Designing a smell-based interaction", CHI 2006: Conference on Human Factors in Computing Systems, pp. 653.

2. Mochizuki, A., Amada, T., Sawa, S., Takeda, T., Motoyashiki, S., Kohyama, K., Imura, M. & Chihara, K. 2004, "Fragra: a visual-olfactory VR game", SIGGRAPH '04: ACM SIGGRAPH 2004 Sketches, ACM Press, New York, NY, USA, pp. 123.

3. Nakamoto, T., "Cooking game with scents", Tokyo Institute of Technology. Available: http://www.titech.ac.jp/news/e/news061129.html

You might also like

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (345)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Directions: Choose The Best Answer For Each Multiple Choice Question. Write The Best Answer On The BlankDocument2 pagesDirections: Choose The Best Answer For Each Multiple Choice Question. Write The Best Answer On The BlankRanulfo MayolNo ratings yet

- Organisation Restructuring 2023 MGMT TeamDocument9 pagesOrganisation Restructuring 2023 MGMT TeamArul AravindNo ratings yet

- Ivler vs. Republic, G.R. No. 172716Document23 pagesIvler vs. Republic, G.R. No. 172716Joey SalomonNo ratings yet

- Guide To U.S. Colleges For DummiesDocument12 pagesGuide To U.S. Colleges For DummiesArhaan SiddiqueeNo ratings yet

- Lesson Plan MP-2Document7 pagesLesson Plan MP-2VeereshGodiNo ratings yet

- Dan 440 Dace Art Lesson PlanDocument4 pagesDan 440 Dace Art Lesson Planapi-298381373No ratings yet

- Spelling Master 1Document1 pageSpelling Master 1CristinaNo ratings yet

- Screening: of Litsea Salicifolia (Dighloti) As A Mosquito RepellentDocument20 pagesScreening: of Litsea Salicifolia (Dighloti) As A Mosquito RepellentMarmish DebbarmaNo ratings yet

- Test Statistics Fact SheetDocument4 pagesTest Statistics Fact SheetIra CervoNo ratings yet

- D8.1M 2007PV PDFDocument5 pagesD8.1M 2007PV PDFkhadtarpNo ratings yet

- Birds (Aves) Are A Group Of: WingsDocument1 pageBirds (Aves) Are A Group Of: WingsGabriel Angelo AbrauNo ratings yet

- Pediatric Autonomic DisorderDocument15 pagesPediatric Autonomic DisorderaimanNo ratings yet

- Bagon-Taas Adventist Youth ConstitutionDocument11 pagesBagon-Taas Adventist Youth ConstitutionJoseph Joshua A. PaLaparNo ratings yet

- DigoxinDocument18 pagesDigoxinApril Mergelle LapuzNo ratings yet

- Upload A Document To Access Your Download: The Psychology Book, Big Ideas Simply Explained - Nigel Benson PDFDocument3 pagesUpload A Document To Access Your Download: The Psychology Book, Big Ideas Simply Explained - Nigel Benson PDFchondroc11No ratings yet

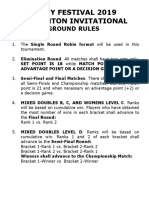

- Ground Rules 2019Document3 pagesGround Rules 2019Jeremiah Miko LepasanaNo ratings yet

- THE LAW OF - John Searl Solution PDFDocument50 pagesTHE LAW OF - John Searl Solution PDFerehov1100% (1)

- 1 - HandBook CBBR4106Document29 pages1 - HandBook CBBR4106mkkhusairiNo ratings yet

- Hygiene and HealthDocument2 pagesHygiene and HealthMoodaw SoeNo ratings yet

- Em - 1110 1 1005Document498 pagesEm - 1110 1 1005Sajid arNo ratings yet

- Grade 7 1ST Quarter ExamDocument3 pagesGrade 7 1ST Quarter ExamJay Haryl PesalbonNo ratings yet

- The Final Bible of Christian SatanismDocument309 pagesThe Final Bible of Christian SatanismLucifer White100% (1)

- Physics Syllabus PDFDocument17 pagesPhysics Syllabus PDFCharles Ghati100% (1)

- Mathematics Grade 5 Quarter 2: Answer KeyDocument4 pagesMathematics Grade 5 Quarter 2: Answer KeyApril Jean Cahoy100% (2)

- De La Salle Araneta University Grading SystemDocument2 pagesDe La Salle Araneta University Grading Systemnicolaus copernicus100% (2)

- 111Document1 page111Rakesh KumarNo ratings yet

- Preliminaries Qualitative PDFDocument9 pagesPreliminaries Qualitative PDFMae NamocNo ratings yet

- Capgras SyndromeDocument4 pagesCapgras Syndromeapi-459379591No ratings yet

- The Music of OhanaDocument31 pagesThe Music of OhanaSquaw100% (3)

- TugasFilsS32019.AnthoniSulthanHarahap.450326 (Pencegahan Misconduct)Document7 pagesTugasFilsS32019.AnthoniSulthanHarahap.450326 (Pencegahan Misconduct)Anthoni SulthanNo ratings yet