Web Crawlers - RTF Ja

Uploaded by

Warren Rivera

White Hat SEO Techniques

To improve a Web page's position in a search engine results page (SERP), you have to know how search engines work. Search engines categorize Web pages based on keywords: important terms that are relevant to the content of the page. For a page about skydiving, the term "skydiving" should be a keyword, but a term like "bungee jumping" wouldn't be relevant.

Most search engines use computer programs called spiders or crawlers to search the Web and analyze individual pages. These programs read Web pages and index them according to the terms that show up often and in important sections of the page. Most SEO experts recommend that you use important keywords throughout the Web page, particularly at the top, but it's possible to overuse keywords. If you use a keyword too many times, some search engine spiders will flag your page as spam. That's because of a black hat technique called keyword stuffing, but more on that later.

Keywords aren't the only important factor search engines take into account when generating SERPs. Just because a site uses keywords well doesn't mean it's one of the best resources on the Web. To determine the quality of a Web page, most automated search engines use link analysis. Link analysis means the search engine looks to see how many other Web pages link to the page in question. Not every link carries the same weight: if the pages linking to your site are themselves ranked high in Google's system, they boost your page's rank more than lesser-ranked pages do.

One way to gain links is to offer link exchanges with other sites that cover material related to your content. You don't want to trade links with just anyone, because many search engines look to see how relevant the links to and from your page are to the information within your page. Too many irrelevant links and the search engine will think you're trying to cheat the system.

META TAGS

Meta tags provide information about Web pages to computer programs but aren't visible to humans visiting the page.
You can create a meta tag that lists keywords for your site, but many search engines skip meta tags entirely because some people used them to exploit search engines in the past.

Black Hat SEO Techniques

Some people seem to believe that on the Web, the ends justify the means. There are lots of ways webmasters can try to trick search engines into listing their Web pages high in SERPs, though such a victory doesn't usually last very long.

One of these methods is called keyword stuffing, which skews search engine results by overusing keywords on the page. Usually webmasters will put repeated keywords toward the bottom of the page where most visitors won't see them. They can also use invisible text, that is, text with a color matching the page's background. Since search engine spiders read content through the page's HTML code, they detect text even if people can't see it. Some search engine spiders can identify and ignore text that matches the page's background color.

Webmasters might include irrelevant keywords to trick search engines. The webmasters look to see which search terms are the most popular and then use those words on their Web pages. While search engines might index the page under more keywords, people who follow the SERP links often leave the site once they realize it has little or nothing to do with their search terms.
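To make the idea of keyword stuffing concrete, the sketch below measures what share of a page's words a single keyword accounts for. The function names and the 10% cutoff are assumptions chosen for illustration; real search engines do not publish the thresholds or signals they use.

```python
import re
from collections import Counter


def keyword_density(text):
    """Return each word's share of all words in `text` (between 0.0 and 1.0)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return {}
    counts = Counter(words)
    return {word: n / len(words) for word, n in counts.items()}


def looks_stuffed(text, keyword, max_share=0.10):
    """Flag text in which `keyword` exceeds `max_share` of all words.

    max_share is an illustrative cutoff, not a value used by any real engine.
    """
    return keyword_density(text).get(keyword.lower(), 0.0) > max_share


natural = "Learn skydiving with certified instructors at our drop zone near the coast."
stuffed = "skydiving skydiving cheap skydiving best skydiving skydiving deals skydiving"

print(looks_stuffed(natural, "skydiving"))  # keyword is 1 of 12 words
print(looks_stuffed(stuffed, "skydiving"))  # keyword is 6 of 9 words
```

A share-of-words measure like this is only a rough signal; it says nothing about where on the page the keyword appears or whether the text is hidden.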

A webmaster might create Web pages that redirect visitors to another page. The webmaster creates a simple page that includes certain keywords to get listed on a SERP. The page also includes a program that redirects visitors to a different page that often has nothing to do with the original search term. With several pages that each focus on a current hot topic, the webmaster can get a lot of traffic to a particular Web site.

Page stuffing also cheats people out of a fair search engine experience. Webmasters first create a Web page that appears high up on a SERP. Then, the webmaster duplicates the page in the hopes that both pages will make the top results. The webmaster does this repeatedly with the intent to push other results off the top of the SERP and eliminate the competition. Most search engine spiders are able to compare pages against each other and determine if two different pages have the same content.

Selling and farming links are popular black hat SEO techniques. Because many search engines look at links to determine a Web page's relevancy, some webmasters buy links from other sites to boost a page's rank. A link farm is a collection of Web pages that all interlink with one another in order to increase each page's rank. Small link farms seem pretty harmless, but some link farms include hundreds of Web sites, each with a Web page dedicated just to listing links to every other site in the farm. When search engines detect a link selling scheme or link farm, they flag every site involved. Sometimes the search engine will simply demote every page's rank. In other cases, it might ban all the sites from its indexes.

Cheating the system might result in a temporary increase in visitors, but since people normally don't like to be fooled, the benefits are questionable at best. Who wants to return to a site that isn't what it claims to be?
Plus, most search engines penalize Web pages that use black hat techniques, which means the webmaster trades a short success for a long-term failure. In the next section, we'll look at some factors that make SEO more difficult. SEO Obstacles The biggest challenge in SEO approaches is finding a content balance that satisfies both the visitors to the Web page and search engine spiders. A site that's entertaining to users might not merit a blip on a search engine's radar. A site that's optimized for search engines may come across as dry and uninteresting to users. It's usually a good idea to first create an engaging experience for visitors, then tweak the page's design so that search engines can find it easily. One potential problem with the way search engine spiders crawl through sites deals with media files. Most people browsing Web pages don't want to look at page after page of text. They want pages that include photos, video or other forms of media to enhance the browsing experience. Unfortunately, most search engines skip over image and video content when indexing a site. For sites that use a lot of media files to convey information, this is a big problem. Some interactive Web pages don't have a lot of text, which gives search engine spiders very little to go on when building an index. Webmasters with sites that rely on media files might be tempted to use some of the black hat techniques to help even the playing field, but it's usually a bad idea to do that. For one thing, the major search engines are constantly upgrading spider programs to detect and ignore (or worse, penalize) sites that use black hat approaches. The best

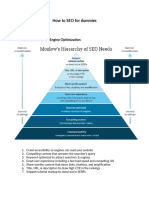

approach for these webmasters is to use keywords in important places like the title of the page and to get links from other pages that focus on relevant content. . Below diagram is a concise representation of Googles basis to create the order of the search results displayed on SERP

1. PageRank

PageRank is the relevancy or authority score that Google assigns to a website, computed from various factors by Google's own proprietary algorithm. PageRank works like the result of a voting system. When another website links to you, Google treats that link as a vote for your site. The more unique and relevant votes you receive (relevant meaning that the anchor text and the content of the linking site roughly match the topics of your blog or Web page), the higher the PageRank you can expect. But remember that not every vote is equal; it isn't like a democracy! Link quantity counts, but so does the weight of each link's source. We will go into the details of the PageRank concept in upcoming articles.

2. In Links

In links are the links you get from the owners of other websites. You can either contact other site owners to submit your site or request a link from them, or you can create content on your website so attractive, essential or useful that other websites are drawn to link back to you. As we have already seen, the number of in links is the number of votes your website has received. But do not build links so rapidly that Google takes it for spamming. Keep the process regular, as SEO yields the most when done slowly and steadily.
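The "weighted vote" idea can be illustrated with the simplified textbook form of PageRank, in which each page repeatedly shares its current score among the pages it links to. This is a sketch only: the tiny link graph, the page names, the damping factor of 0.85 and the iteration count are all assumptions for illustration, and it is not Google's production ranking algorithm.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank. `links` maps each page to the pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page starts each round with a small baseline score.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its vote evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no out-links spreads its vote over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical four-page web used only for this example.
web = {
    "hub": ["blog", "shop"],
    "blog": ["shop"],
    "shop": ["hub"],
    "fan": ["blog"],
}
ranks = pagerank(web)
for page in sorted(ranks, key=ranks.get, reverse=True):
    print(page, round(ranks[page], 3))
```

In this graph "hub" has a single in link while "blog" has two, yet "hub" ends up with the higher score because its one vote comes from the highly ranked "shop". That is the sense in which every vote is not equal.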

3. Frequency of Keywords

Keyword frequency means how many times the keywords are relevantly repeated on your page.

4. Location of Keywords

Google also gives importance to the text adjacent to the keywords, because repeating a keyword a large number of times is pointless if the surrounding text makes no sense.

5. TrustRank

Millions of websites are created and abandoned every week. Google gives more value to established Web pages or domains, that is, those that have existed for a fairly long time, as they have a good TrustRank with Google.

Keywords are really important for SEO, and you should always check the important or related keywords to attract more traffic to your site. Wordtracker is a free tool that suggests keywords based on your search term. Wordtracker also filters out some offensive keywords to give you the most suitable results. Before writing any post or article, it is always better to scan as many keywords as possible so that you can target them through the post. For example, I tried Wordtracker with the keyword "Chrome".

Methods

Getting indexed

The leading search engines, such as Google, Bing and Yahoo!, use crawlers to find pages for their algorithmic search results. Pages that are linked from other search engine indexed pages do not need to be submitted because they are found automatically.
Some search engines, notably Yahoo!, operate a paid submission service that guarantees crawling for either a set fee or a cost per click.[30] Such programs usually guarantee inclusion in the database, but do not guarantee a specific ranking within the search results.[31] Two major directories, the Yahoo Directory and the Open Directory Project, both require manual submission and human editorial review.[32] Google offers Google Webmaster Tools, for which an XML Sitemap feed can be created and submitted for free to ensure that all pages are found, especially pages that are not discoverable by automatically following links.[33]

Search engine crawlers may look at a number of different factors when crawling a site. Not every page is indexed by the search engines. The distance of pages from the root directory of a site may also be a factor in whether or not pages get crawled.[34]
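As an illustration of what an XML Sitemap feed looks like, the sketch below builds a minimal one with Python's standard library. The namespace is the one defined by the sitemaps.org protocol; the function name and the example.com URLs are placeholders, and submitting the file to a search engine is outside the scope of the sketch.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap listing each URL in a <loc> element."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# Placeholder URLs, including one page that no other page links to.
sitemap_xml = build_sitemap([
    "https://www.example.com/",
    "https://www.example.com/orphan-page.html",
])
print(sitemap_xml)
```

Listing a page here is how a site can point crawlers at content that cannot be reached by following links alone.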

Preventing crawling

To avoid undesirable content in the search indexes, webmasters can instruct spiders not to crawl certain files or directories through the standard robots.txt file in the root directory of the domain. Additionally, a page can be explicitly excluded from a search engine's database by using a meta tag specific to robots. When a search engine visits a site, the robots.txt located in the root directory is the first file crawled. The robots.txt file is then parsed, and it instructs the robot as to which pages are not to be crawled. As a search engine crawler may keep a cached copy of this file, it may on occasion crawl pages a webmaster does not wish crawled.

Pages typically prevented from being crawled include login-specific pages such as shopping carts and user-specific content such as search results from internal searches. In March 2007, Google warned webmasters that they should prevent indexing of internal search results because those pages are considered search spam.[35]
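The way a well-behaved crawler consults robots.txt can be sketched with Python's standard `urllib.robotparser` module. The rules, the example.com URLs and the "ExampleBot" user agent below are made up for illustration; the rules are parsed from a string so the sketch runs without network access.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks the shopping cart and internal search.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(url, user_agent="ExampleBot"):
    """Return True if the parsed rules let `user_agent` fetch `url`."""
    return parser.can_fetch(user_agent, url)


print(allowed("https://www.example.com/products/1"))      # not covered by any rule
print(allowed("https://www.example.com/cart/checkout"))   # under /cart/
print(allowed("https://www.example.com/search?q=shoes"))  # internal search results
```

robots.txt only asks crawlers not to fetch a page. To keep an individual page out of the index, the robots meta tag mentioned above is placed in the page itself, for example `<meta name="robots" content="noindex">`.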

A Web crawler is an Internet bot that systematically browses the World Wide Web, typically for the purpose of Web indexing. Web search engines and some other sites use Web crawling or spidering software to update their own Web content or their indexes of other sites' Web content. Web crawlers can copy all the pages they visit for later processing by a search engine, which indexes the downloaded pages so that users can search them much more quickly. Crawlers can validate hyperlinks and HTML code. They can also be used for web scraping.

A Web crawler starts with a list of URLs to visit, called the seeds. As the crawler visits these URLs, it identifies all the hyperlinks in the page and adds them to the list of URLs to visit, called the crawl frontier. URLs from the frontier are recursively visited according to a set of policies.

The large volume of the Web implies that the crawler can only download a limited number of Web pages within a given time, so it needs to prioritize its downloads. The high rate of change implies that, by the time the crawler reaches them, pages might have already been updated or even deleted.

The number of possible crawlable URLs generated by server-side software has also made it difficult for Web crawlers to avoid retrieving duplicate content. Endless combinations of HTTP GET (URL-based) parameters exist, of which only a small selection will actually return unique content. For example, a simple online photo gallery may offer several options to users, as specified through HTTP GET parameters in the URL. If there exist four ways to sort images, three choices of thumbnail size, two file formats, and an option to disable user-provided content, then the same set of content can be accessed with 4 × 3 × 2 × 2 = 48 different URLs, all of which may be linked on the site. This mathematical combination creates a problem for crawlers, as they must sort through endless combinations of relatively minor scripted changes in order to retrieve unique content.

Selection policy

Because a crawler can download only a limited number of pages, it should pick the most relevant ones, and this requires a metric of importance for prioritizing Web pages. The importance of a page is a function of its intrinsic quality, its popularity in terms of links or visits, and even of its URL (the latter is the case for vertical search engines restricted to a single top-level domain, or search engines restricted to a fixed Web site). Designing a good selection policy has an added difficulty: it must work with partial information, as the complete set of Web pages is not known during crawling.

The importance of a page for a crawler can also be expressed as a function of the similarity of a page to a given query. Web crawlers that attempt to download pages that are similar to each other are called focused crawlers or topical crawlers.

Restricting followed links

A crawler may only want to seek out HTML pages and avoid all other MIME types. In order to request only HTML resources, a crawler may make an HTTP HEAD request to determine a Web resource's MIME type before requesting the entire resource with a GET request. To avoid making numerous HEAD requests, a crawler may examine the URL and only request a resource if the URL ends with certain characters such as .html, .htm, .asp, .aspx, .php, .jsp, .jspx or a slash. This strategy may cause numerous HTML Web resources to be unintentionally skipped.

Some crawlers may also avoid requesting any resources that have a "?" in them (that is, resources that are dynamically produced) in order to avoid spider traps that may cause the crawler to download an infinite number of URLs from a Web site. This strategy is unreliable if the site uses a rewrite engine to simplify its URLs.

Re-visit policy

The Web has a very dynamic nature, and crawling a fraction of the Web can take weeks or months. By the time a Web crawler has finished its crawl, many events could have happened, including creations, updates and deletions.
From the search engine's point of view, there is a cost associated with not detecting an event, and thus having an outdated copy of a resource. The most-used cost functions are freshness and age.[25]

Freshness: This is a binary measure that indicates whether the local copy is accurate or not. The freshness of a page p in the repository at time t is defined as F_p(t) = 1 if p is equal to the local copy at time t, and F_p(t) = 0 otherwise.

Two simple re-visiting policies can be compared:

Uniform policy: This involves re-visiting all pages in the collection with the same frequency, regardless of their rates of change.

Proportional policy: This involves re-visiting more often the pages that change more frequently. The visiting frequency is directly proportional to the (estimated) change frequency.

(In both cases, the repeated crawling order of pages can be done either in a random or a fixed order.)

The freshness of rapidly changing pages lasts for a shorter period than that of less frequently changing pages. In other words, a proportional policy allocates more resources to crawling frequently updating pages, but experiences less overall freshness time from them. To improve freshness, the crawler should penalize the elements that change too often.

The optimal re-visiting policy is neither the uniform policy nor the proportional policy. The optimal method for keeping average freshness high includes ignoring the pages that change too often, and the optimal method for keeping average age low is to use access frequencies that monotonically (and sub-linearly) increase with the rate of change of each page. In both cases, the optimal policy is closer to the uniform policy than to the proportional policy: as Coffman et al. note, "in order to minimize the expected obsolescence time, the accesses to any particular page should be kept as evenly spaced as possible".[26] Explicit formulas for the re-visit policy are not attainable in general, but they are obtained numerically, as they depend on the distribution of page changes.

Politeness policy

Crawlers can retrieve data much quicker and in greater depth than human searchers, so they can have a crippling impact on the performance of a site. Needless to say, if a single crawler is performing multiple requests per second and/or downloading large files, a server would have a hard time keeping up with requests from multiple crawlers.
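Several of the ideas described so far (seeds, the crawl frontier, the URL-based filter on followed links, and a per-host politeness delay) can be combined in one small sketch. Everything specific here is an assumption for illustration: the in-memory FAKE_WEB dictionary stands in for real HTTP fetches, the one-second delay is arbitrary, and time is simulated with a counter so the example runs instantly. A production crawler would also honor robots.txt and re-visit pages.

```python
from collections import deque
from urllib.parse import urlparse

# Hypothetical in-memory "web": each URL maps to the links found on that page.
FAKE_WEB = {
    "http://a.example/": [
        "http://a.example/about.html",
        "http://b.example/",
        "http://a.example/logo.png",
    ],
    "http://a.example/about.html": [
        "http://a.example/",
        "http://a.example/gallery.php?sort=1",
    ],
    "http://b.example/": ["http://a.example/about.html"],
}

HTML_SUFFIXES = (".html", ".htm", ".asp", ".aspx", ".php", ".jsp", ".jspx", "/")


def looks_like_html(url):
    """URL-only filter: skip query strings, keep paths that look like HTML."""
    if "?" in url:
        return False
    return urlparse(url).path.endswith(HTML_SUFFIXES)


def crawl(seeds, fetch_links, min_delay=1.0, max_pages=100):
    """Breadth-first crawl; returns (simulated_time, url) in visit order."""
    frontier = deque(seeds)   # the crawl frontier
    seen = set(seeds)         # avoids queueing the same URL twice
    next_allowed = {}         # host -> earliest time of the next request
    clock = 0.0               # simulated seconds
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        host = urlparse(url).netloc
        # Politeness: wait until this host may be contacted again.
        clock = max(clock, next_allowed.get(host, 0.0))
        next_allowed[host] = clock + min_delay
        visited.append((clock, url))
        for link in fetch_links(url):
            if link not in seen and looks_like_html(link):
                seen.add(link)
                frontier.append(link)
    return visited


order = crawl(["http://a.example/"], lambda url: FAKE_WEB.get(url, []))
for when, url in order:
    print(when, url)
```

The image link and the URL containing "?" are never queued, and the second request to a.example is pushed back by the delay while the first request to b.example is not.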

Parallelisation policy

A parallel crawler is a crawler that runs multiple processes in parallel. The goal is to maximize the download rate while minimizing the overhead from parallelization and to avoid repeated downloads of the same page. To avoid downloading the same page more than once, the crawling system requires a policy for assigning the new URLs discovered during the crawling process, as the same URL can be found by two different crawling processes.

Architectures

Figure: High-level architecture of a standard Web crawler

A crawler must not only have a good crawling strategy, as noted in the previous sections, but it should also have a highly optimized architecture.

Crawler identification

Web crawlers typically identify themselves to a Web server by using the User-agent field of an HTTP request. Web site administrators typically examine their Web servers' logs and use the user agent field to determine which crawlers have visited the Web server and how often. The user agent field may include a URL where the Web site administrator may find out more information about the crawler. Examining Web server logs is a tedious task, so some administrators use tools such as CrawlTrack[39] or SEO Crawlytics[40] to identify, track and verify Web crawlers. Spambots and other malicious Web crawlers are unlikely to place identifying information in the user agent field, or they may mask their identity as a browser or other well-known crawler.

It is important for Web crawlers to identify themselves so that Web site administrators can contact the owner if needed. In some cases, crawlers may be accidentally trapped in a crawler trap or they may be overloading a Web server with requests, and the owner needs to stop the crawler. Identification is also useful for administrators who are interested in knowing when they may expect their Web pages to be indexed by a particular search engine.

Web indexing

Web indexing (or Internet indexing) refers to various methods for indexing the contents of a website or of the Internet as a whole. Individual websites or intranets may use a back-of-the-book index, while search engines usually use keywords and metadata to provide a more useful vocabulary for Internet or onsite searching. With the increase in the number of periodicals that have articles online, web indexing is also becoming important for periodical websites.

Web indexes may be called "web site A-Z indexes". The implication of "A-Z" is that there is an alphabetical browse view or interface. This interface differs from a browse through layers of hierarchical categories (also known as a taxonomy), which are not necessarily alphabetical but are also found on some web sites. Although an A-Z index could be used to index multiple sites, rather than the multiple pages of a single site, this is unusual.
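A web site A-Z index of the kind described above can be sketched as a mapping from index terms to the pages that mention them, grouped by initial letter. The page paths and terms below are invented for illustration, and the terms are assumed to have been chosen by hand, as in a back-of-the-book index.

```python
from collections import defaultdict


def build_az_index(pages):
    """Group index terms by first letter; `pages` maps a URL to its terms."""
    by_term = defaultdict(set)
    for url, terms in pages.items():
        for term in terms:
            by_term[term].add(url)
    az = defaultdict(list)
    # Visit terms alphabetically so each letter's entries come out sorted.
    for term in sorted(by_term, key=str.lower):
        az[term[0].upper()].append((term, sorted(by_term[term])))
    return dict(az)


# Hypothetical single-site example.
site = {
    "/gear.html": ["parachutes", "altimeters"],
    "/training.html": ["instructors", "parachutes"],
    "/about.html": ["instructors"],
}
index = build_az_index(site)
for letter, entries in index.items():
    print(letter, entries)
```

Unlike a taxonomy, this structure has no hierarchy: a visitor simply jumps to a letter and scans the terms under it.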
Web scraping

Web scraping (web harvesting or web data extraction) is a computer software technique of extracting information from websites. Usually, such software programs simulate human exploration of the World Wide Web by either implementing low-level Hypertext Transfer Protocol (HTTP), or embedding a fully-fledged web browser, such as Internet Explorer or Mozilla Firefox.

Web scraping is closely related to web indexing, which indexes information on the web using a bot or web crawler and is a universal technique adopted by most search engines. In contrast, web scraping focuses more on the transformation of unstructured data on the web, typically in HTML format, into structured data that can be stored and analyzed in a central local database or spreadsheet. Web scraping is also related to web automation, which simulates human browsing using computer software. Uses of web scraping include online price comparison, weather data monitoring, website change detection, research, web mashup and web data integration.
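The transformation from HTML into structured data can be sketched with Python's standard `html.parser` module. The HTML fragment, its class names and the PriceScraper class are all invented for this example; a real scraper would first download the page and would have to cope with much messier markup.

```python
from html.parser import HTMLParser

# Hypothetical product listing, as it might appear in a downloaded page.
HTML = """
<ul>
  <li class="item"><span class="name">Altimeter</span> <span class="price">149.00</span></li>
  <li class="item"><span class="name">Helmet</span> <span class="price">89.50</span></li>
</ul>
"""


class PriceScraper(HTMLParser):
    """Collect one {"name": ..., "price": ...} row per <li class="item">."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._field = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "item":
            self.rows.append({})
        elif tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field and self.rows:
            value = data.strip()
            if self._field == "price":
                value = float(value)
            self.rows[-1][self._field] = value

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


scraper = PriceScraper()
scraper.feed(HTML)
rows = scraper.rows
print(rows)
```

Rows in this form can be written straight to a spreadsheet or database table, which is the kind of use (such as online price comparison) that the text mentions.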
