Entropy 2010, 12, 80-88; doi:10.3390/e12010080

entropy

ISSN 1099-4300

www.mdpi.com/journal/entropy

Article

A Dynamic Model of Information and Entropy

Michael C. Parker * and Stuart D. Walker

School of Computer Sciences and Electronic Engineering, University of Essex, Wivenhoe Park,

Colchester, CO3 4HG, UK; E-Mail: stuwal@essex.ac.uk (S.D.W.)

* Author to whom correspondence should be addressed; E-Mail: mcpark@essex.ac.uk.

Received: 29 October 2009 / Accepted: 14 December 2009 / Published: 7 January 2010

Abstract: We discuss the possibility of a relativistic relationship between information and

entropy, closely analogous to the classical Maxwell electro-magnetic wave equations.

Inherent to the analysis is the description of information as residing in points of

non-analyticity; yet ultimately also exhibiting a distributed characteristic: additionally

analogous, therefore, to the wave-particle duality of light. At cosmological scales our

vector differential equations predict conservation of information in black holes, whereas

regular- and Z-DNA molecules correspond to helical solutions at microscopic levels. We

further propose that regular- and Z-DNA are equivalent to the alternative words chosen

from an alphabet to maintain the equilibrium of an information transmission system.

Keywords: information theory; entropy; thermodynamics

PACS Codes: 89.70.+c, 65.40.Gr, 42.25.Bs, 03.30.+p.

1. Introduction

The close relationship between information and entropy is well recognised [1,2], e.g., Brillouin

considered a negative change in entropy to be equivalent to a change in information (negentropy [3]),

and Landauer considered the erasure of information I to be associated with an increase in entropy S,

via the relationship −ΔI = ΔS [1,4]. In previous work, we qualitatively indicated the dynamic

relationship between information and entropy, stating that the movement of information is

accompanied by the increase of entropy in time [5,6], and suggesting that information propagates at the

speed of light in vacuum c [5–9]. Opinions on the physical nature of information have tended to be

contradictory. One view is that information is inherent to points of non-analyticity


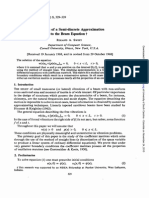

Figure 1. Information I (integration over imaginary ct-axis) and entropy S (integration over real x-axis) due to a point of non-analyticity (pole) at the space-time position $z_0$. [Figure annotations: axes $x$ and $ict$; pole $z_0$ at coordinates $(x_0, ct_0)$; $I = k\int \rho \log_2 \rho \, c\,dt = k \log_2 x_0$; $S = k\int \rho \log_2 \rho \, dx = k \log_2 ct_0$.]

(discontinuities) [5,7,9] that do not allow prediction, whereas other researchers consider the

information to be more distributed (delocalised) in nature [10–12]. Such considerations are akin to the

paradoxes arising from a wave-particle duality, with the question of which of the two complements

best characterises information. In the following analysis, by initially adopting the localised, point of

non-analyticity definition of information, we ultimately find that information and entropy may indeed

also exhibit wavelike properties, and may be described by a pair of coupled wave equations, analogous

to the Maxwell equations for electromagnetic (EM) radiation, and a distant echo of the simple

difference relationship above.

2. Analysis

We start our analysis by consideration of a meromorphic [13] function $\psi(z)$ in a reduced 1+1 (i.e., one space dimension and one time dimension) complex space-time $z = x + ict$, with a simple, isolated pole at $z_0 = x_0 + ict_0$, as indicated in Figure 1:

$$\psi(z) = \sqrt{\frac{ct_0}{\pi}}\,\frac{1}{z - z_0} \tag{1}$$

The point of non-analyticity $z_0$ travels at the speed of light in vacuum c [5–9]. We note that $\psi(z)$ acts as an inverse-square law function, e.g., as proportional to the field around an isolated charge, or the gravitational field around a point mass. It is also square-integrable, such that $\int_{-\infty}^{\infty} \rho|_{t=0}\,dx = 1$, and $ic\int_{-\infty}^{\infty} \rho|_{x=0}\,dt = 1$, where $\rho = |\psi(z)|^2$, and obeys the Paley-Wiener criterion for causality [14]. We now calculate the differential information [5,15] (entropy) of the function $\psi(z)$. By convention, in this paper, we define the logarithmic integration along the spatial x-axis to yield the entropy S of the function; whereas the same integration along the imaginary t-axis yields the information I of the function. This can be qualitatively understood to arise from the entropy of a system being related to its spatial permutations (e.g., the spatial fluxions of a volume of gas), whereas the information of a system is related to its temporal distribution (e.g., the arrival of a data train of light pulses down an optical fibre). Considering the differential entropy first, substituting Equation (1) with t = 0 and calculating the sum of the residues of the appropriate closed contour integral in the z-plane, S is given by:

$$S = k\int_{-\infty}^{\infty} \rho \log_2 \rho \, dx = k \log_2 ct_0 \tag{2}$$

where k is the Boltzmann constant. We have defined S using a positive sign in front of the integral, as opposed to the conventional negative sign [15], so that both the entropy and information of a space-time function are calculated in a unified fashion. In addition, this has the effect of aligning equation (2) with the 2nd Law of Thermodynamics, since S increases monotonically with increasing time $t_0$.
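As an illustrative aside, the spatial normalisation quoted for $\rho = |\psi(z)|^2$ on the t = 0 axis can be checked numerically. The sketch below assumes the prefactor $\sqrt{ct_0/\pi}$ of Equation (1), so that on the x-axis $\rho$ is a Lorentzian of half-width $ct_0$ centred on $x_0$. The function names, the finite integration window and the step count are hypothetical choices made for this check, not part of the original analysis.

```python
import math


def rho_on_x_axis(x, x0, ct0):
    """|psi|^2 on the t = 0 axis for psi = sqrt(ct0/pi) / (z - z0), z0 = x0 + i*ct0."""
    return (ct0 / math.pi) / ((x - x0) ** 2 + ct0 ** 2)


def spatial_norm(x0, ct0, half_width_factor=2000.0, steps=200_000):
    """Trapezoidal estimate of the integral of rho over x on a finite window.

    The window spans x0 +/- half_width_factor * ct0 (an assumed cut-off),
    so the result falls slightly short of 1 by the truncated tail mass.
    """
    half_width = half_width_factor * ct0
    a, b = x0 - half_width, x0 + half_width
    h = (b - a) / steps
    total = 0.5 * (rho_on_x_axis(a, x0, ct0) + rho_on_x_axis(b, x0, ct0))
    for n in range(1, steps):
        total += rho_on_x_axis(a + n * h, x0, ct0)
    return total * h


if __name__ == "__main__":
    # Illustrative pole position; any ct0 > 0 should give a value close to 1.
    print(spatial_norm(x0=1.0, ct0=0.5))
```

The shortfall from unity is set by the truncated tails, roughly $2/(\pi \times \text{half\_width\_factor})$, and shrinks as the window is widened.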

Performing the appropriate closed contour integral in the z-plane with x = 0 we find that the differential information is given by:

$$I = k\int_{-\infty}^{\infty} \rho \log_2 \rho \, c\,dt = k \log_2 x_0 \tag{3}$$

Note that in (2) and (3) some constant background values of integration have been ignored, since they disappear in the following differential analyses. We see that the entropy and information are given by surprisingly simple expressions. The information is the logarithm of the space-time distance $x_0$ of the point of non-analyticity from the temporal t-axis; whilst the entropy content is simply the logarithm of the distance (i.e., time $ct_0$) of the pole from the spatial x-axis. The overall info-entropy $\Sigma$ of the function $\psi(z)$ is given by the summation of the two orthogonal logarithmic integrals, $\Sigma = I + iS$, where the entropy S is in quadrature to the information I, since I depends on the real axis quantity $x_0$, whereas S depends on the imaginary axis quantity $ct_0$. Since the choice of reference axes is arbitrary, we could have chosen an alternative set of co-ordinate axes to calculate the information and entropy. For example, consider a co-ordinate set of axes $x' - ct'$, rotated an angle $\theta$ about the origin with respect to the original $x - ct$ axes. In this case, the unitary transformation describing the position of the pole $z_0'$ in the new co-ordinate framework is:

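$$\begin{bmatrix} ct_0' \\ x_0' \end{bmatrix} = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} \begin{bmatrix} ct_0 \\ x_0 \end{bmatrix} \tag{4}$$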

Using (2) and (3), the resulting values of the information and entropy for the new frame of reference

are given by:

$$I' = k \log_2 x_0' \tag{5a}$$

$$S' = k \log_2 ct_0' \tag{5b}$$

with overall info-entropy $\Sigma'$ of the function again given by the summation of the two quadrature logarithmic integrals, $\Sigma' = I' + iS'$. We next perform a dynamic calculus on the equations (5), with respect to the original $x - ct$ axes, using (4) to calculate:

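$$\frac{\partial I'}{\partial x} = \frac{k}{x_0'}\frac{\partial x_0'}{\partial x} = \frac{k}{x_0'}\frac{\partial x_0'}{\partial x_0} = \frac{k}{x_0'}\cos\theta \tag{6a}$$

$$\frac{1}{c}\frac{\partial I'}{\partial t} = \frac{k}{x_0'}\frac{1}{c}\frac{\partial x_0'}{\partial t} = \frac{k}{x_0'}\frac{1}{c}\frac{\partial x_0'}{\partial t_0} = \frac{k}{x_0'}\sin\theta \tag{6b}$$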

and:

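$$\frac{1}{c}\frac{\partial S'}{\partial t} = \frac{k}{t_0'}\frac{1}{c}\frac{\partial t_0'}{\partial t} = \frac{k}{ct_0'}\frac{\partial t_0'}{\partial t_0} = \frac{k}{ct_0'}\cos\theta \tag{7a}$$

$$\frac{\partial S'}{\partial x} = \frac{k}{ct_0'}\frac{\partial (ct_0')}{\partial x} = \frac{k}{ct_0'}\frac{\partial (ct_0')}{\partial x_0} = -\frac{k}{ct_0'}\sin\theta \tag{7b}$$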
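The closed forms in Equations (6) and (7), namely $k\cos\theta/x_0'$, $k\sin\theta/x_0'$, $k\cos\theta/ct_0'$ and $-k\sin\theta/ct_0'$, can be compared against finite-difference derivatives of Equations (5) under the rotation (4). The sketch below is only a numerical sanity check: it sets k = 1, uses the natural logarithm (i.e., drops the common factor log 2), and treats differentiation with respect to $ct_0$ as standing in for $(1/c)\,\partial/\partial t$. The function names and the sample values of $ct_0$, $x_0$ and $\theta$ are hypothetical.

```python
import math

K = 1.0  # Boltzmann constant, set to 1 for illustration only


def rotate(ct0, x0, theta):
    """Equation (4): pole coordinates (ct0', x0') in the rotated frame."""
    ct0p = math.cos(theta) * ct0 - math.sin(theta) * x0
    x0p = math.sin(theta) * ct0 + math.cos(theta) * x0
    return ct0p, x0p


def info(ct0, x0, theta):
    """Equation (5a) with natural log instead of log2."""
    return K * math.log(rotate(ct0, x0, theta)[1])


def entropy(ct0, x0, theta):
    """Equation (5b) with natural log instead of log2."""
    return K * math.log(rotate(ct0, x0, theta)[0])


def central_diff(f, value, h=1e-6):
    """Second-order central finite difference of f at value."""
    return (f(value + h) - f(value - h)) / (2.0 * h)


def compare(ct0=2.0, x0=1.5, theta=0.3):
    """Return (numeric, closed-form) derivative values keyed by equation label."""
    ct0p, x0p = rotate(ct0, x0, theta)
    numeric = {
        "6a": central_diff(lambda x: info(ct0, x, theta), x0),
        "6b": central_diff(lambda ct: info(ct, x0, theta), ct0),
        "7a": central_diff(lambda ct: entropy(ct, x0, theta), ct0),
        "7b": central_diff(lambda x: entropy(ct0, x, theta), x0),
    }
    closed = {
        "6a": K * math.cos(theta) / x0p,
        "6b": K * math.sin(theta) / x0p,
        "7a": K * math.cos(theta) / ct0p,
        "7b": -K * math.sin(theta) / ct0p,
    }
    return numeric, closed


if __name__ == "__main__":
    numeric, closed = compare()
    for label in sorted(numeric):
        print(label, numeric[label], closed[label])
```

Setting $x_0'$ equal to $ct_0'$ in the closed forms makes (6a) equal to (7a) and (6b) equal to minus (7b).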

where we have ignored the common factor log 2, and we have assumed the equality of the calculus operators $\partial/\partial x = \partial/\partial x_0$ and $\partial/\partial t = \partial/\partial t_0$, since the trajectory of the pole in the z-plane is a straight line with $dx = dx_0$, and $dt = dt_0$. Since the point of non-analyticity is moving at the speed of light in vacuum c [5–9], such that $x_0 = ct_0$, we must also have $x_0' = ct_0'$, as c is the same constant in any frame of reference [16]. We can now see that equations (6) and (7) can be equated to yield a pair of Cauchy-Riemann equations [17]:

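$$\frac{\partial I}{\partial x} = \frac{1}{c}\frac{\partial S}{\partial t} \tag{8a}$$

$$\frac{\partial S}{\partial x} = -\frac{1}{c}\frac{\partial I}{\partial t} \tag{8b}$$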

where we have dropped the primes, since equations (8) are true for all frames of reference. Hence, the

info-entropy function is analytic (i.e., holographic in nature [13]), and the Cauchy-Riemann

equations (8) indicate that information and entropy (as we have defined them) may propagate as waves

travelling at the speed c. In the Appendix we extend the analysis from 1+1 complex space-time to the

full 3+1 (three-space and one-time dimensions) case, so that it can be straightforwardly shown that

Equations (8) generalise to:

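$$\nabla \times \mathbf{I} = \frac{1}{c}\frac{\partial \mathbf{S}}{\partial t} \tag{9a}$$

$$\nabla \times \mathbf{S} = -\frac{1}{c}\frac{\partial \mathbf{I}}{\partial t} \tag{9b}$$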

where I and S are in 3D vector form. Further basic calculus manipulations reveal that the scalar

gradient (divergence) of our information and entropy fields are both equal to zero:

$$\nabla \cdot \mathbf{I} = 0 \tag{10a}$$


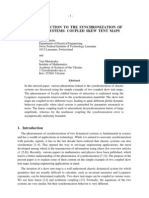

Figure 2. (a) Right-handed polarisation helical info-entropy wave, propagating in positive $x_i$-direction given by $\mathbf{I} \times \mathbf{S}$. (b) Left-handed polarisation helical info-entropy wave travelling in same direction. [Figure annotations: axes $x_i$, $x_j$, $x_k$. Panel (a), right-handed helix: $\partial S/\partial t > 0$. Panel (b), left-handed helix: $\partial S/\partial t < 0$. In each panel $\mathbf{I}$ and $\mathbf{S}$ are given as vectors with zero $x_i$-component and sinusoidal $x_j$- and $x_k$-components in $x_i$ and $t$.]

$$\nabla \cdot \mathbf{S} = 0 \tag{10b}$$

Together, Equations (9) and (10) form a set of equations analogous to the Maxwell equations [16]

(with no sources or isolated charges, and in an isotropic medium). Equations (9) can be combined to

yield wave equations for the information and entropy fields:

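$$\nabla^2 \mathbf{I} - \frac{1}{c^2}\frac{\partial^2 \mathbf{I}}{\partial t^2} = 0 \tag{11a}$$

$$\nabla^2 \mathbf{S} - \frac{1}{c^2}\frac{\partial^2 \mathbf{S}}{\partial t^2} = 0 \tag{11b}$$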
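For completeness, the step from Equations (9) and (10) to the wave equations can be spelled out. What follows is the standard curl-of-curl manipulation used for the source-free Maxwell equations, written here for the I-field; it is a sketch of the intermediate algebra rather than a reproduction of the authors' own working.

```latex
\begin{align*}
\nabla \times (\nabla \times \mathbf{I})
  &= \frac{1}{c}\frac{\partial}{\partial t}\left(\nabla \times \mathbf{S}\right)
  && \text{curl of (9a)} \\
  &= -\frac{1}{c^{2}}\frac{\partial^{2}\mathbf{I}}{\partial t^{2}}
  && \text{using (9b)} \\
\nabla \times (\nabla \times \mathbf{I})
  &= \nabla(\nabla \cdot \mathbf{I}) - \nabla^{2}\mathbf{I}
   = -\nabla^{2}\mathbf{I}
  && \text{vector identity and (10a)} \\
\Rightarrow\quad
\nabla^{2}\mathbf{I} - \frac{1}{c^{2}}\frac{\partial^{2}\mathbf{I}}{\partial t^{2}} &= 0
  && \text{(11a)}
\end{align*}
```

The same steps, starting from the curl of (9b) and using (9a) and (10b), give (11b) for the S-field.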

3. Discussion

As defined previously, the information and entropy fields are mutually orthogonal, since $\mathbf{I} \cdot \mathbf{S} = 0$. Analogous to the Poynting vector describing energy flow of an EM wave, the direction of propagation of the wave is given by $\mathbf{I} \times \mathbf{S}$, e.g., see Figure 2. The dynamic relationship between I and S implies that information flow tends to be accompanied by entropy flux [5,6], such that the movement of data is dissipative in order to satisfy the 2nd Law of Thermodynamics. Analytic functions such as I and S only have points of inflection, so that, again, the 2nd Law with respect to the S-field is a natural consequence of its monotonicity. However, in analogy to an EM-wave, Equations (9) and (10) in combination imply that the sum of information and entropy $|\mathbf{I}|^2 + |\mathbf{S}|^2$ may be a conserved quantity. Hence, the 2nd Law of Thermodynamics should perhaps be viewed as a conservation law for info-entropy, similar to the 1st Law for energy. This has implications for the treatment of information falling into a black hole.
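One way to make the conservation statement concrete is the analogue of Poynting's theorem. The sketch below assumes that Equations (9) hold exactly; the density $u$ and flux $\mathbf{J}$ are labels introduced here for illustration and are not quantities defined in the original analysis.

```latex
\begin{align*}
u &\equiv \tfrac{1}{2}\left(|\mathbf{I}|^{2} + |\mathbf{S}|^{2}\right) \\
\frac{1}{c}\frac{\partial u}{\partial t}
  &= \mathbf{I}\cdot\frac{1}{c}\frac{\partial \mathbf{I}}{\partial t}
   + \mathbf{S}\cdot\frac{1}{c}\frac{\partial \mathbf{S}}{\partial t} \\
  &= -\,\mathbf{I}\cdot(\nabla\times\mathbf{S}) + \mathbf{S}\cdot(\nabla\times\mathbf{I})
  && \text{using (9b) and (9a)} \\
  &= \nabla\cdot(\mathbf{I}\times\mathbf{S})
  && \text{since } \nabla\cdot(\mathbf{A}\times\mathbf{B})
     = \mathbf{B}\cdot(\nabla\times\mathbf{A}) - \mathbf{A}\cdot(\nabla\times\mathbf{B}) \\
\Rightarrow\quad
\frac{\partial u}{\partial t} + \nabla\cdot\mathbf{J} &= 0,
\qquad \mathbf{J} \equiv -c\,(\mathbf{I}\times\mathbf{S})
\end{align*}
```

Integrated over all space, with fields that vanish at infinity, the flux term drops out and the integral of $u$ is constant in time. The overall sign of $\mathbf{J}$ follows from the sign convention of Equations (9); the point of the sketch is only that the flux lies along the $\mathbf{I} \times \mathbf{S}$ axis.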

The reason that the differential Equations (6) and (7) obey the laws of relativity is that they are simple spatial reciprocal quantities, with their ratios equivalent to velocities, such that the relativistic laws are applicable. This reciprocal space aspect implies that calculation of Fourier-space info-entropy quantities results in quantities proportional to real space, which are therefore also relativistically-invariant and holographic.

Equation (9a) shows that a right-handed helical spatial information distribution may be associated

with an increase of entropy with time (Figure 2a); in contrast to a left-handed chirality where entropy

may decrease with time (Figure 2b). A link is therefore possibly revealed between the high information

density of right-handed DNA [18] and the overall increase of entropy with time in our universe [19]. A

molecule such as DNA is an efficient system for information storage. However, our dynamic model

suggests that information would radiate away, unless a localisation mechanism were present, e.g., the

existence of a standing wave. Such waves require forward and backward wave propagation of the same

polarisation. We see that the complementary anti-parallel (C2 spacegroup) symmetry of DNA's double

helix means that information waves of the same polarisation (chirality) would travel in both directions

along the helix axis to form a standing wave, thus localising the information. Small left-handed

molecules (e.g., amino acids, enzymes and proteins, as well as short sections of Z-DNA [20]) are

known to exist alongside DNA within the cell nucleus. Their chirality may be thus associated with a

decrease of entropy with time. In conjunction with right-handed molecules they can be understood to

possibly regulate the thermodynamic function of the DNA molecule. As is well known, highly

dynamic systems require negative feedback to direct and control their output, without which they

become unstable. We can draw parallels to the high entropic potential of DNA, with left-handed

molecules (as well as right-handed molecules) potentially acting as damping agents to control its

thermodynamic action.
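The helical solutions discussed in this section can be checked numerically against Equations (9). The sketch below uses one circularly polarised plane wave travelling along the first axis; the amplitudes, the phase convention, the wavenumber and the unit choice c = 1 are assumptions of this example and are not read off Figure 2. It evaluates the residuals of (9a) and (9b) by finite differences, together with the dot product of the two fields.

```python
import math

C = 1.0          # wave speed in illustrative units
KAPPA = 2.0      # wavenumber, an arbitrary illustrative value
OMEGA = C * KAPPA


def i_field(x, t):
    """Information field: zero along the propagation axis, helical transverse part."""
    phi = KAPPA * x - OMEGA * t
    return (0.0, math.cos(phi), math.sin(phi))


def s_field(x, t):
    """Entropy field chosen so that Equations (9) are satisfied for i_field."""
    phi = KAPPA * x - OMEGA * t
    return (0.0, math.sin(phi), -math.cos(phi))


def d_dx(field, x, t, h=1e-5):
    lo, hi = field(x - h, t), field(x + h, t)
    return tuple((b - a) / (2.0 * h) for a, b in zip(lo, hi))


def d_dt(field, x, t, h=1e-5):
    lo, hi = field(x, t - h), field(x, t + h)
    return tuple((b - a) / (2.0 * h) for a, b in zip(lo, hi))


def curl_1d(field, x, t):
    """Curl of a field that varies only along the first axis."""
    _, dfy, dfz = d_dx(field, x, t)
    return (0.0, -dfz, dfy)


def residuals(x, t):
    """Return (|9a residual|, |9b residual|, |I . S|) at the point (x, t)."""
    curl_i, curl_s = curl_1d(i_field, x, t), curl_1d(s_field, x, t)
    ds_dt, di_dt = d_dt(s_field, x, t), d_dt(i_field, x, t)
    r9a = max(abs(a - b / C) for a, b in zip(curl_i, ds_dt))
    r9b = max(abs(a + b / C) for a, b in zip(curl_s, di_dt))
    dot = sum(a * b for a, b in zip(i_field(x, t), s_field(x, t)))
    return r9a, r9b, abs(dot)


if __name__ == "__main__":
    print(residuals(0.7, 0.2))
```

Because both fields have no component along the propagation axis and vary only along it, the divergence conditions (10) hold trivially for this example.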

4. Conclusions

In conclusion, our model grounds information and entropy onto a physical, relativistic basis, and

provides thermodynamic insights into the double-helix geometry of DNA. It offers the prediction that

non-DNA-based life forms will still tend to have helical structures, and suggests that black holes

conserve info-entropy.

References

1. Bennett, C.H. Demons, engines and the second law. Sci. Am. 1987, 257, 88–96.

2. Landauer, R. Computation: A fundamental physical view. Phys. Scr. 1987, 35, 88–95.

3. Brillouin, L. Science & Information Theory; Academic: New York, NY, USA, 1956.

4. Landauer, R. Information is physical. Phys. Today 1991, 44, 23–29.

5. Parker, M.C.; Walker, S.D. Information transfer and Landauer's principle. Opt. Commun. 2004, 229, 23–27.

6. Parker, M.C.; Walker, S.D. Is computation reversible? Opt. Commun. 2007, 271, 274–277.

7. Garrison, J.C.; Mitchell, M.W.; Chiao, R.Y.; Bolda, E.L. Superluminal signals: Causal loop paradoxes revisited. Phys. Lett. A 1998, 245, 19–25.

8. Brillouin, L. Wave Propagation and Group Velocity; Academic: New York, NY, USA, 1960.

9. Stenner, M.D.; Gauthier, D.J.; Neifeld, M.A. The speed of information in a fast-light optical medium. Nature 2003, 425, 695–697.

10. Wynne, K. Causality and the nature of information. Opt. Commun. 2002, 209, 85–100.

11. Carey, J.J.; Zawadzka, J.; Jaroszynski, D.A.; Wynne, K. Noncausal time response in frustrated total internal reflection. Phys. Rev. Lett. 2000, 84, 1431–1434.

12. Heitmann, W.; Nimtz, G. On causality proofs of superluminal barrier traversal of frequency band limited wave packets. Phys. Lett. A 1994, 196, 154–158.

13. Arfken, G.B.; Weber, H.J. Mathematical Methods for Physicists; Academic: New York, NY, USA, 1995; Chapter 6.

14. Primas, H. Time, Temporality, Now: The Representation of Facts in Physical Theories; Springer: Berlin, Germany, 1997; pp. 241–263.

15. Gershenfeld, N. The Physics of Information Technology; Cambridge University Press: Cambridge, UK, 2000; Chapter 4.

16. Jackson, J.D. Classical Electrodynamics; John Wiley & Sons: New York, NY, USA, 1999; Chapter 11.

17. Peiponen, K.-E.; Vartianen, E.M.; Asakura, T. Dispersion, Complex Analysis, and Optical Spectroscopy: Classical Theory; Springer: Berlin, Germany, 1999.

18. Watson, J.D.; Crick, F.H.C. A structure for deoxyribose nucleic acid. Nature 1953, 171, 737–738.

19. Zurek, W.H. Complexity, Entropy and the Physics of Information; Addison-Wesley: Redwood City, CA, USA, 1989; Volume 8.

20. Siegel, J.S. Single-handed cooperation. Nature 2001, 409, 777–778.

Appendix

We generalise the x-dimension to the $i$th space-dimension (i = 1,2,3), such that for a pole travelling in the $x_i$-direction (i.e., equivalent to a plane wave travelling in the $x_i$-direction) the info- and entropy-fields vibrate in the mutually-orthogonal $x_j$- and $x_k$-directions respectively, where again j, k = 1,2,3 and $i \neq j \neq k$. The vector descriptions of the I and S fields are:

$$\mathbf{I} = I_1\hat{x}_1 + I_2\hat{x}_2 + I_3\hat{x}_3 \tag{A1a}$$

$$\mathbf{S} = S_1\hat{x}_1 + S_2\hat{x}_2 + S_3\hat{x}_3 \tag{A1b}$$

In analogy to an EM plane-wave, two plane-wave polarisations are therefore possible:

$$\text{(a)}\quad I_i = 0,\; I_j = k\log_2 x_i',\; I_k = 0; \qquad S_i = 0,\; S_j = 0,\; S_k = k\log_2 ct' \tag{A2a}$$

$$\text{(b)}\quad I_i = 0,\; I_j = 0,\; I_k = -k\log_2 x_i'; \qquad S_i = 0,\; S_j = k\log_2 ct',\; S_k = 0 \tag{A2b}$$

where we have generalised the position of the point of non-analyticity (pole) to a position $z' = x_i' + ict'$, i.e., dropped the subscript 0. We see that the information and entropy fields are mutually orthogonal, since $\mathbf{I} \cdot \mathbf{S} = 0$. Also, the direction of propagation of the wave is given by $\mathbf{I} \times \mathbf{S}$ with the sign of $I_{j,k}$ chosen so that flow is in the positive $x_i$-direction. We make use of the 4 × 4 transformation matrix g, relating one relativistic frame of reference to another [16]:

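$$\begin{bmatrix} ict' \\ x_1' \\ x_2' \\ x_3' \end{bmatrix} = \begin{bmatrix} \gamma & -\gamma\beta_1 & -\gamma\beta_2 & -\gamma\beta_3 \\ -\gamma\beta_1 & 1 + A\beta_1^2 & A\beta_1\beta_2 & A\beta_1\beta_3 \\ -\gamma\beta_2 & A\beta_2\beta_1 & 1 + A\beta_2^2 & A\beta_2\beta_3 \\ -\gamma\beta_3 & A\beta_3\beta_1 & A\beta_3\beta_2 & 1 + A\beta_3^2 \end{bmatrix} \begin{bmatrix} ict \\ x_1 \\ x_2 \\ x_3 \end{bmatrix} \tag{A3}$$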

where $\beta_1$, $\beta_2$, and $\beta_3$ are the normalised velocities (with respect to c) in the co-ordinate directions, with $\beta^2 = \beta_1^2 + \beta_2^2 + \beta_3^2 = 1$ in this case, since the overall wave velocity is equal to c. In addition the parameters $\gamma$ and A are related by $\gamma = 1/\sqrt{1 - \beta^2}$, and also $\gamma = 1 + A\beta^2$. We note that equation (A3) is made equivalent to (4), by substituting $\gamma = \cosh i\theta$, and $\beta_1 = \tanh i\theta$, with $\beta_2 = \beta_3 = 0$.
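The stated equivalence between (A3) and (4) can be checked numerically for the one-dimensional case $\beta_2 = \beta_3 = 0$. The sketch below builds the 2 × 2 block of (A3) acting on $(ict, x_1)$ with $\gamma = \cosh i\theta$ and $\beta_1 = \tanh i\theta$, takes A from the stated relation $\gamma = 1 + A\beta^2$, and compares the result with the rotation (4). The function names and sample values are hypothetical, and $\theta$ must be non-zero so that A is defined.

```python
import cmath
import math


def a3_block(theta):
    """2x2 block of (A3) acting on (i*ct, x1), assuming beta_2 = beta_3 = 0."""
    gamma = cmath.cosh(1j * theta)
    beta = cmath.tanh(1j * theta)
    a = (gamma - 1.0) / beta ** 2  # rearranged from gamma = 1 + A * beta^2
    return ((gamma, -gamma * beta),
            (-gamma * beta, 1.0 + a * beta ** 2))


def apply_a3(ct, x, theta):
    """Transform (ct, x) via (A3); returns complex (ct', x') with ~zero imaginary parts."""
    g = a3_block(theta)
    ict, x1 = 1j * ct, complex(x)
    ict_p = g[0][0] * ict + g[0][1] * x1
    x_p = g[1][0] * ict + g[1][1] * x1
    return ict_p / 1j, x_p


def apply_eq4(ct, x, theta):
    """Rotation of Equation (4) applied to (ct, x)."""
    return (math.cos(theta) * ct - math.sin(theta) * x,
            math.sin(theta) * ct + math.cos(theta) * x)


if __name__ == "__main__":
    print(apply_a3(2.0, 1.5, 0.3))
    print(apply_eq4(2.0, 1.5, 0.3))
```

The agreement rests on the identities $\cosh i\theta = \cos\theta$ and $\tanh i\theta = i\tan\theta$, so that $\gamma\beta_1 = i\sin\theta$.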

Performing a dynamic calculus on equations (A2a), we derive the following expressions:

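$$\frac{\partial I_j}{\partial x_i} = \frac{k}{x_i'}\frac{\partial x_i'}{\partial x_i} = \frac{k}{x_i'}\,g_{ii} \tag{A4a}$$

$$\frac{\partial S_k}{\partial ict} = \frac{k}{ict'}\frac{\partial ict'}{\partial ict} = \frac{k}{ict'}\,g_{00} \tag{A4b}$$

$$\frac{\partial I_j}{\partial ict} = \frac{k}{x_i'}\frac{\partial x_i'}{\partial ict} = \frac{k}{x_i'}\,g_{i0} \tag{A4c}$$

$$\frac{\partial S_k}{\partial x_i} = \frac{k}{ict'}\frac{\partial ict'}{\partial x_i} = \frac{k}{ict'}\,g_{0i} \tag{A4d}$$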

Consideration of the second polarisation (b) allows us to derive the additional

following expressions:

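$$\frac{\partial I_k}{\partial x_i} = \frac{-k}{x_i'}\frac{\partial x_i'}{\partial x_i} = \frac{-k}{x_i'}\,g_{ii} \tag{A5a}$$

$$\frac{\partial S_j}{\partial ict} = \frac{k}{ict'}\frac{\partial ict'}{\partial ict} = \frac{k}{ict'}\,g_{00} \tag{A5b}$$

$$\frac{\partial I_k}{\partial ict} = \frac{-k}{x_i'}\frac{\partial x_i'}{\partial ict} = \frac{-k}{x_i'}\,g_{i0} \tag{A5c}$$

$$\frac{\partial S_j}{\partial x_i} = \frac{k}{ict'}\frac{\partial ict'}{\partial x_i} = \frac{k}{ict'}\,g_{0i} \tag{A5d}$$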

Since the direction of propagation is in the $x_i$-direction, we must have that $\beta_j = \beta_k = 0$, so that $\beta_i = \beta$, and therefore $\gamma = 1 + A\beta_i^2$, such that $g_{ii} = g_{00}$, as well as $g_{i0} = g_{0i}$. Given $x_i' = ct'$ as usual, in the $x_j$-direction the following pair of equations holds:


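$$-\frac{\partial I_k}{\partial x_i} = \frac{1}{c}\frac{\partial S_j}{\partial t} \tag{A6a}$$

$$-\frac{\partial S_k}{\partial x_i} = -\frac{1}{c}\frac{\partial I_j}{\partial t} \tag{A6b}$$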

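Likewise, in the $x_k$-direction we find the following pair of equations holds:

$$\frac{\partial I_j}{\partial x_i} = \frac{1}{c}\frac{\partial S_k}{\partial t} \tag{A7a}$$

$$\frac{\partial S_j}{\partial x_i} = -\frac{1}{c}\frac{\partial I_k}{\partial t} \tag{A7b}$$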

We see that both pairs of equations (A6) and (A7) respectively obey the Cauchy-Riemann

symmetries evident in Equations (8). Considering the three cyclic permutations of i,j and k, we

can write:

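$$\sum_{i=1}^{3}\left(\frac{\partial I_j}{\partial x_k} - \frac{\partial I_k}{\partial x_j}\right)\hat{x}_i = \frac{1}{c}\sum_{i=1}^{3}\frac{\partial S_i}{\partial t}\,\hat{x}_i \tag{A8a}$$

$$\sum_{i=1}^{3}\left(\frac{\partial S_j}{\partial x_k} - \frac{\partial S_k}{\partial x_j}\right)\hat{x}_i = -\frac{1}{c}\sum_{i=1}^{3}\frac{\partial I_i}{\partial t}\,\hat{x}_i \tag{A8b}$$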

Equations (A8) can be alternatively written in the compact vector notation of equations (9).

© 2010 by the authors; licensee Molecular Diversity Preservation International, Basel, Switzerland.

This article is an open-access article distributed under the terms and conditions of the Creative

Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).
