Null Hypothesis: The Several Populations Being Compared All Have The Same Mean Research Hypothesis: They Have Different Means

Uploaded by Valerie Mok


ANOVA (Analysis of Variance)

A hypothesis-testing procedure for studies with 3 or more groups, with each group being an entirely separate group of people. It analyzes variances to determine whether the sample means differ.

Null hypothesis: The several populations being compared all have the same mean.
Research hypothesis: They have different means.

Similarities with t-tests:
1. True population variance is not known.
2. Population variance can be estimated.
3. Assumption: all populations have the same variance.

VARIATION WITHIN EACH SAMPLE

Within-groups estimate of the population variance: Estimate of the variance of the population of individuals based on the variation among the scores in each of the actual groups studied (a single pooled estimate).

It is not affected by whether the NH is true.
- It comes out the same whether the means of the populations are the same or not.
- It focuses only on the variation WITHIN each population (different scores between different people IN a population).

Variation in scores within samples is due to chance:
- People responding differently, OR
- Experimental error

Hence, within-groups population variance estimate = estimate based on chance factors that cause different people in a study to have different scores.

VARIATION BETWEEN THE MEANS OF SAMPLES

When the null hypothesis IS true: All samples come from populations that have the same mean.

*Assumptions: all populations have the same variance and follow normal curves. Hence, if the NH is true, all populations have the same mean, the same variance and a normal curve. BUT, even then, the sample means will still differ a little.

How much they differ depends on how much variation there is IN each population.
- Little variation in scores: the means of samples from those populations will tend to be similar, because chance factors have little room to push them apart.
- Much variation in scores: the means of samples from those populations are likely to be very different. (If we take one sample from each of 5 such populations, the samples are likely to have very different means.)

The means of 3 different populations may be exactly the same. BUT if there is a large variance among the scores IN each population and we take ONE sample from EACH of the 3 populations, the means of the 3 samples can still be very different.

THEREFORE: it should be possible to estimate the variance in each population from the variation among the means of our samples, since the two are so strongly related.

Between-groups estimate of the population variance: Estimate of the variance of the population of individuals based on the variation among the means of the groups (samples) studied.

Conclusion (when the NH is true): The estimate gives an accurate indication of the variation within the population.

If the null hypothesis is NOT true: The populations themselves have different means. Hence variation among the means of samples taken from them is caused by:
1. Chance factors IN populations
2. Variation AMONG population means (treatment effect): the different treatments received by the groups cause different means.

The larger the variation within the populations, the larger the variation among the means of samples.

Conclusion: This method is influenced by both variation IN populations (chance) and variation AMONG populations (treatment). It will NOT give an accurate estimate of variation WITHIN the population, since it is also affected by variation AMONG populations.

F-RATIO

Ratio of the between-groups population variance estimate to the within-groups population variance estimate.

When the null hypothesis is true: Both estimates should be about the same, so the ratio should be approximately 1.

When the null hypothesis is false: The between-groups estimate should be larger than the within-groups estimate, so the ratio should be greater than 1.
- The within-groups estimate reflects only chance variation, while the between-groups estimate reflects chance variation plus the treatment effect.

Look up an F table to see how extreme a ratio is needed to reject the NH at a given significance level.

F distribution

To find the cutoff for an F ratio that is large enough to reject the null hypothesis, we need an F distribution. E.g. find the F ratio for samples of 5 taken from each of 3 populations, and repeat this 100 times.

The exact shape depends on how many samples are taken each time and how many scores are in each sample.

NOT symmetrical: long tail to the right (positive skew).
- Because an F distribution is a distribution of ratios of variances, which are always positive numbers.

Most scores pile up near 1.
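The repeated-sampling idea behind the F distribution can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the populations are standard normal with identical means (so the null hypothesis is true), the function names `f_ratio` and `simulate_f_ratios` are made up for this sketch, and 2000 repetitions are used instead of 100 so the shape is more stable.

```python
import random
from statistics import mean, median, variance


def f_ratio(groups):
    """Between-groups estimate divided by within-groups estimate (equal group sizes)."""
    n = len(groups[0])
    s2_within = mean([variance(g) for g in groups])
    s2_between = variance([mean(g) for g in groups]) * n
    return s2_between / s2_within


def simulate_f_ratios(n_groups=3, n_per_group=5, repetitions=2000, seed=0):
    """Draw samples from identical normal populations and collect the F ratios."""
    rng = random.Random(seed)
    ratios = []
    for _ in range(repetitions):
        groups = [
            [rng.gauss(0, 1) for _ in range(n_per_group)]
            for _ in range(n_groups)
        ]
        ratios.append(f_ratio(groups))
    return ratios


ratios = simulate_f_ratios()
# Ratios of variances can never be negative.
print(all(r >= 0 for r in ratios))
# A long right tail pulls the mean above the median (positive skew).
print(mean(ratios) > median(ratios))
```

Plotting a histogram of `ratios` would show the pile-up at low values and the long tail to the right described above.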

F table

Takes into account 2 different degrees of freedom:
1. Between-groups degrees of freedom = numerator degrees of freedom
2. Within-groups degrees of freedom = denominator degrees of freedom

HYPOTHESIS TESTING

Assumptions (similar to the t-test for independent means):
- Populations must follow a normal curve.
- Populations must have equal variances.

If the NH is rejected, the means are not all the same. BUT we do not know which population means are significantly different from each other.

Planned contrasts/comparisons (a priori comparisons): Planning in advance to contrast the results from specific groups.

Bonferroni procedure (Dunn's test): Dividing the significance level by the number of planned contrasts so that each contrast is tested at a more stringent significance level.
- With multiple contrasts, there is a higher chance of getting at least one significant result even if the null hypothesis is true.
- E.g. flipping a coin gives a 50% chance of heads. Flipping 5 coins gives a higher chance that at least ONE will be heads.

Post hoc comparisons: Making pairwise comparisons (comparing all possible pairings of means), done AFTER an analysis of variance, as part of an exploratory analysis.
- BUT, since we're comparing ALL possible combinations, the Bonferroni procedure might result in a very small significance level.

Scheffé test: Method of figuring the significance of post hoc comparisons that takes into account all possible comparisons that could be made.
- Divide the F ratio for the comparison by the overall study's dfBetween (Ngroups - 1).
- Compare this smaller F to the overall study's F cutoff.
- Keeps the overall risk of a Type I error at 0.05.
- Doesn't reduce statistical power too drastically.

Advantages: The only method that can be used for BOTH simple comparisons and complex ones.

Disadvantages: The most conservative post hoc method. For a given comparison, the chance of significance is higher than with the Bonferroni procedure BUT still lower than with any other post hoc method.
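The arithmetic behind these corrections is short enough to write out. The sketch below is illustrative only: the function names are invented here, and the "at least one" formula assumes the individual tests (or coin flips) are independent, which real contrasts on the same data usually are not.

```python
def chance_of_at_least_one(p_single, n_tries):
    """Probability that at least one of n independent tries succeeds."""
    return 1 - (1 - p_single) ** n_tries


def bonferroni_level(overall_alpha, n_contrasts):
    """Significance level to use for each planned contrast."""
    return overall_alpha / n_contrasts


def scheffe_adjusted_f(f_comparison, n_groups):
    """Comparison F divided by the overall study's between-groups df."""
    return f_comparison / (n_groups - 1)


# Coin example: 5 flips, chance that at least one is heads.
print(chance_of_at_least_one(0.5, 5))            # 0.96875
# Three contrasts each tested at .05, null hypothesis true for all of them.
print(round(chance_of_at_least_one(0.05, 3), 4))  # 0.1426
# Bonferroni: test each of the 3 contrasts at a stricter level.
print(round(bonferroni_level(0.05, 3), 4))        # 0.0167
# Scheffe: a comparison F of 10.0 in a 3-group study shrinks to 5.0.
print(scheffe_adjusted_f(10.0, 3))                # 5.0
```

The Scheffé-adjusted value is then compared with the overall study's F cutoff rather than with a cutoff for the comparison's own degrees of freedom.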

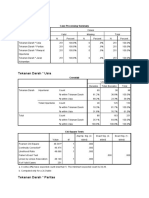

Figuring the within-groups estimate of the population variance
1. Figure a population variance estimate based on each group's scores.
   a. Figure the sum of squared deviation scores.
   b. Divide the sum of squared deviation scores by that group's df (N - 1).
2. Average these variance estimates.
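The two steps above can be sketched as follows. The scores are made-up illustrative numbers, the function names are hypothetical, and the simple average in step 2 assumes all groups have the same number of scores.

```python
def variance_estimate(scores):
    """Step 1: sum of squared deviations divided by the group's df (N - 1)."""
    group_mean = sum(scores) / len(scores)
    sum_squared_deviations = sum((x - group_mean) ** 2 for x in scores)
    return sum_squared_deviations / (len(scores) - 1)


def within_groups_estimate(groups):
    """Step 2: average the per-group estimates (assumes equal group sizes)."""
    estimates = [variance_estimate(g) for g in groups]
    return sum(estimates) / len(estimates)


# Made-up scores for three groups of five people each.
groups = [
    [4, 5, 6, 7, 8],
    [6, 7, 8, 9, 10],
    [8, 9, 10, 11, 12],
]
s2_within = within_groups_estimate(groups)
print(s2_within)  # 2.5
```

Each group here has a sum of squared deviations of 10 and 4 degrees of freedom, so every per-group estimate is 2.5 and so is their average.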

Figuring the between-groups estimate of the population variance
1. Estimate the variance of the distribution of means.
   a. Find the mean of all scores (the mean of all the sample means).
   b. Subtract the overall mean from each sample mean (deviation score).
   c. Figure the sum of squared deviation scores.
   d. Divide the sum of squared deviations by the number of means minus 1.
2. Figure the estimated variance of the population of individual scores.
   a. Multiply the variance of the distribution of means by the number of scores in each group.
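A minimal sketch of these steps, using made-up scores and a hypothetical function name. It assumes every group has the same number of scores, which is what lets step 1a treat the mean of the sample means as the mean of all scores.

```python
def between_groups_estimate(groups):
    """Between-groups estimate of the population variance (equal group sizes)."""
    n_per_group = len(groups[0])
    sample_means = [sum(g) / len(g) for g in groups]

    # Step 1a: overall mean (mean of the sample means).
    grand_mean = sum(sample_means) / len(sample_means)
    # Steps 1b and 1c: squared deviations of each sample mean, summed.
    sum_squared_deviations = sum((m - grand_mean) ** 2 for m in sample_means)
    # Step 1d: divide by the number of means minus 1.
    variance_of_means = sum_squared_deviations / (len(sample_means) - 1)

    # Step 2a: scale up to the variance of individual scores.
    return variance_of_means * n_per_group


# Made-up scores; the sample means are 6, 8 and 10.
groups = [
    [4, 5, 6, 7, 8],
    [6, 7, 8, 9, 10],
    [8, 9, 10, 11, 12],
]
s2_between = between_groups_estimate(groups)
print(s2_between)  # 20.0
```

The deviations of the means from the overall mean of 8 are -2, 0 and 2, giving a variance of means of 8 / 2 = 4, which is multiplied by the 5 scores per group.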

Figuring the F Ratio

$$F = \frac{S^2_{\text{Between}}}{S^2_{\text{Within}}}$$

Hypothesis testing with analysis of variance
1. Restate the question as a RH and NH about the populations.
   a. Population 1: People with secure style
   b. Population 2: People with avoidant style
   c. Population 3: People with anxious style
   d. NH: These 3 populations have the same mean.
   e. RH: These population means are not the same.
2. Determine the characteristics of the comparison distribution.
   a. F distribution
   b. State the 2 degrees of freedom.
3. Determine the cutoff sample score at which the NH should be rejected.
   a. Use the F table.
4. Determine the sample's score on the comparison distribution.
   a. Figure out the sample's F ratio.
5. Decide whether to reject the null hypothesis.
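Steps 2 to 5 can be traced in a short Python sketch. The scores are invented for illustration and do not come from any study of attachment styles; the function name `one_way_anova` is hypothetical; equal group sizes are assumed; and the cutoff of 3.89 is the value a standard F table gives for 2 and 12 degrees of freedom at the .05 level.

```python
from statistics import mean, variance


def one_way_anova(groups):
    """Return the F ratio and its two degrees of freedom (equal group sizes)."""
    n_per_group = len(groups[0])
    s2_within = mean([variance(g) for g in groups])
    s2_between = variance([mean(g) for g in groups]) * n_per_group
    df_between = len(groups) - 1
    df_within = sum(len(g) - 1 for g in groups)
    return s2_between / s2_within, df_between, df_within


# Made-up scores for the three populations' samples.
secure = [4, 5, 6, 7, 8]
avoidant = [6, 7, 8, 9, 10]
anxious = [8, 9, 10, 11, 12]

# Step 2: comparison distribution is an F distribution with these df.
f_ratio, df_between, df_within = one_way_anova([secure, avoidant, anxious])
print(df_between, df_within)  # 2 12

# Step 3: cutoff from an F table for df = (2, 12) at the .05 level.
F_CUTOFF = 3.89

# Step 4: the sample's F ratio.
print(f_ratio)  # 8.0

# Step 5: reject the NH if the sample's F exceeds the cutoff.
reject_null = f_ratio > F_CUTOFF
print(reject_null)  # True
```

With different group counts or sizes the degrees of freedom change, so the cutoff has to be looked up again rather than reused.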

Figuring planned contrasts (between 2 populations)
1. Estimate the variance of the distribution of means ($S^2_M$).
2. Figure the estimated variance of the population of individual scores ($S^2_{\text{Between}}$).
3. Calculate the F ratio.
4. Do hypothesis testing to see if the planned contrast is significant, using the true significance level (Bonferroni procedure). OR, for post hoc contrasts, use the Scheffé test.
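A hedged sketch of a single planned contrast follows. The notes do not say which within-groups estimate goes in the denominator; this sketch assumes the overall estimate from all groups in the study. The scores, the function names and the choice of 2 planned contrasts are all illustrative.

```python
def variance_estimate(values):
    """Sum of squared deviations divided by (number of values - 1)."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values) / (len(values) - 1)


def planned_contrast_f(group_a, group_b, all_groups):
    """F ratio for a contrast between two groups (equal group sizes)."""
    n_per_group = len(group_a)
    means = [sum(group_a) / len(group_a), sum(group_b) / len(group_b)]
    # Step 1: variance of the distribution of means, from the two means only.
    s2_m = variance_estimate(means)
    # Step 2: estimated variance of the population of individual scores.
    s2_between = s2_m * n_per_group
    # Assumption: denominator is the within-groups estimate from ALL groups.
    s2_within = sum(variance_estimate(g) for g in all_groups) / len(all_groups)
    # Step 3: the F ratio.
    return s2_between / s2_within


# Made-up scores for three groups.
groups = [
    [4, 5, 6, 7, 8],
    [6, 7, 8, 9, 10],
    [8, 9, 10, 11, 12],
]
contrast_f = planned_contrast_f(groups[0], groups[2], groups)
print(contrast_f)  # 16.0

# Step 4: with 2 planned contrasts, each is tested at .05 / 2.
bonferroni_alpha = 0.05 / 2
print(bonferroni_alpha)  # 0.025
```

The contrast has 1 between-groups degree of freedom (two means minus 1), so its cutoff would be read from the F table at the Bonferroni-adjusted level for 1 and 12 degrees of freedom.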
