IJCSNS International Journal of Computer Science and Network Security, VOL.8 No.10, October 2008

Neural Associative Memory with Finite State Technology

D. Gnanambigai, P. Dinadayalan and R. Vasantha Kumari

Department of Computer Science, K. M. Centre for P.G. Studies, Puducherry, India. Principal, Perunthalaivar Kamarajar Government Arts College, Puducherry, India.

Summary

Morphological learning approaches have been successfully applied to morphological tasks in computational linguistics, including morphological analysis and generation. We take a new look at the fundamental properties of associative memory together with the power of the Turing machine and show how the combination can be adopted for natural language processing. The ability to store and recall patterns based on associations makes these memories potentially valuable for natural language processing. A neural associative memory recollects a word pattern from an input that resembles the stored word pattern; such an input is sufficient to retrieve the complete pattern. A morphological parser and a Turing lexicon are used to process regular inflectional morphology. The rules of an unrestricted grammar are extracted from the morphological parser, and the Turing lexicon processes the input word using this grammar. The associative learning algorithm is a classification method based on the minimum Euclidean distance for a stem or attribute. The stems and attributes are indexed in the associative memory for quick retrieval. The associative memory is used to recall correct word patterns and to reject incorrect ones. A machine is designed to implement the morphological process as a special case by handling both concatenative and non-concatenative phenomena of natural languages. The experimental results show that the proposed approach attains good performance in terms of speed and efficiency.

Keywords:

Artificial Neural Networks, Associative Memory, Morphology, Morpheme, Patterns, Turing machine, lexicon, parser.

1. Introduction

Neural associative memory with finite state technology is a new method which combines neural associative memory and the Turing machine for language processing. An Artificial Neural Network (ANN) [2][10], often just called a Neural Network (NN), is a mathematical or computational model based on biological neural networks. It consists of an interconnected group of artificial neurons and processes information using a connectionist approach to computation. Neural networks can be used to model complex relationships between inputs and outputs or to find patterns in data. Their mapping capability is that they can map input patterns to their associated output patterns. Learning [2] methods in neural networks can be broadly classified into two basic types: supervised and unsupervised. In supervised learning, every input pattern that is used to train the network is associated with an output pattern, which is the target or desired pattern. In unsupervised learning, the target output is not presented to the network; the system learns on its own by discovering and adapting to structural features in the input patterns. The information provided helps the network in its learning process.

Artificial neural networks are a type of massively parallel computing architecture based on a very simplified brain-like information encoding and processing model. Neural networks can and do exhibit brain-like behaviours such as association, recognition, generalization, classification, feature extraction and optimization. These behaviours fall under the general categories of searching, representation and learning, which are closely related to the associative property and self-organizing capability of the brain. Association is one of the fundamental characteristics of the human brain. The human brain operates in an associative manner; that is, a portion of a recollection can produce an associated stream of data from memory. Association is used to store and retrieve information from a database: we store and retrieve data based on association, i.e. we search for a target by association. The excitement about neural networks started mainly because of the difficulties in dealing with problems in the fields of speech, image, natural language and decision making using known methods of pattern recognition and artificial neural networks.

Automata theory [3] is one of the longest established areas in Computer Science. Over the past few years, automata theory has not only developed in many different directions but has also evolved at several levels: the exploration of specific new models and applications has at the same time stimulated a variety of deep mathematical theories. Standard applications of automata theory include pattern matching, natural language processing and syntax analysis.

Morphology [2][6] is a description of a linguistic phenomenon. Knowledge representation here means representing linguistic information, in particular morphological information. Morphology is the name given to the study of the "minimal unit of grammatical analysis". Morphology is the field of linguistics that studies the internal structure of words. (Words as units in the lexicon are the subject matter of lexicology.) While words are generally accepted as being the smallest units of syntax, it is clear that in most (if not all) languages, words can be related to other words by rules. In this way, morphology is the branch of linguistics that studies patterns of word-formation within and across languages, and attempts to formulate rules that model the knowledge of the speakers of those languages.

Manuscript received October 5, 2008. Manuscript revised October 20, 2008.

2. Related Work

We aim towards a new technique, namely neural associative memory with finite state technology. This technique comprises a Turing machine, an associative memory and morphology. The Turing machine is more powerful than other state machines such as finite state automata, pushdown automata and linear bounded automata. Its head is capable of moving in both directions. It has finite memory which is used to record the necessary changes while performing morphological processing. The Turing machine is used for concatenative and orthographic morphological processing. To process non-concatenative patterns, a large memory is required to retrieve the word patterns efficiently; associative memory is used for this purpose. Neural associative memory implements pattern recognition and pattern association. A short introduction to neural associative memory, the Turing machine and the morphology of natural language is given in this section.

In its simplest form, associative memory [5][13] is a memory system that allows one data item to be associated with another, so that access to one data item allows access, by association, to the other. In the neural network literature, associative memories are referred to as being auto-associative or hetero-associative. An auto-associative memory allows recall of the same item that is put in. A hetero-associative memory allows recall of an associated item that is different from the input query. Patterns stored in an associative memory are addressed by their contents. Associative memory is content-addressable memory as well as parallel search memory. Its major advantage is its capability to perform parallel search and parallel comparison operations. Associative neural memories are a class of artificial neural networks which have gained substantial attention relative to other neural net paradigms. A number of important contributions have appeared in conference proceedings and technical journals addressing various issues of associative neural memories, including multiple-layer architectures, recording/storage algorithms, capacity, retrieval dynamics and fault-tolerance. Currently, associative neural memories are among the most extensively studied and understood neural paradigms.

A finite state machine is a model of computation [3] consisting of a set of states, a start state, an input alphabet, and a transition function that maps input symbols and current states to a next state. Computation begins in the start state with an input string. The machine changes to new states depending on the transition function. A Turing machine [3] is a simple mathematical model of modern digital computers with strong computational capability. The Turing machine is a very simple machine but, logically speaking, has all the power of any digital computer. The Turing machine has the following features: it has an external memory which remembers arbitrarily long sequences of input, and it has unlimited memory capacity.

Morphology is the study of how words are built up from smaller units called morphemes. The main morpheme is called the stem and the others are affixes. The stem gives the core meaning and the affixes change the meaning and grammatical function. Natural language processing [6] deals with words whose grammatical parts are handled with the help of morphology, under the categories of concatenative morphology and non-concatenative morphology. Concatenative morphology glues together stems and affixes. Non-concatenative morphology is more complex. It is a form of word formation in which the root is modified in some way other than by stringing morphemes together. One type of non-concatenative morphology is known as base modification, a form in which part of the root undergoes a phonological change. Another type is referred to as truncation, deletion, or subtraction; this process removes phonological material from the root. Inflectional morphology is the combination of a base form with a grammatical morpheme in order to express a grammatical feature such as singular/plural or past/present tense. Derivational morphology refers to the creation of a new word with a different class.

The kinds of application that need morphological processing are grammar checkers, spelling checkers and information retrieval, where it is sometimes useful to search documents. Following our literature survey, we started working on a new concept, namely neural associative memory with finite state technology. We collected the necessary materials, journals, basic concepts and supporting evidence. The survey showed that the finite state machine is essential for modelling morphotactics, which is a model of morpheme ordering. It also established the benefits of finite state automata in morphological analysis, the part they play in morphological study, and the significance of associative memory in morphological processing. The limitations of finite state automata were reviewed as well. Processing a language with finite state automata takes more time. As a word is processed, the number of states used by the automaton increases; when a finite state automaton (FSA) is used to process the morphology of a language, it has to pass through a larger number of states and therefore takes more traversal time to search for a word. A finite state automaton is a read-only machine. It reads the input only by forward traversal, so it does not review what it has already traversed. In addition, finite state automata have no memory. Therefore, orthographic changes cannot be processed by a finite state automaton: since it has no writing capability, modification of the input is not possible.
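The finite state model described in this section (a set of states, a start state, an input alphabet and a transition function) can be illustrated with a minimal sketch. The class name `FiniteStateAutomaton`, the helper `build_plural_fsa` and the three-word stem list are assumptions made for illustration only; they are not taken from the paper's implementation. The automaton reads its input once, left to right, and counts the transitions it takes, which mirrors the traversal cost discussed here.

```python
from typing import Dict, Iterable, Set, Tuple


class FiniteStateAutomaton:
    """Deterministic automaton: start state, accepting states, transition function."""

    def __init__(self, start: str, accepting: Set[str],
                 transitions: Dict[Tuple[str, str], str]) -> None:
        self.start = start
        self.accepting = accepting
        self.transitions = transitions

    def accepts(self, word: str) -> Tuple[bool, int]:
        """Read the word left to right once; return (accepted, transitions taken)."""
        state = self.start
        steps = 0
        for symbol in word:
            key = (state, symbol)
            if key not in self.transitions:
                return False, steps  # no transition defined, so the word is rejected
            state = self.transitions[key]
            steps += 1
        return state in self.accepting, steps


def build_plural_fsa(stems: Iterable[str]) -> FiniteStateAutomaton:
    """Toy morphotactics: every stem is accepted, optionally followed by 's'."""
    transitions: Dict[Tuple[str, str], str] = {}
    accepting: Set[str] = set()
    for stem in stems:
        state = ""  # each state is named after the prefix read so far
        for ch in stem:
            transitions[(state, ch)] = state + ch
            state += ch
        accepting.add(stem)
        transitions[(stem, "s")] = stem + "s"
        accepting.add(stem + "s")
    return FiniteStateAutomaton("", accepting, transitions)


fsa = build_plural_fsa(["cat", "dog", "city"])
print(fsa.accepts("cats"))    # (True, 4)
print(fsa.accepts("cities"))  # (False, 3)
```

Because this automaton can only read, the form cities is rejected after three transitions: the stem city would have to be rewritten before the affix is attached, or extra states would have to be added for every such form.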

297

3. Architecture of Associative Memory and Turing Machine

We take a new look at the fundamental properties of associative memory and show how it can be coupled with the power of Turing machine for natural language processing. The ability to store and recall patterns based on associations make these memories so potentially valuable for natural language processing. Turing machine with memory is considered for describing the morphological processes of natural languages. It is used for identifying concatenative and non-concatenative morphology. Traditional methods does not contain memory therefore the transition changes every time.

Turing lexicon splits the stem and affixes to find the basic class of the morpheme to associate with the morphological parser which describes the set rules. Morphological parser describes the set of rules. Associative memory comprises of associated morphological patterns. Turing lexicon is used to encode morphotactic knowledge. Turing machine acts as a lexicon. When a natural language query is given it splits it into smaller unit called morphemes. The Turing lexicon is a three tape Turing machine which has read/write heads. The symbols can be read or modified. Tape1 holds the given input query. Tape2 holds the grammar of natural language processing which is loaded from the morphological parser. Tape3 consists of the results of the query. Morphological parser has a set of rules for concatenative and orthography morphology. The morphological parser is like a file. It is useful for splitting the query into stems and by adding affixes to form new words. When the nature of query is concatentive or orthographical the Turing lexicon, identifies the corresponding rule from the morphological parser. The lexicon splits the query into tokens to form other related concatenative words which is stored in Tape3. The morpheme of the query is sent to the associative memory to find out the correctness of the query if the morpheme is a valid query then the result is produced as output from the tape3 otherwise the query is rejected. For processing orthographic query, the input is slightly modified in Tape3 based on the rules from morphological parser.(like consonant doubling, e-deletion, e-insertion, yreplacement, k-insertion etc). Since the orthographic query processing is easily done it is inferred that the Turing machine is more powerful than the traditional approaches. Turing machine is capable of reading, writing, deleting, modifying and comparing facilities. The patterns that are not captured by grammar are considered to be non-concatenative. 
Therefore the query associates with the patterns in the associative memory to produce the required result. Associative memory is like a database. It has the different classes of look-up table. When a word and its associated patterns are to be read from the associative memory the content of word is specified. The memory locates the associated words which match the specified content and marks them for reading. The associative memory is uniquely suited to do parallel searches by data association. The learning techniques used in the associative memory are supervised learning as the given

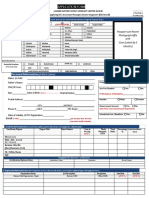

Morphological Parser Output

Input Turing Lexicon

Associative Memory

Fig.1 Structure of Turing machine and associative memory for Morphology

The new proposed machine remembers the finite number of symbols and this machine easily recalls whenever needed. The memory used is associative memory. This is used for pattern recognition and pattern association. The stems, irregular nouns and irregular verbs are present in associative memory in groups of different classes. Associative memory acts as a database (look-up-table). The system follows a modular architecture in which the input text is processed through a cascade of modules that handle different phases of translation process. In an overview the model comprises of Turing lexicon,

298

IJCSNS International Journal of Computer Science and Network Security, VOL.8 No.10, October 2008

input query is associated with the target pattern in the associative memory. To efficiently retrieve the associated data items we use the Euclidean distance method. wordi = min || Ci -M || (1)

(iii) e-deletion rule: <verb> <word>e <verb> <word><affix> <affix>ed (iv) concatenation rule: <noun> <word> <verb> <word><affix> <affix>s The input query is placed on tape1 of Turing lexicon. The input is surrounded by end markers $, for example $city$. The rule in tape2 is derived from the morphological parser. Each rule stored in tape2 is separated by two consecutives #. Tape3 is a derivation of input pattern based on the morphological rules. For concatenative morphology, any move on the tape are to the beginning or ending of the stem on the tape3 and if the last character of the stem needs changing this happens before the affix output is added. If the input query is non-concatenative in nature, it is associated with associative memory and the input query searches simultaneously in all the lookup tables of the associative memory. The look-up stage in a natural language system can involve much more than simple retrieval. For example, mouse is the input query and the associative word mice is stored in the look-up table. Using the minimum Euclidean distance formula we find the distance between the input query and the patterns stored in the associated memory. If there is no difference, the correct pattern is located and the result is produced, otherwise it rejects the input query. That is, the given query is not a valid word of the language. For simulation 200 samples of words are taken for the verification of the implemented system. The input pattern can be an inflected verb, infected noun, regular verbs, irregular verbs, regular nouns and irregular nouns. The sample patterns taken by the Turing Lexicon and the morphological parser are processed successfully to produce the desired output. For concatenative morphology 92% of the patterns are correctly recognized and recalled and 8% of the patterns leads to error. All the incorrect input patterns are rejected.

where wordi - is the desired output, Ci - closest patterns from the classes, M - the key morpheme. When a query is sent to the associative memory it searches for the associated minimum distance pattern from the associative memory. The searching is a parallel search mechanism. All the look-up-table are searched simultaneously. The time required to find an item stored in memory can be reduced considerably if stored data can be identified for access by its content. The distance between the given pattern and the target is determined. If there is no difference the exact content is located from the associative memory and all the associated patterns are produced. If the input query does not match the query is rejected (i.e) the input is invalid.

4. Simulation

The Turing lexicon and Morphological parser splits the input into morpheme and affixes and merges morphemes and affixes for concatenative morphology. The morphological parser has a set of rules for splitting and merging the input pattern. Some of the rules are given below. (i) y-replacement rule: <noun> <word>y <noun> <word><affix> <affix>ies

(ii) e-insertion rule: <noun> <word> <noun> <word><affix>s <affix> e (ii) consonant doubling rule: <verb> <word>g <verb> <word><affix> <affix>gg<con> <con> ed
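The use of the three tapes for concatenative and orthographic rules can be sketched roughly as follows. This is only an illustration under stated assumptions: the function `run_lexicon`, the semicolon-separated rule encoding and the regular-expression triggers are hypothetical and are not the grammar notation or the Java/JFLAP implementation of the paper. What is taken from the text is that tape1 holds the query between `$` end markers, that the rules on tape2 are separated by two consecutive `#` symbols, and that the end of the stem is changed before the affix is written to tape3.

```python
import re
from typing import Dict, Optional

# Hypothetical encoding of one rule per string:
# category;name;trigger regex;number of stem-final characters deleted;affix written
RULES = [
    "noun;y-replacement;[^aeiou]y$;1;ies",       # city -> cities
    "noun;e-insertion;(s|x|z|ch|sh)$;0;es",      # box  -> boxes
    "noun;concatenation;;0;s",                   # cat  -> cats
    "verb;consonant doubling;[aeiou]g$;0;ged",   # beg  -> begged
    "verb;e-deletion;e$;1;ed",                   # bake -> baked
]


def run_lexicon(word: str, category: str) -> Optional[Dict[str, str]]:
    """Apply the first matching rule and return the tape contents, or None."""
    tape1 = f"${word}$"        # input query surrounded by end markers
    tape2 = "##".join(RULES)   # rules separated by two consecutive '#'
    stem = tape1.strip("$")
    for encoded in tape2.split("##"):
        rule_category, name, trigger, deleted, affix = encoded.split(";")
        if rule_category != category or not re.search(trigger, stem):
            continue
        base = stem[:len(stem) - int(deleted)]  # change the end of the stem first
        tape3 = f"${base}{affix}$"              # then write the affix
        return {"tape1": tape1, "rule": name, "tape3": tape3}
    return None  # no rule applies; such a query would go to the associative memory


print(run_lexicon("city", "noun"))
# {'tape1': '$city$', 'rule': 'y-replacement', 'tape3': '$cities$'}
```

Rules are tried in the order in which they appear on tape2, so the general concatenation rule is placed after the more specific noun rules. This ordering is a design choice of the sketch, not something the paper specifies.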

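The associative look-up with the minimum Euclidean distance of equation (1) might be sketched as below. The paper does not state how word patterns are encoded as vectors, so the fixed-width character-code encoding in `encode`, the width of 12, the table contents and the function names are all assumptions made for illustration. The sketch scans the look-up tables one after another, whereas the paper describes a parallel search.

```python
import math
from typing import Dict, List, Optional, Tuple

WIDTH = 12  # assumed fixed pattern length; longer words are truncated

# Hypothetical look-up tables, grouped by class as in the paper
LOOKUP_TABLES: Dict[str, Dict[str, str]] = {
    "irregular_nouns": {"mouse": "mice", "child": "children", "foot": "feet"},
    "irregular_verbs": {"go": "went", "sing": "sang", "take": "took"},
}


def encode(word: str) -> List[float]:
    """Assumed encoding: character codes padded with zeros to WIDTH."""
    codes = [float(ord(ch)) for ch in word.lower()[:WIDTH]]
    return codes + [0.0] * (WIDTH - len(codes))


def nearest(query: str) -> Tuple[float, str, str, str]:
    """Return (distance, table, stored key, associated pattern) with minimum distance."""
    m = encode(query)  # M, the key morpheme
    candidates = [
        (math.dist(encode(key), m), table, key, associated)  # || C_i - M ||
        for table, entries in LOOKUP_TABLES.items()
        for key, associated in entries.items()
    ]
    return min(candidates)


def recall(query: str) -> Optional[str]:
    """Produce the associated pattern only when the distance is zero, else reject."""
    distance, _table, _key, associated = nearest(query)
    return associated if distance == 0.0 else None


print(recall("mouse"))   # mice
print(recall("mouses"))  # None
```

With this encoding a distance of zero means the query is identical to a stored key, which matches the description that a query is accepted only if there is no difference and is rejected otherwise.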

For non-concatenative morphology, the given pattern is associated with the associative memory and successfully searched to produce the desired output. If the input pattern is present in the look-up table, an associative search is made and the related output is produced. If the input pattern is not present in the look-up table, the pattern is rejected as it is not a word of English. For non-concatenative morphology all correct words are recognized by this structure; there is no error. All incorrect non-concatenative input patterns are rejected.

Neural associative memory with finite state technology is a combination of the Turing lexicon and the associative memory. The total searching time is the sum of the searching times of the Turing lexicon and the associative memory:

Total_Search_Time = TL_Time + AM_Search_Time (2)

The Turing lexicon searching time can be calculated in terms of the number of states traversed. The associative memory search time is calculated based on the distance between the actual pattern and the target pattern. Thus the structure is tested and implemented successfully. The model is implemented using Java and the JFLAP tool. To evaluate the performance of finite state automata and the proposed model, a large number of sample patterns are taken for the experiment. The first step was to retrieve all the nouns and verbs from the WordNet database.

Fig.2 Comparison between FSA and TM (bar chart for Test1 to Test4; vertical axis from 0 to 60; series: FSA for morphology, Turing machine for morphology)

As shown in the graph, the performance of the two models, finite state automaton and Turing machine, is compared. When finite state automata are used for natural language processing, the generated networks often become too large to be practical. In such cases the Turing machine can make the size manageable.

5. Conclusion

Thus a small, efficient machine that encodes general morphological knowledge has been developed by implementing a Turing machine and an associative memory. Word recognition checks whether a word is a member of a language. Morphological processing has been achieved for both concatenative and non-concatenative phenomena of natural languages. We believe that the proposed structure will be the basis of a general solution for morphological analysis in language processing. Future work consists of looking for better measures to incorporate this work with a Counter-Propagation Network for pattern completion (noisy data). Further work involves more efficient and better methods for representing parts of speech, adjectives (degrees of comparison), multiword recognition, etc. It can also be developed for cross-language generation or translation.

References

[1] Sergio Rao, Fernando Nino (2003) Classification of natural language sentences using Neural Networks, The Florida AI Research Society Conference, pp. 444-449.
[2] Goldsmith, J. A. (2002) Unsupervised Learning of the Morphology of a Natural Language, Computational Linguistics, 27:2, pp. 153-198.
[3] John E. Hopcroft, Jeffrey D. Ullman (1994) Introduction to Automata Theory, Languages, and Computation, NPH.
[4] Amengual, J. C. and Benedi, J. M. (2001) EUTRANS I speech translation system, Machine Translation Journal.
[5] T. Gotez, H. Wunsch (2001) An Abstract Machine Approach to Finite State Transduction over Large Character Sets, Finite State Methods in Natural Language Processing.
[6] Chun-Hsien Chen, Vasant Honavar (2000) A Neural Network Architecture for Syntax Analysis, IEEE Transactions on Neural Networks, Vol. No.1.
[7] Van Noord, G., Gerdemann, D. (2003) An extensible regular expression compiler for Finite State approaches in Natural Language Processing, Natural Language Engineering, Cambridge Journals, 9: 65-85.
[8] Cohen-Sygal, Y., Gerdemann, D., Wintner, S. (2003) Computational Implementation of non-concatenative morphology, Proceedings of the Workshop on Finite-State Methods in Natural Language Processing, pp. 59-66.
[9] Yona, S., Wintner, S. (2005) A finite-state morphological grammar of Hebrew, Proceedings of the Workshop on Computational Approaches to Semitic Languages.
[10] Cohen-Sygal, Y., Wintner, S. (2006) Finite-State Registered Automata for Non-Concatenative Morphology, Computational Linguistics, pp. 49-82.
[11] H. Kang (1994) Multilayer associative neural networks storage capacity versus perfect recall, IEEE Transactions on Neural Networks, vol. 5, no. 5, pp. 812-822.
[12] P.K. Simpson (1990) Higher-Ordered and interconnected bi-directional associative memories, IEEE Transactions on Systems, Man, and Cybernetics, vol. 20, no. 3, pp. 637-652.
[13] Ajit Narayanan & Lama Hashem (1993) An abstract Machine Approach to Finite State, In Proceedings of EACL'1993, pp. 297-304.
[14] Yingquan Wu and Dimitris A. Pados (2000) A Feedforward Bidirectional Associative Memory, IEEE Transactions on Neural Networks, vol. 11, no. 4.
[15] H. Shi, Y. Zhao, and X. Zhuang (1998) A general model for bidirectional associative memories, IEEE Trans on Systems, Man, and Cybernetics, vol. 28, no. 4, pp. 511-519.
[16] Z. Wang (1996) A bidirectional associative memory based on optimal linear associative memory, IEEE Transactions on Computers, vol. 45, no. 10, pp. 1171-1179.
[17] H. Kang (1994) Multilayer associative neural network (MANNs) Storage capacity versus perfect recall, IEEE Transactions on Neural Networks, vol. 5, no. 5, pp. 812-822.
[18] Philip D. Wasserman (1989) Neural Computing Theory and Practice, VNR.
[19] M. Anabda Rao (2003) Neural Networks Algorithms and Applications, NPH.
[20] Hopfield, J. J. (1982) Neural networks and physical systems with emergent collective computational abilities, Proceedings of the National Academy of Sciences, Vol. 79, pp. 2554-2558.

Gnanambigai. D obtained her M.Sc. degree in Computer Science from Pondicherry University, India in 1996 and M.Phil. degree in Computer Science from Manonmaniam Sundaranar University, Tirunelveli, India in 2002. She is presently pursuing her Ph.D. in Computer Science at Vinayaka Missions University, Salem, India under the guidance of Dr. R. Vasanthakumari. She has published papers in national and international conferences in the area of Neural Networks and Automata theory. She has been working as a Lecturer (since 2000) in the Department of Computer Science, Kanchi Mamunivar Centre for Post-graduate Studies, Puducherry, India.

Dinadayalan. P. obtained his M.C.A degree in 1996 and M.Tech degree in Computer Science and Engineering in 2000 from Pondicherry University, India, and M.Phil. degree in Computer Science from Manonmaniam Sundaranar University, Tirunelveli, India in 2002. He is presently pursuing his Ph.D. in Computer Science at Vinayaka Missions University, Salem, India under the guidance of Dr. R. Vasanthakumari. He has published papers in national and international conferences in the area of Artificial Neural Networks and Theory of Computer Science. He has been working as a Lecturer (since 1996) in the Department of Computer Science, Kanchi Mamunivar Centre for Postgraduate Studies, Puducherry, India.

Dr. R. Vasanthakumari obtained her Ph.D. degree from Pondicherry University in 2005. She is working as a Principal in Government College, Puducherry, India. She has more than 27 years of teaching experience in P.G. and U.G. colleges. She is guiding many research scholars and has published many papers in national and international conferences and in many international journals.

You might also like

- Automated Texture Analysis With Gabor FilterDocument7 pagesAutomated Texture Analysis With Gabor FiltergeohawaiiNo ratings yet

- RSA Random Key064Document16 pagesRSA Random Key064geohawaiiNo ratings yet

- RSA Random Key064Document16 pagesRSA Random Key064geohawaiiNo ratings yet

- Factorization of A 768-Bit RSA ModulusDocument22 pagesFactorization of A 768-Bit RSA ModulusgeohawaiiNo ratings yet

- On The Use of Autocorrelation Analysis For Pitch DetectionDocument10 pagesOn The Use of Autocorrelation Analysis For Pitch DetectiongeohawaiiNo ratings yet

- Neural Memories and Search EnginesDocument18 pagesNeural Memories and Search EnginesgeohawaiiNo ratings yet

- A New Enhanced Nearest Feature Space (ENFS) Classi Er For Gabor Wavelets Features-Based Face RecognitionDocument8 pagesA New Enhanced Nearest Feature Space (ENFS) Classi Er For Gabor Wavelets Features-Based Face RecognitiongeohawaiiNo ratings yet

- Separating Depersonalisation and Derealisation: The Relevance of The "Lesion Method"Document3 pagesSeparating Depersonalisation and Derealisation: The Relevance of The "Lesion Method"geohawaiiNo ratings yet

- Batch Mode Active Learning and Its Application To Medical Image Classi CationDocument8 pagesBatch Mode Active Learning and Its Application To Medical Image Classi CationgeohawaiiNo ratings yet

- Bitmap SteganographyDocument15 pagesBitmap Steganographygaurav100inNo ratings yet

- Real-Time Fundamental Frequency Estimation byDocument24 pagesReal-Time Fundamental Frequency Estimation bygeohawaiiNo ratings yet

- Neural Memories and Search EnginesDocument18 pagesNeural Memories and Search EnginesgeohawaiiNo ratings yet

- Dynamic Construction of Stimulus Values in The Ventromedial Prefrontal CortexDocument13 pagesDynamic Construction of Stimulus Values in The Ventromedial Prefrontal CortexgeohawaiiNo ratings yet

- Expanding (3+1) - Dimensional Universe From A Lorentzian Matrix ModelDocument4 pagesExpanding (3+1) - Dimensional Universe From A Lorentzian Matrix ModelgeohawaiiNo ratings yet

- A High Resolution Fundamental Frequency Determination Based On Phase Changes of The Fourier TransformDocument6 pagesA High Resolution Fundamental Frequency Determination Based On Phase Changes of The Fourier TransformgeohawaiiNo ratings yet

- De Noise Lossless Compression-Based DenoisingDocument5 pagesDe Noise Lossless Compression-Based DenoisinggeohawaiiNo ratings yet

- Acoustical Measurement of The Human Vocal Tract: Quantifying Speech & Throat-SingingDocument73 pagesAcoustical Measurement of The Human Vocal Tract: Quantifying Speech & Throat-SinginggeohawaiiNo ratings yet

- Universal Lossless Compression of Erased SymbolsDocument12 pagesUniversal Lossless Compression of Erased SymbolsgeohawaiiNo ratings yet

- Lossless Compression - Pattern BasedDocument19 pagesLossless Compression - Pattern BasedgeohawaiiNo ratings yet

- Dynamic Construction of Stimulus Values in The Ventromedial Prefrontal CortexDocument13 pagesDynamic Construction of Stimulus Values in The Ventromedial Prefrontal CortexgeohawaiiNo ratings yet

- Dynamic Construction of Stimulus Values in The Ventromedial Prefrontal CortexDocument13 pagesDynamic Construction of Stimulus Values in The Ventromedial Prefrontal CortexgeohawaiiNo ratings yet

- WaveletDocument28 pagesWaveletIshmeet KaurNo ratings yet

- Batch Mode Active Learning and Its Application To Medical Image Classi CationDocument8 pagesBatch Mode Active Learning and Its Application To Medical Image Classi CationgeohawaiiNo ratings yet

- Lossless Compression - Pattern BasedDocument19 pagesLossless Compression - Pattern BasedgeohawaiiNo ratings yet

- CNS: A GPU-based Framework For Simulating Cortically-Organized NetworksDocument13 pagesCNS: A GPU-based Framework For Simulating Cortically-Organized NetworksgeohawaiiNo ratings yet

- Separating Depersonalisation and Derealisation: The Relevance of The "Lesion Method"Document3 pagesSeparating Depersonalisation and Derealisation: The Relevance of The "Lesion Method"geohawaiiNo ratings yet

- Mental Pratice With Motor Imagery in Strock RecoveryDocument14 pagesMental Pratice With Motor Imagery in Strock RecoverygeohawaiiNo ratings yet

- Brisk - Binary Robust Invariant Scalable KeypointsDocument8 pagesBrisk - Binary Robust Invariant Scalable KeypointsgeohawaiiNo ratings yet

- Finding An Extra Day A Week: The Positive Influence of Perceived Job Flexibility On Work and Family Life BalanceDocument11 pagesFinding An Extra Day A Week: The Positive Influence of Perceived Job Flexibility On Work and Family Life Balancegurvinder12No ratings yet
