International Journal of Computational Intelligence and Applications

World Scientific Publishing Company

INSTRUCTIONS FOR TYPESETTING MANUSCRIPTS

USING MSWORD

*

FIRST AUTHOR

University Department, University Name, Address,

City, State ZIP/Zone, Country

firstauthor_id@domain_name

SECOND AUTHOR

Group, Laboratory, Address,

City, State ZIP/Zone, Country

secondauthor_id@domain_name

Received Day Month Day

Revised Day Month Day

Clustering divides a data set into groups of similar objects with respect to a given similarity measure. It draws on techniques from statistics, pattern recognition, data mining and other fields. Projected clustering partitions the data set into several disjoint clusters plus outliers, so that each cluster exists in a subspace. Most clustering techniques are considerably inefficient in providing an objective function. To address attribute relevancy and redundancy by providing an objective function, we propose a new technique termed Multi cluster Dimensional Projection on Quantum Distribution (MDPQD). The technique evolves discrete dimensional projection clusters using the quantum distribution model and addresses the problems of attribute relevancy and redundancy. Analytical and empirical results show multi cluster formation based on the objective function and the evolution of dimensional projection clusters. The performance of MDPQD is measured in terms of the efficiency of multi cluster formation with respect to data set dimensionality, its accuracy compared with other algorithms, and the scalability of the quantum distribution.

Keywords: Dimensional projection, Discrete, Interference data, Quantum distribution model, Redundancy,

Relevancy attributes, Multi cluster.

1. INTRODUCTION

Clustering is a well-established data mining technique for a variety of applications. One reason for its popularity is its ability to work on datasets with little or no a priori knowledge, which makes clustering practical for real world applications. Recently, discrete dimensional data has attracted the interest of database researchers because of the new challenges it brings to the community. In discrete dimensional space, the distance from a report to its nearest neighbor can approach its distance to the farthest reports. In the context of clustering, this causes the distance between two reports of the same cluster to approach the distance between two reports of different clusters. Traditional clustering methods may therefore fail to identify the precise clusters, which motivates the quantum distribution model used in this work.
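The effect described above, where the nearest and the farthest neighbor of a report become almost equally distant as the number of dimensions grows, can be illustrated with a small experiment. The following is a minimal sketch and not part of the proposed method; it assumes uniformly random points and Euclidean distance, and the function name `nearest_farthest_ratio` is hypothetical.

```python
import math
import random


def nearest_farthest_ratio(dim, n_points=200, seed=0):
    """Return (nearest distance) / (farthest distance) from one query point.

    Assumption: points are drawn uniformly from the unit hypercube and
    compared with Euclidean distance; this is only an illustration.
    """
    rng = random.Random(seed)
    points = [[rng.random() for _ in range(dim)] for _ in range(n_points)]
    query, others = points[0], points[1:]
    dists = [math.dist(query, p) for p in others]
    return min(dists) / max(dists)


if __name__ == "__main__":
    for dim in (2, 20, 500):
        print(dim, round(nearest_farthest_ratio(dim), 3))
```

A ratio close to 1 means that the nearest and farthest reports are nearly indistinguishable by distance, which is the situation that motivates selecting a subspace for each cluster.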

Fuzzy techniques have been used to handle the vague boundaries of arbitrarily oriented clusters. However, traditional clustering algorithms tend to break down in high dimensional spaces because of the inherent sparsity of the data. Charu Puri and Naveen Kumar (2011) propose a modification to the function of the Gustafson-Kessel clustering algorithm for projected clustering and prove the convergence of the resulting algorithm. They present the results of applying the proposed projected Gustafson-Kessel clustering algorithm to synthetic and UCI data sets, and also suggest a way of extending it to a rough set based algorithm.

As in traditional clustering, the purpose of discrete dimensional projected clustering algorithms is to form clusters of the best possible quality. However, the traditional functions used to estimate cluster quality may not be appropriate in the projected case. The algorithms are consequently likely to select too few attribute values for each cluster, which may be inadequate for clustering the reports correctly. In some previous work on projected clustering (Mohamed Bouguessa and Shengrui Wang, 2009), clusters are evaluated using one or more of the following criteria:

- Space between the values of an attribute, which determines its relevance
- Number of selected attribute values in the cluster
- Number of member reports in the cluster

Intuitively, a small average space between attribute values in a cluster indicates that the member reports agree on a small range of values, which makes the reports easy to identify. A large number of selected attribute values indicates that the reports are similar in many discrete dimensions, so they are very likely to belong to the same real cluster. Finally, a large number of reports in the cluster indicates high support for the selected attribute values, and it is improbable that the small distances occur merely by chance.

All of these are indicators of high-quality multiple clusters, but there is a tradeoff between them. For a given set of reports, if only attribute values that keep the space among reports small are selected, fewer attributes will be selected. Similarly, for a given space requirement, placing more reports into a cluster will probably increase the average number of attribute values chosen.
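The three criteria listed above can be computed for a candidate cluster as in the following minimal sketch. It is an illustration under stated assumptions rather than the paper's formulation: the "space between values" is measured here as the mean absolute deviation from the attribute mean, since the text gives no formula, and the function name `cluster_quality` is hypothetical.

```python
from statistics import mean


def cluster_quality(cluster, selected):
    """Summarise a projected cluster by the three criteria listed above.

    cluster  -- list of reports, each a list of numeric attribute values
    selected -- indices of the attributes selected for this cluster
    Assumption: "space between values" is the mean absolute deviation
    from the attribute mean; the paper does not define it.
    """
    spreads = []
    for j in selected:
        values = [report[j] for report in cluster]
        centre = mean(values)
        spreads.append(mean(abs(v - centre) for v in values))
    return {
        "avg_spread": mean(spreads) if spreads else 0.0,
        "n_selected": len(selected),
        "n_reports": len(cluster),
    }
```

Comparing these three numbers for different choices of `selected` makes the tradeoff concrete: tightening the spread requirement changes how many attributes and reports a cluster can keep.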

It is important to point out that in this work we focus on Multi cluster Dimensional Projection on Quantum Distribution (MDPQD), which uses the quantum distribution model to determine a discrete dimensional clustering. This form of multi clustering differs from other clustering schemes. We therefore compare MDPQD with the partitioned distance-based projected clustering algorithm and the enhanced approach for projecting clustering, in terms of the efficiency of multi cluster formation with respect to data set dimensionality and in terms of accuracy. Analytical and empirical results show multi cluster formation based on the objective function and the evolution of dimensional projection clusters.

The rest of this paper is arranged as follows. Section 2 reviews related work. Section 3 describes the proposed method and its architecture diagram. Section 4 presents the experimental evaluation. Section 5 reports and discusses the results. Section 6 gives the conclusion.

2. LITERATURE REVIEW

Most existing clustering algorithms become substantially inefficient if the required similarity measure is computed between data points in the full-dimensional space. To address this problem, a number of projected clustering algorithms have been proposed (Mohamed Bouguessa and Shengrui Wang, 2009). However, most of them encounter difficulties when clusters hide in subspaces with very low dimensionality.

Satish Gajawada and Durga Toshniwal (2012) propose VINAYAKA, a semi-supervised projected clustering method based on differential evolution (DE). In this method, DE optimizes a hybrid cluster validation index, in which the Subspace Clustering Quality Estimate index (SCQE index) is used for internal cluster validation and Gini index gain is used for external cluster validation. The method is applied to the Wisconsin breast cancer dataset.

Hierarchical clustering is one of the most important tasks in data mining. However, existing hierarchical clustering algorithms are time-consuming and have low clustering quality because they ignore constraints. GuoYan Hang et al. (2009) propose a Hierarchical Clustering Algorithm based on K-means with Constraints (HCAKC).

B. Shanmugapriya and M. Punithavalli (2012) design an algorithm called Modified Projected K-Means Clustering Algorithm with Effective Distance Measure, which generalizes the K-Means algorithm with the objective of managing high dimensional data. Their experimental results indicate that the proposed algorithm is efficient, with better clustering accuracy and much lower execution time than the Standard K-Means and General K-Means algorithms.

The survey by Hans-Peter Kriegel et al. (2009) tries to clarify: (i) the different problem definitions related to subspace clustering in general; (ii) the specific difficulties encountered in this field of research; (iii) the varying assumptions, heuristics, and intuitions forming the basis of different approaches; and (iv) how several prominent solutions tackle different problems. Rahmat Widia Sembiring et al. (2010) report that PROCLUS performs better in terms of calculation time and produces the fewest unclustered data, while STATPC outperforms PROCLUS and P3C in the accuracy of both cluster points and relevant attributes found.

Inspired by recent developments in manifold learning and L1-regularized models for subset selection, Deng Cai, Chiyuan Zhang and Xiaofei He (2010) propose a new approach, called Multi-Cluster Feature Selection (MCFS), for unsupervised feature selection. Specifically, the approach selects those features such that the multi-cluster structure of the data can be best preserved. The corresponding optimization problem can be solved efficiently, since it only involves a sparse eigen-problem and an L1-regularized least squares problem.

Yun Yang and Ke Chen (2011) propose a weighted clustering ensemble algorithm that provides an effective enabling technique for the joint use of different representations. It reduces the information loss of a single representation and exploits various information sources underlying temporal data, but does not contain the extracted feature. Jung-Yi Jiang et al. (2011) have one extracted feature for each cluster. The extracted feature, corresponding to a cluster, is a weighted combination of the words contained in the cluster. With this algorithm, the derived membership functions match closely with, and describe properly, the real distribution of the training data.

Yanming Nie et al. (2012) employ a probabilistic algorithm to estimate the most likely location and containment for each object. Performing such online inference enables online compression that recognizes and removes redundant information from the output stream of this substrate. The authors implemented a prototype of their inference and compression substrate and evaluated it using both real traces from a laboratory warehouse setup and synthetic traces emulating enterprise supply chains.

To evolve discrete dimensional projection clusters, a new technique named Multi cluster Dimensional Projection on Quantum Distribution (MDPQD) is presented.

3. PROPOSED MULTI CLUSTER DIMENSIONAL PROJECTION USING

QUANTUM DISTRIBUTION MODEL

The proposed work projects clusters in discrete dimensions by adopting Multi cluster Dimensional Projection on Quantum Distribution (MDPQD). MDPQD proceeds through input, intermediate and output processes:

- The input unit takes the Habitats of Human data based on Socio Democratic Cultures (HHSD) dataset.
- The objective functions are chosen to reach the precise goal by addressing the relevancy and redundancy of attributes.
- The quantum distribution technique is used to discover a model without attribute relevancy and redundancy problems.
- The activity in the proposed model is multi cluster formation using the different sets of attribute values.
- The output unit contains the discrete dimensional projection clusters.

The architecture diagram of the proposed Multi cluster Dimensional Projection

on Quantum Distribution (MDPQD) is shown in Fig 3.1.

Fig 3.1: Proposed Multi cluster Dimensional Projection on Quantum Distribution

(MDPQD) process

[Fig 3.1 block diagram; box labels in order of appearance: Habitats of Human data based on Socio Democratic Cultures (HHSD) Dataset; Data Collection; INPUT; Objective Function; DISCOVERY; Using Quantum Distribution Model; TRACKING ACTIVITIES; Multi cluster formation with attribute value; OUTPUT; Precise Dimensional Projection Clusters; CAUSE]

In generating the HHSD dataset shown in the figure above, the size of each cluster and the domain of each attribute were first determined arbitrarily. The dataset lists different types of attributes, i.e. food habits, culture, weather conditions and business conditions. Values are assigned to the attributes depending on the environment, and similar attribute values are grouped together to form the clusters. Each cluster is then precise in selecting its attribute values, and multiple clusters are formed for the different types of attributes. For each attribute value of a cluster, a local mean was chosen from the domain. Each report in the multi cluster determines whether to follow the precise attribute values according to the data error rate.

Quantum distribution is optimized under given constraints, with variables that need to be minimized or maximized using programming techniques. An objective function can be the result of an effort to express a business goal in mathematical terms for use in decision analysis and optimization studies. Applications frequently require the QD model to be approximated by a simpler form, so that an available computational objective function approach can be used. An a priori bound is derived on the amount of error that such an approximation can introduce. This leads to a natural criterion for selecting the most precise attribute value.

3.1 Objective Function using QDM

We assume that the Habitats of Human data based on Socio Democratic Cultures (HHSD) dataset consists of a set of incoming reports denoted by $Y_1, \ldots, Y_i, \ldots$. The data point $Y_i$ is assumed to be received at time stamp $S_i$. The discrete dimensionality of the HHSD data set is assumed to be $h$, and the $h$ dimensions of the report $Y_i$ are denoted by $(y_i^1, \ldots, y_i^h)$. In addition, each data point has an error associated with the different dimensions. The error associated with the $k$th dimension for data point $Y_i$ is denoted by $\psi_k(Y_i)$.

We note that many uncertain data mining algorithms use the probability density function to characterize the underlying behavior. Here, we make the more modest assumption that only error variances are available. Since the different dimensions of the data may reflect different quantities, they may correspond to very different scales. In order to take the precise behavior of the different discrete dimensions into account, we need to perform quantum distribution across the different discrete dimensions. This is done by maintaining global statistics, which are used to compute global variances. These variances are used to scale the data values over time.
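The scaling step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the "global statistics" are taken to be the running count, sum and sum of squares per dimension, and the class name `GlobalStats` and its methods are hypothetical.

```python
class GlobalStats:
    """Running per-dimension statistics for scaling a stream of reports.

    Assumption: the global statistics are the count, sum and sum of
    squares per dimension; the text does not specify them further.
    """

    def __init__(self, dims):
        self.n = 0
        self.total = [0.0] * dims
        self.total_sq = [0.0] * dims

    def update(self, report):
        """Fold one incoming report into the running statistics."""
        self.n += 1
        for k, value in enumerate(report):
            self.total[k] += value
            self.total_sq[k] += value * value

    def variance(self, k):
        """Global (population) variance of dimension k seen so far."""
        mean_k = self.total[k] / self.n
        return max(self.total_sq[k] / self.n - mean_k * mean_k, 0.0)

    def scale(self, report):
        """Divide each dimension by its global standard deviation."""
        scaled = []
        for k, value in enumerate(report):
            std = self.variance(k) ** 0.5
            scaled.append(value / std if std > 0 else value)
        return scaled
```

After scaling, dimensions that were recorded on very different scales contribute comparably to any distance or variance computed later.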

To give greater importance to recent data points in an evolving stream, we use an objective function f(s), which quantifies the relative importance of the different data points over time. The objective function takes values in the range (0, 1) and serves as a scaling factor for the relative importance of a given data point. It is a decreasing function and represents the decay in importance of a data point over time.

A commonly used objective function is the exponential objective function. The exponential objective function $f(s)$ with parameter $\lambda$ is defined as

$$f(s) = 2^{-\lambda s}. \qquad (1)$$

The value of $f(s)$ reduces by a factor of 2 every $1/\lambda$ time units, which corresponds to the half-life of the function $f(s)$.
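The half-life property stated above can be checked numerically. The sketch below assumes the exponential form with base 2 implied by the text, in which the value halves once every reciprocal of the parameter; the parameter is written `lam` here, and both function names are hypothetical.

```python
def decay(s, lam):
    """Exponential objective (decay) function f(s) = 2 ** (-lam * s)."""
    return 2.0 ** (-lam * s)


def half_life(lam):
    """Time after which f(s) drops to half its value: 1 / lam."""
    return 1.0 / lam


if __name__ == "__main__":
    lam = 0.25
    s = 3.0
    # The ratio below is 0.5 for any s, up to floating-point rounding.
    print(decay(s + half_life(lam), lam) / decay(s, lam))
```

A larger `lam` gives a shorter half-life, so older data points lose their importance more quickly.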

3.2 Dimension Projection Clustering process

By design, the discrete dimensional projection clusters do not depend on user parameters in determining the attribute values of each cluster. The method strives to maximize the quantum index of each selected attribute value and the number of selected attributes of each cluster at the same time. As discussed previously, a dimensional projected cluster of high quality maximizes accuracy by eliminating redundancy. It is the merging process of multi clustering that allows us to implement a dynamic threshold adjustment scheme that maximizes both criteria simultaneously.

There are two thresholds in the dimensional projection, $B_{\min}$ and $Q_{\min}$, which restrict the minimum number of selected attributes for each cluster and the minimum quantum index of those attributes, respectively. An attribute value is selected by a cluster if and only if its quantum index with respect to the cluster is not less than $Q_{\min}$.

Under this MDPQD scheme, if an attribute value is not selected by either of two clusters, it will not be selected by the new cluster formed by merging them. However, if an attribute value is selected by only one of the clusters, whether the merged cluster selects it depends on the variance of the mixed set of values at that attribute. Two clusters are allowed to merge if and only if the resulting cluster has at least $B_{\min}$ selected attributes.
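The selection and merge rules above can be sketched in a few lines. The text does not define the quantum index, so the sketch below substitutes a stand-in (one minus the ratio of local to global variance) purely so that the two threshold tests can be demonstrated; that stand-in and the names `quantum_index`, `select_attributes` and `can_merge` are assumptions, not the paper's definitions.

```python
from statistics import pvariance


def quantum_index(cluster, j, global_var):
    """Stand-in index for attribute j: 1 - local variance / global variance.

    Assumption: the text does not define the quantum index; this ratio is
    used here only so that the selection rule can be demonstrated.
    """
    local_var = pvariance([report[j] for report in cluster])
    return 1.0 - local_var / global_var[j]


def select_attributes(cluster, global_var, q_min):
    """Select attribute j if and only if its index is not less than q_min."""
    return [j for j in range(len(global_var))
            if quantum_index(cluster, j, global_var) >= q_min]


def can_merge(c1, c2, global_var, q_min, b_min):
    """Allow a merge only if the merged cluster keeps >= b_min attributes."""
    merged = c1 + c2
    return len(select_attributes(merged, global_var, q_min)) >= b_min
```

With this stand-in, an attribute whose values agree closely inside the cluster scores near 1, and an attribute that varies as much as the whole data set scores near 0.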

Initially, both thresholds are set at the highest possible values, so that all allowed merges are very likely to involve reports from the same real cluster. At some point, there will be no more qualified merges with the current threshold values. This signals the algorithm to relax the thresholds and begin a new round of merging. The process repeats until no more merging is possible, or until a target number of clusters is reached. By dynamically adjusting the threshold values in response to the merging process, the number and the relevance of the selected attributes are both maximized.

3.2.1 Algorithm for Multi cluster Dimensional Projection on Quantum

Distribution (MDPQD)

The steps to be performed are described below.

h: dimensionality of the Habitats of Human data based on Socio Democratic Cultures (HHSD) dataset
B_min: minimum number of selected attribute values per cluster
Q_min: minimum quantum index of a selected attribute
k: k-th dimension of the dataset

Begin
Step 1: Place each report in a cluster
Step 2: For pace := 0 to h-1 do
        {
Step 3:   B_min := h - pace
Step 4:   Q_min := 1 - pace / (h - 1)
Step 5:   Foreach cluster C
Step 6:     SelectAttrisVal(C, Q_min)
Step 7:   BuildQDmodel(B_min, Q_min)
Step 8:   While Quantizeresult
          {
Step 9:     MC1 and MC2 are multiple clusters formed with objfunc
Step 10:    Various attribute values which form the new cluster Cn
Step 11:    Cn := MC1 ∪ MC2 ∪ ... ∪ MCn
Step 12:    SelectAttrisVal(Cn, Q_min)
Step 13:    Update Quantizeresult
Step 14:    If clusters remained = k
Step 15:      Goto 16
          }
        }
Step 16: Output result
End
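The overall control flow of the steps above, in which both thresholds start at their strictest values and are relaxed once per pace while allowed merges are applied, can be sketched as follows. This is an illustration under loudly stated assumptions, not the authors' implementation: the quantum index is replaced by a variance-ratio stand-in, the merge score is taken to be the sum of the selected indices, each report starts as its own cluster, and `k` is used as a target number of clusters. The names `selected` and `mdpqd_sketch` are hypothetical.

```python
from itertools import combinations
from statistics import pvariance


def selected(cluster, gvar, q_min):
    """Map attribute index -> stand-in quantum index, keeping those >= q_min.

    Assumption: index = 1 - local variance / global variance (not from the
    paper, which leaves the quantum index undefined).
    """
    picked = {}
    for j, g in enumerate(gvar):
        idx = 1.0 - pvariance([r[j] for r in cluster]) / g
        if idx >= q_min:
            picked[j] = idx
    return picked


def mdpqd_sketch(reports, k):
    """Merge clusters while relaxing B_min and Q_min once per pace.

    Assumes at least two attributes, each with non-zero global variance.
    """
    h = len(reports[0])
    gvar = [pvariance([r[j] for r in reports]) for j in range(h)]
    clusters = [[r] for r in reports]                 # Step 1
    for pace in range(h):                             # Step 2
        b_min = h - pace                              # Step 3
        q_min = 1.0 - pace / (h - 1)                  # Step 4
        while len(clusters) > k:                      # Steps 8-15
            best = None
            for a, b in combinations(range(len(clusters)), 2):
                picked = selected(clusters[a] + clusters[b], gvar, q_min)
                score = sum(picked.values())          # assumed merge score
                if len(picked) >= b_min and (best is None or score > best[0]):
                    best = (score, a, b)
            if best is None:                          # thresholds too strict
                break
            _, a, b = best
            merged = clusters[a] + clusters[b]
            clusters = [c for i, c in enumerate(clusters) if i not in (a, b)]
            clusters.append(merged)
        if len(clusters) <= k:                        # Step 14
            break
    return clusters                                   # Step 16
```

The pairwise search is quadratic in the number of clusters per merge, which is acceptable for a sketch; a cache of merge scores, as suggested by the BuildQDmodel and Update steps, would avoid recomputing unchanged pairs.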

To form the multiple clusters, each cluster keeps an objective function that scores the attribute values between it and each other cluster, and the best score is propagated. After calculating the attribute values of all other clusters, the information of the best cluster is extracted from the dataset using the quantum distribution model. The entries involving the two clusters with the same attribute value are removed, and the values between the new cluster and the remaining clusters are inserted. The process repeats until no more merges are possible, and a new clustering step then begins, achieving the multi clustering concept.

4. EXPERIMENTAL EVALUATION

In the habitats of human data based on social and demographic culture (HHSD) dataset, clusters can be formed in discrete dimensions. Only a subset of the attributes is precise to each cluster, and each cluster can have a different set of precise attribute values. An attribute is precise to a cluster if it helps identify the member reports of that cluster. This means that the values of the precise attributes are distributed around some specific values in the cluster, while the reports of other clusters are less likely to have such values. Determining the multi clusters and their precise attribute values from the HHSD dataset is known as discrete dimensional projected clustering.

For each cluster, a discrete dimensional projected clustering algorithm determines a set of attributes that is assumed to be more precise to the users. Discrete dimensional projected clustering is potentially useful for grouping the clusters and forming multiple clusters using attribute values. In these datasets, the habitat levels of different human beings are taken as samples and recorded. We can view the cultural levels of different people as attributes of the samples, or view the samples as attributes of people of different cultures.

Clustering can be performed on the attribute values of each sample. A set of precise attribute values might be co-expressed in only some samples. Alternatively, a set of samples may have only some of the culture habits co-expressed. Identifying the precise attributes using the objective function may help to improve the multi clustering objective. The selected attributes may also suggest a smaller set of habitats for researchers to focus on, possibly reducing the effort spent on expensive natural experiments. In this section, we develop a series of experiments designed to estimate the correctness of the proposed algorithm in terms of

i) Comparison of accuracy,

ii) Execution Time,

iii) Multi cluster formation efficiency.

5. RESULTS AND DISCUSSION

In this work we have seen how clusters are projected in discrete dimensional projected spaces. The tables and graphs below describe the performance of the proposed Multi cluster Dimensional Projection on Quantum Distribution (MDPQD). We compared MDPQD against the partitioned distance-based projected clustering algorithm (PCKA) and the Enhanced Approach for Projecting Clustering (EAPC) in terms of accuracy.

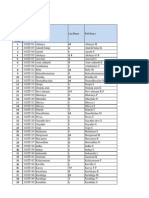

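Data Accuracy (%)

| No. of clusters | Proposed MDPQD | EAPC method | Existing PCKA |
|---|---|---|---|
| 10 | 90 | 82 | 65 |
| 20 | 89 | 83 | 68 |
| 30 | 88 | 85 | 69 |
| 40 | 86 | 81 | 70 |
| 50 | 89 | 79 | 69 |
| 60 | 92 | 78 | 71 |
| 70 | 93 | 80 | 73 |
| 80 | 94 | 81 | 75 |
| 90 | 95 | 82 | 75 |
| 100 | 95 | 83 | 74 |

Table 5.1 No. of clusters vs. Data Accuracy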

Table 5.1 describes the average data accuracy based on the number of clusters formed with respect to the HHSD dataset. The accuracy of the proposed Multi cluster Dimensional Projection on Quantum Distribution (MDPQD) is compared with the existing partitioned distance-based projected clustering algorithm (PCKA) and the Enhanced Approach for Projecting Clustering (EAPC).

Fig 5.1 No. of clusters vs. Data Accuracy

Fig 5.1 describes the average data accuracy based on the number of clusters partitioned with respect to the dataset. This set of experiments examines the accuracy of the Multi cluster Dimensional Projection on Quantum Distribution algorithm. MDPQD achieves highly precise results and its performance is generally consistent. As Fig. 5.1 shows, MDPQD is more scalable and more accurate in cluster formation than the existing EAPC and PCKA algorithms; the average cluster accuracy is low only for EAPC and PCKA, which give unsatisfactory results. The experiments showed that the proposed MDPQD algorithm efficiently and precisely identifies the clusters and their dimensions using the objective function in a variety of situations.

MDPQD avoids choosing inappropriate dimensions in all the data sets used for the experiments. This is because MDPQD starts by detecting all the regions and their positions in every dimension, which allows it to control the calculation of the discrete dimensions. Compared with the existing PCKA and EAPC, the proposed MDPQD achieved better accuracy, with the difference reported as 30-40% higher.
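As a concrete reading of the accuracy comparison, the mean gap between methods can be computed directly from the values in Table 5.1. The sketch below uses only the table data; the helper name `mean_gap` is hypothetical, and averaging over the ten cluster counts is an assumption about how the comparison is summarized.

```python
from statistics import mean

# Data Accuracy (%) for 10, 20, ..., 100 clusters, copied from Table 5.1.
MDPQD = [90, 89, 88, 86, 89, 92, 93, 94, 95, 95]
EAPC = [82, 83, 85, 81, 79, 78, 80, 81, 82, 83]
PCKA = [65, 68, 69, 70, 69, 71, 73, 75, 75, 74]


def mean_gap(ours, baseline):
    """Return (gap in percentage points, gap relative to baseline in %)."""
    points = mean(ours) - mean(baseline)
    return points, 100.0 * points / mean(baseline)


if __name__ == "__main__":
    print("vs EAPC:", mean_gap(MDPQD, EAPC))
    print("vs PCKA:", mean_gap(MDPQD, PCKA))
```

On the table values, the mean accuracy of MDPQD is 91.1%, which is about 9.7 percentage points above EAPC (roughly 11.9% relative) and about 20.2 points above PCKA (roughly 28.5% relative).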

[Fig 5.1 plot: x-axis No. of clusters (10 to 100); y-axis Data Accuracy (%), 0 to 100; series Proposed MDPQD, EAPC method, Existing PCKA]

Execution Time (sec)

| Discrete Data Dimensionality | Proposed MDPQD | EAPC method | Existing PCKA |
|---|---|---|---|
| 50 | 2 | 8 | 4 |
| 100 | 3 | 11 | 7 |
| 150 | 5 | 14 | 11 |
| 200 | 8 | 18 | 12 |
| 250 | 11 | 22 | 14 |
| 300 | 12 | 24 | 16 |
| 350 | 14 | 25 | 17 |
| 400 | 17 | 32 | 25 |
| 450 | 20 | 45 | 33 |
| 500 | 22 | 49 | 45 |

Table 5.2 Discrete Data Dimensionality vs. Execution Time

Table 5.2 describes the execution time based on the discrete data dimensionality with respect to the HHSD dataset. The execution time of the proposed Multi cluster Dimensional Projection on Quantum Distribution (MDPQD) is compared with the existing partitioned distance-based projected clustering algorithm (PCKA) and the Enhanced Approach for Projecting Clustering (EAPC).

Fig 5.2 Discrete Data Dimensionality vs. Execution Time

[Fig 5.2 plot: x-axis Discrete Data Dimensionality (50 to 500); y-axis Execution Time (sec), 0 to 60; series Proposed MDPQD, EAPC method, Existing PCKA]

Fig 5.2 describes the execution time based on the discrete data dimensionality with respect to the HHSD dataset. As observed from Fig 5.2, MDPQD exhibits reliable performance from the first set of experiments, with lower execution time. In difficult cases, MDPQD gives much better results than the existing EAPC and PCKA methods. The results in Fig. 5.2 suggest that the proposed MDPQD is more responsive to the proportion of data sets with respect to the execution time parameter.

Fig 5.2 describes the time consumed to perform the dimensional projection of clusters based on the quantum distribution model. The execution time of the proposed MDPQD scales linearly with the increase in data dimensionality. As in the scalability experiments with respect to data set size, the execution time of MDPQD is generally better than that of EAPC and PCKA, even when the time required to project the clusters in discrete dimensionality over regular runs is included.

The time consumption is measured in seconds. Compared with the existing PCKA and EAPC, the proposed MDPQD consumes less time while giving better cluster dimensionality results; its time consumption is approximately 30-40% lower.
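The statement that execution time grows linearly with dimensionality can be checked against Table 5.2 with an ordinary least-squares fit. The sketch below uses only the MDPQD column of the table; the helper name `linear_fit` is hypothetical and the fit is an illustration, not an analysis performed in the paper.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares fit y = a + b*x; returns (a, b, r_squared)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope, (sxy * sxy) / (sxx * syy)


# Table 5.2: discrete data dimensionality vs. execution time (sec) of MDPQD.
DIMS = [50, 100, 150, 200, 250, 300, 350, 400, 450, 500]
MDPQD_TIME = [2, 3, 5, 8, 11, 12, 14, 17, 20, 22]

if __name__ == "__main__":
    intercept, slope, r2 = linear_fit(DIMS, MDPQD_TIME)
    print(round(intercept, 3), round(slope, 4), round(r2, 4))
```

On the table values the fitted slope is about 0.046 seconds per added dimension with a coefficient of determination of about 0.99, which is consistent with roughly linear growth over the measured range.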

Multi Cluster formation Efficiency (%)

| Dataset Dimensionality | Proposed MDPQD | EAPC method | Existing PCKA |
|---|---|---|---|
| 100 | 90 | 76 | 70 |
| 200 | 91 | 78 | 71 |
| 300 | 92 | 79 | 73 |
| 400 | 93 | 80 | 75 |
| 500 | 95 | 81 | 76 |
| 600 | 96 | 82 | 77 |
| 700 | 97 | 83 | 78 |
| 800 | 98 | 85 | 80 |
| 900 | 95 | 86 | 81 |
| 1000 | 96 | 87 | 82 |

Table 5.3 Dataset Dimensionality vs. Multi Cluster formation Efficiency

Table 5.3 describes the multi cluster formation efficiency with respect to the dataset dimensionality. The multi cluster formation efficiency of the proposed Multi cluster Dimensional Projection on Quantum Distribution (MDPQD) is compared with the existing partitioned distance-based projected clustering algorithm (PCKA) and the Enhanced Approach for Projecting Clustering (EAPC).

Fig 5.3 Dataset Dimensionality vs. Multi Cluster formation Efficiency

[Fig 5.3 plot: x-axis Dataset Dimensionality; y-axis Multi Cluster formation Efficiency (%); series Proposed MDPQD, EAPC method, Existing PCKA]

Fig 5.3 describes multi cluster formation with the help of the objective function, which forms clusters with the precise attribute values. Different types of attributes with different sets of values are used to achieve a multi cluster. Compared with the existing EAPC and PCKA, the proposed MDPQD provides more efficient multi cluster formation, with efficiency approximately 20-25% higher.
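As a worked check on the efficiency comparison, the mean efficiency of each method over the ten dimensionalities in Table 5.3 and the resulting gaps can be written out. This arithmetic is added for illustration and uses only the table values; averaging over the ten rows is an assumption about how the comparison is summarized.

```latex
\begin{align*}
\bar{E}_{\mathrm{MDPQD}} &= \tfrac{1}{10}(90+91+92+93+95+96+97+98+95+96) = 94.3,\\
\bar{E}_{\mathrm{EAPC}}  &= \tfrac{1}{10}(76+78+79+80+81+82+83+85+86+87) = 81.7,\\
\bar{E}_{\mathrm{PCKA}}  &= \tfrac{1}{10}(70+71+73+75+76+77+78+80+81+82) = 76.3,\\
\bar{E}_{\mathrm{MDPQD}} - \bar{E}_{\mathrm{EAPC}} &= 12.6 \quad (\approx 15.4\%\ \text{relative to EAPC}),\\
\bar{E}_{\mathrm{MDPQD}} - \bar{E}_{\mathrm{PCKA}} &= 18.0 \quad (\approx 23.6\%\ \text{relative to PCKA}).
\end{align*}
```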

6. CONCLUSION

In this work, we achieve multi clustering on the Habitats of Human data based on Socio Democratic Cultures (HHSD) dataset by introducing the proposed Multi cluster Dimensional Projection on Quantum Distribution (MDPQD) model. The proposed scheme uses the quantum distribution model to analyze the data and to rectify the redundancy that occurs in the attribute values of the dataset. We compared MDPQD with the partitioned distance-based projected clustering algorithm and the Enhanced Approach for Projecting Clustering in terms of accuracy and multi cluster formation efficiency. Our experimental evaluation showed that dimensional projection clustering with the objective function performs considerably better, especially in multi clustering. The experimental results showed that the proposed MDPQD scheme worked efficiently for the sensitive data attributes, improving scalability by 25-35% with less execution time. We show that the use of an objective function in the computations can significantly improve the quality of the underlying results.

1. Major Headings

Major headings should be typeset in boldface with the first letter of important words

capitalized.

1.1. Sub-headings

Sub-headings should be typeset in boldface italic and capitalize the first letter of the first

word only. Section number to be in boldface roman.

1.1.1. Sub-subheadings

Typeset sub-subheadings in medium face italic and capitalize the first letter of the first

word only. Section numbers to be in roman.

1.2. Numbering and spacing

Sections, sub-sections and sub-subsections are numbered in Arabic. Use double spacing

before all section headings, and single spacing after section headings. Flush left all

paragraphs that follow after section headings.

1.3. Lists of items

Lists may be laid out with each item marked by a dot:

- item one,

- item two,

- item three.

Alternatively items may also be numbered in lowercase Roman numerals:

(i) item one,

(ii) item two,

(a) lists within lists can be numbered with lowercase alphabets,

(b) second item.

(iii) item three,

(iv) item four.

or

(1) item one,

(2) item two,

(a) lists within lists can be numbered with lowercase alphabets,

(b) second item.

(3) item three,

(4) item four.

2. Equations

Displayed equations should be numbered consecutively in the paper, with the number set

flush right and enclosed in parentheses.

$$\mu(n,t) = \frac{\sum_{i=1}^{\infty} 1(d_i < t,\ N(d_i) = n)}{\int_{\sigma=0}^{t} 1(N(\sigma) = n)\,d\sigma}. \qquad (1)$$

Equations should be referred to in abbreviated form, e.g. Eq. (1) or (2). In multiple-

line equations, the number should be given on the last line.

Displayed equations are to be centered on the page width. Standard English letters

like x are to appear as x (italicized) in the text if they are used as mathematical symbols.

Punctuation marks are used at the end of equations as if they appeared directly in the text.

Theorem 3.1. Theorems, lemmas, etc. are to be numbered consecutively in the paper.

Use double spacing before and after theorems, lemmas, etc.

Proof. Proofs should end with a box (□).

3. Illustrations and Photographs

Figures are to be inserted in the text nearest their first reference. Please send one set of

originals with copies. If the author requires the publisher to reduce the figures, ensure that

the figures (including letterings and numbers) are large enough to be clearly seen after

reduction. If photographs are to be used, only black and white ones are acceptable.

Figures are to be sequentially numbered in Arabic numerals. The caption must be

placed below the figure. Typeset in 8 pt Times Roman with line spacing of 10 pt. Use

double spacing between a caption and the text that follows immediately.


Previously published material must be accompanied by written permission from the

author and publisher.

4. Tables

Tables should be inserted in the text as close to the point of reference as possible. Some

space should be left above and below the table. Tables should be numbered sequentially

in the text in Arabic numerals. Captions are to be centered above the tables. Typeset tables and captions in 8 pt Times Roman with line spacing of 10 pt.

Table 1. This is the caption for the table. If the caption is less than one line then it is centered. Long captions are justified to the table width manually.

Schedule                          Capacity                          Level
Business plan                     Financial planningᵃ               Planning
Production planning               Resource requirement plan (RRP)
Final assembly schedule           Capacity control
Master production schedule (MPS)  Rough cut capacity plan (RCCP)
Stock picking schedule            Inventory control
Order priorities                  Factory order control             Execution
Scheduling                        Machine (work-centre) control
Operation sequencing              Tool controlᵇ

Note: Table notes.
ᵃ Table footnote A.
ᵇ Table footnote B.

If tables need to extend over to a second page, the continuation of the table should be

preceded by a caption, e.g., Table 1 (Continued).

Fig. 1. This is the caption for the figure. If the caption is less than one line then it needs to be manually centered.

5. Citation

Reference citations in the text are to be numbered consecutively in Arabic numerals, in the order of first appearance. They are to be typed in superscripts after punctuation marks, e.g.

(1) in the statement.¹

(2) have proven² that this equation

When the reference forms part of the sentence, then they are typed as:

(1) One can deduce from Ref. 3 that

(2) See Refs. 1–3, 5 and 7 for more details.
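The two citation rules above (number references in order of first appearance, and group several numbers in an in-sentence citation) can be sketched in a few lines of Python. This is only an illustrative sketch, not part of the journal's tooling: the function names `number_by_first_appearance` and `format_refs` and the citation keys are hypothetical, and collapsing three or more consecutive numbers into an en-dash range is an assumption about the intended style.

```python
def number_by_first_appearance(keys):
    """Map each citation key to a number, in order of first appearance."""
    numbering = {}
    for key in keys:
        # A key gets the next free number only the first time it is seen.
        numbering.setdefault(key, len(numbering) + 1)
    return numbering


def format_refs(numbers):
    """Format reference numbers for use inside a sentence, e.g. 'Ref. 3'."""
    nums = sorted(set(numbers))
    if not nums:
        raise ValueError("at least one reference number is required")

    # Group consecutive numbers into [start, end] runs.
    runs = []
    for n in nums:
        if runs and n == runs[-1][1] + 1:
            runs[-1][1] = n
        else:
            runs.append([n, n])

    parts = []
    for start, end in runs:
        if end - start >= 2:
            # Assumption: three or more consecutive numbers become a range.
            parts.append(f"{start}\u2013{end}")
        else:
            parts.extend(str(k) for k in range(start, end + 1))

    label = "Ref." if len(nums) == 1 else "Refs."
    if len(parts) == 1:
        return f"{label} {parts[0]}"
    return f"{label} {', '.join(parts[:-1])} and {parts[-1]}"


if __name__ == "__main__":
    # Hypothetical citation keys in the order they occur in the text.
    print(number_by_first_appearance(["tinkham", "joliat", "tinkham", "lorentz"]))
    print(format_refs([1, 2, 3, 5, 7]))
```

Keeping the numbering step separate from the formatting step means the same numbering can feed both the superscript citations and the in-sentence "Ref." form.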

6. Running Heads

Please provide a shortened running head (not more than eight words) for the title of your

paper.

7. Footnotes

Footnotes should be numbered sequentially in superscript lowercase Roman letters.ᵃ

Acknowledgments

This section should come before the References. Funding information may also be

included here.

Appendix A. This is the Appendix

Appendices should be used only when sophisticated technical details must be included in the paper.

A.1. This is the subappendix

They should come before the References. Number displayed equations occurring in the

Appendix in this way, e.g., (A.1), (A.2), etc.

\[
\frac{S_R(f)}{R^2} \sim \frac{\alpha_H}{n V_{\mathrm{eff}}}\, \frac{1}{f^{k}}. \tag{A.1}
\]

A.1.1. Sub-subappendix

This is the sub-subappendix.

Appendix B. Another Appendix

If there is more than one appendix, number them alphabetically.

ᵃ Footnotes should be typeset in 8 pt Times Roman at the bottom of the page.


\[
\mu(n,t) = \frac{\sum_{i=1}^{\infty} 1\bigl(d_i < t,\ N(d_i) = n\bigr)}{\int_{\sigma=0}^{t} 1\bigl(N(\sigma) = n\bigr)\, d\sigma}. \tag{B.1}
\]

References

The references section should be labeled References and should appear at the end of

the paper. References are to be listed alphabetically with Arabic numerals. For journal

names, use the standard abbreviations. Typeset references in 9 pt Times Roman. Use the

style shown in the following examples.

Journal paper

1. R. Lorentz and D. B. Benson, Deterministic and nondeterministic flow-chart interpretations, J. Comput. Syst. Sci. 27 (1983) 400–433.

2. M. Joliat, A simple technique for partial elimination of unit productions from LR(k) parsers, IEEE Trans. Comput. 27 (1976) 753–764.

Authored book

3. M. Tinkham, Group Theory and Quantum Mechanics (McGraw-Hill, New York, 1964).

4. V. F. Kiselev and O. V. Krilov, Electron Phenomenon in Adsorption and Catalysis on Semiconductors and Dielectrics (Nauka, Moscow, 1979) (in Russian).

Edited book

5. T. Tél, in Experimental Study and Characterization of Chaos, ed. Hao Bailin (World Scientific, Singapore, 1990), p. 149.

6. J. K. Srivastava, S. C. Bhargava, P. K. Iyengar and B. V. Thosar, in Advances in Mössbauer Spectroscopy: Applications to Physics, Chemistry and Biology, eds. B. V. Thosar, P. K. Iyengar, J. K. Srivastava and S. C. Bhargava (Elsevier, Amsterdam, 1983), pp. 39, 89.

Proceedings

7. A. N. Kolmogorov, Théorie générale des systèmes dynamiques et mécanique classique, in Proc. Int. Congr. Mathematicians, Amsterdam, 1954, Vol. I (North-Holland, Amsterdam, 1957), pp. 315–333.

