SELF LEARNING REAL TIME EXPERT SYSTEM

Latha B. Kaimal1, Abhir Raj Metkar2, and Rakesh G3

1,2,3 Control & Instrumentation Group, C-DAC, Thiruvananthapuram

1 latha@cdac.in, 2 ametkar@cdac.in, 3 rakeshg@cdac.in

ABSTRACT

In a power plant with a Distributed Control System (DCS), process parameters are continuously stored in databases at discrete intervals. The data in these databases may not appear to contain valuable relational information, but in practice such relations exist. A large number of process parameter values change with time in a power plant, and these parameters are part of the rules framed by domain experts for the expert system. As the parameters change, there is a high possibility of forming new rules from the dynamics of the process itself. We present an efficient algorithm that generates all significant rules from real plant data. Association-based algorithms were compared and the algorithm best suited to this process application was selected. The application of the learning system is studied in the power plant domain, and a SCADA interface was developed to acquire online plant data.

KEYWORDS

Machine learning, Data Mining, Root cause analysis, Inference Engine, Tertius algorithm

1. INTRODUCTION

Machine learning is a scientific discipline concerned with the design and development of algorithms that allow computers to evolve behaviors based on empirical data, such as sensor data or databases. A major focus of machine learning research is to automatically learn to recognize complex patterns and make intelligent decisions based on data. The difficulty lies in the fact that the set of all possible behaviors given all possible inputs is too large to be covered by the set of observed examples (training data). Hence the learner must generalize from the given examples so as to produce a useful output in new cases [1][2].

A Knowledge Based System (KBS) has an important role to play, particularly in fault diagnosis of process plants, which involves many challenges ranging from commonly occurring malfunctions to rarely occurring emergency situations. Machine learning also covers the discovery of new knowledge from large databases (data mining). The field is concerned with methods that can automatically learn from experience, which is usually given in the form of learning examples from which a model is learned. Sometimes it is very important that the learned model can be read by humans, and one of the most understandable types of model is the rule set [3][4].

Expert systems are Knowledge-Based Systems in which the Knowledge Base (KB) holds information and logical rules for performing inference between facts. The KBS approach is promising because it captures the efficient problem-solving of experts, guides the human operator in rapid fault detection, explains the line of reasoning to the human operator, and supports modification and refinement of the process knowledge as experience is gained.

The Real Time Expert System Shell is a software development environment containing the basic components of expert systems. The Shell Toolkit can be used to develop intelligent systems for diagnosis, root cause analysis and operator guidance in process plants. When enhanced with a Learning Engine, the Expert System updates the KB with newly learned rules and thus improves the efficiency of the system in root cause analysis of faults.

Jan Zizka (Eds) : CCSIT, SIPP, AISC, PDCTA - 2013, pp. 361-372, 2013. CS & IT-CSCP 2013. DOI : 10.5121/csit.2013.3641

Computer Science & Information Technology (CS & IT)

Figure 1: Expert System Components

2. KNOWLEDGE REPRESENTATION

Knowledge Base (KB): The system uses a hybrid Knowledge Base whose main components are Meta Rules, Rules and Frames. The Knowledge Base is built with domain-specific information using a web-based, user-friendly Knowledge Base Editor (KBE) GUI, through which the domain expert enters the rules of an application. Rules and other application-specific attributes are represented using Frames and Production Rules. This information is used to interpret and diagnose real-time data received from controllers and sensors. The structures used to store knowledge are:

Meta-rule: Structure - If Complaint Then Hypothesis. Meta-rules are the supervisory rules that categorize sub-rules into broad areas or nodes. Meta-rules decide which domain rules should be applied next and in what order they should be applied.

Rules: Structure - If Condition Then Action. Rules are the basic components of the Knowledge Base. The condition and action parts of a rule are defined as parameters, and each part can have more than one parameter separated by logical operators. The parameters are specific data variables, which are configurable by the user.

Frames: Frames are general data structures holding the static information about any equipment or unit.
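The three knowledge structures described above could be modeled roughly as follows. This is only a minimal sketch in Python (the system itself is reported to be written in Java and Flex); the class names, field names, certainty factor values and frame slots are all hypothetical, and the rule content is borrowed from the MS header rules listed in Section 5.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Condition:
    """One parameter test, e.g. AI-3 = LO."""
    parameter: str
    value: str


@dataclass
class MetaRule:
    """If Complaint Then Hypothesis: maps an alarm to candidate hypotheses."""
    complaint: Condition
    # Hypothesis label -> certainty factor (CF). The CF values are made up.
    hypotheses: Dict[str, float]


@dataclass
class Rule:
    """If Condition Then Action: conditions joined by one logical operator."""
    conditions: List[Condition]
    operator: str  # "OR" or "AND"
    action: Condition


@dataclass
class Frame:
    """Static information about an equipment or unit, stored as slots."""
    name: str
    slots: Dict[str, str] = field(default_factory=dict)


# Illustrative instances only; slot names and CF values are assumptions.
meta = MetaRule(Condition("AI-2", "LO"), {"AI-3=LO": 0.8, "DI-1=TRUE": 0.6})
rule = Rule([Condition("DI-6", "TRUE")], "OR", Condition("AI-5", "LO"))
frame = Frame("Economiser", {"unit": "Boiler", "tag": "AI-4"})
```

Keeping the meta-rule hypotheses in a mapping from label to CF makes it straightforward to examine the highest-CF hypothesis first.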

3. INFERENCE ENGINE

The Inference Engine is the heart of the expert system. Since the expert system is intended for diagnosis of faults, a Backward Chaining inference strategy is preferred. The inference engine uses the knowledge stored in Production Rules and Frames to arrive at a conclusion or goal while performing fault diagnosis with the backward reasoning method.

[Figure 2 blocks: GUI/CDI, KB/DB, Get Initial Status, Inference Manager, Meta Rule Interpreter, Rule Interpreter, Parameter Interpreter, Frame Interpreter, Production Memory, Working Memory]

Figure 2: Internal Block Diagram of Inference Engine

Working Principle of Inference Engine: The critical alarms are modeled as meta-rules and sub-causes are represented as rules in the knowledge base. When a critical alarm occurs in the system, the relevant meta-rule is fired; consequently, the rules whose condition part is true are fired in sequence until the final root cause of the failure is reached. The inference engine algorithm is as follows.

Backward reasoning: Backward chaining involves working back from the possible conclusions of the system to the evidence, using the rules and frames. Thus backward chaining behaves in a goal-driven manner. One needs to know which possible conclusions of the system one wishes to test for. In frame-based systems rules play an auxiliary role. Frames represent the major source of knowledge, and both methods and demons are used to add actions to the frames. A goal can be established either in a method or in a demon.

The Inference Manager processes all rules based on the user input or SCADA input. The Meta Rule Interpreter searches through the meta-rule set for the complaint part (antecedent) that matches the user-defined complaint or alarm. The Inference Manager gets the hypothesis list (the consequent part of the meta-rule) and loads it into the Working Memory. The Inference Manager then processes the hypotheses one by one based on the certainty factor (the hypothesis with the highest CF is processed first). It then searches through the rule set for the rule whose action part matches the hypothesis (THEN part) of the meta-rule. The Rule Interpreter gets the condition part of the rule and evaluates each parameter in it. For this, the Parameter Interpreter checks the parameter type, which can be database, working memory, frame, GUI or computed. Evaluated parameters are added to the Working Memory. This process is repeated until all rules are evaluated.

Fired rules and evaluated parameters are logged into the database so that the Explanation module can generate an explanation.
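The goal-driven search described above can be illustrated with a small sketch. It is a simplification under stated assumptions, not the shell's actual inference engine: meta-rule matching, certainty factor ordering and frames are left out, parameter values are assumed to be already evaluated into a dictionary standing in for the Working Memory, and the names `RULES`, `find_root_causes` and `diagnose` are hypothetical. The rule table is taken from the Knowledge Base rules listed in Section 5.

```python
from typing import Dict, List, Tuple

Literal = Tuple[str, str]  # (parameter, nominal value), e.g. ("AI-2", "LO")

# Action literal -> condition literals joined by OR (rules from Section 5).
RULES: Dict[Literal, List[Literal]] = {
    ("AI-2", "LO"): [("AI-3", "LO"), ("DI-1", "TRUE"), ("AI-7", "LO"), ("DI-4", "TRUE")],
    ("AI-3", "LO"): [("AI-4", "HI"), ("AI-6", "LO"), ("DI-4", "TRUE")],
    ("AI-4", "HI"): [("AI-5", "LO"), ("DI-7", "TRUE")],
    ("AI-5", "LO"): [("DI-6", "TRUE")],
    ("AI-6", "LO"): [("DI-5", "TRUE")],
    ("DI-1", "TRUE"): [("DI-2", "TRUE")],
    ("AI-7", "LO"): [("DI-3", "TRUE")],
}


def find_root_causes(goal: Literal, facts: Dict[str, str]) -> List[Literal]:
    """Work backward from `goal` to the deepest conditions that hold in `facts`."""
    if facts.get(goal[0]) != goal[1]:
        return []  # the goal itself is not observed, so this branch is dropped
    causes: List[Literal] = []
    for condition in RULES.get(goal, []):
        for cause in find_root_causes(condition, facts):
            if cause not in causes:
                causes.append(cause)
    # If no deeper condition holds, the goal itself is the deepest known cause.
    return causes or [goal]


def diagnose(alarm: Literal, facts: Dict[str, str]) -> List[Literal]:
    """Return root causes for an active alarm, excluding the alarm itself."""
    return [c for c in find_root_causes(alarm, facts) if c != alarm]


working_memory = {"AI-2": "LO", "AI-3": "LO", "AI-4": "HI", "AI-5": "LO", "DI-6": "TRUE"}
root_causes = diagnose(("AI-2", "LO"), working_memory)
```

With the sample working memory, the chain AI-2 LO, AI-3 LO, AI-4 HI, AI-5 LO ends at DI-6 (FW heaters problem), which is the only literal with no further rule behind it.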

4. LEARNING ENGINE DEVELOPMENT FOR ENHANCING EXPERT SYSTEM

The Learning Engine is an extension of the Expert System through which new rules are learned by the shell for any domain. This requires data preprocessing and data mining of the historic data. The new rules generated have to be validated by the expert and can then be added to the knowledge base. In our work we used association-based learning methods for generating new rules from data [5]. To enhance the inferencing capability of the Real Time Expert System Shell, new rules have to be found from the historic data. The parameter names and values from the historic data are used as input for the Learning Engine. For learning, the association between parameters at different times has to be found. The major machine learning techniques are as follows:

Classification: Supervised data mining. The learned model is used to classify unseen instances.
Numerical prediction: A subgroup of classification. The outcome is a numeric quantity rather than a discrete class.
Clustering: Unsupervised learning.
Association: Any association between one or more attributes at a time.

Figure 3: Architecture Diagram of Expert System with Learning Engine

The output of machine learning algorithms can take the form of decision tables, decision trees, association rules, clustering diagrams, etc. Association rule learning (dependency modeling) searches for relationships between variables. We have therefore studied and compared the association algorithms.

4.1 Association rule learning

Association mining finds frequent patterns, associations, correlations or causal structures among sets of items or objects in transaction databases and other information repositories. Given a set of transactions, it finds rules that predict the occurrence of an item based on the occurrences of other items in the transaction. Association rules are usually required to satisfy a user-specified minimum support and a user-specified minimum confidence at the same time. Association rule generation is usually split into two separate steps:

1. First, minimum support is applied to find all frequent item sets in a database.
2. These frequent item sets and the minimum confidence constraint are then used to form rules.

The following association rule learning algorithms were compared and the results summarized:

1. Apriori
2. Predictive Apriori
3. FPGrowth
4. Tertius
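The two-step split into frequent item sets and rules can be made concrete with a small sketch. It enumerates item sets by brute force, so it only illustrates the support and confidence definitions and is not any of the four algorithms compared here; the function names and the sample transactions are hypothetical.

```python
from itertools import combinations
from typing import FrozenSet, List, Tuple

Transaction = FrozenSet[str]
RuleResult = Tuple[FrozenSet[str], FrozenSet[str], float]  # (body, head, confidence)


def support(itemset: FrozenSet[str], transactions: List[Transaction]) -> float:
    """Fraction of transactions that contain every item in `itemset`."""
    hits = sum(1 for t in transactions if itemset <= t)
    return hits / len(transactions)


def frequent_itemsets(transactions: List[Transaction], min_support: float) -> List[FrozenSet[str]]:
    """Step 1: keep every item set whose support reaches `min_support`."""
    items = sorted(set().union(*transactions))
    result: List[FrozenSet[str]] = []
    for size in range(1, len(items) + 1):
        level = [frozenset(c) for c in combinations(items, size)
                 if support(frozenset(c), transactions) >= min_support]
        if not level:
            break  # no frequent set of this size, so none of a larger size
        result.extend(level)
    return result


def association_rules(transactions: List[Transaction], min_support: float,
                      min_confidence: float) -> List[RuleResult]:
    """Step 2: split each frequent item set into body => head and filter."""
    found: List[RuleResult] = []
    for itemset in frequent_itemsets(transactions, min_support):
        for k in range(1, len(itemset)):
            for chosen in combinations(sorted(itemset), k):
                body = frozenset(chosen)
                confidence = support(itemset, transactions) / support(body, transactions)
                if confidence >= min_confidence:
                    found.append((body, itemset - body, confidence))
    return found


# Made-up transactions using the nominal attribute values of Section 5.
sample = [
    frozenset({"AI-3=LO", "AI-2=LO"}),
    frozenset({"AI-3=LO", "AI-2=LO"}),
    frozenset({"AI-2=LO"}),
    frozenset({"DI-6=TRUE"}),
]
sample_rules = association_rules(sample, min_support=0.5, min_confidence=0.9)
```

On the sample data only AI-3=LO => AI-2=LO survives: the reverse rule has the same support but a confidence of about 0.67, below the 0.9 threshold.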


4.1.1 Apriori

In computer science and data mining, Apriori is a classic algorithm for learning association rules. Apriori is designed to operate on databases containing transactions (for example, collections of items bought by customers, or details of website visits). Apriori uses a "bottom up" approach, where frequent subsets are extended one item at a time (a step known as candidate generation), and groups of candidates are tested against the data. Apriori suffers from a number of inefficiencies or trade-offs, which have spawned other algorithms. Candidate generation produces large numbers of subsets (the algorithm attempts to load the candidate set with as many as possible before each scan). Bottom-up subset exploration (essentially a breadth-first traversal of the subset lattice) finds any maximal subset S only after all 2^|S| - 1 of its proper subsets.

4.1.2 Predictive Apriori

Predictive Apriori searches with an increasing support threshold for the best n rules with respect to a support-based corrected confidence value. When evaluating association rules, rules that differ in both support and confidence have to be compared; a larger support has to be traded against a higher confidence. The constraint of Predictive Apriori is that it also creates a transaction database before rule making, and the time required for rule making was much higher than for the other algorithms.

4.1.3 FP Growth

FP Growth allows frequent itemset discovery without candidate itemset generation. It has a two-step approach:

Step 1: Build a compact data structure called the FP-tree, using 2 passes over the data set.
Step 2: Extract frequent itemsets directly from the FP-tree by traversing it.

FP Growth is an efficient algorithm for finding frequent patterns in transaction databases. It uses a compact tree structure, and mining based on the tree structure is significantly more efficient than Apriori.

The constraint of FP Growth is that it supports only binary attributes and also creates a transaction database before rule making.

4.1.4 Tertius

Tertius uses a top-down search algorithm when creating the association rules. The search starts with an empty rule. Next, Tertius iteratively refines the rule by adding new attribute values. Tertius continues to refine the rule as long as such refinements increase the confidence value. Finally, Tertius adds the rule and restarts the search to create new rules. Tertius ends when no additional rules can be created with sufficient confidence values. Afterwards, it returns the set of rules along with their confidence values.


Tertius does not create itemsets or transaction databases. It starts directly by creating rules; thus the implementation of Tertius requires less memory than the other association algorithms.
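The top-down refinement idea described in this subsection (start from an empty rule body and add attribute values while the confidence keeps improving) can be sketched as below. This is a greedy simplification written for illustration only: it is not the Tertius implementation, it uses plain confidence rather than Tertius's confirmation measure, and the function names and sample instances are hypothetical.

```python
from typing import Dict, List, Tuple

Instance = Dict[str, str]
Literal = Tuple[str, str]  # (attribute, value)


def confidence(body: List[Literal], head: Literal, data: List[Instance]) -> float:
    """Share of the instances matching `body` that also match `head`."""
    covered = [row for row in data if all(row[a] == v for a, v in body)]
    if not covered:
        return 0.0
    return sum(row[head[0]] == head[1] for row in covered) / len(covered)


def refine_rule(head: Literal, data: List[Instance]) -> Tuple[List[Literal], float]:
    """Start from an empty body and add literals while confidence improves."""
    body: List[Literal] = []
    best = confidence(body, head, data)
    candidates = {(a, v) for row in data for a, v in row.items() if a != head[0]}
    improved = True
    while improved:
        improved = False
        used = {a for a, _ in body}
        for literal in sorted(candidates):
            if literal[0] in used:
                continue  # at most one value per attribute in a body
            score = confidence(body + [literal], head, data)
            if score > best:
                best, choice, improved = score, literal, True
        if improved:
            body.append(choice)  # keep the single best refinement of this round
    return body, best


# Made-up instances using two of the nominal attributes from Section 5.
instances = [
    {"AI-5": "LO", "AI-4": "HI"},
    {"AI-5": "LO", "AI-4": "HI"},
    {"AI-5": "NORMAL", "AI-4": "NORMAL"},
    {"AI-5": "NORMAL", "AI-4": "NORMAL"},
]
learned_body, learned_confidence = refine_rule(("AI-4", "HI"), instances)
```

On the sample instances the empty body has confidence 0.5 for AI-4 = HI, and adding AI-5 = LO raises it to 1.0, which mirrors the sample rule AI-5 = LO ==> AI-4 = HI. No item set table is built at any point, which is the memory advantage noted above.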

4.2 The Learning Module Implementation: Working Principle

Knowledge discovery in databases is the process of identifying valid, novel, potentially useful, and ultimately understandable patterns or models in data. Data mining is a step in the knowledge discovery process consisting of particular data mining algorithms that, under some acceptable computational efficiency limitations, find patterns or models in data. This Learning Engine was developed as an enhancement module to the Real Time Expert System for Root Cause Analysis of alarms in plants. The development is done using Java and Flex and uses a MySQL database. In power plant applications, both the historic data and the online data have to be taken into consideration for updating the knowledge base with newly acquired knowledge. Figure 4 shows the general architecture of the Learning Engine Module. The Learning Engine Module mainly consists of the following sub-modules:

User Interface
Preprocessor
Algorithm Implementer
Post processor
Rule Validator

Figure 4: Block Architecture Diagram of Learning Engine

The administrator/user can initiate the learning process from the GUI. The data is selected from the history by configuring the start date/time and end date/time for the Learning Engine. Figure 5 shows the start/end date and parameter selection configuration.


4.2.1 Preprocessor

The data in the historian database has to be preprocessed and a suitable output structure created before it is fed to the learning algorithm model. The history data preprocessing involves the following steps.

1. Data cleaning - The historic data contains the field Quality with values 1 (good) and 0 (bad). Instances with Quality value 0 are filtered out.
2. Data transformation - The data value (Parameter Value) is converted to the corresponding nominal value using the alarm code mapping.
3. Data reduction - The unwanted columns in the historic data are removed.

The historic data after preprocessing is fed to the learning algorithms.
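The three preprocessing steps might look roughly like this. The sketch assumes that historian records arrive as dictionaries with `timestamp`, `parameter`, `value` and `quality` keys; these column names, the `ALARM_LIMITS` table and the function names are hypothetical, and only the thresholds are taken from the fault tree and rules of Section 5.

```python
from typing import Dict, List, Tuple, Union

Record = Dict[str, Union[str, float, int]]

# Hypothetical alarm-code mapping: (comparison, limit, label when it holds).
ALARM_LIMITS: Dict[str, Tuple[str, float, str]] = {
    "AI-2": ("<", 50.0, "LO"),
    "AI-3": ("<", 30.0, "LO"),
    "AI-4": (">", 25.0, "HI"),
}
KEEP_COLUMNS = ("timestamp", "parameter", "value")  # assumed column names


def to_nominal(parameter: str, value: float) -> str:
    """Data transformation: map an analog value to its nominal alarm label."""
    op, limit, label = ALARM_LIMITS[parameter]
    in_alarm = value < limit if op == "<" else value > limit
    return label if in_alarm else "NORMAL"


def preprocess(rows: List[Record]) -> List[Record]:
    """Apply cleaning, reduction and transformation to historian records."""
    cleaned: List[Record] = []
    for row in rows:
        if row["quality"] != 1:  # data cleaning: drop bad-quality instances
            continue
        reduced = {key: row[key] for key in KEEP_COLUMNS}  # data reduction
        reduced["value"] = to_nominal(str(row["parameter"]), float(row["value"]))
        cleaned.append(reduced)
    return cleaned


history = [
    {"timestamp": "t1", "parameter": "AI-2", "value": 42.0, "quality": 1, "source": "DCS"},
    {"timestamp": "t2", "parameter": "AI-2", "value": 48.0, "quality": 0, "source": "DCS"},
    {"timestamp": "t3", "parameter": "AI-4", "value": 20.0, "quality": 1, "source": "DCS"},
]
learning_table = preprocess(history)
```

The second record is discarded because of its quality flag, and the remaining values become `LO` and `NORMAL` respectively, which is the nominal form the association algorithms expect.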

Figure 5: Learning Engine - Preprocessor

4.2.2 Algorithm Implementer

The pre-processed learning data table in the database is the input to the Algorithm Implementer module. This module uses the Tertius algorithm, which was found most suitable among the association algorithms for this domain of application. Tertius does a complete top-down A* search over the space of possible rules. With A attributes having on average V values each, and a search for rules with up to n literals, the number of possible rules is of the order (AV)^n. The output is the set of rules from the Rule generator block. The configurable parameters of the Tertius algorithm are Confirmation Threshold and Frequency Threshold, whose default values are set to 0.3 and 0.1 respectively.
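As a rough illustration of how quickly this search space grows, take the test set of Section 5 with A = 13 attributes of V = 2 values each, and assume rules of up to n = 3 literals (the value of n is an assumption made here for the example and is not stated in the text):

```latex
(AV)^n = (13 \times 2)^3 = 26^3 = 17576
```

Each additional literal multiplies this order-of-magnitude estimate by AV = 26, which is why thresholds on confirmation and frequency are needed to prune the search.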


Figure 6: Learning Engine- New rules formation

4.2.3 Post processor Module

The input to this module is the set of rules generated by the rule generator (Algorithm Implementer) module. The generated rules have to be post-processed so that they are in the same format as the Knowledge Base (KB) rules. Rules with normal conditions (in both head and body) are discarded, as only rules for alarm conditions are present in the Knowledge Base. Duplicate rules, i.e. rules with the same literals reversed between head and body, are removed as part of post-processing. Finally, the Post processor module converts the generated rules to the if-then format used in the KB.
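A possible shape for these post-processing steps is sketched below. Several points are assumptions rather than the module's documented behaviour: a rule is dropped if any literal carries a NORMAL or FALSE value (one reading of "normal conditions"), body literals are joined with AND and head literals with OR (following the form of the sample Tertius output in Section 5), and all names are hypothetical.

```python
from typing import FrozenSet, List, Tuple

Literal = Tuple[str, str]                             # (parameter, value)
Rule = Tuple[FrozenSet[Literal], FrozenSet[Literal]]  # (body, head)

NORMAL_VALUES = {"NORMAL", "FALSE"}


def is_alarm_rule(rule: Rule) -> bool:
    """Keep only rules whose literals all describe alarm conditions."""
    body, head = rule
    return all(value not in NORMAL_VALUES for _, value in body | head)


def remove_reversed_duplicates(rules: List[Rule]) -> List[Rule]:
    """Treat A => B and B => A as the same rule and keep the first one seen."""
    seen = set()
    kept: List[Rule] = []
    for body, head in rules:
        key = frozenset((body, head))  # order-independent key
        if key not in seen:
            seen.add(key)
            kept.append((body, head))
    return kept


def to_if_then(rule: Rule) -> str:
    """Render a rule in an if-then text form (format assumed, see above)."""
    body, head = rule
    condition = " AND ".join(f"{p}={v}" for p, v in sorted(body))
    action = " OR ".join(f"{p}={v}" for p, v in sorted(head))
    return f"If {condition} Then {action}"


def postprocess(rules: List[Rule]) -> List[str]:
    alarm_rules = [r for r in rules if is_alarm_rule(r)]
    return [to_if_then(r) for r in remove_reversed_duplicates(alarm_rules)]


low_ai5 = frozenset({("AI-5", "LO")})
high_ai4 = frozenset({("AI-4", "HI")})
normal_ai5 = frozenset({("AI-5", "NORMAL")})
normal_ai4 = frozenset({("AI-4", "NORMAL")})
formatted = postprocess([(low_ai5, high_ai4), (high_ai4, low_ai5), (normal_ai5, normal_ai4)])
```

Which of two reversed rules is kept depends here only on input order; a real implementation would need a criterion such as the confirmation value to decide the direction.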

4.2.4 Rule Validator

The input to the Rule Validator module is the set of rules output by the Post processor module. The Rule Validator compares the input rules with the existing Knowledge Base rules. If a rule already exists in the Knowledge Base, it is discarded. The new rules are output to the GUI. After confirmation from the domain expert, the Knowledge Base is updated with the new rules.
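The validation flow can be sketched in a few lines, assuming that both mined rules and Knowledge Base rules have already been normalized to the same if-then text form. The function names are hypothetical, and the `expert_confirms` callback merely stands in for the confirmation step that the domain expert performs through the GUI.

```python
from typing import Callable, List


def find_new_rules(candidates: List[str], knowledge_base: List[str]) -> List[str]:
    """Discard candidate rules that already exist in the Knowledge Base."""
    known = set(knowledge_base)
    return [rule for rule in candidates if rule not in known]


def update_knowledge_base(knowledge_base: List[str], candidates: List[str],
                          expert_confirms: Callable[[str], bool]) -> List[str]:
    """Append expert-confirmed new rules and return the rules that were added."""
    added: List[str] = []
    for rule in find_new_rules(candidates, knowledge_base):
        if rule not in knowledge_base and expert_confirms(rule):
            knowledge_base.append(rule)
            added.append(rule)
    return added


kb = ["If DI-6=TRUE Then AI-5=LO"]
mined = ["If DI-6=TRUE Then AI-5=LO", "If AI-4=HI Then DI-7=TRUE"]
pending = find_new_rules(mined, kb)
accepted = update_knowledge_base(kb, mined, expert_confirms=lambda rule: True)
```

Keeping the expert decision behind a callback means that no rule reaches the Knowledge Base without an explicit confirmation.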

Figure 7: Learning Engine- Rule added to Knowledgebase


5. APPLICATION

The data used for testing is generated from a Plant Test Setup using Analog and Digital Input cards connected to suitable sources. The interface between the Plant Distributed Control System (DCS) and the Expert System is via OPC DA. The alarm "MS Header Temp LO" is considered for testing. This is an actual alarm studied from an NTPC plant.

[Figure 8 decision nodes, each with Yes/No branches: MS header temperature low? (AI-2 < 50); L/R spray CV closed? (AI-3 < 30); FW diff temp across econ high? (AI-4 < 25); L/R system temperature control on Manual? (DI-1); System temperature control on manual (DI-2); FW temp at econ I/L low? (AI-5 < 28); Oxygen in FG low? (AI-6 < 20); CV position difference from control signal? (AI-7 < 45)]

[Figure 8 outcomes: No fault; RH/SH Soot deposit (DI-7); FW Htrs Problems (DI-6); Control Valve Stuck (DI-3); Low excess air (DI-5); SH Tubes soot deposit / Running Burners trip (DI-4)]

Figure 8: Fault Tree for Alarm: MS Header Temp low

Historic data is read from the database and analog values are converted to nominal type for input to the association algorithms. Here 13 attributes (6 analog and 7 digital) and 70 instances are used for testing. The test data attributes are shown below.

attribute 1 - AI-3 {LO,NORMAL}
attribute 2 - AI-2 {LO,NORMAL}
attribute 3 - DI-7 {TRUE,FALSE}
attribute 4 - DI-6 {TRUE,FALSE}
attribute 5 - DI-5 {TRUE,FALSE}
attribute 6 - DI-4 {TRUE,FALSE}
attribute 7 - DI-3 {TRUE,FALSE}
attribute 8 - DI-2 {TRUE,FALSE}
attribute 9 - DI-1 {TRUE,FALSE}
attribute 10 - AI-7 {LO,NORMAL}
attribute 11 - AI-6 {LO,NORMAL}
attribute 12 - AI-5 {LO,NORMAL}
attribute 13 - AI-4 {HI,NORMAL}

Existing Knowledge Base Rules

If AI-3<30(LO) OR DI-1=True OR AI-7<45(LO) OR DI-4=True Then AI-2<50(LO)
If AI-4>25(HI) OR AI-6<20(LO) OR DI-4=True Then AI-3<30(LO)
If AI-5<28(LO) OR DI-7=True Then AI-4>25(HI)
If DI-6=True Then AI-5<28(LO)
If DI-5=True Then AI-6<20(LO)
If DI-2=True Then DI-1=True
If DI-3=True Then AI-7<45(LO)

Algorithm Run Outputs

1. Apriori
Configuration: No of rules = 100000, Minimum support: 0.25 (17 instances), Minimum confidence: 0.1, Instances: 70, Attributes: 13 (AI-3, AI-2, DI-7, DI-6, DI-5, DI-4, DI-3, DI-2, DI-1, AI-7, AI-6, AI-5, AI-4)
Best rules found: Seven best rules are found using the Apriori algorithm. One sample rule is shown below.
AI-3=LO 40 ==> AI-2=LO 40 conf:(1)

2. Predictive Apriori
Configuration: No of rules = 100000, Instances: 70, Attributes: 13 (AI-3, AI-2, DI-7, DI-6, DI-5, DI-4, DI-3, DI-2, DI-1, AI-7, AI-6, AI-5, AI-4)
Best rules found: Twenty three best rules are found using the Predictive Apriori algorithm. One sample rule is shown below.
AI-4=HI 20 ==> AI-3=LO AI-2=LO 20 acc:(0.99454)

3. FPGrowth
Configuration: No of rules = 100000, Minimum support: 0.25 (17 instances), Minimum confidence: 0.1, Instances: 70, Attributes: 13 (AI-3, AI-2, DI-7, DI-6, DI-5, DI-4, DI-3, DI-2, DI-1, AI-7, AI-6, AI-5, AI-4)
FPGrowth found 47458 rules. Only rules without NORMAL and FALSE attribute values are displayed, and no such rules are found. FPGrowth supports only binary attribute values.

4. Tertius
Instances: 70, Attributes: 13 (AI-3, AI-2, DI-7, DI-6, DI-5, DI-4, DI-3, DI-2, DI-1, AI-7, AI-6, AI-5, AI-4)
Thirty two best rules are found using the Tertius algorithm. Some sample rules are shown below.
AI-4 = HI ==> DI-7 = TRUE or DI-6 = TRUE
AI-5 = LO ==> AI-4 = HI
AI-4 = HI ==> DI-7 = TRUE
AI-4 = HI ==> DI-6 = TRUE
AI-4 = HI ==> AI-5 = LO
Highlighted rules are existing KB rules.
Number of hypotheses considered: 73507
Number of hypotheses explored: 38490

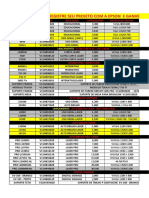

6. COMPARISON OF ASSOCIATION ALGORITHMS

The machine learning association algorithms were compared to find the pros and cons of each. Table 1 shows that, of these four algorithms, Apriori and Tertius give satisfactory results for this application. The Apriori algorithm requires more time and memory if the number of attributes is high (more than 20 attributes). The FPGrowth algorithm supports only binary attribute values. The Predictive Apriori algorithm takes more time and will not work with more attributes. The Tertius algorithm has been tested with up to 250 attributes and gave acceptable results. It is therefore concluded that the Tertius algorithm is more suitable for our application, as it supports a high number of attributes and yields the highest number of valid results.

Table 1: Association Algorithms output comparison

| | Apriori | FPGrowth | Predictive Apriori | Tertius |
| No of attributes | 13 | 13 | 13 | 13 |
| No of instances | 70 | 70 | 70 | 70 |
| Time | Less than 1 min | Less than 1 min | More than 5 hrs | Less than 1 min |
| Properties | Min CF = 0.1, Min support = 0.25, Max support = 1, Num rules = 10^5 | Min CF = 0.1, Min support = 0.25, Max support = 1, Num rules = 10^5 | | Min CF = 0.1, Min support = 0.25, Max support = 1, Num rules = 10^5 |
| No of rules generated | 7 | 0 | 23 | 32 |
| Existing KB rules | 4 | 0 | 0 | 9 |

7. CONCLUSIONS

The Learning Engine enhances the Expert System by forming new rules dynamically. The new rules are validated by the domain expert and then added to the knowledge base. The association-based learning algorithms were compared, and the Tertius algorithm was found to be the most suitable for this process application, as it generated the largest number of valid rules in the shortest time among the algorithms tested. Hence this algorithm has been used for implementing the Learning Engine.

ACKNOWLEDGEMENTS

Our special thanks to NTPC's Rajiv Gandhi Combined Cycle Power Project (RGCCPP) at Kayamkulam, Kerala for offering their domain expertise in developing the Expert System and the Learning Engine for Operator Guidance and for allowing us to implement this system in the plant.


REFERENCES

Peter Flach, Valentina Maraldi, Fabrizio Riguzzi. Algorithms for Efficiently and Effectively Using Background Knowledge in Tertius.
Rakesh Agrawal, Tomasz Imielinski, Arun Swami. 1993. Mining Association Rules between Sets of Items in Large Databases. ACM.
Tom M. Mitchell. July 2006. The Discipline of Machine Learning.
Peter A. Flach, Nicolas Lachiche. Confirmation-guided discovery of first-order rules with Tertius. Kluwer Academic Publishers, Boston. Manufactured in The Netherlands.
Randall W. Pack, Paul M. Lazar, Renata V. Schmidt, Catherine D. Gaddy. Expert systems for plant operations training and assistance.
T. S. Dahl. March 1999. Background Knowledge in the Tertius First Order Knowledge Discovery Tool. University of Bristol.

Authors

Abhir Raj Metkar obtained his bachelor's degree in Instrumentation Engineering in 1999 and Master's degree in Process Control Engineering in 2001. Currently he is working as a Senior Engineer in the Control and Instrumentation Group of CDAC Trivandrum.

Latha B Kaimal graduated in Electronics and Communication Engineering from the College of Engineering, Trivandrum in 1977, and joined the Digital Electronics R&D Group of the State owned KELTRON group of companies in 1978 as Development Engineer. Kaimal was a key member of the Computer and Communication Group in the Electronics Research and Development Centre of India (ER&DCI), joined the Control & Instrumentation Group (CIG) in 1994, and was involved in the implementation of the Distribution Automation Project for Thiruvananthapuram city. Kaimal is presently Associate Director in CIG at CDAC(T) and Project Leader of the Development of Real Time Expert System Shell project.

Rakesh G obtained his bachelor's degree in Computer Engineering in 2005. Currently he is working as a Senior Engineer in the Control and Instrumentation Group of CDAC Trivandrum, involved in the design and development of the "Real Time Expert System Shell" project under the Automation Systems Technologies Programme (ASTeC - Learning domain).

