Week05 - Linear Algebraic Equations

Uploaded by Cahaya Fitrahku


WEEK 5

Linear Algebraic Equations

Cramer's Rule

Gauss Elimination

Gauss Jordan

Gauss Seidel

LESSON OUTCOMES

At the end of this topic, students will be able to solve problems involving linear algebraic equations.

Linear Algebraic Equations

An equation of the form $ax + by + c = 0$, or equivalently $ax + by = -c$, is called a linear equation in the variables x and y.

$ax + by + cz = d$ is a linear equation in three variables x, y, and z.

Thus, a linear equation in n variables is

$$a_1x_1 + a_2x_2 + \cdots + a_nx_n = b$$

When more than one linear equation must hold at the same time, they form a system of linear equations that must be solved simultaneously.

Simultaneous linear algebraic equations can be represented generally as:

$$\begin{aligned}
a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= b_1 \\
a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= b_2 \\
&\;\;\vdots \\
a_{n1}x_1 + a_{n2}x_2 + \cdots + a_{nn}x_n &= b_n
\end{aligned}$$

where:

the a's are constant coefficients

the b's are constants

n is the number of equations

Noncomputer Methods for Solving Systems of Equations

For a small number of equations (n ≤ 3), linear equations can be solved readily by simple techniques.

Linear algebra provides the tools to solve such systems of linear equations.

Nowadays, easy access to computers makes the solution of large sets of linear algebraic equations possible and practical.

Solving Small Numbers of Equations

There are many ways to solve a system of linear equations:

For n ≤ 3:

Graphical method

Cramer's rule

Method of elimination (involves combining equations to eliminate unknowns)

Computer methods:

Gauss elimination

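Graphical Method

For two equations:

$$a_{11}x_1 + a_{12}x_2 = b_1$$

$$a_{21}x_1 + a_{22}x_2 = b_2$$

Solve both equations for $x_2$:

$$x_2 = -\left(\frac{a_{11}}{a_{12}}\right)x_1 + \frac{b_1}{a_{12}}$$

$$x_2 = -\left(\frac{a_{21}}{a_{22}}\right)x_1 + \frac{b_2}{a_{22}}$$

Each equation is therefore a straight line of the form $x_2 = (\text{slope})\,x_1 + \text{intercept}$.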

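Plot $x_2$ vs. $x_1$; the values of $x_1$ and $x_2$ at the intersection of the lines represent the solution.

Example:

$$3x_1 + 2x_2 = 18 \;\Rightarrow\; x_2 = -\frac{3}{2}x_1 + 9$$

$$-x_1 + 2x_2 = 2 \;\Rightarrow\; x_2 = \frac{1}{2}x_1 + 1$$

So, the two lines intersect at $x_1 = 4$, $x_2 = 3$, which is the solution of the system.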
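Instead of plotting, the intersection can be computed directly from the slope-intercept forms. The following is a minimal sketch; `line_from_equation` and `intersect` are illustrative names, and each equation is assumed to be given as a tuple `(a1, a2, b)` for $a_1x_1 + a_2x_2 = b$ with $a_2 \neq 0$.

```python
def line_from_equation(a1, a2, b):
    """Rewrite a1*x1 + a2*x2 = b as x2 = slope*x1 + intercept (requires a2 != 0)."""
    return -a1 / a2, b / a2


def intersect(eq1, eq2):
    """Return (x1, x2) where the two lines cross; raise if the slopes are equal."""
    m1, c1 = line_from_equation(*eq1)
    m2, c2 = line_from_equation(*eq2)
    if m1 == m2:
        # Equal slopes: parallel (no solution) or coincident (infinitely many).
        raise ValueError("parallel or coincident lines: no unique solution")
    x1 = (c2 - c1) / (m1 - m2)
    return x1, m1 * x1 + c1


# Example from the slide: 3x1 + 2x2 = 18 and -x1 + 2x2 = 2
print(intersect((3, 2, 18), (-1, 2, 2)))  # (4.0, 3.0)
```

Equal slopes correspond to the problem cases discussed next: no intersection or infinitely many.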

For a set of three simultaneous equations, each equation would be a plane in three-dimensional space. The solution, if it exists, is the point of intersection of the three planes.

Three cases can pose problems when solving sets of linear equations:

a) The two equations represent parallel lines. There is no solution because the lines never cross.

b) The two lines are coincident. There is an infinite number of solutions.

c) The slopes are so close that it is difficult to identify the exact point at which the lines intersect. Such systems are said to be ill-conditioned.

Determinants

The determinant of a square matrix is a unique scalar value computed from the elements of the matrix.

The determinant of a matrix A is denoted by |A|.

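For a matrix of order 3:

$$D = \begin{vmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{vmatrix} = a_{11}D_{11} - a_{12}D_{12} + a_{13}D_{13}$$

where

$$D_{11} = \begin{vmatrix} a_{22} & a_{23} \\ a_{32} & a_{33} \end{vmatrix} = a_{22}a_{33} - a_{32}a_{23}$$

$$D_{12} = \begin{vmatrix} a_{21} & a_{23} \\ a_{31} & a_{33} \end{vmatrix} = a_{21}a_{33} - a_{31}a_{23}$$

$$D_{13} = \begin{vmatrix} a_{21} & a_{22} \\ a_{31} & a_{32} \end{vmatrix} = a_{21}a_{32} - a_{31}a_{22}$$

For a square matrix of order 3, the minor of an element $a_{ij}$ is the determinant of the matrix of order 2 obtained by deleting row i and column j of [A].

* The determinant is only defined for a square matrix.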
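The expansion along the first row can be sketched in plain Python. This is an illustration only: `det2` and `det3` are hypothetical helper names, and the matrix is assumed to be a list of three rows.

```python
def det2(a, b, c, d):
    """Determinant of the 2x2 matrix [[a, b], [c, d]]."""
    return a * d - c * b


def det3(m):
    """Determinant of a 3x3 matrix (list of rows) by expansion along row 1:
    D = a11*D11 - a12*D12 + a13*D13, where each Dij is a 2x2 minor."""
    d11 = det2(m[1][1], m[1][2], m[2][1], m[2][2])  # delete row 1, column 1
    d12 = det2(m[1][0], m[1][2], m[2][0], m[2][2])  # delete row 1, column 2
    d13 = det2(m[1][0], m[1][1], m[2][0], m[2][1])  # delete row 1, column 3
    return m[0][0] * d11 - m[0][1] * d12 + m[0][2] * d13


# The second row is twice the first, so the determinant is zero.
print(det3([[1, 2, 1], [2, 4, 2], [1, 5, 1]]))  # 0
```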

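Exercise: Evaluate the determinant of each of the following systems.

a) $3x_1 + 2x_2 = 18$ and $-x_1 + 2x_2 = 2$

b) $-\frac{1}{2}x_1 + x_2 = 1$ and $-\frac{1}{2}x_1 + x_2 = \frac{1}{2}$

c) $-\frac{1}{2}x_1 + x_2 = 1$ and $-x_1 + 2x_2 = 2$

d) $-\frac{1}{2}x_1 + x_2 = 1$ and $-\frac{2.3}{5}x_1 + x_2 = 1.1$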

A matrix A is singular if its determinant is equal to zero; in that case, the inverse of A does not exist.
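A quick numerical check connects the determinant to the graphical cases. This sketch assumes the coefficients of the four two-equation systems listed in the exercise; `det2x2` and `systems` are illustrative names. The right-hand sides are not needed to compute D.

```python
def det2x2(a11, a12, a21, a22):
    """Determinant of the coefficient matrix of a11*x1 + a12*x2 = b1, a21*x1 + a22*x2 = b2."""
    return a11 * a22 - a12 * a21


# Coefficients (a11, a12, a21, a22) of the four exercise systems.
systems = {
    "a": (3, 2, -1, 2),           # lines intersect
    "b": (-0.5, 1, -0.5, 1),      # parallel lines
    "c": (-0.5, 1, -1, 2),        # coincident lines
    "d": (-0.5, 1, -2.3 / 5, 1),  # nearly parallel lines
}

for label, coeffs in systems.items():
    print(label, det2x2(*coeffs))
```

Systems b and c give D = 0 (singular), while system d gives D close to zero (about -0.04), a warning sign of ill-conditioning. The magnitude of D also depends on how the equations are scaled, so a small D alone is not a definitive test.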

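Exercise: Determine whether each of the following matrices is singular.

$$A = \begin{bmatrix} 1 & 2 & 1 \\ 3 & 4 & 2 \\ 1 & 5 & 3 \end{bmatrix} \qquad B = \begin{bmatrix} 1 & 2 & 1 \\ 2 & 4 & 2 \\ 1 & 5 & 1 \end{bmatrix}$$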

Cramer's Rule

Cramer's rule is a technique for a small set of simultaneous equations (three or fewer).

The method requires the calculation of determinants.

For example, consider the following set of linear algebraic equations:

$$\begin{aligned}
a_{11}x_1 + a_{12}x_2 + a_{13}x_3 &= b_1 \\
a_{21}x_1 + a_{22}x_2 + a_{23}x_3 &= b_2 \\
a_{31}x_1 + a_{32}x_2 + a_{33}x_3 &= b_3
\end{aligned}$$

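These equations can be represented in matrix form as

$$[A]\{x\} = \{b\}$$

where:

$$[A] = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix}, \qquad \{x\} = \begin{Bmatrix} x_1 \\ x_2 \\ x_3 \end{Bmatrix}, \qquad \{b\} = \begin{Bmatrix} b_1 \\ b_2 \\ b_3 \end{Bmatrix}$$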

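$$x_1 = \frac{\begin{vmatrix} b_1 & a_{12} & a_{13} \\ b_2 & a_{22} & a_{23} \\ b_3 & a_{32} & a_{33} \end{vmatrix}}{D} \qquad x_2 = \frac{\begin{vmatrix} a_{11} & b_1 & a_{13} \\ a_{21} & b_2 & a_{23} \\ a_{31} & b_3 & a_{33} \end{vmatrix}}{D} \qquad x_3 = \frac{\begin{vmatrix} a_{11} & a_{12} & b_1 \\ a_{21} & a_{22} & b_2 \\ a_{31} & a_{32} & b_3 \end{vmatrix}}{D}$$

Cramer's rule states that each unknown in a system of linear algebraic equations (LAE) may be expressed as a fraction of two determinants, with denominator D and with the numerator obtained from D by replacing the column of coefficients of the unknown in question by the constants $b_1, b_2, \ldots, b_n$.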

Exercise: Use Cramer's rule to solve:

$$\begin{aligned}
0.3x_1 + 0.52x_2 + x_3 &= -0.01 \\
0.5x_1 + x_2 + 1.9x_3 &= 0.67 \\
0.1x_1 + 0.3x_2 + 0.5x_3 &= -0.44
\end{aligned}$$
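Cramer's rule for the 3×3 case can be sketched in plain Python. This is a minimal illustration, not a library routine: `det3` and `cramer3` are hypothetical names, and A is assumed to be a list of rows. For the exercise system it gives D = -0.0022 and approximately x1 = -14.9, x2 = -29.5, x3 = 19.8.

```python
def det3(m):
    """Determinant of a 3x3 matrix (list of rows) by cofactor expansion along row 1."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[2][1] * m[1][2])
            - m[0][1] * (m[1][0] * m[2][2] - m[2][0] * m[1][2])
            + m[0][2] * (m[1][0] * m[2][1] - m[2][0] * m[1][1]))


def cramer3(a, b):
    """Solve the 3x3 system [A]{x} = {b} with Cramer's rule."""
    d = det3(a)
    if d == 0:
        raise ValueError("D = 0: the system is singular")
    x = []
    for k in range(3):
        # Numerator: D with column k replaced by the constants b.
        ak = [[b[i] if j == k else a[i][j] for j in range(3)] for i in range(3)]
        x.append(det3(ak) / d)
    return x


A = [[0.3, 0.52, 1.0], [0.5, 1.0, 1.9], [0.1, 0.3, 0.5]]
b = [-0.01, 0.67, -0.44]
print(cramer3(A, b))  # approximately [-14.9, -29.5, 19.8]
```

Each unknown needs its own determinant, and the cost of evaluating determinants grows rapidly with n, which is why the rule is reserved for small systems.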

Method of Elimination

The basic strategy is to successively solve one

of the equations of the set for one of the

unknowns and to eliminate that variable from

the remaining equations by substitution.

The elimination of unknowns can be extended to systems with more than two or three equations; however, the method becomes extremely tedious to carry out by hand.
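As a worked illustration (added here, not from the original slides), the substitution strategy applied to the two-equation example $3x_1 + 2x_2 = 18$, $-x_1 + 2x_2 = 2$ proceeds as follows: solve the second equation for $x_1$, substitute into the first, then back-substitute.

```latex
\begin{aligned}
-x_1 + 2x_2 = 2 \;&\Rightarrow\; x_1 = 2x_2 - 2 \\
3(2x_2 - 2) + 2x_2 = 18 \;&\Rightarrow\; 8x_2 = 24 \;\Rightarrow\; x_2 = 3 \\
x_1 = 2(3) - 2 \;&\Rightarrow\; x_1 = 4
\end{aligned}
```

This agrees with the intersection point $x_1 = 4$, $x_2 = 3$ of the two lines.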

Naive Gauss Elimination

The word "naive" is used because the elimination is carried out without any modification to the original system of LAE (for example, no row interchanges are made to avoid division by zero).

It extends the method of elimination to large sets of equations by developing a systematic scheme, or algorithm, to eliminate unknowns and to back-substitute.

As in the case of the solution of two equations, the technique for n equations consists of two phases:

Forward elimination of unknowns

Back substitution

Example: Use naive Gauss elimination to solve:

$$\begin{aligned}
3x_1 - 0.1x_2 - 0.2x_3 &= 7.85 \\
0.1x_1 + 7x_2 - 0.3x_3 &= -19.3 \\
0.3x_1 - 0.2x_2 + 10x_3 &= 71.4
\end{aligned}$$
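The two phases can be sketched in plain Python as follows. This is a minimal illustration (the name `naive_gauss` is hypothetical); as in the naive method, it performs no pivoting, so a zero pivot raises `ZeroDivisionError`. For the example system it returns approximately x1 = 3, x2 = -2.5, x3 = 7.

```python
def naive_gauss(a, b):
    """Solve [A]{x} = {b} by naive Gauss elimination (no pivoting).

    Works on copies of the inputs; a zero pivot raises ZeroDivisionError.
    """
    n = len(b)
    a = [[float(v) for v in row] for row in a]
    b = [float(v) for v in b]
    # Forward elimination: remove x_k from every equation below row k.
    for k in range(n - 1):
        for i in range(k + 1, n):
            factor = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= factor * a[k][j]
            b[i] -= factor * b[k]
    # Back substitution: solve for x_n first, then work upward.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


A = [[3, -0.1, -0.2], [0.1, 7, -0.3], [0.3, -0.2, 10]]
b = [7.85, -19.3, 71.4]
print(naive_gauss(A, b))  # approximately [3.0, -2.5, 7.0]
```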

Pitfalls of Elimination Methods

Division by zero.

A division by zero can occur during both the elimination and the back-substitution phases.

Round-off errors.

Because computers carry a limited number of significant figures, round-off errors accumulate through the many arithmetic operations, particularly for large systems.

Ill-conditioned systems.

These are systems where small changes in the coefficients result in large changes in the solution. Alternatively, ill-conditioning occurs when two or more equations are nearly identical, so that a wide range of answers approximately satisfies the equations. Since round-off errors can induce small changes in the coefficients, these changes can lead to large solution errors.

Singular systems.

When two equations are identical, we lose one degree of freedom and are dealing with the impossible case of n - 1 equations for n unknowns.
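To make ill-conditioning concrete, here is a minimal sketch with a hypothetical 2×2 system (not from the slides) whose two equations are nearly identical. Changing one coefficient by less than 5% (1.1 to 1.05) moves x1 from 4 to 8. The helper name `solve2` is illustrative.

```python
def solve2(a11, a12, a21, a22, b1, b2):
    """Solve a11*x1 + a12*x2 = b1, a21*x1 + a22*x2 = b2 with Cramer's rule."""
    d = a11 * a22 - a12 * a21
    return (b1 * a22 - a12 * b2) / d, (a11 * b2 - b1 * a21) / d


# Hypothetical ill-conditioned system: x1 + 2*x2 = 10, 1.1*x1 + 2*x2 = 10.4
print(solve2(1, 2, 1.1, 2, 10, 10.4))   # approximately (4.0, 3.0)
# A small change in a21 produces a large change in the solution.
print(solve2(1, 2, 1.05, 2, 10, 10.4))  # approximately (8.0, 1.0)
```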

Techniques for Improving Solutions

Use of more significant figures.

The simplest remedy for ill-conditioning.

Pivoting.

Pivoting is necessary to avoid zeros on the major diagonal. There are two types of pivoting:

Partial pivoting: switching rows so that the element with the largest absolute value in the current column (at or below the pivot row) becomes the pivot element.

Maximal (or complete) pivoting: searching all remaining rows and columns for the largest element, then switching.

Scaling.

Scaling is accomplished by dividing the elements of each row (including b) by the largest element, in absolute value, in the row (excluding b). After scaling, pivoting is then employed.
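Gauss elimination with partial pivoting, with the optional scaling step described above, can be sketched as follows. This is an illustration under stated assumptions: the name `gauss_pivot` and the `scale` flag are hypothetical, and rows are scaled before pivoting exactly as the slide describes. Dividing an equation (including its b) by a nonzero constant does not change its solution.

```python
def gauss_pivot(a, b, scale=False):
    """Solve [A]{x} = {b} by Gauss elimination with partial pivoting.

    If scale is True, each equation (including its b) is first divided by the
    largest absolute coefficient in that row (b excluded).
    """
    n = len(b)
    a = [[float(v) for v in row] for row in a]  # work on copies
    b = [float(v) for v in b]
    if scale:
        for i in range(n):
            s = max(abs(v) for v in a[i])
            a[i] = [v / s for v in a[i]]
            b[i] /= s
    # Forward elimination with row interchanges.
    for k in range(n - 1):
        # Partial pivoting: pick the row (at or below k) with the largest |a[i][k]|.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[p][k] == 0:
            raise ValueError("matrix is singular")
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        for i in range(k + 1, n):
            factor = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= factor * a[k][j]
            b[i] -= factor * b[k]
    if a[n - 1][n - 1] == 0:
        raise ValueError("matrix is singular")
    # Back substitution.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


# a11 = 0 here, so naive elimination would divide by zero at the first step.
print(gauss_pivot([[0, 2, 1], [4, 1, -1], [-2, 3, -3]], [5, -3, 5]))
# With scaling, the first pivot row becomes row 3 instead of row 1.
print(gauss_pivot([[3, 2, 105], [2, -3, 103], [1, 1, 3]], [104, 98, 3], scale=True))
```

The two calls use the two example systems that follow; they return approximately [-1, 2, 1] and [-1, 1, 1], respectively.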

Example: Use Gauss elimination with partial pivoting to solve:

$$\begin{aligned}
2x_2 + x_3 &= 5 \\
4x_1 + x_2 - x_3 &= -3 \\
-2x_1 + 3x_2 - 3x_3 &= 5
\end{aligned}$$

Example: Use Gauss elimination with scaling and pivoting to solve:

$$\begin{aligned}
3x_1 + 2x_2 + 105x_3 &= 104 \\
2x_1 - 3x_2 + 103x_3 &= 98 \\
x_1 + x_2 + 3x_3 &= 3
\end{aligned}$$
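As a worked first step for this example (values computed here and rounded to four decimals), dividing each row by its largest coefficient (105, 103 and 3, respectively) gives the scaled augmented matrix:

```latex
\left[\begin{array}{ccc|c}
0.0286 & 0.0190 & 1 & 0.9905 \\
0.0194 & -0.0291 & 1 & 0.9515 \\
0.3333 & 0.3333 & 1 & 1
\end{array}\right]
```

The largest scaled entry in the first column is 0.3333, in row 3, so the third equation becomes the first pivot row. Without scaling, partial pivoting would have chosen row 1 (coefficient 3). Elimination then proceeds as usual and yields $x_1 = -1$, $x_2 = 1$, $x_3 = 1$, which can be verified by substitution into the original equations.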

Gauss Jordan

It is a variation of Gauss elimination. The major differences are:

When an unknown is eliminated, it is eliminated from all other equations rather than just the subsequent ones.

All rows are normalized by dividing them by their pivot elements.

The elimination step therefore results in an identity matrix. Consequently, it is not necessary to employ back substitution to obtain the solution.

Example: Use the Gauss Jordan technique to solve:

$$\begin{aligned}
3x_1 - 0.1x_2 - 0.2x_3 &= 7.85 \\
0.1x_1 + 7x_2 - 0.3x_3 &= -19.3 \\
0.3x_1 - 0.2x_2 + 10x_3 &= 71.4
\end{aligned}$$
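Gauss-Jordan elimination without pivoting can be sketched in plain Python. This is a minimal illustration (the name `gauss_jordan` is hypothetical). Each pivot row is normalized and the unknown is then eliminated from every other row, so after the last step b holds the solution and no back substitution is needed.

```python
def gauss_jordan(a, b):
    """Solve [A]{x} = {b} by Gauss-Jordan elimination without pivoting.

    The coefficient matrix is reduced to the identity; a zero pivot
    raises ZeroDivisionError.
    """
    n = len(b)
    a = [[float(v) for v in row] for row in a]  # work on copies
    b = [float(v) for v in b]
    for k in range(n):
        pivot = a[k][k]
        # Normalize the pivot row so that a[k][k] becomes 1.
        a[k] = [v / pivot for v in a[k]]
        b[k] /= pivot
        # Eliminate x_k from all other rows, above and below.
        for i in range(n):
            if i != k:
                factor = a[i][k]
                a[i] = [aij - factor * akj for aij, akj in zip(a[i], a[k])]
                b[i] -= factor * b[k]
    return b


A = [[3, -0.1, -0.2], [0.1, 7, -0.3], [0.3, -0.2, 10]]
print(gauss_jordan(A, [7.85, -19.3, 71.4]))  # approximately [3.0, -2.5, 7.0]
```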

Exercise: Solve

$$\begin{aligned}
x_1 + x_2 - x_3 &= -3 \\
6x_1 + 2x_2 + 2x_3 &= 2 \\
-3x_1 + 4x_2 + x_3 &= 1
\end{aligned}$$

with

a) Naive Gauss elimination

b) Gauss elimination with partial pivoting

c) Gauss Jordan without partial pivoting

Gauss Seidel

Iterative or approximate methods provide an alternative to the elimination methods. The Gauss-Seidel method is the most commonly used iterative method.

The system [A]{X} = {B} is reshaped by solving the first equation for $x_1$, the second equation for $x_2$, the third for $x_3$, and so on, up to the nth equation for $x_n$.

For conciseness, we will limit ourselves to a 3×3 set of equations.

$$x_1 = \frac{b_1 - a_{12}x_2 - a_{13}x_3}{a_{11}}$$

$$x_2 = \frac{b_2 - a_{21}x_1 - a_{23}x_3}{a_{22}}$$

$$x_3 = \frac{b_3 - a_{31}x_1 - a_{32}x_2}{a_{33}}$$

Now we can start the solution process by choosing guesses for the x's.

A simple way to obtain initial guesses is to assume that they are all zero. These zeros can be substituted into the $x_1$ equation to calculate a new value, $x_1 = b_1/a_{11}$.

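The new $x_1$ is then substituted to calculate $x_2$, and the new $x_1$ and $x_2$ are used to calculate $x_3$. The procedure is repeated until the convergence criterion is satisfied:

$$|\varepsilon_{a,i}| = \left| \frac{x_i^{j} - x_i^{j-1}}{x_i^{j}} \right| \times 100\% < \varepsilon_s$$

for all i, where j and j - 1 are the present and previous iterations.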

Exercise: Use the Gauss-Seidel method to solve:

$$\begin{aligned}
3x_1 - 0.1x_2 - 0.2x_3 &= 7.85 \\
0.1x_1 + 7x_2 - 0.3x_3 &= -19.3 \\
0.3x_1 - 0.2x_2 + 10x_3 &= 71.4
\end{aligned}$$
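The iteration and stopping rule can be sketched in plain Python. This is a minimal illustration: the name `gauss_seidel`, the default tolerance `es=5.0` (percent) and the `max_iter` cap are assumptions, not values from the slides. The initial guesses are zero, and each update immediately uses the newest values of the other unknowns.

```python
def gauss_seidel(a, b, es=5.0, max_iter=100):
    """Solve [A]{x} = {b} with the Gauss-Seidel method, starting from x = 0.

    Stops when the approximate relative error of every unknown,
    |(x_new - x_old) / x_new| * 100, is below es (a percentage).
    Returns (x, number_of_iterations).
    """
    n = len(b)
    x = [0.0] * n
    for iteration in range(1, max_iter + 1):
        converged = True
        for i in range(n):
            old = x[i]
            # Each update uses the newest available values of the other unknowns.
            s = sum(a[i][j] * x[j] for j in range(n) if j != i)
            x[i] = (b[i] - s) / a[i][i]
            if x[i] == 0:
                ea = 0.0 if old == 0 else float("inf")
            else:
                ea = abs((x[i] - old) / x[i]) * 100
            if ea >= es:
                converged = False
        if converged:
            return x, iteration
    raise RuntimeError("Gauss-Seidel did not converge within max_iter iterations")


A = [[3, -0.1, -0.2], [0.1, 7, -0.3], [0.3, -0.2, 10]]
b = [7.85, -19.3, 71.4]
x, iterations = gauss_seidel(A, b, es=5.0)
print(x, iterations)
```

For this diagonally dominant system, the iterates approach x1 = 3, x2 = -2.5, x3 = 7 within a few iterations.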

Convergence Criterion for the Gauss-Seidel Method

The Gauss-Seidel method is similar to the simple fixed-point iteration technique.

Like any iterative method, the Gauss-Seidel method has two fundamental problems:

It is sometimes nonconvergent, and

When it does converge, it may converge very slowly.

Recall that sufficient conditions for the convergence of two nonlinear equations, u(x, y) and v(x, y), are

$$\left|\frac{\partial u}{\partial x}\right| + \left|\frac{\partial u}{\partial y}\right| < 1 \qquad \left|\frac{\partial v}{\partial x}\right| + \left|\frac{\partial v}{\partial y}\right| < 1$$

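Similarly, in the case of two simultaneous equations, the Gauss-Seidel algorithm can be expressed as

$$u(x_1, x_2) = \frac{b_1}{a_{11}} - \frac{a_{12}}{a_{11}}x_2$$

$$v(x_1, x_2) = \frac{b_2}{a_{22}} - \frac{a_{21}}{a_{22}}x_1$$

The partial derivatives of these equations are

$$\frac{\partial u}{\partial x_1} = 0 \qquad \frac{\partial u}{\partial x_2} = -\frac{a_{12}}{a_{11}} \qquad \frac{\partial v}{\partial x_1} = -\frac{a_{21}}{a_{22}} \qquad \frac{\partial v}{\partial x_2} = 0$$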

Substitution into the convergence criterion for two nonlinear equations yields

$$\left|\frac{a_{12}}{a_{11}}\right| < 1 \qquad \left|\frac{a_{21}}{a_{22}}\right| < 1$$

In other words, the absolute values of the slopes must be less than unity for convergence:

$$|a_{11}| > |a_{12}| \qquad |a_{22}| > |a_{21}|$$

For n equations:

$$|a_{ii}| > \sum_{\substack{j=1 \\ j \neq i}}^{n} |a_{ij}|$$

The method is best employed when the matrix of coefficients is diagonally dominant, that is, when

$$|a_{ii}| > \sum_{\substack{j=1 \\ j \neq i}}^{n} |a_{ij}|$$

In words, the absolute value of the diagonal element in each equation must be larger than the sum of the absolute values of the other coefficients in that equation.

Diagonal dominance guarantees that the method converges to the exact solution. The criterion is therefore a sufficient condition for convergence, but not a necessary one.

[Figure: Convergence of the Gauss Seidel method; Divergence of the Gauss Seidel method]

Gauss-Seidel with relaxation

To improve the convergence of the Gauss-Seidel method, relaxation is introduced.

The new value of x to be used in the subsequent equations is modified by a weighted average of the results of the present and previous iterations:

$$x_j^{\text{new}} = \lambda\, x_j^{\text{new}} + (1 - \lambda)\, x_j^{\text{old}}$$

where λ is a relaxation (weighting) factor:

if λ = 1: no modification

0 < λ < 1: underrelaxation

1 < λ < 2: overrelaxation

The selection of the optimum value of λ is highly problem-specific and is normally determined by empirical or numerical means.

For most systems of LAEs, overrelaxation is more appropriate because it accelerates convergence, while underrelaxation is used when the Gauss-Seidel algorithm causes the solution to overshoot, since it damps out the oscillations.
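Relaxation changes one line of the Gauss-Seidel update: the freshly computed value is blended with the previous one. The following is a minimal sketch; the name `gauss_seidel_relax`, the parameter name `lam` for λ, and the defaults are assumptions. The usage applies it to a diagonally dominant ordering of the system in the exercise that follows, with λ = 0.95.

```python
def gauss_seidel_relax(a, b, lam=1.0, es=5.0, max_iter=100):
    """Gauss-Seidel with relaxation: x_i <- lam * x_i_new + (1 - lam) * x_i_old.

    lam = 1 is plain Gauss-Seidel; 0 < lam < 1 underrelaxes, 1 < lam < 2 overrelaxes.
    Stops when every approximate relative error (percent) is below es.
    Returns (x, number_of_iterations).
    """
    n = len(b)
    x = [0.0] * n
    for iteration in range(1, max_iter + 1):
        converged = True
        for i in range(n):
            old = x[i]
            s = sum(a[i][j] * x[j] for j in range(n) if j != i)
            new = (b[i] - s) / a[i][i]
            x[i] = lam * new + (1 - lam) * old  # weighted average of new and old values
            if x[i] == 0:
                ea = 0.0 if old == 0 else float("inf")
            else:
                ea = abs((x[i] - old) / x[i]) * 100
            if ea >= es:
                converged = False
        if converged:
            return x, iteration
    raise RuntimeError("no convergence within max_iter iterations")


# Rows reordered for diagonal dominance: 6x1 - x2 - x3 = 3, 6x1 + 9x2 + x3 = 40,
# -3x1 + x2 + 12x3 = 50
A = [[6, -1, -1], [6, 9, 1], [-3, 1, 12]]
b = [3, 40, 50]
print(gauss_seidel_relax(A, b, lam=0.95, es=5.0))
```

With a tight tolerance, the iterates approach the exact solution x1 = 129/76, x2 = 215/76, x3 = 331/76 (about 1.697, 2.829, 4.355).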

Exercise: Use the Gauss-Seidel method

a) without relaxation

b) with a relaxation factor λ = 0.95

to solve the following system to a tolerance of $\varepsilon_s$ = 5%. If necessary, rearrange the equations to achieve convergence.

$$\begin{aligned}
-3x_1 + x_2 + 12x_3 &= 50 \\
6x_1 - x_2 - x_3 &= 3 \\
6x_1 + 9x_2 + x_3 &= 40
\end{aligned}$$

You might also like

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- Rsa (Cryptosystem) : RsaisaDocument10 pagesRsa (Cryptosystem) : RsaisaXerus AnatasNo ratings yet

- Process To Manufacture 1000kg/h of Methyl Ethyl Ketone From Dehydrogenation of 2-ButanolDocument51 pagesProcess To Manufacture 1000kg/h of Methyl Ethyl Ketone From Dehydrogenation of 2-Butanolstephenbwogora95% (21)

- CRYPTOGRAPHYDocument2 pagesCRYPTOGRAPHYmovie REGION 10No ratings yet

- Mek L&eDocument154 pagesMek L&eRoseAnne BellaNo ratings yet

- Soy Sauce 2Document39 pagesSoy Sauce 2Cahaya FitrahkuNo ratings yet

- ICIS Chemical Business Jun 7-Jun 13, 2010 277, 20 ABI/INFORM CompleteDocument1 pageICIS Chemical Business Jun 7-Jun 13, 2010 277, 20 ABI/INFORM CompleteCahaya FitrahkuNo ratings yet

- Task Chap10&11Document3 pagesTask Chap10&11Cahaya FitrahkuNo ratings yet

- Assignment Envi Tinggal Anep JeDocument43 pagesAssignment Envi Tinggal Anep JeCahaya FitrahkuNo ratings yet

- Lab 1 InstrumentDocument20 pagesLab 1 InstrumentCahaya FitrahkuNo ratings yet

- Week10&11 Differential EquationsDocument34 pagesWeek10&11 Differential EquationsCahaya FitrahkuNo ratings yet

- 5.2.1 Exple Resume A Mining Position v0.2Document2 pages5.2.1 Exple Resume A Mining Position v0.2Cahaya FitrahkuNo ratings yet

- Lab 1 InstrumentDocument20 pagesLab 1 InstrumentCahaya FitrahkuNo ratings yet

- Week12&13 Optimization PDFDocument33 pagesWeek12&13 Optimization PDFnoorhafiz07No ratings yet

- UV Result and DiscussionDocument1 pageUV Result and DiscussionCahaya FitrahkuNo ratings yet

- Chap 4 - Engineered Systems For WWT PDFDocument11 pagesChap 4 - Engineered Systems For WWT PDFCahaya FitrahkuNo ratings yet

- Lab 3 PDFDocument16 pagesLab 3 PDFomglikeseriouslyNo ratings yet

- NP CompleteDocument8 pagesNP CompleteshastryNo ratings yet

- Simplified DESDocument13 pagesSimplified DESmagesswaryNo ratings yet

- (JCAM 122) Brezinski C.-Numerical Analysis 2000. Interpolation and Extrapolation. Volume 2 (2000)Document355 pages(JCAM 122) Brezinski C.-Numerical Analysis 2000. Interpolation and Extrapolation. Volume 2 (2000)Afolabi Eniola AbiolaNo ratings yet

- Task 1: Cryptography (Crypto) : InputDocument3 pagesTask 1: Cryptography (Crypto) : InputDũng MinhNo ratings yet

- A Gentle Introduction To Mini-Batch Gradient Descent and How To Configure Batch SizeDocument16 pagesA Gentle Introduction To Mini-Batch Gradient Descent and How To Configure Batch SizerajeevsahaniNo ratings yet

- Data Science SyllabusDocument3 pagesData Science Syllabusmohammed habeeb VullaNo ratings yet

- معامل تضخم التباين PDFDocument19 pagesمعامل تضخم التباين PDFAmani100% (1)

- Animal Model in R - Example MrodeDocument6 pagesAnimal Model in R - Example Mrodemarco antonioNo ratings yet

- Geostatistical Clustering Potential Modeling and Traditional Geostatistics A New Coherent WorkflowDocument8 pagesGeostatistical Clustering Potential Modeling and Traditional Geostatistics A New Coherent WorkflowEfraim HermanNo ratings yet

- Assign 3Document1 pageAssign 3Abhishek VermaNo ratings yet

- Index of Lab Programs (Amity University 1st Semester)Document4 pagesIndex of Lab Programs (Amity University 1st Semester)Rushan KhanNo ratings yet

- Solve Systems All Methods Mixed Review PDFDocument10 pagesSolve Systems All Methods Mixed Review PDFYeit Fong TanNo ratings yet

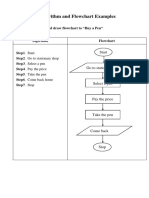

- Write Algorithm and Draw Flowchart To "Buy A Pen"Document7 pagesWrite Algorithm and Draw Flowchart To "Buy A Pen"akhilesh sahooNo ratings yet

- EE3054 Signals and Systems: Continuous Time ConvolutionDocument29 pagesEE3054 Signals and Systems: Continuous Time Convolutionman-895142No ratings yet

- Breaking The Coppersmith-Winograd Barrier For Matrix Multiplication AlgorithmsDocument73 pagesBreaking The Coppersmith-Winograd Barrier For Matrix Multiplication AlgorithmsAWhNo ratings yet

- Decision TreesDocument52 pagesDecision TreeslailaNo ratings yet

- SteganographyDocument11 pagesSteganographyashaNo ratings yet

- Randomness in AnyLogic ModelsDocument22 pagesRandomness in AnyLogic ModelsSergiy OstapchukNo ratings yet

- A Gentle Introduction To Neural Machine TranslationDocument14 pagesA Gentle Introduction To Neural Machine TranslationDragan ZhivaljevikjNo ratings yet

- Key Management LifecycleDocument55 pagesKey Management LifecycleSherif SalamaNo ratings yet

- Parameter Optimization of Single Exponential Smoothing Using Golden Section Method For Groceries ForecastingDocument9 pagesParameter Optimization of Single Exponential Smoothing Using Golden Section Method For Groceries ForecastingGoval SirviandoNo ratings yet

- ISYE 8803 - Kamran - M1 - Intro To HD and Functional Data - UpdatedDocument87 pagesISYE 8803 - Kamran - M1 - Intro To HD and Functional Data - UpdatedVida GholamiNo ratings yet

- Sorting: Data Structures and Algorithms in JavaDocument67 pagesSorting: Data Structures and Algorithms in JavaNguyễn Tuấn KhanhNo ratings yet

- Ai AssignmentDocument53 pagesAi AssignmentSoum SarkarNo ratings yet

- BSCS PPT Daa N01Document38 pagesBSCS PPT Daa N01Jacque Landrito Zurbito100% (1)

- Designing Machine Learning Workflows in Python Chapter4Document38 pagesDesigning Machine Learning Workflows in Python Chapter4FgpeqwNo ratings yet

- Block 1-Data Handling Using Pandas DataFrameDocument17 pagesBlock 1-Data Handling Using Pandas DataFrameBhaskar PVNNo ratings yet

- Syllabus - CS 231N PDFDocument1 pageSyllabus - CS 231N PDFvonacoc49No ratings yet