Springer - Turbo-Like Codes

00-Abbasfar-Prelims SDO003-abbasfar (Typeset by spi publisher services, Delhi) i of xviii August 1, 2007 13:41

Turbo-like Codes


Aliazam Abbasfar

Turbo-like Codes

Design for High Speed Decoding


A C.I.P. Catalogue record for this book is available from the Library of Congress.

ISBN-10 9781402063903

ISBN-13 9781402063909

Published by Springer,

P.O. Box 17, 3300 AA Dordrecht, The Netherlands.

www.springeronline.com

Printed on acid-free paper

All Rights Reserved

© 2007

No part of this work may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, microfilming, recording or otherwise, without written permission from the Publisher, with the exception of any material supplied specifically for the purpose of being entered and executed on a computer system, for exclusive use by the purchaser of the work.


Dedicated to my wife


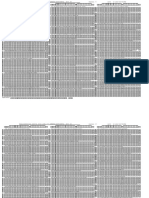

Contents

List of Figures
List of Tables
Acknowledgments
Abstract
1 Introduction
    1.1 Outline
2 Turbo Concept
    2.1 Turbo Codes and Turbo-like Codes
        2.1.1 Turbo Codes
        2.1.2 Repeat-Accumulate Codes
        2.1.3 Product Codes
    2.2 Iterative Decoding
    2.3 Probability Propagation Algorithms
    2.4 Message-passing Algorithm
    2.5 Graphs with Cycles
    2.6 Codes on Graph
        2.6.1 Parity-check Codes
        2.6.2 Convolutional Codes
        2.6.3 Turbo Codes
3 High-speed Turbo Decoders
    3.1 Introduction
    3.2 BCJR Algorithm
    3.3 Turbo Decoding
    3.4 Pipelined Turbo Decoder
    3.5 Parallel Turbo Decoder
    3.6 Speed Gain and Efficiency
        3.6.1 Definitions
        3.6.2 Simulation Results
    3.7 Interleaver Design
        3.7.1 Low Latency Interleaver Structure
        3.7.2 Interleaver Design Algorithm
        3.7.3 Simulation Results
    3.8 Hardware Complexity
    3.9 Conclusion
4 Very Simple Turbo-like Codes
    4.1 Introduction
        4.1.1 Bounds on the ML Decoding Performance of Block Codes
        4.1.2 Density Evolution Method
    4.2 RA Codes
        4.2.1 ML Analysis
        4.2.2 DE Analysis
    4.3 RA Codes with Puncturing
        4.3.1 ML Analysis
        4.3.2 Performance of Punctured RA Codes with ML Decoding
        4.3.3 DE Analysis
    4.4 ARA Codes
        4.4.1 ML Analysis
        4.4.2 Performance of ARA Codes with ML Decoding
        4.4.3 DE Analysis
    4.5 Other Precoders
        4.5.1 Accumulator with Puncturing
    4.6 Hardware Complexity
    4.7 Conclusion
5 High Speed Turbo-like Decoders
    5.1 Introduction
    5.2 Parallel ARA Decoder
    5.3 Speed Gain and Efficiency
    5.4 Interleaver Design
    5.5 Projected Graph
        5.5.1 Parallel Turbo Decoder
        5.5.2 Other Known Turbo-like Codes
        5.5.3 Parallel LDPC Codes
        5.5.4 More Accumulate-Repeat-Accumulate Codes
    5.6 General Hardware Architecture
    5.7 Conclusion
References
Index


List of Figures

1 The block diagram of a PCCC encoder
2 The block diagram of a SCCC encoder
3 An example of a HCCC encoder
4 Repeat-Accumulator code block diagram
5 Block diagram of a product code
6 The iterative turbo decoding block diagram
7 Examples of Tanner graphs: (a) tree (b) with cycles
8 Probabilistic graphs
9 Variable x and its connections in the graph
10 One constraint node and its connections in graph
11 A tree graph
12 Tanner graph for Hamming code, H(7,4)
13 Tanner graph for regular LDPC (3,5)
14 Convolutional code Tanner graph
15 The Tanner graph of Convolutional codes with state variables
16 A trellis section
17 An example of the graph of a PCCC
18 The messages in a convolutional code
19 Block diagram of the SISO
20 Timing diagram of the traditional SISO
21 Message passing between the constituent codes of turbo codes
22 The iterative decoding structure
23 Pipelined turbo decoder
24 Parallel turbo decoder structure
25 Timing diagram of the parallel SISOs
26 Timing diagram of the parallel SISOs in vector notation
27 Partitioned graph of a simple PCCC
28 Parallel turbo decoder with shared processors for two constituent codes
29 Performances of parallel decoder
30 Efficiency and speed gain
31 Efficiency vs. signal to noise ratio
32 (a) Bit sequence in matrix form (b) after row interleaver (c) A conflict-free interleaver (d) Bit sequence in sequential order (e) The conflict-free interleaved sequence
33 Data and extrinsic sequences in two consecutive iterations for turbo decoder with reverse interleaver
34 Sequences in two consecutive iterations for parallel turbo decoder with reverse interleaver
35 Scheduling diagram of the parallel decoder
36 The flowchart of the algorithm
37 Performance comparison for B = 1,024
38 Performance comparison for B = 4,096
39 (a) alpha recursion (b) beta recursion (c) Extrinsic computation
40 Probability density function of messages in different iterations
41 Constituent code model for density evolution
42 Constituent code model for density evolution
43 SNR improvement in iterative decoding
44 Repeat-Accumulator code block diagram
45 Density evolution for RA codes (q = 3)
46 Accumulator with puncturing and its equivalent for p = 3
47 Block diagram of accumulator with puncturing
48 Block diagram of check_4 code and its equivalents
49 Normalized distance spectrum of RA codes with puncturing
50 Density evolution for RA codes with puncturing (q = 4, p = 2)
51 The block diagram of the precoder
52 ARA(3,3) BER performance bound
53 ARA(4,4) BER performance bound
54 Normalized distance spectrum of ARA codes with puncturing
55 Density evolution for ARA codes with puncturing (q = 4, p = 2)
56 Performance of ARA codes using iterative decoding
57 The block diagram of the new precoder
58 Tanner graph for new ARA code
59 Performance of the new ARA code
60 The partitioned graph of ARA code
61 Parallel turbo decoder structure
62 Projected graph
63 Projected graph with conflict-free interleaver
64 A PCCC projected graph with conflict-free interleaver
65 (a) PCCC with 3 component codes (b) SCCC (c) RA(3) (d) IRA(2,3)
66 A parallel LDPC projected graph
67 Simple graphical representation of a LDPC projected graph
68 ARA code without puncturing
69 (a) Rate 1/3 ARA code (b) rate 1/2 ARA code
70 (a) Rate 1/2 ARA code (b) New rate 1/3 ARA code (c) New rate 1/4 ARA code
71 Improved rate 1/2 ARA codes
72 Irregular rate 1/2 ARA codes
73 Irregular ARA code family for rate >1/2
74 Parallel decoder hardware architecture
75 Window processor hardware architecture


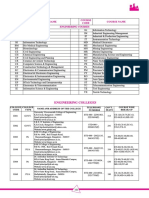

List of Tables

I Probability Definitions
II State Constraint
III The Decoder Parameters
IV Characteristic Factors for the Parallel Decoder @SNR = 0.7 dB (BER = 10E-8)
V An Example of the Interleaver
VI Cut-off Thresholds for RA Codes with Puncturing
VII Cut-off Threshold for Rate 1/2 ARA Codes
VIII Cutoff Threshold for ARA Codes with Rate <1/2
IX Cutoff Threshold for Improved ARA Codes with Rate <1/2
X Cutoff Threshold for ARA Codes with Rate >1/2


Acknowledgments

First and foremost, I would like to express my deepest gratitude to my wife for her patience and sacrifices throughout this research. She has been a constant source of assistance, support, and encouragement. My heartfelt thanks go to my parents for their generous love, encouragement, and prayers. Their sacrifices have been my inspiration throughout my career and I am deeply indebted to them in all my successes and accomplishments. Words cannot express the deep feeling of gratitude I have for my family.

There are so many people that I would like to thank for making my experience at UCLA truly one of a kind. I would like to thank my advisor Professor Kung Yao for all the help, support, and opportunities he has provided me over these years. His valuable advice, guidance, and unconditional support helped me overcome all the challenges of the doctoral process. I also want to thank Dr. Flavio Lorenzelli for his support and fruitful discussions throughout my research.

My special thanks go to Dr. Dariush Divsalar, who has been the motivating force behind my going into the specific field of channel coding. I have gained most of my knowledge in the field from discussions with him. He has had a profound effect on my Ph.D. both as a mentor and a colleague.

Finally, I would also like to thank Professor Parviz Jabehdar-Maralani of the University of Tehran for his continued support and encouragement.


Abstract

The advent of turbo codes has sparked tremendous research activity around the theoretical and practical aspects of turbo codes and turbo-like codes. The crucial novelty in these codes is the iterative decoding.

In this work, first a novel high-speed turbo decoder is presented that exploits parallelization. Parallelism is achieved very efficiently by exploiting the message-passing algorithm. It has been shown that very large speed gains can be achieved by this scheme while the efficiency is maintained reasonably high. Memory access, which poses a practical problem for the proposed parallel turbo decoder, is solved by introducing the conflict-free interleaver. The latency is further improved by designing a special kind of conflict-free interleaver. Furthermore, an algorithm to design such an interleaver is presented. Simulation results show that the performance of the turbo code is not sacrificed by using the interleaver with the proposed structure.

Although turbo codes have near Shannon-capacity performance and the proposed architecture for the parallel turbo decoder provides very efficient and highly regular hardware, the circuit is still very complex and demanding for very high-speed decoding. Therefore, it becomes necessary to find turbo-like codes that not only achieve excellent error correction capability, but are also very simple. As a result, a class of new codes for different rates and block sizes, called Accumulate-Repeat-Accumulate (ARA) codes, was invented during this search. The performance of ARA codes is analyzed, and it has been shown that some ARA codes perform very close to random codes, which achieve the Shannon limit.

The architecture for a high-speed ARA decoder is presented and practical issues are discussed. This leads us to a general class of turbo-like codes with parallelism capability, i.e. codes with projected graphs. It is shown that the parallel turbo decoder discussed earlier is in the same class. The projected graph provides a powerful and yet simple method for designing parallelizable turbo-like codes.


Chapter 1

Introduction

Efficient and reliable data communication over noisy channels has been pursued for many decades. Applications such as wire-line modems, wireless and satellite communications, Internet data transfer, digital radio broadcasting, and data storage devices are only a few examples that have accelerated the development of data communication systems.

The issue of efficiency and reliability in communication systems was fundamentally addressed by Shannon [30] in 1948. Shannon introduced the capacity (C) of a noisy channel, which determines the maximum data rate (R) that can be transferred over the channel reliably (i.e. with arbitrarily small error probability). In other words, for any rate R < C there exists a coding scheme of rate R with arbitrarily small error probability. The proof was nonconstructive, which means that it does not give any method for constructing capacity-approaching codes.

The pursuit of capacity-approaching codes took almost 50 years. The introduction of turbo codes by Berrou, Glavieux, and Thitimajshima [9] was a major breakthrough in the world of practical capacity-approaching codes, causing a revolution in the field of error-correcting codes. As a result, several other classes of capacity-approaching codes were rediscovered or invented, including Low-Density Parity-Check (LDPC) codes [14], Repeat-Accumulate (RA) codes [12], and product codes.

The trend in data communications is towards high data rate applications, which require high-speed decoding. Although excellent codes have been proposed and efficient decoding algorithms for them are known, the design of codes that are suitable for high-speed applications is still a challenging task. This includes the design of high-speed decoding architectures, as well as low-complexity codes, which are naturally more suitable for parallelism.

1.1 Outline

The advent of turbo codes has sparked tremendous research activities around the

theoretical and practical aspects of turbo codes and turbo-like codes. This study

introduces different types of turbo codes and turbo-like codes. These codes include

the Parallel Concatenated Convolutional Code (PCCC), originally introduced by

Berrou [9], Serial Concatenated Convolutional Codes (SCCC) later introduced in

[7], RA codes [12], and product codes.

Aliazam Abbasfar, Turbo-Like Codes, 1-3. © Springer 2007


The common property among turbo-like codes is that they consist of very simple constituent codes that are connected to each other with random or pseudorandom interleavers. The crucial novelty in these codes is the iterative decoding. This means that the constituent codes are decoded separately, which is efficient and practically feasible since they are very simple codes. Then, they pass new information to each other over the course of a few iterations.

It has been shown that iterative decoding is a generalization of the well-known probability or belief propagation algorithm. The belief propagation algorithm, which has been essential for the development of new ideas throughout this work, is described in the context of coding. The basic theorems for this algorithm are explained and proven in the following paragraphs. This is then followed by a description of the computational algorithm. The probability propagation algorithm is proven for tree-structured graphs, i.e. graphs without any cycle. In fact, the graphical representation of any problem solved by this algorithm is the centerpiece of the algorithm. The generalization of the algorithm to graphs with cycles is presented later on.

Representation of codes on graph is the next step towards characterization of the

iterative decoding as an example of the probability propagation algorithm. The graph

representations are presented for a few codes that are commonly used in turbo-like

codes.

In Chapter 3, first the traditional turbo decoder is introduced. Second, a novel high-speed turbo decoder is presented that exploits parallelization. Parallelism is achieved very efficiently by exploiting the message-passing algorithm. Finally, two characterization factors for the proposed parallel turbo decoder are investigated: speed gain and efficiency. It has been shown by simulations that very large speed gains can be achieved by this scheme while the efficiency is maintained reasonably high.

Memory access poses a practical problem for the proposed parallel turbo decoder. This problem is solved in the next section, where the conflict-free interleaver is introduced to address the memory access problem. The latency is further improved by designing a special kind of conflict-free interleaver. Lastly, an algorithm to design such an interleaver is presented. Simulation results show that the performance of the turbo code is not sacrificed by using this interleaver.

Hardware complexity, which is of major concern, is investigated and the overall

architecture of the hardware for high-speed turbo decoder is presented.

Although turbo codes have near Shannon-capacity performance and the proposed architecture for the parallel turbo decoder gives very efficient and highly regular hardware, the circuit is still very complex and demanding for very high-speed decoding. Therefore, Chapter 4 examines simple turbo-like codes that can achieve excellent error correction capability. In order to investigate the performance of the turbo-like codes, some useful analysis tools are used. Some maximum likelihood (ML) performance bounds, which evaluate the performance of codes under ML decoding, are briefly explained. The density evolution (DE) method, which analyzes the behavior of turbo-like codes under iterative decoding, is also described.


The RA code provided valuable insight in the search for low-complexity turbo-like codes. A class of new codes for different rates and block sizes, called ARA codes, was invented during this search. The performance of ARA codes is analyzed and some are shown to perform very close to random codes, which achieve the Shannon limit. The simplicity of ARA codes allows us to build a high-speed ARA decoder with very low complexity hardware.

Chapter 5 first presents the architecture for a high-speed ARA decoder and then discusses practical issues. This leads us to a general structure for turbo-like codes with parallelization capability. The concept of the projected graph is presented. In fact, codes with projected graphs comprise a class of turbo-like codes. The parallel turbo decoder discussed earlier is in the same class. The projected graph provides a powerful and yet simple method for designing parallelizable turbo-like codes, which is used in future research.

Finally, future research directions are discussed in light of this study's findings.


Chapter 2

Turbo Concept

2.1 Turbo Codes and Turbo-like Codes

2.1.1 Turbo Codes

Turbo code introduced by Berrou [9] is a PCCC, which consists of two or more

convolutional codes encoding different permuted versions of a block of information.

A block diagram of a PCCC turbo encoder is shown in Figure 1.

Each convolutional code is called a constituent code. Constituent codes may be the same or different, systematic or nonsystematic. However, they should be of recursive type in order to have good performance. In most cases $C_0$ is a systematic code, which means that the input sequence is transmitted along with the coded sequences; the overall code is then systematic too. If the code rates of the constituent codes are $r_0, r_1, \ldots, r_n$, then the overall rate is $r_0 \| r_1 \| \cdots \| r_n$, where $\|$ combines rates like parallel resistors, i.e. $1/r = 1/r_0 + 1/r_1 + \cdots + 1/r_n$.

Later on SCCC were introduced in [7]. A block diagram of a SCCC is drawn in

Figure 2.

The block of information is encoded by the first constituent code. Then the output is interleaved and encoded by the second constituent code, and so on. The output of the last stage is sent over the communication channel. If the code rates of the constituent codes are $r_1, r_2, \ldots, r_n$, the overall rate is $r_1 r_2 \cdots r_n$. To obtain a systematic code, all constituent codes should be systematic.
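The two rate rules stated above (parallel and serial concatenation) can be checked with a few lines of arithmetic. The following is only a minimal sketch: the function names and the example rates are illustrative assumptions, not values taken from the text, and the parallel rule is implemented as the "parallel resistors" reading $1/r = \sum_i 1/r_i$.

```python
from fractions import Fraction
from math import prod

def parallel_rate(rates):
    """Overall rate of a parallel concatenation: 1/r = sum of 1/r_i."""
    return 1 / sum(1 / Fraction(r) for r in rates)

def serial_rate(rates):
    """Overall rate of a serial concatenation: r = product of the r_i."""
    return prod((Fraction(r) for r in rates), start=Fraction(1))

# Assumed example: a rate-1/2 systematic code C0 in parallel with a
# rate-1 code C1 that contributes parity bits only.
pccc = parallel_rate([Fraction(1, 2), Fraction(1)])

# Assumed example: a rate-1/2 outer code followed by a rate-2/3 inner code.
sccc = serial_rate([Fraction(1, 2), Fraction(2, 3)])

print(pccc, sccc)
```

Exact fractions are used instead of floats so that the combined rates can be compared without rounding error.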

We can combine the PCCC and SCCC codes to come up with various Hybrid

Concatenated Convolutional Codes (HCCCs). Such a HCCC is shown in Figure 3.

We can extend the above-described turbo codes to obtain more generalized codes.

In the above codes all the constituent codes are convolutional. If we remove this

limitation and let them be arbitrary block codes, then we will have a broader class

of codes called turbo-like codes. Some examples of turbo-like codes are given in the

sequel.

2.1.2 Repeat-Accumulate Codes

Fig. 1 The block diagram of a PCCC encoder

Fig. 2 The block diagram of a SCCC encoder

Fig. 3 An example of a HCCC encoder

Fig. 4 Repeat-Accumulator code block diagram (u → rep(q) → I → ACC; block lengths N, qN, qN, qN)

Fig. 5 Block diagram of a product code (u → row-wise block codes → row-column interleaver → column-wise block codes → c)

Perhaps the simplest type of turbo-like code is the RA code, which makes it very attractive for analysis. The general block diagram of this code is given in Figure 4. It is a serial concatenation of two constituent codes: a repetition code and an accumulate code. An information block of length N is repeated q times and interleaved to make a block of size qN, which is then passed through an accumulator. Using the rate formula for serial concatenated codes (the repetition code has rate 1/q and the accumulator has rate 1), the code rate of RA codes is 1/q.
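The repeat, interleave, accumulate chain described above can be sketched in a few lines. This is an illustrative sketch only: the function name `ra_encode`, the seeded random permutation, and the example block are assumptions, and the accumulator is modeled as a running XOR of its input bits.

```python
import random

def ra_encode(u, q, perm):
    """Repeat each bit q times, permute, then accumulate (running XOR)."""
    repeated = [b for b in u for _ in range(q)]      # length q*N
    interleaved = [repeated[p] for p in perm]        # apply the interleaver
    out, acc = [], 0
    for b in interleaved:
        acc ^= b                                     # accumulator state update
        out.append(acc)
    return out

# Assumed toy parameters: N = 4 information bits, repetition factor q = 3.
N, q = 4, 3
rng = random.Random(0)          # fixed seed so the interleaver is reproducible
perm = list(range(q * N))
rng.shuffle(perm)

u = [1, 0, 1, 1]
c = ra_encode(u, q, perm)
print(len(u), len(c))           # information and coded block lengths
```

The coded block is q times longer than the information block, which matches the stated rate of 1/q.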

2.1.3 Product Codes

The serial concatenation of two block codes with a row-column interleaver results in a product code. In fact, each block code consists of several identical smaller block codes that construct rows/columns of the code word. A block diagram of this code is shown in Figure 5.

2.2 Iterative Decoding

The crucial novelty in turbo codes is the introduction of iterative decoding. The other key component is the random or pseudorandom interleaver, which is discussed later. By exploiting these concepts, turbo codes achieve excellent performance with moderate complexity.

The iterative decoding algorithm is based on maximum a posteriori (MAP) estimation of the input sequence. However, since it is difficult to find the MAP solution by considering all the observations at the same time, the MAP decoding is performed on the observations of each constituent code separately. This is explained for a PCCC with two constituent codes. Since two codes have been produced from one input sequence, the a posteriori probability (APP) of the data bits coming from the first constituent decoder can be used by the second decoder and vice versa. Therefore the decoding process is carried out iteratively. At the beginning we do not have any information about the input sequence. The MAP decoding of the first constituent code is performed without any prior knowledge of the input sequence. This process generates the APPs of the input sequence bits, which can be exploited in the second decoder. The information passed to the other constituent decoder is called extrinsic information.

The BCJR algorithm [5] is an efficient algorithm that recursively computes the APPs for a convolutional code. In [6] a general unit, called SISO (soft-input soft-output), is introduced that generates the APPs in the most general case. Chapter 3 explains the BCJR algorithm based on decoding on graphs with tree structure.

Since the second constituent code uses the permuted version of the input sequence, the extrinsic information should also be permuted before being used by the second decoder. Likewise, the extrinsic information of the second decoder is to be permuted in reverse order for the next iteration of the first decoder. The iterative decoding block diagram is shown in Figure 6.
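The bookkeeping described above, permuting the extrinsic information on the way to the second decoder and undoing the permutation on the way back, can be sketched as follows. The SISO units themselves are not modeled here; the function names, the permutation, and the extrinsic values are all hypothetical and serve only to show the index handling.

```python
def interleave(values, perm):
    """Order seen by the second decoder: out[i] = values[perm[i]]."""
    return [values[p] for p in perm]

def deinterleave(values, perm):
    """Undo interleave(): values[i] goes back to position perm[i]."""
    out = [None] * len(values)
    for i, p in enumerate(perm):
        out[p] = values[i]
    return out

# Hypothetical interleaver and extrinsic values from the first decoder.
perm = [2, 0, 3, 1]
extrinsic_1 = [0.9, -1.2, 0.3, 2.1]

to_decoder_2 = interleave(extrinsic_1, perm)    # permuted for decoder 2
back = deinterleave(to_decoder_2, perm)         # reverse order for decoder 1
print(to_decoder_2, back)
```

In an actual decoder the second SISO would replace `to_decoder_2` with its own extrinsic output before the reverse permutation is applied; the round trip above only confirms that the two permutations are inverses of each other.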

Fig. 6 The iterative turbo decoding block diagram (two SISO units with observations y_1 and y_2, connected through the interleaver I and the deinterleaver I^{-1})

2.3 Probability Propagation Algorithms

It has been shown that iterative decoding is a generalization of the well-known probability or belief propagation algorithm. This algorithm was developed in the artificial intelligence and expert system literature, most notably by Pearl [25] and Lauritzen and Spiegelhalter [19]. The connection between Pearl's belief propagation algorithm and coding was first discovered by MacKay and Neal [22, 23], who showed that the Gallager algorithm [14] for decoding LDPC codes is essentially an instance of the belief propagation algorithm. McEliece et al. [24] independently showed that turbo decoding is also an instance of the belief propagation algorithm. We describe this algorithm in a way that is more suitable for coding applications.

If we have some variables that are related to each other, there is a bipartite graph

representation showing their dependence, which is called the Tanner graph [31]. The

nodes are divided between variable nodes (circles) and constraint nodes (squares).

Two examples of such graphs are shown in Figure 7.
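To make the tree versus cycle distinction concrete, the sketch below builds the bipartite graph of a parity-check matrix and tests it for cycles with a union-find structure. The helper names and both example matrices are assumptions chosen for illustration; the second matrix is one valid parity-check matrix of a (7,4) Hamming code, not necessarily the one used later in the book.

```python
def tanner_edges(H):
    """List the (constraint, variable) edges of the Tanner graph of H."""
    return [(j, i) for j, row in enumerate(H) for i, h in enumerate(row) if h]

def has_cycle(H):
    """Union-find test: an edge joining two connected nodes closes a cycle."""
    n_checks, n_vars = len(H), len(H[0])
    parent = list(range(n_checks + n_vars))   # constraints first, then variables

    def find(a):
        while parent[a] != a:
            parent[a] = parent[parent[a]]     # path halving
            a = parent[a]
        return a

    for j, i in tanner_edges(H):
        root_c, root_v = find(j), find(n_checks + i)
        if root_c == root_v:
            return True
        parent[root_c] = root_v
    return False

# Assumed examples: a small chain-shaped graph and a (7,4) Hamming code.
H_tree = [[1, 1, 0],
          [0, 1, 1]]
H_hamming = [[1, 1, 1, 0, 1, 0, 0],
             [1, 1, 0, 1, 0, 1, 0],
             [1, 0, 1, 1, 0, 0, 1]]

print(has_cycle(H_tree), has_cycle(H_hamming))
```

Each row of the matrix plays the role of a constraint node (square) and each column the role of a variable node (circle).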

If we have some noisy observation of the variables, then it becomes a probabilistic graph. In some communication systems some of the variables are not sent through the channel; hence, there is no observation available for them at the receiver. Therefore, we should distinguish between observed and unobserved variables. Two examples of probabilistic graphs are shown in Figure 8.

We denote all variables with vector x and their observations with vector y. Some useful definitions are listed in Table I.

Fig. 7 Examples of Tanner graphs: (a) tree, (b) with cycles

Fig. 8 Probabilistic graphs: (a) tree, (b) loopy. The legend distinguishes constraint nodes, unobserved variable nodes, and observed variable nodes.

The probability propagation algorithm is proven for graphs with a tree structure. Given a graphical code model, the probability propagation algorithm is used to compute the APP of the variables very efficiently. However, for graphs with cycles there is no known efficient algorithm for computing the exact APPs, which makes exact computation practically infeasible. The probability propagation algorithm can be extended to graphs with cycles by proceeding with the algorithm as if there were no cycles in the graph. Although there is no solid proof of convergence in this case, it usually gives excellent results in practice. The performance of turbo codes is a testimony to the effectiveness of the algorithm. The following theorems are helpful in explaining the algorithm.

Theorem 1: In a probabilistic tree, the likelihood of each variable can be decomposed into the local likelihood and some independent terms, which are called messages or extrinsics in the case of turbo decoding.

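We prove this theorem using the graph shown in Figure 9.

In Figure 9 we have lumped all the variables connecting to an edge into one vector and denoted them and their observations as $\mathbf{x}_1, \mathbf{x}_2, \mathbf{x}_3, \mathbf{y}_1, \mathbf{y}_2, \mathbf{y}_3$; i.e. $\mathbf{x} = \{x, \mathbf{x}_1, \mathbf{x}_2, \mathbf{x}_3\}$, $\mathbf{y} = \{y, \mathbf{y}_1, \mathbf{y}_2, \mathbf{y}_3\}$. The likelihood of variable $x$ is determined by marginalization of $\mathbf{x}$. We have

$$P(\mathbf{y} \mid x) = \sum_{\mathbf{x}_1,\mathbf{x}_2,\mathbf{x}_3} P(\mathbf{x}_1, \mathbf{x}_2, \mathbf{x}_3, \mathbf{y} \mid x) = \sum_{\mathbf{x}_1,\mathbf{x}_2,\mathbf{x}_3} P(\mathbf{x}_1, \mathbf{y}_1, \mathbf{x}_2, \mathbf{y}_2, \mathbf{x}_3, \mathbf{y}_3 \mid x)\, P(y \mid x) \tag{1}$$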

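Table I Probability Definitions

| Definition | Probability |
| --- | --- |
| Likelihood of $\mathbf{x}$ | $P(\mathbf{y} \mid \mathbf{x})$ |
| Likelihood of $x$ | $P(\mathbf{y} \mid x)$ |
| Local likelihood of $x$ | $P(y \mid x)$ |
| Local reliability of $x$ | $P(x, y) = P(y \mid x)P(x)$ |
| Reliability of $x$ | $P(x, \mathbf{y}) = P(\mathbf{y} \mid x)P(x)$ |
| A Posteriori Probability (APP) of $x$ | $P(x \mid \mathbf{y}) = P(x, \mathbf{y})/P(\mathbf{y})$ |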

Fig. 9 Variable x and its connections in the graph

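It can be simplified as follows:

$$P(\mathbf{y} \mid x) = \left[\sum_{\mathbf{x}_1} P(\mathbf{x}_1, \mathbf{y}_1 \mid x)\right] \left[\sum_{\mathbf{x}_2} P(\mathbf{x}_2, \mathbf{y}_2 \mid x)\right] \left[\sum_{\mathbf{x}_3} P(\mathbf{x}_3, \mathbf{y}_3 \mid x)\right] P(y \mid x) \tag{2}$$

$$P(\mathbf{y} \mid x) = P(\mathbf{y}_1 \mid x)\, P(\mathbf{y}_2 \mid x)\, P(\mathbf{y}_3 \mid x)\, P(y \mid x) \tag{3}$$

$$P(\mathbf{y} \mid x) = IM_1(x)\, IM_2(x)\, IM_3(x)\, P(y \mid x)$$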

In the above formula we have three incoming messages (IM), each one coming from one part of the graph, i.e. a subgraph. Since the variable is connected to each subgraph by one edge, there is a correspondence between the IMs and the edges connected to a variable node. In other words, the IMs are communicated along the edges of the graph towards the variable nodes.

As the mathematical expression shows, each incoming message is the likelihood of the variable x given only the observations that are connected to x via the corresponding edge. Since the graph is a tree, for every variable node a decomposition of the graph into disjoint subgraphs is always possible. That proves Theorem 1.

Furthermore, we define the outgoing messages (OM) as follows:

$$OM_1(x) = P(\mathbf{y}_2, \mathbf{y}_3, y \mid x) = IM_2(x)\, IM_3(x)\, P(y \mid x) \tag{4}$$

$$OM_2(x) = P(\mathbf{y}_1, \mathbf{y}_3, y \mid x) = IM_1(x)\, IM_3(x)\, P(y \mid x) \tag{5}$$

$$OM_3(x) = P(\mathbf{y}_1, \mathbf{y}_2, y \mid x) = IM_1(x)\, IM_2(x)\, P(y \mid x) \tag{6}$$

Therefore, we have

$$P(\mathbf{y} \mid x) = IM_1(x)\, OM_1(x) = IM_2(x)\, OM_2(x) = IM_3(x)\, OM_3(x) \tag{7}$$

These messages are communicated along the edges emanating from variable x towards the constraint nodes. Because every edge of the graph connects one variable node to one constraint node, there are two messages related to each edge: one incoming and the other outgoing. Therefore the message indices can be regarded as edge indices. We will use these messages in Theorem 2.
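The relations (4) to (7) can be checked numerically with a minimal sketch of a variable-node update. The function name `variable_node_update` and the numbers are illustrative assumptions, not taken from the text; messages are stored as plain lists indexed by the value of the variable.

```python
from math import prod

def variable_node_update(local_likelihood, incoming):
    """Return (outgoing messages, total likelihood) for one variable node.

    local_likelihood: list of P(y | x = v) for each value v of x
    incoming:         list of incoming messages, one list per edge
    """
    n_values = len(local_likelihood)
    outgoing = []
    for k in range(len(incoming)):
        # OM_k(x): local likelihood times every IM except the one on edge k
        om = [
            local_likelihood[v]
            * prod(im[v] for j, im in enumerate(incoming) if j != k)
            for v in range(n_values)
        ]
        outgoing.append(om)
    # Total likelihood: local likelihood times all IMs
    total = [
        local_likelihood[v] * prod(im[v] for im in incoming)
        for v in range(n_values)
    ]
    return outgoing, total

# Hypothetical binary variable with three edges
local = [0.6, 0.4]
ims = [[0.9, 0.1], [0.5, 0.5], [0.2, 0.8]]
oms, total = variable_node_update(local, ims)
```

For every edge k the product of `ims[k]` and `oms[k]` reproduces `total`, which is the statement of equation (7).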

Fig. 10 One constraint node and its connections in graph

It should be noted that messages are a function of the variable. If the variable

is a quantized variable, the messages should be computed for all the levels of the

variable. For example for binary variables the message includes the likelihood of

being 0 and 1. However, in practice the likelihood ratio is used to simplify the

operations and to save the memory for storage.

Theorem 2: For each constraint node the IM on one edge can be computed from the

OMs on the other edges connected to the constraint node.

We prove this theorem using the graph shown in Figure 10.

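In Figure 10 we have lumped all the variables connecting to an edge into one vector and denoted them and their observations as $\mathbf{x}_1, \mathbf{x}_2, \mathbf{x}_3, \mathbf{y}_1, \mathbf{y}_2, \mathbf{y}_3$. Each edge is directly connected to one variable, which is denoted by $x_1 \in \mathbf{x}_1$, $x_2 \in \mathbf{x}_2$, and $x_3 \in \mathbf{x}_3$. Therefore, we have $\mathbf{x}_1 = \{x_1, \mathbf{x}'_1\}$, $\mathbf{x}_2 = \{x_2, \mathbf{x}'_2\}$, and $\mathbf{x}_3 = \{x_3, \mathbf{x}'_3\}$. The likelihood of variable $x$ is determined by the marginalization of $\mathbf{x}$. We have

$$
\begin{aligned}
IM(x) &= P(\mathbf{y}_1, \mathbf{y}_2, \mathbf{y}_3 \mid x) = \sum_{\mathbf{x}_1,\mathbf{x}_2,\mathbf{x}_3} P(\mathbf{x}_1, \mathbf{y}_1, \mathbf{x}_2, \mathbf{y}_2, \mathbf{x}_3, \mathbf{y}_3 \mid x) \\
&= \sum_{\mathbf{x}_1,\mathbf{x}_2,\mathbf{x}_3} P(\mathbf{x}'_1, \mathbf{y}_1, \mathbf{x}'_2, \mathbf{y}_2, \mathbf{x}'_3, \mathbf{y}_3 \mid x_1, x_2, x_3)\, P(x_1, x_2, x_3 \mid x) \\
&= \sum_{x_1,x_2,x_3}\ \sum_{\mathbf{x}'_1,\mathbf{x}'_2,\mathbf{x}'_3} P(\mathbf{x}'_1, \mathbf{y}_1, \mathbf{x}'_2, \mathbf{y}_2, \mathbf{x}'_3, \mathbf{y}_3 \mid x_1, x_2, x_3)\, P(x_1, x_2, x_3 \mid x) \\
&= \sum_{x_1,x_2,x_3} \left[\sum_{\mathbf{x}'_1} P(\mathbf{x}'_1, \mathbf{y}_1 \mid x_1)\right] \left[\sum_{\mathbf{x}'_2} P(\mathbf{x}'_2, \mathbf{y}_2 \mid x_2)\right] \left[\sum_{\mathbf{x}'_3} P(\mathbf{x}'_3, \mathbf{y}_3 \mid x_3)\right] P(x_1, x_2, x_3 \mid x) \\
&= \sum_{x_1,x_2,x_3} \left[ P(\mathbf{y}_1 \mid x_1)\, P(\mathbf{y}_2 \mid x_2)\, P(\mathbf{y}_3 \mid x_3)\, P(x_1, x_2, x_3 \mid x) \right] \\
&= \sum_{x_1,x_2,x_3} \left[ OM_1(x_1)\, OM_2(x_2)\, OM_3(x_3)\, P(x_1, x_2, x_3 \mid x) \right]
\end{aligned}
\tag{8}
$$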

As we see the IM is obtained by marginalizing the appropriate weighted product

of OMs. The weight function explicitly shows the effect of the constraint.
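As an illustration of Theorem 2, the sketch below computes one IM at a constraint node for binary variables. It assumes the weight function is an indicator of the allowed combinations (so the result is correct up to a scale factor), and the names `constraint_node_update` and `even_parity` are hypothetical.

```python
from itertools import product

def constraint_node_update(outgoing, target, constraint):
    """IM toward edge `target`, computed from the OMs on the other edges.

    outgoing:   list of [OM(0), OM(1)] per edge; the entry at `target` is ignored
    constraint: function taking a tuple of bits, True if the combination is allowed
    """
    n = len(outgoing)
    im = [0.0, 0.0]
    for bits in product((0, 1), repeat=n):
        if not constraint(bits):
            continue  # indicator weight: disallowed combinations contribute 0
        weight = 1.0
        for j, b in enumerate(bits):
            if j != target:
                weight *= outgoing[j][b]
        # Marginalize: accumulate on the value the target variable takes
        im[bits[target]] += weight
    return im

def even_parity(bits):
    """Parity-check constraint: the bits must sum to 0 modulo 2."""
    return sum(bits) % 2 == 0

# Hypothetical OMs on the three edges of a parity-check node
oms = [[0.5, 0.5], [0.9, 0.1], [0.2, 0.8]]
im0 = constraint_node_update(oms, 0, even_parity)
```

Enumerating all combinations is exponential in the node degree; it is used here only because it mirrors the marginalization in the proof directly.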

2.4 Message-passing Algorithm

Theorems 1 and 2 provide the basic operations needed for computing the reliability of all the variables in the graph in an efficient way. The algorithm is called message passing, which is essentially the marginalization algorithm. There are many variants of the algorithm that differ only in the scheduling of the computations. Two important versions of the algorithm are described in the sequel.

Efficient schedule: In this schedule the algorithm starts from the OMs for leaf vertices in the graph, which are simply the local likelihoods of the variables. Messages propagate from the leaves toward the inside of the graph and then back towards the leaves; this time they are IMs. A message is computed only when all the messages it requires are ready. The order of message computation for the graph shown in Figure 11 is:

$OM_0, OM_1, OM_2, OM_3, OM_4, OM_5, OM_6$

$IM_7, IM_8, IM_9$

$OM_{10}, OM_{11}, OM_{12}$

$IM_0, IM_{10}, IM_{11}, IM_{12}$

$OM_7, OM_8, OM_9$

$IM_1, IM_2, IM_3, IM_4, IM_5, IM_6$

Fig. 11 A tree graph

Flooding schedule: In this schedule all messages are initialized by the local likelihood of the variables. In each step all the messages are computed regardless of the location of the edge in the graph and the status of other messages. Although this schedule is not efficient in terms of computation, it is the fastest schedule that can be conceived. After several steps all the messages converge to the correct value. The maximum number of steps is the depth of the graph. However, in big graphs the number of steps needed for a given accuracy of the messages is much less than the depth of the graph. This scheduling is shown in the following. The bold messages are those that are final.

$OM_0, OM_1, OM_2, OM_3, OM_4, OM_5, OM_6, OM_7, OM_8, OM_9, OM_{10}, OM_{11}, OM_{12}$

$IM_0, IM_1, IM_2, IM_3, IM_4, IM_5, IM_6, IM_7, IM_8, IM_9, IM_{10}, IM_{11}, IM_{12}$

$OM_0, OM_1, OM_2, OM_3, OM_4, OM_5, OM_6, OM_7, OM_8, OM_9, OM_{10}, OM_{11}, OM_{12}$

$IM_0, IM_1, IM_2, IM_3, IM_4, IM_5, IM_6, IM_7, IM_8, IM_9, IM_{10}, IM_{11}, IM_{12}$

$OM_0, OM_1, OM_2, OM_3, OM_4, OM_5, OM_6, OM_7, OM_8, OM_9, OM_{10}, OM_{11}, OM_{12}$

$IM_0, IM_1, IM_2, IM_3, IM_4, IM_5, IM_6, IM_7, IM_8, IM_9, IM_{10}, IM_{11}, IM_{12}$
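The flooding schedule can be tried on a small example. The sketch below is an illustration under stated assumptions: the graph (two even-parity checks sharing one variable) and the likelihood values are hypothetical, the variables are binary, and the check-to-variable messages start at a neutral value of 1 while the variable-to-check messages start at the local likelihoods. Because the graph is a tree, the result should match a brute-force enumeration.

```python
from itertools import product
from math import prod

# Hypothetical tree-shaped Tanner graph: each check lists the variables it
# constrains (even parity). Variable 2 joins the two checks, so there is no cycle.
CHECKS = [(0, 1, 2), (2, 3, 4)]

def flooding(local, checks, steps):
    """Run `steps` flooding iterations; return unnormalized likelihoods."""
    edges = [(c, v) for c, vs in enumerate(checks) for v in vs]
    om = {(c, v): list(local[v]) for c, v in edges}   # variable -> check
    im = {(c, v): [1.0, 1.0] for c, v in edges}       # check -> variable
    for _ in range(steps):
        # Update every check-to-variable message at once (Theorem 2).
        new_im = {}
        for c, v in edges:
            others = [w for w in checks[c] if w != v]
            msg = [0.0, 0.0]
            for bits in product((0, 1), repeat=len(others)):
                x = sum(bits) % 2  # value of v that keeps the parity even
                msg[x] += prod(om[(c, w)][b] for w, b in zip(others, bits))
            new_im[(c, v)] = msg
        im = new_im
        # Update every variable-to-check message at once (Theorem 1).
        om = {
            (c, v): [
                local[v][x]
                * prod(im[(d, w)][x] for d, w in edges if w == v and d != c)
                for x in (0, 1)
            ]
            for c, v in edges
        }
    return [
        [local[v][x] * prod(im[(c, w)][x] for c, w in edges if w == v)
         for x in (0, 1)]
        for v in range(len(local))
    ]

def brute_force(local, checks):
    """Exact likelihoods by enumerating every assignment satisfying all checks."""
    out = [[0.0, 0.0] for _ in local]
    for bits in product((0, 1), repeat=len(local)):
        if all(sum(bits[v] for v in vs) % 2 == 0 for vs in checks):
            p = prod(local[v][b] for v, b in enumerate(bits))
            for v, b in enumerate(bits):
                out[v][b] += p
    return out

def normalize(pair):
    total = pair[0] + pair[1]
    return [pair[0] / total, pair[1] / total]

LOCAL = [[0.9, 0.1], [0.4, 0.6], [0.7, 0.3], [0.2, 0.8], [0.6, 0.4]]
bp = [normalize(p) for p in flooding(LOCAL, CHECKS, steps=3)]
exact = [normalize(p) for p in brute_force(LOCAL, CHECKS)]
```

Every message is recomputed in every step even after it has become final, which is the redundancy the text attributes to this schedule.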

2.5 Graphs with Cycles

Although the message-passing algorithm is proved for cycle-free graphs, it has been used for graphs with cycles as well. The success of turbo codes shows the importance of extending this algorithm to graphs with cycles. All good turbo-like codes have graphs with cycles. In fact, they are very rich in cycles, which makes the codes more powerful. However, it is well known that short cycles should be avoided in order to have good performance.

In essence, the message-passing algorithm does not change for this class of graphs. It simply ignores the presence of loops. The messages propagate through the graph based on the same rules, i.e. Theorems 1 and 2. Because of the loops the effect of one observation can be fed back to itself after some steps; i.e. the messages passed along the loop come back to their origin. This creates some correlation between messages, which makes Theorems 1 and 2 no longer valid. To alleviate this effect, short cycles are removed from the graph. In this case the effect of one variable on the returning message is well attenuated.

Different schedules can be used in the message-passing algorithm. The efficient schedule is not applicable here because it relies on the graph having a tree structure. However, some schedules are more efficient than others. The flooding schedule can be used for very fast and low-latency applications. We usually stop the message passing when the messages are well propagated.

Fig. 12 Tanner graph for Hamming code, H(7,4)

2.6 Codes on Graph

The natural setting for codes decoded by iterative algorithms is their graph representation. In this section the graph representations of some commonly used codes are illustrated and explained.

2.6.1 Parity-check Codes

Parity-check codes are binary linear block codes. The parity-check matrix determines the constraints between the binary variables. As an example, the parity-check matrix of the Hamming code H(7,4) is given as follows:

$$H = \begin{bmatrix} 1 & 0 & 1 & 1 & 1 & 0 & 0 \\ 1 & 1 & 1 & 0 & 0 & 1 & 0 \\ 1 & 1 & 0 & 1 & 0 & 0 & 1 \end{bmatrix} \tag{9}$$

The Tanner graph for Hamming code, H(7,4), is shown in Figure 12.
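The correspondence between the parity-check matrix and its Tanner graph can be made concrete with a short sketch: each row is a constraint node, each column a variable node, and each nonzero entry an edge. The variable names and the test word are illustrative assumptions.

```python
# Parity-check matrix of the Hamming code H(7,4), as in equation (9)
H = [
    [1, 0, 1, 1, 1, 0, 0],
    [1, 1, 1, 0, 0, 1, 0],
    [1, 1, 0, 1, 0, 0, 1],
]

# One Tanner-graph edge per nonzero entry: (check node, variable node)
edges = [(r, c) for r, row in enumerate(H) for c, h in enumerate(row) if h]

def syndrome(word):
    """Parity of each check; all zeros means every constraint is satisfied."""
    return [sum(h * b for h, b in zip(row, word)) % 2 for row in H]

# Node degrees read directly from the matrix
variable_degrees = [sum(row[c] for row in H) for c in range(7)]
check_degrees = [sum(row) for row in H]

# A word whose first bit is information and last three bits are its parities
candidate = [1, 0, 0, 0, 1, 1, 1]
is_codeword = syndrome(candidate) == [0, 0, 0]
```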

LDPC codes are codes that have a very sparse parity-check matrix. A regular LDPC code, first introduced by Gallager [14], has a matrix with a fixed number of nonzero elements in each row and column. The Tanner graph for a regular LDPC code with variable and check nodes of degree 3 and 5 is shown in Figure 13.

Fig. 13 Tanner graph for regular LDPC (3,5)

Fig. 14 Convolutional code Tanner graph

Luby et al. [20, 21] found that using irregular LDPC codes and optimizing the degree distribution of variable and check nodes gives superior codes. Building on the analytical techniques developed by Luby et al., Richardson et al. [27, 28] designed long irregular LDPC codes that practically achieve the Shannon limit.

2.6.2 Convolutional Codes

In convolutional codes, the information bits go through a filter. The code bits are derived from a few previous information and code bits. The dependency is defined by the generator polynomials. For example, for a recursive convolutional code with feedback polynomial $Den(D) = 1 + D + D^2$ and forward polynomial $Num(D) = 1 + D^2$, the following constraints hold for all n:

$$c[n] + c[n-1] + c[n-2] + u[n] + u[n-2] = 0$$

$$u[-1] = u[-2] = 0 \tag{10}$$

$$c[-1] = c[-2] = 0$$

where $u[n]$ is the nth information bit, $c[n]$ is the nth output bit, and the plus sign is the modulo-2 addition operator. The above constraints are depicted in Figure 14.
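Constraint (10) can be exercised with a small sketch that solves it for c[n] and then checks it at every index. The function names and the input sequence are illustrative assumptions; only the recursion itself comes from the constraint.

```python
def encode(u):
    """Recursive encoder: c[n] = u[n] + u[n-2] + c[n-1] + c[n-2] (mod 2)."""
    c = []
    for n in range(len(u)):
        u2 = u[n - 2] if n >= 2 else 0   # u[-1] = u[-2] = 0
        c1 = c[n - 1] if n >= 1 else 0   # c[-1] = c[-2] = 0
        c2 = c[n - 2] if n >= 2 else 0
        c.append((u[n] + u2 + c1 + c2) % 2)
    return c

def constraint_holds(u, c):
    """Check constraint (10) at every time index n."""
    def get(seq, i):
        return seq[i] if i >= 0 else 0   # zero initial conditions
    return all(
        (get(c, n) + get(c, n - 1) + get(c, n - 2)
         + get(u, n) + get(u, n - 2)) % 2 == 0
        for n in range(len(u))
    )

u = [1, 0, 1, 1, 0, 0, 1, 0]   # hypothetical 8-bit information block
c = encode(u)
ok = constraint_holds(u, c)
```

Each code bit takes part in three consecutive constraints and shares two variables with its neighbours, which is where the length-4 cycles of this representation come from.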

However, this graph representation has many short cycles (length 4), which is not desirable for the message-passing algorithm. With the introduction of state variables we can eliminate all the loops. State variables are fictitious variables that are not part of the code word; i.e. they are not sent over the channel. The size of a state variable is $(K - 1)$ bits, where K is the constraint length of the convolutional code; i.e. it takes on values between 0 and $2^{K-1} - 1$. The graph of the convolutional code for an 8-bit information block size with the state variables is drawn in Figure 15.

Fig. 15 The Tanner graph of Convolutional codes with state variables (legend: codeword bits, state variable, state constraint)

Table II State Constraint

| S[n] | u[n] | c[n] | S[n + 1] |
| --- | --- | --- | --- |
| 0 | 0 | 0 | 0 |
| 0 | 1 | 1 | 2 |
| 1 | 0 | 1 | 0 |
| 1 | 1 | 0 | 2 |
| 2 | 0 | 0 | 1 |
| 2 | 1 | 1 | 3 |
| 3 | 0 | 1 | 1 |
| 3 | 1 | 0 | 3 |

Fig. 16 A trellis section (states 0 to 3 on both sides; edge labels 0/00, 1/11, 0/01, 1/10)

Fig. 17 An example of the graph of a PCCC

The state constraint shows the relationship between the code word bits and the state variables. For the convolutional code in the previous example, the state constraint only allows the cases that are listed in Table II.

The state constraint can also be described by a trellis section of the convolutional code, which is shown in Figure 16, where the label on each edge is u/uc.

2.6.3 Turbo Codes

The graph of a turbo code is derived by connecting the graphs of its constituent convolutional codes. The graphical representation of a rate 1/3 PCCC with block size of 8 is shown in Figure 17.

The graphs of other codes can be drawn the same way. It should be noted that although the graph of a constituent convolutional code is loopless, the overall graph has many loops.


Chapter 3

High-speed Turbo Decoders

3.1 Introduction

The graphical representation of a code gives the natural setting for iterative decoding, which is basically the message-passing algorithm. In order to grasp the high-speed turbo decoding architecture, it is essential that the traditional approach to turbo decoding is appreciated first.

In traditional turbo decoding each constituent code is processed one at a time. The algorithm for efficiently computing the APPs of the bits is called the BCJR algorithm after its inventors [5]. It presents an efficient way to compute the APP of the bits for any convolutional code. In [4] it is shown that the BCJR algorithm is indeed the message-passing algorithm. Here we briefly describe the structure of this algorithm and relate it to the message-passing algorithm.

3.2 BCJR Algorithm

The three main steps of this algorithm are as follows:

Forward Recursion (FR): In this step we compute the likelihood of all the states

in the trellis given the past observations. Starting from a known state, we will go

forward along the trellis and compute the likelihood of all the states in one trellis

section from the likelihood of the states in the previous trellis section. This recursive

scheme is continued until the likelihood of all the states, which are called alpha

variables, are computed in the forward direction.

The forward recursion is exactly equivalent to computing the forward messages, as shown in Figure 18. Starting from the first state, which is a leaf vertex, forward messages are computed recursively from the observations and the previous forward messages. Since the state variables are not observed from the channel, the IM and OM for each state variable are the same. Hence, only one of them is drawn in Figure 18.

It is very instructive to note that the forward messages are the likelihood of states

given the past observations. In the sequel we will use alpha variables and forward

messages interchangeably.

Backward Recursion (BR): This step is quite similar to the forward recursion.

Starting from a known state at the end of the block, we compute the likelihood of

Aliazam Abbasfar, Turbo-Like Codes, 19–38. © Springer 2007

Fig. 18 The messages in a convolutional code (forward messages, backward messages, extrinsics)

previous states in one trellis section. Therefore we compute the likelihood of all the states in the trellis given the future observations, which are called beta variables. This recursive processing is continued until the beginning of the trellis.

The backward recursion is exactly equivalent to computing the backward messages, as shown in Figure 18. Starting from the last state, which is a leaf vertex, backward messages are computed recursively from the observations and the previous backward messages. It is very instructive to note that the backward messages are the likelihood of states given the future observations. We will use beta variables and backward messages interchangeably.

Output Computation (OC): Once the forward and backward likelihoods of the states are computed, the extrinsic information can be computed from them. The extrinsic information can be viewed as the likelihood of each bit given all the observations.

Output computation is equivalent to computing the IM for the code word bits. These messages match the definition of extrinsic information. As we observe in Figure 18, the likelihoods of both the input and output bits of a convolutional code are computed. However, depending on the connection between the constituent codes in a turbo code, some of the extrinsics might not be used.
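The three steps can be sketched in the probability domain as follows. This is a minimal illustration, not a definitive implementation: it takes the trellis from Table II as given, assumes the trellis starts in state 0 and ends in an unknown state (uniform beta), omits the normalization a practical decoder would need, and computes extrinsics for the information bits only. All names and numbers are hypothetical.

```python
from itertools import product

# Trellis copied from Table II: (state, u) -> (c, next_state)
TRELLIS = {
    (0, 0): (0, 0), (0, 1): (1, 2),
    (1, 0): (1, 0), (1, 1): (0, 2),
    (2, 0): (0, 1), (2, 1): (1, 3),
    (3, 0): (1, 1), (3, 1): (0, 3),
}
N_STATES = 4

def bcjr(lu, lc):
    """Return extrinsic likelihoods of the information bits.

    lu[k][b], lc[k][b]: incoming likelihoods of u[k] = b and c[k] = b.
    """
    n = len(lu)
    # Forward recursion: alpha[k][s] is the likelihood of state s given the past.
    alpha = [[0.0] * N_STATES for _ in range(n + 1)]
    alpha[0][0] = 1.0                      # assumed known starting state
    for k in range(n):
        for (s, u), (c, t) in TRELLIS.items():
            alpha[k + 1][t] += alpha[k][s] * lu[k][u] * lc[k][c]
    # Backward recursion: beta[k][s] is the likelihood of state s given the future.
    beta = [[0.0] * N_STATES for _ in range(n + 1)]
    beta[n] = [1.0] * N_STATES             # assumed unknown final state
    for k in range(n - 1, -1, -1):
        for (s, u), (c, t) in TRELLIS.items():
            beta[k][s] += lu[k][u] * lc[k][c] * beta[k + 1][t]
    # Output computation: lu[k] is left out so the result is extrinsic.
    ext = [[0.0, 0.0] for _ in range(n)]
    for k in range(n):
        for (s, u), (c, t) in TRELLIS.items():
            ext[k][u] += alpha[k][s] * lc[k][c] * beta[k + 1][t]
    return ext

def brute_force_app(lu, lc):
    """Exact unnormalized APPs of u[k] by enumerating all input sequences."""
    n = len(lu)
    app = [[0.0, 0.0] for _ in range(n)]
    for bits in product((0, 1), repeat=n):
        s, p = 0, 1.0
        for k, u in enumerate(bits):
            c, s = TRELLIS[(s, u)]
            p *= lu[k][u] * lc[k][c]
        for k, u in enumerate(bits):
            app[k][u] += p
    return app

LU = [[0.7, 0.3], [0.4, 0.6], [0.5, 0.5], [0.2, 0.8]]
LC = [[0.9, 0.1], [0.3, 0.7], [0.6, 0.4], [0.5, 0.5]]
EXT = bcjr(LU, LC)
# Multiplying the extrinsic by the incoming message gives the (unnormalized) APP.
APP = [[e[b] * l[b] for b in (0, 1)] for e, l in zip(EXT, LU)]
```

The brute-force function is included only as a reference for small blocks; its cost grows exponentially with the block length, while the recursions grow linearly.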

Benedetto et al. [6] introduced a general unit, called SISO, which generates the APPs in the most general case. It should be noted that a SISO is a block that implements the BCJR algorithm. The inputs to the SISO block are the observations ($r_1$ or $r_2$), the initial values for the alpha and beta variables ($\alpha_0$ and $\beta_N$), and the extrinsics coming from other SISOs. The outputs are the alpha and beta variables at the end of the forward and backward recursions, which are not used any more, and the new extrinsics that will pass to the other SISO. The block diagram of a SISO for a convolutional code of length N is sketched in Figure 19.

In the traditional realization of the SISO, the timing schedule for the three steps mentioned above is as follows. The backward recursion is done completely for the entire

Fig. 19 Block diagram of the SISO (ports: $r_1$, $a_0$, $b_0$, $a_N$, $b_N$, $x$, $y$)

Backward: $y_{N-1}\ y_{N-2}\ \ldots\ y_1\ y_0$

$b_N\ b_{N-1}\ \ldots\ b_2\ b_1\ b_0$

Forward: $y_0\ y_1\ \ldots\ y_{N-2}\ y_{N-1}$

$a_0\ a_1\ \ldots\ a_{N-2}\ a_{N-1}\ a_N$

Output: $x_0\ x_1\ \ldots\ x_{N-2}\ x_{N-1}$

Fig. 20 Timing diagram of the traditional SISO

block and all beta variables are stored in a memory. Then, the forward recursion starts from the first trellis section and computes the alpha variables one by one. Since at this time both alpha and beta variables are available for the first trellis section, the extrinsic for the first bit is computed at this time. Therefore the extrinsic computation is done along with the forward recursion. The sequence of variables in time is shown in Figure 20. Alpha and beta variables are denoted by a and b, and incoming and outgoing extrinsics are denoted by y and x. We could exchange the order in which the forward and backward recursions are done. However, that schedule outputs the results in the reverse order.

3.3 Turbo Decoding

Once two or more convolutional codes are connected together with interleavers, turbo codes are produced. The decoding is performed by passing the messages between the constituent codes. Each constituent code receives the incoming extrinsics and computes new extrinsics by using the BCJR algorithm. The new extrinsics are used by other constituent codes. Figure 21 sketches the message passing between the constituent codes for a simple PCCC and SCCC.

The decoding starts from one constituent code and proceeds to other constituent

codes. This process is considered as one iteration. It takes several iterations to obtain

Fig. 21 Message passing between the constituent codes of turbo codes

Fig. 22 The iterative decoding structure

very good performance for bit decisions. Turbo codes are designed such that the probability density of the extrinsics is shifted toward higher values in each iteration. This phenomenon is known as density evolution (DE), which is also used to compute the capacity of codes under iterative decoding. The decisions are made based on the messages on the information bits. Hence, the decisions get better and better with more iterations.

Since the second constituent code uses the permuted version of the bit sequence, the extrinsic information should also be permuted before being used by the second SISO. Likewise, the extrinsic information of the second SISO is to be permuted in reverse order for the next iteration of the first SISO. The structure of the iterative decoder is shown in Figure 22.
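The permutation and its inverse can be sketched as below. The permutation `PERM`, the convention that output position i takes input position `perm[i]`, and the function names are assumptions made for illustration only.

```python
def interleave(values, perm):
    """Permuted sequence: position i of the output takes values[perm[i]]."""
    return [values[p] for p in perm]

def deinterleave(values, perm):
    """Inverse permutation: undoes interleave(., perm)."""
    out = [None] * len(perm)
    for i, p in enumerate(perm):
        out[p] = values[i]
    return out

PERM = [2, 3, 4, 5, 6, 1, 7, 0]   # hypothetical interleaver for a block of 8
extrinsics = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]

to_second_siso = interleave(extrinsics, PERM)            # before the second SISO
back_to_first_siso = deinterleave(to_second_siso, PERM)  # before the first SISO
```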

The SISO block processes the observations serially and outputs the extrinsics serially. Hence, in the sequel the traditional iterative turbo decoding is referred to as serial decoding. It should be noted that only one SISO is working at a time.

Without any loss of generality, only the simple PCCC, which is the most popular turbo code, is investigated from now on. The methods described here can be applied to all other turbo codes with simple modifications.

3.4 Pipelined Turbo Decoder

One possible way to speed up the decoding is to perform the iterations in a pipelined way. In this method there is one dedicated SISO for each constituent code at each iteration. The constituent codes are decoded and the results are passed to the next stage for further iterations. The block diagram of such a decoder is drawn in Figure 23.

All the SISOs are running at the same time, but working on different received blocks of coded data. In this method the decoding rate is increased by 2I times, where I is the number of iterations. Although we have achieved some speed gain, the latency remains the same. To get the bit decisions we have to wait until all the stages are finished for a block of data.

Fig. 23 Pipelined turbo decoder

The increase in the decoding rate comes at the expense of more hardware. As we see in Figure 23, the hardware needed for the decoding is also increased 2I times. This hardware consists of memory to store the observations and extrinsics and the logic to perform the SISO computations.

This method has another disadvantage. The number of iterations is fixed and cannot be changed, whereas the ability to vary it is essential for power reduction. Therefore this method is not interesting for high-speed turbo decoding.

3.5 Parallel Turbo Decoder

In this section, we present a novel method for iterative decoding of turbo codes that can be used for very high-speed decoders. This method was first introduced by Abbasfar and Yao [3]. Although this method is applicable to every turbo code, we will explain it in the case of a block PCCC code. The algorithm is as follows.

First of all, the received data for each constituent code are divided into several contiguous nonoverlapping sub-blocks, called windows. Then, each window is decoded separately in parallel using the BCJR algorithm. In other words, each window processor is a vector decoder. However, the initial values for the alpha and beta variables come from the previous iteration of the adjacent windows. Since all the windows are being processed at the same time, in the next iteration the initial values for all of them are ready to load. Moreover, there is no extra processing needed for the initialization of state probabilities at each iteration. The size of the windows is a very important parameter that will be discussed later. The structure of the decoder is shown in Figure 24.

The timing diagram of the messages for one constituent code is shown in Figure 25.

The above timing diagram can be simplified by using vector notation, which is shown in Figure 26. The variables that are computed at the same time are simply replaced with a vector. Each vector has M elements, which belong to different window processors (SISOs). For example, we have $\mathbf{a}_0 = [a_0\ a_N\ a_{2N}\ \cdots\ a_{MN-N}]^T$ and $\mathbf{b}_0 = [b_0\ b_N\ b_{2N}\ \cdots\ b_{MN-N}]^T$.

This notation is the generalization of the serial decoder. It will also help to appreciate the new interleaver structure for the parallel decoder discussed later.

The proposed structure stems from the message-passing algorithm itself. We

have only partitioned the graph into some subgraphs and used parallel scheduling

Fig. 24 Parallel turbo decoder structure

for different partitions. Partitioning helps us to parallelize the decoding of

one constituent code. The graph of a PCCC and its partitions is shown in

Figure 27.

There are two types of messages that are communicated between sub-blocks. First,

the messages associated with the information bits, i.e. the extrinsic information,

which is communicated between two constituent codes in the traditional approach.

Second, the messages that are related to the states in window boundaries; we call

them state messages. In fact we have introduced new messages that are passed

between sub-blocks at each iteration. These messages are the same as alpha and

SISO 1:

Backward: $y_{N-1}\ y_{N-2}\ \ldots\ y_1\ y_0$; $b_N\ b_{N-1}\ \ldots\ b_2\ b_1\ b_0$

Forward: $y_0\ y_1\ \ldots\ y_{N-2}\ y_{N-1}$; $a_0\ a_1\ \ldots\ a_{N-2}\ a_{N-1}\ a_N$

Output: $x_0\ x_1\ \ldots\ x_{N-2}\ x_{N-1}$

SISO 2:

Backward: $y_{2N-1}\ y_{2N-2}\ \ldots\ y_{N+1}\ y_N$; $b_{2N}\ b_{2N-1}\ \ldots\ b_{N+2}\ b_{N+1}\ b_N$

Forward: $y_N\ y_{N+1}\ \ldots\ y_{2N-2}\ y_{2N-1}$; $a_N\ a_{N+1}\ \ldots\ a_{2N-2}\ a_{2N-1}\ a_{2N}$

Output: $x_N\ x_{N+1}\ \ldots\ x_{2N-2}\ x_{2N-1}$

...

SISO M:

Backward: $y_{MN-1}\ y_{MN-2}\ \ldots\ y_{(M-1)N+1}\ y_{(M-1)N}$; $b_{MN}\ b_{MN-1}\ \ldots\ b_{(M-1)N+2}\ b_{(M-1)N+1}\ b_{(M-1)N}$

Forward: $y_{(M-1)N}\ y_{(M-1)N+1}\ \ldots\ y_{MN-2}\ y_{MN-1}$; $a_{(M-1)N}\ a_{(M-1)N+1}\ \ldots\ a_{MN-2}\ a_{MN-1}\ a_{MN}$

Output: $x_{(M-1)N}\ x_{(M-1)N+1}\ \ldots\ x_{MN-2}\ x_{MN-1}$

Fig. 25 Timing diagram of the parallel SISOs

Backward: $\mathbf{y}_{N-1}\ \mathbf{y}_{N-2}\ \ldots\ \mathbf{y}_1\ \mathbf{y}_0$; $\mathbf{b}_N\ \mathbf{b}_{N-1}\ \ldots\ \mathbf{b}_2\ \mathbf{b}_1\ \mathbf{b}_0$

Forward: $\mathbf{y}_0\ \mathbf{y}_1\ \ldots\ \mathbf{y}_{N-2}\ \mathbf{y}_{N-1}$; $\mathbf{a}_0\ \mathbf{a}_1\ \ldots\ \mathbf{a}_{N-2}\ \mathbf{a}_{N-1}\ \mathbf{a}_N$

Output: $\mathbf{x}_0\ \mathbf{x}_1\ \ldots\ \mathbf{x}_{N-2}\ \mathbf{x}_{N-1}$

Fig. 26 Timing diagram of the parallel SISOs in vector notation

beta variables that are computed in the forward and backward recursions of the BCJR algorithm. In the first iteration there is no prior knowledge available about the state probabilities. Therefore the messages are set to equal probability for all the states. In each iteration, these messages are updated and passed across the border of adjacent partitions.
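The bookkeeping for the state messages can be sketched as follows. This is an illustration under assumptions not stated in the text: the block is assumed to start in state 0 and to end in an unknown state, and the function names and argument layout are hypothetical.

```python
def window_bounds(block_size, n_windows):
    """Split a block into contiguous, nonoverlapping windows of equal size."""
    assert block_size % n_windows == 0
    n = block_size // n_windows
    return [(m * n, (m + 1) * n) for m in range(n_windows)]

def initial_state_messages(n_windows, n_states,
                           prev_alpha_end=None, prev_beta_start=None):
    """Initial alpha and beta vectors for every window in the coming iteration.

    prev_alpha_end[m]:  alpha at the right border of window m (previous iteration)
    prev_beta_start[m]: beta at the left border of window m (previous iteration)
    Leave both as None for the first iteration.
    """
    uniform = [1.0 / n_states] * n_states
    start = [1.0] + [0.0] * (n_states - 1)   # assumed: block starts in state 0
    alpha0, beta_n = [], []
    for m in range(n_windows):
        if m == 0:
            alpha0.append(start)
        elif prev_alpha_end is None:
            alpha0.append(uniform)                  # first iteration: no knowledge
        else:
            alpha0.append(prev_alpha_end[m - 1])    # from the window on the left
        if m == n_windows - 1 or prev_beta_start is None:
            beta_n.append(uniform)                  # assumed: unknown final state
        else:
            beta_n.append(prev_beta_start[m + 1])   # from the window on the right
    return alpha0, beta_n

bounds = window_bounds(4800, 16)
first_alpha, first_beta = initial_state_messages(n_windows=16, n_states=8)
```

Because every window only reads values produced in the previous iteration, all windows can be started at the same time.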

The optimum way to process a window is serial processing using forward and backward recursions, i.e. the BCJR algorithm. Therefore each window processor is a SISO. The processing of the windows in the two constituent codes can be run in parallel. However, when we discuss the interleaver for the parallel decoder, we will find out that this is not necessary. In a parallel turbo decoder, when the constituent codes are the same we can share the SISO blocks among all constituent codes. Therefore the architecture of choice needs only half of the processors, as shown in Figure 28.

Table III shows the parameters of a parallel decoder. For window sizes at the two extremes, the approach reduces to known methods. If the window size is B and the number of windows is 1, it reduces to the traditional approach. If the window size is 1, the architecture reduces to a fully parallel decoder, which was proposed by Frey et al. [13]. It should be noted that the memory requirement for all cases is the same.

Fig. 27 Partitioned graph of a simple PCCC

Fig. 28 Parallel turbo decoder with shared processors for two constituent codes

Processing time is the time needed to decode one block. Since all windows are

processed at the same time, each SISO is done after T

w

. We assume that all message-

passing computation associated with one state constraint node is done in one clock

cycle (T

clk

). We have I iterations and each iteration has two constituent codes, so it

takes 2I T

W

to complete the decoding. It is worth mentioning that the processing

time determines the latency as well. Therefore any speed gain is equivalent to lower

latency.

Processing load is the amount of computation that we need. The processing load for each SISO is proportional to the number of state constraints. Hence, it is $kB$, where $k$ is a constant factor that depends on the complexity of the state constraints. It should be noted that the processing load of the serial and parallel SISOs is the same. Therefore the total processing load is $2IkB$.
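The relations above, together with the window time $T_W = 2N\,T_{clk}$ listed in Table III, can be collected in a small sketch. The class name, the default values of `t_clk` and `k`, and the example block and window sizes are illustrative assumptions, not values taken from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecoderParams:
    """Parallel decoder parameters, with symbols named as in Table III."""
    window_size: int     # N
    num_windows: int     # M (number of SISOs)
    iterations: int      # I
    t_clk: float = 1.0   # clock period; 1.0 means "count clock cycles" (assumed)
    k: float = 1.0       # cost per state constraint (assumed value)

    @property
    def block_size(self) -> int:
        # B = M * N
        return self.window_size * self.num_windows

    @property
    def window_time(self) -> float:
        # T_W = 2 * N * T_clk
        return 2 * self.window_size * self.t_clk

    @property
    def processing_time(self) -> float:
        # T = 2 * I * T_W (two constituent codes per iteration)
        return 2 * self.iterations * self.window_time

    @property
    def processing_load(self) -> float:
        # P = k * 2 * I * B
        return self.k * 2 * self.iterations * self.block_size


# Illustrative comparison with the same (hypothetical) iteration count.
serial = DecoderParams(window_size=4800, num_windows=1, iterations=10)
parallel = DecoderParams(window_size=48, num_windows=100, iterations=10)
print(serial.processing_time, parallel.processing_time)
print(serial.processing_load, parallel.processing_load)
```

With equal iteration counts the load is unchanged while the processing time shrinks by the number of windows; whether the iteration counts are in fact equal is a separate question that the formulas alone do not answer.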

3.6 Speed Gain and Efficiency

3.6.1 Definitions

Two characteristic factors should be studied as performance figures. One is the speed gain and the other is the efficiency. In ideal parallelization the efficiency is always 1, which means that no extra processing load is needed for parallel processing.

Table III The Decoder Parameters

| Parameter | Definition |
|---|---|
| $N$ | Window size |
| $M$ | Number of windows (SISOs) |
| $B = MN$ | Block size |
| $I$ | Number of iterations |
| $T_W = 2N\,T_{clk}$ | Window processing time |
| $T = 2I\,T_W$ | Processing time (latency) |
| $P = k \cdot 2IB$ | Processing load |


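They are defined as follows:

$$\text{Speed gain} = T_0 / T$$

$$\text{Efficiency} = P_0 / P$$

where $T_0$ and $P_0$ are the processing time and processing load for the serial approach, i.e., the case where the window size equals the block size ($N = B$, $M = 1$). Writing $I_0$ for the number of iterations in the serial case, the factors can be further simplified to:

$$\text{Speed gain} = M I_0 / I$$

$$\text{Efficiency} = I_0 / I$$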
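The simplification follows from substituting the Table III expressions into the two definitions. A short worked derivation, assuming that $k$ and $T_{clk}$ are the same for the serial and parallel decoders and writing $I_0$ for the serial iteration count:

```latex
\begin{align*}
T_0 &= 2 I_0 \cdot 2 B \, T_{clk}, & T &= 2 I \cdot 2 N \, T_{clk}, \\
\text{Speed gain} &= \frac{T_0}{T} = \frac{I_0 B}{I N} = M \, \frac{I_0}{I}, \\
P_0 &= k \cdot 2 I_0 B, & P &= k \cdot 2 I B, \\
\text{Efficiency} &= \frac{P_0}{P} = \frac{I_0}{I}.
\end{align*}
```

The step $B/N = M$ uses $B = MN$ from Table III.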

This is a very interesting result. The speed gain and the efficiency are proportional to the ratio between the number of iterations needed for the serial case and for the parallel case. If the number of iterations required for the parallel case is the same as for the serial case, we enjoy a speed gain of $M$ without degrading the efficiency, which is ideal parallelization. Therefore we should look at the number of iterations required for a certain performance to further quantify the characteristic factors. In the next section we will investigate these factors with some simulations.

3.6.2 Simulation Results

For simulations, a PCCC with a block size of 4,800 is chosen. The first constituent code is a rate-1/2 systematic code and the second code is a rate-one nonsystematic recursive code. The feedforward and feedback polynomials are the same for both codes and are $1 + D + D^3$ and $1 + D^2 + D^3$, respectively. Thus the coding rate is 1/3. The simulated channel is an additive white Gaussian noise (AWGN) channel. The bit error rate (BER) performance of the proposed high-speed decoder has been simulated for window sizes of N = 256, 128, 64, 48, 32, 16, 8, 4, 2, and 1.
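To make the code structure concrete, the following is a minimal encoder sketch for the two constituent codes with the stated polynomials. It assumes the encoders start in the all-zero state, ignores trellis termination, and takes the interleaver as an arbitrary permutation supplied by the caller; none of these details are specified in the text, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

FEEDFORWARD_TAPS = (0, 1, 3)  # 1 + D + D^3
FEEDBACK_TAPS = (2, 3)        # delayed terms of 1 + D^2 + D^3


def recursive_parity(bits: Sequence[int]) -> List[int]:
    """Parity stream of the recursive code, starting from the zero state."""
    hist = [0, 0, 0, 0]  # hist[d] holds the register value delayed by d steps
    parity = []
    for u in bits:
        a = u
        for d in FEEDBACK_TAPS:       # feedback: a_t = u_t + a_{t-2} + a_{t-3}
            a ^= hist[d]
        hist[0] = a
        p = 0
        for d in FEEDFORWARD_TAPS:    # output: p_t = a_t + a_{t-1} + a_{t-3}
            p ^= hist[d]
        parity.append(p)
        hist = [0, hist[0], hist[1], hist[2]]  # shift the register by one step
    return parity


def pccc_encode(
    bits: Sequence[int], interleaver: Sequence[int]
) -> Tuple[List[int], List[int], List[int]]:
    """Return (systematic, parity 1, parity 2), giving an overall rate of 1/3."""
    systematic = list(bits)
    parity1 = recursive_parity(bits)                       # rate-1/2 systematic code
    parity2 = recursive_parity([bits[i] for i in interleaver])  # rate-one code
    return systematic, parity1, parity2


# Impulse response of the constituent code over seven steps.
print(recursive_parity([1, 0, 0, 0, 0, 0, 0]))
```

The first code contributes the systematic and first parity streams, and the second contributes only a parity stream of the interleaved input, which gives three output bits per input bit.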

The first observation was that this structure does not sacrifice performance for speed. We can always increase the maximum number of iterations to get performance similar to that of the serial decoder. The maximum number of iterations for each case is chosen such that the BER performance of the decoder equals that of the serial decoder after 10 iterations ($I_0 = 10$). Figure 29 shows the BER performance of the decoder with different window sizes. The curves are almost indistinguishable.

However, in practice, the iterations are stopped based on a criterion that indicates the decoded data is reliable or correct. We have simulated such a stopping criterion in order to obtain the average number of iterations needed. The stopping rule that we use is equality between the results of two consecutive iterations. The average number of iterations is used for the efficiency computation. At low signal-to-noise ratio, the average number of iterations equals the maximum number of iterations for each window size.
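The stopping rule can be sketched as a loop that compares the hard decisions of consecutive iterations. Here `run_iteration` is a hypothetical callback standing in for one full decoder iteration; its name and signature are assumptions made for illustration.

```python
from typing import Callable, List, Tuple


def decode_with_stopping(
    run_iteration: Callable[[int], List[int]],
    max_iterations: int,
) -> Tuple[List[int], int]:
    """Iterate until two consecutive hard-decision outputs are equal.

    run_iteration(i) performs iteration i (1-based) and returns the current
    hard decisions. Returns the final decisions and the iterations used.
    """
    if max_iterations < 1:
        raise ValueError("max_iterations must be at least 1")
    previous = None
    for i in range(1, max_iterations + 1):
        decisions = run_iteration(i)
        if previous is not None and decisions == previous:
            return decisions, i   # results of iterations i-1 and i agree
        previous = decisions
    return previous, max_iterations  # never settled: the maximum is used


# Stand-in for a decoder whose output settles at the second iteration.
outputs = [[1, 0, 0], [1, 1, 0], [1, 1, 0], [1, 1, 1]]
decisions, used = decode_with_stopping(lambda i: outputs[i - 1], 4)
print(decisions, used)
```

Averaging the returned iteration count over many blocks gives the average number of iterations used in the efficiency computation. A block that never settles uses the maximum, which is consistent with the low signal-to-noise behavior described above.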

Efficiency and speed gain of the parallel decoder with different window sizes are

shown in Figure 30. It clearly shows that we have to pay some penalty in order


Fig. 29 Performances of parallel decoder

Fig. 30 Efficiency and speed gain