The AES implantation based on OpenCL for multi/many core architecture

Osvaldo Gervasi, Diego Russo

Dept. of Mathematics and Computer Science

University of Perugia

Via Vanvitelli,1 06123 Perugia, Italy

osvaldo@unipg.it, diegor.it@gmail.com

Flavio Vella

Istituto Nazionale di Fisica Nucleare

Università degli Studi di Perugia

Perugia, Italy

flavio.vella@pg.infn.it

Abstract: In this article we present a study of clAES, an implementation of the symmetric-key cryptography algorithm Advanced Encryption Standard (AES) written using the emerging Open Computing Language (OpenCL) standard. We compare the results obtained by benchmarking clAES on various multi/many-core architectures. We also introduce the basic concepts of AES and OpenCL in order to describe the details of the clAES implementation.

This study represents a first step in a broader project whose final goal is to develop a full OpenSSL library implementation on heterogeneous computing devices such as multi-core CPUs and GPUs.

Keywords: AES; GPU computing; cryptography; multi/many-core architectures; parallel computing.

I. INTRODUCTION

Modern GPUs (Graphics Processing Units), with their high number of parallel stream processors, are increasingly used for general-purpose applications that require considerable computational power. In particular, the adoption of GPU devices in cryptographic applications is very important [1]. This domain is known as GPU computing.

The major vendors of graphics processors are releasing hardware products and programming frameworks that enable the development of applications able to fully exploit the computational power of the GPU.

However, applications developed with these frameworks on such hardware devices are not easily portable among different architectures. This problem should be overcome by applications that comply with the emerging Open Computing Language (OpenCL) standard [2], which vendors have committed to foster.

OpenCL is the first open, royalty-free standard for cross-platform parallel programming of modern processors such as GPUs and many-core CPUs. OpenCL is defined and supported by the Khronos Group (http://www.khronos.org/), a consortium jointly held by the largest companies in the industry and many other promoters, supporters and members of academia. The purpose of the Khronos Group is to define open standards for the use and proliferation of multiplatform parallel computing.

The definition of version 1.0 of the standard was published in February 2009, and GPU vendors have already released OpenCL-compatible drivers and development environments for their products.

II. AES

The Advanced Encryption Standard (AES) algorithm [3] plays a major role in the current landscape of encrypted communications and security. AES is a valid instrument for encrypting data in applications ranging from personal to highly confidential domains. The algorithm was developed by Joan Daemen and Vincent Rijmen, who submitted it to the AES selection process under the codename Rijndael (derived from the inventors' names) [4]. Due to its characteristics, it can greatly benefit from a parallel implementation [5] and in particular from a GPU implementation [6].

AES operates on data split into 4x4 matrices of bytes, each called the state, and uses a symmetric key of 128, 192, or 256 bits. The algorithm consists of several execution cycles (rounds) that convert the original data into encrypted/decrypted data; the number of cycles depends on the key length. Each cycle consists of several steps, including one that depends on the key. The following algorithm is used to encrypt data:

- KeyExpansion using Rijndael's key schedule
- Add the first round key to the state before starting the rounds, using AddRoundKey, in which every byte of the state is combined with the round key
- First round to round n-1:
  1) SubBytes: a non-linear substitution where every byte is replaced by another one
  2) ShiftRows: a transposition where every row of the state is cyclically shifted by a number of steps
  3) MixColumns: mixes the columns of the state
  4) AddRoundKey: every byte of the state is combined with the round key
- Final round (no MixColumns):
  1) SubBytes
  2) ShiftRows
  3) AddRoundKey

2010 International Conference on Computational Science and Its Applications

The algorithm used to decrypt data is the following:

- KeyExpansion using Rijndael's key schedule
- Add the last round key to the state before starting the rounds, using AddRoundKey, in which every byte of the state is combined with the round key
- Final round down to the second round:
  1) InvShiftRows: a transposition where every row of the state is cyclically shifted by a number of steps
  2) InvSubBytes: a non-linear substitution where every byte is replaced by another one
  3) AddRoundKey: every byte of the state is combined with the round key
  4) InvMixColumns: mixes the columns of the state
- First round (no InvMixColumns):
  1) InvShiftRows
  2) InvSubBytes
  3) AddRoundKey
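The two round structures listed above can be summarized as ordered lists of step names. The following is a minimal sketch in plain Python, not part of clAES; the function names are hypothetical, and the round counts (10, 12 and 14 for 128-, 192- and 256-bit keys) are those fixed by FIPS 197 [3].

```python
# Number of rounds n for each key length, as fixed by FIPS 197.
ROUNDS_BY_KEY_BITS = {128: 10, 192: 12, 256: 14}


def encryption_schedule(key_bits):
    """Return the ordered step names applied to one state when encrypting."""
    n = ROUNDS_BY_KEY_BITS[key_bits]
    steps = ["AddRoundKey"]                       # first round key
    for _ in range(1, n):                         # rounds 1 .. n-1
        steps += ["SubBytes", "ShiftRows", "MixColumns", "AddRoundKey"]
    steps += ["SubBytes", "ShiftRows", "AddRoundKey"]   # final round
    return steps


def decryption_schedule(key_bits):
    """Return the ordered step names applied to one state when decrypting."""
    n = ROUNDS_BY_KEY_BITS[key_bits]
    steps = ["AddRoundKey"]                       # last round key
    for _ in range(n - 1):                        # final round down to the second
        steps += ["InvShiftRows", "InvSubBytes", "AddRoundKey", "InvMixColumns"]
    steps += ["InvShiftRows", "InvSubBytes", "AddRoundKey"]  # first round
    return steps
```

For a 192-bit key, the key size used in the performance tests, `encryption_schedule(192)` contains 13 AddRoundKey steps and 11 MixColumns steps.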

AES uses the following structures:

- Forward S-box: generated by determining the multiplicative inverse of a given number in Rijndael's finite field. The multiplicative inverse is then transformed using an affine transformation. The forward S-box is used to encrypt data.
- Inverse S-box: simply the S-box run in reverse. First the inverse affine transformation of the input value is calculated, and then the multiplicative inverse. The inverse S-box is used to decrypt data.
- RCON: the round constant word array. It is used by KeyExpansion and is the exponentiation of 2 to a user-specified value t, performed in Rijndael's finite field.
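The three structures above can be generated rather than stored as literal tables. The following Python sketch is only illustrative and is not taken from clAES: the helper names are hypothetical, the field polynomial 0x11B and the affine constant 0x63 are those of FIPS 197 [3], and the inverse S-box is built here by inverting the forward table instead of applying the inverse affine transformation.

```python
def gf_mul(a, b):
    """Multiply two bytes in Rijndael's finite field GF(2^8) (modulus 0x11B)."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return result


def gf_inverse(a):
    """Multiplicative inverse in GF(2^8); 0 is mapped to 0 by convention."""
    result = 1 if a else 0
    for _ in range(254 if a else 0):   # a^254 equals a^-1 (group order is 255)
        result = gf_mul(result, a)
    return result


def affine(x):
    """Affine transformation applied after the inversion."""
    out = x
    for shift in range(1, 5):
        out ^= ((x << shift) | (x >> (8 - shift))) & 0xFF   # rotate left
    return out ^ 0x63


SBOX = [affine(gf_inverse(x)) for x in range(256)]

INV_SBOX = [0] * 256
for value, substituted in enumerate(SBOX):
    INV_SBOX[substituted] = value


def gf_pow2(t):
    """Exponentiation of 2 to the value t in GF(2^8), as used by RCON."""
    value = 1
    for _ in range(t):
        value = gf_mul(value, 2)
    return value
```

With this convention the key schedule of FIPS 197 uses `gf_pow2(i - 1)` as the constant of its i-th round.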

For complete details about AES, refer to references [3] and [4].

III. OPENCL BASE CONCEPT

OpenCL is an open standard aimed at providing a programming environment suitable for accessing heterogeneous architectures. In particular, OpenCL allows computational programs to be executed on multi/many-core processors. Considering the increasing availability of such processors, OpenCL plays a crucial role in giving portable applications wide access to innovative computational resources. To achieve this aim, various levels of abstraction have been introduced in the OpenCL model. OpenCL can be seen as a hierarchical collection of models, from the close-to-metal layer up to the higher layers. The OpenCL 1.0 specification is made up of three main layers:

- Platform: the platform layer abstracts the number and type of computing devices in the hardware platform. At this level the developer is given the routines to query and manage the computing devices, and to create the contexts and work-queues used to submit instructions (called kernels) to them.
- Execution: this layer is based on the concept of kernel. The kernel is a collection of instructions that are executed on the computing device (a GPU or a multi-core CPU), called the OpenCL device. The execution of an OpenCL application can be divided into two parts: the host program and the kernel program. The host program is executed on the CPU, defines the context (platform layer) for the kernels and manages their execution. Citing the OpenCL specification: "... when a kernel is submitted for execution by the host, an index space is defined. An instance of the kernel executes for each point in this index space. This kernel instance is called a work-item and is identified by its point in the index space, which provides a global ID for the work-item. Each work-item executes the same code on distinguished data. ..." [7]. This definition highlights a very important concept: the higher the number of cores (of a CPU or GPU), the greater the number of work-items that are executed in parallel. Work-items are organized into work-groups, which provide a more coarse-grained decomposition of the index space.
- Language: the language specification describes the syntax and programming interface for writing compute kernels (sets of instructions to execute on computing devices such as GPUs and multi-core CPUs). The language used is based on a subset of ISO standard C99 [8].
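The relationship between the index space, the work-items and the work-groups described in the Execution layer can be modelled in a few lines. This is a sequential Python sketch for a one-dimensional index space, not OpenCL code; `enumerate_work_items`, `run_kernel` and `xor_kernel` are hypothetical names, and a real device would run the kernel instances in parallel rather than in a loop.

```python
def enumerate_work_items(global_size, local_size):
    """List (global_id, group_id, local_id) for a one-dimensional index space."""
    if global_size % local_size != 0:
        # OpenCL 1.0 requires the global size to be a multiple of the local size.
        raise ValueError("global size must be a multiple of the local size")
    items = []
    for global_id in range(global_size):
        group_id = global_id // local_size      # which work-group
        local_id = global_id % local_size       # position inside the work-group
        items.append((global_id, group_id, local_id))
    return items


def run_kernel(kernel, global_size, local_size, *buffers):
    """Emulate a kernel launch: one kernel instance per point of the index space."""
    for global_id, group_id, local_id in enumerate_work_items(global_size, local_size):
        kernel(global_id, group_id, local_id, *buffers)


def xor_kernel(global_id, group_id, local_id, data, key, out):
    """Toy kernel: every work-item runs the same code on its own element."""
    out[global_id] = data[global_id] ^ key[global_id % len(key)]
```

Each work-item uses only its global ID to select the data it operates on, which is what lets the same kernel scale with the number of available cores.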

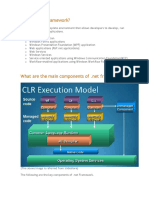

To understand OpenCL in depth, we have to analyze its memory model. Figure 1 shows the OpenCL device architecture, with its memory regions and how they relate to the platform model.

Figure 1. OpenCL device architecture with memory scopes

As can be seen, there are four memory levels:

- Global Memory: permits read/write access to all work-items in all work-groups. It is advisable to minimize transfers to and from this type of memory.
- Constant Memory: a section of global memory that is immutable during kernel execution.
- Local Memory: permits read/write access to all work-items belonging to a work-group. It can be used to allocate variables shared within a work-group.
- Private Memory: permits read/write access to a single work-item. Variables in private memory are not visible to other work-items.

The application running on the host uses the OpenCL API to create memory objects in global memory, and to enqueue memory commands that operate on these memory objects. In addition, enqueued commands can be synchronized using command-queue barriers or context events.

IV. CLAES IMPLEMENTATION

What follows is a high-level description of our implementation. All steps except the second-to-last one are executed on the CPU; the Perform kernel step is instead executed by the OpenCL device.

1) Read input file (plain text or ciphered): the file to encrypt or decrypt is read from the console.
2) Read AES parameters: the key length, the key and the action to perform (encryption or decryption) are typed by the user through interactive input.
3) Transfer memory objects to device global memory: all buffer memory objects are transferred to the device global memory through the OpenCL API. The objects consist of the input file, the output file, the SBOX or RSBOX (depending on the action) and other memory buffers.
4) Key expansion: this step is executed only once per execution. It takes the key and, using Rijndael's key schedule, expands the short key into a number of separate round keys.
5) Perform kernel: this step is executed by the OpenCL device. The sub-operations listed below are included in a single kernel, which uses the shared memory to minimize the read/write latency from/to global memory. The shared memory is used as a buffer in which intermediate states are stored. As soon as the last state is computed, this buffer is copied into the global memory.
   - SubBytes: each byte is updated using an SBOX
   - ShiftRows: each row is shifted by a certain offset
   - MixColumns: each column is multiplied by a fixed polynomial c(x)
   - AddRoundKey: the subkey is combined with the state with a XOR
   The above steps are serial functions called by the kernel. The kernel uses the data-parallel paradigm, executing many operations on multiple data domains. In fact, the input file is split into multiple parts depending on the local work size (the number of work-items per work-group). Each compute unit of the OpenCL device processes its workload in parallel.
6) Transfer memory objects from device global memory: when the kernel finishes its computation, the global memory contains an area with the output data. This area is transferred through the OpenCL API from the OpenCL device to the host's RAM and finally to the hard disk or other mass storage.
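As an illustration of the key expansion step, which runs once on the host before the kernel is launched, the following Python sketch expands a key into round-key words following the key schedule of FIPS 197 [3]. It is not the clAES source: `build_sbox` and `expand_key` are hypothetical names, and the S-box is regenerated here only to keep the example self-contained.

```python
def gf_mul(a, b):
    """Multiply two bytes in GF(2^8) with the Rijndael modulus 0x11B."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return result


def build_sbox():
    """Forward S-box: multiplicative inverse followed by the affine transformation."""
    sbox = []
    for x in range(256):
        inv = 1 if x else 0
        for _ in range(254 if x else 0):
            inv = gf_mul(inv, x)
        s = inv
        for shift in range(1, 5):
            s ^= ((inv << shift) | (inv >> (8 - shift))) & 0xFF
        sbox.append(s ^ 0x63)
    return sbox


SBOX = build_sbox()


def expand_key(key):
    """Expand a 16-, 24- or 32-byte key into a list of 4-byte round-key words."""
    if len(key) not in (16, 24, 32):
        raise ValueError("key must be 128, 192 or 256 bits long")
    nk = len(key) // 4            # key length in 32-bit words
    nr = nk + 6                   # number of rounds: 10, 12 or 14
    words = [list(key[4 * i:4 * i + 4]) for i in range(nk)]
    rc = 1                        # round constant, doubled in GF(2^8) each time
    for i in range(nk, 4 * (nr + 1)):
        temp = list(words[i - 1])
        if i % nk == 0:
            temp = temp[1:] + temp[:1]            # rotate the word by one byte
            temp = [SBOX[b] for b in temp]        # substitute each byte
            temp[0] ^= rc
            rc = gf_mul(rc, 2)
        elif nk > 6 and i % nk == 4:              # extra substitution for 256-bit keys
            temp = [SBOX[b] for b in temp]
        words.append([w ^ t for w, t in zip(words[i - nk], temp)])
    return words
```

Every group of four consecutive words forms one round key, so a 192-bit key yields 52 words, that is 13 round keys.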

An important aspect of our approach is the use of the uchar4 data type. Vector literals can be used to create vectors from a set of scalars or vectors. In this case we have used a vector of unsigned char that consists of 4 elements. This choice is due to the size of the state, which is a 4x4 matrix: a single variable addresses a single row of the state. Following this philosophy, the input file can be viewed as a big array of uchar4 in which each element is a row. In addition, two uchar4 memory buffers are used in shared memory to store transitional states. An example of uchar4 is:

    uchar4 test;
    test.x = a;
    test.y = b;
    test.z = c;
    test.w = d;

    /* or, equivalently, using a vector literal */
    test = (uchar4)(a, b, c, d);
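The view of the input as an array of uchar4 rows can be modelled as follows. This is a hedged Python sketch, not the clAES host code: `as_uchar4_rows` and `state_of` are hypothetical names, and it assumes that the input length is already a multiple of 16 bytes and that consecutive groups of four rows form one state, details the text does not spell out.

```python
def as_uchar4_rows(data):
    """Group a byte buffer into 4-byte tuples, mirroring an array of uchar4."""
    if len(data) % 16 != 0:
        # Assumption: the buffer holds whole 4x4 states (padding is not modelled).
        raise ValueError("input length must be a multiple of 16 bytes")
    return [tuple(data[i:i + 4]) for i in range(0, len(data), 4)]


def state_of(rows, state_index):
    """Return the four rows that make up the state number state_index."""
    return rows[4 * state_index:4 * state_index + 4]
```

A 32-byte input therefore yields eight rows, the first four belonging to state 0 and the last four to state 1.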

For example, the function ShiftRows is implemented as follows, where row is a row of the state and j is the row number:

    /* Cyclically shift a row of the state to the left by j positions. */
    uchar4
    shiftRows(uchar4 row, unsigned int j)
    {
        uchar4 r = row;
        for (uint i = 0; i < j; ++i)
        {
            r = r.yzwx;    /* rotate left by one element */
        }
        return r;
    }
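The effect of the `.yzwx` swizzle can be checked with a small Python model of the function above, in which a row is a 4-tuple and each loop iteration moves the first element to the end. The names `shift_row` and `shift_rows` are hypothetical and only mirror the OpenCL code for illustration.

```python
def shift_row(row, j):
    """Rotate a 4-element row left by j positions (j applications of r.yzwx)."""
    r = tuple(row)
    for _ in range(j):
        x, y, z, w = r
        r = (y, z, w, x)          # same element order as the swizzle r.yzwx
    return r


def shift_rows(state):
    """Apply shift_row to every row of a state, using the row number as offset."""
    return [shift_row(row, j) for j, row in enumerate(state)]
```

Row 0 is left unchanged, row 1 is rotated by one position, row 2 by two and row 3 by three.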

When we operate on the columns of the state, all rows of the state must already have been synchronized. For this reason we have to use a lock to synchronize the rows before executing the MixColumns function.
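The need for synchronization can be illustrated with four Python threads standing in for four work-items, each owning one row. This is a sketch under stated assumptions, not the clAES kernel: it uses `threading.Barrier` as a stand-in for the synchronization primitive (the text above only speaks of a lock), the names are hypothetical, and MixColumns is written with the standard FIPS 197 coefficients 2, 3, 1, 1.

```python
import threading


def xtime(b):
    """Multiply a byte by 2 in Rijndael's finite field."""
    b <<= 1
    return (b ^ 0x11B) & 0xFF if b & 0x100 else b


def mix_column(col):
    """Multiply one column by the fixed polynomial c(x)."""
    a0, a1, a2, a3 = col
    return (
        xtime(a0) ^ (xtime(a1) ^ a1) ^ a2 ^ a3,
        a0 ^ xtime(a1) ^ (xtime(a2) ^ a2) ^ a3,
        a0 ^ a1 ^ xtime(a2) ^ (xtime(a3) ^ a3),
        (xtime(a0) ^ a0) ^ a1 ^ a2 ^ xtime(a3),
    )


def mix_columns_with_barrier(rows):
    """Each worker publishes its row, waits for the others, then mixes one column."""
    shared = [None] * 4              # stands in for the work-group shared buffer
    mixed = [None] * 4
    barrier = threading.Barrier(4)

    def work_item(i):
        shared[i] = tuple(rows[i])   # write this work-item's row
        barrier.wait()               # no column is read before all rows are written
        column = tuple(shared[r][i] for r in range(4))
        mixed[i] = mix_column(column)

    threads = [threading.Thread(target=work_item, args=(i,)) for i in range(4)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # Transpose the mixed columns back into rows.
    return [tuple(mixed[c][r] for c in range(4)) for r in range(4)]
```

Without the `barrier.wait()` call a worker could read a column while another worker has not yet written its row, which is the hazard the synchronization prevents.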

V. CLAES PERFORMANCE

In this section we describe the hardware used for testing the performance of the AES algorithm, considering both the serial [9] and the OpenCL parallel implementations.

A. Hardware description

clAES has been tested on two video card technologies, the ATI FireStream 9270 and the Nvidia GeForce 8600 GT, using the two vendors' implementations of the OpenCL driver [10], [11], and on an Intel Core 2 Duo E8500 CPU with the AMD OpenCL driver. The output of the OpenCL query on the three devices (the ATI FireStream 9270, the Intel Core 2 Duo E8500 CPU, and the Nvidia GeForce 8600 GT) is the following:

Device ATI RV770
    CL_DEVICE_TYPE: CL_DEVICE_TYPE_GPU
    CL_DEVICE_MAX_COMPUTE_UNITS: 10
    CL_DEVICE_MAX_WORK_ITEM_SIZES: 256 / 256 / 256
    CL_DEVICE_MAX_WORK_GROUP_SIZE: 256
    CL_DEVICE_MAX_CLOCK_FREQUENCY: 750 MHz
    CL_DEVICE_IMAGE_SUPPORT: 0
    CL_DEVICE_GLOBAL_MEM_SIZE: 512 MByte
    CL_DEVICE_LOCAL_MEM_SIZE: 16 KByte
    CL_DEVICE_MAX_MEM_ALLOC_SIZE: 256 MByte
    CL_DEVICE_QUEUE_PROPERTIES: CL_QUEUE_PROFILING_ENABLE

Device Intel(R) Core(TM)2 Duo CPU E8500 @ 3.16GHz
    CL_DEVICE_TYPE: CL_DEVICE_TYPE_CPU
    CL_DEVICE_MAX_COMPUTE_UNITS: 2
    CL_DEVICE_MAX_WORK_ITEM_SIZES: 1024 / 1024 / 1024
    CL_DEVICE_MAX_WORK_GROUP_SIZE: 1024
    CL_DEVICE_MAX_CLOCK_FREQUENCY: 3166 MHz
    CL_DEVICE_IMAGE_SUPPORT: 0
    CL_DEVICE_GLOBAL_MEM_SIZE: 1024 MByte
    CL_DEVICE_LOCAL_MEM_SIZE: 32 KByte
    CL_DEVICE_MAX_MEM_ALLOC_SIZE: 512 MByte
    CL_DEVICE_QUEUE_PROPERTIES: CL_QUEUE_PROFILING_ENABLE

Device GeForce 8600 GT
    CL_DEVICE_TYPE: CL_DEVICE_TYPE_GPU
    CL_DEVICE_MAX_COMPUTE_UNITS: 4
    CL_DEVICE_MAX_WORK_ITEM_SIZES: 512 / 512 / 64
    CL_DEVICE_MAX_WORK_GROUP_SIZE: 512
    CL_DEVICE_MAX_CLOCK_FREQUENCY: 1188 MHz
    CL_DEVICE_IMAGE_SUPPORT: 1
    CL_DEVICE_GLOBAL_MEM_SIZE: 255 MByte
    CL_DEVICE_LOCAL_MEM_SIZE: 16 KByte
    CL_DEVICE_QUEUE_PROPERTIES: CL_QUEUE_PROFILING_ENABLE

Considering the reported hardware information, it is important to underline the following aspects:

- CL_DEVICE_MAX_COMPUTE_UNITS: this value represents the number of compute units available: ten for the ATI FireStream 9270 and two for the Intel E8500 CPU.
- CL_DEVICE_MAX_MEM_ALLOC_SIZE: this value represents the maximum size of the memory space that can be allocated for a single variable. The ATI FireStream 9270 value, 256 MByte, limits clAES encryption on that platform to file sizes of up to 256 megabytes.
- CL_QUEUE_PROFILING_ENABLE: enabling this flag makes it possible to measure the execution time of the clAES kernel program and the time needed to transfer data from/to global memory (memory space in the VRAM of a GPU device, or memory space reserved in RAM for a CPU device). This flag has been activated to calculate the values reported in Section V-B.
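The last two aspects reduce to simple arithmetic. OpenCL reports event profiling timestamps in nanoseconds, so a kernel or transfer time in milliseconds is the difference between the end and start timestamps divided by 10^6. The Python sketch below shows that conversion and the single-buffer size check; the function names are hypothetical, and one MByte is assumed to mean 1024 x 1024 bytes.

```python
def elapsed_ms(start_ns, end_ns):
    """Convert a pair of profiling timestamps (nanoseconds) into milliseconds."""
    if end_ns < start_ns:
        raise ValueError("end timestamp precedes start timestamp")
    return (end_ns - start_ns) / 1_000_000


def total_time_ms(write_ms, kernel_ms, read_ms):
    """Kernel time plus the time to copy data to and from global memory."""
    return write_ms + kernel_ms + read_ms


def fits_in_single_buffer(file_size_bytes, max_alloc_mbyte):
    """Check a file against CL_DEVICE_MAX_MEM_ALLOC_SIZE given in MByte."""
    # Assumption: 1 MByte = 1024 * 1024 bytes.
    return file_size_bytes <= max_alloc_mbyte * 1024 * 1024
```

With the value reported for the ATI FireStream 9270, `fits_in_single_buffer(size, 256)` is true exactly for files of up to 256 megabytes.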

B. Performance tests

In this section we present the performance tests of clAES carried out on three different devices using a key size of 192 bits. Figure 2 plots the kernel execution times (in ms) on the ATI FireStream 9270 (green curve) and on the Intel E8500 CPU. The Intel E8500 CPU has been studied by executing both the serial implementation (orange curve) and the OpenCL implementation of clAES, which exploits the multi-core nature of this CPU (blue curve).

We observe that for a file size of 128 B the execution time on the ATI FireStream 9270 is higher than on the CPU (serial or OpenCL implementation), because of the cost we need to pay to access the GPU device. For a file size of 256 B, the kernel execution time measured on the ATI FireStream 9270 is lower than that measured on the CPU executing the serial implementation, and higher than that measured on the CPU with the OpenCL implementation. From a file size of 1 KB onwards the ATI FireStream 9270 is the fastest device, and its advantage increases progressively with the input file size.

Figure 2. Plot of the kernel execution times on the ATI FireStream 9270 (green curve), on the dual-core Intel E8500 CPU using the OpenCL implementation (blue curve) and on the same CPU using the serial implementation (orange curve), as a function of the size of the file to encrypt.

Figures 3 and 4 report the kernel execution times for small input file sizes (up to 2 KB), respectively without and with the time needed to transfer the data to the GPU memory. We can observe that the time necessary to copy the input data to the GPU memory plays a role, but it is not the main reason for the higher execution times observed for the OpenCL GPU implementation with small input files.

Figure 3. Plot of the kernel execution times on the ATI FireStream 9270 (green curve), on the dual-core Intel E8500 CPU using the OpenCL implementation (blue curve) and on the same CPU using the serial implementation (orange curve) for input file sizes in the range 128 to 2048 bytes, ignoring the time required to copy the input (output) data into (from) the OpenCL device global memory.

We can observe that the transfer of the input data to the GPU memory affects the total time measured for the kernel execution, and raises the input file size at which the curve of the GPU execution times (green curve) crosses the curves of the CPU execution times, for both the serial (orange curve) and the parallel (blue curve) implementations of the AES algorithm.

Figure 4. Plot of the kernel execution times on the ATI FireStream 9270 (green curve), on the dual-core Intel E8500 CPU using the OpenCL implementation (blue curve) and on the same CPU using the serial implementation (orange curve) for input file sizes in the range 128 to 2048 bytes, including the time required to copy the input (output) data into (from) the OpenCL device global memory.

We have also performed some tests using the Nvidia GeForce 8600 GT graphics device. Figure 5 plots the kernel execution times on the two graphics processors: the Nvidia GeForce 8600 GT (blue curve) and the ATI FireStream 9270 (green curve).

Figure 5. Kernel execution times to encrypt files whose size ranges from 128 bytes to 2048 bytes for the Nvidia GeForce 8600 GT (blue curve) and the ATI FireStream 9270 (green curve).

It is important to notice that porting the code to the three tested devices (the Nvidia and ATI GPUs and the multi-core Intel CPU) was very simple: it was sufficient to change the PATH variable associated with the related OpenCL library.

Finally, Figure 6 shows a comparison between the multi-core Intel E8500 CPU and the ATI FireStream 9270 GPU. The three curves represent the values of the following ratios as a function of the input file size:

- (kernel execution time on CPU Intel E8500, OpenCL parallel implementation) / (kernel execution time on GPU ATI FireStream 9270) (blue curve): the curve shows that the ATI FireStream 9270 execution time is higher than that of the multi-core Intel E8500 CPU for input file sizes up to approximately 1024 bytes. If we also count the time needed to copy the input data to the GPU memory (see Figure 4), the file size at which the execution time is the same on both devices increases slightly. For larger input file sizes, the GPU performs better than the multi-core CPU.
- (kernel execution time on the CPU Intel E8500 using the serial implementation) / (kernel execution time on GPU ATI FireStream 9270) (green curve): the curve shows that the ATI FireStream 9270 is less efficient than the Intel E8500 CPU (serial implementation) for very small input file sizes, because of the time required to access the GPU device. Starting from the tested input file size of 256 B, the GPU is more efficient than the CPU. Up to input file sizes of 32 KB the efficiency increases dramatically with the input file size. For input files larger than 32 KB, the gain in efficiency remains approximately constant.
- (kernel execution time on the CPU Intel E8500 using the serial implementation) / (kernel execution time on CPU Intel E8500, OpenCL parallel implementation) (orange curve): the curve shows that the OpenCL implementation is much more efficient than the serial implementation, and is really able to exploit the multi/many-core properties of the CPU. In this case too, for small values of the input file size the gain in efficiency increases with the input file size, ranging from 1.85 to 4.

In conclusion, with respect to the serial implementation of the code executed on a dual-core Intel E8500 CPU, we observe a performance increase of a factor of 4 with the OpenCL implementation on the same CPU, which demonstrates its ability to exploit multi-core CPUs, and of a factor of 11 on the ATI FireStream 9270, ignoring the time needed to copy data to the GPU memory. We expect an even more significant performance improvement on the ATI FireStream 9270 after revising the OpenCL implementation and optimizing the code.
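The ratios discussed in this section are plain quotients of execution times, and the crossover sizes are the first input sizes at which a ratio exceeds 1. A minimal Python sketch of both computations is given below; the function names are hypothetical and no measured values from the paper are embedded in it.

```python
def speedup(reference_ms, candidate_ms):
    """Ratio (reference time) / (candidate time); above 1 the candidate is faster."""
    if candidate_ms <= 0:
        raise ValueError("candidate time must be positive")
    return reference_ms / candidate_ms


def first_faster_size(sizes, reference_ms, candidate_ms):
    """Return the first input size at which the candidate beats the reference."""
    for size, ref, cand in zip(sizes, reference_ms, candidate_ms):
        if speedup(ref, cand) > 1.0:
            return size
    return None   # the candidate is never faster on the tested sizes
```

Applied to the serial CPU timings as reference and the GPU timings as candidate, `speedup` gives the green curve of Figure 6, and `first_faster_size` gives the file size from which the GPU becomes the faster device.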

Figure 6. Comparison between the multi-core Intel E8500 CPU and the ATI FireStream 9270 GPU, expressed by the following ratios: (kernel execution time on CPU Intel E8500, OpenCL parallel implementation) / (kernel execution time on GPU ATI FireStream 9270) (blue curve), (kernel execution time on the CPU Intel E8500 using the serial implementation) / (kernel execution time on GPU ATI FireStream 9270) (green curve), and (kernel execution time on the CPU Intel E8500 using the serial implementation) / (kernel execution time on CPU Intel E8500, OpenCL parallel implementation) (orange curve).

VI. CONCLUSION

In this paper we presented the results of performance tests made by running an OpenCL implementation of the AES cryptographic algorithm on GPUs and on a multi-core CPU. The results demonstrate the capability of the OpenCL standard to exploit the many-core nature of CPUs and GPUs.

These preliminary results are very good, and further optimization of the OpenCL code can lead to better performance on GPUs.

As for scalability and portability, we demonstrated how OpenCL applications can scale across various heterogeneous computing resources.

Our future work will involve the following activities:

- optimization of the clAES code
- development of a patch for the inclusion of the OpenCL approach in OpenSSL [12]
- development of OpenCL implementations of all the cryptographic algorithms adopted in OpenSSL

We are confident that OpenCL is a promising standard for parallel programming, and we hope it will be extended to other platforms, cooperating with current parallel programming models (for example POSIX Threads and MPI) and parallel languages.

REFERENCES

[1] D. L. Cook, J. Ioannidis, A. D. Keromytis, J. Luck, "CryptoGraphics: Secret Key Cryptography Using Graphics Cards", RSA Conference, Cryptographers' Track (CT-RSA), 2005.

[2] Khronos Group, OpenCL 1.0 Specification, http://www.khronos.org/opencl

[3] National Institute of Standards and Technology (NIST), FIPS 197: Advanced Encryption Standard (AES), 1999.

[4] J. Daemen, V. Rijmen, "AES Proposal: Rijndael". Original AES Submission to NIST, 1999. AES Processing Standards Publications, http://www.csrc.nist.gov/publications/fips/fips197/fips-197.pdf

[5] N. Sklavos, O. Koufopavlou, "Architectures and VLSI Implementations of the AES-Proposal Rijndael", IEEE Transactions on Computers, Vol. 51, Issue 12, pp. 1454-1459, 2002.

[6] Svetlin A. Manavski, "CUDA compatible GPU as an efficient hardware accelerator for AES cryptography", in Proc. IEEE International Conference on Signal Processing and Communication, ICSPC 2007 (Dubai, United Arab Emirates), pp. 65-68, 2007.

[7] Khronos Group, Kernel definition, page 20 of http://www.khronos.org/registry/cl/specs/opencl-1.0.48.pdf

[8] The features of C99 are described in the document "Rationale for International Standard Programming Languages C", Revision 5.10, available at the URL: http://www.open-std.org/jtc1/sc22/wg14/www/C99RationaleV5.10.pdf

[9] Hoozi AES Serial implementation, www.hoozi.com/Articles/AESEncryption.htm

[10] AMD, ATI Stream SDK v2.0 Beta, http://developer.amd.com/gpu/ATIStreamSDKBetaProgram/Pages/default.aspx

[11] Nvidia, "OpenCL GPU Computing Support on NVIDIA's CUDA Architecture GPUs", http://www.nvidia.com/object/cuda_opencl.html

[12] OpenSSL Project, http://www.openssl.org/


- Computer Architecture and OrganizationDocument50 pagesComputer Architecture and OrganizationJo SefNo ratings yet

- BD & CCDocument3 pagesBD & CCpratuaroskarNo ratings yet

- Lab2 Layered VerficiationDocument13 pagesLab2 Layered Verficiationfake godNo ratings yet

- E AddressBookProjectReportDocument55 pagesE AddressBookProjectReportSahil SethiNo ratings yet

- A Low Power Charge Based Integrate and Fire Circuit For Binarized Spiking NeuralDocument11 pagesA Low Power Charge Based Integrate and Fire Circuit For Binarized Spiking NeuralPhuc HoangNo ratings yet

- Efficient Non-Profiled Side Channel Attack Using Multi-Output Classification Neural NetworkDocument4 pagesEfficient Non-Profiled Side Channel Attack Using Multi-Output Classification Neural NetworkPhuc HoangNo ratings yet

- Cyber Security Intelligence and AnalyticsDocument1,476 pagesCyber Security Intelligence and AnalyticsJonathan Seagull LivingstonNo ratings yet

- Dop-DenseNet: Densely Convolutional Neural Network-Based Gesture Recognition Using A Micro-Doppler RadarDocument9 pagesDop-DenseNet: Densely Convolutional Neural Network-Based Gesture Recognition Using A Micro-Doppler RadarPhuc HoangNo ratings yet

- Zhang2012 PDFDocument13 pagesZhang2012 PDFPhuc HoangNo ratings yet

- 1709 01015Document32 pages1709 01015hrushiNo ratings yet

- LORA Water MonitoringDocument10 pagesLORA Water MonitoringPhuc HoangNo ratings yet

- PDA-based Portable Wireless ECG MonitorDocument4 pagesPDA-based Portable Wireless ECG MonitorPhuc HoangNo ratings yet

- InvMixColumn Decomposition and Multilevel Resource-2005Document4 pagesInvMixColumn Decomposition and Multilevel Resource-2005Phuc HoangNo ratings yet

- Underwater Optical Wireless CommunicationDocument30 pagesUnderwater Optical Wireless CommunicationPhuc HoangNo ratings yet

- Lower Bounds On Power-Dissipation For DSP Algorithms-Shanbhag96Document6 pagesLower Bounds On Power-Dissipation For DSP Algorithms-Shanbhag96Phuc HoangNo ratings yet

- Key Management Systems For Sensor Networks in The Context of The Internet of ThingsDocument13 pagesKey Management Systems For Sensor Networks in The Context of The Internet of ThingsPhuc HoangNo ratings yet

- 1709 01015Document32 pages1709 01015hrushiNo ratings yet

- Digital Signal Processing Techniques To Compensate For RF ImperfectionsDocument4 pagesDigital Signal Processing Techniques To Compensate For RF ImperfectionsPhuc HoangNo ratings yet

- Design of 512-Bit Logic Process-Based Single Poly EEPROM IPDocument9 pagesDesign of 512-Bit Logic Process-Based Single Poly EEPROM IPPhuc HoangNo ratings yet

- Canonical Signed Digit Representation For FIR Digital FiltersDocument11 pagesCanonical Signed Digit Representation For FIR Digital FiltersPhuc HoangNo ratings yet

- Active Semi 2014 PSG LED General Lighting Applications ReleasedDocument22 pagesActive Semi 2014 PSG LED General Lighting Applications ReleasedPhuc HoangNo ratings yet

- AES VLSI ArchitectureDocument11 pagesAES VLSI ArchitectureAbhishek JainNo ratings yet

- Single - and Multi-Core Configurable AESDocument12 pagesSingle - and Multi-Core Configurable AESshanmugkitNo ratings yet

- 14Document14 pages14suchi87No ratings yet

- 05325652Document7 pages05325652Banu KiranNo ratings yet

- A Digital Design Flow For Secure Integrated CircuitsDocument12 pagesA Digital Design Flow For Secure Integrated CircuitsDebabrata SikdarNo ratings yet

- Avnet Spartan-6 LX9 MicroBoard Configuration Guide v1 1Document43 pagesAvnet Spartan-6 LX9 MicroBoard Configuration Guide v1 1Phuc HoangNo ratings yet

- Newton Programme Vietnam Information Session Hanoi July 2015 Incl Presentation LinksDocument2 pagesNewton Programme Vietnam Information Session Hanoi July 2015 Incl Presentation LinksPhuc HoangNo ratings yet

- Ches02 AES SboxesDocument15 pagesChes02 AES SboxesPhuc HoangNo ratings yet

- Verilog HDL Testbench PrimerDocument14 pagesVerilog HDL Testbench Primernirmal_jate1No ratings yet

- Near-Field Magnetic Sensing System With High-Spatial Resolution and Application For Security of Cryptographic LSIs-2015 PDFDocument9 pagesNear-Field Magnetic Sensing System With High-Spatial Resolution and Application For Security of Cryptographic LSIs-2015 PDFPhuc HoangNo ratings yet

- A Novel Multicore SDR Architecture For Smart Vehicle Systems-Yao-Hua2012Document5 pagesA Novel Multicore SDR Architecture For Smart Vehicle Systems-Yao-Hua2012Phuc HoangNo ratings yet

- Adaptive Pipeline Depth Control For Processor Power ManagementDocument4 pagesAdaptive Pipeline Depth Control For Processor Power ManagementPhuc HoangNo ratings yet
