
Probability Fundamentals: Sampling


CHAPTER 2

PROBABILITY FUNDAMENTALS

Statistical methods are based on random sampling. Four functions are used to characterize the

behavior of random variables:

1. The probability density function

2. The cumulative distribution function

3. The reliability function

4. The hazard function

If any one of these four functions is known, the others can be derived. This chapter describes

these functions in detail.

Sampling

Statistical methods are used to describe populations by using samples. If the population of interest is small enough, statistical methods are not required; every member of the population can be measured. If every member of the population has been measured, a confidence interval for the mean is not necessary, because the mean is known with certainty (ignoring measurement error).

For statistical methods to be valid, all samples must be chosen randomly. Suppose the time to fail for 60-watt light bulbs is required to be greater than 100 hours, and the customer verifies this on a monthly basis by testing 20 light bulbs. If the first 20 light bulbs produced each month are placed on the test, any inferences about the reliability of the light bulbs produced during the entire month would be invalid because the 20 light bulbs tested do not represent the entire month of production. For a sample to be random, every member of the population must have an equal chance of being selected for the test.
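The difference between testing the first 20 bulbs and testing a random 20 can be sketched in a few lines of Python. This is only an illustration: the monthly production size of 5,000 bulbs, the seed, and the function name `draw_random_sample` are assumptions, not values from the text.

```python
import random


def draw_random_sample(population_size, sample_size, seed=None):
    """Pick unit indices so that every unit has an equal chance of selection."""
    rng = random.Random(seed)
    # random.sample draws without replacement, so no bulb is chosen twice.
    return sorted(rng.sample(range(population_size), sample_size))


# Hypothetical month of production: 5,000 bulbs numbered in build order.
month_size = 5000

first_twenty = list(range(20))                           # not a random sample
random_twenty = draw_random_sample(month_size, 20, seed=1)

print("first 20 built:", first_twenty)
print("random 20:     ", random_twenty)
```

The first list covers only the start of the month, while the second can land anywhere in the month's production, which is what the equal-chance requirement asks for.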

Now suppose that when 20 light bulbs, randomly selected from an entire month's production, are placed on a test stand, the test is ended after 10 of the light bulbs fail. Table 2.1 gives an example of what the test data might look like.

What is the average time to fail for the light bulbs? It obviously is not the average time to fail

of the 10 failed light bulbs, because if testing were continued until all 20 light bulbs failed,

10 data points would be added to the data set that are all greater than any of the 10 previous

data points. When testing is ended before all items fail, the randomness of the sample has

been destroyed. Because only 10 of the light bulbs failed, these 10 items are not a random

sample that is representative of the population. The initial sample of 20 items is a random sample


representative of the population. By ending testing after 10 items have failed, the randomness

has been destroyed by systematically selecting the 10 items with the smallest time to fail.

This type of situation is called censoring. Statistical inferences can be made using censored

data, but special techniques are required. The situation described above is right censoring; the

time to fail for a portion of the data is not known, but it is known that the time to fail is greater

than a given value. Right-censored data may be either time censored or failure censored. If

testing is ended after a predetermined amount of time, it is time censored. If testing is ended

after a predetermined number of failures, the data are failure censored. The data in Table 2.1

are failure censored. Time censoring is also known as Type I censoring, and failure censoring

is also known as Type II censoring.

The opposite of right censoring is left censoring. For a portion of the data, the absolute value is

not known, but it is known that the absolute value is less than a given value. An example of this

is an instrument used to measure the concentration of chemicals in solution. If the instrument

cannot detect the chemical below certain concentrations, it does not mean there is no chemical

present, but that the level of chemical is below the detectable level of the instrument.

Another type of right censoring is multiple censoring. Multiple censoring occurs when items

are removed from testing at more than one point in time. Field data are often multiple censored.

Table 2.2 gives an example of multiple censoring. A + next to a value indicates the item was

removed from testing at that time without failing.

TABLE 2.1

TIME TO FAIL FOR LIGHT BULBS (HOURS)

23    63    90
39    72    96
41    79
58    83

10 units survive for 96 hours without failing.

TABLE 2.2

MULTIPLE CENSORED DATA

112     172     220
145+    183     225+
151     184+    225+
160     191     225+
166+    199     225+
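One common way to hold censored data in code is as (time, failed) pairs, sketched below for Tables 2.1 and 2.2. The pair layout and the function name `summarize` are illustrative choices, not something the text prescribes. The sketch also shows why the mean of the failed units alone understates the average time to fail: counting each unfailed unit at its removal time already gives a larger value, and that value is itself only a lower bound.

```python
# Each record is (time, failed); failed=False marks a unit removed without failing.
table_2_1 = [(t, True) for t in (23, 39, 41, 58, 63, 72, 79, 83, 90, 96)]
table_2_1 += [(96, False)] * 10  # 10 survivors when the test stopped at 96 hours

table_2_2 = [
    (112, True), (145, False), (151, True), (160, True), (166, False),
    (172, True), (183, True), (184, False), (191, True), (199, True),
    (220, True), (225, False), (225, False), (225, False), (225, False),
]


def summarize(data):
    """Return (failures, censored, mean of failures only, lower bound on sample mean)."""
    failure_times = [t for t, failed in data if failed]
    censored_count = sum(1 for _, failed in data if not failed)
    naive_mean = sum(failure_times) / len(failure_times)
    # Treat every censored unit as if it failed at the moment it was removed.
    # Its true time to fail is at least that long, so this is a lower bound.
    lower_bound = sum(t for t, _ in data) / len(data)
    return len(failure_times), censored_count, naive_mean, lower_bound


for name, data in (("Table 2.1", table_2_1), ("Table 2.2", table_2_2)):
    n_fail, n_cens, naive, bound = summarize(data)
    print(f"{name}: {n_fail} failures, {n_cens} censored, "
          f"mean of failures = {naive:.1f}, lower bound on mean = {bound:.1f}")
```

Proper estimates from censored data need the special techniques mentioned above; this sketch only organizes the data and bounds the mean.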

There are two types of data: (1) continuous, and (2) discrete. Continuous variables are unlimited

in their degree of precision. For example, the length of a rod may be 5, 5.01, or 5.001 inches.


It is impossible to state that a rod is exactly 5 inches long, only that the length of the rod falls within a specific interval. Discrete variables are limited to specific values. For example, if a die is rolled, the result is either 1, 2, 3, 4, 5, or 6. There is no possibility of obtaining any value other than these six values.

Probability Density Function

The probability density function, $f(x)$, describes the behavior of a random variable. Typically, the probability density function is viewed as the shape of the distribution. Consider the histogram of the length of fish as shown in Figure 2.1.

Figure 2.1 Example histogram. [Figure not reproduced: frequency (0 to 100) versus length (150 to 250).]

A histogram is an approximation of the shape of the distribution. The histogram shown in Figure 2.1 appears symmetrical. Figure 2.2 shows this histogram with a smooth curve overlying the data. The smooth curve is the statistical model that describes the population, in this case the normal distribution. When using statistics, the smooth curve represents the population, and the differences between the sample data represented by the histogram and the population data represented by the smooth curve are assumed to be due to sampling error. In reality, the differences could also be caused by lack of randomness in the sample or an incorrect model.


The probability density function is similar to the overlaid model shown in Figure 2.2. The area below the probability density function to the left of a given value x is equal to the probability of the random variable represented on the x-axis being less than the given value x. Because the probability density function represents the entire sample space, the area under the probability density function must equal 1. Negative probabilities are impossible; therefore, the probability density function, $f(x)$, must be nonnegative for all values of x. Stating these two requirements mathematically,

$$\int_{-\infty}^{\infty} f(x)\,dx = 1 \tag{2.1}$$

and $f(x) \ge 0$ for continuous distributions. For discrete distributions,

$$\sum_{n} f(x_n) = 1 \tag{2.2}$$

where the sum is taken over all values of n, and $f(x_n) \ge 0$.
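The two requirements for a discrete distribution can be checked directly. The sketch below uses the six-sided die mentioned earlier and assumes the die is fair (each face has probability 1/6), which the text does not state; the function name `is_valid_pmf` is illustrative.

```python
from fractions import Fraction

# Assumed fair die: each of the six faces has probability 1/6.
die_pmf = {face: Fraction(1, 6) for face in range(1, 7)}


def is_valid_pmf(pmf):
    """Check the two requirements: no negative values, and a total of exactly 1."""
    no_negatives = all(p >= 0 for p in pmf.values())
    sums_to_one = sum(pmf.values()) == 1
    return no_negatives and sums_to_one


# A table of raw counts is not a valid probability function until it is rescaled.
counts = {1: 3, 2: 5, 3: 4, 4: 6, 5: 2, 6: 4}  # hypothetical tallies from 24 rolls
total = sum(counts.values())
rescaled = {face: Fraction(c, total) for face, c in counts.items()}

print("fair die valid:  ", is_valid_pmf(die_pmf))
print("raw counts valid:", is_valid_pmf(counts))
print("rescaled valid:  ", is_valid_pmf(rescaled))
```

Exact fractions are used so that the sum is compared with 1 without floating-point rounding.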

The area below the curve in Figure 2.2 is greater than 1; thus, this curve is not a valid probability density function. The density function representing the data in Figure 2.2 is shown in Figure 2.3.

Figure 2.2 Example histogram with overlaid model. [Figure not reproduced: frequency (0 to 100) versus length (150 to 250), with a smooth curve over the histogram.]


Figure 2.4 demonstrates how the probability density function is used to compute probabilities. The area of the shaded region represents the probability of a single fish, drawn randomly from the population, having a length less than 185. This probability is 15.9%.

Figure 2.5 demonstrates the probability of the length of one randomly selected fish having a length greater than 220.

The area of the shaded region in Figure 2.6 demonstrates the probability of the length of one randomly selected fish having a length greater than 205 and less than 215. Note that as the width of the interval decreases, the area, and thus the probability of the length falling in the interval, decreases. This also implies that the probability of the length of one randomly selected fish having a length exactly equal to a specific value is 0. This is because the area of a line is 0.
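These areas can be computed from the normal cumulative distribution. The text does not give the parameters of the fitted normal curve, so the sketch below assumes a mean of 200 and a standard deviation of 15; these values were chosen only because they reproduce the quoted 15.9% for lengths below 185. The function name `normal_cdf` is illustrative.

```python
import math

MEAN_LENGTH = 200.0  # assumed, not given in the text
SD_LENGTH = 15.0     # assumed, not given in the text


def normal_cdf(x, mean=MEAN_LENGTH, sd=SD_LENGTH):
    """Area under the normal density to the left of x."""
    return 0.5 * (1.0 + math.erf((x - mean) / (sd * math.sqrt(2.0))))


p_below_185 = normal_cdf(185)
p_above_220 = 1.0 - normal_cdf(220)
p_205_to_215 = normal_cdf(215) - normal_cdf(205)
p_209_to_211 = normal_cdf(211) - normal_cdf(209)   # narrower interval
p_exactly_210 = normal_cdf(210) - normal_cdf(210)  # zero-width interval

print(f"P(length < 185)       = {p_below_185:.3f}")
print(f"P(length > 220)       = {p_above_220:.3f}")
print(f"P(205 < length < 215) = {p_205_to_215:.3f}")
print(f"P(209 < length < 211) = {p_209_to_211:.3f}")
print(f"P(length = 210)       = {p_exactly_210:.3f}")
```

Shrinking the interval from 205-215 to 209-211 shrinks the probability, and an interval of zero width has zero probability, matching the argument above.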

Example 2.1: A probability density function is defined as

$$f(x) = \frac{a}{x}, \quad 1 \le x \le 10$$

For $f(x)$ to be a valid probability density function, what is the value of a?

Figure 2.3 Probability density function for the length of fish. [Figure not reproduced: probability density (0.000 to 0.030) versus length (155 to 243).]

Figure 2.4 The probability of the length being less than 185. [Figure not reproduced: probability density (0.000 to 0.030) versus length (155 to 243), with the region below 185 shaded.]

Figure 2.5 The probability of the length being greater than 220. [Figure not reproduced: probability density (0.000 to 0.030) versus length (155 to 243), with the region above 220 shaded.]

Solution: To be a valid probability density function, all values of $f(x)$ must be positive, and the area beneath $f(x)$ must equal 1. The first condition is met by restricting a and x to positive numbers. To meet the second condition, the integral of $f(x)$ from 1 to 10 must equal 1.

$$\int_{1}^{10} \frac{a}{x}\,dx = 1$$

$$a \ln x \,\Big|_{1}^{10} = 1$$

$$a \ln(10) - a \ln(1) = 1$$

$$a = \frac{1}{\ln(10)}$$
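The result of Example 2.1 can be checked numerically. The sketch below approximates the area under a/x between 1 and 10 with a simple midpoint rule; the helper name `integrate` and the step count are arbitrary choices for illustration.

```python
import math


def integrate(func, lower, upper, steps=100_000):
    """Midpoint-rule approximation of the integral of func over [lower, upper]."""
    width = (upper - lower) / steps
    return width * sum(func(lower + (i + 0.5) * width) for i in range(steps))


a = 1.0 / math.log(10.0)  # the value derived in Example 2.1


def pdf(x):
    """f(x) = a / x on the interval 1 <= x <= 10."""
    return a / x


area = integrate(pdf, 1.0, 10.0)
print(f"a = {a:.4f}, area under f(x) from 1 to 10 = {area:.6f}")
```

Any other value of a scales the area by the same factor, so only a = 1/ln(10) gives an area of 1.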

Cumulative Distribution Function

The cumulative distribution function, $F(x)$, denotes the area beneath the probability density function to the left of x, as shown in Figure 2.7.

Figure 2.6 The probability of the length being between 205 and 215. [Figure not reproduced: probability density (0.000 to 0.030) versus length (155 to 243), with the region between 205 and 215 shaded.]

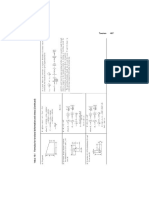

The area of the shaded region of the probability density function in Figure 2.7 is 0.2525. This

is the corresponding value of the cumulative distribution function at x = 190. Mathematically,

the cumulative distribution function is equal to the integral of the probability density function

to the left of x.

$$F(x) = \int_{-\infty}^{x} f(\tau)\,d\tau \tag{2.3}$$

Example 2.2: A random variable has the probability density function $f(x) = 0.125x$, where x is valid from 0 to 4. The probability of x being less than or equal to 2 is

$$F(2) = \int_{0}^{2} 0.125x\,dx = \frac{0.125x^{2}}{2}\,\Big|_{0}^{2} = 0.0625x^{2}\,\Big|_{0}^{2} = 0.25$$
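Example 2.2 can be reproduced with a closed-form cumulative distribution function and cross-checked by summing thin strips under the density. This is a sketch; the function names and the strip count are illustrative.

```python
def pdf(x):
    """f(x) = 0.125 x on 0 <= x <= 4, and 0 elsewhere."""
    return 0.125 * x if 0.0 <= x <= 4.0 else 0.0


def cdf(x):
    """F(x) = 0.0625 x^2 on 0 <= x <= 4, obtained by integrating the pdf from 0."""
    if x <= 0.0:
        return 0.0
    if x >= 4.0:
        return 1.0
    return 0.0625 * x * x


def cdf_numeric(x, steps=100_000):
    """Midpoint-rule area under the pdf between 0 and x, as a cross-check."""
    width = x / steps
    return width * sum(pdf((i + 0.5) * width) for i in range(steps))


print("F(2) closed form:", cdf(2.0))
print("F(2) numeric:    ", round(cdf_numeric(2.0), 6))
print("F(4) closed form:", cdf(4.0))
```

F(4) equals 1, which confirms that the density in Example 2.2 satisfies the area requirement of Eq. 2.1.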

Figure 2.7 Cumulative distribution function. [Figure not reproduced: upper panel, probability density (0.000 to 0.030) versus length (155 to 243); lower panel, cumulative distribution function (0.000 to 1.000) versus length (155 to 243).]

Example 2.3: The time to fail for a transistor has the following probability density function. What is the probability of failure before t = 200?

$$f(t) = 0.01e^{-0.01t}$$

Solution: The probability of failure before t = 200 is

$$P(t < 200) = \int_{0}^{200} 0.01e^{-0.01t}\,dt = -e^{-0.01t}\,\Big|_{0}^{200} = -e^{-2} - \left(-e^{0}\right) = 1 - e^{-2} = 0.865$$
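The arithmetic in Example 2.3 is easy to confirm in code. The sketch below evaluates the closed form obtained in the solution; the function name `prob_failure_before` is illustrative.

```python
import math

FAILURE_RATE = 0.01  # the constant in f(t) = 0.01 exp(-0.01 t)


def prob_failure_before(t, rate=FAILURE_RATE):
    """Integral of rate * exp(-rate * tau) from 0 to t, in closed form."""
    return 1.0 - math.exp(-rate * t)


p_fail_200 = prob_failure_before(200.0)
print(f"P(t < 200) = {p_fail_200:.3f}")
```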

Reliability Function

The reliability function is the complement of the cumulative distribution function. If modeling

the time to fail, the cumulative distribution function represents the probability of failure, and

the reliability function represents the probability of survival. Thus, the cumulative distribution

function increases from 0 to 1 as the value of x increases, and the reliability function decreases

from 1 to 0 as the value of x increases. This is shown in Figure 2.8.

As seen from Figure 2.8, the probability that the time to fail is greater than 190, the reliability, is 0.7475. The probability that the time to fail is less than 190, the cumulative distribution function, is

$$1 - 0.7475 = 0.2525$$

Mathematically, the reliability function is the integral of the probability density function from x to infinity.

$$R(x) = \int_{x}^{\infty} f(\tau)\,d\tau \tag{2.4}$$

Hazard Function

The hazard function is a measure of the tendency to fail; the greater the value of the hazard function, the greater the probability of impending failure. Technically, the hazard function is the probability of failure in the very small time interval, $x_0$ to $x_0 + \delta$, given survival until $x_0$. The hazard function is also known as the instantaneous failure rate. Mathematically, the hazard function is defined as

$$h(x) = \frac{f(x)}{R(x)} \tag{2.5}$$
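As an illustration of Eq. 2.5, the sketch below evaluates the hazard function for the transistor density from Example 2.3. The reliability function used here, exp(-0.01 t), is not stated in the text; it follows from applying Eq. 2.4 to that density. The function names are illustrative.

```python
import math

FAILURE_RATE = 0.01  # constant from Example 2.3


def pdf(t):
    """f(t) = 0.01 exp(-0.01 t)."""
    return FAILURE_RATE * math.exp(-FAILURE_RATE * t)


def reliability(t):
    """R(t): integral of f from t to infinity (Eq. 2.4), which is exp(-0.01 t)."""
    return math.exp(-FAILURE_RATE * t)


def hazard(t):
    """h(t) = f(t) / R(t), Eq. 2.5."""
    return pdf(t) / reliability(t)


times = (0.0, 50.0, 200.0, 1000.0)
hazards = [hazard(t) for t in times]
for t, h in zip(times, hazards):
    print(f"t = {t:6.0f}   R(t) = {reliability(t):.4f}   h(t) = {h:.4f}")
```

For this particular density the hazard stays at 0.01 for every t, even though the reliability keeps falling; other densities give hazards that rise or fall with time.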

Figure 2.8 The reliability function. [Figure not reproduced: upper panel, probability density (0.000 to 0.030) versus time to fail (155 to 243), with the cumulative distribution function and reliability function regions labeled; lower panel, probability (0.000 to 1.000) versus time to fail (155 to 243).]

Using Eq. 2.5 and Eqs. 2.6 and 2.7, if the hazard function, the reliability function, or the probability density function is known, the remaining two functions can be derived.

$$R(x) = e^{-\int_{-\infty}^{x} h(\tau)\,d\tau} \tag{2.6}$$

$$f(x) = h(x)\,e^{-\int_{-\infty}^{x} h(\tau)\,d\tau} \tag{2.7}$$

Example 2.4: Given the hazard function, $h(x) = 2x$, derive the reliability function and the probability density function.


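Solution: The reliability function is

$$R(x) = e^{-\int_{-\infty}^{x} 2\tau\,d\tau}$$

$$R(x) = e^{-x^{2}}$$

Because the hazard function cannot be negative, $h(x) = 2x$ applies only for $x \ge 0$; the hazard is taken as 0 for negative x, so the integral effectively runs from 0 to x.

The probability density function is

$$f(x) = h(x)R(x) = 2xe^{-x^{2}}$$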
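The derivation in Example 2.4 can be checked numerically by integrating the hazard function and comparing the result with the closed forms. This sketch assumes the integration starts at 0 (no failures before x = 0); the helper name `integrate` and the step count are illustrative.

```python
import math


def integrate(func, lower, upper, steps=10_000):
    """Midpoint-rule approximation of the integral of func over [lower, upper]."""
    width = (upper - lower) / steps
    return width * sum(func(lower + (i + 0.5) * width) for i in range(steps))


def hazard(x):
    """h(x) = 2x for x >= 0."""
    return 2.0 * x


def reliability_from_hazard(x):
    """Eq. 2.6 with the integral started at 0 (assumption: no failures before 0)."""
    return math.exp(-integrate(hazard, 0.0, x))


def pdf_from_hazard(x):
    """Eq. 2.7: f(x) = h(x) R(x)."""
    return hazard(x) * reliability_from_hazard(x)


for x in (0.5, 1.0, 2.0):
    print(f"x = {x}: R numeric = {reliability_from_hazard(x):.6f}, "
          f"exp(-x^2) = {math.exp(-x * x):.6f}, "
          f"f numeric = {pdf_from_hazard(x):.6f}")

# The derived density should enclose an area of 1 (the tail beyond 10 is negligible).
total_area = integrate(lambda x: 2.0 * x * math.exp(-x * x), 0.0, 10.0)
print(f"area under f(x) = {total_area:.6f}")
```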

Expectation

Several terms are used to describe distributions. The most common terms are the mean, variance, skewness, and kurtosis. These descriptors are derived from the moments of the distribution.

Readers with an engineering background may recall that the center of gravity of a shape is

$$\mathrm{cog} = \frac{\int_{-\infty}^{\infty} x f(x)\,dx}{\int_{-\infty}^{\infty} f(x)\,dx} \tag{2.8}$$

The mean, or average, of a distribution is its center of gravity. In Eq. 2.8, the denominator is equal to the area below $f(x)$, which is equal to 1 by definition for valid probability distributions. The numerator in Eq. 2.8 is the first moment about the origin. Thus, the mean of a distribution can be determined from the expression

$$E(x) = \int_{-\infty}^{\infty} x f(x)\,dx \tag{2.9}$$

The second moment about the origin is

$$E\left(x^{2}\right) = \int_{-\infty}^{\infty} x^{2} f(x)\,dx \tag{2.10}$$

The variance of a distribution is equal to the second moment about the mean, which is

$$\sigma^{2} = \int_{-\infty}^{\infty} x^{2} f(x)\,dx - \mu^{2} \tag{2.11}$$

where $\mu = E(x)$ is the mean.
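Equations 2.9 through 2.11 can be applied to the density from Example 2.2, $f(x) = 0.125x$ on 0 to 4. The sketch below evaluates the integrals numerically; the exact values are 8/3 for the mean and 8/9 for the variance. The helper name `integrate` is illustrative.

```python
def integrate(func, lower, upper, steps=100_000):
    """Midpoint-rule approximation of the integral of func over [lower, upper]."""
    width = (upper - lower) / steps
    return width * sum(func(lower + (i + 0.5) * width) for i in range(steps))


def pdf(x):
    """Density from Example 2.2: f(x) = 0.125 x on 0 <= x <= 4."""
    return 0.125 * x


LOWER, UPPER = 0.0, 4.0  # the density is zero outside this range

mean = integrate(lambda x: x * pdf(x), LOWER, UPPER)               # Eq. 2.9
second_moment = integrate(lambda x: x * x * pdf(x), LOWER, UPPER)  # Eq. 2.10
variance = second_moment - mean ** 2                               # Eq. 2.11

print(f"mean          = {mean:.4f}")
print(f"second moment = {second_moment:.4f}")
print(f"variance      = {variance:.4f}")
```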

The variance is a measure of the dispersion in a distribution. In Figure 2.9, the variance of

Distribution A is greater than the variance of Distribution B.

Figure 2.9 Distribution variance. [Figure not reproduced: probability density (0.000 to 0.040) versus X (155 to 243) for two distributions labeled A and B.]

The skewness of a distribution is equal to the third moment about the mean. If the skewness is positive, the distribution is right skewed. If the skewness is negative, the distribution is left skewed. Right and left skewness are demonstrated in Figure 2.10.

Kurtosis is the fourth moment about the mean and is a measure of the peakedness of the distribution.
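The third and fourth moments about the mean can be evaluated the same way as the variance. The sketch below again uses the density from Example 2.2, $f(x) = 0.125x$ on 0 to 4, whose mean is 8/3; the helper names are illustrative. Many references additionally divide these moments by the third and fourth powers of the standard deviation to obtain unitless skewness and kurtosis coefficients; that scaling is not applied here.

```python
def integrate(func, lower, upper, steps=100_000):
    """Midpoint-rule approximation of the integral of func over [lower, upper]."""
    width = (upper - lower) / steps
    return width * sum(func(lower + (i + 0.5) * width) for i in range(steps))


def pdf(x):
    """Density from Example 2.2: f(x) = 0.125 x on 0 <= x <= 4."""
    return 0.125 * x


MEAN = 8.0 / 3.0  # mean of this density, from the first moment about the origin


def central_moment(order):
    """Moment of the given order about the mean, over the range 0 to 4."""
    return integrate(lambda x: (x - MEAN) ** order * pdf(x), 0.0, 4.0)


third_moment = central_moment(3)
fourth_moment = central_moment(4)

print(f"third moment about the mean  = {third_moment:.4f}")
print(f"fourth moment about the mean = {fourth_moment:.4f}")
print("left skewed" if third_moment < 0 else "right skewed or symmetric")
```

The third moment comes out negative, which agrees with the shape of this density: it rises steadily toward x = 4, leaving the longer tail on the left.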

Figure 2.10 Right and left skewness. [Figure not reproduced: probability density (0.000 to 0.030) versus X (0 to 240) for two distributions labeled Left Skewed and Right Skewed.]

Summary

A statistical distribution can be described by the following functions:

Probability density function, $f(x)$

Cumulative distribution function, $F(x)$

Reliability function, $R(x)$

Hazard function, $h(x)$

When one of these functions is defined, the others can be derived. These functions, with characteristics concerning central tendency, spread, and symmetry, provide a description of the population being modeled.
