Robust Diagnostics for Bayesian Compressive Sensing with Applications to Structural Health Monitoring

Yong Huang*(a,b), James L. Beck(b), Hui Li(a), Stephen Wu(b)

(a) School of Civil Engineering, Harbin Institute of Technology, Harbin, China 150090;

(b) Division of Engineering and Applied Science, California Institute of Technology, Pasadena, CA, USA 91125

ABSTRACT

In structural health monitoring (SHM) systems for civil structures, signal compression is often important for reducing the cost of data transfer and storage because of the large volumes of data generated by the monitoring system. Compressive sensing is a novel data compression method whereby one does not measure the entire signal directly but rather a set of related (projected) measurements. The length of the required compressive-sensing measurements is typically much smaller than that of the original signal, which increases the efficiency of data transfer and storage. Recently, a Bayesian formalism has also been employed for optimal compressive sensing; it adopts ideas from the relevance vector machine (RVM), such as the automatic relevance determination (ARD) prior, as a decompression tool. Recent publications illustrate the benefits of using the Bayesian compressive sensing (BCS) method. However, none of these publications has investigated the robustness of the BCS method. We show that the usual RVM optimization algorithm lacks robustness when the number of measurements is much smaller than the length of the signal because it can produce sub-optimal signal representations; as a result, BCS is not robust when high compression efficiency is required. This induces a tradeoff between efficiently compressing data and accurately decompressing it. Based on a study of the robustness of the BCS method, diagnostic tools are proposed to investigate whether the compressed representation of the signal is optimal. With reliable diagnostics, the performance of the BCS method can be monitored effectively. The numerical results show that the proposed diagnostic procedure is a powerful tool for examining the correctness of reconstruction results without knowing the original signal.

Keywords: Bayesian compressive sensing, data compression, structural health monitoring, relevance vector machine, automatic relevance determination, robust diagnostics

1. INTRODUCTION

Structural health monitoring (SHM) is an active and well-established research area. SHM systems seek to detect damage and predict the remaining service life of civil structures through structural sensor networks. However, the complexity and large scale of civil structures call for large monitoring systems, often with hundreds to thousands of sensor nodes. For example, approximately 800 sensors were installed in the Wind and Structural Health Monitoring System (WASHMS) on the Tsing Ma, Ting Kau, and Kap Shui Mun bridges in Hong Kong, China [1]. A large amount of sensor data is usually produced, especially when the long lifetime of a structure is considered. Therefore, innovative sensor data compression techniques are necessary to reduce the cost of transferring and storing the signals generated by such a large-scale sensor network.

Several novel data compression techniques for SHM systems, especially for wireless sensor networks, have been developed, including wavelet-based compression techniques [2-4] and a Huffman lossless compression technique [5]. However, all these methods belong to the traditional framework in which the sampling rate satisfies the conditions of the Nyquist-Shannon theorem.

*yonghuan@caltech.edu

Smart Sensor Phenomena, Technology, Networks, and Systems 2011,

edited by Wolfgang Ecke, Kara J. Peters, Theodore E. Matikas, Proc. of SPIE Vol. 7982

79820J 2011 SPIE CCC code: 0277-786X/11/$18 doi: 10.1117/12.880687


Recent results demonstrate that sparse or compressible signals can be directly acquired at a rate significantly lower than the Nyquist rate, using a novel sampling strategy called compressive sensing (CS) [6-10]. A much smaller number of measurements is obtained by projecting the signal onto a small set of vectors that are incoherent with respect to the basis vectors that give a sparse representation of the signal. This new technique may come to underlie procedures for sampling and compressing data simultaneously, thereby increasing the efficiency of data transfer and storage. The signals can subsequently be recovered from the acquired measurements by a special decompression algorithm. Recently, there has been much interest in extending the CS method, including Bayesian CS (BCS) [11], distributed CS [12] and 1-bit CS [13].

As a damage detection and characterization strategy for civil structures, SHM systems should guarantee that the signal reconstructed from compressed data is accurate. For compressive sensing based on solving a convex optimization problem, a condition on the number of measurements needed for perfect reconstruction of the signal is provided by Candes et al. [14], Donoho et al. [15] and Baraniuk et al. [16]. However, these results require the assumption that the sparsity of the original signal is known in advance.

A BCS technique has been proposed that employs a Bayesian formalism to estimate the underlying signal based on CS measurements. This technique adopts the automatic relevance determination (ARD) prior of the relevance vector machine (RVM) as a decompression tool [21, 22]. One of the benefits of using the BCS method is that a measure of confidence in the inverted signal is given through variances on each reconstructed data point. He and Carin [17] pointed out that these variances could be used to determine whether a sufficient number of CS measurements have been performed, although an exact strategy for implementing this has not been presented.

In this paper, the robustness of the BCS technique is studied, and diagnostic tools are proposed to investigate whether the compressed representation of the signal is optimal. A set of numerical results is used to validate the proposed methods.

2. BAYESIAN COMPRESSIVE SENSING

Consider a discrete-time signal $x = [x(1), \ldots, x(N)]^T$ in $\mathbb{R}^N$ represented in terms of an orthogonal basis as

$$x = \sum_{n=1}^{N} w_n \Psi_n \quad \text{or} \quad x = \Psi w \qquad (1)$$

where $\Psi = [\Psi_1, \ldots, \Psi_N]$ is the $N \times N$ basis matrix with the orthonormal basis of $N \times 1$ vectors $\{\Psi_n\}_{n=1}^{N}$ as columns; $w$ is a sparse vector, i.e., most of its components are zero or very small (with minimal impact on the signal). The locations of the nonzero components of $w$ are referred to as the model indices, and their number represents the sparsity of the model representation of the signal $x$ in Equation (1).

In the framework of CS, one infers the coefficients $w_n$ of interest from compressed data instead of directly sampling the signal $x$. The compressed data vector $y$ is composed of $K$ individual measurements obtained by linearly projecting the signal $x$ using a chosen random projection matrix $\Phi$:

$$y = \Phi x + n = \Phi \Psi w + n = \Theta w + n \qquad (2)$$

where $\Theta = \Phi \Psi$ is known and $n$ represents the acquisition noise, which is modeled as a zero-mean Gaussian vector with covariance matrix $\sigma^2 I_K$. Since $\Phi$ is a $K \times N$ matrix with $K < N$ to give good data compression, the inversion to find the signal $x$ is ill-posed.

However, by exploiting the sparsity of the representation of $x$ in the basis $\{\Psi_n\}_{n=1}^{N}$, the ill-posed problem can be solved by an optimization formulation to estimate $w$:

$$\hat{w} = \arg\min_{w} \left\{ \|y - \Theta w\|_2^2 + \rho \|w\|_1 \right\} \qquad (3)$$

where the parameter $\rho$ balances the trade-off between the first and second terms in the equation, i.e., between how well the data are fitted and how sparse the signal is [14,15].
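As a rough illustration of Equations (2) and (3), the following sketch simulates compressed measurements and estimates the sparse coefficients by iterative soft thresholding (ISTA). ISTA is only one of several possible solvers and is not the one used in this paper; the identity basis, the Gaussian projection matrix, the problem sizes, the noise level and the value of the penalty parameter are all assumptions chosen for the example.

```python
import numpy as np

def soft_threshold(v, tau):
    """Shrink every entry of v toward zero by tau (proximal map of the l1 norm)."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def objective(theta, y, w, rho):
    """Cost of Equation (3): squared data misfit plus rho times the l1 norm."""
    return np.sum((y - theta @ w) ** 2) + rho * np.sum(np.abs(w))

def ista(theta, y, rho, n_iter=500):
    """Minimize ||y - theta w||_2^2 + rho ||w||_1 by iterative soft thresholding."""
    # Step size = 1 / Lipschitz constant of the gradient of the quadratic term.
    step = 1.0 / (2.0 * np.linalg.norm(theta, 2) ** 2)
    w = np.zeros(theta.shape[1])
    for _ in range(n_iter):
        grad = 2.0 * theta.T @ (theta @ w - y)
        w = soft_threshold(w - step * grad, step * rho)
    return w

# Illustrative problem (all sizes and values are assumptions).
rng = np.random.default_rng(0)
N, K, T = 128, 60, 5
psi = np.eye(N)                                  # basis matrix (identity assumed)
phi = rng.standard_normal((K, N)) / np.sqrt(K)   # random projection matrix
theta = phi @ psi                                # combined matrix of Equation (2)
w_true = np.zeros(N)
w_true[rng.choice(N, size=T, replace=False)] = rng.choice([-1.0, 1.0], size=T)
y = theta @ w_true + 0.005 * rng.standard_normal(K)

rho = 0.02
w_hat = ista(theta, y, rho, n_iter=2000)
x_hat = psi @ w_hat                              # reconstructed signal
print("cost at zero:", objective(theta, y, np.zeros(N), rho))
print("cost at estimate:", objective(theta, y, w_hat, rho))
```

A larger penalty parameter pushes more coefficients to exactly zero at the price of a worse data fit, which is the trade-off described for Equation (3).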

The ill-posed inverse problem can also be tackled from a Bayesian perspective, which has certain distinct advantages over previously published CS inversion algorithms such as linear programming [18] and greedy algorithms [19-20]. It also provides a sparse solution for estimating the underlying signal and, in particular, a measure of the uncertainty of the reconstructed signal.

Ji et al. [11] adopt the ideas of the relevance vector machine (RVM) proposed in [21-23] for regression. The RVM method is implemented by using the ARD prior, which not only allows regularization of the inversion but also controls model complexity by automatically selecting the relevant regression terms to give a sparse representation of the signal. The characteristic feature of this prior is that there is an independent hyperparameter for each basis coefficient:

$$p(w \mid \alpha_1, \alpha_2, \ldots, \alpha_N) = \prod_{n=1}^{N} \left[ (2\pi)^{-1/2} \alpha_n^{1/2} \exp\left( -\tfrac{1}{2} \alpha_n w_n^2 \right) \right] = N\left(0, \mathrm{diag}(\alpha_1^{-1}, \ldots, \alpha_N^{-1})\right) \qquad (4)$$

where the hyperparameter $\alpha_n$ is the inverse of the prior variance of each coefficient $w_n$.

The hyperparameters are selected by maximizing the Bayesian evidence (marginal likelihood):

$$p(y \mid \alpha) = \int p(y \mid w)\, p(w \mid \alpha)\, dw \qquad (5)$$

where the likelihood function is given by:

$$p(y \mid w) = N(\Theta w, \sigma^2 I_K) \qquad (6)$$

because of the Gaussian model for the noise in Equation (2). Maximizing the evidence penalizes signal models that are too simple or too complex, and this has an interesting information-theoretic interpretation [24].
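Because the prior in Equation (4) and the likelihood in Equation (6) are both Gaussian, the integral in Equation (5) has a closed form. The sketch below evaluates it, together with the Gaussian posterior of the coefficients, using the standard RVM expressions from [21]: the evidence is a zero-mean Gaussian in y with covariance equal to the noise covariance plus the projected prior covariance. The function names, the problem sizes and the numerical values are assumptions made for illustration; the matrix passed in is meant to hold only the columns of the currently active model indices.

```python
import numpy as np

def posterior(theta, y, alpha, sigma2):
    """Posterior mean and covariance of w for fixed alpha and noise variance."""
    precision = theta.T @ theta / sigma2 + np.diag(alpha)
    cov = np.linalg.inv(precision)
    mean = cov @ theta.T @ y / sigma2
    return mean, cov

def log_evidence(theta, y, alpha, sigma2):
    """Log of Equation (5): log N(y | 0, C) with C = sigma2*I + theta A^-1 theta^T."""
    k = theta.shape[0]
    # theta / alpha scales column j of theta by 1/alpha_j, i.e. theta @ inv(A).
    c = sigma2 * np.eye(k) + (theta / alpha) @ theta.T
    _, logdet = np.linalg.slogdet(c)
    quad = y @ np.linalg.solve(c, y)
    return -0.5 * (k * np.log(2.0 * np.pi) + logdet + quad)

# Illustrative data (sizes and values are assumptions).
rng = np.random.default_rng(1)
K, M = 30, 8                      # measurements, active coefficients
theta_active = rng.standard_normal((K, M))
alpha = np.ones(M)
sigma2 = 0.01
w = rng.standard_normal(M)
y = theta_active @ w + np.sqrt(sigma2) * rng.standard_normal(K)

mean, cov = posterior(theta_active, y, alpha, sigma2)
coef_variances = np.diag(cov)     # posterior variance of each coefficient
print("log evidence:", log_evidence(theta_active, y, alpha, sigma2))
```

The diagonal of the posterior covariance is the source of the per-coefficient uncertainty that the Bayesian formulation provides; comparing the log evidence of different sets of active indices is what the optimization over the hyperparameters does implicitly.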

3. ROBUSTNESS PROBLEM FOR BCS RECONSTRUCTION

In order to increase the efficiency of compressive sensing, the number of measurements must be reduced to be much smaller than the length of the original signal. As a result, there is a large number of local maxima in the evidence over $\alpha$ that can trap the optimization [25].

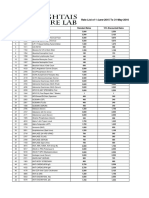

Figure 1 illustrates the problem by showing some results from applying the fast optimization algorithm in [23] to different samples of the data $y$ from the same signal $x$, obtained by choosing different random projection matrices $\Phi$ while the orthogonal basis $\Psi$ was held fixed. The signal of length $N = 512$ consisted of $T = 20$ uniformly spaced spikes. The reconstruction error and converged value of the log evidence are plotted against the size of the final reconstructed signal (a constant noise variance $\sigma^2$ was used that was 10% of the 2-norm of the measurement $y$). The figure shows that only a few of the optimization runs produce the correct signal size of 20, corresponding to the global maximum of the log evidence near 400. Most of the runs give local maxima of the evidence that correspond to larger numbers of non-zero signal components.

It is also found that the global maximum of the evidence corresponds to the most stable signal model; it is almost the only model in its neighborhood. The signal models at local maxima of the evidence are much less stable; they have the potential to converge to other models if perturbed slightly and re-run.

4. ROBUST DIAGNOSTIC METHODS FOR BCS RECONSTRUCTION

We conclude that the BCS technique lacks robustness when high compression efficiency is required, and so a robustness diagnostic that works without knowledge of the original signal is necessary. Such robust diagnostic methods are proposed here; they utilize the different levels of stability between the reconstructed signals that correspond to global and local maxima of the evidence. The signal indices of the previous final reconstructed signal are used to initialize a new diagnostic process. This process tries to perturb the original converged result to another local maximum based on the various strategies described next. If the current local maximum is actually the global maximum, then this process will not make any changes in the results, revealing that the optimum reconstructed signal has been achieved.

Figure 1. Reconstruction error (a) and log evidence (b) versus size of the final models

4.1 Change in noise variance $\sigma^2$

The selection of the noise variance $\sigma^2$ may affect the algorithm significantly because of the underdetermined nature of the inverse problem. The noise variance $\sigma^2$ has a significant influence on the trade-off between how well the signal model fits the data and how sparse it is.

The sparsity of the final reconstructed signals versus the noise variance $\sigma^2$ is investigated, as shown in Figure 2. The larger $\sigma^2$ is, the sparser the final reconstructed signal is. This is because a larger noise variance $\sigma^2$ reduces the information available to support more complicated reconstructed signals.

Figure 2. Plot of sparsity as a function of increasing noise variance $\sigma^2$ (different training processes with fixed $\sigma^2$, the same original signal and the same projection matrix)

[Figure 1 plots, both for N = 512, K = 100, T = 20, signal with uniform spikes: (a) reconstruction error versus size of reconstructed signal model; (b) log evidence versus size of reconstructed signal model.]

[Figure 2 plot: size of reconstructed signal model versus multiplier of noise variance; legend: correct reconstructed signal models (no multiplier), incorrect reconstructed signal models (no multiplier).]


4.2 Change in indices of the signal model

Another method to perturb the converged solution for the reconstructed signal is to delete or add some indices in the signal model (i.e., delete or add non-zero coefficients in $w$). Since the signals corresponding to local maxima of the evidence are less stable than the signal corresponding to the global maximum, changing the indices of a signal at a local maximum will lead to convergence to a new local maximum, showing that the reconstructed signal is sub-optimal.

4.3 Summary of robust diagnostic procedure

The method employs the signal indices of the previous final reconstructed model to initialize a new diagnostic learning process, and the initial values of the hyperparameter for every signal index are re-estimated. There are four diagnostic steps, shown below (the value of the noise variance for the last iteration of the original training process is $\sigma_{or}^2$):

(1). Initialize with 80% of the signal indices obtained from the first trial (chosen at random), take the noise variance $\sigma_{new,1}^2$ to be twice $\sigma_{or}^2$, then perform the second trial with constant $\sigma_{new,1}^2$.

(2). Initialize with all signal indices obtained from the first trial and 20% of extra random signal indices, take the noise variance $\sigma_{new,2}^2$ to be half of $\sigma_{or}^2$, then perform the second trial with constant $\sigma_{new,2}^2$.

(3). Set the final signal of the second trial of step 1 as the original signal model and perform step 2 again.

(4). Set the final signal of the second trial of step 2 as the original signal model and perform step 1 again.

This diagnostic procedure detects an incorrect reconstructed signal when, in any of the diagnostic steps, either the original signal model (the non-zero coefficients in $w$) is not included in the final signal model or the final signal model is not included in the original one. If at least one diagnostic step shows that the reconstructed signal is incorrect, the result is judged to be an incorrect reconstruction.
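The four diagnostic steps can be sketched in code as follows. This is only an outline under several assumptions, not the authors' implementation: `fit` is a hypothetical callable that re-runs the BCS/RVM training from a given set of initial indices with a fixed noise variance and returns the indices of the final model; "20% of extra random signal indices" is read as 20% of the current model size; and a step flags the reconstruction whenever the final index set differs from the step's original index set, which is one literal reading of the decision rule above.

```python
import numpy as np

def step1_init(indices, sigma2_or, rng):
    """Step (1): keep a random 80% of the indices and double the noise variance."""
    indices = np.asarray(indices)
    n_keep = int(round(0.8 * indices.size))
    kept = rng.choice(indices, size=n_keep, replace=False)
    return np.sort(kept), 2.0 * sigma2_or

def step2_init(indices, sigma2_or, n_total, rng):
    """Step (2): keep all indices, add 20% extra random ones, halve the variance."""
    indices = np.asarray(indices)
    n_extra = int(round(0.2 * indices.size))   # assumption: 20% of the model size
    candidates = np.setdiff1d(np.arange(n_total), indices)
    extra = rng.choice(candidates, size=n_extra, replace=False)
    return np.sort(np.concatenate([indices, extra])), 0.5 * sigma2_or

def run_diagnostics(fit, indices, sigma2_or, n_total, seed=0):
    """Run steps (1)-(4); return (flagged, per-step flags).

    fit(init_indices, sigma2) is a hypothetical solver wrapper that returns
    the indices of the model it converges to.
    """
    rng = np.random.default_rng(seed)

    def drop_step(model):
        init, s2 = step1_init(model, sigma2_or, rng)
        return fit(init, s2)

    def add_step(model):
        init, s2 = step2_init(model, sigma2_or, n_total, rng)
        return fit(init, s2)

    final1 = drop_step(indices)   # step (1)
    final2 = add_step(indices)    # step (2)
    final3 = add_step(final1)     # step (3): step 2 applied to the result of step 1
    final4 = drop_step(final2)    # step (4): step 1 applied to the result of step 2

    pairs = [(indices, final1), (indices, final2), (final1, final3), (final2, final4)]
    flags = [set(map(int, a)) != set(map(int, b)) for a, b in pairs]
    return any(flags), flags

# Toy stand-ins for the solver, used only to exercise the control flow.
stable_model = np.arange(10)
fit_stable = lambda init, s2: stable_model     # always returns the same model
fit_unstable = lambda init, s2: init           # never moves from its start

print(run_diagnostics(fit_stable, stable_model, 0.1, n_total=50))
print(run_diagnostics(fit_unstable, stable_model, 0.1, n_total=50))
```

With the stand-in solver that always returns the same model, no step changes the index set and nothing is flagged; with the stand-in that stays wherever it is started, the perturbed index sets persist and the reconstruction is flagged.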

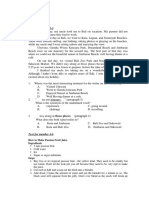

4.4 Numerical validations for robust diagnosis

For the numerical validation of the robust diagnosis procedure, 1000 runs are performed, as shown in Figure 4. Signals with length $N = 512$ and non-zero spikes created by choosing $T = 20$ discrete times at random are considered; the non-zero spikes of the signals are drawn from two different probability distributions: uniform $\pm 1$ random spikes, and zero-mean unit-variance Gaussian spikes (Figure 3). Uniform random projection is employed to construct the projection matrix $\Phi$. In the experiment, the number of measurements is $K = 60$ for signals with non-uniform spikes and $K = 90$ for signals with uniform spikes. For the BCS implementation [11], we used the bcs_ver0.1 package available online at http://people.ee.duke.edu/~lcarin/BCS.html, and the BCS parameters were set to those suggested by bcs_ver0.1. The reconstruction of the original signal is defined as incorrect if the actual reconstruction error is larger than 0.01, which we can compute because the original signal is known in the test. The trials are sorted to better visualize the accuracy of the different diagnostic processes. Figures 4(a) and 4(b) show that the robust diagnostic procedure is a powerful tool for examining the correctness of reconstruction results without knowing the original signal.
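The test signals described above could be generated along the following lines. This is a minimal sketch, not the code used for the reported experiments: the function names are hypothetical, the entries of the projection matrix are assumed to be uniform on [-1, 1] (the text says only "uniform random projection"), and the reconstruction error is assumed to be the relative 2-norm error, which the text does not define explicitly.

```python
import numpy as np

def make_spike_signal(n, t, kind, rng):
    """Length-n signal with t non-zero spikes at random discrete times."""
    x = np.zeros(n)
    locations = rng.choice(n, size=t, replace=False)
    if kind == "uniform":
        x[locations] = rng.choice([-1.0, 1.0], size=t)   # +/-1 spikes
    elif kind == "gaussian":
        x[locations] = rng.standard_normal(t)            # zero mean, unit variance
    else:
        raise ValueError("kind must be 'uniform' or 'gaussian'")
    return x

def uniform_projection(k, n, rng):
    """K x N projection matrix with entries uniform on [-1, 1] (assumed range)."""
    return rng.uniform(-1.0, 1.0, size=(k, n))

def relative_error(x, x_hat):
    """Relative 2-norm reconstruction error (assumed definition)."""
    return np.linalg.norm(x - x_hat) / np.linalg.norm(x)

rng = np.random.default_rng(0)
N, T = 512, 20
x_uniform = make_spike_signal(N, T, "uniform", rng)
x_gaussian = make_spike_signal(N, T, "gaussian", rng)
phi_uniform = uniform_projection(90, N, rng)    # K = 90 for uniform spikes
phi_gaussian = uniform_projection(60, N, rng)   # K = 60 for non-uniform spikes
y_uniform = phi_uniform @ x_uniform             # noise-free compressed measurements
y_gaussian = phi_gaussian @ x_gaussian

# A reconstruction x_hat would be labeled incorrect when its error exceeds 0.01.
is_incorrect = lambda x, x_hat: relative_error(x, x_hat) > 0.01
```

Here the spikes are placed directly in the signal, so the sparsifying basis is implicitly the identity; this matches the spike signals of Figure 3 but is stated as an assumption.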


Figure 3. Signal with uniform spikes and non-uniform spikes

[Figure 3 plots: signal with uniform spikes; signal with non-uniform spikes.]

Figure 4. Results of the robust diagnosis: (a) N = 512, T = 20, K = 90, signal with uniform spikes; (b) N = 512, T = 20, K = 60, signal with non-uniform spikes

5. CONCLUSION

The robustness of the BCS method is investigated. We show that the optimization algorithm of the RVM lacks robustness when the number of measurements is much smaller than the length of the signal because it often converges to sub-optimal signal representations that are local maxima of the evidence, so BCS is not robust when high compression efficiency is required.

Based on a study of the robustness of the BCS method, diagnostic tools are proposed to investigate whether the compressed representation of the signal is optimal. With reliable diagnostics, the performance of the BCS method can be monitored. Numerical results based on simulating SHM signals show that the proposed diagnostic procedure is a powerful tool for examining the correctness of reconstruction results without knowing the original signal.

ACKNOWLEDGMENTS

One of the authors (Yong Huang) acknowledges the support provided by the China Scholarship Council while he was a Visiting Student Researcher at the California Institute of Technology. This research is also supported by grants from the National Natural Science Foundation of China (NSFC grant nos. 50538020, 50278029 and 50525823), which supported the first and third authors (Yong Huang and Hui Li).


REFERENCES

[1] Ko, J. M., "Health monitoring and intelligent control of cable-supported bridges," Proceedings of the International Workshop on Advanced Sensors, Structural Health Monitoring and Smart Structures, Keio University, Tokyo, Japan, 10-11 (2003).

[2] Zhang, Y. and Li, J., "Wavelet-based vibration sensor data compression technique for civil infrastructure condition monitoring," Journal of Computing in Civil Engineering 20(6), 390-399 (2006).

[3] Xu, N., Rangwala, S., Chintalapudi, K., Ganesan, D., Broad, A., Govindan, R. and Estrin, D., "A wireless sensor network for structural monitoring," Proceedings of the ACM Conference on Embedded Networked Sensor Systems, Baltimore, MD, USA (2004).

[4] Caffrey, J., Govindan, R., Johnson, E., Krishnamachari, B., Masri, S., Sukhatme, G., Chintalapudi, K., Dantu, K., Rangwala, S., Sridharan, A., Xu, N. and Zuniga, M., "Networked Sensing for Structural Health Monitoring," Proceedings of the 4th International Workshop on Structural Control, New York, NY, 57-66 (2004).

[5] Lynch, J. P., Sundararajan, A., Law, K. H., Kiremidjian, A. S. and Carryer, E., "Power-efficient data management for a wireless structural monitoring system," Proceedings of the 4th International Workshop on Structural Health Monitoring, Stanford, CA, 1177-1184 (2003).

[6] Candes, E. J., "Compressive sampling," Proceedings of the International Congress of Mathematicians, Madrid, Spain, 1433-1452 (2006).

[7] Donoho, D., "Compressed sensing," IEEE Transactions on Information Theory 52(4), 1289-1306 (2006).

[8] Candes, E. J. and Tao, T., "Near-optimal signal recovery from random projections: Universal encoding strategies?" IEEE Transactions on Information Theory 52(12), 5406-5425 (2006).

[9] Candes, E. J. and Tao, T., "Decoding by linear programming," IEEE Transactions on Information Theory 51, 4203-4215 (2005).

[10] Donoho, D., "For most large underdetermined systems of linear equations, the minimal $\ell_1$ norm solution is also the sparsest solution," Communications on Pure and Applied Mathematics 59(6), 797-829 (2006).

[11] Ji, S., Xue, Y. and Carin, L., "Bayesian compressive sensing," IEEE Transactions on Signal Processing 56(6), 2346-2356 (2008).

[12] Baron, D., Duarte, M. F., Wakin, M. B., Sarvotham, S. and Baraniuk, R. G., "Distributed compressive sensing," Preprint (2005).

[13] Boufounos, P. and Baraniuk, R., "1-Bit compressive sensing," Conf. on Info. Sciences and Systems (CISS), Princeton, New Jersey (2008).

[14] Candes, E. J., Romberg, J. and Tao, T., "Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information," IEEE Transactions on Information Theory 52(2), 489-509 (2006).

[15] Donoho, D., "Compressed sensing," IEEE Transactions on Information Theory 52(4), 1289-1306 (2006).

[16] Baraniuk, R., Davenport, M., DeVore, R. and Wakin, M., "A simple proof of the restricted isometry property for random matrices," Constructive Approximation 28(3), 253-263 (2008).

[17] He, L. and Carin, L., "Exploiting Structure in Wavelet-Based Bayesian Compressive Sensing," IEEE Transactions on Signal Processing 57(9), 3488-3497 (2009).

[18] Chen, S. S., Donoho, D. L. and Saunders, M. A., "Atomic decomposition by basis pursuit," SIAM Journal on Scientific and Statistical Computing 20(1), 33-61 (1999).

[19] Tropp, J. A. and Gilbert, A. C., "Signal recovery from random measurements via orthogonal matching pursuit," IEEE Transactions on Information Theory 53(12), 4655-4666 (2007).

[20] Donoho, D. L. and Tanner, J., "Sparse nonnegative solution of underdetermined linear equations by linear programming," Proceedings of the National Academy of Sciences 102(27), 9446-9451 (2005).

[21] Tipping, M. E., "Sparse Bayesian learning and the relevance vector machine," Journal of Machine Learning Research 1, 211-244 (2001).

[22] Tipping, M. E., "Bayesian inference: An introduction to principles and practice in machine learning," Advanced Lectures on Machine Learning 3176, 41-62 (2004).

[23] Tipping, M. E. and Faul, A. C., "Fast marginal likelihood maximisation for sparse Bayesian models," Proceedings of the Ninth International Workshop on Artificial Intelligence and Statistics, Key West, FL (2003).

[24] Beck, J. L., "Bayesian system identification based on probability logic," Structural Control and Health Monitoring 17(7), 825-847 (2010).

[25] Faul, A. C. and Tipping, M. E., "Analysis of sparse Bayesian learning," Advances in Neural Information Processing Systems 14, 383-389 (2002).
