Cache Mapping

Uploaded by Akshay Meena

COMP375 Computer Architecture and Organization

Goals

Understand how the cache system finds a data item in the cache. Be able to break an address into the fields used by the different cache mapping schemes.

Cache Challenges

The challenge in cache design is to ensure that the desired data and instructions are in the cache. The cache should achieve a high hit ratio. The cache system must be quickly searchable, as it is checked on every memory reference.

Cache to RAM Ratio

A processor might have 512 KB of cache and 512 MB of RAM. There may be 1000 times more RAM than cache. The cache algorithms have to carefully select the 0.1% of the memory that is likely to be most accessed.


Cache Lines

The cache memory is divided into blocks or lines. Currently lines can range from 16 to 64 bytes. Data is copied to and from the cache one line at a time. The lower log2(line size) bits of an address specify a particular byte in a line.

Address layout: Line address | Offset

Tag Fields

A cache line contains two fields:
Data from RAM
The address of the block currently in the cache

The part of the cache line that stores the address of the block is called the tag field. Many different addresses can be in any given cache line. The tag field specifies the address currently in the cache line. Only the upper address bits are needed.

Line Example

These boxes represent RAM addresses:

0110010100 0110010101 0110010110 0110010111
0110011000 0110011001 0110011010 0110011011
0110011100 0110011101 0110011110 0110011111

With a line size of 4, the offset is log2(4) = 2 bits. The lower 2 bits specify which byte in the line.

Computer Science Search

If you ask COMP285 students how to search for a data item, they should be able to tell you:
Linear Search O(n)
Binary Search O(log2 n)
Hashing O(1)
Parallel Search O(n/p)


Mapping

The memory system has to quickly determine if a given address is in the cache. There are three popular methods of mapping addresses to cache locations.
Fully Associative: Search the entire cache for an address.
Direct: Each address has a specific place in the cache.
Set Associative: Each address can be in any of a small set of cache locations.

Direct Mapping

Each location in RAM has one specific place in cache where the data will be held. Consider the cache to be like an array. Part of the address is used as an index into the cache to identify where the data will be held. Since a data block from RAM can only be in one specific line in the cache, it must always replace the one block that was already there. There is no need for a replacement algorithm.

Direct Cache Addressing

The lower log2(line size) bits define which byte in the block.
The next log2(number of lines) bits define which line of the cache.
The remaining upper bits are the tag field.

Address layout: Tag | Line | Offset

Cache Constants

cache size / line size = number of lines
log2(line size) = bits for offset
log2(number of lines) = bits for cache index
remaining upper bits = tag address bits
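The constants above can be turned into a small calculation. The following is a minimal sketch, not part of the original slides; the function name `direct_mapped_fields` and the sample sizes are made up for illustration, and it assumes every size is a power of two.

```python
def direct_mapped_fields(address_bits, cache_bytes, line_bytes):
    """Return (tag_bits, index_bits, offset_bits) for a direct-mapped cache.

    Assumes cache_bytes and line_bytes are powers of two (hypothetical helper).
    """
    num_lines = cache_bytes // line_bytes          # cache size / line size
    offset_bits = line_bytes.bit_length() - 1      # log2(line size)
    index_bits = num_lines.bit_length() - 1        # log2(number of lines)
    tag_bits = address_bits - index_bits - offset_bits  # remaining upper bits
    return tag_bits, index_bits, offset_bits


# Illustrative numbers only: 16-bit addresses, 1 KB cache, 16-byte lines.
print(direct_mapped_fields(16, 1024, 16))  # (6, 6, 4)
```

Using `bit_length() - 1` instead of a floating-point logarithm keeps the result exact for power-of-two sizes.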


Example direct address

Assume you have:
32 bit addresses (can address 4 GB)
64 byte lines (offset is 6 bits)
32 KB of cache

Number of lines = 32 KB / 64 = 512
Bits to specify which line = log2(512) = 9

Address layout: 17 bits Tag | 9 bits Line | 6 bits Offset

Example Address

Using the previous direct mapping scheme with 17 bit tag, 9 bit index and 6 bit offset:

Address: 01111101011101110001101100111000
Tag: 01111101011101110
Index: 001101100
Offset: 111000

Compare the tag field of line 001101100 (108 decimal) with the value 01111101011101110. If it matches, return byte 111000 (56 decimal) of the line.
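The field split in this example can be reproduced with shifts and masks. This is an illustrative sketch; `split_direct` is a hypothetical helper name, not something from the slides.

```python
def split_direct(address, index_bits, offset_bits):
    """Split an address into (tag, index, offset) for a direct-mapped cache."""
    offset = address & ((1 << offset_bits) - 1)                 # lowest bits
    index = (address >> offset_bits) & ((1 << index_bits) - 1)  # middle bits
    tag = address >> (offset_bits + index_bits)                 # remaining upper bits
    return tag, index, offset


address = 0b01111101011101110001101100111000
tag, index, offset = split_direct(address, index_bits=9, offset_bits=6)
print(format(tag, "017b"), index, offset)  # 01111101011101110 108 56
```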

How many bits are in the tag, line and offset fields?

Direct Mapping
24 bit addresses
64K bytes of cache
16 byte cache lines

1. tag=4, line=16, offset=4
2. tag=4, line=14, offset=6
3. tag=8, line=12, offset=4
4. tag=6, line=12, offset=6

Associative Mapping

In associative cache mapping, the data from any location in RAM can be stored in any location in cache. When the processor wants an address, all tag fields in the cache are checked to determine if the data is already in the cache. Each tag line requires circuitry to compare the desired address with the tag field. All tag fields are checked in parallel.


Associative Cache Mapping

The lower log2(line size) bits define which byte in the block.
The remaining upper bits are the tag field.

For a 4 GB address space with 128 KB cache and 32 byte blocks:

Address layout: 27 bits Tag | 5 bits Offset

Example Address

Using the previous associative mapping scheme with 27 bit tag and 5 bit offset:

Address: 01111101011101110001101100111000
Tag: 011111010111011100011011001
Offset: 11000

Compare all tag fields with the value 011111010111011100011011001. If a match is found, return byte 11000 (24 decimal) of the line.
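A software sketch of this lookup follows. It is only a model: real hardware compares all tags in parallel, while the loop here checks them one at a time. The `(valid, tag, data)` tuple layout and the name `lookup` are assumptions made for illustration.

```python
OFFSET_BITS = 5  # 32-byte lines, as in the example above


def lookup(lines, address):
    """Return the addressed byte on a hit, or None on a miss.

    `lines` is a list of (valid, tag, data) tuples (hypothetical layout).
    """
    tag = address >> OFFSET_BITS
    offset = address & ((1 << OFFSET_BITS) - 1)
    for valid, line_tag, data in lines:  # hardware does these compares in parallel
        if valid and line_tag == tag:
            return data[offset]
    return None


address = 0b01111101011101110001101100111000
lines = [
    (False, 0, bytes(32)),                                   # empty line
    (True, 0b011111010111011100011011001, bytes(range(32))),  # holds our block
]
print(lookup(lines, address))              # 24: hit, byte 24 of the line
print(lookup(lines, address ^ (1 << 10)))  # None: different tag, miss
```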

How many bits are in the tag and offset fields?

Associative Mapping
24 bit addresses
128K bytes of cache
64 byte cache lines

1. tag=20, offset=4
2. tag=19, offset=5
3. tag=18, offset=6
4. tag=16, offset=8

Set Associative Mapping

Set associative mapping is a mixture of direct and associative mapping. The cache lines are grouped into sets. The number of lines in a set can vary from 2 to 16. A portion of the address is used to specify which set will hold an address. The data can be stored in any of the lines in the set.


Set Associative Mapping

When the processor wants an address, it indexes to the set and then searches the tag fields of all lines in the set for the desired address.

n = cache size / line size = number of lines
b = log2(line size) = bits for offset
w = number of lines per set
s = n / w = number of sets
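The quantities n, b, w and s above determine the address fields. The sketch below is illustrative only: the function name and sample sizes are invented, and power-of-two sizes are assumed. Setting w = 1 gives the direct-mapped case, and w = n gives the fully associative case with no set bits.

```python
def set_associative_fields(address_bits, cache_bytes, line_bytes, ways):
    """Return (tag_bits, set_bits, offset_bits); sizes assumed powers of two."""
    n = cache_bytes // line_bytes    # number of lines
    b = line_bytes.bit_length() - 1  # bits for offset, log2(line size)
    s = n // ways                    # number of sets, n / w
    set_bits = s.bit_length() - 1    # log2(number of sets)
    tag_bits = address_bits - set_bits - b
    return tag_bits, set_bits, b


# Illustrative numbers only: 32-bit addresses, 16 KB cache, 32-byte lines.
print(set_associative_fields(32, 16 * 1024, 32, ways=2))    # (19, 8, 5)
print(set_associative_fields(32, 16 * 1024, 32, ways=1))    # (18, 9, 5) direct
print(set_associative_fields(32, 16 * 1024, 32, ways=512))  # (27, 0, 5) fully associative
```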

Example Set Associative

Assume you have:
32 bit addresses
32 KB of cache
64 byte lines
4 way set associative

Number of lines = 32 KB / 64 = 512
Number of sets = 512 / 4 = 128
Set bits = log2(128) = 7

Address layout: 19 bits Tag | 7 bits Set | 6 bits Offset

Example Address

Using the previous set-associative mapping with 19 bit tag, 7 bit index and 6 bit offset:

Address: 01111101011101110001101100111000
Tag: 0111110101110111000
Index: 1101100
Offset: 111000

Compare the tag fields of lines 110110000 to 110110011 with the value 0111110101110111000. If a match is found, return byte 111000 (56 decimal) of that line.
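The same split, together with the range of lines that belong to the selected set, can be sketched as follows. The helper names are hypothetical, and the line numbering (set index followed by a 2-bit way number) follows the example above.

```python
WAYS = 4         # 4 way set associative
SET_BITS = 7     # 128 sets
OFFSET_BITS = 6  # 64-byte lines


def split_set_associative(address):
    """Split an address into (tag, set_index, offset)."""
    offset = address & ((1 << OFFSET_BITS) - 1)
    set_index = (address >> OFFSET_BITS) & ((1 << SET_BITS) - 1)
    tag = address >> (OFFSET_BITS + SET_BITS)
    return tag, set_index, offset


def lines_in_set(set_index):
    """Line numbers whose tags must be compared for this set."""
    first = set_index * WAYS
    return range(first, first + WAYS)


address = 0b01111101011101110001101100111000
tag, set_index, offset = split_set_associative(address)
candidates = lines_in_set(set_index)
print(format(tag, "019b"), set_index, offset)  # 0111110101110111000 108 56
print([format(i, "09b") for i in candidates])
# ['110110000', '110110001', '110110010', '110110011']
```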

How many bits are in the tag, set and offset fields?

2-way Set Associative
24 bit addresses
128K bytes of cache
16 byte cache lines

1. tag=8, set=12, offset=4
2. tag=16, set=4, offset=4
3. tag=12, set=8, offset=4
4. tag=10, set=10, offset=4


Replacement policy

When a cache miss occurs, data is copied into some location in cache. With Set Associative or Fully Associative mapping, the system must decide where to put the data and what values will be replaced. Cache performance is greatly affected by properly choosing data that is unlikely to be referenced again.

Replacement Options

First In First Out (FIFO)
Least Recently Used (LRU)
Pseudo LRU
Random
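To see how two of these options can differ, here is a small simulation of a single set under FIFO and LRU. It is a sketch with an invented reference string; block names such as "A" stand in for tags, and the function names are hypothetical.

```python
from collections import OrderedDict, deque


def count_hits_fifo(refs, ways):
    """Count hits in one set that evicts the block loaded earliest."""
    resident = deque()  # oldest block at the left
    hits = 0
    for block in refs:
        if block in resident:
            hits += 1   # a hit does not change the FIFO order
        else:
            if len(resident) == ways:
                resident.popleft()
            resident.append(block)
    return hits


def count_hits_lru(refs, ways):
    """Count hits in one set that evicts the least recently used block."""
    resident = OrderedDict()  # least recently used block first
    hits = 0
    for block in refs:
        if block in resident:
            hits += 1
            resident.move_to_end(block)  # mark as most recently used
        else:
            if len(resident) == ways:
                resident.popitem(last=False)
            resident[block] = True
    return hits


refs = ["A", "B", "A", "C", "A", "B"]
print(count_hits_fifo(refs, ways=2))  # 1
print(count_hits_lru(refs, ways=2))   # 2
```

On this particular string LRU keeps the frequently reused block A resident, while FIFO evicts it because it was loaded first. Other reference strings can favor FIFO.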

LRU replacement

LRU is easy to implement for 2 way set associative. You only need one bit per set to indicate which line in the set was most recently used. LRU is difficult to implement for larger ways. For an N way mapping, there are N! different permutations of use orders. It would require log2(N!) bits, rounded up, per set to keep track.
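The growth of this bit count can be checked with a short calculation. This is an illustrative sketch; `exact_lru_bits` is a made-up name, and the result is rounded up to a whole number of bits.

```python
from math import ceil, factorial, log2


def exact_lru_bits(ways):
    """Bits per set needed to encode every possible use order of `ways` lines."""
    return ceil(log2(factorial(ways)))


for ways in (2, 4, 8):
    print(ways, exact_lru_bits(ways))
# 2 1
# 4 5
# 8 16
```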

Pseudo LRU

Pseudo LRU is frequently used in set associative mapping. In pseudo LRU there is a bit for each half of a group indicating which half was most recently used. For 4 way set associative, one bit indicates whether the upper two or lower two lines were most recently used. For each half another bit specifies which of the two lines was most recently used. 3 bits total.
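One way to model the three bits for a 4 way set is sketched below. The class and attribute names are invented for illustration, and the all-zero starting state is an arbitrary assumption.

```python
class PseudoLRU4:
    """Three pseudo-LRU bits for one 4 way set (illustrative model)."""

    def __init__(self):
        self.top = 0   # most recently used half: 0 = lines 0-1, 1 = lines 2-3
        self.low = 0   # most recently used line within lines 0-1 (0 or 1)
        self.high = 0  # most recently used line within lines 2-3 (0 = line 2, 1 = line 3)

    def touch(self, way):
        """Record an access to line `way` (0 to 3)."""
        half = way // 2
        self.top = half
        if half == 0:
            self.low = way % 2
        else:
            self.high = way % 2

    def victim(self):
        """Pick the line to replace: the older line of the older half."""
        half = 1 - self.top
        mru_in_half = self.low if half == 0 else self.high
        return half * 2 + (1 - mru_in_half)


plru = PseudoLRU4()
for way in (0, 1, 2, 3):
    plru.touch(way)
first_victim = plru.victim()   # 0, same as true LRU here
plru.touch(0)
second_victim = plru.victim()  # 2, although true LRU would pick line 1
print(first_victim, second_victim)  # 0 2
```

The second result shows why the scheme is only an approximation: after the accesses 0, 1, 2, 3, 0 the least recently used line is 1, but the three bits point at line 2.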


Comparison of Mapping Fully Associative

Associative mapping works the best, but is complex to implement. Each tag line requires circuitry to compare the desired address with the tag field. Some special purpose caches, such as the virtual memory Translation Lookaside Buffer (TLB), are associative caches.

Comparison of Mapping Direct

Direct has the lowest performance, but is easiest to implement. Direct is often used for instruction cache. Sequential addresses fill a cache line and then go to the next cache line. Intel Pentium level 1 instruction cache uses direct mapping.

Comparison of Mapping Set Associative

Set associative is a compromise between the other two. The more ways per set, the better the performance, but the more complex and expensive the cache. Intel Pentium uses 4 way set associative caching for level 1 data and 8 way set associative for level 2.

COMP375

You might also like

- FIRE Study MaterialDocument25 pagesFIRE Study MaterialAkshay MeenaNo ratings yet

- IC-57 Fire and ConsequentialDocument312 pagesIC-57 Fire and ConsequentialAshish Keshan84% (38)

- IC 45 Underwriting III EbookDocument241 pagesIC 45 Underwriting III EbookAkshay Meena95% (20)

- m4 1fDocument17 pagesm4 1fMayurRawoolNo ratings yet

- Syllogism Possibility Detailed AnalysisDocument2 pagesSyllogism Possibility Detailed AnalysisNitish PandeyNo ratings yet

- Sample Project ReportDocument30 pagesSample Project ReportPreeti VermaNo ratings yet

- RRB Previous Year ExamDocument12 pagesRRB Previous Year ExamAkshay MeenaNo ratings yet

- 12 Maintenance12121Document25 pages12 Maintenance12121Akshay MeenaNo ratings yet

- Project Report of HyundaiDocument69 pagesProject Report of HyundaiPuneet Gupta73% (26)

- RDBMS Concepts: DatabaseDocument83 pagesRDBMS Concepts: DatabaseAkshay MeenaNo ratings yet

- Web Enabled Booking System For SEM LaboratoryDocument16 pagesWeb Enabled Booking System For SEM LaboratoryAkshay MeenaNo ratings yet

- Mobile Number PortabilityDocument19 pagesMobile Number PortabilityAkshay MeenaNo ratings yet

- Ohm2nd 140129124544 Phpapp01Document36 pagesOhm2nd 140129124544 Phpapp01Akshay MeenaNo ratings yet

- AbstractDocument1 pageAbstractAkshay MeenaNo ratings yet

- LinksDocument1 pageLinksAkshay MeenaNo ratings yet

- Mobile Number PortabilityDocument19 pagesMobile Number PortabilityAkshay MeenaNo ratings yet

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (120)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- SuperRACK InstallationGuideDocument16 pagesSuperRACK InstallationGuideCricri CriNo ratings yet

- Tm244acc6 LCDDocument4 pagesTm244acc6 LCDهيثم الملاحNo ratings yet

- Activity 1 - 2021 HpcaDocument4 pagesActivity 1 - 2021 HpcaPRATIK DHANUKANo ratings yet

- Intel MicroprocessorDocument35 pagesIntel MicroprocessorLissa JusohNo ratings yet

- Datasheet 25c040Document22 pagesDatasheet 25c040Perona PabloNo ratings yet

- Altera Training Course Altera Training CourseDocument222 pagesAltera Training Course Altera Training CoursetptuyenNo ratings yet

- Magnetic Core MemoryDocument7 pagesMagnetic Core MemoryRohan Nagpal0% (1)

- E7A62v1 2 PDFDocument109 pagesE7A62v1 2 PDFAlan SublimeNo ratings yet

- ACS880 DC/DC Converter Control Program: Firmware ManualDocument210 pagesACS880 DC/DC Converter Control Program: Firmware ManualKechaouNo ratings yet

- CA 2mark and 16 Mark With AnswerDocument109 pagesCA 2mark and 16 Mark With AnswerJagadeesh Mohan100% (1)

- Macro Placement VLSI Basics and Interview QuestionsDocument3 pagesMacro Placement VLSI Basics and Interview Questionsprasanna810243100% (1)

- Board SchematicDocument1 pageBoard SchematicJOSE LENIN RIVERA VILLALOBOS0% (1)

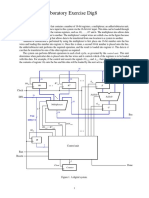

- Laboratory Exercise D: R0, - . - , R7 and A. The Multiplexer Also Allows DataDocument6 pagesLaboratory Exercise D: R0, - . - , R7 and A. The Multiplexer Also Allows DataMunya RushambwaNo ratings yet

- EE103 Exp9 TOSHIT - 2101CB59Document5 pagesEE103 Exp9 TOSHIT - 2101CB59Rahul PalNo ratings yet

- 7 Series Fpgas Data Sheet: Overview: General DescriptionDocument18 pages7 Series Fpgas Data Sheet: Overview: General DescriptionPraveen MeduriNo ratings yet

- CPU Technology Explained With ExamplesDocument3 pagesCPU Technology Explained With Exampleswinbenitez123No ratings yet

- AT83C26 Software Driver User GuideDocument20 pagesAT83C26 Software Driver User GuideSam B.medNo ratings yet

- IFL90 Ch5 Dis Assembly Guide IntelDocument45 pagesIFL90 Ch5 Dis Assembly Guide IntelExpa KoroNo ratings yet

- Low Power MuxDocument4 pagesLow Power MuxPromit MandalNo ratings yet

- SHARC Hardware AcceleratorsDocument2 pagesSHARC Hardware Acceleratorsjcxz6053No ratings yet

- LabTool 48UXP User'sManual (En)Document72 pagesLabTool 48UXP User'sManual (En)Osama YaseenNo ratings yet

- 4 Bit Fast Adder Design Topology and Layout With Self Resetting Logic For Low Power VLSI Circuits 197 205Document9 pages4 Bit Fast Adder Design Topology and Layout With Self Resetting Logic For Low Power VLSI Circuits 197 205MohamedNo ratings yet

- Logic Synthesis and Optimization Benchmarks User Guide: Saeyang YangDocument45 pagesLogic Synthesis and Optimization Benchmarks User Guide: Saeyang YangReinaldo RibeiroNo ratings yet

- PD InterviewDocument5 pagesPD Interviewjayanthi_kondaNo ratings yet

- 502, Sri Sairam Polytechnic College Board Practical Examinations:: OCT - 2022Document2 pages502, Sri Sairam Polytechnic College Board Practical Examinations:: OCT - 2022harivigneshaNo ratings yet

- Trenton CBI 5721jDocument148 pagesTrenton CBI 5721jCristian FernandezNo ratings yet

- Device Tree Made EasyDocument22 pagesDevice Tree Made Easym3y54mNo ratings yet

- Interfacing Between ECL / LVECL / PECL / Lvpecl - To - TTL / LVTTL / Cmos / LvcmosDocument6 pagesInterfacing Between ECL / LVECL / PECL / Lvpecl - To - TTL / LVTTL / Cmos / LvcmosSirsendu DuttaNo ratings yet

- CSO Lecture Notes 1-5 For SVVV StudentsDocument97 pagesCSO Lecture Notes 1-5 For SVVV Studentss. ShuklaNo ratings yet

- Unit 1: MPI (CST-282, ITT-282) SUBMISSION DATE: 14.02.2020Document9 pagesUnit 1: MPI (CST-282, ITT-282) SUBMISSION DATE: 14.02.2020Shruti SinghNo ratings yet