7. Theory of Probability

7.1 Introduction to Probability

One commonality found in the problems we've examined thus far is that each problem involves a large number of possibly true situations, and we are interested in narrowing down the set to one definitely true situation. In the weighing problem, for example, at the beginning any one of the objects might be the bad one (heavy or light), and we seek to gather enough information to determine that one is definitely bad and the others are definitely not.

Probability theory provides the groundwork for modeling such problems and tells us what to expect in various situations. Interestingly enough, it was originally developed by Blaise Pascal and Pierre de Fermat to study gambling schemes.

We can define a set of all the possible outcomes in a problem, called a sample space, in which each outcome has a probability equal to the proportion of cases in which it is expected to be true. In the weighing problem, any of the objects could be the bad one, and there is an outcome in the sample space for each such scenario. The probability of each is equal to 1 divided by the number of objects, assuming they all have equal likelihood of being bad.

We can determine the expected outcome of some situation by representing the outcome as a function of the sample space points and averaging that function over the entire sample space, weighting each point by its probability. This is often much simpler than it sounds, because the function frequently just outputs the point itself, as long as the point can be represented by a number and can be averaged. For example, we could determine someone's expected bowling score by computing $\sum_{i=0}^{300} i \, p(i)$, where p(i) is the probability that he will score i. We are simply averaging f(i) = i over the sample space. More on this in the next section.

Commonly we can represent the potential situations by strings of binary digits (bits). In the remainder of these notes we assume that the sample space consists of points that are represented as such.
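The idea of averaging a function over a sample space can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names `uniform_bit_strings` and `expected_value` are invented here, the sample space is taken to be all 3-bit strings with equal probability, and the function being averaged is the number of 1s in the string.

```python
from fractions import Fraction
from itertools import product


def uniform_bit_strings(n):
    """Return {bit string: probability} for all 2**n strings, equally likely."""
    strings = ["".join(bits) for bits in product("01", repeat=n)]
    return {s: Fraction(1, len(strings)) for s in strings}


def expected_value(space, f):
    """Average f over the sample space, weighting each point by its probability."""
    return sum(p * f(q) for q, p in space.items())


# Assumed example: 3-bit strings, all equally likely.
space = uniform_bit_strings(3)
total_probability = sum(space.values())
mean_ones = expected_value(space, lambda s: s.count("1"))
print(total_probability, mean_ones)  # prints: 1 3/2
```

Exact fractions are used instead of floating point so that the probabilities sum to exactly 1.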
7.2 Definitions and Concepts

Event

An event is any subset of the sample space. For example, having all 1s in the string is an event, as is having the last bit be 0. Any other property of the strings in the sample space also corresponds to an event. The subset for event E consists of the points in the sample space having the property that defines E.

Random Variable

A random variable is a function defined on the points of the sample space. For example, the function could be the number of 1s in the string, the position of the first 1, how much one could win in a betting scheme if the given bit string were true, or any other (usually numeric) function on the strings.

An indicator random variable for an event E is a special kind of random variable that is either 1 or 0: it is 1 if the string is in the event subset and 0 otherwise. We shall call the indicator variable I(E). Given any random variable f, let E(f=x) be the event that f(p) = x, where p is a bit string in the sample space. Thus, I(E(f=x)) is 1 iff f(p) = x. Therefore, we can write:

$$f = \sum_{\text{all } x} x \, I(E(f = x))$$
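As a rough check of this decomposition, the sketch below rebuilds a random variable from its indicator variables on a small sample space. The choice of f (the number of 1s in a 3-bit string) and the names `indicator`, `event_for_value`, and `f_from_indicators` are assumptions made only for this illustration.

```python
from itertools import product

# Assumed sample space: all 3-bit strings.
points = ["".join(bits) for bits in product("01", repeat=3)]


def f(q):
    """Example random variable: the number of 1s in the string."""
    return q.count("1")


def indicator(event):
    """Indicator variable I(E): 1 on points inside the event, 0 elsewhere."""
    return lambda q: 1 if q in event else 0


# E(f = x) for every value x that f can take.
values = sorted({f(q) for q in points})
event_for_value = {x: {q for q in points if f(q) == x} for x in values}


def f_from_indicators(q):
    """Sum over all x of x * I(E(f = x)), evaluated at the point q."""
    return sum(x * indicator(event_for_value[x])(q) for x in values)


decomposition_holds = all(f_from_indicators(q) == f(q) for q in points)
```

For any point q, exactly one indicator in the sum is 1, so the sum picks out the single value x = f(q).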

Probability

Every point q in the sample space is true a certain proportion of the time, p(q), that we call its probability. Clearly, these probabilities cannot be negative. Also, the probabilities must sum to 1 over the entire sample space. This is true since the sample space includes all possibilities, and thus the proportion of the time that one of the outcomes in the sample space occurs is 1.

Uniform Sample Space

This is a sample space in which all the outcomes have the same probability. Since they must sum to 1, the probability must be the reciprocal of the number of points in the sample space. In a uniform sample space of N outcomes, the probability of each outcome is 1/N.

Event Probability

The probability of event E, p(E), is the sum of p(q) over all the strings q in the subset corresponding to E.

Expected Value

The expected value of a random variable f, denoted as $\bar{f}$ or $\langle f \rangle$, is the sum over all points q in the sample space of p(q) f(q):

$$\langle f \rangle = \sum_{q} p(q) \, f(q)$$

This is the same as the mean or average of the variable. Notice that the expectation of an indicator variable for an event is the probability of that event.

Expectation is a linear property of random variables. That is, for random variables f and g, with constants a and b:

Linearity of Expectation: $\langle af + bg \rangle = a \langle f \rangle + b \langle g \rangle$

Deviation

The deviation of f from its mean value is $f - \langle f \rangle$.

Variance

The variance of a random variable f is its mean square deviation, $\langle (f - \langle f \rangle)^2 \rangle$. This simplifies to $\langle f^2 \rangle - \langle f \rangle^2$ (proof deferred to exercises), that is, the difference between the expectation of its square and the square of its expectation. Variance is denoted as $\sigma^2(f)$. Thus, $\sigma(f)$ is the root mean square deviation of f. The variance is quadratic in f, thus $\sigma^2(af) = a^2 \sigma^2(f)$ (prove in exercises).
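The two expressions for the variance, and its quadratic scaling, can be checked numerically on a small example. The sketch below is not a proof (that is left to the exercises); it assumes a uniform sample space of 3-bit strings, takes f to be the number of 1s, and uses helper names (`expectation`, `variance`, `ones`) invented for this illustration.

```python
from fractions import Fraction
from itertools import product

# Assumed uniform sample space: the 8 bit strings of length 3.
space = {"".join(bits): Fraction(1, 8) for bits in product("01", repeat=3)}


def expectation(f):
    """<f>: the sum of p(q) * f(q) over all points q."""
    return sum(p * f(q) for q, p in space.items())


def variance(f):
    """Mean square deviation <(f - <f>)^2>."""
    mean = expectation(f)
    return expectation(lambda q: (f(q) - mean) ** 2)


def ones(q):
    """Example random variable: the number of 1s in the string."""
    return q.count("1")


var_by_definition = variance(ones)
var_by_shortcut = expectation(lambda q: ones(q) ** 2) - expectation(ones) ** 2
var_scaled = variance(lambda q: 5 * ones(q))  # should equal 5**2 times the variance
```

Here both expressions give 3/4, and scaling f by 5 multiplies the variance by 25.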

Conditional Probability

Recall that an event E corresponds to a subset of the sample space. The conditional probability of q given E, written p(q|E), is the probability that q is true given the reduced sample space of event E. That is, given that we are restricted to E's subset of points, the probability that q occurs is the conditional probability.

We can write the probability of q as the sum of $p(s) I_q(s)$ over all points s in the sample space, divided by the sum of all p(s) in the sample space, where $I_q$ is the indicator variable for q occurring. In the full sample space, the sum of all p(s) is 1 and the sum of all $p(s) I_q(s)$ is p(q). In our reduced sample space, the sum of all p(s) is simply p(E), since the sample space is reduced to the subset for event E. Also, the indicator variable for q given E is the product of the indicator variables for q and E, since the points we are interested in are those for which both q and E are satisfied. When both are satisfied, $I_q I_E$ is 1; otherwise it equals 0. This is the appropriate behavior for our indicator variable.

Thus p(q|E) is the sum of $p(s) I_q(s) I_E(s)$ divided by the sum of $I_E(s) p(s)$, both taken over all s. This is the same as P(q and E), often written $P(q \cap E)$, divided by p(E). To summarize:

$$p(q \mid E) = \frac{p(E \cap q)}{p(E)}$$
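A small enumeration may make the reduced sample space concrete. The sketch below assumes a uniform sample space of 3-bit strings and takes E to be the event that the first bit is 1; the function names `probability` and `conditional` are hypothetical.

```python
from fractions import Fraction
from itertools import product

# Assumed uniform sample space: the 8 bit strings of length 3.
space = {"".join(bits): Fraction(1, 8) for bits in product("01", repeat=3)}


def probability(event):
    """p(E): the sum of p(s) over the points s in the event's subset."""
    return sum(p for s, p in space.items() if s in event)


def conditional(q, event):
    """p(q | E) = p(q and E) / p(E) for a single point q."""
    p_both = space[q] if q in event else Fraction(0)
    return p_both / probability(event)


# Assumed event E: the string starts with 1.
E = {s for s in space if s.startswith("1")}

p_inside = conditional("101", E)   # q lies in E: (1/8) / (1/2)
p_outside = conditional("001", E)  # q lies outside E
total_given_E = sum(conditional(q, E) for q in space)
```

Inside E each point's probability is rescaled by 1/p(E), so the conditional probabilities again sum to 1; points outside E get probability 0.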

Of course, by the same formula, we know that $p(E \mid q) = p(q \cap E) / p(q)$. Since $p(q \cap E)$ is the same as $p(E \cap q)$, we can solve for this quantity and substitute it into the equation above to get p(q|E) in terms of p(E|q). Specifically, $p(q \mid E) = p(E \mid q) \, p(q) / p(E)$. This tells us that p(q|E) differs from p(q) by a factor of p(E|q)/p(E).

Mutually Exclusive Events and P(A or B)

Two events are mutually exclusive if they never occur at the same time. A simple example is the event that the bit string has more 1s than 0s and the event that the bit string has more 0s than 1s. The probability that either of two mutually exclusive events occurs is simply the sum of the probabilities of the two events.

For two events that can occur at the same time, notice that adding the probabilities of the two events double counts certain situations. Suppose our sample space contains all 2-bit strings (00, 01, 10, 11). If one event is that the string starts with 1, and the other is that the string has at least one 1 in it, the bit strings 10 and 11 are counted in both events. Thus, to get the probability that either of the two events occurs, we sum the probabilities and then subtract out the probability that both occur, so that nothing is counted twice.

For all events A, B: $P(A \text{ or } B) = P(A) + P(B) - P(A \cap B)$

Similarly for three events, to find the probability that one or more occurs, we sum up the individual probabilities and subtract out the probability that any combination of two occurs. That is, we subtract out $P(A \cap B)$, $P(A \cap C)$ and $P(B \cap C)$. However, any scenario that satisfies all three events has been added in three times (adding the individual probabilities) and subtracted out three times, leaving nothing, so we must add it in again. Thus, we add $P(A \cap B \cap C)$.

For events $A_1, A_2, A_3$: $P(A_1 \text{ or } A_2 \text{ or } A_3) = \sum_{j} P(A_j) - \sum_{j < k} P(A_j \cap A_k) + P(A_1 \cap A_2 \cap A_3)$

You will have to find a similar statement for four events in the exercises.

Independence

Two events A and B are said to be independent if the probability of A given B is the same as the probability of A alone. That is, two events are independent if knowing that one occurs does not affect the probability of the other occurring. Knowing the formula for A given B, and equating it to the probability of A, it is easy to see that independence implies that the probability of A and B is equal to the product of their probabilities.

For independent events A and B: $P(A \cap B) = P(A) P(B)$

Random variables are said to be independent if knowing the value of one does not affect the probabilities of the possible values of the other. For two independent variables f and g, we know p(f=a) = p(f=a | g=b) = p(f=a & g=b) / p(g=b). Thus, p(f=a & g=b) = p(f=a) p(g=b).
Multiplying both sides of this by ab and summing over all possible a and b, we obtain a relation for the expectation of the product of f and g.

For independent random variables: $\langle fg \rangle = \langle f \rangle \langle g \rangle$
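The addition rule and the product rules above can be checked by direct enumeration on the 2-bit sample space from the text. In the sketch below, the event C (second bit is 1) and the variables `first` and `second` (the values of the two bits) are assumptions added for illustration, as are the helper names.

```python
from fractions import Fraction
from itertools import product

# Uniform sample space of all 2-bit strings: 00, 01, 10, 11.
space = {"".join(bits): Fraction(1, 4) for bits in product("01", repeat=2)}


def probability(event):
    """p(E): the sum of p(s) over the points s in the event's subset."""
    return sum(p for s, p in space.items() if s in event)


def expectation(f):
    """<f>: the sum of p(s) * f(s) over all points s."""
    return sum(p * f(s) for s, p in space.items())


# Events from the text: A = starts with 1, B = has at least one 1.
A = {s for s in space if s[0] == "1"}
B = {s for s in space if "1" in s}
p_union_direct = probability(A | B)
p_union_rule = probability(A) + probability(B) - probability(A & B)

# A and B overlap heavily, so they are not independent.
a_b_independent = probability(A & B) == probability(A) * probability(B)

# Assumed extra event C = second bit is 1, which is independent of A.
C = {s for s in space if s[1] == "1"}
a_c_independent = probability(A & C) == probability(A) * probability(C)

# Independent random variables: the values of the first and second bits.
first = lambda s: int(s[0])
second = lambda s: int(s[1])
mean_of_product = expectation(lambda s: first(s) * second(s))
product_of_means = expectation(first) * expectation(second)
```

Python's set operators `|` and `&` play the roles of "or" and "and" for events.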

It follows that the indicator variable relation I(f=a, g=b) = I(f=a) I(g=b) holds as well. By linearity, the expectation of the sum of two random variables is the sum of their expectations.

Covariance

Now let's examine the variance of the sum of two random variables. We start knowing that the variance in this case is $\langle (f+g)^2 \rangle - \langle f+g \rangle^2$.

$$\begin{aligned}
\mathrm{Var}(f+g) &= \langle (f+g)^2 \rangle - \langle f+g \rangle^2 \\
&= \langle f^2 + 2fg + g^2 \rangle - \langle f \rangle^2 - 2 \langle f \rangle \langle g \rangle - \langle g \rangle^2 \\
&= \langle f^2 \rangle - \langle f \rangle^2 + \langle g^2 \rangle - \langle g \rangle^2 + 2(\langle fg \rangle - \langle f \rangle \langle g \rangle) \\
&= \mathrm{Var}(f) + \mathrm{Var}(g) + 2(\langle fg \rangle - \langle f \rangle \langle g \rangle)
\end{aligned}$$

We can define the quantity $\langle fg \rangle - \langle f \rangle \langle g \rangle$ as the covariance of the two random variables. Thus:

$$\mathrm{Var}(f+g) = \mathrm{Var}(f) + \mathrm{Var}(g) + 2\,\mathrm{Cov}(f,g)$$

As we determined above, $\langle fg \rangle = \langle f \rangle \langle g \rangle$ for independent variables, and thus their covariance is 0.

For independent random variables: $\mathrm{Var}(f+g) = \mathrm{Var}(f) + \mathrm{Var}(g)$

This innocent looking statement is actually very curious and important. We have here a linear type of property for a quadratic function of f and g. It is the basis of the results we will need, which concern sums and averages of many independent random variables.

7.3 Comments and Clarifications

At first, it may seem as though we have made a lot of definitions but gotten essentially nowhere. However, now that we have these concepts fleshed out, we can examine some important results.

One thing to note before moving on is that the field of probability contains a lot of loaded words that do not necessarily mean what they sound like. The expectation of a random variable, for example, does not necessarily mean that we expect a certain value for that variable. Rather, the expectation of a variable is its average over the sample space, weighted according to probability. In the case of indicator variables, the expectation is equal to the probability of the event (between 0 and 1), even though the variable can only be 0 or 1. Certainly we do not actually expect the value of an indicator variable to be an intermediate number between 0 and 1.
However, if the variance is very small, we actually do expect that the value of a random variable will be near its expectation. Thus, to draw accurate conclusions from probability theory, we need to be able to say how close f is likely to be to its expectation, based on its variance.

Let's take a look at situations that can be modeled as long sequences of independent random variables. Specifically, we will look at variables that can only take on 0 or 1 as their value. We call these Boolean random variables. Several Boolean variables with the same probability of being 1 are said to be identically distributed. This is because, if we repeatedly assign and reassign values to two such variables based on their probability of being 1, the distribution of 1s and 0s for the two variables should be the same.

If we are concerned with a sequence of length N of independent and identically distributed Boolean random variables, then we can make very strong statements about their sum and their average. We can determine the probability that their sum or average takes on a given value.

As we relax the conditions here, so that the variables do not all have to be identical, can take on other values, and can have limited dependence on one another, we can say somewhat less, but for a wide variety of circumstances there is a similar conclusion, called the central limit theorem. It has many variations based on varying assumptions about the situation modeled, usually with roughly the same conclusion. We will not go into this much here.

There is a weaker statement called the law of large numbers, which is sufficient for our purposes here. It states that if you draw a value many times from a set of independent, identically distributed random variables, the proportion of the time that you get any particular value will be close to the probability that your random variable takes on that value, and closer as your number of picks increases. There are actually several laws of large numbers on this topic.
This justifies the interpretation of the probability of an event as the proportion of the time the event would occur if you kept putting yourself in the situation modeled by your sample space over and over again, independently.

The rest of the notes consists of a description of our last tool and how variance is involved, and then some of the consequences that can be deduced from all this about sequences of random variables.

7.4 Chebyshev's Inequality

As mentioned, the variance of a random variable f is defined as the mean square of f's deviation from its mean. We can use this concept of variance to put an upper limit on the probability that the variable takes on values whose deviations from the mean are greater than $x\sigma$, where $\sigma^2$ is the variance and x is any value greater than 1. One such bound is Chebyshev's inequality, which answers the question: what is the maximum proportion of a variable's sample space that can correspond to a deviation greater than $x\sigma$?

Let's call that proportion of the sample space z. That is, the product of z and the number of elements in the sample space is the number of points that deviate from the mean by at least $x\sigma$. The minimum variance in this scenario would result when all of the points with deviation greater than $x\sigma$ had a deviation that was only infinitesimally greater, and all the points with deviation less than $x\sigma$ had a deviation of 0. The square deviation of the points with deviation greater than $x\sigma$ would be $(x\sigma)^2$, while the square deviation of the other points would be 0. Since a proportion z of the points have the nonzero deviation, the mean square deviation (variance) in this extreme case is $z(x\sigma)^2$. This is at most $\sigma^2$, since the smallest possible variance cannot exceed the actual variance of the variable. Thus, $z \le 1/x^2$. Recall that z is the proportion of the sample space with deviation greater than $x\sigma$. Thus:

Chebyshev's Inequality: $P(|F - \langle f \rangle| \ge k) \le \sigma^2 / k^2$, where $k = x\sigma$ and F is a value taken on by f.

This implies that when $\sigma$ is very small, the portion of the sample space over which the value of the random variable is close to the mean is very large. This is a law of large numbers, as $\sigma$ goes to 0.

7.5 Conclusions

Suppose that we have N independent Boolean variables (having values 0 or 1), each having a probability p of being 1. When k of the variables have value 1, the sum will be k. In this case, N-k have 0 as their value. For each of the many ways of choosing k of the N variables to be 1 and N-k to be 0, the probability of the specific arrangement is $p^k (1-p)^{N-k}$, since the variables are independent. Using basic combinatorics, there are C(N,k) mutually exclusive ways of this occurring. Thus the probability that the sum is k is:

$$P(\text{sum} = k) = C(N,k) \, p^k (1-p)^{N-k}$$

The distribution of the sum of the variables (or, after dividing by N, of their average), when each is assigned a 1 or 0 based on a fixed p, is called a binomial distribution.
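The counting argument behind the binomial formula can be sketched and cross-checked by brute force. In the sketch below, the values N = 5 and p = 1/3 are arbitrary choices for illustration, and the function names `binomial_pmf` and `pmf_by_enumeration` are invented here.

```python
from fractions import Fraction
from itertools import product
from math import comb


def binomial_pmf(n, k, p):
    """C(n, k) * p^k * (1 - p)^(n - k): probability that the sum equals k."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def pmf_by_enumeration(n, k, p):
    """Same probability, found by adding up every arrangement with k ones."""
    total = Fraction(0)
    for bits in product((0, 1), repeat=n):
        if sum(bits) == k:
            # Each specific arrangement has probability p^k (1-p)^(n-k).
            total += p**k * (1 - p) ** (n - k)
    return total


# Assumed example values.
n = 5
p = Fraction(1, 3)
pmf = [binomial_pmf(n, k, p) for k in range(n + 1)]
enumerated = [pmf_by_enumeration(n, k, p) for k in range(n + 1)]
```

With exact fractions the probabilities over k = 0, ..., N sum to exactly 1, as they must for a complete set of mutually exclusive outcomes.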
For large N, the binomial distribution forms a bell curve shape, with each different sum along the x-axis and its corresponding probability (calculated above) on the y-axis. We know that the mean of the distribution, or the expected sum, is the sum of the expected values of the individual variables. This is simply pN, since there are N variables, each with expected value p. The mean of their average is then p itself. The variance of each variable is p(1-p) (prove in exercises), and thus the variance of the sum is Np(1-p). The variance of their average is p(1-p)/N, since variance is a quadratic property and thus we must divide by $N^2$ when averaging. By the Chebyshev inequality, we now know that the average of the N variables approaches p as N grows, as long as p does not itself approach 0. This is the Weak Law of Large Numbers.
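The mean, the variance, and the Chebyshev bound for the average can be computed exactly for a few values of N. The sketch below is an illustration only: p = 1/3, the tolerance eps = 1/10, and the values of N are assumptions, and `average_summary` is a hypothetical helper name.

```python
from fractions import Fraction
from math import comb


def binomial_pmf(n, k, p):
    """Probability that n independent Boolean variables sum to k."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def average_summary(n, p, eps):
    """Return (mean of sum, variance of sum, P(|avg - p| >= eps), Chebyshev bound)."""
    pmf = [binomial_pmf(n, k, p) for k in range(n + 1)]
    mean_sum = sum(k * pk for k, pk in enumerate(pmf))
    var_sum = sum((k - mean_sum) ** 2 * pk for k, pk in enumerate(pmf))
    var_avg = var_sum / n**2  # variance is quadratic, so divide by n^2
    tail = sum(pk for k, pk in enumerate(pmf) if abs(Fraction(k, n) - p) >= eps)
    bound = var_avg / eps**2  # Chebyshev: sigma^2 / k^2 with k = eps
    return mean_sum, var_sum, tail, bound


# Assumed example values.
p = Fraction(1, 3)
eps = Fraction(1, 10)
results = {n: average_summary(n, p, eps) for n in (30, 120, 480)}

for n, (mean_sum, var_sum, tail, bound) in results.items():
    print(n, float(tail), float(bound))
```

The bound p(1-p)/(N eps^2) shrinks like 1/N, so the probability that the average strays from p by at least eps is forced toward 0 as N grows.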

The Weak Law of Large Numbers provides a meaning, of sorts, for the probability p of a single variable. It is also exactly the statement we will need in section 8 of the course notes.

Results similar to all of those above hold when the assumptions are weakened. Above, all variables were assumed to be independent and identically distributed. However, as long as the variables are independent and each of their variances is small compared to the sum of the variances, it is possible to prove that the resulting distribution of the average of the variables is not unlike a binomial distribution, except that it will have mean given by the average of the p's and variance given by the sum of the individual variances divided by $N^2$. This holds true for sums of random variables that take on more values than just 0 and 1, and even (with some changes) when there is a limited amount of dependence among the variables. Results of this kind are called central limit theorems.

When we add a large number of independent and identically distributed random variables, and the sum of their means stays finite, we get a limiting case of the binomial distribution called a Poisson distribution. When the mean of the sum approaches m, the distribution has the form $p(k) = e^{-m} m^k / k!$. Again, distributions can tend to this one under much weaker assumptions.

You should also realize that the facts about probability noted above (that the mean of a sum is the sum of the means in all cases, that the variance of a sum of independent variables is the sum of the variances, and so on) are extremely useful tools for solving probabilistic questions, as you will see in the exercises below.
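The Poisson limit can be observed numerically, without deriving it, by comparing binomial probabilities with p = m/N against $e^{-m} m^k / k!$ as N grows. In the sketch below, the mean m = 2, the range of k, and the values of N are assumptions chosen for illustration, and the function names are invented here.

```python
from math import comb, exp, factorial


def binomial_pmf(n, k, p):
    """Probability that n independent Boolean variables sum to k."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_pmf(m, k):
    """Poisson probability e^(-m) * m^k / k! for mean m."""
    return exp(-m) * m**k / factorial(k)


# Assumed mean of the sum; each variable is 1 with probability m / n.
m = 2.0


def max_gap(n):
    """Largest difference between the two distributions for k = 0, ..., 10."""
    return max(
        abs(binomial_pmf(n, k, m / n) - poisson_pmf(m, k)) for k in range(11)
    )


gaps = {n: max_gap(n) for n in (10, 100, 10_000)}
for n, gap in gaps.items():
    print(n, gap)
```

The gap shrinks as N increases with the mean held fixed, which is the sense in which the Poisson distribution is a limit of binomial distributions.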

Exercises

Exercise 1

Prove that the variance of a random variable, $\langle (f - \langle f \rangle)^2 \rangle$, is equal to $\langle f^2 \rangle - \langle f \rangle^2$.

Exercise 2

Prove that the variance of a random variable f is quadratic. That is, prove that $\sigma^2(af) = a^2 \sigma^2(f)$.

Exercise 3

What is the general statement of the probability that one or more of four events will occur?

Exercise 4

Prove that the variance of a Boolean variable, having probability p that it has a value of 1, is p(1-p).

Exercise 5

Use a spreadsheet to plot (chart x y scatter) p(k/N) vs k for binomial distributions having p=1/2 and p=1/3 for values of N from 5 to however high you can, on one sheet for each p.

Exercise 6

Derive the formula for a Poisson distribution from the binomial distribution with p=m/N, for finite k as N gets large.

Exercise 7

Given a class of N students with independently chosen birthdays, deduce the probability that no two have the same birthday. This can be done by deducing a formula for it, then taking logs and expanding them to approximate the answer. Another way to estimate the answer is by assuming you have a Poisson distribution of the number of pairs of students with the same birthday. How do these answers compare?

Exercise 8

a. Suppose you pick 2 numbers between 0 and 1 at random (that is, for each, the probability of picking a number in any interval of length d is d). What is the expected value of their difference? Hint: what is the answer if you pick 3 points at random on a unit circle?
b. Suppose you pick 2 distinct numbers at random between 1 and N. What is the expected value of their difference? Hint: compare with part a.
c. Suppose you pick k distinct numbers at random out of N. Same question.

Exercise 9

Suppose k persons get on an elevator in the basement of an office building one morning. Each gets out independently, with equal probability of emerging at each of the m floors at which they might get out. Find the expected number of floors at which the elevator does not stop because nobody gets out. Evaluate for m=11 and k=10, and vice versa.

-Steven Kannan

You might also like

- Lecture Notes On Stochastic Calculus (NYU)Document138 pagesLecture Notes On Stochastic Calculus (NYU)joydrambles100% (2)

- Mathematical Foundations of Information TheoryFrom EverandMathematical Foundations of Information TheoryRating: 3.5 out of 5 stars3.5/5 (9)

- Technology ForecastingDocument38 pagesTechnology ForecastingSourabh TandonNo ratings yet

- Science Grade 10 (Exam Prep)Document6 pagesScience Grade 10 (Exam Prep)Venice Solver100% (3)

- NCPDocument6 pagesNCPJoni Lyn Ba-as BayengNo ratings yet

- Basic Terms of ProbabilityDocument7 pagesBasic Terms of ProbabilityAbdul HafeezNo ratings yet

- Learning Module - Joints, Taps and SplicesDocument9 pagesLearning Module - Joints, Taps and SplicesCarlo Cartagenas100% (1)

- Practical Data Science: Basic Concepts of ProbabilityDocument5 pagesPractical Data Science: Basic Concepts of ProbabilityRioja Anna MilcaNo ratings yet

- Stochastic Processes and The Mathematics of Finance: Jonathan Block April 1, 2008Document132 pagesStochastic Processes and The Mathematics of Finance: Jonathan Block April 1, 2008.cadeau01No ratings yet

- L1 QueueDocument9 pagesL1 QueueThiem Hoang XuanNo ratings yet

- Basic Probability and Statistical DistributionDocument10 pagesBasic Probability and Statistical Distributionsusan mwingoNo ratings yet

- Probability Theory: 1.1. Space of Elementary Events, Random EventsDocument13 pagesProbability Theory: 1.1. Space of Elementary Events, Random EventsstumariNo ratings yet

- Probability NotesDocument18 pagesProbability NotesVishnuNo ratings yet

- Lecture 1Document7 pagesLecture 1guzz5671No ratings yet

- Probability NotesDocument15 pagesProbability NotesJUAN DIGO BETANCURNo ratings yet

- Probability and Event Space-2-8Document7 pagesProbability and Event Space-2-8justin maxtonNo ratings yet

- Probability Theory - MIT OCWDocument16 pagesProbability Theory - MIT OCWOscarNo ratings yet

- College of Engineering Department of Electrical and Computer Engineering (Electronics and Communication Stream)Document41 pagesCollege of Engineering Department of Electrical and Computer Engineering (Electronics and Communication Stream)Gemechis GurmesaNo ratings yet

- UNIT3Document5 pagesUNIT3Ayush NighotNo ratings yet

- Coin FlipDocument10 pagesCoin Flip林丽莹No ratings yet

- Notes 5Document4 pagesNotes 5defence servicesNo ratings yet

- MSO201 Week1 Lecture NotesDocument7 pagesMSO201 Week1 Lecture NotesKevalNo ratings yet

- Stochastic Calculus Notes, Lecture 1Document21 pagesStochastic Calculus Notes, Lecture 1TarunNo ratings yet

- ProbabilityDocument6 pagesProbabilityjanvincentcentinoNo ratings yet

- ProbabilityDocument3 pagesProbabilityAnchalNo ratings yet

- 1probability & Probability DistributionDocument45 pages1probability & Probability DistributionapurvaapurvaNo ratings yet

- Mathematics For Computer ApplicationDocument4 pagesMathematics For Computer ApplicationalokpandeygenxNo ratings yet

- MSO201 Week 2 Lecture NotesDocument11 pagesMSO201 Week 2 Lecture NotesKevalNo ratings yet

- BechorDocument8 pagesBechorEpic WinNo ratings yet

- Maths 3Document41 pagesMaths 3Lëmä FeyTitaNo ratings yet

- Independence and Bernoulli Trials (Euler, Ramanujan and Bernoulli Numbers)Document40 pagesIndependence and Bernoulli Trials (Euler, Ramanujan and Bernoulli Numbers)hari_shankar55100% (1)

- CH 6 - ProbabilityDocument7 pagesCH 6 - ProbabilityhehehaswalNo ratings yet

- Unit 4 Part 1Document62 pagesUnit 4 Part 1Girraj DohareNo ratings yet

- Math Prob Slinker Measure Theory Infinite HeadsDocument8 pagesMath Prob Slinker Measure Theory Infinite HeadsPrez H. CannadyNo ratings yet

- STM Summary NotesDocument18 pagesSTM Summary NotesisoaidNo ratings yet

- Probability Theory: 1 Heuristic IntroductionDocument17 pagesProbability Theory: 1 Heuristic IntroductionEpic WinNo ratings yet

- Probability and Counting Techniques "Key Concepts and Formulas" (A) SetsDocument7 pagesProbability and Counting Techniques "Key Concepts and Formulas" (A) Setsalvin bautistaNo ratings yet

- Stochastic Modelling Notes DiscreteDocument130 pagesStochastic Modelling Notes DiscreteBrofessor Paul NguyenNo ratings yet

- Probability 2Document16 pagesProbability 2Mays MiajanNo ratings yet

- Res SssssssDocument6 pagesRes Sssssssvinay kumarNo ratings yet

- MIT6 436JF08 Lec01 PDFDocument13 pagesMIT6 436JF08 Lec01 PDFIam MeNo ratings yet

- Chapter 5 Elementery ProbabilityDocument25 pagesChapter 5 Elementery ProbabilityyonasNo ratings yet

- Prob MIT LecturesDocument240 pagesProb MIT LecturesÀlex RodriguezNo ratings yet

- Eda Midterms-CompilationDocument12 pagesEda Midterms-CompilationDonya PangilNo ratings yet

- MIT18 05S14 Reading2Document6 pagesMIT18 05S14 Reading2Jesha Carl JotojotNo ratings yet

- Probability TeachDocument32 pagesProbability TeachANDREW GIDIONNo ratings yet

- Threshold Phenomena: 9.1 AsidesDocument6 pagesThreshold Phenomena: 9.1 AsidesAshoka VanjareNo ratings yet

- Basic ProbabilityDocument14 pagesBasic Probabilityzhilmil guptaNo ratings yet

- Chapter 10, Probability and StatsDocument30 pagesChapter 10, Probability and StatsOliver GuidettiNo ratings yet

- BIT 2212: Business Systems Modeling: Basic ProbabilityDocument25 pagesBIT 2212: Business Systems Modeling: Basic Probabilitybeldon musauNo ratings yet

- Probability Theory: Probability - Models For Random PhenomenaDocument116 pagesProbability Theory: Probability - Models For Random PhenomenasanaNo ratings yet

- Formal Methods in Software Engineering: Logic of PropositionsDocument56 pagesFormal Methods in Software Engineering: Logic of PropositionsIzhar HussainNo ratings yet

- Stat230 Chapter03Document30 pagesStat230 Chapter03Maya Abdul HusseinNo ratings yet

- Proofs of Quadratic ReciprocityDocument9 pagesProofs of Quadratic Reciprocityمهبولة ايمانNo ratings yet

- Random Variables: 1.1 Elementary ExamplesDocument14 pagesRandom Variables: 1.1 Elementary ExamplesjsndacruzNo ratings yet

- Probability and Measures: Unit 1Document4 pagesProbability and Measures: Unit 1Harish YadavNo ratings yet

- Probability ReviewDocument12 pagesProbability Reviewavi_weberNo ratings yet

- Chapter 10 ProbabilityDocument14 pagesChapter 10 Probabilitydannielujan14No ratings yet

- M6-Discrete ProbabilityDocument38 pagesM6-Discrete ProbabilityRon BayaniNo ratings yet

- Seeing TheoryDocument66 pagesSeeing TheoryJohn AvgerinosNo ratings yet

- Summary StatisticsDocument2 pagesSummary StatisticsAshley N. KroonNo ratings yet

- Probability Theory: Probability - Models For Random PhenomenaDocument82 pagesProbability Theory: Probability - Models For Random PhenomenaChidvi ReddyNo ratings yet

- © Ncert Not To Be Republished: ProbabilityDocument57 pages© Ncert Not To Be Republished: ProbabilityLokeshmohan SharmaNo ratings yet

- The Ramanujan Journal Vol.06, No.4, December 2002Document126 pagesThe Ramanujan Journal Vol.06, No.4, December 2002fafarifafuNo ratings yet

- Disc CapDocument33 pagesDisc CapShibu Kumar SNo ratings yet

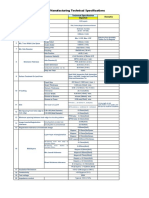

- Proposal of Solar PowerDocument9 pagesProposal of Solar PowerShibu Kumar SNo ratings yet

- ABHI POWER SOLUTIONS Quotation Solar PV SYstem KareemzarcadeDocument3 pagesABHI POWER SOLUTIONS Quotation Solar PV SYstem KareemzarcadeShibu Kumar SNo ratings yet

- Mtp3055e PDFDocument7 pagesMtp3055e PDFShibu Kumar SNo ratings yet

- Log 16f16Document1 pageLog 16f16Shibu Kumar SNo ratings yet

- Government of India Ministry of New & Renewable Energy (SPV Division)Document21 pagesGovernment of India Ministry of New & Renewable Energy (SPV Division)Shibu Kumar SNo ratings yet

- S Series Gap-Kap: Vishay BccomponentsDocument4 pagesS Series Gap-Kap: Vishay BccomponentsShibu Kumar SNo ratings yet

- ABHI POWER SOLUTIONS Quotation Solar PV SYstemDocument3 pagesABHI POWER SOLUTIONS Quotation Solar PV SYstemShibu Kumar SNo ratings yet

- Banshee 350 Gear AssemblyDocument1 pageBanshee 350 Gear AssemblyShibu Kumar SNo ratings yet

- Half Bridge Driver With IR2153 IGBT PDFDocument4 pagesHalf Bridge Driver With IR2153 IGBT PDFShibu Kumar SNo ratings yet

- ABHI POWER SOLUTIONS Quotation FencingDocument1 pageABHI POWER SOLUTIONS Quotation FencingShibu Kumar SNo ratings yet

- 2 Stroke CarburetorDocument6 pages2 Stroke CarburetorShibu Kumar SNo ratings yet

- Half Bridge Driver With IR2153 IGBT PDFDocument4 pagesHalf Bridge Driver With IR2153 IGBT PDFShibu Kumar SNo ratings yet

- Half Bridge Driver With IR2153 IGBT PDFDocument4 pagesHalf Bridge Driver With IR2153 IGBT PDFShibu Kumar SNo ratings yet

- Irf3205 PDFDocument9 pagesIrf3205 PDFJuanito Naxito Jebus ArjonaNo ratings yet

- MTP3055EDocument7 pagesMTP3055EShibu Kumar SNo ratings yet

- PIC16F145x PDFDocument404 pagesPIC16F145x PDFArsalan88No ratings yet

- A FastenerTorqueChartsDocument2 pagesA FastenerTorqueChartsMuhammad JawadNo ratings yet

- Use of A Representative: Sivanandan Shibu KumarDocument2 pagesUse of A Representative: Sivanandan Shibu KumarShibu Kumar SNo ratings yet

- Needle SelectionDocument3 pagesNeedle SelectionShibu Kumar SNo ratings yet

- 8 TungstenDocument1 page8 TungstenShibu Kumar SNo ratings yet

- Untitled - Notepad PDFDocument1 pageUntitled - Notepad PDFShibu Kumar SNo ratings yet

- ATmega8 Mini VoltmeterDocument1 pageATmega8 Mini VoltmeterĐuro MijićNo ratings yet

- JT Gear RatioDocument1 pageJT Gear RatioAsrul RashidNo ratings yet

- AWGDocument1 pageAWGShibu Kumar SNo ratings yet

- 1N4001-1N4007 (Vishay) DatasheetDocument5 pages1N4001-1N4007 (Vishay) DatasheetShinwell JohnsonNo ratings yet

- Ball and Roller BearingsDocument1 pageBall and Roller BearingsShibu Kumar SNo ratings yet

- Invertec 135sDocument10 pagesInvertec 135sShibu Kumar SNo ratings yet

- Calculations For Design Parameters of Transformer - Engineer ExperiencesDocument12 pagesCalculations For Design Parameters of Transformer - Engineer ExperiencesShibu Kumar SNo ratings yet

- Capabilities IMP Import PCBDocument1 pageCapabilities IMP Import PCBShibu Kumar SNo ratings yet

- Offshore Training Matriz Matriz de Treinamentos OffshoreDocument2 pagesOffshore Training Matriz Matriz de Treinamentos OffshorecamiladiasmanoelNo ratings yet

- Ep Docx Sca SMSC - V2Document45 pagesEp Docx Sca SMSC - V290007No ratings yet

- Agency Procurement Request: Ipil Heights Elementary SchoolDocument1 pageAgency Procurement Request: Ipil Heights Elementary SchoolShar Nur JeanNo ratings yet

- Report On Monitoring and Evaluation-Ilagan CityDocument5 pagesReport On Monitoring and Evaluation-Ilagan CityRonnie Francisco TejanoNo ratings yet

- OMM 618 Final PaperDocument14 pagesOMM 618 Final PaperTerri Mumma100% (1)

- Ceo DualityDocument3 pagesCeo Dualitydimpi singhNo ratings yet

- 04 Membrane Structure NotesDocument22 pages04 Membrane Structure NotesWesley ChinNo ratings yet

- Nuttall Gear CatalogDocument275 pagesNuttall Gear Catalogjose huertasNo ratings yet

- RADMASTE CAPS Grade 11 Chemistry Learner GuideDocument66 pagesRADMASTE CAPS Grade 11 Chemistry Learner Guideamajobe34No ratings yet

- PRINCIPLES OF TEACHING NotesDocument24 pagesPRINCIPLES OF TEACHING NotesHOLLY MARIE PALANGAN100% (2)

- Internship Report PDFDocument71 pagesInternship Report PDFNafiz FahimNo ratings yet

- Ce Licensure Examination Problems Rectilinear Translation 6Document2 pagesCe Licensure Examination Problems Rectilinear Translation 6Ginto AquinoNo ratings yet

- Distillation ColumnDocument22 pagesDistillation Columndiyar cheNo ratings yet

- Model 900 Automated Viscometer: Drilling Fluids EquipmentDocument2 pagesModel 900 Automated Viscometer: Drilling Fluids EquipmentJazminNo ratings yet

- Jordan CVDocument2 pagesJordan CVJordan Ryan SomnerNo ratings yet

- Adding and Subtracting FractionsDocument4 pagesAdding and Subtracting Fractionsapi-508898016No ratings yet

- Pepperberg Notes On The Learning ApproachDocument3 pagesPepperberg Notes On The Learning ApproachCristina GherardiNo ratings yet

- Multibody Dynamics Modeling and System Identification For A Quarter-Car Test Rig With McPherson Strut Suspension PDFDocument122 pagesMultibody Dynamics Modeling and System Identification For A Quarter-Car Test Rig With McPherson Strut Suspension PDFnecromareNo ratings yet

- Die Openbare BeskermerDocument3 pagesDie Openbare BeskermerJaco BesterNo ratings yet

- Aashirwaad Notes For CA IPCC Auditing & Assurance by Neeraj AroraDocument291 pagesAashirwaad Notes For CA IPCC Auditing & Assurance by Neeraj AroraMohammed NasserNo ratings yet

- PUPiApplyVoucher2017 0006 3024Document2 pagesPUPiApplyVoucher2017 0006 3024MätthëwPïńëdäNo ratings yet

- Volcano Lesson PlanDocument5 pagesVolcano Lesson Planapi-294963286No ratings yet

- Genie PDFDocument277 pagesGenie PDFOscar ItzolNo ratings yet

- Subject: PSCP (15-10-19) : Syllabus ContentDocument4 pagesSubject: PSCP (15-10-19) : Syllabus ContentNikunjBhattNo ratings yet

- 4D Beijing (Muslim) CHINA MATTA Fair PackageDocument1 page4D Beijing (Muslim) CHINA MATTA Fair PackageSedunia TravelNo ratings yet

- NJEX 7300G: Pole MountedDocument130 pagesNJEX 7300G: Pole MountedJorge Luis MartinezNo ratings yet