Book Review

Handbook of Multisensor Data Fusion

Edited by David Hall and James Llinas, CRC Press, 2001

A book which includes "dirty secrets" in multisensor data fusion, the "unscented filter" and the "Bayesian iceberg" cannot be all bad. This handbook consists of 26 chapters, many of which are excellent, including well-written, solid, quantitative state-of-the-art surveys by Bar-Shalom, Poore, Uhlmann, Julier, Stone, Mahler, and Kirubarajan, among others. The chapters in this handbook range from tutorial to cutting-edge research, so there is something for everyone. Mary Nichols gives a broad and rapid survey of military multisensor data fusion systems in Chapter 22. A plethora of websites about data fusion and related subjects is included at the end of this handbook.

The most ambitious and interesting chapter, by far, is on Random Set Theory for Target Tracking and Identification, by Ron Mahler. Random set theory is clearly a good idea for data fusion, owing to the uncertainty in the number of targets and the uncertain origin of measurements in many practical applications. In fact, random sets are essentially required, implicitly or explicitly, to describe the problem of tracking in a dense multiple target environment. Mahler's chapter is edifying but sometimes obscure. In particular, what Mahler calls "turn-the-crank" formulas require derivatives and integrals with respect to sets; these are not the bread and butter of normal engineers, and hence a few simple explicit examples of these would have been helpful, and the sooner the better. Mahler's chapter is written with much authority and enthusiasm, as witnessed by his short history of multitarget filtering (page 24, Chapter 14), which is rather fun to read and should be compared with Larry Stone's version of history (page 22, Chapter 10).

Two chapters by Julier and Uhlmann, on covariance intersection and nonlinear systems, are particularly well done, being lucid, practical, and innovative; there is no doubt about how to use this new theory, as the MATLAB source code is included.
The second of these chapters describes a novel algorithm for nonlinear filtering that has vastly superior performance compared with the extended Kalman filter (EKF) for certain applications. The EKF uses a simple linearization of the nonlinear equations to approximate the propagation of uncertainty through nonlinear transformations, whereas the new filter uses a more accurate approximation, based on sampling the probability density at carefully chosen points to approximate an n-dimensional integral, similar to Gauss-Hermite quadrature. This is called the "unscented filter" for obvious reasons.

Covariance intersection (CI) is a simple method of combining covariance matrices for fusion when there is uncertainty about the statistical correlations involved. Roughly speaking, CI computes the largest one-sigma error ellipsoid that fits within the intersection of the two error ellipsoids being fused. This is a more conservative approach than using the standard Kalman filter equations, which assume perfect knowledge of the statistical correlations. However, when the two error ellipsoids are equal, the intersection is the same as the original ellipsoids, and hence, using CI, there is no apparent benefit from fusion; some engineers might view this as being much too conservative.

One of the longest and most provocative chapters in this handbook is by Joseph Carl on Bayesian vs. Dempster-Shafer (D-S) decision algorithms, which have been the subject of heated debates over the last several decades. Carl says that many would argue that probability theory is not suitable for practical implementation on complex real-world problems, which is a very interesting assertion, but Carl does not explain how D-S theory improves the situation. In particular, it is well-known and not subject to debate that, given a decision problem with completely defined probability distribution functions (pdfs), Bayesian decision rules are optimal. Therefore, the only way in which D-S could improve performance is in the case in which the pdfs are not completely specified. The problem of incomplete pdfs was the original motivation for Dempster's seminal work published in 1967 and 1968, and it has been the subject of extensive research since then, but without any practical results so far (see [6] and pages 57-61 in [7] for a survey of this research).
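Returning briefly to the two Julier and Uhlmann techniques described above, the following Python sketch may help readers who want to see them in a few lines. It is not the MATLAB code from the handbook. It assumes NumPy, the textbook symmetric set of 2n+1 sigma points, and the usual convex-combination form of the CI update. The function names, the `kappa` tuning parameter, and the caller-supplied weight `omega` are illustrative assumptions.

```python
import numpy as np


def unscented_transform(mean, cov, f, kappa=0.0):
    """Approximate the mean and covariance of f(x) for x ~ (mean, cov).

    Uses the symmetric set of 2n+1 sigma points; kappa is a tuning
    parameter (an assumption here, not a value taken from the handbook).
    """
    n = mean.size
    # Columns of the Cholesky factor of (n + kappa) * cov are the offsets.
    root = np.linalg.cholesky((n + kappa) * cov)
    points = np.vstack([mean, mean + root.T, mean - root.T])
    weights = np.full(2 * n + 1, 0.5 / (n + kappa))
    weights[0] = kappa / (n + kappa)
    # Push every sigma point through the nonlinear function.
    ys = np.array([f(p) for p in points])
    y_mean = weights @ ys
    diffs = ys - y_mean
    y_cov = (weights[:, None] * diffs).T @ diffs
    return y_mean, y_cov


def covariance_intersection(a, A, b, B, omega):
    """Fuse estimates (a, A) and (b, B) whose cross-correlation is unknown.

    omega in [0, 1] weights the two information matrices; how to choose it
    (e.g. by minimizing the trace of C) is left to the caller.
    """
    A_inv, B_inv = np.linalg.inv(A), np.linalg.inv(B)
    C = np.linalg.inv(omega * A_inv + (1.0 - omega) * B_inv)
    c = C @ (omega * A_inv @ a + (1.0 - omega) * B_inv @ b)
    return c, C


def polar_to_xy(p):
    """Hypothetical nonlinear map: (range, bearing) to Cartesian (x, y)."""
    return np.array([p[0] * np.cos(p[1]), p[0] * np.sin(p[1])])


if __name__ == "__main__":
    # Made-up range/bearing estimate pushed through the nonlinear map.
    polar_mean = np.array([10.0, np.pi / 4])
    polar_cov = np.diag([0.5 ** 2, 0.2 ** 2])
    print(unscented_transform(polar_mean, polar_cov, polar_to_xy))

    # Two estimates with the same covariance P: CI versus fusion that
    # assumes the two errors are independent.
    P = np.array([[2.0, 0.5], [0.5, 1.0]])
    x = np.array([1.0, -1.0])
    print(covariance_intersection(x, P, x, P, omega=0.5)[1])
    print(np.linalg.inv(2.0 * np.linalg.inv(P)))
```

With equal input covariances, the CI result is the input covariance itself for any `omega`, which is the conservatism noted above; fusion that assumes independent errors would instead halve it.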
The best work that I know of on the problem of incompletely specified pdfs is Chapter 5 of Kharin's recent book [8], which basically concludes that, for most sensible models of uncertainty in pdfs, the standard Bayesian decision rule, or a minor modification of it, is the most robust approach. There is no formula or theorem in Carl's chapter that shows how much D-S improves performance relative to Bayesian methods, nor does Carl assert that D-S is better than Bayes; rather, in the detailed numerical example that is worked out, the D-S and Bayesian decisions are qualitatively the same. This agrees with other comparisons of D-S with Bayes reported in the literature, when such calculations are done correctly. There are, unfortunately, notorious cases of papers published by engineers in which the D-S advocate claimed superiority over Bayes, owing to the engineers' faulty understanding of elementary probability theory.

Moreover, it is well-known that Dempster's rule of combining evidence assumes that the data are statistically independent, whereas Bayesian methods do not require this assumption, with the result that for problems with statistically dependent data, the Bayesian performance can be vastly superior to D-S. This is a fundamental limitation of D-S compared with Bayes, but Carl does not mention it in his chapter, which is a curious lacuna in a handbook on data fusion such as this.

A common misconception among engineers is that Bayesian methods require Gaussian pdfs, uniform prior distributions, and/or statistically independent data, whereas, in fact, Bayesian decision rules are optimal for any pdf of the data (Gaussian or non-Gaussian), any prior pdf (uniform or non-uniform), and data that are statistically dependent or independent. Three good references on D-S vs. Bayesian methods, which are both readable by normal engineers and mathematically correct, are [6], [11, Chapter 9], and [12].

Carl mentions near the end of his chapter that D-S is not equipped with a clear-cut decision making rule, which many readers will find somewhat surprising, because D-S has been touted as a better method of decision making. For those wondering what it means to say that D-S has no decision rules, one should read Chapters 13 to 17 in [9]. Moreover, in the Foreword to Shafer's famous book [10], Dempster very clearly says: "I differ from Shafer . . . I believe that Bayesian inference will always be a basic tool for practical everyday statistics, if only because questions must be answered and decisions must be taken." So, Professor Dempster is apparently a Bayesian, and he would not advocate the use of D-S for decision making.
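For readers who have not seen Dempster's rule of combination, the following minimal Python sketch shows it next to a Bayesian posterior computed from a joint likelihood. The hypotheses, the mass and likelihood values, and the function names are hypothetical illustrations, not taken from Carl's chapter. The product of masses inside the loop is where the independence assumption mentioned above enters, whereas the joint likelihood passed to the Bayesian update can encode any dependence between the sensors.

```python
from itertools import product


def dempster_combine(m1, m2):
    """Combine two mass functions with Dempster's rule.

    Each mass function maps a frozenset of hypotheses (a focal set)
    to its mass; the masses of each function sum to one.
    """
    combined = {}
    conflict = 0.0
    for (s1, w1), (s2, w2) in product(m1.items(), m2.items()):
        inter = s1 & s2
        if inter:
            # Multiplying the masses treats the two sources as independent.
            combined[inter] = combined.get(inter, 0.0) + w1 * w2
        else:
            # Mass assigned to incompatible focal sets counts as conflict.
            conflict += w1 * w2
    if conflict >= 1.0:
        raise ValueError("totally conflicting evidence cannot be combined")
    # Renormalize by the non-conflicting mass.
    return {s: w / (1.0 - conflict) for s, w in combined.items()}


def bayes_posterior(prior, joint_likelihood):
    """Posterior over hypotheses h from p(h) and p(z1, z2 | h).

    The joint likelihood is supplied directly, so the two measurements
    need not be independent given h.
    """
    unnorm = {h: prior[h] * joint_likelihood[h] for h in prior}
    total = sum(unnorm.values())
    return {h: v / total for h, v in unnorm.items()}


if __name__ == "__main__":
    foe = frozenset({"foe"})
    either = frozenset({"foe", "friend"})  # the whole frame: "don't know"

    # Two made-up sensor reports, each leaving some mass uncommitted.
    sensor_1 = {foe: 0.6, either: 0.4}
    sensor_2 = {foe: 0.7, either: 0.3}
    print(dempster_combine(sensor_1, sensor_2))

    # Made-up prior and joint likelihood for the Bayesian counterpart.
    print(bayes_posterior({"foe": 0.5, "friend": 0.5},
                          {"foe": 0.3, "friend": 0.1}))
```

The D-S output is a set of masses, some of it still assigned to the whole frame, and a separate rule is needed to turn it into a decision. The Bayesian output is a posterior probability to which a standard decision rule applies directly.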
About ten years ago, mathematicians gave up trying to make better decisions than Bayes; the recent academic papers on D-S say that there is more to life than making decisions, that Bayes wins at making decisions, and that maybe D-S is useful to represent uncertainty. However, hard-boiled engineers are only interested in making decisions; representing uncertainty is for poets, not engineers.

Any reader interested in data fusion should study the authoritative and nicely written chapters by Professors Poore, Bar-Shalom, Kirubarajan and Uhlmann (it only seems like Uhlmann wrote half of the chapters in this handbook), as well as by Julier, Mahler, and Stone, which provide a solid mathematical foundation for data fusion. However, this handbook would still benefit from a healthy dose of the three R's: Resolution, Registration and Real world applications. First, the issue of limited sensor resolution is briefly mentioned by Stone, but otherwise it is essentially ignored by the other authors. For typical multisensor data fusion applications, the problem of limited sensor resolution is often more important than data association [1-3]. Second, there is only one very short chapter on data registration (and this chapter is limited to images), but this crucial topic, often the Achilles' heel of real world multisensor data fusion applications, deserves much more treatment in a handbook such as this [4-5]. Third, very few chapters of this handbook describe significant real world applications or simulations of such applications.

One yearns for a handbook of multisensor data fusion more like Skolnik's Radar Handbook, in which nearly every chapter was written by an engineer with a firm grasp of theory as well as substantial real world experience, and which includes practical formulas, many useful plots, and lessons learned from designing and testing real systems, but without a mélange of buzzwords, platitudes, and taxonomies.

- Fred Daum, Raytheon

REFERENCES

[1] Koch, W. and Van Keuk, G., 1997, Multiple hypothesis track maintenance with possibly unresolved measurements, IEEE Transactions on Aerospace and Electronic Systems, 33, 883-892.

[2] Blair, W.D. and Brandt-Pearce, M., July 1999, NNJPDA for tracking closely-spaced Rayleigh targets with possibly merged measurements, Proceedings of SPIE Conference on Signal and Data Processing, Vol. 3809, 396-408.

[3] Daum, F.E. and Fitzgerald, R.J., April 1994, The importance of resolution in multiple target tracking, Proceedings of SPIE Conference on Signal and Data Processing, Vol. 2235, 329-338.

[4] Dana, M.P., 1990, Registration: a prerequisite for multiple sensor tracking, Chapter 5 in Multitarget-Multisensor Tracking, edited by Yaakov Bar-Shalom, Artech House.

[5] Mori, S. and Chong, C.-Y., June 2000, Effects of unpaired objects and sensor biases on track-to-track association, Proceedings of MSS Sensor and Data Fusion, 137-151.

[6] Walley, P., 1991, Statistical Reasoning with Imprecise Probabilities, Chapman and Hall.

[7] Robust Bayesian Analysis, edited by D. Insua and F. Ruggeri, Springer-Verlag, 2000.

[8] Kharin, Y., 1996, Robustness in Statistical Pattern Recognition, Kluwer.

[9] Advances in the Dempster-Shafer Theory of Evidence, edited by R.R. Yager, et al., John Wiley & Sons, 1994.

[10] Shafer, G., 1976, A Mathematical Theory of Evidence, Princeton University Press.

[11] Pearl, J., 1988, Probabilistic Reasoning in Intelligent Systems, Morgan Kaufmann.

[12] Wasserman, L., 1990, Belief functions and statistical inference, Canadian Journal of Statistics.

IEEE AESS Systems Magazine, October 2001

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- TutorialDocument10 pagesTutorialWendy GuzmanNo ratings yet

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Handbook of Multisensor Data Fusion: ReviewDocument2 pagesHandbook of Multisensor Data Fusion: ReviewWendy GuzmanNo ratings yet

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- SurveyDocument17 pagesSurveyWendy GuzmanNo ratings yet

- Introduccion To Descentralised Data FusionDocument194 pagesIntroduccion To Descentralised Data FusionWendy GuzmanNo ratings yet

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- General Data Fusion ArchitectureDocument8 pagesGeneral Data Fusion ArchitecturesumasuthanNo ratings yet

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Auto Compen Sac I OnDocument10 pagesAuto Compen Sac I OnWendy GuzmanNo ratings yet

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Types of Solids 1Document16 pagesTypes of Solids 1Fern BaldonazaNo ratings yet

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- Cleats CatalogueDocument73 pagesCleats Cataloguefire123123No ratings yet

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- TMT Boron CoatingDocument6 pagesTMT Boron Coatingcvolkan1100% (2)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- Solutionbank D1: Edexcel AS and A Level Modular MathematicsDocument30 pagesSolutionbank D1: Edexcel AS and A Level Modular MathematicsMaruf_007No ratings yet

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- RomerDocument20 pagesRomerAkistaaNo ratings yet

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Notes For Class 11 Maths Chapter 8 Binomial Theorem Download PDFDocument9 pagesNotes For Class 11 Maths Chapter 8 Binomial Theorem Download PDFRahul ChauhanNo ratings yet

- Plagiarism - ReportDocument6 pagesPlagiarism - ReportDipesh NagpalNo ratings yet

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- Implant TrainingDocument5 pagesImplant Trainingrsrinath91No ratings yet

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- 16620YDocument17 pages16620YbalajivangaruNo ratings yet

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Introduction To Java Programming ReviewerDocument90 pagesIntroduction To Java Programming ReviewerJohn Ryan FranciscoNo ratings yet

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- DocuDocument77 pagesDocuDon'tAsK TheStupidOnesNo ratings yet

- EI 6702-Logic and Distributed Control SystemDocument2 pagesEI 6702-Logic and Distributed Control SystemMnskSaro50% (2)

- SR-X Script Reference - EDocument24 pagesSR-X Script Reference - EDomagoj ZagoracNo ratings yet

- Thermocouple: Seeback EffectDocument8 pagesThermocouple: Seeback EffectMuhammadHadiNo ratings yet

- Zener Barrier: 2002 IS CatalogDocument1 pageZener Barrier: 2002 IS CatalogabcNo ratings yet

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- Fiberglass Fire Endurance Testing - 1992Document58 pagesFiberglass Fire Endurance Testing - 1992shafeeqm3086No ratings yet

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Number System Questions PDFDocument20 pagesNumber System Questions PDFMynur RahmanNo ratings yet

- Combined Geo-Scientist (P) Examination 2020 Paper-II (Geophysics)Document25 pagesCombined Geo-Scientist (P) Examination 2020 Paper-II (Geophysics)OIL INDIANo ratings yet

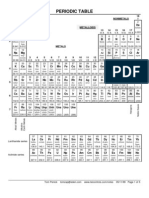

- Periodic Table and AtomsDocument5 pagesPeriodic Table and AtomsShoroff AliNo ratings yet

- Project 10-Fittings DesignDocument10 pagesProject 10-Fittings DesignVishwasen KhotNo ratings yet

- Bootloader3 PDFDocument18 pagesBootloader3 PDFsaravananNo ratings yet

- New Features in IbaPDA v7.1.0Document39 pagesNew Features in IbaPDA v7.1.0Miguel Ángel Álvarez VázquezNo ratings yet

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- The Roles of ComputerDocument1 pageThe Roles of ComputerDika Triandimas0% (1)

- SM700 E WebDocument4 pagesSM700 E WebrobertwebberNo ratings yet

- Thermal Breakthrough Calculations To Optimize Design of Amultiple-Stage EGS 2015-10Document11 pagesThermal Breakthrough Calculations To Optimize Design of Amultiple-Stage EGS 2015-10orso brunoNo ratings yet

- Ug CR RPTSTDDocument1,014 pagesUg CR RPTSTDViji BanuNo ratings yet

- Spark: Owner's ManualDocument5 pagesSpark: Owner's Manualjorge medinaNo ratings yet

- Fisher Paykel SmartLoad Dryer DEGX1, DGGX1 Service ManualDocument70 pagesFisher Paykel SmartLoad Dryer DEGX1, DGGX1 Service Manualjandre61100% (2)

- 21 API Functions PDFDocument14 pages21 API Functions PDFjet_mediaNo ratings yet

- 7PA30121AA000 Datasheet enDocument2 pages7PA30121AA000 Datasheet enMirko DjukanovicNo ratings yet

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)