Guide to Creating and Configuring a Server Cluster under Windows Server 2003

Topics on this Page Introduction Checklists for Server Cluster Configuration Cluster Installation Configuring the Cluster Service Post-Installation Configuration Test Installation Appendix Related Links

By Elden Christensen

Microsoft Corporation

Published: Last modified 5/30/2003

Abstract

This guide provides step-by-step instructions for creating and configuring a typical single quorum device multi-node server cluster using a shared disk on servers running the Microsoft Windows Server 2003 Enterprise Edition and Windows Server 2003 Datacenter Edition operating systems.


Introduction

A server cluster is a group of independent servers working collectively and running the Microsoft Cluster Service (MSCS). Server clusters provide high availability, failback, scalability, and manageability for resources and applications.

Server clusters allow client access to applications and resources in the event of failures and planned outages. If one of the servers in the cluster is unavailable because of a failure or maintenance requirements, resources and applications move to other available cluster nodes.

For Windows Clustering solutions, the term "high availability" is used rather than "fault tolerant." Fault-tolerant technology offers a higher level of resilience and recovery. Fault-tolerant servers typically use a high degree of hardware redundancy plus specialized software to provide near-instantaneous recovery from any single hardware or software fault. These solutions cost significantly more than a Windows Clustering solution because organizations must pay for redundant hardware that waits in an idle state for a fault.

Server clusters do not guarantee non-stop operation, but they do provide sufficient availability for most mission-critical applications. The cluster service can monitor applications and resources and automatically recognize and recover from many failure conditions. This provides flexibility in managing the workload within a cluster. It also improves overall system availability.

Cluster service benefits include:

High Availability: With server clusters, ownership of resources such as disk drives and Internet protocol (IP) addresses is automatically transferred from a failed server to a surviving server. When a system or application in the cluster fails, the cluster software restarts the failed application on a surviving server, or disperses the work from the failed node to the remaining nodes. As a result, users experience only a momentary pause in service.

Failback: When a failed server comes back online, the Cluster service can automatically reassign the workload in the cluster to its predetermined preferred owner. This feature can be configured, but is disabled by default.

Manageability: You can use the Cluster Administrator tool (CluAdmin.exe) to manage a cluster as a single system and to manage applications as if they were running on a single server. You can move applications to different servers within the cluster. Cluster Administrator can be used to manually balance server workloads and to free servers for planned maintenance. You can also monitor the status of the cluster, all nodes, and resources from anywhere on the network.

Scalability: Cluster services can grow to meet increased demand. When the overall load for a cluster-aware application exceeds the cluster's capabilities, additional nodes can be added.

This document provides instructions for creating and configuring a server cluster with servers connected to a shared cluster storage device and running Windows Server 2003 Enterprise Edition or Windows Server 2003 Datacenter Edition. It is intended to guide you through the process of installing a typical cluster; it does not explain how to install clustered applications. Windows Clustering solutions that implement non-traditional quorum models, such as Majority Node Set (MNS) clusters and geographically dispersed clusters, also are not discussed. For additional information about server cluster concepts as well as installation and configuration procedures, see the Windows Server 2003 Online Help.

Checklists for Server Cluster Configuration:

This checklist helps you prepare for installation. Step-by-step instructions begin after the checklist.

Software Requirements

Microsoft Windows Server 2003 Enterprise Edition or Windows Server 2003 Datacenter Edition installed on all computers in the cluster.

A name resolution method such as Domain Name System (DNS), DNS dynamic update protocol, Windows Internet Name Service (WINS), HOSTS, and so on.

An existing domain model.

All nodes must be members of the same domain.

A domain-level account that is a member of the local administrators group on each node. A dedicated account is recommended.

Hardware Requirements

Clustering hardware must be on the cluster service Hardware Compatibility List (HCL). To find the latest version of the cluster service HCL, go to the Windows Hardware Compatibility List at http://www.microsoft.com/whdc/hcl/default.mspx, and then search for cluster. The entire solution must be certified on the HCL, not just the individual components. For additional information, see the following article in the Microsoft Knowledge Base:

309395 The Microsoft Support Policy for Server Clusters and the Hardware

Note

If you are installing this cluster on a storage area network (SAN) and plan to have multiple devices and clusters sharing the SAN with a cluster, the solution must also be on the "Cluster/Multi-Cluster Device" Hardware Compatibility List. For additional information, see the following article in the Microsoft Knowledge Base:

304415 Support for Multiple Clusters Attached to the Same SAN Device

Two mass storage device controllers, either Small Computer System Interface (SCSI) or Fibre Channel: a local system disk for the operating system (OS) installed on one controller, and a separate peripheral component interconnect (PCI) storage controller for the shared disks.

Two PCI network adapters on each node in the cluster.

Storage cables to attach the shared storage device to all computers. Refer to the manufacturer's instructions for configuring storage devices. See the appendix that accompanies this article for additional information on specific configuration needs when using SCSI or Fibre Channel.

All hardware should be identical, slot for slot, card for card, BIOS, firmware revisions, and so on, for all nodes. This makes configuration easier and eliminates compatibility problems.

Network Requirements

A unique NetBIOS name.

Static IP addresses for all network interfaces on each node.

Note

Server Clustering does not support the use of IP addresses assigned from Dynamic Host Configuration Protocol (DHCP) servers.

Access to a domain controller. If the cluster service is unable to authenticate the user account used to start the service, it could cause the cluster to fail. It is recommended that you have a domain controller on the same local area network (LAN) as the cluster to ensure availability.

Each node must have at least two network adapters: one for connection to the client public network and the other for the node-to-node private cluster network. A dedicated private network adapter is required for HCL certification.

All nodes must have two physically independent LANs or virtual LANs for public and private communication. If you are using fault-tolerant network cards or network adapter teaming, verify that you are using the most recent firmware and drivers. Check with your network adapter manufacturer for cluster compatibility.

Shared Disk Requirements:

An HCL-approved external disk storage unit connected to all computers. This will be used as the clustered shared disk. Some type of hardware redundant array of independent disks (RAID) is recommended.

All shared disks, including the quorum disk, must be physically attached to a shared bus.

Note

The requirement above does not hold true for Majority Node Set (MNS) clusters, which are not covered in this guide.

Shared disks must be on a different controller than the one used by the system drive. Creating multiple logical drives at the hardware level in the RAID configuration is recommended rather than using a single logical disk that is then divided into multiple partitions at the operating system level. This is different from the configuration commonly used for stand-alone servers. However, it enables you to have multiple disk resources and to do Active/Active configurations and manual load balancing across the nodes in the cluster.

A dedicated disk with a minimum size of 50 megabytes (MB) to use as the quorum device. A partition of at least 500 MB is recommended for optimal NTFS file system performance.

Verify that disks attached to the shared bus can be seen from all nodes. This can be checked at the host adapter setup level. Refer to the manufacturer's documentation for adapter-specific instructions.

SCSI devices must be assigned unique SCSI identification numbers and be properly terminated according to the manufacturer's instructions. See the appendix with this article for information on installing and terminating SCSI devices.

All shared disks must be configured as basic disks. For additional information, see the following article in the Microsoft Knowledge Base:

237853 Dynamic Disk Configuration Unavailable for Server Cluster Disk Resources

Software fault tolerance is not natively supported on cluster shared disks. All shared disks must be configured as master boot record (MBR) disks on systems running the 64-bit versions of Windows Server 2003.

All partitions on the clustered disks must be formatted as NTFS. Hardware fault-tolerant RAID configurations are recommended for all disks. A minimum of two logical shared drives is recommended.
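The shared-disk rules in this checklist can be expressed as a small pre-installation check. The following Python sketch assumes you have already gathered an inventory of the shared logical drives by some other means; the `SharedDisk` record, its field names, and `check_shared_disks` are hypothetical illustrations, not part of Windows or the Cluster service.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SharedDisk:
    # Hypothetical inventory record for one shared logical drive.
    name: str
    disk_type: str        # "basic" or "dynamic"
    partition_style: str  # "MBR" or "GPT"
    file_system: str      # for example "NTFS" or "FAT32"


def check_shared_disks(disks: List[SharedDisk], is_64bit: bool) -> List[str]:
    """Return a list of problems; an empty list means every rule passed."""
    problems = []
    if len(disks) < 2:
        # A minimum of two logical shared drives is recommended.
        problems.append("fewer than two logical shared drives")
    for d in disks:
        if d.disk_type.lower() != "basic":
            problems.append(f"{d.name}: must be a basic disk, found {d.disk_type}")
        if is_64bit and d.partition_style.upper() != "MBR":
            problems.append(f"{d.name}: 64-bit systems require MBR, found {d.partition_style}")
        if d.file_system.upper() != "NTFS":
            problems.append(f"{d.name}: must be formatted as NTFS, found {d.file_system}")
    return problems


if __name__ == "__main__":
    inventory = [SharedDisk("Q", "basic", "MBR", "NTFS"),
                 SharedDisk("R", "dynamic", "GPT", "NTFS")]
    for problem in check_shared_disks(inventory, is_64bit=True):
        print(problem)
```

The check reports every violation rather than stopping at the first one, so a single run lists everything to fix before the Cluster service is installed.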

Cluster Installation

Installation Overview

During the installation process, some nodes will be shut down while others are being installed. This step helps guarantee that data on disks attached to the shared bus is not lost or corrupted, which can happen when multiple nodes simultaneously try to write to a disk that is not protected by the cluster software. The default behavior of how new disks are mounted has been changed in Windows Server 2003 from the behavior in the Microsoft Windows 2000 operating system. In Windows Server 2003, logical disks that are not on the same bus as the boot partition will not be automatically mounted and assigned a drive letter. This helps ensure that the server will not mount drives that could possibly belong to another server in a complex SAN environment. Although the drives will not be mounted, it is still recommended that you follow the procedures below to be certain the shared disks will not become corrupted.

Use the table below to determine which nodes and storage devices should be turned on during each step.

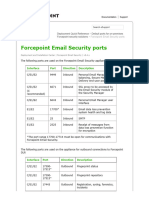

The steps in this guide are for a two-node cluster. However, if you are installing a cluster with more than two nodes, the Node 2 column lists the required state of all other nodes.

| Step | Node 1 | Node 2 | Storage | Comments |
|---|---|---|---|---|
| Setting up networks | On | On | Off | Verify that all storage devices on the shared bus are turned off. Turn on all nodes. |
| Setting up shared disks | On | Off | On | Shut down all nodes. Turn on the shared storage, then turn on the first node. |
| Verifying disk configuration | Off | On | On | Turn off the first node, then turn on the second node. Repeat for nodes 3 and 4 if necessary. |
| Configuring the first node | On | Off | On | Turn off all nodes; turn on the first node. |
| Configuring the second node | On | On | On | Turn on the second node after the first node is successfully configured. Repeat for nodes 3 and 4 as necessary. |
| Post-installation | On | On | On | All nodes should be on. |
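The power table can also be treated as data and checked against the rule it encodes: until the Cluster service is configured on the first node, no more than one node may be running while the shared storage is on. This Python sketch is illustrative only; the tuple layout, `POWER_SEQUENCE`, and `unsafe_steps` are hypothetical names, and the "other nodes" flag stands for every node after node 1.

```python
from typing import List, Tuple

# (step, node 1 on, other nodes on, shared storage on), copied from the table.
PowerStep = Tuple[str, bool, bool, bool]

POWER_SEQUENCE: List[PowerStep] = [
    ("Setting up networks",          True,  True,  False),
    ("Setting up shared disks",      True,  False, True),
    ("Verifying disk configuration", False, True,  True),
    ("Configuring the first node",   True,  False, True),
    ("Configuring the second node",  True,  True,  True),
    ("Post-installation",            True,  True,  True),
]


def unsafe_steps(sequence: List[PowerStep]) -> List[str]:
    """Return the steps in which the shared storage is on, more than one node
    is running, and the Cluster service is not yet configured on any node."""
    cluster_configured = False
    unsafe = []
    for step, node1_on, others_on, storage_on in sequence:
        nodes_on = int(node1_on) + int(others_on)
        if storage_on and nodes_on > 1 and not cluster_configured:
            unsafe.append(step)
        if step == "Configuring the first node":
            # From here on, the Cluster service protects the shared disks.
            cluster_configured = True
    return unsafe
```

Running `unsafe_steps(POWER_SEQUENCE)` on the table as written returns an empty list; if you adapt the sequence for your own hardware, a non-empty result points at the step that risks disk corruption.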

Several steps must be taken before configuring the Cluster service software. These steps are:

Installing the Windows Server 2003 Enterprise Edition or Windows Server 2003 Datacenter Edition operating system on each node.

Setting up networks.

Setting up disks.

Perform these steps on each cluster node before proceeding with the installation of the cluster service on the first node.

To configure the cluster service, you must be logged on with an account that has administrative permissions to all nodes. Each node must be a member of the same domain. If you choose to make one of the nodes a domain controller, have another domain controller available on the same subnet to eliminate a single point of failure and enable maintenance on that node.

Installing the Windows Server 2003 Operating System

Refer to the documentation you received with the Windows Server 2003 operating system package to install the system on each node in the cluster.

Before configuring the cluster service, you must be logged on locally with a domain account that is a member of the local administrators group.

Note: The installation will fail if you attempt to join a node to a cluster that has a blank password for the local administrator account. For security reasons, Windows Server 2003 prohibits blank administrator passwords.

Setting Up Networks

Each cluster node requires at least two network adapters with two or more independent networks, to avoid a single point of failure. One is to connect to a public network, and one is to connect to a private network consisting of cluster nodes only. Servers with multiple network adapters are referred to as "multi-homed." Because multi-homed servers can be problematic, it is critical that you follow the network configuration recommendations outlined in this document.

Microsoft requires that you have two Peripheral Component Interconnect (PCI) network adapters in each node to be certified on the Hardware Compatibility List (HCL) and supported by Microsoft Product Support Services. Configure one of the network adapters on your production network with a static IP address, and configure the other network adapter on a separate network with another static IP address on a different subnet for private cluster communication.

Communication between server cluster nodes is critical for smooth cluster operations. Therefore, you must ensure that the networks you use for cluster communication are configured optimally and follow all hardware compatibility list requirements.

The private network adapter is used for node-to-node communication, cluster status information, and cluster management. Each node's public network adapter connects the cluster to the public network where clients reside and should be configured as a backup route for internal cluster communication. To do so, set the role of the private network to "Internal Cluster Communications Only" and the role of the public network to "All Communications" for the Cluster service.

Additionally, each cluster network must fail independently of all other cluster networks. This means that two cluster networks must not have a component in common that can cause both to fail simultaneously. For example, the use of a multiport network adapter to attach a node to two cluster networks would not satisfy this requirement in most cases because the ports are not independent.
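The independence requirement is a set-intersection test: two networks conflict if the sets of components they depend on overlap. The following Python sketch assumes you can list, per node, which physical components each cluster network uses; the function name and the component labels in the example are hypothetical.

```python
from itertools import combinations
from typing import Dict, List, Set, Tuple


def shared_failure_points(networks: Dict[str, Set[str]]) -> List[Tuple[str, str, Set[str]]]:
    """networks maps a cluster network name to the set of components it depends
    on (adapter cards, ports, cables, hubs). Return every pair of networks that
    shares a component, together with the components they share."""
    conflicts = []
    for (a, parts_a), (b, parts_b) in combinations(sorted(networks.items()), 2):
        common = parts_a & parts_b
        if common:
            conflicts.append((a, b, common))
    return conflicts


# Hypothetical inventory for one node: both networks use ports on the same
# multiport adapter card, so one card failure takes down both networks.
node1 = {
    "Public": {"multiport-card-1", "public-switch"},
    "Private": {"multiport-card-1", "crossover-cable"},
}
```

For `node1`, the function reports the shared `multiport-card-1`, which is exactly the multiport-adapter case described above.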

To eliminate possible communication issues, remove all unnecessary network traffic from the network adapter that is set to Internal Cluster communications only (this adapter is also known as the heartbeat or private network adapter).

To ensure correct network connections, private network adapters must be on a different logical network from the public adapters. This can be accomplished by using a crossover cable in a two-node configuration or a dedicated dumb hub in a configuration of more than two nodes. Do not use a switch, smart hub, or any other routing device for the heartbeat network.

Note: Cluster heartbeats cannot be forwarded through a routing device because their Time to Live (TTL) is set to 1.

The public network adapters must be connected only to the public network. If you have a virtual LAN, the latency between the nodes must be less than 500 milliseconds (ms). Also, in Windows Server 2003, heartbeats in Server Clustering have been changed to multicast; therefore, you may want to make a MADCAP server available to assign the multicast addresses. For additional information, see the following article in the Microsoft Knowledge Base:

307962 Multicast Support Enabled for the Cluster Heartbeat

Figure 1 below outlines a four-node cluster configuration.
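The two numeric constraints on the heartbeat path, latency below 500 ms on a virtual LAN and a multicast heartbeat address, can be checked against measurements you already have. This Python sketch assumes the latency samples come from whatever measurement tool you use; `heartbeat_network_ok` and the example address 239.255.1.1 are hypothetical. It does not emulate the Cluster service.

```python
import ipaddress
from typing import Optional, Sequence

MAX_VLAN_LATENCY_MS = 500  # latency limit between nodes on a virtual LAN


def heartbeat_network_ok(latency_samples_ms: Sequence[float],
                         multicast_address: Optional[str] = None) -> bool:
    """Return True if every measured node-to-node latency is below the limit
    and, when an address is given, it is a multicast address."""
    if not latency_samples_ms:
        return False  # no measurements means nothing has been verified
    if max(latency_samples_ms) >= MAX_VLAN_LATENCY_MS:
        return False
    if multicast_address is not None:
        if not ipaddress.ip_address(multicast_address).is_multicast:
            return False
    return True


if __name__ == "__main__":
    print(heartbeat_network_ok([1.2, 3.4, 2.0], "239.255.1.1"))
```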

Figure 1

Connections for a four-node cluster.

General Network Configuration:

Note: This guide assumes that you are running the default Start menu. The steps may be slightly different if you are running the Classic Start menu. Also, which network adapter is private and which is public depends upon your wiring. For the purposes of this white paper, the first network adapter (Local Area Connection) is connected to the public network, and the second network adapter (Local Area Connection 2) is connected to the private cluster network. Your network may be different.

To Rename the Local Area Network Icons

It is recommended that you change the names of the network connections for clarity. For example, you might want to change the name of Local Area Connection 2 to something like Private. Renaming will help you identify a network and correctly assign its role.

1. Click Start, point to Control Panel, right-click Network Connections, and then click Open.
2. Right-click the Local Area Connection 2 icon.
3. Click Rename.
4. Type Private in the text box, and then press ENTER.
5. Repeat steps 1 through 3, and then rename the public network adapter as Public.

Figure 2

Renamed icons in the Network Connections window.

6. The renamed icons should look like those in Figure 2 above. Close the Network Connections window. The new connection names will appear in Cluster Administrator and automatically replicate to all other cluster nodes as they are brought online.

To Configure the Binding Order Networks on All Nodes

1. Click Start, point to Control Panel, right-click Network Connections, and then click Open.
2. On the Advanced menu, click Advanced Settings.
3. In the Connections box, make sure that your bindings are in the following order, and then click OK:
   a. Public
   b. Private
   c. Remote Access Connections

Configuring the Private Network Adapter

1. Right-click the network connection for your heartbeat adapter, and then click Properties.
2. On the General tab, make sure that only the Internet Protocol (TCP/IP) check box is selected, as shown in Figure 3 below. Click to clear the check boxes for all other clients, services, and protocols.

Figure 3

Click to select only the Internet Protocol check box in the Private Properties dialog box.

3. If you have a network adapter that is capable of transmitting at multiple speeds, you should manually specify a speed and duplex mode. Do not use an auto-select setting for speed, because some adapters may drop packets while determining the speed. The speed for the network adapters must be hard set (manually set) to be the same on all nodes according to the card manufacturer's specification. If you are not sure of the supported speed of your card and connecting devices, Microsoft recommends you set all devices on that path to 10 megabits per second (Mbps) and Half Duplex, as shown in Figure 4 below. The amount of information that is traveling across the heartbeat network is small, but latency is critical for communication. This configuration will provide enough bandwidth for reliable communication. All network adapters in a cluster attached to the same network must be configured identically to use the same Duplex Mode, Link Speed, Flow Control, and so on. Contact your adapter's manufacturer for specific information about appropriate speed and duplex settings for your network adapters.
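Because every adapter on the same cluster network must be configured identically and none may be left on auto-select, a comparison across nodes catches drift quickly. The following Python sketch assumes you have recorded each node's adapter settings as a dictionary; the setting keys (`speed_mbps`, `duplex`, `flow_control`) and the function name are hypothetical, not names used by Windows.

```python
from typing import Any, Dict

NodeSettings = Dict[str, Any]


def mismatched_settings(adapters: Dict[str, NodeSettings]) -> Dict[str, Dict[str, Any]]:
    """adapters maps a node name to the NIC settings of its adapter on one
    cluster network. Return {setting: {node: value}} for every setting that
    differs between nodes or is left on an auto-select value."""
    keys = set()
    for settings in adapters.values():
        keys.update(settings.keys())
    problems = {}
    for key in sorted(keys):
        # A setting missing on a node shows up as None and counts as a mismatch.
        values = {node: settings.get(key) for node, settings in adapters.items()}
        differs = len(set(values.values())) > 1
        auto = any(str(v).lower().startswith("auto") for v in values.values())
        if differs or auto:
            problems[key] = values
    return problems


heartbeat = {
    "NODE1": {"speed_mbps": 10, "duplex": "Half", "flow_control": "Off"},
    "NODE2": {"speed_mbps": 10, "duplex": "Half", "flow_control": "Off"},
}
```

An empty result for `heartbeat` means the two private adapters match; any key in the result names the setting to correct on the nodes listed.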

Figure 4

Setting the speed and duplex for all adapters.

Note: Microsoft does not recommend that you use any type of fault-tolerant adapter or "Teaming" for the heartbeat. If you require redundancy for your heartbeat connection, use multiple network adapters set to Internal Communication Only and define their network priority in the Cluster configuration. Issues have been seen with early multi-ported network adapters, so verify that your firmware and driver are at the most current revision if you use this technology. Contact your network adapter manufacturer for information about compatibility on a server cluster. For additional information, see the following article in the Microsoft Knowledge Base:

254101 Network Adapter Teaming and Server Clustering

4. Click Internet Protocol (TCP/IP), and then click Properties.
5. On the General tab, verify that you have selected a static IP address that is not on the same subnet or network as any other public network adapter. It is recommended that you put the private network adapter in one of the following private network ranges:

10.0.0.0 through 10.255.255.255 (Class A)

172.16.0.0 through 172.31.255.255 (Class B)

192.168.0.0 through 192.168.255.255 (Class C)
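These three ranges are the RFC 1918 private blocks, 10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16, so a candidate heartbeat address can be tested with Python's standard `ipaddress` module. The sketch lists the blocks explicitly because the module's `is_private` attribute is broader: it is also true for loopback and link-local addresses. The function name is hypothetical.

```python
import ipaddress

# The three private ranges listed above, written as CIDR blocks.
PRIVATE_RANGES = [
    ipaddress.ip_network("10.0.0.0/8"),      # 10.0.0.0 through 10.255.255.255
    ipaddress.ip_network("172.16.0.0/12"),   # 172.16.0.0 through 172.31.255.255
    ipaddress.ip_network("192.168.0.0/16"),  # 192.168.0.0 through 192.168.255.255
]


def in_private_range(address: str) -> bool:
    """Return True if address falls inside one of the three private ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in net for net in PRIVATE_RANGES)


if __name__ == "__main__":
    for candidate in ("10.10.10.10", "172.32.0.1"):
        print(candidate, in_private_range(candidate))
```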

An example of a good IP address to use for the private adapters is 10.10.10.10 on node 1 and 10.10.10.11 on node 2 with a subnet mask of 255.0.0.0, as shown in Figure 5 below. Be sure that this is a completely different IP address scheme than the one used for the public network.

Note: For additional information about valid IP addressing for a private network, see the following article in the Microsoft Knowledge Base:

142863 Valid IP Addressing for a Private Network
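The example scheme can be checked mechanically: all private adapters should land on one subnet, that subnet must not overlap any public subnet, and no address may repeat. The following Python sketch uses the standard `ipaddress` module; the public addresses 192.0.2.10 and 192.0.2.11 (a documentation range) and the function name are hypothetical.

```python
import ipaddress
from typing import List


def private_config_ok(private_ifaces: List[str], public_ifaces: List[str]) -> bool:
    """Each argument lists 'address/mask' strings, one per node. Return True if
    the private adapters share one subnet, that subnet overlaps no public
    subnet, and every address is unique."""
    private = [ipaddress.ip_interface(s) for s in private_ifaces]
    public = [ipaddress.ip_interface(s) for s in public_ifaces]
    private_nets = {iface.network for iface in private}
    if len(private_nets) != 1:
        return False  # private adapters must be on one logical network
    (private_net,) = private_nets
    if any(private_net.overlaps(iface.network) for iface in public):
        return False  # private scheme must differ from the public one
    addresses = [iface.ip for iface in private + public]
    return len(addresses) == len(set(addresses))


if __name__ == "__main__":
    print(private_config_ok(["10.10.10.10/255.0.0.0", "10.10.10.11/255.0.0.0"],
                            ["192.0.2.10/24", "192.0.2.11/24"]))
```

`ip_interface` accepts either a dotted mask such as 255.0.0.0 or a prefix length such as /8, so the addresses can be entered exactly as they appear in the TCP/IP properties dialog box.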

Figure 5

An example of an IP address to use for private adapters.

6. Verify that there are no values defined in the Default Gateway box or under Use the Following DNS server addresses.
7. Click the Advanced button.
8. On the DNS tab, verify that no values are defined. Make sure that the Register this connection's addresses in DNS and Use this connection's DNS suffix in DNS registration check boxes are cleared.
9. On the WINS tab, verify that there are no values defined. Click Disable NetBIOS over TCP/IP as shown in Figure 6 below.

Figure 6

Verify that no values are defined on the WINS tab.

10. When you close the dialog box, you may receive the following prompt: "This connection has an empty primary WINS address. Do you want to continue?" If you receive this prompt, click Yes.
11. Complete steps 1 through 10 on all other nodes in the cluster with different static IP addresses.
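Steps 6 through 9 amount to a list of settings that must be empty or cleared on the heartbeat adapter. This Python sketch turns them into a checklist over a dictionary you fill in by reading the dialog boxes; the dictionary keys and the function name are hypothetical, and nothing here reads settings from Windows.

```python
from typing import Any, Dict, List


def private_adapter_problems(cfg: Dict[str, Any]) -> List[str]:
    """cfg describes the heartbeat adapter's TCP/IP settings as read from the
    dialog boxes. Missing keys are treated as unset or unchecked."""
    problems = []
    if cfg.get("default_gateway"):
        problems.append("Default Gateway must be empty")
    if cfg.get("dns_servers"):
        problems.append("no DNS server addresses should be defined")
    if cfg.get("register_in_dns"):
        problems.append("clear 'Register this connection's addresses in DNS'")
    if cfg.get("use_dns_suffix"):
        problems.append("clear 'Use this connection's DNS suffix in DNS registration'")
    if cfg.get("wins_servers"):
        problems.append("no WINS addresses should be defined")
    if not cfg.get("netbios_disabled"):
        problems.append("select 'Disable NetBIOS over TCP/IP'")
    return problems


if __name__ == "__main__":
    print(private_adapter_problems({"default_gateway": "10.0.0.1"}))
```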

Configuring the Public Network Adapter

Note: If IP addresses are obtained via DHCP, access to cluster nodes may be unavailable if the DHCP server is inaccessible. For this reason, static IP addresses are required for all interfaces on a server cluster. Keep in mind that the cluster service will only recognize one network interface per subnet. If you need assistance with TCP/IP addressing in Windows Server 2003, please see the Online Help.
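The two rules in this note, static addresses only and one interface per subnet, can be checked per node by grouping interfaces by network. The following Python sketch assumes an inventory of (connection name, address/prefix, is-static) tuples; the tuple layout, function name, and example addresses are hypothetical.

```python
import ipaddress
from collections import defaultdict
from typing import List, Tuple

Interface = Tuple[str, str, bool]  # (connection name, "address/prefix", is_static)


def interface_problems(interfaces: List[Interface]) -> List[str]:
    """Check one node's adapters: every address must be static, and no two
    adapters may share a subnet, because the cluster service recognizes only
    one network interface per subnet."""
    problems = []
    by_subnet = defaultdict(list)
    for name, address, is_static in interfaces:
        if not is_static:
            problems.append(f"{name}: address is from DHCP; use a static address")
        by_subnet[ipaddress.ip_interface(address).network].append(name)
    for subnet, names in by_subnet.items():
        if len(names) > 1:
            problems.append(f"{subnet}: shared by {', '.join(names)}")
    return problems


if __name__ == "__main__":
    node = [("Public", "192.0.2.10/24", True), ("Private", "10.10.10.10/8", True)]
    print(interface_problems(node))
```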

Verifying Connectivity and Name Resolution

To verify that the private and public networks are communicating properly, ping all IP addresses from each node. You should be able to ping all IP addresses, locally and on the remote nodes.

To verify name resolution, ping each node from a client using the node's machine name instead of its IP address. It should only return the IP address for the public network. You may also want to try a PING -a command to do a reverse lookup on the IP addresses.
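The verification amounts to a full matrix: every node pings every cluster address, and a client pings each node by name and reverse-resolves each address. This Python sketch only generates that list of `ping` and `ping -a` commands so none are missed; it does not run them, and the node names and addresses in the example are hypothetical.

```python
from typing import Dict, List, Tuple


def connectivity_plan(nodes: Dict[str, List[str]]) -> Tuple[Dict[str, List[str]], List[str]]:
    """nodes maps each node name to its IP addresses (public and private).
    Return the commands to run on each node and the commands to run from a client."""
    all_ips = [ip for addresses in nodes.values() for ip in addresses]
    # On every node: ping every cluster address, local and remote.
    per_node = {node: [f"ping {ip}" for ip in all_ips] for node in nodes}
    # From a client: ping each node by name (it should resolve to the public
    # address only), then reverse-resolve each address with ping -a.
    client = [f"ping {node}" for node in nodes] + [f"ping -a {ip}" for ip in all_ips]
    return per_node, client


if __name__ == "__main__":
    cluster = {"NODE1": ["192.0.2.10", "10.10.10.10"],
               "NODE2": ["192.0.2.11", "10.10.10.11"]}
    on_nodes, from_client = connectivity_plan(cluster)
    for node, commands in on_nodes.items():
        print(node, commands)
    print("client", from_client)
```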

Verifying Domain Membership

All nodes in the cluster must be members of the same domain and be able to access a domain controller and a DNS server. They can be configured as member servers or domain controllers. You should have at least one domain controller on the same network segment as the cluster. For high availability, another domain controller should also be available to remove a single point of failure. In this guide, all nodes are configured as member servers.

There are instances where the nodes may be deployed in an environment where there are no pre-existing Microsoft Windows NT 4.0 domain controllers or Windows Server 2003 domain controllers. This scenario requires at least one of the cluster nodes to be configured as a domain controller. However, in a two-node server cluster, if one node is a domain controller, then the other node also must be a domain controller. In a four-node cluster implementation, it is not necessary to configure all four nodes as domain controllers. However, when following a "best practices" model and having at least one backup domain controller, at least one of the remaining three nodes should be configured as a domain controller. A cluster node must be promoted to a domain controller by using the DCPromo tool before the cluster service is configured.

The dependence in Windows Server 2003 on DNS further requires that every node that is a domain controller also must be a DNS server if another DNS server that supports dynamic updates and/or SRV records is not available (Active Directory integrated zones recommended).

The following issues should be considered when deploying cluster nodes as domain controllers:

If one cluster node in a two-node cluster is a domain controller, the other node must be a domain controller.

There is overhead associated with running a domain controller. An idle domain controller can use anywhere between 130 and 140 MB of RAM, which includes having the Clustering service running. There is also increased network traffic from replication, because these domain controllers have to replicate with other domain controllers in the domain and across domains.

If the cluster nodes are the only domain controllers, then each must be a DNS server as well. They should point to each other for primary DNS resolution and to themselves for secondary resolution.

The first domain controller in the forest/domain will take on all Operations Master Roles. You can redistribute these roles to any node. However, if a node fails, the Operations Master Roles assumed by that node will be unavailable. Therefore, it is recommended that you do not run Operations Master Roles on any cluster node. This includes Schema Master, Domain Naming Master, Relative ID Master, PDC Emulator, and Infrastructure Master. These functions cannot be clustered for high availability with failover.

Clustering other applications such as Microsoft SQL Server or Microsoft Exchange Server in a scenario where the nodes are also domain controllers may not be optimal due to resource constraints. This configuration should be thoroughly tested in a lab environment before deployment.

Because of the complexity and overhead involved in making cluster nodes domain controllers, it is recommended that all nodes be member servers.
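Several of these considerations are simple placement rules that can be checked before promoting any node. The following Python sketch encodes three of them: the two-node rule, the need for at least one domain controller, and keeping Operations Master roles off cluster nodes. It also produces the cross-pointing DNS order described above. All function names and inputs are hypothetical, and node names stand in for the DNS server addresses you would actually configure.

```python
from typing import Dict, List, Sequence

OPERATIONS_MASTER_ROLES = ("Schema Master", "Domain Naming Master",
                           "Relative ID Master", "PDC Emulator",
                           "Infrastructure Master")


def dc_placement_problems(node_is_dc: Dict[str, bool],
                          role_holders: Dict[str, str],
                          other_dcs_exist: bool) -> List[str]:
    """node_is_dc maps each cluster node to whether it is a domain controller;
    role_holders maps an Operations Master role to the server that holds it."""
    problems = []
    dc_nodes = [node for node, is_dc in node_is_dc.items() if is_dc]
    if len(node_is_dc) == 2 and len(dc_nodes) == 1:
        problems.append("two-node cluster: if one node is a DC, both must be")
    if not other_dcs_exist and not dc_nodes:
        problems.append("no domain controller available: promote a node with DCPromo")
    for role in OPERATIONS_MASTER_ROLES:
        holder = role_holders.get(role)
        if holder in node_is_dc:
            problems.append(f"{role} is held by cluster node {holder}")
    return problems


def dns_server_order(dc_nodes: Sequence[str]) -> Dict[str, List[str]]:
    """For two nodes that are the only DCs: each uses the other as its primary
    DNS server and itself as its secondary."""
    a, b = dc_nodes
    return {a: [b, a], b: [a, b]}
```

The four-node "best practices" guidance is deliberately left out, because the text recommends rather than requires it.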

Setting Up a Cluster User Account

The Cluster service requires a domain user account that is a member of the Local Administrators group on each node, under which the Cluster service can run. Because setup requires a user name and password, this user account must be created before configuring the Cluster service. This user account should be dedicated only to running the Cluster service, and should not belong to an individual.

Note: The cluster service account does not need to be a member of the Domain Administrators group. For security reasons, granting domain administrator rights to the cluster service account is not recommended.

The cluster service account requires the following rights to function properly on all nodes in the cluster. The Cluster Configuration Wizard grants the following rights automatically:

Act as part of the operating system

Adjust memory quotas for a process

Back up files and directories

Increase scheduling priority

Log on as a service

Restore files and directories
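If you audit the account on each node, for example after re-creating it manually, the rights list above and the group guidance can be compared against what you observe. This Python sketch takes the granted rights and group memberships as plain strings you collect yourself; it does not query Windows, and `account_problems` is a hypothetical name.

```python
from typing import Iterable, List

# Rights the Cluster Configuration Wizard grants to the cluster service account.
REQUIRED_RIGHTS = frozenset({
    "Act as part of the operating system",
    "Adjust memory quotas for a process",
    "Back up files and directories",
    "Increase scheduling priority",
    "Log on as a service",
    "Restore files and directories",
})


def account_problems(granted_rights: Iterable[str], groups: Iterable[str]) -> List[str]:
    """granted_rights: user rights held by the account on one node.
    groups: groups the account belongs to, as seen from that node."""
    groups = set(groups)
    problems = [f"missing right: {r}" for r in sorted(REQUIRED_RIGHTS - set(granted_rights))]
    if "Administrators" not in groups:
        problems.append("not a member of the local Administrators group")
    if "Domain Admins" in groups:
        problems.append("member of Domain Admins, which is not recommended")
    return problems
```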

For additional information, see the following article in the Microsoft Knowledge Base:

269229 How to Manually Re-Create the Cluster Service Account

To Set Up a Cluster User Account

1. Click Start, point to All Programs, point to Administrative Tools, and then click Active Directory Users and Computers.
2. Click the plus sign (+) to expand the domain if it is not already expanded.
3. Right-click Users, point to New, and then click User.
4. Type the cluster name, as shown in Figure 7 below, and then click Next.

Figure 7

Type the cluster name.

5. Set the password settings to User Cannot Change Password and Password Never Expires. Click Next, and then click Finish to create this user.

Note: If your administrative security policy does not allow the use of passwords that never expire, you must renew the password and update the cluster service configuration on each node before password expiration. For additional information, see the following article in the Microsoft Knowledge Base:

305813 How to Change the Cluster Service Account Password

6. Right-click Cluster in the left pane of the Active Directory Users and Computers snap-in, and then click Properties on the shortcut menu.
7. Click Add Members to a Group.
8. Click Administrators, and then click OK. This gives the new user account administrative privileges on this computer.
9. Quit the Active Directory Users and Computers snap-in.

Setting Up Shared Disks

Warning: To avoid corrupting the cluster disks, make sure that Windows Server 2003 and the Cluster service are installed, configured, and running on at least one node before you start an operating system on another node. It is critical to never have more than one node on until the Cluster service is configured. To proceed, turn off all nodes. Turn on the shared storage devices, and then turn on node 1.

About the Quorum Disk

The quorum disk is used to store cluster configuration database checkpoints and log files that help manage the cluster and maintain consistency. The following quorum disk procedures are recommended:

Create a logical drive with a minimum size of 50 MB to be used as a quorum disk; 500 MB is optimal for NTFS.

Dedicate a separate disk as a quorum resource.
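The two quorum recommendations, a minimum size with a larger optimal size and a dedicated disk, can be applied to a candidate volume as a simple classification. The following Python sketch uses the sizes stated above; the function name and the `other_uses` parameter are hypothetical.

```python
from typing import Sequence

MIN_QUORUM_MB = 50           # minimum quorum volume size
RECOMMENDED_QUORUM_MB = 500  # optimal size for NTFS


def quorum_disk_status(size_mb: int, other_uses: Sequence[str] = ()) -> str:
    """Classify a candidate quorum volume. other_uses lists anything other
    than cluster management that is stored on the same disk."""
    if size_mb < MIN_QUORUM_MB:
        return "too small"
    if other_uses:
        return "not dedicated"
    if size_mb < RECOMMENDED_QUORUM_MB:
        return "usable, but below the 500 MB recommended for NTFS"
    return "ok"
```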

Important: A quorum disk failure could cause the entire cluster to fail; therefore, it is strongly recommended that you use a volume on a hardware RAID array. Do not use the quorum disk for anything other than cluster management.

The quorum resource plays a crucial role in the operation of the cluster. In every cluster, a single resource is designated as the quorum resource. A quorum resource can be any Physical Disk resource with the following functionality:

It replicates the cluster registry to all other nodes in the server cluster. By default, the cluster registry is stored in the following location on each node: %SystemRoot%\Cluster\Clusdb. The cluster registry is then replicated to the MSCS\Chkxxx.tmp file on the quorum drive. These files are exact copies of each other. The MSCS\Quolog.log file is a transaction log that maintains a record of all changes to the checkpoint file. This means that nodes that were offline can have these changes appended when they rejoin the cluster.
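The checkpoint-plus-log arrangement is what lets a returning node catch up: it starts from a known state and replays only the logged changes it missed. The following Python sketch is a deliberately simplified toy model of that idea. The class, the sequence numbers, and the key names are hypothetical, and the sketch does not reflect the actual format of the Chkxxx.tmp or Quolog.log files.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Entry = Tuple[int, str, str]  # (sequence number, registry key, new value)


@dataclass
class QuorumStore:
    """Toy model of the quorum drive: a checkpoint snapshot plus an
    append-only log of the changes made after it."""
    checkpoint: Dict[str, str] = field(default_factory=dict)
    log: List[Entry] = field(default_factory=list)

    def record(self, key: str, value: str) -> int:
        """Append one change to the log and return its sequence number."""
        seq = len(self.log) + 1
        self.log.append((seq, key, value))
        return seq


def rejoin(store: QuorumStore, node_copy: Optional[Dict[str, str]] = None,
           last_seen_seq: int = 0) -> Tuple[Dict[str, str], int]:
    """Bring a node that was offline up to date. It starts from its own copy
    (or from the checkpoint if it has none) and applies, in order, every
    logged change newer than the last one it saw."""
    registry = dict(store.checkpoint if node_copy is None else node_copy)
    for seq, key, value in store.log:
        if seq > last_seen_seq:
            registry[key] = value
    latest = store.log[-1][0] if store.log else last_seen_seq
    return registry, latest
```

A node that saw change 1 and then went offline calls `rejoin(store, its_copy, last_seen_seq=1)` and receives only the later changes; a node with no copy replays the whole log on top of the checkpoint and ends in the same state.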

If there is a loss of communication between cluster nodes, the challenge response protocol is initiated to prevent a "split brain" scenario. In this situation, the owner of the quorum disk resource becomes the only owner of the cluster and all the resources. The owner then makes the resources available for clients. When the node that owns the quorum disk functions incorrectly, the surviving nodes arbitrate to take ownership of the device. For additional information, see the following article in the Microsoft Knowledge Base:

309186 How the Cluster Service Takes Ownership of a Disk on the Shared Bus

During the Cluster service installation, you must provide the drive letter for the quorum disk. The letter Q is commonly used as a standard, and Q is used in the example.

To Configure Shared Disks

1. Make sure that only one node is turned on.
2. Right-click My Computer, click Manage, and then expand Storage.
3. Double-click Disk Management.
4. If you connect a new drive, then it automatically starts the Write Signature and Upgrade Disk Wizard. If this happens, click Next to step through the wizard.
Note: The wizard automatically sets the disk to dynamic. To reset the disk to basic, right-click Disk n (where n specifies the disk that you are working with), and then click Revert to Basic Disk.
5. Right-click unallocated disk space.
6. Click New Partition. The New Partition Wizard begins.
7. Click Next.
8. Select the Primary Partition partition type. Click Next.
9. The default is set to maximum size for the partition size. Click Next. (Multiple logical disks are recommended over multiple partitions on one disk.)
10. Use the drop-down box to change the drive letter. Use a drive letter that is farther down the alphabet than the default enumerated letters. Commonly, the drive letter Q is used for the quorum disk, then R, S, and so on for the data disks. For additional information, see the following article in the Microsoft Knowledge Base:

318534 Best Practices for Drive-Letter Assignments on a Server Cluster

Note: If you are planning on using volume mount points, do not assign a drive letter to the disk. For additional information, see the following article in the Microsoft Knowledge Base:

280297 How to Configure Volume Mount Points on a Clustered Server

11. Format the partition using NTFS. In the Volume Label box, type a name for the disk. For example, Drive Q, as shown in Figure 8 below. It is critical to assign drive labels for shared disks, because this can dramatically reduce troubleshooting time in the event of a disk recovery situation.

Figure 8

It is critical to assign drive labels for shared disks.
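The drive-letter convention above (Q for the quorum disk, then R, S, and so on for data disks) can be planned before you open Disk Management. A minimal Python sketch, assuming you already know which letters the nodes use for local disks, CD/DVD drives, and mapped drives; the function name and disk labels are hypothetical.

```python
import string


def plan_drive_letters(in_use, data_disks, quorum_letter="Q"):
    """Return {disk label: drive letter}: the quorum letter first, then free letters after it.

    in_use: letters already taken on any node (local disks, CD/DVD drives, mapped drives).
    data_disks: labels of the shared data disks, in the order you want letters assigned.
    """
    quorum_letter = quorum_letter.upper()
    used = {letter.upper() for letter in in_use}
    if quorum_letter in used:
        raise ValueError(f"{quorum_letter}: is already in use on a node")
    # Only letters farther down the alphabet than the quorum letter are candidates.
    free = [c for c in string.ascii_uppercase if c > quorum_letter and c not in used]
    if len(free) < len(data_disks):
        raise ValueError("not enough free drive letters after the quorum letter")
    plan = {"Quorum": quorum_letter}
    plan.update(zip(data_disks, free))
    return plan
```

Checking the letters in use on every node, not just the first, matters because the same letter must be free on whichever node later owns the disk.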

If you are installing a 64-bit version of Windows Server 2003, verify that all disks are formatted as MBR.

GUID Partition Table (GPT) disks are not supported as clustered disks. For additional information, see the following article in the Microsoft Knowledge Base:

284134 Server Clusters Do Not Support GPT Shared Disks

Verify that all shared disks are formatted as NTFS and designated as MBR Basic.

To Verify Disk Access and Functionality

1. Start Windows Explorer.
2. Right-click one of the shared disks (such as Drive Q:\), click New, and then click Text Document.
3. Verify that you can successfully write to the disk and that the file was created.
4. Select the file, and then press the Del key to delete it from the clustered disk.
5. Repeat steps 1 through 4 for all clustered disks to verify they can be correctly accessed from the first node.
6. Turn off the first node, turn on the second node, and repeat steps 1 through 4 to verify disk access and functionality. Assign drive letters to match the corresponding drive labels. Repeat again for any additional nodes.

Verify that all nodes can read from and write to the disks, turn off all nodes except the first one, and then continue with this white paper.
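Steps 1 through 4 of the disk-access check can also be scripted if Python is available on a node. This sketch assumes the shared volume is reachable at a root path such as Q:\ (a hypothetical example) and only checks that a file can be created, read back, and deleted; it does not replace the manual per-node verification, and only the node that is currently turned on should run it.

```python
import uuid
from pathlib import Path


def verify_disk_access(root):
    """Create, read back, and delete a probe file on a volume; True if all three work."""
    probe = Path(root) / f"cluster-probe-{uuid.uuid4().hex}.txt"   # unique name, avoids clobbering
    payload = "cluster disk access test\n"
    try:
        probe.write_text(payload)                  # step 2: create a text document
        readable = probe.read_text() == payload    # step 3: confirm the write landed
    except OSError:
        return False                               # missing volume, access denied, etc.
    finally:
        probe.unlink(missing_ok=True)              # step 4: delete it again (Python 3.8+)
    return readable and not probe.exists()
```

On each node in turn, you could call verify_disk_access for every clustered drive root and expect True for all of them.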

Configuring the Cluster Service

You must supply all initial cluster configuration information in the first installation phase. This is accomplished using the Cluster Configuration Wizard.

As seen in the flow chart, the Form (Create a new Cluster) and Join (Add nodes) paths differ in places, but they share several pages: Credential Login, Analyze, and Re-Analyze and Start Service are the same on both. There are minor differences in the following pages: Welcome, Select Computer, and Cluster Service Account. In the next two sections, you will step through the wizard pages presented on each of these configuration paths. In the third section, after you follow the step-through sections, this white paper describes in detail the Analyze, Re-Analyze, and Start Service pages, and what the information provided in these screens means.

Note: During Cluster service configuration on node 1, you must turn off all other nodes. All shared storage devices should be turned on.

To Configure the First Node

1. Click Start, click All Programs, click Administrative Tools, and then click Cluster Administrator.
2. When prompted by the Open Connection to Cluster Wizard, click Create new cluster in the Action drop-down list, as shown in Figure 9 below.

Figure 9

The Action drop-down list.

3. Verify that you have the necessary prerequisites to configure the cluster, as shown in Figure 10 below. Click Next.

Figure 10

A list of prerequisites is part of the New Server Cluster Wizard Welcome page.

4. Type a unique NetBIOS name for the cluster (up to 15 characters), and then click Next. (In the example shown in Figure 11 below, the cluster is named MyCluster.) Adherence to DNS naming rules is recommended. For additional information, see the following articles in the Microsoft Knowledge Base:

163409 NetBIOS Suffixes (16th Character of the NetBIOS Name)

254680 DNS Namespace Planning

Figure 11

Adherence to DNS naming rules is recommended when naming the cluster.

5. If you are logged on locally with an account that is not a Domain Account with Local Administrative privileges, the wizard will prompt you to specify an account. This is not the account the Cluster service will use to start.
Note: If you have appropriate credentials, the prompt mentioned in step 5 and shown in Figure 12 below may not appear.

Figure 12

The New Server Cluster Wizard prompts you to specify an account.

6. Because it is possible to configure clusters remotely, you must verify or type the name of the server that is going to be used as the first node to create the cluster, as shown in Figure 13 below. Click Next.

Figure 13

Select the name of the computer that will be the first node in the cluster.

Note: The Install wizard verifies that all nodes see the shared disks in the same way. In a complex storage area network, the target identifiers (TIDs) for the disks may sometimes be different, and the Setup program may incorrectly detect that the disk configuration is not valid for Setup. To work around this issue, you can click the Advanced button, and then click Advanced (minimum) configuration. For additional information, see the following article in the Microsoft Knowledge Base:

331801 Cluster Setup May Not Work When You Add Nodes

7. Figure 14 below illustrates that the Setup process will now analyze the node for possible hardware or software problems that may cause problems with the installation. Review any warnings or error messages. You can also click the Details button to get detailed information about each one.

Figure 14

The Setup process analyzes the node for possible hardware or software problems.

8. Type the unique cluster IP address (in this example 172.26.204.10), and then click Next.

As shown in Figure 15 below, the New Server Cluster Wizard automatically associates the cluster IP address with one of the public networks by using the subnet mask to select the correct network. The cluster IP address should be used for administrative purposes only, and not for client connections.

Figure 15

The New Server Cluster Wizard automatically associates the cluster IP address with one of the public networks.

9. Type the user name and password of the cluster service account that was created during pre-installation. (In the example in Figure 16 below, the user name is "Cluster".) Select the domain name in the Domain drop-down list, and then click Next.

At this point, the Cluster Configuration Wizard validates the user account and password.

Figure 16

The wizard prompts you to provide the account that was created during pre-installation.

10. Review the Summary page, shown in Figure 17 below, to verify that all the information that is about to be used to create the cluster is correct. If desired, you can use the Quorum button to change the quorum disk designation from the default auto-selected disk.

The summary information displayed on this screen can be used to reconfigure the cluster in the event of a disaster recovery situation. It is recommended that you save and print a hard copy to keep with the change management log at the server.
Note: The Quorum button can also be used to specify a Majority Node Set (MNS) quorum model. This is one of the major configuration differences when you create an MNS cluster.

Figure 17

The Proposed Cluster Configuration page.

11. Review any warnings or errors encountered during cluster creation. To do this, click the plus signs to see more, and then click Next. Warnings and errors appear in the Creating the Cluster page as shown in Figure 18.

Figure 18

Warnings and errors appear on the Creating the Cluster page.

12. Click Finish to complete the installation. Figure 19 below illustrates the final step.

Figure 19

The final step in setting up a new server cluster.

Note: To view a detailed summary, click the View Log button or view the text file stored in the following location: %SystemRoot%\System32\LogFiles\Cluster\ClCfgSrv.Log
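Two of the wizard inputs above lend themselves to a pre-check: the cluster name (at most 15 NetBIOS characters, ideally also a valid DNS host label) and the cluster IP address, which the wizard matches to a public network by subnet mask. The Python sketch below approximates those checks with the standard ipaddress module; it is an assumption-laden approximation, the network names and subnets in the usage note are hypothetical, and the wizard's own validation may differ.

```python
import ipaddress
import re

# One DNS host label: letters, digits, and hyphens, not starting or ending with a hyphen.
_DNS_LABEL = re.compile(r"[A-Za-z0-9](?:[A-Za-z0-9-]*[A-Za-z0-9])?")


def valid_cluster_name(name):
    """At most 15 characters (the usable NetBIOS length) and a valid DNS host label."""
    return 0 < len(name) <= 15 and _DNS_LABEL.fullmatch(name) is not None


def pick_public_network(cluster_ip, public_networks):
    """Return the name of the public network whose subnet contains cluster_ip, else None.

    public_networks maps a network name to "address/mask",
    for example "172.26.204.0/255.255.255.0" (a hypothetical subnet).
    """
    ip = ipaddress.ip_address(cluster_ip)
    for name, subnet in public_networks.items():
        if ip in ipaddress.ip_network(subnet, strict=False):
            return name
    return None
```

With an assumed public subnet of 172.26.204.0/255.255.255.0, the example address 172.26.204.10 falls inside it, so pick_public_network returns that network's name; an address outside every listed subnet returns None, which is the case where the wizard could not associate the address with a public network.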

Validating the Cluster Installation

Use the Cluster Administrator (CluAdmin.exe) to validate the cluster service installation on node 1.

To Validate the Cluster Installation

1. Click Start, click Programs, click Administrative Tools, and then click Cluster Administrator.
2. Verify that all resources came online successfully, as shown in Figure 20 below.


Figure 20

The Cluster Administrator verifies that all resources came online successfully.

Note: As a general rule, do not put anything in the cluster group, do not take anything out of the cluster group, and do not use anything in the cluster group for anything other than cluster administration.

Configuring the Second Node

Installing the cluster service on the other nodes requires less time than on the first node. Setup configures the cluster service network settings on the second node based on the configuration of the first node. You can also add multiple nodes to the cluster at the same time, and remotely.

Note: For this section, leave node 1 and all shared disks turned on. Then turn on all other nodes. The cluster service will control access to the shared disks at this point to eliminate any chance of corrupting the volume.

1. Open Cluster Administrator on Node 1.
2. Click File, click New, and then click Node.
3. The Add Cluster Computers Wizard will start. Click Next.
4. If you are not logged on with appropriate credentials, you will be asked to specify a domain account that has administrative rights over all nodes in the cluster.
5. Enter the machine name for the node you want to add to the cluster. Click Add. Repeat this step, shown in Figure 21 below, to add all other nodes that you want. When you have added all nodes, click Next.

Figure 21

Adding nodes to the cluster.

6. The Setup wizard will perform an analysis of all the nodes to verify that they are configured properly.
7. Type the password for the account used to start the cluster service.
8. Review the summary information that is displayed for accuracy. The summary information will be used to configure the other nodes when they join the cluster.
9. Review any warnings or errors encountered during cluster creation, and then click Next.
10. Click Finish to complete the installation.

Post-Installation Configuration

Heartbeat Configuration

Now that the networks have been configured correctly on each node and the Cluster service has been configured, you need to configure the network roles to define their functionality within the cluster. Here is a list of the network configuration options in Cluster Administrator:

Enable for cluster use: If this check box is selected, the cluster service uses this network. This check box is selected by default for all networks.

Client access only (public network): Select this option if you want the cluster service to use this network adapter only for external communication with other clients. No node-to-node communication will take place on this network adapter.

Internal cluster communications only (private network): Select this option if you want the cluster service to use this network only for node-to-node communication.

All communications (mixed network): Select this option if you want the cluster service to use the network adapter for node-to-node communication and for communication with external clients. This option is selected by default for all networks.
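The four options above reduce to two yes/no questions per network: does the cluster service send node-to-node traffic over it, and does it serve clients over it? A small Python model of that mapping (all names hypothetical) makes it easy to check a planned configuration for an alternate heartbeat path, which the mixed-plus-private layout described next provides.

```python
from enum import Enum


class NetworkRole(Enum):
    """Choices on a network's Properties page in Cluster Administrator."""
    DISABLED = "Enable for cluster use cleared"
    PUBLIC = "Client access only (public network)"
    PRIVATE = "Internal cluster communications only (private network)"
    MIXED = "All communications (mixed network)"


# Role -> (cluster service sends node-to-node traffic, cluster service serves clients)
TRAFFIC = {
    NetworkRole.DISABLED: (False, False),
    NetworkRole.PUBLIC: (False, True),
    NetworkRole.PRIVATE: (True, False),
    NetworkRole.MIXED: (True, True),
}


def heartbeat_networks(config):
    """Names of networks that may carry node-to-node (heartbeat) traffic."""
    return [name for name, role in config.items() if TRAFFIC[role][0]]


def has_alternate_heartbeat_path(config):
    """True when at least two networks can carry node-to-node traffic."""
    return len(heartbeat_networks(config)) >= 2
```

Setting the public network to Client access only would leave the private network as the single heartbeat path, which this check flags.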

This white paper assumes that only two networks are in use. It explains how to configure these networks as one mixed network and one private network. This is the most common configuration. If you have available resources, two dedicated redundant networks for internal-only cluster communication are recommended.

To Configure the Heartbeat

1. Start Cluster Administrator.
2. In the left pane, click Cluster Configuration, click Networks, right-click Private, and then click Properties.
3. Click Internal cluster communications only (private network), as shown in Figure 22 below.

Figure 22

Using Cluster Administrator to configure the heartbeat.

4. Click OK.
5. Right-click Public, and then click Properties (shown in Figure 23 below).
6. Click to select the Enable this network for cluster use check box.
7. Click the All communications (mixed network) option, and then click OK.

Figure 23

The Public Properties dialog box.

Heartbeat Adapter Prioritization

After configuring how the cluster service will use each network adapter, the next step is to prioritize the order in which they will be used for intra-cluster communication. This is applicable only if two or more networks were configured for node-to-node communication. Priority arrows on the right side of the screen specify the order in which the cluster service will use the network adapters for communication between nodes. The cluster service always attempts to use the first network adapter listed for remote procedure call (RPC) communication between the nodes. The cluster service uses the next network adapter in the list only if it cannot communicate by using the first network adapter.

1. Start Cluster Administrator.
2. In the left pane, right-click the cluster name (in the upper left corner), and then click Properties.
3. Click the Network Priority tab, as shown in Figure 24 below.

Figure 24

The Network Priority tab in Cluster Administrator.

4. Verify that the Private network is listed at the top. Use the Move Up or Move Down buttons to change the priority order.
5. Click OK.
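The priority rule above is a first-match search: take the highest-priority network that is configured for node-to-node traffic and over which the other node can currently be reached. The Python sketch below encodes only that rule; the reachability set is a hypothetical input, since the cluster service's actual failure detection is not described here.

```python
def choose_heartbeat_network(priority_order, internal_capable, reachable):
    """Return the first network, in Network Priority order, that may carry node-to-node
    traffic and over which the other node is currently reachable; None if there is none.

    priority_order: network names as listed on the Network Priority tab (top first).
    internal_capable: networks set to Internal cluster communications only or All communications.
    reachable: networks over which the peer node currently responds (hypothetical input).
    """
    for network in priority_order:
        if network in internal_capable and network in reachable:
            return network
    return None
```

With Private listed first, node-to-node traffic moves to Public only when Private is unreachable, which is the behavior the private-network cable test in the Appendix expects.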

Configuring Cluster Disks

Start Cluster Administrator, right-click any disks that you want to remove from the cluster, and then click Delete.

Note: By default, all disks not residing on the same bus as the system disk will have Physical Disk Resources created for them, and will be clustered. Therefore, if the node has multiple buses, some disks may be listed that will not be used as shared storage, for example, an internal SCSI drive. Such disks should be removed from the cluster configuration. If you plan to implement Volume Mount points for some disks, you may want to delete the current disk resources for those disks, delete the drive letters, and then create a new disk resource without a drive letter assignment.

Quorum Disk Configuration

The Cluster Configuration Wizard automatically selects the drive that is to be used as the quorum device. It will use the smallest partition that is larger than 50 MB. You may want to change the automatically selected disk to a dedicated disk that you have designated for use as the quorum.

To Configure the Quorum Disk

1. Start Cluster Administrator (CluAdmin.exe).
2. Right-click the cluster name in the upper-left corner, and then click Properties.
3. Click the Quorum tab.
4. In the Quorum resource list box, select a different disk resource. In Figure 25 below, Disk Q is selected in the Quorum resource list box.

Figure 25

The Quorum resource list box.

5. If the disk has more than one partition, click the partition where you want the cluster-specific data to be kept, and then click OK.

For additional information, see the following article in the Microsoft Knowledge Base:

280353 How to Change Quorum Disk Designation
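The wizard's default choice (the smallest partition larger than 50 MB) explains why a small dedicated quorum disk is normally picked automatically, and why a data disk can be picked when no such disk exists. A hypothetical Python model of that rule, assuming partition sizes are known in MB; breaking ties by name is an assumption of this sketch, not documented wizard behavior.

```python
MIN_QUORUM_MB = 50   # the wizard picks a partition strictly larger than this


def auto_select_quorum(partitions_mb):
    """Model of the wizard's default: the smallest partition larger than 50 MB.

    partitions_mb maps a disk resource name to its partition size in MB.
    Ties are broken by name here; that tie-break is an assumption of this sketch.
    """
    eligible = [(size, name) for name, size in partitions_mb.items() if size > MIN_QUORUM_MB]
    if not eligible:
        return None
    return min(eligible)[1]
```

If the model picks a data disk instead of the disk you dedicated to the quorum, that is the situation in which the procedure above should be used to change the designation.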

Creating a Boot Delay

In a situation where all the cluster nodes boot up and attempt to attach to the quorum resource at the same time, the Cluster service may fail to start. For example, this may occur when power is restored to all nodes at exactly the same time after a power failure. To avoid such a situation, increase or decrease the Time to display list of operating systems setting on the nodes so that they do not all finish booting at the same moment. To find this setting, click Start, right-click My Computer, and then click Properties. Click the Advanced tab, and then click Settings under Startup And Recovery.
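The point of changing the Time to display list of operating systems setting is to give each node a different start-up delay. A minimal Python sketch that assigns one distinct value per node; the 30-second base and step are assumptions, and the step should be long enough for one node to start the Cluster service and bring the quorum resource online.

```python
def staggered_boot_timeouts(nodes, base_s=30, step_s=30):
    """Give each node its own 'Time to display list of operating systems' value, in seconds.

    base_s and step_s are assumptions; step_s should exceed the time one node needs to
    start the Cluster service and bring the quorum resource online.
    """
    if step_s <= 0:
        raise ValueError("step_s must be positive so that every node gets a different delay")
    return {node: base_s + i * step_s for i, node in enumerate(nodes)}
```

You would then enter each node's value in its own Startup And Recovery dialog box.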

Test Installation

There are several methods for verifying a cluster service installation after the Setup process is complete. These include:

Cluster Administrator: If installation was completed only on node 1, start Cluster Administrator, and then attempt to connect to the cluster. If a second node was installed, start Cluster Administrator on either node, connect to the cluster, and then verify that the second node is listed.

Services Applet: Use the Services snap-in to verify that the cluster service is listed and started.

Event Log: Use the Event Viewer to check for ClusSvc entries in the system log. You should see entries confirming that the cluster service successfully formed or joined a cluster.

Cluster service registry entries: Verify that the cluster service installation process wrote the correct entries to the registry. You can find many of the registry settings under

HKEY_LOCAL_MACHINE\Cluster

Click Start, click Run, and then type the Virtual Server name. Verify that you can connect and see resources.

Test Failover

To Verify that Resources will Failover

1. Click Start, click Programs, click Administrative Tools, and then click Cluster Administrator, as shown in Figure 26 below.

Figure 26

The Cluster Administrator window.

2. Right-click the Disk Group 1 group, and then click Move Group. The group and all its resources will be moved to another node. After a short period of time, the Disk F: G: resource will be brought online on the second node. Watch the window to see this shift.
3. Quit Cluster Administrator.

Congratulations! You have completed the configuration of the cluster service on all nodes. The server cluster is fully operational. You are now ready to install cluster resources such as file shares, printer spoolers, cluster-aware services like Distributed Transaction Coordinator, DHCP, WINS, or cluster-aware programs such as Exchange Server or SQL Server.

Appendix

Advanced Testing

Now that you have configured your cluster and verified basic functionality and failover, you may want to conduct a series of failure scenario tests that will demonstrate expected results and ensure the cluster will respond correctly when a failure occurs. This level of testing is not required for every implementation, but may be insightful if you are new to clustering technology and are unfamiliar with how the cluster will respond, or if you are implementing a new hardware platform in your environment. The expected results listed are for a clean configuration of the cluster with default settings; they do not take into consideration any user customization of the failover logic. This is not a complete list of all tests, nor should successfully completing these tests be considered "certified" or ready for production. This is simply a sample list of some tests that can be conducted. For additional information, see the following article in the Microsoft Knowledge Base:

197047 Failover/Failback Policies on Microsoft Cluster Server

Test: Start Cluster Administrator, right-click a resource, and then click Initiate Failure. The resource should go into a failed state, and then it will be restarted and brought back into an online state on that node.

Expected Result: Resources should come back online on the same node.

Test: Conduct the above Initiate Failure test three more times on that same resource. On the fourth failure, the resources should all fail over to another node in the cluster.

Expected Result: Resources should fail over to another node in the cluster.

Test: Move all resources to one node. Start Computer Management, and then click Services under Services and Applications. Stop the cluster service. Start Cluster Administrator on another node and verify that all resources fail over and come online on another node correctly.

Expected Result: Resources should fail over to another node in the cluster.

Test: Move all resources to one node. On that node, click Start, and then click Shutdown. This will turn off that node. Start Cluster Administrator on another node, and then verify that all resources fail over and come online on another node correctly.

Expected Result: Resources should fail over to another node in the cluster.

Test: Move all resources to one node, and then press the power button on the front of that server to turn it off. If you have an ACPI-compliant server, the server will perform an "Emergency Shutdown" and turn off the server. Start Cluster Administrator on another node and verify that all resources fail over and come online on another node correctly. For additional information about an Emergency Shutdown, see the following articles in the Microsoft Knowledge Base:

325343 HOW TO: Perform an Emergency Shutdown in Windows Server 2003

297150 Power Button on ACPI Computer May Force an Emergency Shutdown

Expected Result: Resources should fail over to another node in the cluster.

Warning: Performing the Emergency Shutdown test may cause data corruption and data loss. Do not conduct this test on a production server.

Test: Move all resources to one node, and then pull the power cables from that server to simulate a hard failure. Start Cluster Administrator on another node, and then verify that all resources fail over and come online on another node correctly.

Expected Result: Resources should fail over to another node in the cluster.

Warning: Performing the hard failure test may cause data corruption and data loss. This is an extreme test. Make sure you have a backup of all critical data, and then conduct the test at your own risk. Do not conduct this test on a production server.

Test: Move all resources to one node, and then remove the public network cable from that node. The IP Address resources should fail, and the groups will all fail over to another node in the cluster. For additional information, see the following article in the Microsoft Knowledge Base:

286342 Network Failure Detection and Recovery in Windows Server 2003 Clusters

Expected Result: Resources should fail over to another node in the cluster.

Test: Remove the network cable for the Private heartbeat network. The heartbeat traffic will fail over to the public network, and no failover should occur. If failover occurs, please see the "Configuring the Private Network Adaptor" section earlier in this document.

Expected Result: There should be no failures or resource failovers.
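The first two tests in this appendix exercise the resource restart policy: a failed resource is restarted in place, and once it fails more often than a threshold within a time window, its group fails over. The Python model below assumes a threshold of 3 restarts within 900 seconds, which matches the "fourth failure triggers failover" expectation above; treat both numbers as assumptions and check the resource's properties in Cluster Administrator for the values actually in effect.

```python
from collections import deque


class RestartPolicy:
    """Restart a failed resource on the same node until failures within the period
    exceed the threshold; then report that the group should fail over.

    threshold=3 and period_s=900 are assumed defaults, not values read from a cluster.
    """

    def __init__(self, threshold=3, period_s=900):
        self.threshold = threshold
        self.period_s = period_s
        self._failures = deque()   # timestamps (seconds) of recent failures

    def on_failure(self, now_s):
        # Drop failures that fall outside the sliding time window.
        while self._failures and now_s - self._failures[0] >= self.period_s:
            self._failures.popleft()
        self._failures.append(now_s)
        return "restart" if len(self._failures) <= self.threshold else "failover"
```

Four failures 10 seconds apart yield restart, restart, restart, failover in this model, while failures spaced more than 900 seconds apart never trigger a failover.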

SCSI Drive Installations

This appendix is provided as a generic set of instructions for SCSI drive installations. If the SCSI hard disk vendor's instructions conflict with the instructions here, always follow the instructions supplied by the vendor.

The SCSI bus listed in the hardware requirements must be configured prior to cluster service installation. Configuration applies to:

The SCSI devices.
The SCSI controllers and the hard disks so that they work properly on a shared SCSI bus.
Properly terminating the bus. The shared SCSI bus must have a terminator at each end of the bus. It is possible to have multiple shared SCSI buses between the nodes of a cluster.

In addition to the information on the following pages, refer to documentation from the manufacturer of your SCSI device or to the SCSI specifications, which can be ordered from the American National Standards Institute (ANSI). The ANSI Web site includes a catalog that can be searched for the SCSI specifications.

Configuring the SCSI Devices

Each device on the shared SCSI bus must have a unique SCSI identification number. Because most SCSI controllers default to SCSI ID 7, configuring the shared SCSI bus includes changing the SCSI ID number on one controller to a different number, such as SCSI ID 6. If there is more than one disk that will be on the shared SCSI bus, each disk must have a unique SCSI ID number.

Terminating the Shared SCSI Bus

There are several methods for terminating the shared SCSI bus. They include:

SCSI controllers

SCSI controllers have internal soft termination that can be used to terminate the bus; however, this method is not recommended with the cluster server. If a node is turned off with this configuration, the SCSI bus will be terminated improperly and will not operate correctly.

Storage enclosures

Storage enclosures also have internal termination, which can be used to terminate the SCSI bus if the enclosure is at the end of the SCSI bus. This should be turned off.

Y cables

Y cables can be connected to devices if the device is at the end of the SCSI bus. An external active terminator can then be attached to one branch of the Y cable in order to terminate the SCSI bus. This method of termination requires either disabling or removing any internal terminators that the device may have.

Figure 27 outlines how a SCSI cluster should be physically connected.


Figure 27

A diagram of a SCSI cluster hardware configuration.

Note: Any devices that are not at the end of the shared bus must have their internal termination disabled. Y cables and active terminator connectors are the recommended termination methods because they will provide termination even when a node is not online.
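The SCSI ID rule in Configuring the SCSI Devices (every device on the shared bus needs a unique ID, and two controllers left at the default ID 7 will collide) is easy to check from an inventory. A Python sketch, assuming a wide bus with IDs 0 through 15 and hypothetical device names; pass max_id=7 for a narrow bus.

```python
from collections import defaultdict


def scsi_id_conflicts(bus, max_id=15):
    """Return {scsi_id: [devices]} for every ID used by more than one device.

    bus maps a device name (controller or disk) to its SCSI ID. max_id=15 assumes a
    wide SCSI bus; use 7 for a narrow bus.
    """
    by_id = defaultdict(list)
    for device, scsi_id in bus.items():
        if not 0 <= scsi_id <= max_id:
            raise ValueError(f"{device}: SCSI ID {scsi_id} is outside 0-{max_id}")
        by_id[scsi_id].append(device)
    return {scsi_id: devices for scsi_id, devices in by_id.items() if len(devices) > 1}
```

An empty result means every controller and disk on the shared bus has its own ID.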

Storage Area Network Considerations

There are two supported methods of Fibre Channel-based storage in a Windows Server 2003 server cluster: arbitrated loops and switched fabric.

Important: When evaluating both types of Fibre Channel implementation, read the vendor's documentation and be sure you understand the specific features and restrictions of each. Although the term Fibre Channel implies the use of fiber-optic technology, copper coaxial cable is also allowed for interconnects.

Arbitrated Loops (FC-AL)

A Fibre Channel arbitrated loop (FC-AL) is a set of nodes and devices connected into a single loop. FC-AL provides a cost-effective way to connect up to 126 devices into a single network. As with SCSI, a maximum of two nodes is supported in an FC-AL server cluster configured with a hub. An FC-AL is illustrated in Figure 28.

Figure 28

FC-AL Connection

FC-ALs provide a solution for two nodes and a small number of devices in relatively static configurations. All devices on the loop share the media, and any packet traveling from one device to another must pass through all intermediate devices.

If your high-availability needs can be met with a two-node server cluster, an FC-AL deployment has several advantages:

The cost is relatively low.
Loops can be expanded to add storage (although nodes cannot be added).

Loops are easy for Fibre Channel vendors to develop.

The disadvantage is that loops can be difficult to deploy in an organization. Because every device on the loop shares the media, overall bandwidth in the cluster is lowered. Some organizations might also be unduly restricted by the 126-device limit.

Switched Fabric (FC-SW)

For any cluster larger than two nodes, a Fibre Channel switched fabric (FC-SW) is the only supported storage technology. In an FC-SW, devices are connected in a many-to-many topology using Fibre Channel switches (illustrated in Figure 29).


Figure 29

FC-SW Connection

When a node or device communicates with another node or device in an FC-SW, the source and target set up a point-to-point connection (similar to a virtual circuit) and communicate directly with each other. The fabric itself routes data from the source to the target. In an FC-SW, the media is not shared. Any device can communicate with any other device, and communication occurs at full bus speed. This is a fully scalable enterprise solution and, as such, is highly recommended for deployment with server clusters.

FC-SW is the primary technology employed in SANs. Other advantages of FC-SW include ease of deployment, the ability to support millions of devices, and switches that provide fault isolation and rerouting. Also, there is no shared media as there is in FC-AL, allowing for faster communication. However, be aware that FC-SWs can be difficult for vendors to develop, and the switches can be expensive. Vendors also have to account for interoperability issues between components from different vendors or manufacturers.
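The support rules in this section reduce to a simple decision: FC-AL only for two-node clusters within the 126-device loop limit, FC-SW at any size. A Python sketch of that decision; counting the nodes together with the storage devices toward the loop limit is an assumption of this sketch, so confirm the exact limits in your vendor's documentation.

```python
FC_AL_MAX_DEVICES = 126   # loop limit stated in this section


def supported_fc_topologies(cluster_nodes, loop_devices):
    """Topologies this guide describes as supported for a server cluster of this size.

    loop_devices is the total number of devices that would sit on the loop; counting
    the nodes' HBAs together with the storage devices is an assumption of this sketch.
    """
    options = []
    if cluster_nodes <= 2 and loop_devices <= FC_AL_MAX_DEVICES:
        options.append("FC-AL")
    options.append("FC-SW")   # switched fabric is supported for any cluster size
    return options
```

A three-node or larger cluster therefore always ends up with FC-SW as the only option.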

Using SANs with Server Clusters

For any large-scale cluster deployment, it is recommended that you use a SAN for data storage. Smaller SCSI and stand-alone Fibre Channel storage devices work with server clusters, but SANs provide superior fault tolerance.

A SAN is a set of interconnected devices (such as disks and tapes) and servers that are connected to a common communication and data transfer infrastructure (FC-SW, in the case of Windows Server 2003 clusters). A SAN allows multiple servers to access a pool of storage in which any server can potentially access any storage unit.

The information in this section provides an overview of using SAN technology with your Windows Server 2003 clusters. For additional information about deploying server clusters on SANs, see the Windows Clustering: Storage Area Networks link on the Web Resources page at http://www.microsoft.com/windows/reskits/webresources/.

Note: Vendors that provide SAN fabric components and software management tools have a wide range of tools for setting up, configuring, monitoring, and managing the SAN fabric. Contact your SAN vendor for details about your particular SAN solution.

SCSI Resets

Earlier versions of Windows server clusters presumed that all communications to the shared disk should be treated as an isolated SCSI bus. This behavior may be somewhat disruptive, and it does not take advantage of the more advanced features of Fibre Channel to both improve arbitration performance and reduce disruption.

One key enhancement in Windows Server 2003 is that the Cluster service issues a command to break a RESERVATION, and the StorPort driver can do a targeted or device reset for disks that are on a Fibre Channel topology. In Windows 2000 server clusters, an entire bus-wide SCSI RESET is issued. This causes all devices on the bus to be disconnected. When a SCSI RESET is issued, a lot of time is spent resetting devices that may not need to be reset, such as disks that the CHALLENGER node may already own.

Resets in Windows Server 2003 occur in the following order:

1. Targeted logical unit number (LUN)
2. Targeted SCSI ID
3. Entire bus-wide SCSI RESET
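The reset order above is an escalation: the least disruptive reset is tried first, and the bus-wide reset is the last resort. The Python sketch below models that sequence; try_reset is a hypothetical callback standing in for the HBA driver, and modeling a SCSIPort driver as going straight to the bus-wide reset is an assumption based on the Windows 2000 behavior described above.

```python
RESET_ORDER = ("LUN reset", "target (SCSI ID) reset", "bus-wide SCSI RESET")


def escalate_reset(try_reset, storport_driver=True):
    """Try resets from least to most disruptive and return the first that succeeds.

    try_reset(level) -> bool is a hypothetical callback standing in for the HBA driver.
    Treating a SCSIPort driver as going straight to the bus-wide reset is an assumption
    based on the Windows 2000 behavior described in this section.
    """
    levels = RESET_ORDER if storport_driver else RESET_ORDER[-1:]
    for level in levels:
        if try_reset(level):
            return level
    return None
```

The benefit of the ordering is visible in the model: when a LUN or target reset succeeds, the bus-wide reset that would disconnect every device on the bus is never attempted.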

Note: Targeted resets require functionality in the host bus adapter (HBA) drivers. The driver must be written for StorPort and not SCSIPort. Drivers that use SCSIPort will use the same challenge and defense behavior as in Windows 2000. Contact the manufacturer of the HBA to determine if it supports StorPort.

SCSI Commands

The Cluster service uses the following SCSI commands:

SCSI reserve: This command is issued by a host bus adapter or controller to maintain ownership of a SCSI device. A device that is reserved refuses all commands from all other host bus adapters except the one that initially reserved it, the initiator. If a bus-wide SCSI reset command is issued, loss of reservation occurs.

SCSI release: This command is issued by the owning host bus adapter; it frees a SCSI device for another host bus adapter to reserve.

SCSI reset: This command breaks the reservation on a target device. This command is sometimes referred to globally as a "bus reset."
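To make the semantics of these three commands concrete, here is a toy Python model of a single shared disk. The `SharedDisk` class and the initiator names are hypothetical; the model tracks only which initiator holds the reservation, and treating a release from a non-owner as a no-op is an assumption of the sketch rather than something this guide specifies.

```python
class SharedDisk:
    """Toy model of SCSI reserve, release, and reset on one shared disk."""

    def __init__(self):
        self.owner = None  # initiator (HBA) holding the reservation, if any

    def accepts(self, initiator):
        """A reserved disk refuses commands from every initiator but the owner."""
        return self.owner is None or self.owner == initiator

    def reserve(self, initiator):
        """SCSI reserve: succeeds only if the disk is free or already ours."""
        if not self.accepts(initiator):
            return False  # reservation conflict
        self.owner = initiator
        return True

    def release(self, initiator):
        """SCSI release: only the owning HBA frees the disk (others: no-op)."""
        if self.owner == initiator:
            self.owner = None

    def reset(self):
        """SCSI reset: breaks the reservation no matter who holds it."""
        self.owner = None


if __name__ == "__main__":
    disk = SharedDisk()
    print(disk.reserve("node1-hba"))  # True: disk was free
    print(disk.reserve("node2-hba"))  # False: node1 holds the reservation
    disk.reset()                      # e.g. a challenging node resets the disk
    print(disk.reserve("node2-hba"))  # True: reservation was broken
```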

The same control codes are used for Fibre Channel as well. These parameters are defined in the following articles in the Microsoft Knowledge Base:

309186 How the Cluster Service Takes Ownership of a Disk on the Shared Bus

317162 Supported Fibre Channel Configurations

The following sections provide an overview of SAN concepts that directly affect a server cluster deployment.

HBAs

Host bus adapters (HBAs) are the interface cards that connect a cluster node to a SAN, similar to the way that a network adapter connects a server to a typical Ethernet network. HBAs, however, are more difficult to configure than network adapters (unless the HBAs are preconfigured by the SAN vendor). All HBAs in all nodes should be identical and be at the same driver and firmware revision.

Zoning and LUN Masking

Zoning and LUN masking are fundamental to SAN deployments, particularly as they relate to a Windows Server 2003 cluster deployment.

Zoning

Many devices and nodes can be attached to a SAN. With data stored in a single cloud, or storage entity, it is important to control which hosts have access to specific devices. Zoning allows administrators to partition devices into logical volumes and thereby reserve the devices in a volume for a server cluster. That means that all interactions between cluster nodes and devices in the logical storage volumes are isolated within the boundaries of the zone; other noncluster members of the SAN are not affected by cluster activity.

Figure 30 is a logical depiction of two SAN zones (Zone A and Zone B), each containing a storage controller (S1 and S2, respectively).

Figure 30: Zoning

In this implementation, Node A and Node B can access data from the storage controller S1, but Node C cannot. Node C can access data from storage controller S2.
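The zone membership described for Figure 30 can be expressed as a simple lookup. In this sketch, `ZONES` and `can_access` are hypothetical, and the membership is assumed from the text (Nodes A and B share Zone A with S1; Node C shares Zone B with S2). In a real fabric this check is enforced by the switches, not by host software.

```python
# Hypothetical zone membership, assumed from the Figure 30 description.
ZONES = {
    "Zone A": {"Node A", "Node B", "S1"},
    "Zone B": {"Node C", "S2"},
}


def can_access(host, controller, zones=ZONES):
    """True if the host and the storage controller share at least one zone."""
    return any(host in members and controller in members
               for members in zones.values())


if __name__ == "__main__":
    for host in ("Node A", "Node B", "Node C"):
        # Node A and Node B reach S1 only; Node C reaches S2 only.
        print(host, can_access(host, "S1"), can_access(host, "S2"))
```

Because each zone is just a set, a controller listed in more than one zone (an overlapping zone) is represented without any change to `can_access`.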

Zoning needs to be implemented at the hardware level (with the controller or switch) and not through software. The primary reason is that zoning is also a security mechanism for a SAN-based cluster, because unauthorized servers cannot access devices inside the zone (access control is implemented by the switches in the fabric, so a host adapter cannot gain access to a device for which it has not been configured). With software zoning, the cluster would be left unsecured if the software component failed.

In addition to providing cluster security, zoning also limits the traffic flow within a given SAN environment. Traffic between ports is routed only to segments of the fabric that are in the same zone.

LUN Masking

A LUN is a logical disk defined within a SAN. Server clusters see LUNs and treat them as physical disks. LUN masking, performed at the controller level, allows you to define relationships between LUNs and cluster nodes. Storage controllers usually provide the means for creating LUN-level access controls that allow one or more hosts to access a given LUN. By providing this access control at the storage controller, the controller itself can enforce access policies to the devices.
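LUN masking can be pictured as a table the storage controller keeps, mapping each LUN to the hosts allowed to see it. The `StorageController` class, its methods, the LUN numbers, and the host names below are hypothetical and only illustrate the idea; real controllers typically expose masking through the vendor's own management tools.

```python
from dataclasses import dataclass, field


@dataclass
class StorageController:
    """Hypothetical controller-side LUN mask: LUN number -> hosts allowed to see it."""
    masks: dict[int, set[str]] = field(default_factory=dict)

    def present(self, lun, *hosts):
        """Unmask a LUN for the given hosts; every other host stays masked."""
        self.masks.setdefault(lun, set()).update(hosts)

    def visible_luns(self, host):
        """LUNs the controller would expose to this host, in ascending order."""
        return sorted(lun for lun, allowed in self.masks.items() if host in allowed)


if __name__ == "__main__":
    ctrl = StorageController()
    ctrl.present(0, "ClusterA-Node1", "ClusterA-Node2")  # shared by both nodes
    ctrl.present(1, "ClusterB-Node1", "ClusterB-Node2")
    print(ctrl.visible_luns("ClusterA-Node1"))  # [0]
    print(ctrl.visible_luns("ClusterB-Node2"))  # [1]
```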

LUN masking provides more granular security than zoning, because LUNs provide a means for zoning at the port level. For example, many SAN switches allow overlapping zones, which enable a storage controller to reside in multiple zones. Multiple clusters in multiple zones can share the data on those controllers. Figure 31 illustrates such a scenario.


Figure 31: Storage Controller in Multiple Zones

LUNs used by Cluster A can be masked, or hidden, from Cluster B so that only authorized users can access data on a shared storage controller.

Requirements for Deploying SANs with Windows Server 2003 Clusters

The following list highlights the deployment requirements you need to follow when using a SAN storage solution with your server cluster. For a white paper that provides more complete information about using SANs with server clusters, see the Windows Clustering: Storage Area Networks link on the Web Resources page at http://www.microsoft.com/windows/reskits/webresources/.

Each cluster on a SAN must be deployed in its own zone. The mechanism the cluster uses to protect access to the disks can have an adverse effect on other clusters that are in the same zone. By using zoning to separate the cluster traffic from other cluster or noncluster traffic, there is no chance of interference.

All HBAs in a single cluster must be the same type and have the same firmware version. Many storage and switch vendors require that all HBAs in the same zone (and, in some cases, the same fabric) share these characteristics.

All storage device drivers and HBA device drivers in a cluster must have the same software version.

Never allow multiple nodes access to the same storage devices unless they are in the same cluster.

Never put tape devices into the same zone as cluster disk storage devices. A tape device could misinterpret a bus reset and rewind at inappropriate times, such as during a large backup.

Guidelines for Deploying SANs with Windows Server 2003 Server Clusters

In addition to the SAN requirements discussed in the previous section, the following practices are highly recommended for server cluster deployment:

In a highly available storage fabric, you need to deploy clustered servers with multiple HBAs. In these cases, always load the multipath driver software. If the I/O subsystem sees two HBAs, it assumes they are different buses and enumerates all the devices as though they were different devices on each bus. The host, meanwhile, is seeing multiple paths to the same disks. Failure to load the multipath driver will disable the second device because the operating system sees what it thinks are two independent disks with the same signature.

Do not expose a hardware snapshot of a clustered disk back to a node in the same cluster. Hardware snapshots must go to a server outside the server cluster. Many controllers provide snapshots at the controller level that can be exposed to the cluster as a completely separate LUN. Cluster performance is degraded when multiple devices have the same signature. If the snapshot is exposed back to the node with the original disk online, the I/O subsystem attempts to rewrite the signature. However, if the snapshot is exposed to another node in the cluster, the Cluster service does not recognize it as a different disk and the result could be data corruption. Although this is not specifically a SAN issue, the controllers that provide this functionality are typically deployed in a SAN environment.
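The requirements and guidelines above lend themselves to a pre-deployment checklist. The following sketch encodes several of them as checks over an inventory you would gather from your own SAN management tools; the `Node`, `Zone`, and `check_san` names and every field are hypothetical, and a duplicate disk signature is used as the symptom of either a missing multipath driver or an exposed hardware snapshot.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    cluster: Optional[str]  # None marks a noncluster server on the SAN
    hbas: list  # (model, firmware) tuple for each HBA in the node
    drivers: dict  # driver name -> version, for storage and HBA drivers
    multipath_loaded: bool = False
    disk_signatures: list = field(default_factory=list)  # as enumerated by this node


@dataclass
class Zone:
    name: str
    members: set  # node names zoned together
    has_cluster_disks: bool = False
    has_tape: bool = False


def check_san(nodes, zones):
    """Return human-readable problems found in a hypothetical SAN inventory."""
    problems = []
    by_name = {n.name: n for n in nodes}
    clusters = {}
    for n in nodes:
        if n.cluster is not None:
            clusters.setdefault(n.cluster, []).append(n)

    for z in zones:
        # Each cluster needs its own zone: no other cluster or noncluster host.
        owners = {by_name[m].cluster for m in z.members if m in by_name}
        if len(owners) > 1:
            problems.append(f"{z.name}: hosts from {sorted(map(str, owners))} share a zone")
        if z.has_tape and z.has_cluster_disks:
            problems.append(f"{z.name}: tape device zoned with cluster disks")

    for cluster, members in clusters.items():
        # Same HBA type and firmware, and same driver versions, on every node.
        if len({hba for n in members for hba in n.hbas}) > 1:
            problems.append(f"{cluster}: HBA type or firmware differs")
        if len({tuple(sorted(n.drivers.items())) for n in members}) > 1:
            problems.append(f"{cluster}: driver versions differ between nodes")

    for n in nodes:
        if len(n.hbas) > 1 and not n.multipath_loaded:
            problems.append(f"{n.name}: multiple HBAs but no multipath driver")
        dupes = sorted({s for s in n.disk_signatures if n.disk_signatures.count(s) > 1})
        if dupes:
            # Symptom of a missing multipath driver or an exposed hardware snapshot.
            problems.append(f"{n.name}: duplicate disk signatures {dupes}")
    return problems


if __name__ == "__main__":
    hba = ("hba-model-x", "fw-1.0")  # hypothetical model and firmware
    n1 = Node("N1", "ClusterA", [hba, hba], {"hba": "2.1"}, True, ["SIG1", "SIG2"])
    n2 = Node("N2", "ClusterA", [hba, hba], {"hba": "2.1"}, True, ["SIG1", "SIG2"])
    print(check_san([n1, n2], [Zone("Z1", {"N1", "N2"}, has_cluster_disks=True)]))  # []
```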

For additional information, see the following articles in the Microsoft Knowledge Base:

301647 Cluster Service Improvements for Storage Area Networks

304415 Support for Multiple Clusters Attached to the Same SAN Device

280743 Windows Clustering and Geographically Separate Sites

Related Links

See the following resources for further information:

Microsoft Cluster Service Installation Resources at http://support.microsoft.com/?id=259267

Quorum Drive Configuration Information at http://support.microsoft.com/?id=280345

Recommended Private "Heartbeat" Configuration on Cluster Server at http://support.microsoft.com/?id=258750

Network Failure Detection and Recovery in a Server Cluster at http://support.microsoft.com/?id=242600

How to Change Quorum Disk Designation at http://support.microsoft.com/?id=280353

Microsoft Windows Clustering: Storage Area Networks at http://www.microsoft.com/windows.netserver/techinfo/overview/san.mspx

Geographically Dispersed Clusters in Windows Server 2003 at http://www.microsoft.com/windows.netserver/techinfo/overview/clustergeo.mspx

Server Cluster Network Requirements and Best Practices at http://www.microsoft.com/technet/prodtechnol/windowsserver2003/maintain/operate/clstntbp.asp

For the latest information about Windows Server 2003, see the Windows Server 2003 Web site at http://www.microsoft.com/windowsserver2003/default.mspx.

This is a preliminary document and may be changed substantially prior to final commercial release of the software described herein$

The information contained in this document represents the current view of Microsoft Corporation on the issues discussed as of the date of publication. Because Microsoft must respond to changing market conditions, it should not be interpreted to be a commitment on the part of Microsoft, and Microsoft cannot guarantee the accuracy of any information presented after the date of publication.

This document is for informational purposes only. MICROSOFT MAKES NO WARRANTIES, EXPRESS OR IMPLIED, AS TO THE INFORMATION IN THIS DOCUMENT.

Complying with all applicable copyright laws is the responsibility of the user. Without limiting the rights under copyright, no part of this document may be reproduced, stored in or introduced into a retrieval system, or transmitted in any form or by any means (electronic, mechanical, photocopying, recording, or otherwise), or for any purpose, without the express written permission of Microsoft Corporation.

Microsoft may have patents, patent applications, trademarks, copyrights, or other intellectual property rights covering subject matter in this document. Except as expressly provided in any written license agreement from Microsoft, the furnishing of this document does not give you any license to these patents, trademarks, copyrights, or other intellectual property.

© 2003 Microsoft Corporation. All rights reserved.

Microsoft, Windows, the Windows logo, and Windows NT are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries.

The names of actual companies and products mentioned herein may be the trademarks of their respective owners.
