Audio Engineering Society

Convention Paper 6031

Presented at the 116th Convention, 2004 May 8-11, Berlin, Germany

This convention paper has been reproduced from the author's advance manuscript, without editing, corrections, or consideration by the Review Board. The AES takes no responsibility for the contents. Additional papers may be obtained by sending request and remittance to Audio Engineering Society, 60 East 42nd Street, New York, New York 10165-2520, USA; also see www.aes.org. All rights reserved. Reproduction of this paper, or any portion thereof, is not permitted without direct permission from the Journal of the Audio Engineering Society.

Further Steps towards Drum Transcription of Polyphonic Music

Christian Dittmar1, Christian Uhle2

1 Fraunhofer Institute for Digital Media Technology IDMT, Ilmenau, Germany, dmr@idmt.fraunhofer.de

2 Fraunhofer Institute for Digital Media Technology IDMT, Ilmenau, Germany, uhle@idmt.fraunhofer.de

ABSTRACT

This publication presents a new method for the detection and classification of un-pitched percussive instruments in real-world musical signals. The derived information is an important prerequisite for the creation of a musical score, i.e. automatic transcription, and for the automatic extraction of semantically meaningful metadata, e.g. tempo and musical meter. The proposed method applies Independent Subspace Analysis using Non-Negative Independent Component Analysis and principles of Prior Subspace Analysis. An important extension of Prior Subspace Analysis is the identification of frequency subspaces of percussive instruments from the signal itself. The frequency subspaces serve as information for the detection of the percussive events and the subsequent classification of the occurring instruments. Results are reported on 40 manually transcribed test items.

1. INTRODUCTION

1.1. Motivation

Where the description of musical audio signals by means of metadata is concerned, the analysis of rhythm constitutes an important branch. Although rhythm is an essential concept of musical structure that is contained in the voices of all sounding instruments, there is little doubt that percussive instruments in particular contribute to the rhythmical impression. High-level description of any rhythmical content is only feasible when drum scores are available. This information enables further categorization of musical content, such as classification of genre (based on characteristic rhythmical patterns) and determination of the rhythmic complexity, expressivity and groove of a musical item. The measurement of less subjective descriptors like tempo and musical meter benefits significantly from the availability of a drum score as well. Thus, automated extraction of the drum score is an essential tool for cataloguing musical content and can contribute considerably to today's music retrieval algorithms.


1.2. State of the Art

The transcription of percussive un-pitched instruments is a less challenging task than the comprehensive transcription of all instruments played in a musical piece, including harmonic sustained instruments. There are a number of reasons for this. First, no melodic information has to be detected, since pitch plays only a subordinate role for most percussive sounds. Second, percussive instruments commonly do not produce sustained notes, so the duration of the notes does not have to be detected in the general case. There are numerous exceptions, e.g. guiro and cymbal crescendos, as well as instruments residing in a grey area between un-pitched and pitched instruments, e.g. the Brazilian cuíca. The challenge in identifying percussive instruments lies in the fact that a great variety of sounds can be generated using a single instrument.

This work focuses on the vast field of popular music, and only a limited set of percussive un-pitched instruments is presumed to be present. Mainly two instrument classes are in scope: membranophones and idiophones (as well as their electronic counterparts). The membranophones usually occupy the lower frequency regions of the audio signal. Examples, ordered according to their dominant frequency regions, are kick drum, tom-tom, snare drum, timbales, conga and bongo. The list can be continued with respect to the dominant frequency range by idiophones like woodblock, shaker, cymbal, tambourine and hi-hat. Unfortunately for the retrieval task, the instruments are not clearly separable along the frequency axis, and there are many ambiguities due to different playing techniques and styles, recording situations and electronic effects that may be applied to drum sounds.

Previous work on the transcription of percussive instruments includes the doctoral thesis by Schloss [1], which addresses the transcription of purely percussive music. The developed system detects note onsets from the slope of the amplitude envelope and subsequently identifies the source of each note. The events are classified into damped and un-damped strokes, and subsequently into high- and low-frequency drum sounds. The analyzed percussive instruments are exclusively membranophones. The resulting note list is used for the metrical analysis.

Other work relating to the detection and classification of events in musical audio signals containing only drum sounds is described in [2], [3]. Gouyon et al. presented a system for automatic labeling of short drum kit performances in which the instruments do not occur simultaneously. The audio signal is segmented using a tatum grid, and each segment is represented as a vector of low-level features (e.g. spectral kurtosis, temporal centroid and zero-crossing rate). Various clustering techniques were examined to identify similar instrument sounds. Paulus et al. described a system for the labelling of synthesized drum sequences with simultaneously occurring sounds using higher-level statistical modelling with n-grams. A manually detected tatum grid is applied for the segmentation of the drum tracks.

A number of authors have suggested systems for the detection and classification of percussive instruments in the presence of pitched instruments. McDonald proposes the use of a bank of wavelet filters to produce a spectrogram of the audio signal. The spectrogram is further processed by a bank of Meddis inner hair cell models for the detection of note onsets. Note onsets are detected from the amplitude data in sub-bands of one octave width, scaled with the phase congruency per sub-band. The detected events are then classified using the similarity between the sonogram data of a short excerpt following an onset and trained samples [4]. An analysis/synthesis approach to the extraction of drum sounds from polyphonic music is presented in [5]. The two dominant percussive instruments and their occurrences are extracted by an iterative correlation method that matches a simple drum model with the actual drum sounds in the analyzed signal. The extracted drum sounds are not explicitly classified but are intended to be used as an audio signature for the signal.

Some of the most recent work relates to the decomposition of the audio signal using Independent Subspace Analysis (ISA). Casey et al. introduced this method for the separation of sound sources from single-channel mixtures. Their work does not focus explicitly on percussive instruments, but the decomposition of a drum loop into single sounds is featured as an illustrative example [6]. Orife adopts ISA to separate and detect salient rhythmic and timing information with a view to a better understanding of rhythm, as well as computer-based performance and composition [7]. In [8], ISA is employed to separate real-world musical signals into percussive and harmonic sustained fragments using a decision network based on measures describing the spectral and time-based features of a fixed number of independent components. Further developments were made by Fitzgerald et al. [9] by introducing the principle of Prior Subspace Analysis (PSA), where generalized spectral profiles for different percussive instruments are used to extract amplitude basis functions, which are then subjected to ICA to achieve statistical independence. Peak picking in such separated amplitude bases enables onset detection corresponding to the occurrence of drum instruments assumed a priori to be contained in the musical signal. The application of PSA to the detection and classification of drum instruments has moved from percussive to polyphonic music with promising results in [10]. This step is motivated by the assumption that the drum instruments are stationary in pitch.

2. SYSTEM OVERVIEW

2.1. Block Diagram

2.2.

Spectral Representation

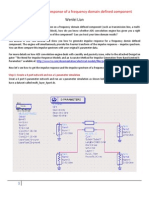

An overview of the proposed system is presented in figure 1. The subsequent sections will give a more in depth account of the different stages endorsed the signal processing chain.

PCM audio signal

Time Frequency Transformation

X

Differentiation

& X

Halfwave Rectification

X

Peak Picking

X t

PCA

~ X

Non-Negative ICA Feature Extraction and Classification Acceptance of drum-like Onsets Drumscore

The digital audio signals used for further analysis are mono files with 16bit per sample at a sampling frequency of 44.1kHz. They are submitted to preprocessing in the time domain using a software-based emulation of an acoustic effect device often referred to as Exciter. In this context , the Exciter stage emphasizes the higher frequency content of the audio signal. This is achieved by applying non-linear distortion to a highpass filtered version of the signal and adding that distorted signal to the original. It turns out, that this is a vital issue when assessing hi-hats or similar high sounding idiophones with low intensity. Their energetic weight in respect to the whole musical signal is increased by that step, while most harmonic sustained instruments and lower sounding drum types are not affected. Another positive side effect consists in the fact that formerly MP3-encoded (and in the process low pass filtered) files can regain higher-frequency information to some extent. A spectral representation of the preprocessed time signal is computed using a Short Time Fourier Transformation (STFT). Thereby a relatively large block-size and high overlap are necessary due to two reasons. First the need for a fine spectral resolution in the lower frequency bins has to be fulfilled. Second the time resolution is increased to a required accuracy by a small hop-size between adjacent frames. From the above mentioned steps a spectrogram representation of the original signal is derived. The unwrapped phaseinformation and the absolute spectrogram values X are taken into further consideration. The magnitude spectrogram X possesses n frequency bins and m frames. The time-variant slopes of each spectral bin are differentiated over all frames in order to decimate the influence of sustained sounds and to simplify the subsequent detection of transients. The differentiation leads to some negative values, so a half wave rectification is appended to remove this effect. This

is way, a non-negative difference-spectrogram X computed for the further processing.

2.3. Event Detection

Figure 1 System Overview
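The event detection operates on the half-wave rectified difference spectrogram described in Section 2.2. As a minimal sketch of that preprocessing step (not the authors' implementation), the following Python/NumPy snippet differentiates a magnitude spectrogram along the frame axis and discards negative slopes. The function name `difference_spectrogram`, the first-order difference and the toy input are assumptions made for illustration; the paper does not specify the differentiation filter.

```python
import numpy as np

def difference_spectrogram(X):
    """Half-wave rectified first-order time difference of a magnitude spectrogram.

    X has shape (n_bins, m_frames); the result has the same shape.
    """
    X = np.asarray(X, dtype=float)
    # Difference along the frame axis. Prepending the first frame keeps the
    # shape and makes the first output column zero.
    dX = np.diff(X, axis=1, prepend=X[:, :1])
    # Half-wave rectification: keep increases in magnitude, drop decreases.
    return np.maximum(dX, 0.0)

# Toy input: bin 0 holds a sustained tone, bin 1 a single transient at frame 2.
X = np.array([[1.0, 1.0, 1.0, 1.0],
              [0.0, 0.0, 5.0, 1.0]])
X_hat = difference_spectrogram(X)
```

In this toy input the sustained bin vanishes entirely and only the rising edge of the transient survives, which is the property the subsequent onset detection relies on.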

The detection of multiple local maxima associated with transient onset events in the musical signal is conducted in a quite simple manner. First, a time tolerance is defined that separates two successive drum onsets. In this implementation a constant value of 68 ms is used. It is translated to the time resolution of the spectral domain, where it determines the minimum number of frames that must lie between two consecutive onsets. The use of this minimum distance was proposed in [11] and is also supported by the consideration that a sixteenth note lasts 60 ms at an upper tempo limit of 250 bpm (60 s / 250 / 4 = 60 ms), which is quite close to the value presumed above.

To derive a detection function on which the peak picking can be executed, the spectral bins of the differentiated spectrogram are simply summed up. A relatively smooth function $e$ is obtained by convolving the summed spectrogram with a suitable Hann window. To obtain the positions $t$ of the maxima, a sliding window of the tolerance length is shifted along the whole vector $e$, so that one maximum is detected per step. Only those maxima that persist in the window over several consecutive steps are kept, because these are very likely the peaks of interest. The unwrapped phase information of the original spectrogram serves as a reliability function in this context. It can be observed that a significant positive phase jump occurs near the onset time $t$ of a true onset, so requiring such a jump avoids mistaking small ripples for onsets. The main concept of the further process is the storage of a short excerpt (namely one frame) of the difference spectrogram $\hat{X}$ at the time of each onset. From these frames the significant spectral profiles will be gathered in the next stages.

2.4. Reduction of Dimensionality

From the steps described in the preceding section, the information about the time of occurrence $t$ and the spectral composition of the onsets $\hat{X}_t$ is deduced. With real-world musical signals, one quite frequently encounters a high number of transient events within the duration of the musical piece. Even the simple example of a 120 bpm piece shows that there could be 480 events in a 4-minute excerpt if only quarter notes occur. With regard to the goal of finding only a few significant subspaces, Principal Component Analysis (PCA) is applied to $\hat{X}_t$. Using this well-known technique it is possible to break down the whole set of collected spectra to a limited number of decorrelated principal components, resulting in a good representation of the original data with small reconstruction error. For this purpose an Eigenvalue Decomposition (EVD) of the dataset's covariance matrix is computed. From the set of eigenvectors, the ones related to the $d$ largest eigenvalues are chosen to provide the coefficients for a linear combination of the original vectors according to equation (1).

$$\tilde{X} = \hat{X}_t \, T \tag{1}$$

Here, $T$ denotes a transformation matrix, which is actually a subset of the manifold of eigenvectors. Additionally, the reciprocal values of the eigenvalues are incorporated as scaling factors, yielding not only a decorrelation but also a variance normalization, which in turn implies whitening [12]. Alternatively, a Singular Value Decomposition (SVD) of $\hat{X}_t$ according to [6], [8] can achieve the same goal. With small modifications it is proven to be equivalent to the PCA using EVD [13]. The whitened components $\tilde{X}$ are subsequently fed into the ICA computation stage described in the next section.

2.5. Non-Negative Independent Component Analysis
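The ICA stage takes the whitened onset spectra of equation (1) as input. The following sketch shows one way the projection could be computed with NumPy. It assumes that the onset spectra are stored as the columns of `X_t` (bins by onsets) and that the eigenvectors are scaled by the reciprocal square roots of their eigenvalues, which is the scaling that produces unit variance. The function name `pca_whiten` and the random test data are hypothetical. The mean is not subtracted from the data itself, so that equation (1) is applied as written and the input stays a plain linear combination of the collected spectra.

```python
import numpy as np

def pca_whiten(X_t, d):
    """Project onset spectra onto d decorrelated, unit-variance components.

    X_t: array of shape (n_bins, p_onsets), one onset spectrum per column.
    Returns (X_tilde, T) with X_tilde = X_t @ T of shape (n_bins, d).
    """
    X_t = np.asarray(X_t, dtype=float)
    # Covariance between the onset spectra (p x p); the frequency bins act
    # as observations. np.cov removes the mean internally.
    cov = np.cov(X_t, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)       # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:d]        # indices of the d largest
    # Assumed scaling: 1 / sqrt(eigenvalue) gives each component unit variance.
    T = eigvecs[:, order] / np.sqrt(eigvals[order])
    return X_t @ T, T

# Synthetic, non-negative stand-in for collected onset spectra.
rng = np.random.default_rng(0)
X_t = rng.random((64, 10))                       # 64 bins, 10 onsets
X_tilde, T = pca_whiten(X_t, d=3)
```

After the projection, the covariance of the columns of `X_tilde` is the identity matrix, which is the whitening property the text refers to.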

Independent Component Analysis is a technique for separating a set of linearly mixed signals into their original sources. A requirement for optimum performance of the algorithm is the statistical independence of the sources. Over the last years, extremely active research has been conducted in the field of ICA. One very interesting approach is the recent Non-Negative ICA [14], [15]. Where other commonly deployed algorithms like JADE-ICA [16] or FAST-ICA [17] exploit higher-order statistics of the signals, Non-Negative ICA uses the very intuitive concept of optimising a cost function describing the non-negativity of the components. This cost function is related to the reconstruction error introduced by axis-pair rotations of two or more variables in the positive quadrant of the joint probability density function (PDF). The assumptions for this model imply that the original source signals are positive and well grounded, which means they exhibit a non-zero PDF at zero, and that they are to some extent linearly independent. The first assumption is always fulfilled for the data considered in this publication, because the vectors subjected to ICA originate from the differentiated and half-wave rectified version $\hat{X}$ of the amplitude spectrogram $X$, which does not contain any values lower than zero, but certainly some values at zero. The second constraint is taken into account when the spectra collected at onset times are regarded as linear combinations of a small set of original source spectra characterizing the involved instruments. This is, of course, a rather coarse approximation, but it holds up well in the majority of cases. The onset spectra of real-world drum instruments do not exhibit invariant patterns, but are more or less subject to changes in their spectral composition. Nevertheless, it may safely be assumed that there are some characteristic properties inherent to the spectral profiles of drum sounds [9] that allow us to separate the whitened components $\tilde{X}$ into their potential sources $F$ according to (2).

$$F = A \tilde{X} \tag{2}$$

Here, $A$ denotes the $d \times d$ unmixing matrix estimated by the ICA process, which actually separates the individual components $\tilde{X}$. The sources $F$ will be called spectral profiles from here on. Like the original spectrogram they possess $n$ frequency bins, but consist of only one frame. That means they hold only the spectral information related to the onset spectrum. To circumvent the arbitrary scaling of the components introduced by PCA and ICA, a transformation matrix $R$ is constructed according to (3).

$$R = T A^{T} \tag{3}$$

Normalizing $R$ with its absolute maximum value leads to weighting coefficients in the range $-1 \ldots 1$, so that the spectral profiles, which are extracted using

$$F = \hat{X}_t \, R \tag{4}$$

possess values in the range of the original spectrogram. Further normalization is achieved by dividing each spectral profile by its L2-norm.

2.6. Crosstalk Profiles

As stated earlier, the independence and invariance assumptions for the given spectral slices have some weaknesses. So it is no surprise that the unmixed spectral profiles still display some dependencies. This should not be regarded as erroneous behaviour. Tests with spectral profiles of single drum sounds recorded under real-world conditions also yielded strong interdependence between the onset spectra of different percussive instruments. One way to measure the degree of mutual overlap and similarity along the frequency axis is to conduct crosstalk measurements. As an illustrative metaphor, the spectral profiles gained from the ICA process can be regarded as transfer functions of highly frequency-selective parts in a filter bank, where overlapping pass-bands lead to crosstalk in the output of the filter-bank channels. The crosstalk measure between two spectral profiles is computed according to (5)

$$C_{i,j} = \frac{F_i F_j^{T}}{F_i F_i^{T}} \tag{5}$$

for $i = 1, \ldots, d$, $j = 1, \ldots, d$ and $j \neq i$. This value is related to the well-known cross-correlation coefficient, but uses a different normalization.

2.7. Extraction of Amplitude Bases

The preceding steps followed the main goal of computing a certain number of spectral profiles. These spectral profiles can be used to extract the spectrogram's amplitude bases, from here on referred to as amplitude envelopes, according to (6).

$$E = F X \tag{6}$$

As a second source of information, the differentiated version of the amplitude envelopes can be extracted from the difference spectrogram according to (7).

$$\hat{E} = F \hat{X} \tag{7}$$

This procedure is closely related to the principle of PSA. The main difference is that the priors used here are not generalized class-specific spectra. Instead, highly specialized spectral profiles very close to the spectra of the instruments really appearing in the signal are employed. The second modification is that no further ICA computation is applied to the amplitude envelopes. Nevertheless, the extracted amplitude envelopes are only in some cases good detection functions with sharp peaks (e.g. for dance-oriented music with predominant percussive rhythm tracks). Mostly they are accompanied by smaller peaks and plateaus stemming from the crosstalk effects mentioned above.

2.8. Component Classification
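Each component classified in this section consists of a spectral profile and its amplitude envelopes. As a rough sketch under stated assumptions, equations (5) to (7) and a correlation-based percussiveness could be evaluated as follows. The profiles are assumed to be stored as the rows of `F` (components by bins). All function names, the first-order difference used for the difference spectrogram and the toy data are illustrative and not taken from the paper.

```python
import numpy as np

def crosstalk(F):
    """Crosstalk C[i, j] = (F_i . F_j) / (F_i . F_i) between the rows of F, as in (5)."""
    F = np.asarray(F, dtype=float)
    G = F @ F.T                     # inner products F_i F_j^T
    C = G / np.diag(G)[:, None]     # divide row i by F_i F_i^T
    np.fill_diagonal(C, 0.0)        # (5) covers j != i only; 0 is a placeholder
    return C

def amplitude_envelopes(F, X):
    """Envelopes E = F X as in (6); pass the difference spectrogram to obtain (7)."""
    return np.asarray(F, dtype=float) @ np.asarray(X, dtype=float)

def percussiveness(E, E_hat):
    """Correlation coefficient between each envelope and its differentiated version."""
    return np.array([np.corrcoef(e, e_hat)[0, 1] for e, e_hat in zip(E, E_hat)])

# Two overlapping, L2-normalised toy profiles over three frequency bins.
F = np.array([[1.0, 1.0, 0.0],
              [0.0, 1.0, 1.0]])
F /= np.linalg.norm(F, axis=1, keepdims=True)

# Toy magnitude spectrogram: bin 0 carries two decaying hits, bin 2 a sustained tone.
X = np.array([[0.0, 4.0, 1.0, 0.0, 4.0, 1.0],
              [0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
              [0.0, 3.0, 3.0, 3.0, 3.0, 3.0]])
# Assumed first-order difference followed by half-wave rectification.
X_hat = np.maximum(np.diff(X, axis=1, prepend=X[:, :1]), 0.0)

C = crosstalk(F)
E = amplitude_envelopes(F, X)
E_hat = amplitude_envelopes(F, X_hat)
p = percussiveness(E, E_hat)
```

In this toy case the envelope of the decaying hits correlates strongly with its differentiated version, whereas the envelope of the sustained tone does not, and the two profiles show a mutual crosstalk of one half because they share one of their two bins.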

It is a well-known problem [6] that the actual number of components is unknown for real-world musical signals. In this context, the term components is used for both the spectral profiles and the corresponding amplitude envelopes. If the number $d$ of extracted components is too low, artefacts of the suppressed components are likely to appear in other components. If too many components are extracted, the most prominent ones are likely to be split up into several components. Unfortunately, this division may occur even with the right number of components and accidentally suppress detection of the real components. Hence, special care has to be taken when considering the results. This issue is approached by choosing a maximum number $d$ of components in the PCA and ICA processes, respectively. Afterwards, the extracted components are classified using a set of spectral-based and time-based features. The classification shall provide two sources of information. First, components that are clearly non-percussive should be excluded from the rest of the process. Second, the remaining components should be assigned to pre-defined instrument classes.

A suitable measure for the distinction of the amplitude envelopes is the percussiveness introduced in [8]. Here, a modified version is applied using the correlation coefficient between corresponding amplitude envelopes in $E$ and $\hat{E}$. The degree of correlation between both vectors tends to be small when the characteristic plateaus related to harmonic sustained sounds occur in the non-differentiated amplitude envelopes $E$. These are likely to almost disappear in the differentiated version $\hat{E}$. Both vectors resemble each other far more in the case of transient amplitude envelopes originating from percussive sounds.

A spectral-based measure is the spectral dissonance, described earlier in [18], [8]. It is employed here to separate spectra of harmonic sustained sounds from those related to percussive sounds. In the implementation presented here, again a modified version of the computation of this measure is used, which exhibits tolerance to spectral leakage, dissonance with all harmonics and a suitable normalization. A higher degree of computational efficiency has been achieved by substituting the original dissonance function with a weighting matrix for frequency pairs.

The assignment of spectral profiles to a priori trained classes of percussive instruments is provided by a simple k-nearest neighbour classifier with spectral profiles of single instruments as training database. The distance function is calculated from the correlation coefficient between query profile and database profile. To verify the classification in cases of low reliability (low correlation coefficients) or several occurrences of the same instrument, additional features representing detailed information on the shape of the spectral profile are extracted. These comprise global centroid, spread and skewness as measures describing the overall distribution. More advanced features are the center frequencies of the most prominent local partials, their intensity, spread and skewness.

2.9. Acceptance of Drum-like Onsets

Drum-like onsets are detected in the amplitude envelopes using conventional peak picking methods. Only peaks near the original times $t$ are regarded as candidates; the remaining ones are stored for further consideration. The magnitude of the amplitude envelope at its position is assigned to every onset candidate. If this value does not exceed a predetermined dynamic threshold, the onset is not accepted. The threshold varies over time according to the amount of energy in a larger area surrounding the onsets. Most of the crosstalk influences of harmonic sustained instruments as well as concurrent percussive instruments can be reduced in this step. Of crucial importance is the determination of whether simultaneous onsets of distinct percussive instruments are indeed present or exist only due to the crosstalk effects mentioned earlier. A simple solution is to accept those accompanying instrument occurrences whose value is relatively high in comparison to the value of the strongest instrument at the onset time. Unfortunately, the relevance of this procedure in terms of musical sense is low.

3. RESULTS

3.1. Test Data

To quantify the abilities of the presented algorithm, drum scores of 40 excerpts from real-world musical signals were extracted manually by trained listeners as a reference. Each excerpt has a duration of 30 seconds at a sampling rate of 44.1 kHz and an amplitude resolution of 16 bits. Different musical genres are represented among these examples, including rock, pop, latin, soul and house, to name only a few. They were chosen because of their distinct musical characteristics, with the intention of confronting the system with a significant variety of possible percussive instruments and sounds.


3.2. Experimental Results

The drum scores automatically extracted by the proposed system were compared to the manually transcribed reference scores. The results are listed in Table 1. The featured instruments are the most frequently appearing drum types, for which the numbers are representative.

| Class | Found | Missed | Added |
|---|---|---|---|
| Kick | 83 % | 9 % | 23 % |
| Snare | 75 % | 21 % | 35 % |
| Hi-hat | 77 % | 17 % | 58 % |
| Cymbal | 43 % | 55 % | 26 % |
| Shaker | 60 % | 35 % | 93 % |

Table 1: Drum transcription results

The detected onsets show deviations from the reference onsets. The average time difference is 2 blocks. This value corresponds to approximately 19 ms and is below the presumed tolerance.

3.3. Discussion

Some common problems can be observed. For a small part of the test files no satisfying separation of spectral profiles was achieved. In those cases spectral profiles that were identified in the spectrogram by a trained human observer were not extracted, resulting in missing instruments. This happens especially when many of the components are assigned to harmonic sustained sounds. The presence of very prominent and dynamic harmonic sustained instruments (expressive singing voice, trumpet or saxophone solos) also tends to increase the number of spuriously found onsets. Even the selection of only drum-like peaks is prone to errors caused by quickly changing sustained components. The separation of high-sounding idiophones (hi-hat, cymbal, tambourine or shaker) can be particularly delicate because of their heavily overlapping spectral profiles. In contrast to lower-sounding membranophones there are often no prominent partials, but a more or less broad distribution across the upper parts of the spectrum. This makes the corresponding amplitude envelopes indistinct. So the decision whether only one of those instruments is present at a certain onset time or whether there are more of them cannot simply be deduced from intensity thresholds. That is the reason for the high numbers of erroneous shaker and hi-hat onsets. Unfortunately, a direct comparison with the results presented in [10] is not feasible because of the disjoint test databases and the wider range of percussive instruments considered in this publication.

4. CONCLUSIONS

In this paper a method for automatic detection and classification of un-pitched percussive instruments in real-world music signals has been presented. The results are extremely promising when considering the extraction of significant rhythmical information rather than perfect note-to-note transcription. It can be expected that further improvements will be made in the near future with regard to the classification stage and the onset acceptance. Furthermore, additional information has to be collected and algorithmic methods have to be devised in order to correctly assess the few exceptional situations where the ISA model does not deliver the desired results.

5. ACKNOWLEDGEMENTS

The authors would like to thank Markus Cremer for proofreading this paper and for valuable suggestions regarding its clarity.

6. REFERENCES

[1] W.A. Schloss, On the automatic transcription of percussive music from acoustic signal to higher-level analysis, PhD thesis, Stanford University, 1985

[2] F. Gouyon and P. Herrera, Exploration of Techniques for Automatic Labeling of Audio Drum Tracks Instruments, in Proc. of MOSART Workshop on Current Research Directions in Computer Music, Barcelona, 2001

[3] J.K. Paulus, A.P. Klapuri, Conventional And Periodic N-Grams In The Transcription Of Drum Sequences, in Proc. of the IEEE International Conference On Multimedia and Expo, Baltimore, USA, 2003

[4] S. McDouglas, Biologicalesque Transcription of Percussion, in Proc. of the Australasian Computer Music Conference, Canberra, 1998

[5] A. Zils, F. Pachet, O. Delerue, F. Gouyon, Automatic Extraction of Drum Tracks from Polyphonic Music Signals, in Proc. of the IEEE, 2002

[6] M.A. Casey and A. Westner, Separation of Mixed Audio Sources by Independent Subspace Analysis, in Proc. of the International Computer Music Conference, Berlin, 2000

[7] I.F.O. Orife, Riddim: A rhythm analysis and decomposition tool based on independent subspace analysis, Master thesis, Dartmouth College, Hanover, New Hampshire, 2001

[8] C. Uhle, C. Dittmar and T. Sporer, Extraction of Drum Tracks from polyphonic Music using Independent Subspace Analysis, in Proc. of the Fourth International Symposium on Independent Component Analysis, Nara, Japan, 2003

[9] D. Fitzgerald, B. Lawlor and E. Coyle, Prior Subspace Analysis for Drum Transcription, in Proc. of the 114th AES Convention, Amsterdam, 2003

[10] D. Fitzgerald, B. Lawlor and E. Coyle, Drum Transcription in the presence of pitched instruments using Prior Subspace Analysis, in Proc. of the ISSC, Limerick, Ireland, 2003

[11] F. Gouyon, P. Herrera, P. Cano, Pulse dependent Analyses of percussive music, in Proc. of the AES 22nd International Conference on Virtual, Synthetic and Entertainment Audio, Espoo, Finland, 2002

[12] A. Hyvärinen, J. Karhunen and E. Oja, Independent Component Analysis, Wiley & Sons, 2001

[13] A. Webb, Statistical Pattern Recognition, Wiley & Sons, 2002

[14] M. Plumbley, Algorithms for Non-Negative Independent Component Analysis, in IEEE Transactions on Neural Networks, 14 (3), pp. 534-543, May 2003

[15] E. Oja, M. Plumbley, Blind Separation of Positive Sources using Non-Negative PCA, in Proc. of the Fourth International Symposium on Independent Component Analysis, Nara, Japan, 2003

[16] J.-F. Cardoso, A. Souloumiac, Blind beamforming for non Gaussian signals, in IEE Proceedings F, Vol. 140, no. 6, pp. 362-370, 1993

[17] A. Hyvärinen, E. Oja, A Fast and Robust Fixed-Point Algorithm for Independent Component Analysis, in IEEE Transactions on Neural Networks, 1999

[18] W. Sethares, Local Consonance and the Relationship between Timbre and Scale, in Journal Acoust. Soc. Am., 94 (3), pt. 1, 1993

