Professional Documents

Culture Documents

Usage of Regular Expressions in NLP

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Usage of Regular Expressions in NLP

Copyright:

Available Formats

IJRET: International Journal of Research in Engineering and Technology

eISSN: 2319-1163 | pISSN: 2321-7308

USAGE OF REGULAR EXPRESSIONS IN NLP

Gaganpreet Kaur

Computer Science Department, Guru Nanak Dev University, Amritsar, gagansehdev204@gmail.com

Abstract

A usage of regular expressions to search text is well known and understood as a useful technique. Regular Expressions are generic representations for a string or a collection of strings. Regular expressions (regexps) are one of the most useful tools in computer science. NLP, as an area of computer science, has greatly benefitted from regexps: they are used in phonology, morphology, text analysis, information extraction, & speech recognition. This paper helps a reader to give a general review on usage of regular expressions illustrated with examples from natural language processing. In addition, there is a discussion on different approaches of regular expression in NLP.

Keywords Regular Expression, Natural Language Processing, Tokenization, Longest common subsequence alignment, POS tagging ------------------------------------------------------------------------***---------------------------------------------------------------------1. INTRODUCTION

Natural language processing is a large and multidisciplinary field as it contains infinitely many sentences. NLP began in the 1950s as the intersection of artificial intelligence and linguistics. Also there is much ambiguity in natural language. There are many words which have several meanings, such as can, bear, fly, orange, and sentences have meanings different in different contexts. This makes creation of programs that understands a natural language, a challenging task [1] [5] [8].

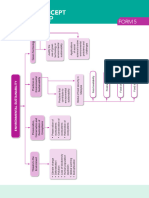

Fig 1: Steps in Natural Language Processing [8] The steps in NLP are [8]: 1. Morphology: Morphology concerns the way words are built up from smaller meaning bearing units. 2. Syntax: Syntax concerns how words are put together to form correct sentences and what structural role each word has. 3. Semantics: Semantics concerns what words mean and how these meanings combine in sentences to form sentence meanings. 4. Pragmatics: Pragmatics concerns how sentences are used in different situations and how use affects the interpretation of the sentence. 5. Discourse: Discourse concerns how the immediately preceding sentences affect the interpretation of the next sentence.

Figure 1 illustrates the steps or stages that followed in Natural Language processing in which surface text that is input is converted into the tokens by using the parsing or Tokenisation phase and then its syntax and semantics should be checked.

2. REGULAR EXPRESSION

Regular expressions (regexps) are one of the most useful tools in computer science. RE is a formal language for specifying the string. Most commonly called the search expression. NLP, as an area of computer science, has greatly benefitted from regexps: they are used in phonology, morphology, text analysis, information extraction, & speech recognition. Regular expressions are placed inside the pair of matching. A regular expression, or RE, describes strings of characters (words or phrases or any arbitrary text). It's a pattern that matches certain strings and doesn't match others. A regular expression is a set of characters that specify a pattern. Regular expressions are

__________________________________________________________________________________________

Volume: 03 Issue: 01 | Jan-2014, Available @ http://www.ijret.org 168

IJRET: International Journal of Research in Engineering and Technology

case-sensitive. Regular Expression performs various functions in SQL: REGEXP_LIKE: Determine whether pattern matches REGEXP_SUBSTR: Determine what string matches the pattern REGEXP_INSTR: Determine where the match occurred in the string REGEXP_REPLACE: Search and replace a pattern How can RE be used in NLP? 1) Validate data fields (e.g., dates, email address, URLs, abbreviations) 2) Filter text (e.g., spam, disallowed web sites) 3) Identify particular strings in a text (e.g., token boundaries) 4) Convert the output of one processing component into the format required for a second component [4].

eISSN: 2319-1163 | pISSN: 2321-7308

accelerate both string expression matching.

matching

and

regular

4. BASIC RE PATTERNS IN NLP [8]

Here is a discussion of regular expression syntax or patterns and its meaning in various contexts.

Table 1 Regular expression meaning [8] Character . ? * Regular-expression meaning Any character, including whitespace or numeric Zero or one of the preceding character Zero or more of the preceding character One or more of the preceding character Negation or complement

3. RELATED WORK

(A.N. Arslan and Dan, 2006) have proposed the constrained sequence alignment process. The regular expression constrained sequence alignment has been introduced for this purpose. An alignment satisfies the constraint if part of it matches a given regular expression in each dimension (i.e. in each sequence aligned). There is a method that rewards the alignments that include a region matching the given regular expression. This method does not always guarantee the satisfaction of the constraint. (M. Shahbaz, P. McMinn and M. Stevenson, 2012) presented a novel approach of finding valid values by collating suitable regular expressions dynamically that validate the format of the string values, such as an email address. The regular expressions are found using web searches that are driven by the identifiers appearing in the program, for example a string parameter called email Address. The identifier names are processed through natural language processing techniques to tailor the web queries. Once a regular expression has been found, a secondary web search is performed for strings matching the regular expression. + ^

In table 1, all the different characters have their own meaning. Table 2 Regular expression String matching [8] RE /woodchucks/ /a/ /Ali says,/ /book/ /!/ String matched interesting links to woodchucks and lemurs Sammy Ali stopped by Nehas My gift please, Ali says, all our pretty books Leave him behind! said Sammy to neha.

Table3 Regular expression matching [8] RE Match

(Xiaofei Wang and Yang Xu, 2013)discussed the Woodchuck or woodchuck deep packet inspection that become a key /[wW]oodchuck/ /[abc]/ a, b, or c component in network intrusion detection systems, /[0123456789]/ Any digit where every packet in the incoming data stream needs to be compared with patterns in an attack In Table 2 and Table 3, there is a discussion on regular database, byte-by-byte, using either string matching expression string matching as woodchucks is matched with the or regular expression matching. Regular expression string interesting links to woodchucks and lemurs. matching, despite its flexibility and efficiency in Table 4 Regular expression Description [8] attack identification, brings significantly high computation and storage complexities to NIDSes, RE Description making line-rate packet processing a challenging /a*/ Zero or more as task. In this paper, authors present stride finite /a+/ One or more as automata (StriFA), a novel finite automata family, to __________________________________________________________________________________________

Volume: 03 Issue: 01 | Jan-2014, Available @ http://www.ijret.org 169

IJRET: International Journal of Research in Engineering and Technology

eISSN: 2319-1163 | pISSN: 2321-7308

/a? / /cat|dog/

Zero or one as cat or dog

Finite state automata

In Table 4, there is a description of various regular expressions that are used in natural language processing.

Double circle indicates accept state Table 5 Regular expression kleene operators [8] Pattern Colou?r oo*h! o+h! Description Optional previous char 0 or more of previous char 1 or more of previous char

Regular Expression (baa*) or (baa+!) In this way a regular expression can be generated from a Language by creating finite state automata first as from it RE is generated.

In Table 5 there is a discussion on three kleene operators with its meaning. Regular expression: Kleene *, Kleene +, wildcard Special charater + (aka Kleene +) specifies one or more occurrences of the regular expression that comes right before it. Special character * (aka Kleene *) specifies zero or more occurrences of the regular expression that comes right before it. Special character. (Wildcard) specifies any single character. Regular expression: Anchors Anchors are special characters that anchor a regular expression to specific position in the text they are matched against. The anchors are ^ and $ anchor regular expressions at the beginning and end of the text, respectively [8]. Regular expression: Disjunction Disjunction of characters inside a regular expression is done with the matching square brackets [ ]. All characters inside [ ] are part of the disjunction. Regular expression: Negation [^] in disjunction Carat means negation only when first in [].

6. DIFFERENT APPROACHES OF RE IN NLP [2] [3] [10] 6.1 An Improved Algorithm for the Regular

Expression Constrained Multiple Sequence Alignment Problems [2]

This is the first approach, in which there is an illustration in which two synthetic sequences S1 = TGFPSVGKTKDDA, and S2 =TFSVAKDDDGKSA are aligned in a way to maximize the number of matches (this is the longest common subsequence problem). An optimal alignment with 8 matches is shown in part 3(a).

Fig 2(a): An optimal alignment with 8 matches [2]

5.

GENERATION

OF

RE

FROM

SHEEP

LANGUAGE

In NLP, the concept of regular expression (RE) by using Sheep Language is illustrated as [8]: A sheep can talk to another sheep with this language baaaaa! .In this there is a discussion on how a regular expression can be generated from a SHEEP Language. In the Sheep language: ba!, baa!, baaaaa!

For the regular expression constrained sequence alignment problem in NLP with regular expression, R = (G + A) GK(S + T), where denotes a fixed alphabet over which sequences are defined, the alignments sought change. The alignment in Part 2(a) does not satisfy the regular expression constraint. Part2 (b) shows an alignment with which the constraint is satisfied.

__________________________________________________________________________________________

Volume: 03 Issue: 01 | Jan-2014, Available @ http://www.ijret.org 170

IJRET: International Journal of Research in Engineering and Technology

Fig 2(b): An alignment with the constraint [2] The alignment includes a region (shown with a rectangle drawn in dashed lines in the figure 2b) where the substring GFPSVGKT of S1 is aligned with substring AKDDDGKS of S2, and both substrings match R. In this case, optimal number of matches achievable with the constraint decreases to 4 [2].

eISSN: 2319-1163 | pISSN: 2321-7308

address str. Finally, all characters are converted to lowercase [3]. 2) PoS Tagging: Identifier names often contain words such as articles (a, and, the) and prepositions (to, at etc.) that are not useful when included in web queries. In addition, method names often contain verbs as a prefix to describe the action they are intended to perform. For example, parseEmailAddressStr is supposed to parse an email address. The key information for the input value is contained in the noun email address, rather than the verb parse. The part-of-speech category in the Identifier names can be identified using a NLP tool called Part-of-Speech (PoS) tagger, and thereby removing any non-noun tokens. Thus, an email address str and parse email address str both become email address str [3]. 3) Removal of Non-Words: Identifier names may include nonwords which can reduce the quality of search results. Therefore, names are filtered so that the web query should entirely consist of meaningful words. This is done by removing any word in the processed identifier name that is not a dictionary word. For example, email address str becomes email address, since str is not a dictionary word [3].

6.2 Automated Discovery of Valid Test Strings from the Web using Dynamic Regular Expressions

Collation and Natural Language Processing [3]

In this second approach, there is a combination of Natural Language Processing (NLP) techniques and dynamic regular expressions collation for finding valid String values on the Internet. The rest of the section provides details for each part of the approach [3].

6.2.3 Obtaining Regular Expressions [3]

The regular expressions for the identifiers are obtained dynamically from two ways: 1) RegExLib Search, and 2) Web Search. RegExLib: RegExLib [9] is an online regular expression library that is currently indexing around 3300 expressions for different types (e.g., email, URL, postcode) and scientific notations. It provides an interface to search for a regular expression. Web Search: When RegExLib is unable to produce regular expressions, the approach performs a simple web search. The search query is formulated by prefixing the processed identifier names with the string regular expression. For example, the regular expressions for email address are searched by applying the query regular expression email address. The regular expressions are collected by identifying any string that starts with ^ and ends with $ symbols.

Fig 3: Overview of the approach [3]

6.2.1 Extracting Identifiers

The key idea behind the approach is to extract important information from program identifiers and use them to generate web queries. An identifier is a name that identifies either a unique object or a unique class of objects. An identifier may be a word, number, letter, symbol, or any combination of those. For example, an identifier name including the string email is a strong indicator that its value is expected to be an email address. A web query containing email can be used to retrieve example email addresses from the Internet [3].

6.2.2 Processing Identifier Names

Once the identifiers are extracted, their names are processed using the following NLP techniques. 1) Tokenisation: Identifier names are often formed from concatenations of words and need to be split into separate words (or tokens) before they can be used in web queries. Identifiers are split into tokens by replacing underscores with whitespace and adding a whitespace before each sequence of upper case letters. For example, an_Email_Address_Str becomes an email address str and parseEmailAddressStr becomes parse email

6.2.4 Generating Web Queries

Once the regular expressions are obtained, valid values can be generated automatically, e.g., using automaton. However, the objective here is to generate not only valid values but also realistic and meaningful values. Therefore, a secondary web search is performed to identify values on the Internet matching the regular expressions. This section explains the generation of web queries for the secondary search to identify valid values.

__________________________________________________________________________________________

Volume: 03 Issue: 01 | Jan-2014, Available @ http://www.ijret.org 171

IJRET: International Journal of Research in Engineering and Technology

The web queries include different versions of pluralized and quoting styles explained in the following.

eISSN: 2319-1163 | pISSN: 2321-7308

6.2.4.1 Pluralization

The approach generates pluralized versions of the processed identifier names by pluralizing the last word according to the grammar rules. For example, email address becomes email addresses.

Suppose here two patterns are to be matched, reference (P1) and replacement (P2. The matching process is performed by sending the input stream to the automaton byte by byte. If the DFA reaches any of its accept states (the states with double circles), say that a match is found.

6.2.4.2 Quoting

The approach generates queries with or without quotes. The former style enforces the search engine to target web pages that contain all words in the query as a complete phrase. The latter style is a general search to target web pages that contain the words in the query. In total, 4 queries are generated for each identifier name that represents all combinations of pluralisation and quoting styles. For a processed identifier name email address, the generated web queries are: email address, email addresses; email address, email addresses.

Fig 5: Tag e and a sliding window used to convert an input stream into an SL stream with tag e. [10]

6.2.4.3 Identifying Values

Finally, the regular expressions and the downloaded web pages are used to identify valid values.

6.3 StriDFA for Multistring Matching [10]

In this method, multistring matching is done and is one of the better approaches for matching pattern among the above two approaches but still contain limitations. Deterministic finite automaton (DFA) and nondeterministic finite automaton (NFA) are two typical finite automata used to implement regex matching. DFA is fast and has deterministic matching performance, but suffers from the memory explosion problem. NFA, on the other hand, requires less memory, but suffers from slow and nondeterministic matching performance. Therefore, neither of them is suitable for implementing high speed regex matching in environments where the fast memory is limited.

Fig 6: Sample StriDFA of patterns reference and replacement with character e set as the tag (some transitions are partly ignored) [10] The construction of the StriDFA in this example is very simple. Here first need to convert the patterns to SL streams. As shown in Figure 6, the SL of patterns P1 and P2 are Fe (P1) =2 2 3 and Fe (P2) = 5 2, respectively. Then the classical DFA construction algorithm is constructed for StriDFA. It is easy to see that the number of states to be visited during the processing is equal to the length of the input stream (in units of bytes), and this number determines the time required for finishing the matching process (each state visit requires a memory access, which is a major bottleneck in todays computer systems). This scheme is designed to reduce the number of states to be visited during the matching process. If this objective is achieved, the number of memory accesses required for detecting a match can be reduced, and consequently, the pattern matching speed can be improved. One way to achieve this objective is to reduce the number of characters sent to the DFA. For example, if select e as the tag and consider the input stream referenceabcdreplacement, as shown in Figure 5 , then the corresponding stride length (SL) stream is Fe(S) = 2 2 3 6 5 2, where Fe(S) denotes the SL stream of the input stream S, e denotes the tag character in

Fig 4: Traditional DFA for patterns reference and replacement (some transitions are partly ignored for simplicity) [10]

__________________________________________________________________________________________

Volume: 03 Issue: 01 | Jan-2014, Available @ http://www.ijret.org 172

IJRET: International Journal of Research in Engineering and Technology

use. The underscore is used to indicate an SL, to distinguish it as not being a character. As shown in Figure 6, the SL of the input stream is fed into the StriDFA and compared with the SL streams extracted from the rule set.

eISSN: 2319-1163 | pISSN: 2321-7308

speed with a relatively low memory usage. But still third approach is not working for the large alphabet sets.

8. APPLICATIONS OF RE IN NLP [8]

1. 2. 3. 4. Web search Word processing, find, substitute (MS-WORD) Validate fields in a database (dates, email address, URLs) Information extraction (e.g. people & company names)

7. SUMMARY OF APPROACHES

Table 6 Regular expression meaning [8] APPROACH An improved algorithm for the regular expression constrained multiple sequence alignment problems Automated Discovery of Valid Test Strings from the Web using Dynamic Regular Expressions Collation and Natural Language Processing StriDFA for Multistring Matching DESCRIPTION In this approach, a particular RE constraint is followed. PROBLEM Follows constraints for string matching and used in limited areas and the results produced are arbitrary. Multiple strings cant be processed or matched at the same time.

CONCLUSIONS & FUTURE WORK

A regular expression, or RE, describes strings of characters (words or phrases or any arbitrary text). An attempt has been made to present the theory of regular expression in natural language processing in a unified way, combining the results of several authors and showing the different approaches for regular expression i.e. first is a expression constrained multiple sequence alignment problem and second is combination of Natural Language Processing (NLP) techniques and dynamic regular expressions collation for finding valid String values on the Internet and third is Multistring matching regex. All these approaches have their own importance and at last practical applications are discussed. Future work is to generate advanced algorithms for obtaining and filtering regular expressions that shall be investigated to improve the precision of valid values.

In this approach, gives valid values and another benefit is that the generated values are also realistic rather than arbitrary.

REFERENCES

[1]. K.R. Chowdhary, Introduction to Parsing - Natural Language Processing http://krchowdhary.com/me-nlp/nlp01.pdf, April, 2012. [2]. A .N. Arslan and Dan He, An improved algorithm for the regular expression constrained multiple sequence alignment problem, Proc.: Sixth IEEE Symposium on BionInformatics and Bio Engineering (BIBE'06), 2006 [3]. M. Shahbaz, P. McMinn and M. Stevenson, "Automated Discovery of Valid Test Strings from the Web using Dynamic Regular Expressions Collation and Natural Language Processing". Proceedings of the International Conference on Quality Software (QSIC 2012), IEEE, pp. 7988. [4]. Sai Qian, Applications for NLP -Lecture 6: Regular Expression http://talc.loria.fr/IMG/pdf/Lecture_6_Regular_Expression5.pdf , October, 2011 [5]. P. M Nadkarni, L.O. Machado, W. W. Chapman http://www.ncbi.nlm.nih.gov/pmc/articles/PMC3168328/ [6]. H. A. Muhtaseb, Lecture 3: REGULAR EXPRESSIONS AND AUTOMATA [7]. A.McCallum, U. Amherst, Introduction to Natural Language Processing-Lecture 2 CMPSCI 585, 2007 [8]. D. Jurafsky and J.H. Martin, Speech and Language Processing Prentice Hall, 2 edition, 2008.

In this approach, multistring matching is performed. The main scheme is to reduce the number of states during the matching process.

Didnt processed the larger alphabet set.

In Table VI, the approaches are different and have its own importance. As the first approach follows some constraints for RE and used in case where a particular RE pattern is given and second and the third approach is quite different from the first one as there is no such concept of constraints. As second approach is used to extract and match the patterns from the web queries and these queries can be of any pattern. We suggest second approach which is better than the first and third approach as it generally gives valid values and another benefit is that the generated values are also realistic rather than arbitrary. This is because the values are obtained from the Internet which is a rich source of human-recognizable data. The third approach is used to achieve an ultrahigh matching

__________________________________________________________________________________________

Volume: 03 Issue: 01 | Jan-2014, Available @ http://www.ijret.org 173

IJRET: International Journal of Research in Engineering and Technology

[9]. RegExLib: Regular Expression Library. http://regexlib.com/. [10]. Xiaofei Wang and Yang Xu, StriFA: Stride Finite Automata for High-Speed Regular Expression Matching in Network Intrusion Detection Systems IEEE Systems Journal, Vol. 7, No. 3, September 2013.

eISSN: 2319-1163 | pISSN: 2321-7308

__________________________________________________________________________________________

Volume: 03 Issue: 01 | Jan-2014, Available @ http://www.ijret.org 174

You might also like

- CP5191 Machine Learning Techniques L T P C3 0 0 3Document7 pagesCP5191 Machine Learning Techniques L T P C3 0 0 3indumathythanik933No ratings yet

- Department of Information TechnoloDocument116 pagesDepartment of Information Technolodee pNo ratings yet

- Langauage ModelDocument148 pagesLangauage ModelsharadacgNo ratings yet

- DSECL ZG 522: Big Data Systems: Session 2: Parallel and Distributed SystemsDocument58 pagesDSECL ZG 522: Big Data Systems: Session 2: Parallel and Distributed SystemsSwati BhagavatulaNo ratings yet

- System Models For Distributed and Cloud ComputingDocument15 pagesSystem Models For Distributed and Cloud ComputingSubrahmanyam SudiNo ratings yet

- Mpi Openmp ExamplesDocument27 pagesMpi Openmp ExamplesDeepak K NambiarNo ratings yet

- (A) What Is Traditional Model of NLP?: Unit - 1Document18 pages(A) What Is Traditional Model of NLP?: Unit - 1Sonu KumarNo ratings yet

- Solutions To NLP I Mid Set ADocument8 pagesSolutions To NLP I Mid Set Ajyothibellaryv100% (1)

- HEMWATI NANDAN BAHUGUNA GARHWAL UNIVERSITY PRACTICAL FILE FOR NATURAL LANGUAGE PROCESSINGDocument100 pagesHEMWATI NANDAN BAHUGUNA GARHWAL UNIVERSITY PRACTICAL FILE FOR NATURAL LANGUAGE PROCESSINGBhawini RajNo ratings yet

- Routinemap Patterns of Life in Spatiotemporal VisualizationDocument10 pagesRoutinemap Patterns of Life in Spatiotemporal Visualizationapi-305338429No ratings yet

- Modern NLP in PythonDocument46 pagesModern NLP in PythonRahul JainNo ratings yet

- F5C10 Concept MapDocument1 pageF5C10 Concept MapLeena bsb.No ratings yet

- (2007) - The Aesthetics of Graph VisualizationDocument8 pages(2007) - The Aesthetics of Graph VisualizationGJ SavarisNo ratings yet

- NLP QBDocument14 pagesNLP QBIshika Patel100% (1)

- MC9223-Design and Analysis of Algorithm Unit-I - IntroductionDocument35 pagesMC9223-Design and Analysis of Algorithm Unit-I - Introductionmonicadoss85No ratings yet

- NLP Iat QBDocument10 pagesNLP Iat QBABIRAMI SREERENGANATHANNo ratings yet

- Unit 4 NLPDocument51 pagesUnit 4 NLPKumar SumitNo ratings yet

- Natural Language ProcessingDocument31 pagesNatural Language Processingysf1991No ratings yet

- Is Zc415 (Data Mining BITS-WILP)Document4 pagesIs Zc415 (Data Mining BITS-WILP)Anonymous Lz6f4C6KFNo ratings yet

- Install OpenMPI in LinuxDocument5 pagesInstall OpenMPI in LinuxDummyofindiaIndiaNo ratings yet

- Assignment Coa Wase Wims2019Document8 pagesAssignment Coa Wase Wims2019RAHUL KUMAR RNo ratings yet

- Com713 Advanced Data Structures and AlgorithmsDocument13 pagesCom713 Advanced Data Structures and AlgorithmsThilinaAbhayarathneNo ratings yet

- Table of ContentDocument13 pagesTable of Contentrekha_rckstarNo ratings yet

- An Ontology-Based NLP Approach To Semantic Annotation of Annual ReportDocument4 pagesAn Ontology-Based NLP Approach To Semantic Annotation of Annual ReportRajesh KumarNo ratings yet

- NLTK: The Natural Language Toolkit: Steven Bird Edward LoperDocument4 pagesNLTK: The Natural Language Toolkit: Steven Bird Edward LoperYash GautamNo ratings yet

- Natural Language Processing: Dr. Tulasi Prasad Sariki SCOPE, VIT ChennaiDocument29 pagesNatural Language Processing: Dr. Tulasi Prasad Sariki SCOPE, VIT Chennainaruto sasukeNo ratings yet

- Ch11 3 TriesDocument11 pagesCh11 3 TriesAatika FatimaNo ratings yet

- NLP Assignment Anand1Document5 pagesNLP Assignment Anand1namanNo ratings yet

- Speech Recognition ArchitectureDocument13 pagesSpeech Recognition ArchitectureDhrumil DasNo ratings yet

- Data Science NewDocument9 pagesData Science NewKrishna mENo ratings yet

- Natural Language ProcessingDocument24 pagesNatural Language ProcessingsadiaNo ratings yet

- MPI Library Code ExamplesDocument8 pagesMPI Library Code ExamplesŞaban ŞekerNo ratings yet

- Lecture NLPDocument38 pagesLecture NLPharpritsingh100% (1)

- AI UncertaintyDocument38 pagesAI UncertaintySirishaNo ratings yet

- Natural Language Processing NotesDocument26 pagesNatural Language Processing NotescpasdNo ratings yet

- NLP ReportDocument23 pagesNLP ReportAnonymous TVE0T8rNo ratings yet

- Irt 2 Marks With AnswerDocument15 pagesIrt 2 Marks With AnswerAmaya EmaNo ratings yet

- Drug Specification Named Entity Recognition Base On BiLSTM-CRF Model PDFDocument5 pagesDrug Specification Named Entity Recognition Base On BiLSTM-CRF Model PDFEduardo LacerdaNo ratings yet

- Semantic Information RetrievalDocument168 pagesSemantic Information RetrievalHabHabNo ratings yet

- What is NLPDocument30 pagesWhat is NLPFerooz KhanNo ratings yet

- Word Semantics, Sentence Semantics and Utterance SemanticsDocument11 pagesWord Semantics, Sentence Semantics and Utterance SemanticsАннаNo ratings yet

- AI NLP guide covers processing textDocument16 pagesAI NLP guide covers processing textdemo dataNo ratings yet

- Query Processing & Optimization in 40 CharactersDocument15 pagesQuery Processing & Optimization in 40 Charactersmusikmania0% (1)

- A Hands-On Guide To Text Classification With Transformer Models (XLNet, BERT, XLM, RoBERTa)Document9 pagesA Hands-On Guide To Text Classification With Transformer Models (XLNet, BERT, XLM, RoBERTa)sita deviNo ratings yet

- Topic Modelling Using NLPDocument18 pagesTopic Modelling Using NLPbhaktaballav garaiNo ratings yet

- NLP PresentationDocument19 pagesNLP PresentationMUHAMMAD NOFIL BHATTYNo ratings yet

- B - N - M - Institute of Technology: Department of Computer Science & EngineeringDocument44 pagesB - N - M - Institute of Technology: Department of Computer Science & Engineering1DT18IS029 DILIPNo ratings yet

- 64 Natural Language Processing Interview Questions and Answers-18 Juli 2019Document30 pages64 Natural Language Processing Interview Questions and Answers-18 Juli 2019Ama AprilNo ratings yet

- 10 Natural Language ProcessingDocument27 pages10 Natural Language Processingrishad93No ratings yet

- ITR NotesDocument165 pagesITR NotesSuvetha SenguttuvanNo ratings yet

- IR - ModelsDocument58 pagesIR - ModelsMourad100% (3)

- Lecture 1Document81 pagesLecture 1adrian2rotiNo ratings yet

- Introduction to Machine Learning in 40 CharactersDocument59 pagesIntroduction to Machine Learning in 40 CharactersJAYACHANDRAN J 20PHD0157No ratings yet

- Cse I SEM Q.BANK 2016-17 PDFDocument127 pagesCse I SEM Q.BANK 2016-17 PDFAnitha McNo ratings yet

- Building Computational Models of Natural LanguageDocument79 pagesBuilding Computational Models of Natural LanguageRajGuptaNo ratings yet

- NLP UNIT 2 (Ques Ans Bank)Document26 pagesNLP UNIT 2 (Ques Ans Bank)Zainab BelalNo ratings yet

- Mining Association Rules in Large DatabasesDocument40 pagesMining Association Rules in Large Databasessigma70egNo ratings yet

- Introduction to Machine Learning in the Cloud with Python: Concepts and PracticesFrom EverandIntroduction to Machine Learning in the Cloud with Python: Concepts and PracticesNo ratings yet

- Wind Damage To Trees in The Gitam University Campus at Visakhapatnam by Cyclone HudhudDocument11 pagesWind Damage To Trees in The Gitam University Campus at Visakhapatnam by Cyclone HudhudInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Flood Related Disasters Concerned To Urban Flooding in Bangalore, IndiaDocument8 pagesFlood Related Disasters Concerned To Urban Flooding in Bangalore, IndiaInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Wind Damage To Buildings, Infrastrucuture and Landscape Elements Along The Beach Road at VisakhapatnamDocument10 pagesWind Damage To Buildings, Infrastrucuture and Landscape Elements Along The Beach Road at VisakhapatnamInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Likely Impacts of Hudhud On The Environment of VisakhapatnamDocument3 pagesLikely Impacts of Hudhud On The Environment of VisakhapatnamInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Effect of Lintel and Lintel Band On The Global Performance of Reinforced Concrete Masonry In-Filled FramesDocument9 pagesEffect of Lintel and Lintel Band On The Global Performance of Reinforced Concrete Masonry In-Filled FramesInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Groundwater Investigation Using Geophysical Methods - A Case Study of Pydibhimavaram Industrial AreaDocument5 pagesGroundwater Investigation Using Geophysical Methods - A Case Study of Pydibhimavaram Industrial AreaInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Enhancing Post Disaster Recovery by Optimal Infrastructure Capacity BuildingDocument8 pagesEnhancing Post Disaster Recovery by Optimal Infrastructure Capacity BuildingInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Impact of Flood Disaster in A Drought Prone Area - Case Study of Alampur Village of Mahabub Nagar DistrictDocument5 pagesImpact of Flood Disaster in A Drought Prone Area - Case Study of Alampur Village of Mahabub Nagar DistrictInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Shear Strength of RC Deep Beam Panels - A ReviewDocument15 pagesShear Strength of RC Deep Beam Panels - A ReviewInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Low Cost Wireless Sensor Networks and Smartphone Applications For Disaster Management and Improving Quality of LifeDocument5 pagesLow Cost Wireless Sensor Networks and Smartphone Applications For Disaster Management and Improving Quality of LifeInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Role of Voluntary Teams of Professional Engineers in Dissater Management - Experiences From Gujarat EarthquakeDocument6 pagesRole of Voluntary Teams of Professional Engineers in Dissater Management - Experiences From Gujarat EarthquakeInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Review Study On Performance of Seismically Tested Repaired Shear WallsDocument7 pagesReview Study On Performance of Seismically Tested Repaired Shear WallsInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Monitoring and Assessment of Air Quality With Reference To Dust Particles (Pm10 and Pm2.5) in Urban EnvironmentDocument3 pagesMonitoring and Assessment of Air Quality With Reference To Dust Particles (Pm10 and Pm2.5) in Urban EnvironmentInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Cyclone Disaster On Housing and Coastal AreaDocument7 pagesCyclone Disaster On Housing and Coastal AreaInternational Journal of Research in Engineering and TechnologyNo ratings yet

- A Geophysical Insight of Earthquake Occurred On 21st May 2014 Off Paradip, Bay of BengalDocument5 pagesA Geophysical Insight of Earthquake Occurred On 21st May 2014 Off Paradip, Bay of BengalInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Detection of Hazard Prone Areas in The Upper Himalayan Region in Gis EnvironmentDocument9 pagesDetection of Hazard Prone Areas in The Upper Himalayan Region in Gis EnvironmentInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Hudhud Cyclone - A Severe Disaster in VisakhapatnamDocument8 pagesHudhud Cyclone - A Severe Disaster in VisakhapatnamInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Assessment of Seismic Susceptibility of RC BuildingsDocument4 pagesAssessment of Seismic Susceptibility of RC BuildingsInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Can Fracture Mechanics Predict Damage Due Disaster of StructuresDocument6 pagesCan Fracture Mechanics Predict Damage Due Disaster of StructuresInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Coastal Zones - Seismic Vulnerability An Analysis From East Coast of IndiaDocument4 pagesCoastal Zones - Seismic Vulnerability An Analysis From East Coast of IndiaInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Comparative Study of The Forces in G+5 and G+10 Multi Storied Buildings Subjected To Different Wind SpeedsDocument10 pagesComparative Study of The Forces in G+5 and G+10 Multi Storied Buildings Subjected To Different Wind SpeedsInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Developing of Decision Support System For Budget Allocation of An R&D OrganizationDocument6 pagesDeveloping of Decision Support System For Budget Allocation of An R&D OrganizationInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Effect of Hudhud Cyclone On The Development of Visakhapatnam As Smart and Green City - A Case StudyDocument4 pagesEffect of Hudhud Cyclone On The Development of Visakhapatnam As Smart and Green City - A Case StudyInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Challenges in Oil and Gas Industry For Major Fire and Gas Leaks - Risk Reduction MethodsDocument4 pagesChallenges in Oil and Gas Industry For Major Fire and Gas Leaks - Risk Reduction MethodsInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Analytical Solutions For Square Shape PressureDocument4 pagesAnalytical Solutions For Square Shape PressureInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Disaster Recovery Sustainable HousingDocument4 pagesDisaster Recovery Sustainable HousingInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Cpw-Fed Uwb Antenna With Wimax Band-Notched CharacteristicsDocument5 pagesCpw-Fed Uwb Antenna With Wimax Band-Notched CharacteristicsInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Brain Tumor Segmentation Using Asymmetry Based Histogram Thresholding and K-Means ClusteringDocument4 pagesBrain Tumor Segmentation Using Asymmetry Based Histogram Thresholding and K-Means ClusteringInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Rate Adaptive Resource Allocation in Ofdma Using Bees AlgorithmDocument5 pagesRate Adaptive Resource Allocation in Ofdma Using Bees AlgorithmInternational Journal of Research in Engineering and TechnologyNo ratings yet

- Engineering Thermodynamics Work and Heat Transfer 4th Edition by GFC Rogers Yon Mayhew 0582045665 PDFDocument5 pagesEngineering Thermodynamics Work and Heat Transfer 4th Edition by GFC Rogers Yon Mayhew 0582045665 PDFFahad HasanNo ratings yet

- Critical Review By:: Shema Sheravie Ivory F. QuebecDocument3 pagesCritical Review By:: Shema Sheravie Ivory F. QuebecShema Sheravie IvoryNo ratings yet

- SQL Server DBA Daily ChecklistDocument4 pagesSQL Server DBA Daily ChecklistLolaca DelocaNo ratings yet

- Signal Sampling, Quantization, Binary Encoding: Oleh Albert SagalaDocument46 pagesSignal Sampling, Quantization, Binary Encoding: Oleh Albert SagalaRamos D HutabalianNo ratings yet

- Blueprint 7 Student Book Teachers GuideDocument128 pagesBlueprint 7 Student Book Teachers GuideYo Rk87% (38)

- Equipment BrochureDocument60 pagesEquipment BrochureAmar BeheraNo ratings yet

- CHRM - A01.QXD - Unknown - 3071 PDFDocument545 pagesCHRM - A01.QXD - Unknown - 3071 PDFbeaudecoupe100% (2)

- Chapter 1&2 Exercise Ce StatisticDocument19 pagesChapter 1&2 Exercise Ce StatisticSky FireNo ratings yet

- AgendaDocument72 pagesAgendaThusitha WickramasingheNo ratings yet

- Project SELF Work Plan and BudgetDocument3 pagesProject SELF Work Plan and BudgetCharede BantilanNo ratings yet

- SEM GuideDocument98 pagesSEM GuideMustaque AliNo ratings yet

- Well Logging 1Document33 pagesWell Logging 1Spica FadliNo ratings yet

- Scheduling BODS Jobs Sequentially and ConditionDocument10 pagesScheduling BODS Jobs Sequentially and ConditionwicvalNo ratings yet

- Lpi - 101-500Document6 pagesLpi - 101-500Jon0% (1)

- Quality Policy Nestle PDFDocument6 pagesQuality Policy Nestle PDFJonathan KacouNo ratings yet

- Chemistry An Introduction To General Organic and Biological Chemistry Timberlake 12th Edition Test BankDocument12 pagesChemistry An Introduction To General Organic and Biological Chemistry Timberlake 12th Edition Test Banklaceydukeqtgxfmjkod100% (46)

- U.S. Copyright Renewals, 1962 January - June by U.S. Copyright OfficeDocument471 pagesU.S. Copyright Renewals, 1962 January - June by U.S. Copyright OfficeGutenberg.orgNo ratings yet

- InapDocument38 pagesInapSourav Jyoti DasNo ratings yet

- CHAPTER II: Review of Related Literature I. Legal ReferencesDocument2 pagesCHAPTER II: Review of Related Literature I. Legal ReferencesChaNo ratings yet

- Read Me Slave 2.1Document7 pagesRead Me Slave 2.1Prasad VylaleNo ratings yet

- Welcome To Word GAN: Write Eloquently, With A Little HelpDocument8 pagesWelcome To Word GAN: Write Eloquently, With A Little HelpAkbar MaulanaNo ratings yet

- District Plan of ActivitiesDocument8 pagesDistrict Plan of ActivitiesBrian Jessen DignosNo ratings yet

- 2nd Perdev TestDocument7 pages2nd Perdev TestBETHUEL P. ALQUIROZ100% (1)

- Herschel 10027757Document83 pagesHerschel 10027757jurebieNo ratings yet

- I-K Bus Codes v6Document41 pagesI-K Bus Codes v6Dobrescu CristianNo ratings yet

- Lesson Exemplar On Contextualizing Science Lesson Across The Curriculum in Culture-Based Teaching Lubang Elementary School Science 6Document3 pagesLesson Exemplar On Contextualizing Science Lesson Across The Curriculum in Culture-Based Teaching Lubang Elementary School Science 6Leslie SolayaoNo ratings yet

- Propagating Trees and Fruit Trees: Sonny V. Matias TLE - EA - TeacherDocument20 pagesPropagating Trees and Fruit Trees: Sonny V. Matias TLE - EA - TeacherSonny MatiasNo ratings yet

- FeminismDocument8 pagesFeminismismailjuttNo ratings yet

- USAF Electronic Warfare (1945-5)Document295 pagesUSAF Electronic Warfare (1945-5)CAP History LibraryNo ratings yet

- Afm PPT 2.1Document33 pagesAfm PPT 2.1Avi malavNo ratings yet