Artificial Neural Networks

Outline

Introduction to Neural Networks

Introduction to Artificial Neural Networks

Properties of Artificial Neural Networks

Applications of Artificial Neural Networks

Demo: Neural Network Toolbox

Case-1: Designing an XOR network

Case-2: Power system security assessment

CIARE-2012, IIT Mandi

What are Neural Networks?

Models of the brain and nervous system

Highly parallel

Process information much more like the brain than a serial computer

Learning

Very simple principles

Very complex behaviours

BIOLOGICAL NEURAL NETWORK

Figure 1. Structure of a biological neuron

The Structure of Neurons

A neuron has a cell body, a branching input structure (the dendrite), and a branching output structure (the axon).

Axons connect to dendrites via synapses.

Electro-chemical signals are propagated from the dendritic input, through the cell body, and down the axon to other neurons.

A neuron fires only if its input signal exceeds a certain amount (the threshold) within a short time period.

Synapses vary in strength:

Good connections allow a large signal

Slight connections allow only a weak signal

Synapses can be either excitatory or inhibitory.
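A minimal sketch of the threshold behaviour described above, written in Python for illustration (the slides themselves use MATLAB). The function name `fires`, the weights, and the threshold are hypothetical. Positive weights stand in for excitatory synapses and negative weights for inhibitory ones. The timing aspect ("within a short time period") is ignored.

```python
def fires(inputs, weights, threshold):
    """Return True if the weighted sum of the inputs exceeds the threshold.

    Positive weights model excitatory synapses, negative weights inhibitory
    ones; a larger magnitude models a stronger ("good") connection.
    """
    total = sum(w * x for w, x in zip(weights, inputs))
    return total > threshold


# Hypothetical neuron: strong excitatory synapse (+1.0), inhibitory synapse (-0.8).
weights = [1.0, -0.8]
threshold = 0.5
print(fires([1, 0], weights, threshold))  # excitatory input alone: True
print(fires([1, 1], weights, threshold))  # inhibition pulls the sum below 0.5: False
```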

The Artificial Neural Network

Figure 2. Structure of an artificial neuron

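Mathematically, the output of the neuron is given as

$$Y = F(S) = F\left(\sum_{K=1}^{N} W_K X_K + b\right)$$

where $X_K$ ($K = 1, \dots, N$) are the inputs, $W_K$ the corresponding weights, $b$ the bias, $S$ the net input, and $F$ the transfer function.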
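The output expression Y = F(S), with S = W_1 X_1 + ... + W_N X_N + b, can be evaluated directly. This sketch assumes F is the tan-sigmoid (tansig), which equals tanh. The input, weight, and bias values are hypothetical.

```python
import math


def tansig(n):
    """Tan-sigmoid transfer function, 2/(1 + exp(-2n)) - 1, which equals tanh(n)."""
    return math.tanh(n)


def neuron_output(x, w, b, f=tansig):
    """Y = F(S) with S = sum over K of W_K * X_K, plus the bias b."""
    s = sum(wk * xk for wk, xk in zip(w, x)) + b
    return f(s)


# Hypothetical neuron with N = 3 inputs.
x = [0.5, -1.0, 2.0]
w = [0.4, 0.3, -0.1]
b = 0.2
print(neuron_output(x, w, b))   # S = 0.2 - 0.3 - 0.2 + 0.2 = -0.1, so Y = tanh(-0.1)
```

Using `math.tanh` instead of the exponential form gives the same values but avoids overflow for large negative net inputs.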

ANNs: The basics

ANNs incorporate the two fundamental components of biological neural nets:

1. Neurones (nodes)

2. Synapses (weights)

Properties of Artificial Neural Nets (ANNs)

Many simple neuron-like threshold switching units

Many weighted interconnections among units

Highly parallel, distributed processing

Learning by tuning the connection weights
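Learning by tuning the connection weights can be illustrated with the classic perceptron learning rule on a single threshold unit. This is a sketch under stated assumptions: the weights start at zero, the rule w ← w + e·p, b ← b + e (with e = t − a) is applied case by case, and logical AND serves as a hypothetical training set.

```python
def hardlim(n):
    """Threshold (hard-limit) transfer function: 1 if n >= 0, else 0."""
    return 1 if n >= 0 else 0


def predict(w, b, p):
    """Output of a single threshold unit for input vector p."""
    return hardlim(sum(wi * xi for wi, xi in zip(w, p)) + b)


def train_perceptron(samples, targets, max_epochs=100):
    """Perceptron rule, applied case by case: e = t - a, w += e*p, b += e."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(max_epochs):
        errors = 0
        for p, t in zip(samples, targets):
            e = t - predict(w, b, p)
            if e != 0:
                errors += 1
                w = [wi + e * xi for wi, xi in zip(w, p)]
                b += e
        if errors == 0:   # a full epoch without mistakes: weights are tuned
            break
    return w, b


# Hypothetical training set: logical AND (linearly separable).
P = [(0, 0), (0, 1), (1, 0), (1, 1)]
T = [0, 0, 0, 1]
w, b = train_perceptron(P, T)
print(w, b, [predict(w, b, p) for p in P])
```

Run on the XOR targets [0, 1, 1, 0], the same function never completes an epoch without errors, because no single straight line separates the XOR classes. This is why Case-1 needs a hidden layer.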

Appropriate Problem Domains for Neural Network Learning

Input is high-dimensional, discrete or real-valued (e.g. raw sensor input)

Output is discrete or real-valued

Output is a vector of values

The form of the target function is unknown

Humans do not need to interpret the results (black box model)

Applications

Ability to model linear and non-linear systems without the need to make implicit assumptions.

Applied in almost every field of science and engineering. A few of these applications are:

Function approximation, or regression analysis, including time-series modelling.

Classification, including pattern and sequence recognition, novelty detection, and sequential decision making.

Data processing, including filtering, clustering, blind signal separation, and compression.

Computational neuroscience and neurohydrodynamics

Forecasting and prediction

Estimation and control

Applications in Electrical Engineering

Load forecasting

Short-term load forecasting

Mid-term load forecasting

Long-term load forecasting

Fault diagnosis / fault location

Economic dispatch

Security assessment

Estimation of solar radiation, solar heating, etc.

Wind speed prediction

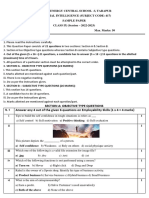

Designing ANN models follows

a number of systemic

procedures. In general, there

are five basics steps:

(1) collecting data,

(2) preprocessing data

(3) building the network

(4) train, and

(5) test performance of model

as shown in Fig.

Designing ANN models

Fig. 3. Basic flow for designing

artificial neural network model

Neural Network Problems

Many parameters to be set

Overfitting

Long training times

...

INTRODUCTION TO NN TOOLBOX

The Neural Network Toolbox is one of the most commonly used, powerful, commercially available software tools for the development and design of neural networks. The software is user-friendly and flexible, and it can be conveniently interfaced with other toolboxes in the same environment to develop a full application.

Features

It supports a wide variety of feed-forward and recurrent networks, including perceptrons, radial basis networks, backpropagation (BP) networks, learning vector quantization (LVQ) networks, self-organizing networks, Hopfield and Elman networks, etc.

It also supports the following transfer (activation) functions: bidirectional linear with hard limits (satlins) and without hard limits (purelin), threshold (hard limit, hardlim), signum (symmetric hard limit, hardlims), sigmoidal (log-sigmoid, logsig), and hyperbolic tangent (tan-sigmoid, tansig).

In addition, it supports unidirectional linear functions with hard limits (satlin) and without an upper limit (poslin), radial basis (radbas) and triangular basis (tribas) functions, and competitive (compet) and softmax functions. A wide variety of training and learning algorithms is also supported.
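For reference, the sketch below gives scalar versions of the transfer functions listed above. The function names mirror the MATLAB toolbox names, but the bodies are written from the standard mathematical definitions and are not taken from the toolbox. `compet` and `softmax` act on a whole layer's net inputs, so they take a list.

```python
import math


def hardlim(n):   # threshold (hard limit): 0 or 1
    return 1.0 if n >= 0 else 0.0


def hardlims(n):  # signum (symmetric hard limit): -1 or 1
    return 1.0 if n >= 0 else -1.0


def purelin(n):   # linear, no hard limits
    return n


def poslin(n):    # unidirectional linear, no upper limit
    return max(0.0, n)


def satlin(n):    # unidirectional linear, hard limits at 0 and 1
    return min(max(n, 0.0), 1.0)


def satlins(n):   # bidirectional linear, hard limits at -1 and 1
    return min(max(n, -1.0), 1.0)


def logsig(n):    # log-sigmoid, output in (0, 1); split in two to avoid overflow
    if n >= 0:
        return 1.0 / (1.0 + math.exp(-n))
    z = math.exp(n)
    return z / (1.0 + z)


def tansig(n):    # tan-sigmoid, 2/(1+exp(-2n)) - 1, i.e. tanh(n)
    return math.tanh(n)


def radbas(n):    # radial basis, exp(-n^2)
    return math.exp(-n * n)


def tribas(n):    # triangular basis, 1 - |n| inside [-1, 1], else 0
    return max(0.0, 1.0 - abs(n))


def compet(ns):   # competitive: 1 for the largest net input, 0 elsewhere
    winner = max(range(len(ns)), key=lambda i: ns[i])
    return [1 if i == winner else 0 for i in range(len(ns))]


def softmax(ns):  # soft max: exponentials normalised to sum to 1
    m = max(ns)   # subtract the maximum for numerical stability
    exps = [math.exp(n - m) for n in ns]
    total = sum(exps)
    return [e / total for e in exps]
```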

Case-1: Problem Definition

The XOR problem requires one hidden layer and one output layer, since it is NOT linearly separable.
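To see why one hidden layer is enough, here is a 2-2-1 tansig network for XOR with hand-chosen weights. These are hypothetical values, not the weights the toolbox finds by training. One hidden neuron behaves like OR, the other like AND, and the output neuron responds to "OR but not AND". P and T use the same one-sample-per-column layout as in the toolbox.

```python
import math

# XOR data, one sample per column (same layout as P and T in the toolbox).
P = [[0, 0, 1, 1],
     [0, 1, 0, 1]]
T = [0, 1, 1, 0]

# Hand-chosen weights (hypothetical, not produced by training).
W1 = [[10.0, 10.0],   # hidden neuron 1: close to +1 if either input is 1 (OR)
      [10.0, 10.0]]   # hidden neuron 2: close to +1 only if both are 1 (AND)
b1 = [-5.0, -15.0]
W2 = [5.0, -5.0]      # output: large when OR is on and AND is off
b2 = 0.0


def forward(x):
    """Layer 1 and layer 2 both use tansig (tanh), as chosen for XORNet."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    return math.tanh(sum(w * hi for w, hi in zip(W2, h)) + b2)


# Simulate every column of P, then the single test input S = [1; 0].
Y = [forward([P[0][j], P[1][j]]) for j in range(len(T))]
y_s = forward([1, 0])
print([round(y, 3) for y in Y], round(y_s, 3))
```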

Design Phase

NN Toolbox

The NN Toolbox can be opened by entering the command

>>nntool

It can also be opened as shown in the screenshot. Either way, the NN Network/Data Manager screen opens.

Getting Started

NN Network/Data Manager

Design

Let P denote the input and T denote the target (desired output).

In MATLAB, following the toolbox conventions, these are expressed as matrices with one column per sample:

P = [0 0 1 1; 0 1 0 1]

T = [0 1 1 0]

To use a network, first design it, then train it before starting the simulation.

The following steps do this:

Provide input and target data

Step-1: First, enter P and T into the NN Network/Data Manager. This is done by clicking New Data.

Step-2: Type P as the Name and the corresponding matrix as the Value, select Inputs under Data Type, then confirm by clicking Create.

Step-3: Similarly, type T as the Name and the corresponding matrix as the Value, select Targets under Data Type, then confirm.

The resulting screens are shown in the following figures.

Providing input

Providing target data

Create Network

Step-4: Now create the network, named XORNet. For this, click New Network. A screen like the one in the following figure appears.

Change all the parameters on this screen to the values indicated on the following screen:

Defining XORNet network

Setting network parameters

Make sure the parameters are as follows:

Network Type = Feed-forward backprop

Training Function = TRAINLM

Adaption Learning Function = LEARNGDM

Performance Function = MSE

Number of Layers = 2

Define network size

Step-5: Select Layer 1, type 2 for the number of neurons, and select TANSIG as the transfer function.

Select Layer 2, type 1 for the number of neurons, and select TANSIG as the transfer function.

Step-6: Confirm by clicking the Create button. This concludes the XOR network implementation phase.

Step-7: Now select XORNet by double-clicking it, then click the Train button.

The screen shown in the following figure appears.

Training network

Defining training parameters

Step-8: On Training Info, select P as Inputs and T as Targets.

On Training Parameters, specify:

epochs = 1000

goal = 0.000000000000001 (that is, 1e-15)

max_fail = 50

After confirming that all the parameters have been specified as intended, click Train Network.
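The three training parameters set above act as stopping criteria. The sketch below shows the idea in simplified form. The function names are hypothetical, and the toolbox's training functions check additional conditions (for example a minimum gradient) that are not modelled here. A "validation failure" is counted here as an epoch in which the validation error does not improve on the best value seen so far, which is an assumption of this sketch.

```python
def count_val_fails(val_errors):
    """Consecutive epochs, at the end of the history, without a new best validation error."""
    best = float("inf")
    fails = 0
    for err in val_errors:
        if err < best:
            best, fails = err, 0
        else:
            fails += 1
    return fails


def stop_reason(epoch, perf, val_fails, epochs=1000, goal=1e-15, max_fail=50):
    """Return the reason to stop training, or None to continue.

    epoch     -- epochs completed so far
    perf      -- current training performance (here: MSE)
    val_fails -- value returned by count_val_fails
    """
    if perf <= goal:
        return "performance goal met"
    if val_fails >= max_fail:
        return "validation stop"
    if epoch >= epochs:
        return "maximum epoch reached"
    return None


# Hypothetical validation history: improves twice, then fails to improve three times.
history = [0.5, 0.4, 0.45, 0.41, 0.42]
print(stop_reason(len(history), 0.2, count_val_fails(history)))  # None: keep training
```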

Training process

Various Plots

The following plots can now be obtained:

Performance plot

It should show a decaying curve, since training minimizes the error.

Training state plot

Regression plot

Performance plot

plotperform(TR) plots the training, validation, and test performances given the training record TR returned by the function train.

Training state plot

plottrainstate(TR) plots the training state from a training record TR returned by train.

Regression plot

plotregression(targets, outputs) plots the linear regression of targets relative to outputs.

View weights and bias

Step-9: To confirm the XORNet structure and the values of the weights and biases of the trained network, click View in the Network/Data Manager window.

NOTE: If for any reason you do not get the expected figure, click Delete and recreate XORNet as described above.

The XORNet has now been trained successfully and is ready for simulation.

XORNet Structure

Network simulation

With a trained network, simulation is a way of testing the network to see whether it meets our expectations.

Step-10: Create new test data S (the matrix [1; 0], representing one pair of inputs) in the NN Network/Data Manager, following the same procedure as for the input P.

Step-11: Highlight XORNet again with a single click, then click the Simulate button in the Network/Data Manager. Select S as the Inputs, type XORNet_outputsSim as the Outputs, then click the Simulate Network button. Check the result, XORNet_outputsSim, in the NN Network/Data Manager by clicking View.

This concludes the whole process of XOR network design, training, and simulation.

Simulated result

Case-2: Problem Definition

Power system security assessment determines the safety status of a power system in three steps: system monitoring, contingency analysis, and security control.

Load flow equations are required to identify the power flows and voltage levels throughout the transmission system.

Contingencies can be single-element outages (N-1), multiple-element outages (N-2 or N-X), and sequential outages.

Here, only single outages (one element at a time) are considered.

Data Collection

The input data are obtained from offline Newton-Raphson load flow studies performed with MATLAB. The data matrix has size [12X65]. During data collection, these input data are divided into three groups: training data, validation data, and test data. The training data matrix has size [12X32], while the test data matrix has size [12X23].
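The split can be sketched as a random assignment of sample columns. The approach is an assumption: the slides give only the total size ([12X65]) and the training and test sizes, so this sketch gives the remaining columns to the validation set and shuffles with a fixed seed. `split_columns` and `take_columns` are hypothetical helpers.

```python
import random


def split_columns(n_samples, n_train, n_test, seed=0):
    """Randomly assign column indices to training, validation and test sets.

    Columns not used for training or testing go to validation (assumption).
    """
    if n_train + n_test > n_samples:
        raise ValueError("training and test sets need more columns than exist")
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)       # fixed seed: reproducible split
    train = sorted(idx[:n_train])
    test = sorted(idx[n_train:n_train + n_test])
    val = sorted(idx[n_train + n_test:])
    return train, val, test


def take_columns(matrix, cols):
    """Select the given columns from a row-major list-of-lists matrix."""
    return [[row[c] for c in cols] for row in matrix]


# Sizes from the slide: 65 samples, 32 for training, 23 for testing.
train_idx, val_idx, test_idx = split_columns(65, 32, 23)
print(len(train_idx), len(val_idx), len(test_idx))
```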

The bus voltages V1, V2, and V3 are not included in the training and test data because these are generator buses, whose voltages are controlled by the automatic voltage regulator (AVR) system.

The training data contain 10 cases in the secure condition and 12 cases in the insecure condition. The test data contain 1 case in the secure condition and 10 cases in the insecure condition.

DATA COLLECTION

DATA PRE-PROCESSING

After data collection, three data preprocessing procedures are applied so that the ANNs train more efficiently:

solve the problem of missing data,

normalize the data, and

randomize the data.

Missing data are replaced by the average of neighboring values.
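Here is a sketch of the missing-data step. It assumes each variable is stored as a sequence in sample order, with missing entries marked as None. "Neighboring values" is interpreted as the nearest available value on each side; at either end of the sequence, the single available neighbor is used. The function name is hypothetical.

```python
def fill_missing(values):
    """Replace None entries with the mean of the nearest valid neighbours.

    Neighbours are looked up in the original sequence, so consecutive gaps
    all receive the mean of the valid values on either side of the gap.
    """
    valid = [i for i, v in enumerate(values) if v is not None]
    if not valid:
        raise ValueError("no valid values to fill from")
    filled = list(values)
    for i, v in enumerate(values):
        if v is not None:
            continue
        left = [j for j in valid if j < i]
        right = [j for j in valid if j > i]
        neighbours = []
        if left:
            neighbours.append(values[left[-1]])   # nearest valid value before i
        if right:
            neighbours.append(values[right[0]])   # nearest valid value after i
        filled[i] = sum(neighbours) / len(neighbours)
    return filled


# Hypothetical row of one input variable with two gaps.
row = [1.02, None, 0.98, 0.97, None]
print(fill_missing(row))
```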

Normalization

Normalizing the input data before presenting them to the network is required, since mixing variables with large and small magnitudes confuses the learning algorithm about the importance of each variable and may eventually force it to reject the variable with the smaller magnitude.
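Min-max scaling is one common way to normalize. The sketch below maps each variable (row) onto [-1, 1] with y = (y_max - y_min)(x - x_min)/(x_max - x_min) + y_min. As far as we know, this is also the formula behind MATLAB's mapminmax with its default range. The helper names and the example rows (bus voltages in per unit next to power flows in MW) are hypothetical. The stored (x_min, x_max) settings let test data and network outputs be mapped with the same scaling later.

```python
def minmax_normalize(row, y_min=-1.0, y_max=1.0):
    """Scale one variable linearly onto [y_min, y_max]; return values and settings."""
    x_min, x_max = min(row), max(row)
    if x_max == x_min:                    # constant variable: put it mid-range
        return [(y_min + y_max) / 2.0] * len(row), (x_min, x_max)
    scale = (y_max - y_min) / (x_max - x_min)
    return [scale * (x - x_min) + y_min for x in row], (x_min, x_max)


def minmax_reverse(scaled, settings, y_min=-1.0, y_max=1.0):
    """Undo minmax_normalize using the stored (x_min, x_max) settings."""
    x_min, x_max = settings
    return [(y - y_min) * (x_max - x_min) / (y_max - y_min) + x_min for y in scaled]


# Hypothetical rows with very different magnitudes.
voltages = [0.95, 1.00, 1.05]     # per unit
flows = [50.0, 100.0, 150.0]      # MW
v_scaled, v_settings = minmax_normalize(voltages)
f_scaled, f_settings = minmax_normalize(flows)
print(v_scaled, f_scaled)          # both rows now span [-1, 1]
```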

Building the Network

At this stage, the designer specifies the number of hidden layers, the number of neurons in each layer, the transfer function of each layer, the training function, the weight/bias learning function, and the performance function.

TRAINING THE NETWORK

During the training process, the weights are adjusted so that the actual (predicted) outputs of the network come close to the target (measured) outputs.

Fourteen types of training algorithms are available for developing the MLP network.

MATLAB provides the built-in transfer functions linear (purelin), hyperbolic tangent sigmoid (tansig), and logistic sigmoid (logsig). Their graphical illustrations and mathematical forms are shown in Table 1.

Table 1. MATLAB built-in transfer functions

Parameter setting

Number of layers

Number of neurons

too many neurons require more training time

Learning rate

from experience, the value should be small (~0.1)

Momentum term

...
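Here is a one-parameter sketch of how the learning rate and the momentum term interact. It uses the gradient-descent-with-momentum form dW = mc·dW_prev + (1 - mc)·lr·g, which, to our understanding, is the form documented for the LEARNGDM learning function selected earlier. The sign convention (the weight moves against the gradient) and the quadratic error surface E(w) = (w - 3)² are assumptions made for illustration.

```python
def gd_momentum(grad, w0, lr=0.1, mc=0.9, iterations=500):
    """Gradient descent with momentum on one scalar weight.

    Each step: dW = mc * dW_prev + (1 - mc) * lr * grad(w); then w = w - dW.
    """
    w, dw_prev = w0, 0.0
    for _ in range(iterations):
        dw = mc * dw_prev + (1.0 - mc) * lr * grad(w)
        w -= dw
        dw_prev = dw
    return w


def error_grad(w):
    """Gradient of the hypothetical error surface E(w) = (w - 3)**2."""
    return 2.0 * (w - 3.0)


w_final = gd_momentum(error_grad, w0=0.0)          # lr = 0.1, mc = 0.9
print(w_final)
```

With mc = 0 and lr = 1.5, the same quadratic diverges, because each step multiplies the error w - 3 by -2. This illustrates why the learning rate should be kept small.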

TESTING THE NETWORK

The next step is to test the performance of the developed model. At this stage, unseen data are presented to the model.

To evaluate the performance of the developed ANN models quantitatively and to check whether there is any underlying trend in their performance, a statistical analysis is conducted using the correlation coefficient (R, whose square is the coefficient of determination), the root mean square error (RMSE), and the mean bias error (MBE).

RMSE

RMSE provides information on short-term performance; it is a measure of the variation of the predicted values around the measured data. The lower the RMSE, the more accurate the estimation.

MBE

MBE indicates the average deviation of the predicted values from the corresponding measured data and can provide information on the long-term performance of the models. The closer the MBE is to zero, the better the long-term prediction of the model.
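A sketch of the three statistics, using their usual definitions for n cases with predicted values p_i and measured values m_i: RMSE = sqrt(Σ(p_i - m_i)²/n), MBE = Σ(p_i - m_i)/n (under this sign convention, positive means over-prediction), and R as the Pearson correlation coefficient. The data are hypothetical.

```python
import math


def rmse(predicted, measured):
    n = len(measured)
    return math.sqrt(sum((p - m) ** 2 for p, m in zip(predicted, measured)) / n)


def mbe(predicted, measured):
    # Positive: the model over-predicts on average; negative: it under-predicts.
    n = len(measured)
    return sum(p - m for p, m in zip(predicted, measured)) / n


def corr_r(predicted, measured):
    # Pearson correlation coefficient R; R**2 equals the coefficient of
    # determination of a least-squares straight-line fit between the two series.
    n = len(measured)
    mp, mm = sum(predicted) / n, sum(measured) / n
    cov = sum((p - mp) * (m - mm) for p, m in zip(predicted, measured))
    sp = math.sqrt(sum((p - mp) ** 2 for p in predicted))
    sm = math.sqrt(sum((m - mm) ** 2 for m in measured))
    return cov / (sp * sm)


measured = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]          # errors cancel on average
biased = [m + 0.5 for m in measured]      # constant over-prediction
print(rmse(predicted, measured), mbe(predicted, measured))
print(rmse(biased, measured), mbe(biased, measured), corr_r(biased, measured))
```

The first pair has an MBE of zero but a non-zero RMSE, because positive and negative errors cancel in the mean. The second pair is offset by a constant 0.5, so R is 1 even though the model is biased. For this reason, the statistics are reported together.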

PROGRAMMING THE NEURAL NETWORK MODEL

ANN implementation is a process that results in the design of the best ANN configuration. The percentage classification accuracy and the mean square error are used to represent how accurately the ANN predicts the security level of the IEEE 9-bus system. The steps of ANN implementation are shown in the following flow chart.

FLOW CHART
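Here is a sketch of the two performance measures used for the secure/insecure classification. It assumes the network output is coded 1 for secure and 0 for insecure and is thresholded at 0.5. Both the coding and the example outputs are hypothetical.

```python
def classification_accuracy(outputs, targets, threshold=0.5):
    """Percentage of cases whose thresholded output equals the 0/1 target."""
    predicted = [1 if y >= threshold else 0 for y in outputs]
    correct = sum(1 for p, t in zip(predicted, targets) if p == t)
    return 100.0 * correct / len(targets)


def mse(outputs, targets):
    """Mean square error between raw network outputs and targets."""
    return sum((y - t) ** 2 for y, t in zip(outputs, targets)) / len(targets)


# Hypothetical outputs for four test cases (1 = secure, 0 = insecure).
outputs = [0.9, 0.2, 0.6, 0.4]
targets = [1, 0, 0, 0]
print(classification_accuracy(outputs, targets), mse(outputs, targets))
```

Accuracy counts only the thresholded decisions, whereas MSE also penalizes outputs that are on the correct side of the threshold but far from the target. This is why the two measures are reported together.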

USING NN TOOLBOX

First, run the MATLAB file testandtrain.m. This file contains the test (input) data and the target data:

The input data are named train

The target data are named target

The network can be initialized from the command prompt with

>>nftool

or by using the following steps.

OPENING nftool

NETWORK FITTING TOOL

The Network Fitting Tool appears as shown below.

PROVIDING INPUT AND TARGET DATA

Clicking the Next button provides the option to select the input and target data.

VALIDATION AND TEST DATA

Here we define the training, validation, and test data.

DEFINING NETWORK SIZE

Here we set the number of neurons in the fitting network's hidden layer.

TRAIN NETWORK

TRAINING PROCESS

Clicking the Train button starts the training process.

PERFORMANCE PLOT

TRAINING STATE PLOT

REGRESSION PLOT

EVALUATE NETWORK

SAVE RESULTS

SIMULINK DIAGRAM

The following is the Simulink diagram of the network.

Query

?

Epoch: during iterative training of a neural network, an epoch is a single pass through the entire training set, followed by testing on the validation set.

Generalization: how well the network makes predictions for cases that are not in the training set.

Backpropagation: the method for computing the gradient of the case-wise error function with respect to the weights of a feedforward network.

Backprop: a training method that uses backpropagation to compute the gradient.

Backprop network: a feedforward network trained by backpropagation.
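The distinction between backpropagation (computing the gradient) and backprop training (using it) can be made concrete. The sketch computes the gradient of the case-wise error E = 0.5·(y - t)² for a small 2-2-1 tansig network by the chain rule and checks it against central finite differences. It then takes one plain gradient-descent step. The parameter layout, values, and learning rate are hypothetical.

```python
import math


def forward(params, x):
    """2-2-1 network, tansig (tanh) in both layers.

    Hypothetical parameter layout: [w11, w12, w21, w22, b1, b2, v1, v2, c]
    (w11..w22, b1, b2: hidden layer; v1, v2, c: output neuron).
    """
    w11, w12, w21, w22, b1, b2, v1, v2, c = params
    h1 = math.tanh(w11 * x[0] + w12 * x[1] + b1)
    h2 = math.tanh(w21 * x[0] + w22 * x[1] + b2)
    y = math.tanh(v1 * h1 + v2 * h2 + c)
    return h1, h2, y


def case_error(params, x, t):
    """Case-wise error E = 0.5 * (y - t)**2 for one training case."""
    return 0.5 * (forward(params, x)[2] - t) ** 2


def backprop_gradient(params, x, t):
    """dE/d(parameter) for every parameter, by the chain rule (backpropagation)."""
    v1, v2 = params[6], params[7]
    h1, h2, y = forward(params, x)
    d_out = (y - t) * (1.0 - y * y)        # tanh'(net) = 1 - tanh(net)**2
    d_h1 = d_out * v1 * (1.0 - h1 * h1)    # error signal sent back to hidden 1
    d_h2 = d_out * v2 * (1.0 - h2 * h2)    # error signal sent back to hidden 2
    return [d_h1 * x[0], d_h1 * x[1],      # w11, w12
            d_h2 * x[0], d_h2 * x[1],      # w21, w22
            d_h1, d_h2,                    # b1, b2
            d_out * h1, d_out * h2,        # v1, v2
            d_out]                         # c


def numerical_gradient(params, x, t, eps=1e-6):
    """Central finite differences, used only to check backprop_gradient."""
    grad = []
    for k in range(len(params)):
        up, down = list(params), list(params)
        up[k] += eps
        down[k] -= eps
        grad.append((case_error(up, x, t) - case_error(down, x, t)) / (2 * eps))
    return grad


params = [0.5, -0.3, 0.8, 0.2, 0.1, -0.4, 0.7, -0.6, 0.05]  # arbitrary start
x, t = [1.0, 0.5], 1.0

g_bp = backprop_gradient(params, x, t)
g_fd = numerical_gradient(params, x, t)
max_diff = max(abs(a - b) for a, b in zip(g_bp, g_fd))

# "Backprop" training: use the gradient for one gradient-descent step.
lr = 0.1
new_params = [p - lr * g for p, g in zip(params, g_bp)]
print(max_diff, case_error(params, x, t), case_error(new_params, x, t))
```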