Handling Constraints in Particle Swarm Optimization using a Small Population Size

Juan C. Fuentes Cabrera and Carlos A. Coello Coello

CINVESTAV-IPN (Evolutionary Computation Group)
Departamento de Computación
Av. IPN No. 2508, San Pedro Zacatenco
Mexico D.F. 07360, MEXICO
jfuentes@computacion.cs.cinvestav.mx
ccoello@cs.cinvestav.mx

Abstract. This paper presents a particle swarm optimizer for solving constrained optimization problems which adopts a very small population size (five particles). The proposed approach uses a reinitialization process for preserving diversity, and it neither uses a penalty function nor requires feasible solutions in the initial population. The leader selection scheme adopted is based on the distance of a solution to the feasible region. In addition, a mutation operator is incorporated to improve the exploratory capabilities of the algorithm. The approach is tested with a well-known benchmark commonly adopted to validate constraint-handling approaches for evolutionary algorithms. The results show that the proposed algorithm is competitive with respect to state-of-the-art constraint-handling techniques. The number of fitness function evaluations that the proposed approach requires is almost the same as (or lower than) the number required by state-of-the-art techniques in the area.

1 Introduction

A wide variety of Evolutionary Algorithms (EAs) have been used to solve different types of optimization problems. However, EAs are unconstrained search techniques and thus require an additional mechanism to incorporate the constraints of a problem into their fitness function [3]. Penalty functions are the most commonly adopted approach to incorporate constraints into an EA. However, penalty functions have several drawbacks, the most important of which is that they require fine-tuning of the penalty factors (which are problem-dependent), since both under- and over-penalization may result in an unsuccessful optimization [3].

Particle swarm optimization (PSO) is a population-based optimization technique inspired by the movements of a flock of birds or a school of fish. PSO has been successfully applied to a wide variety of optimization tasks in which it has shown a high convergence rate [10].

This paper is organized as follows. In Section 2, we define the problem of interest and introduce the Particle Swarm Optimization algorithm. Previous related work is reviewed in Section 3. Section 4 describes our approach, including the reinitialization process, the constraint-handling mechanism and the mutation operator adopted. In Section 5, we present our experimental setup and the results obtained. Finally, Section 6 presents our conclusions and some possible paths for future research.

2 Basic Concepts

We are interested in the general nonlinear programming problem in which we want to:

$$\text{Find } \vec{x} \text{ which optimizes } f(\vec{x}) \qquad (1)$$

subject to:

$$g_i(\vec{x}) \le 0, \quad i = 1, \ldots, n \qquad (2)$$

$$h_j(\vec{x}) = 0, \quad j = 1, \ldots, p \qquad (3)$$

where $\vec{x}$ is the vector of solutions $\vec{x} = [x_1, x_2, \ldots, x_r]^T$, $n$ is the number of inequality constraints and $p$ is the number of equality constraints (in both cases, constraints could be linear or nonlinear). If we denote the feasible region (the set of points which satisfy the inequality and equality constraints) by $F$ and the search space by $S$, then $F \subseteq S$. An inequality constraint that satisfies $g_i(\vec{x}) = 0$ is said to be active at $\vec{x}$. All equality constraints $h_j$ (regardless of the value of $\vec{x}$ used) are considered active at all points of $F$.
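The definitions above can be illustrated with a minimal Python sketch that tests whether a point belongs to the feasible region and lists the active inequality constraints. The tolerance `eps` applied to equality constraints, the function names, and the toy constraints are assumptions made for illustration only; they are not part of the problem statement.

```python
def is_feasible(x, ineqs, eqs, eps=1e-4):
    """True when every g_i(x) <= 0 and every |h_j(x)| <= eps.

    eps is an assumed numerical tolerance for equality constraints.
    """
    return all(g(x) <= 0.0 for g in ineqs) and all(abs(h(x)) <= eps for h in eqs)


def active_inequalities(x, ineqs, eps=1e-4):
    """0-based indices of inequality constraints with g_i(x) approximately zero."""
    return [i for i, g in enumerate(ineqs) if abs(g(x)) <= eps]


# Hypothetical toy problem in two variables.
ineqs = [
    lambda x: x[0] + x[1] - 2.0,  # g_1(x) = x_1 + x_2 - 2 <= 0
    lambda x: -x[0],              # g_2(x) = -x_1 <= 0
]
eqs = [lambda x: x[0] - x[1]]     # h_1(x) = x_1 - x_2 = 0

point = [1.0, 1.0]
feasible = is_feasible(point, ineqs, eqs)
active = active_inequalities(point, ineqs)
```

In this toy case the point lies on the boundary of the first inequality constraint, so that constraint is reported as active while the second one is not.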

2.1 Particle Swarm Optimization

Our approach is based on the Particle Swarm Optimization (PSO) algorithm, which was introduced by Eberhart and Kennedy in 1995 [4]. PSO is a population-based search algorithm based on the simulation of the social behavior of birds within a flock [10]. In PSO, each individual (particle) of the population (swarm) adjusts its trajectory according to its own flying experience and the flying experience of the other particles within its topological neighborhood in the search space. In the PSO algorithm, the positions and velocities of the particles are randomly initialized at the beginning of the search, and then they are iteratively updated, based on their previous positions and those of each particle's neighbors. Our proposed approach implements equations (4) and (5), proposed in [19], for computing the velocity and the position of a particle.

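$$v_{id} = w \, v_{id} + c_1 r_1 (pb_{id} - x_{id}) + c_2 r_2 (lb_{id} - x_{id}) \qquad (4)$$

$$x_{id} = x_{id} + v_{id} \qquad (5)$$

where $pb_{id}$ and $lb_{id}$ are the $d$-th components of the best position found so far by particle $i$ and of the best position in its neighborhood, respectively, $c_1$ and $c_2$ are both positive constants, $r_1$ and $r_2$ are random numbers generated from a uniform distribution in the range [0,1], and $w$ is the inertia weight, which is generated in the range (0,1].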
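As a minimal sketch of equations (4) and (5), the following Python function updates one particle. The function name and the list-based layout are hypothetical. Drawing fresh values of `r1` and `r2` for every dimension is an assumption, since the text does not say how often they are sampled; the default `c1 = c2 = 1.8` is taken from the experimental setup reported later in the paper. Note that `random.random()` samples from [0, 1), so the upper end of the range is only approximated.

```python
import random


def update_particle(x, v, pbest, lbest, c1=1.8, c2=1.8, rng=random):
    """Apply equations (4) and (5) to one particle, dimension by dimension."""
    new_x, new_v = [], []
    for d in range(len(x)):
        w = 1.0 - rng.random()  # inertia weight in (0, 1]
        r1 = rng.random()       # assumption: resampled per dimension
        r2 = rng.random()
        vd = w * v[d] + c1 * r1 * (pbest[d] - x[d]) + c2 * r2 * (lbest[d] - x[d])
        new_v.append(vd)        # equation (4)
        new_x.append(x[d] + vd) # equation (5)
    return new_x, new_v
```

A particle that already sits on both its personal best and its local best, with zero velocity, does not move under this update, which is a quick sanity check on the signs of the two attraction terms.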

There are two versions of the PSO algorithm: the global version and the local version. In the global version, the neighborhood consists of all the particles of the swarm and the best particle of the population is called the global best (gbest). In contrast, in the local version, the neighborhood is a subset of the population and the best particle of the neighborhood is called local best (lbest).
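The difference between the two versions comes down to the set of particles over which the best one is sought. The short Python sketch below illustrates this with made-up fitness values and a made-up neighborhood; it assumes that higher fitness is better.

```python
def best_index(fitness, candidates):
    """Index of the highest-fitness particle among `candidates` (maximization assumed)."""
    return max(candidates, key=lambda i: fitness[i])


fitness = [0.2, 0.9, 0.5, 0.7, 0.1]   # hypothetical fitness values of 5 particles

# Global version: the neighborhood is the whole swarm.
gbest = best_index(fitness, range(len(fitness)))

# Local version: the neighborhood is a subset (hypothetical neighborhood of particle 4).
neighborhood_of_4 = [2, 3, 4]
lbest = best_index(fitness, neighborhood_of_4)
```

Here particle 1 is the global best, while particle 4 is guided by particle 3, the best member of its own neighborhood.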

3 Related Work

When incorporating constraints into the fitness function of an evolutionary algorithm, it is particularly important to maintain diversity in the population and to be able to keep solutions both inside and outside the feasible region [3, 14]. Several studies have shown that, despite their popularity, traditional (external) penalty functions, even when used with dynamic penalty factors, tend to have difficulties dealing with highly constrained search spaces and with problems in which the constraints are active at the optimum [3, 11, 18]. Motivated by this fact, a number of constraint-handling techniques have been proposed for evolutionary algorithms [16, 3].

Remarkably, there has been relatively little work related to the incorporation of constraints into the PSO algorithm, despite the fact that most real-world applications have constraints. In previous work, some researchers have assumed that feasible solutions can be generated at random to feed the population of a PSO algorithm [7, 8]. The problem with this approach is that it may have a very high computational cost in some cases. For example, in some of the test functions used in this paper, even the generation of one million random points was insufficient to produce a single feasible solution. Evidently, such a high computational cost turns out to be prohibitive in real-world applications.

Other approaches are applicable only to certain types of constraints (see for example [17]). Some of them rely on relatively simple mechanisms to select a leader, based on the closeness of a particle to the feasible region, and adopt a mutation operator in order to maintain diversity during the search (see for example [20]). Other approaches rely on carefully designed topologies and diversity mechanisms (see for example [6]). However, a lot of work remains to be done regarding the use of PSO for solving constrained optimization problems. For example, the use of very small population sizes has not been addressed so far in PSO, to the authors' best knowledge, and this paper aims precisely to explore this avenue in the context of constrained optimization.

4 Our Proposed Approach

As indicated before, our proposed approach is based on the local version of PSO, since there is evidence that such a model is less prone to getting stuck in local minima [10]. Despite the existence of a variety of population topologies [9], we adopt a randomly generated neighborhood topology for our approach. It also uses a reinitialization process in order to maintain diversity in the population, and it adopts a mutation operator that aims to improve the exploratory capabilities of PSO (see Algorithm 1).
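One possible way to generate a random neighborhood topology is sketched below in Python. The text does not specify the neighborhood size or how neighbors are drawn, so the parameter `k`, the choice to sample without replacement, and the inclusion of each particle in its own neighborhood are all assumptions made for illustration.

```python
import random


def random_neighborhoods(n_particles, k, rng=random):
    """For each particle i, pick k other particles at random and add i itself."""
    neighborhoods = []
    for i in range(n_particles):
        others = [j for j in range(n_particles) if j != i]
        neighborhoods.append(sorted(rng.sample(others, k) + [i]))
    return neighborhoods


# Five particles, as in Micro-PSO; k = 2 is a hypothetical choice.
hoods = random_neighborhoods(5, 2, rng=random.Random(42))
```

Each entry of `hoods` is the list of particle indices among which the local best of the corresponding particle would be selected.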

Since our proposed approach uses a very small population size, we called it Micro-PSO, by analogy with the micro genetic algorithms that have been in use for several years (see for example [12, 2]). Its constraint-handling mechanism, together with its reinitialization process and its mutation operator, are all described next in more detail.

Algorithm 1: Pseudocode of our proposed Micro-PSO

begin
    for i = 1 to Number of particles do
        Initialize position and velocity randomly;
        Initialize the neighborhood (randomly);
    end
    cont = 1;
    repeat
        if cont == reinitialization generations number then
            Reinitialization process;
            cont = 1;
        end
        Compute the fitness value G(x_i);
        for i = 1 to Number of particles do
            if G(x_i) > G(xpb_i) then
                for d = 1 to number of dimensions do
                    xpb_id = x_id;  // xpb_i is the best position so far
                end
            end
            Select the local best position in the neighborhood lb_i;
            for d = 1 to number of dimensions do
                w = rand();  // random (0,1]
                v_id = w * v_id + c1 * r1 * (xpb_id - x_id) + c2 * r2 * (lb_id - x_id);
                x_id = x_id + v_id;
            end
        end
        cont = cont + 1;
        Perform mutation;
    until Maximum number of generations;
    Report the best solution found.
end

4.1 Constraint-Handling Mechanism

We adopted the mechanism proposed in [20] for selecting leaders. This mechanism is based on both the feasibility and the fitness value of a particle. When two feasible particles are compared, the particle that has the highest fitness value wins. If one of the particles is infeasible and the other one is feasible, then the feasible particle wins. When two infeasible particles are compared, the particle that has the lowest fitness value wins. The idea is to select leaders that, even when they are infeasible, lie close to the feasible region.

We used equation (6) for assigning fitness to a solution:

$$fit(\vec{x}) = \begin{cases} f_i(\vec{x}) & \text{if feasible} \\ \sum_{j=1}^{n} g_j(\vec{x}) + \sum_{k=1}^{p} |h_k(\vec{x})| & \text{otherwise} \end{cases} \qquad (6)$$
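The fitness assignment of equation (6) and the three comparison rules can be sketched in Python as follows. This is an illustration under stated assumptions rather than the authors' implementation: the function names are hypothetical, the tolerance `eps` on equality constraints is assumed, and satisfied inequality constraints are clamped to zero (`max(0, g_j)`) so that they cannot offset violated ones, which is a common reading of the violation sum but is not stated in the equation itself.

```python
def violation(x, ineqs, eqs):
    """Total constraint violation (the 'otherwise' branch of equation (6)).

    Assumption: a satisfied inequality contributes 0 instead of a negative value.
    """
    return sum(max(0.0, g(x)) for g in ineqs) + sum(abs(h(x)) for h in eqs)


def evaluate(x, f, ineqs, eqs, eps=1e-4):
    """Return (feasible, fit): the objective if feasible, else the violation."""
    feasible = all(g(x) <= 0.0 for g in ineqs) and all(abs(h(x)) <= eps for h in eqs)
    return (True, f(x)) if feasible else (False, violation(x, ineqs, eqs))


def wins(a, b):
    """True if evaluated particle a beats evaluated particle b."""
    (feas_a, fit_a), (feas_b, fit_b) = a, b
    if feas_a and feas_b:
        return fit_a > fit_b   # both feasible: highest fitness wins
    if feas_a != feas_b:
        return feas_a          # feasible beats infeasible
    return fit_a < fit_b       # both infeasible: lowest violation wins


# Hypothetical one-variable problem: maximize x subject to x - 2 <= 0.
f = lambda x: x[0]
ineqs = [lambda x: x[0] - 2.0]
a = evaluate([1.5], f, ineqs, [])   # feasible
b = evaluate([0.5], f, ineqs, [])   # feasible, lower fitness
c = evaluate([2.5], f, ineqs, [])   # infeasible, violation 0.5
d = evaluate([4.0], f, ineqs, [])   # infeasible, violation 2.0
```

With these four points, `a` beats `b`, any feasible point beats `c` and `d`, and `c` beats `d` because it lies closer to the feasible region.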

4.2 Reinitialization Process

The use of a small population size accelerates the loss of diversity at each iteration, and therefore it is uncommon practice to use population sizes that are too small. However, in the genetic algorithms literature, it is known that it is possible, from a theoretical point of view, to use very small population sizes (no more than 5 individuals) if appropriate reinitialization processes are implemented [12, 2]. In this paper, we incorporate one of these reinitialization processes taken from the literature on micro genetic algorithms. Our mechanism is the following: after a certain number of iterations (replacement generations), the swarm is sorted based on fitness value, but placing the feasible solutions on top. Then, we replace rp particles (the replacement particles) with randomly generated particles (position and velocity), but allow these rp particles to keep their best position (pbest). The idea of mixing evolved and randomly generated particles is to avoid premature convergence.
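A minimal Python sketch of this reinitialization step is given below. The dictionary layout of a particle is hypothetical. Two points are assumptions, since the text does not spell them out: the rp particles replaced are the worst-ranked ones after sorting, and new velocities are drawn within the width of each variable's range.

```python
import random


def reinitialize(swarm, lower, upper, rp=2, rng=random):
    """Sort the swarm in place and replace rp particles with random ones.

    Each particle is a dict with keys 'x', 'v', 'pbest', 'feasible', 'fit'
    (a hypothetical layout). Assumption: the rp worst-ranked particles are replaced.
    """
    # Feasible particles first (higher fit is better), then infeasible ones
    # (lower total violation is better).
    swarm.sort(key=lambda p: (0, -p['fit']) if p['feasible'] else (1, p['fit']))
    for p in swarm[len(swarm) - rp:]:
        p['x'] = [rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
        p['v'] = [rng.uniform(-(hi - lo), hi - lo) for lo, hi in zip(lower, upper)]
        # p['pbest'] is deliberately left untouched; 'feasible' and 'fit' are
        # stale until the next fitness evaluation.
    return swarm
```

Keeping `pbest` means that a replaced particle restarts from a random position but is still attracted toward the best region it had found before the reset.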

4.3 Mutation Operator

Although the original PSO algorithm had no mutation operator, the addition of such an operator is a relatively common practice nowadays. The main motivation for adding this operator is to improve the performance of PSO as an optimizer, and to improve the overall exploratory capabilities of this heuristic [1]. In our proposed approach, we implemented the mutation operator developed by Michalewicz for Genetic Algorithms [15]. It is worth noting that this mutation operator has been used before in PSO, but in the context of unconstrained multimodal optimization [5]. This operator varies the magnitude added to or subtracted from a solution during the actual mutation, depending on the current iteration number (at the beginning of the search, large changes are allowed, and they become very small towards the end of the search). We apply the mutation operator to the particle's position, for all of its dimensions:

$$x_{id} = \begin{cases} x_{id} + \Delta(t, UB - x_{id}) & \text{if } R = 0 \\ x_{id} - \Delta(t, x_{id} - LB) & \text{if } R = 1 \end{cases} \qquad (7)$$

where $t$ is the current iteration number, $UB$ is the upper bound on the value of the particle dimension, $LB$ is the lower bound on the particle dimension value, $R$ is a randomly generated bit (zero and one both have a 50% probability of being generated) and $\Delta(t, y)$ returns a value in the range $[0, y]$. $\Delta(t, y)$ is defined by:

$$\Delta(t, y) = y \left(1 - r^{\left(1 - \frac{t}{T}\right)^{b}}\right) \qquad (8)$$

where $r$ is a random number generated from a uniform distribution in the range [0,1], $T$ is the maximum number of iterations and $b$ is a tunable parameter that defines the non-uniformity level of the operator. In this approach, the $b$ parameter is set to 5 as suggested in [15].
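Equations (7) and (8) can be sketched in Python as shown below. The function names are hypothetical, and the sketch mutates every dimension unconditionally; how the mutation percent listed in the experimental setup gates this operator is not modeled here.

```python
import random


def delta(t, y, T, b=5.0, rng=random):
    """Equation (8): a value in [0, y] that shrinks toward 0 as t approaches T."""
    r = rng.random()
    return y * (1.0 - r ** ((1.0 - t / T) ** b))


def mutate(x, t, T, lower, upper, b=5.0, rng=random):
    """Equation (7), applied to every dimension of a position x."""
    out = []
    for xd, lo, hi in zip(x, lower, upper):
        if rng.random() < 0.5:   # R = 0: move toward the upper bound
            out.append(xd + delta(t, hi - xd, T, b, rng))
        else:                    # R = 1: move toward the lower bound
            out.append(xd - delta(t, xd - lo, T, b, rng))
    return out
```

Because the step is bounded by the distance to the corresponding bound, a mutated position stays inside [LB, UB], and at `t = T` the exponent becomes zero, so the position is left unchanged.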

5 Experiments and Results

For evaluating the performance of our proposed approach, we used the thirteen test functions described in [18]. These test functions contain characteristics that make them difficult to solve using evolutionary algorithms. We performed fifty independent runs for each test function and we compared our results with respect to three algorithms representative of the state-of-the-art in the area: Stochastic Ranking (SR) [18], the Simple Multimembered Evolution Strategy (SMES) [14], and the Constraint-Handling Mechanism for PSO (CHM-PSO) [20]. Stochastic Ranking uses a multimembered evolution strategy with a static penalty function and a selection based on a stochastic ranking process, in which the stochastic component allows infeasible solutions to be given priority a few times during the selection process. The idea is to balance the influence of the objective and penalty function. This approach requires a user-defined parameter called Pf, which determines this balance [18]. The Simple Multimembered Evolution Strategy (SMES) is based on a (μ + λ) evolution strategy, and it has three main mechanisms: a diversity mechanism, a combined recombination operator and a dynamic step size that defines the smoothness of the movements performed by the evolution strategy [14].

In all our experiments, we adopted the following parameters:

W = random number from a uniform distribution in the range [0,1];
C1 = C2 = 1.8;
population size = 5 particles;
number of generations = 48,000;
number of replacement generations = 100;
number of replacement particles = 2;
mutation percent = 0.1;
tolerance value ε = 0.0001, except for g04, g05, g06, g07, in which we used ε = 0.00001.
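As a quick consistency check on these settings, and assuming that every particle is evaluated exactly once per generation (which the parameter list does not state explicitly), the population size and the number of generations give the evaluation budget reported for Micro-PSO:

```latex
\[
5 \ \text{particles} \times 48{,}000 \ \text{generations}
  = 240{,}000 \ \text{objective function evaluations}
\]
```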

The statistical results of our Micro-PSO are summarized in Table 1. Our approach was able to find the global optimum in six test functions (g02, g04, g06, g08, g09, g12) and it found solutions very close to the global optimum in the remaining test functions, with the exception of g10. We compare the population size, the tolerance value and the number of evaluations of the objective function of our approach with respect to SR, CHM-PSO, and SMES in Table 2.

Table 1. Results obtained by our Micro-PSO over 50 independent runs.

TF   Optimal      Best         Mean         Median       Worst        St.Dev.
g01  -15.000      -15.0001     -13.2734     -13.0001     -9.7012      1.41E+00
g02  0.803619     0.803620     0.777143     0.778481     0.711603     1.91E-02
g03  1.000        1.0004       0.9936       1.0004       0.6674       4.71E-02
g04  -30665.539   -30665.5398  -30665.5397  -30665.5398  -30665.5338  6.83E-04
g05  5126.4981    5126.6467    5495.2389    5261.7675    6272.7423    4.05E+02
g06  -6961.81388  -6961.8371   -6961.8370   -6961.8371   -6961.8355   2.61E-04
g07  24.3062      24.3278      24.6996      24.6455      25.2962      2.52E-01
g08  0.095825     0.095825     0.095825     0.095825     0.095825     0.00E+00
g09  680.630      680.6307     680.6391     680.6378     680.6671     6.68E-03
g10  7049.250     7090.4524    7747.6298    7557.4314    10533.6658   5.52E+02
g11  0.750        0.7499       0.7673       0.7499       0.9925       6.00E-02
g12  1.000        1.0000       1.0000       1.0000       1.0000       0.00E+00
g13  0.05395      0.05941      0.81335      0.90953      2.44415      3.81E-01

When comparing our approach with respect to SR, we can see that ours found a better solution for g02 and similar results in eleven other problems; the exception is g10, in which our approach does not perform well (see Table 3). Note, however, that SR performs 350,000 objective function evaluations and our approach only performs 240,000.

Table 2. Comparison of the population size, the tolerance value and the number of evaluations of the objective function of our approach with respect to SR, CHM-PSO and SMES.

Constraint-Handling Technique   Population Size   Tolerance Value (ε)   Number of Evaluations of the Objective Function
SR                              200               0.001                 350,000
CHM-PSO                         40                -                     340,000
SMES                            100               0.0004                240,000
Micro-PSO                       5                 0.0001                240,000

Compared with CHM-PSO, our approach found a better solution for g02, g03, g04, g06, g07, g09, and g13 and the same or similar results in five other problems (except for g10) (see Table 4). Note again that CHM-PSO performs 340,000 objective function evaluations, and our approach performs only 240,000. It is also worth indicating that CHM-PSO is one of the best PSO-based constraint-handling methods known to date.

When comparing our proposed approach against SMES, ours found better solutions for g02 and g09 and the same or similar results in ten other problems, except for g10 (see Table 5). Both approaches performed 240,000 objective function evaluations.

Table 3. Comparison of our approach with respect to Stochastic Ranking (SR).

                  Best Result               Mean Result               Worst Result
TF   Optimal      Micro-PSO    SR           Micro-PSO    SR           Micro-PSO    SR
g01  -15.0000     -15.0001     -15.000      -13.2734     -15.000      -9.7012      -15.000
g02  0.803619     0.803620     0.803515     0.777143     0.781975     0.711603     0.726288
g03  1.0000       1.0004       1.000        0.9936       1.000        0.6674       1.000
g04  -30665.539   -30665.539   -30665.539   -30665.539   -30665.539   -30665.534   -30665.539
g05  5126.4981    5126.6467    5126.497     5495.2389    5128.881     6272.7423    5142.472
g06  -6961.81388  -6961.8371   -6961.814    -6961.8370   -6875.940    -6961.8355   -6350.262
g07  24.3062      24.3278      24.307       24.6996      24.374       25.2962      24.642
g08  0.095825     0.095825     0.095825     0.095825     0.095825     0.095825     0.095825
g09  680.630      680.6307     680.630      680.6391     680.656      680.6671     680.763
g10  7049.250     7090.4524    7054.316     7747.6298    7559.192     10533.6658   8835.655
g11  0.750        0.7499       0.750        0.7673       0.750        0.9925       0.750
g12  1.000        1.0000       1.000        1.0000       1.000        1.0000       1.000
g13  0.05395      0.05941      0.053957     0.81335      0.067543     2.44415      0.216915

6 Conclusions and Future Work

We have proposed the use of a PSO algorithm with a very small population size (only five particles) for solving constrained optimization problems. The proposed technique adopts a constraint-handling mechanism during the selection of leaders, it performs a reinitialization process and it implements a mutation operator. The proposed approach is easy to implement, since its main additions are a sorting and a reinitialization process. The computational cost (measured in terms of the number of evaluations of the objective function) that our Micro-PSO requires is almost the same as (or lower than) that required by the techniques with respect to which it was compared, which are representative of the state-of-the-art in the area. The results obtained show that our approach is competitive and could therefore be a viable alternative for using PSO to solve constrained optimization problems.

As part of our future work, we are interested in evaluating our approach on the extended benchmark for constrained evolutionary optimization [13]. We are also interested in comparing our results with respect to more recent constraint-handling methods that use PSO as their search engine (see for example [6]). We will also study the sensitivity of our Micro-PSO to its parameters, aiming to find a set of parameters (or a self-adaptation mechanism) that improves its robustness (i.e., that reduces the standard deviations obtained). Finally, we are also interested in experimenting with other neighborhood topologies and other types of reinitialization processes, since they could improve our algorithm's convergence capabilities (i.e., we could reduce the number of objective function evaluations performed) as well as the quality of the results achieved.

Table 4. Comparison of our approach with respect to the Constraint-Handling Mechanism for PSO (CHM-PSO).

                  Best Result               Mean Result               Worst Result
TF   Optimal      Micro-PSO    CHM-PSO      Micro-PSO    CHM-PSO      Micro-PSO    CHM-PSO
g01  -15.0000     -15.0001     -15.000      -13.2734     -15.000      -9.7012      -15.000
g02  0.803619     0.803620     0.803432     0.777143     0.790406     0.711603     0.755039
g03  1.000        1.0004       1.004720     0.9936       1.003814     0.6674       1.000249
g04  -30665.539   -30665.539   -30665.500   -30665.539   -30665.500   -30665.534   -30665.500
g05  5126.4981    5126.6467    5126.6400    5495.2389    5461.08133   6272.7423    6104.7500
g06  -6961.8138   -6961.837    -6961.810    -6961.837    -6961.810    -6961.835    -6961.810
g07  24.3062      24.3278      24.3511      24.6996      24.35577     25.2962      27.3168
g08  0.095825     0.095825     0.095825     0.095825     0.095825     0.095825     0.095825
g09  680.6300     680.6307     680.638      680.6391     680.85239    680.6671     681.553
g10  7049.2500    7090.4524    7057.5900    7747.6298    7560.04785   10533.6658   8104.3100
g11  0.7500       0.7499       0.7499       0.7673       0.7501       0.9925       0.75288
g12  1.0000       1.0000       1.000        1.0000       1.000        1.0000       1.000
g13  0.05395      0.05941      0.068665     0.81335      1.71642      2.44415      13.6695

Table 5. Comparison of our approach with respect to the Simple Multimembered Evolution Strategy (SMES).

                  Best Result               Mean Result               Worst Result
TF   Optimal      Micro-PSO    SMES         Micro-PSO    SMES         Micro-PSO    SMES
g01  -15.0000     -15.0001     -15.000      -13.2734     -15.000      -9.7012      -15.000
g02  0.803619     0.803620     0.803601     0.777143     0.785238     0.711603     0.751322
g03  1.0000       1.0004       1.000        0.9936       1.000        0.6674       1.000
g04  -30665.5390  -30665.5398  -30665.539   -30665.5397  -30665.539   -30665.5338  -30665.539
g05  5126.4980    5126.6467    5126.599     5495.2389    5174.492     6272.7423    5304.167
g06  -6961.8140   -6961.8371   -6961.814    -6961.8370   -6961.284    -6961.8355   -6952.482
g07  24.3062      24.3278      24.327       24.6996      24.475       25.2962      24.843
g08  0.095825     0.095825     0.095825     0.095825     0.095825     0.095825     0.095825
g09  680.6300     680.6307     680.632      680.6391     680.643      680.6671     680.719
g10  7049.2500    7090.4524    7051.903     7747.6298    7253.047     10533.6658   7638.366
g11  0.7500       0.7499       0.75         0.7673       0.75         0.9925       0.75
g12  1.0000       1.0000       1.000        1.0000       1.000        1.0000       1.000
g13  0.05395      0.05941      0.053986     0.81335      0.166385     2.44415      0.468294

Acknowledgements

The first author gratefully acknowledges support from CONACyT to pursue graduate studies at CINVESTAV-IPN's Computer Science Department. The second author acknowledges support from CONACyT project No. 45683-Y.

References

1. Paul S. Andrews. An investigation into mutation operators for particle swarm optimization. In Proceedings of the 2006 IEEE Congress on Evolutionary Computation (CEC2006), pages 3789-3796, Vancouver, Canada, July 2006.

2. Carlos A. Coello Coello and Gregorio Toscano Pulido. Multiobjective optimization using a micro-genetic algorithm. In L. Spector, E.D. Goodman, A. Wu, W. Langdon, H.M. Voigt, M. Gen, S. Sen, M. Dorigo, S. Pezeshk, M. Garzon, and E. Burke, editors, Genetic and Evolutionary Computation Conference, GECCO 2001, pages 274-282, San Francisco, California, 2001. Morgan Kaufmann Publishers.

3. Carlos A. Coello Coello. Theoretical and numerical constraint handling techniques used with evolutionary algorithms: A survey of the state of the art. Computer Methods in Applied Mechanics and Engineering, 191(11-12):1245-1287, January 2002.

4. R. Eberhart and J. Kennedy. Particle swarm optimization. In Proceedings of the IEEE International Conference on Neural Networks, pages 1942-1948. IEEE Press, 1995.

5. S.C. Esquivel and C.A. Coello Coello. On the use of particle swarm optimization with multimodal functions. In Proceedings of the 2003 IEEE Congress on Evolutionary Computation (CEC2003), pages 1130-1136, Canberra, Australia, 2003. IEEE Press.

6. Arturo Hernandez Aguirre, Angel E. Muñoz Zavala, E. Villa Diharce, and S. Botello Rionda. COPSO: Constrained Optimization via PSO algorithm. Technical Report I-07-04, Center of Research in Mathematics (CIMAT), Guanajuato, Mexico, 2007.

7. Xiaohui Hu and Russell Eberhart. Solving Constrained Nonlinear Optimization Problems with Particle Swarm Optimization. In Proceedings of the 6th World Multiconference on Systemics, Cybernetics and Informatics (SCI 2002), volume 5. Orlando, USA, IIIS, July 2002.

8. Xiaohui Hu, Russell C. Eberhart, and Yuhui Shi. Engineering Optimization with Particle Swarm. In Proceedings of the 2003 IEEE Swarm Intelligence Symposium, pages 53-57. Indianapolis, Indiana, USA, IEEE Service Center, April 2003.

9. J. Kennedy and R. Mendes. Population structure and particle swarm performance. In Proceedings of the 2002 IEEE Congress on Evolutionary Computation, volume 2, pages 1671-1676, Honolulu, Hawaii, USA, May 2002. IEEE Press.

10. James Kennedy and Russell C. Eberhart. Swarm Intelligence. Morgan Kaufmann Publishers, San Francisco, California, 2001.

11. Slawomir Koziel and Zbigniew Michalewicz. Evolutionary Algorithms, Homomorphous Mappings, and Constrained Parameter Optimization. Evolutionary Computation, 7(1):19-44, 1999.

12. Kalmanje Krishnakumar. Micro-genetic algorithms for stationary and non-stationary function optimization. SPIE Proceedings: Intelligent Control and Adaptive Systems, 1196:289-296, 1989.

13. J.J. Liang, T.P. Runarsson, E. Mezura-Montes, M. Clerc, P.N. Suganthan, C.A. Coello Coello, and K. Deb. Problem definitions and evaluation criteria for the CEC 2006 special session on constrained real-parameter optimization. Technical report, Nanyang Technological University, Singapore, 2006.

14. Efren Mezura-Montes and Carlos A. Coello Coello. A simple multimembered evolution strategy to solve constrained optimization problems. IEEE Transactions on Evolutionary Computation, 9(1):1-17, February 2005.

15. Z. Michalewicz. Genetic Algorithms + Data Structures = Evolution Programs. Springer-Verlag, 1996.

16. Zbigniew Michalewicz and Marc Schoenauer. Evolutionary Algorithms for Constrained Parameter Optimization Problems. Evolutionary Computation, 4(1):1-32, 1996.

17. Ulrich Paquet and Andries P. Engelbrecht. A New Particle Swarm Optimiser for Linearly Constrained Optimization. In Proceedings of the Congress on Evolutionary Computation 2003 (CEC2003), volume 1, pages 227-233, Piscataway, New Jersey, December 2003. Canberra, Australia, IEEE Service Center.

18. T.P. Runarsson and X. Yao. Stochastic ranking for constrained evolutionary optimization. IEEE Transactions on Evolutionary Computation, 4(3):284-294, September 2000.

19. Y. Shi and R.C. Eberhart. A modified particle swarm optimizer. In Proceedings of the 1998 IEEE Congress on Evolutionary Computation, pages 69-73. IEEE Press, 1998.

20. Gregorio Toscano Pulido and Carlos A. Coello Coello. A constraint-handling mechanism for particle swarm optimization. In Proceedings of the 2004 IEEE Congress on Evolutionary Computation (CEC2004), volume 2, pages 1396-1403, Portland, Oregon, USA, June 2004. IEEE Press.


- 10 1016@j Engappai 2020 103771Document19 pages10 1016@j Engappai 2020 103771Chakib BenmhamedNo ratings yet

- PSO Literature ReviewDocument10 pagesPSO Literature Reviewadil khanNo ratings yet

- An Adaptive Particle Swarm Optimization Algorithm Based On Cat MapDocument8 pagesAn Adaptive Particle Swarm Optimization Algorithm Based On Cat MapmenguemengueNo ratings yet

- SBRN2002Document7 pagesSBRN2002Carlos RibeiroNo ratings yet

- 00r020bk1121qr PDFDocument11 pages00r020bk1121qr PDFIsaias BonillaNo ratings yet

- A Modified Genetic Algorithm For Resource ConstraiDocument5 pagesA Modified Genetic Algorithm For Resource ConstraiObemfalNo ratings yet

- Optimal Scheduling of Generation Using ANFIS: T Sobhanbabu, DR T.Gowri Manohar, P.Dinakar Prasad ReddyDocument5 pagesOptimal Scheduling of Generation Using ANFIS: T Sobhanbabu, DR T.Gowri Manohar, P.Dinakar Prasad ReddyMir JamalNo ratings yet

- My PSODocument14 pagesMy PSOjensi_ramakrishnanNo ratings yet

- Ant Colony Optimization For Continuous Domains: Krzysztof Socha, Marco DorigoDocument19 pagesAnt Colony Optimization For Continuous Domains: Krzysztof Socha, Marco DorigoJessica JaraNo ratings yet

- Genetic Algorithms: 4.1 Quick OverviewDocument12 pagesGenetic Algorithms: 4.1 Quick Overviewseunnuga93No ratings yet

- Improved Particle Swarm Optimization Combined With Chaos: Bo Liu, Ling Wang, Yi-Hui Jin, Fang Tang, De-Xian HuangDocument11 pagesImproved Particle Swarm Optimization Combined With Chaos: Bo Liu, Ling Wang, Yi-Hui Jin, Fang Tang, De-Xian HuangmenguemengueNo ratings yet

- Flexible Job-Shop Scheduling With TRIBES-PSO ApproachDocument9 pagesFlexible Job-Shop Scheduling With TRIBES-PSO ApproachJournal of ComputingNo ratings yet

- Multi-Directional Bat Algorithm For Solving Unconstrained Optimization ProblemsDocument22 pagesMulti-Directional Bat Algorithm For Solving Unconstrained Optimization ProblemsDebapriya MitraNo ratings yet

- Toc28341362083400 PDFDocument63 pagesToc28341362083400 PDFGica SelyNo ratings yet

- ShimuraDocument18 pagesShimuraDamodharan ChandranNo ratings yet

- Genetic Algorithms and Their Applications-AsifDocument11 pagesGenetic Algorithms and Their Applications-Asifarshiya65No ratings yet

- 01 Design of A Fuzzy Controller in Mobile Robotics Using Genetic AlgorithmsDocument7 pages01 Design of A Fuzzy Controller in Mobile Robotics Using Genetic Algorithmscacr_72No ratings yet

- RID 1 ModrobDocument4 pagesRID 1 ModrobbalakaleesNo ratings yet

- nRF24L01P Product Specification 1 0Document78 pagesnRF24L01P Product Specification 1 0CaelumBlimpNo ratings yet

- Bhimbra-Generalized Theory Electrical MachinesDocument10 pagesBhimbra-Generalized Theory Electrical Machinesdebasishmee5808No ratings yet

- RID 1 ModrobDocument6 pagesRID 1 Modrobdebasishmee5808No ratings yet

- TrademarksDocument1 pageTrademarksDhyan SwaroopNo ratings yet

- Adjunct 2Document5 pagesAdjunct 2debasishmee5808No ratings yet

- Extramural Funding2012-13Document2 pagesExtramural Funding2012-13debasishmee5808No ratings yet

- AssignmentsDocument11 pagesAssignmentsdebasishmee5808No ratings yet

- Lec 1Document18 pagesLec 1cavanzasNo ratings yet

- Adaptive Control of Linearizable Systems: Fully-StateDocument9 pagesAdaptive Control of Linearizable Systems: Fully-Statedebasishmee5808No ratings yet

- Control SystemDocument22 pagesControl SystemKamran RazaNo ratings yet

- Electromagnetic Field TheoryDocument3 pagesElectromagnetic Field Theorydebasishmee5808No ratings yet

- 10 1109@91 481843Document12 pages10 1109@91 481843debasishmee5808No ratings yet

- AssignmentsDocument11 pagesAssignmentsdebasishmee5808No ratings yet

- Sophus 3Document1 pageSophus 3debasishmee5808No ratings yet

- Book ListDocument6 pagesBook Listdebasishmee58080% (1)

- T Year B.tech Sylla1sbus Revised 18.08.10Document33 pagesT Year B.tech Sylla1sbus Revised 18.08.10Manoj KarmakarNo ratings yet

- Duplicate Doc Diploma CertiDocument1 pageDuplicate Doc Diploma Certidebasishmee5808No ratings yet

- Best Practices of Report Writing Based On Study of Reports: Venue: India Habitat Centre, New DelhiDocument24 pagesBest Practices of Report Writing Based On Study of Reports: Venue: India Habitat Centre, New Delhidebasishmee5808No ratings yet

- Lec 2Document25 pagesLec 2debasishmee5808No ratings yet

- Control System - I: Weekly Lesson PlanDocument5 pagesControl System - I: Weekly Lesson Plandebasishmee5808No ratings yet

- Introduction To Nba 16 NovDocument32 pagesIntroduction To Nba 16 Novdebasishmee5808No ratings yet

- Time ManagementDocument19 pagesTime ManagementShyam_Nair_9667No ratings yet

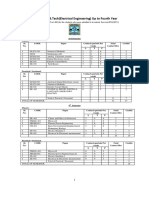

- Syllabus For B.Tech (Electrical Engineering) Up To Fourth YearDocument67 pagesSyllabus For B.Tech (Electrical Engineering) Up To Fourth YearDr. Kaushik MandalNo ratings yet

- Lec 2Document25 pagesLec 2debasishmee5808No ratings yet

- 2 14 24Document11 pages2 14 24debasishmee5808No ratings yet

- Lec 1Document18 pagesLec 1cavanzasNo ratings yet

- Graphical AbstractDocument2 pagesGraphical Abstractdebasishmee5808No ratings yet

- Comparison Between Integer Order and Fractional Order ControllersDocument6 pagesComparison Between Integer Order and Fractional Order Controllersdebasishmee5808No ratings yet
