Professional Documents

Culture Documents

CS109A Notes For Lecture 1/26/96 Running Time

Uploaded by

savio770 ratings0% found this document useful (0 votes)

33 views4 pagesn6

Original Title

notes6

Copyright

© © All Rights Reserved

Available Formats

PDF, TXT or read online from Scribd

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this Documentn6

Copyright:

© All Rights Reserved

Available Formats

Download as PDF, TXT or read online from Scribd

0 ratings0% found this document useful (0 votes)

33 views4 pagesCS109A Notes For Lecture 1/26/96 Running Time

Uploaded by

savio77n6

Copyright:

© All Rights Reserved

Available Formats

Download as PDF, TXT or read online from Scribd

You are on page 1of 4

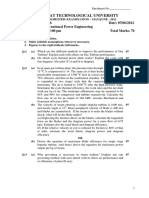

CS109A Notes for Lecture 1/26/96

Running Time

A program or algorithm has a running time $T(n)$, where $n$ is the measure of the size of the input.

$T(n)$ is the largest amount of time the program takes on any input of size $n$.

Example: For a sorting algorithm, we normally choose $n$ to be the number of elements to be sorted. For Mergesort, $T(n) = n \log n$; for Selection-sort or Quicksort, $T(n) = n^2$.

But there is an unknowable constant factor that depends on various factors, such as machine speed, quality of the compiler, load on the machine.
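
The definition of $T(n)$ as a worst case over all inputs of size $n$ can be made concrete for very small $n$. The following is only a sketch, not part of the original notes: it counts element comparisons as a stand-in for "time", and the function names (`selection_sort_comparisons`, `merge_sort_comparisons`, `worst_case`) are invented for this illustration.

```python
from itertools import permutations


def selection_sort_comparisons(items):
    """Selection-sort a copy of items; return the number of comparisons made."""
    a = list(items)
    comparisons = 0
    for i in range(len(a) - 1):
        smallest = i
        for j in range(i + 1, len(a)):
            comparisons += 1
            if a[j] < a[smallest]:
                smallest = j
        a[i], a[smallest] = a[smallest], a[i]
    return comparisons


def merge_sort_comparisons(items):
    """Mergesort a copy of items; return (sorted_list, number of comparisons)."""
    a = list(items)
    if len(a) <= 1:
        return a, 0
    mid = len(a) // 2
    left, left_count = merge_sort_comparisons(a[:mid])
    right, right_count = merge_sort_comparisons(a[mid:])
    merged = []
    comparisons = left_count + right_count
    i = j = 0
    while i < len(left) and j < len(right):
        comparisons += 1
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged, comparisons


def worst_case(count, n):
    """Brute-force T(n): the largest count over every ordering of n distinct keys."""
    return max(count(p) for p in permutations(range(n)))
```

For example, `worst_case(selection_sort_comparisons, 6)` examines all 720 orderings of six keys. Brute force like this is only feasible for tiny $n$, which is one reason the analysis is normally done on paper.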

Why Measure Running Time?

Guides our selection of an algorithm to implement.

Helps us explore for better solutions without expensive implementation, test, and measurement.

Arguments Against Running-Time Measurement

Algorithms often perform much better on average than the running time implies (e.g., quicksort is $n \log n$ on a "random" list but $n^2$ in the worst case, where each division separates out only 1 element).

But for most algorithms, the worst case is a good predictor of typical behavior.

When average and worst cases are radically different, we can do an average-case analysis.

Who cares? In a few years machines will be so fast that even bad algorithms will be fast.

The faster computers get, the more we find to do with them and the larger the size of the problems we try to solve.

Asymptotic behavior (growth rate) of the running time becomes more important, not less, because we are getting closer to the asymptote.

Constant factors hidden by "big-oh" are more important than the growth rate of running time.

Only for small instances, and anything is OK when your input is small.

Benchmarking (running program on a popular set of test cases) is easier.

Sometimes true, but you've committed yourself to an implementation already.
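
The gap between average and worst case mentioned in the first argument above can be observed directly. This is a minimal sketch, assuming a textbook quicksort that always picks the first element as pivot (the notes do not say which variant they have in mind); `quicksort_comparisons` is a name invented here, and it counts one comparison per non-pivot element in each partition step.

```python
import random


def quicksort_comparisons(items):
    """Quicksort with the first element as pivot; return the comparison count."""
    a = list(items)
    if len(a) <= 1:
        return 0
    pivot, rest = a[0], a[1:]
    smaller = [x for x in rest if x < pivot]
    larger = [x for x in rest if x >= pivot]
    # Each remaining element is compared against the pivot once.
    return len(rest) + quicksort_comparisons(smaller) + quicksort_comparisons(larger)


n = 200
already_sorted = list(range(n))   # each division separates out only 1 element
shuffled = list(range(n))
random.Random(0).shuffle(shuffled)  # fixed seed so the run is repeatable

worst = quicksort_comparisons(already_sorted)
typical = quicksort_comparisons(shuffled)
```

On the sorted input the count is $n(n-1)/2$, while the shuffled input needs far fewer comparisons. The recursion depth on the sorted input equals $n$, so this sketch is only suitable for small $n$.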

Big-Oh

A notation to let us ignore the unknowable constant factors and focus on growth rate of the running time.

Say $T(n)$ is $O(f(n))$ if for "large" $n$, $T(n)$ is no more than proportional to $f(n)$.

More formally: there exist constants $n_0$ and $c > 0$ such that for all $n \ge n_0$ we have $T(n) \le c f(n)$.

$n_0$ and $c$ are called witnesses to the fact that $T(n)$ is $O(f(n))$.

Example: $10n^2 + 50n + 100$ is $O(n^2)$. Pick witnesses $n_0 = 1$ and $c = 160$. Then for any $n \ge 1$, $10n^2 + 50n + 100 \le 160n^2$.

Other choices of witness are possible, e.g., ($n_0 = 10$, $c = 16$).

General rule: any polynomial is big-oh of its leading term with coefficient of 1.
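
A pair of witnesses can be sanity-checked numerically before attempting a proof. The sketch below is an illustration only: `witnesses_hold` is a hypothetical helper, and a finite check can refute a bad pair of witnesses but never proves a good one, since the definition quantifies over all $n \ge n_0$.

```python
def witnesses_hold(T, f, n0, c, upto=10_000):
    """Check T(n) <= c * f(n) for every integer n with n0 <= n <= upto.

    Passing this check is evidence, not proof: the big-oh definition
    requires the inequality for all n >= n0, not just up to `upto`.
    """
    return all(T(n) <= c * f(n) for n in range(n0, upto + 1))


def T(n):
    """The running time from the example: 10n^2 + 50n + 100."""
    return 10 * n**2 + 50 * n + 100


def f(n):
    """The claimed growth rate: n^2."""
    return n**2
```

With these definitions, `witnesses_hold(T, f, 1, 160)` and `witnesses_hold(T, f, 10, 16)` both succeed, while `witnesses_hold(T, f, 1, 16)` fails already at $n = 1$, where $T(1) = 160 > 16$. A smaller $c$ is paid for with a larger $n_0$.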

Example: $n^{10}$ is $O(2^n)$.

Note that $n^{10}$ can be very large compared to $2^n$ for "small" $n$.

$n^{10} < 2^n$ is the same as saying $10 \log_2 n < n$. (False for $n = 32$; true for $n = 64$.)

Pick witnesses $n_0 = 64$ and $c = 1$. For $n \ge 64$ we have $n^{10} \le 1 \cdot 2^n$.
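
The crossover point in this example can be located with exact integer arithmetic. This is a small sketch with invented names (`power_fits`, `first_crossover`); the search limit of 1000 is an arbitrary assumption, and the search itself does not replace the argument that the inequality keeps holding for all larger $n$.

```python
def power_fits(n):
    """True when n**10 <= 2**n, computed exactly with Python integers."""
    return n**10 <= 2**n


def first_crossover(start=2, limit=1000):
    """Smallest n in [start, limit] from which power_fits holds through limit."""
    candidate = None
    for n in range(start, limit + 1):
        if power_fits(n):
            if candidate is None:
                candidate = n
        else:
            # The inequality failed again, so any earlier candidate is discarded.
            candidate = None
    return candidate
```

With the default arguments the search reports 59, so the witness $n_0 = 64$ used above is not the smallest possible one; it is simply a convenient power of 2 for which $10 \log_2 n$ is easy to evaluate by hand.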


Growth Rates of Common Functions

The base of a logarithm doesn't matter. $\log_a n$ is $O(\log_b n)$ for any bases $a$ and $b$ because $\log_a n = \log_a b \cdot \log_b n$ (i.e., witnesses are $n_0 = 1$, $c = \log_a b$).

Thus, we omit the base when talking about big-oh.

Logarithms grow slower than any power of $n$, e.g. $\log n$ is $O(n^{1/10})$.

An exponential is $c^n$ for some constant $c > 1$.

Polynomials grow slower than any exponential, e.g. $n^{10}$ is $O(1.001^n)$.

Generally, exponential running times are impossibly slow; polynomial running times are tolerable.
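
The claim that the base of a logarithm only contributes a constant factor can be checked numerically. The sketch below uses the standard `math.log(x, base)` call; `log_ratio` is a name made up for this illustration, and the comparison is approximate because it uses floating point.

```python
import math


def log_ratio(n, a, b):
    """Return log_a(n) / log_b(n); by the identity above this is log_a(b) for n > 1."""
    return math.log(n, a) / math.log(n, b)


# The ratio between base-2 and base-10 logarithms should not depend on n.
expected = math.log(10, 2)
ratios = [log_ratio(n, 2, 10) for n in (2, 10, 1000, 10**6)]
```

Every entry of `ratios` agrees with `expected` up to rounding error, which is the witness $c = \log_a b$ for $a = 2$, $b = 10$.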

Proofs That a Big-oh Relationship is False

Example: $n^3$ is not $O(n^2)$. In proof: suppose it were. Then there would be witnesses $n_0$ and $c$ such that for all $n \ge n_0$ we have $n^3 \le cn^2$.

Choose $n_1$ to be

1. At least as large as $n_0$.

2. At least as large as $2c$.

$n^3 \le cn^2$ holds for $n = n_1$, because $n_1 \ge n_0$ by (1).

If $n_1^3 \le cn_1^2$, then $n_1 \le c$.

But by (2), $n_1 \ge 2c$.

Since $c > 0$ (holds for any witness $c$), it is not possible that $2c \le n_1 \le c$.

Thus, our assumption that we could find witnesses $n_0$ and $c$ was wrong, and we conclude $n^3$ is not $O(n^2)$.
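
The choice of $n_1$ in this proof is constructive: whatever witnesses someone proposes, a specific counterexample can be computed from them. The following sketch mirrors that choice; `refute` is a hypothetical helper name, and rounding $2c$ up to an integer is an assumption added here so that $n_1$ is a valid input size.

```python
import math


def refute(n0, c):
    """Given claimed witnesses (n0, c) for 'n^3 is O(n^2)', return an n1 that breaks them."""
    if c <= 0:
        raise ValueError("a witness c must be positive")
    # Condition (1): n1 >= n0.  Condition (2): n1 >= 2c.
    n1 = max(n0, math.ceil(2 * c))
    # n1 is in the range the witnesses claim to cover, yet the inequality fails there.
    assert n1 >= n0 and n1**3 > c * n1**2
    return n1
```

For instance, `refute(10, 16)` returns 32, and $32^3 = 32768$ exceeds $16 \cdot 32^2 = 16384$. No pair of witnesses survives this function, which is the content of the proof.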

General Idea of Non-Big-Oh Proofs

Template p. 101 of FCS.

1. Assume witnesses $n_0$ and $c$ exist.

2. Select $n_1$ in terms of $n_0$ and $c$.

3. Show that $n_1 \ge n_0$, so the inequality $T(n) \le cf(n)$ must hold for $n = n_1$.

4. Show that for the particular $n_1$ chosen, $T(n_1) > cf(n_1)$.

5. Conclude from (3) and (4) that $n_0$ and $c$ are not really witnesses. Since we assumed nothing special about witnesses $n_0$ and $c$, we conclude that no witnesses exist, and therefore the big-oh relationship does not hold.
