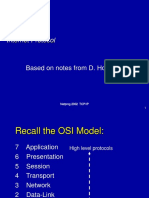

i-Core: A run-time adaptive processor for embedded multi-core systems

Jörg Henkel, Lars Bauer, Michael Hübner, and Artjom Grudnitsky

Karlsruhe Institute of Technology (KIT), Karlsruhe, Germany

{henkel, lars.bauer, michael.huebner, artjom.grudnitsky}@kit.edu

Submitted as Invited Paper to ERSA 2011

Abstract

We present the i-Core (Invasive Core), an Application Specific Instruction Set Processor (ASIP) with a run-time adaptive instruction set. Its adaptivity is controlled by the run-time system with respect to application properties that may vary during run-time. A reconfigurable fabric hosts the adaptive part of the instruction set whereas the rest of the instruction set is fixed. We show how the i-Core is integrated into an embedded multi-core system and that it is particularly advantageous in multi-tasking scenarios, where it performs application-specific as well as system-specific tasks.

1. Introduction and Motivation

Embedded processors are the key in rapidly growing application fields ranging from automotive to personal mobile communication, computation, and entertainment, to name just a few. In the early 1990s, the term ASIP (Application Specific Instruction-set Processor) emerged, denoting processors with an application-specific instruction set. ASIPs are more efficient than mainstream processors in one or more design criteria like performance per area and performance per power [1], and they ultimately make today's embedded devices (which are often mobile) possible. Nowadays, the term ASIP comprises a far larger variety of embedded processors that allow for customization in various ways, including a) instruction set extensions, b) parameterization, and c) inclusion/exclusion of predefined blocks tailored to specific applications (for example, an MPEG-4 decoder) [1]. An overview of the benefits and challenges of ASIPs is given in [1-3].

A generic design flow of an embedded processor can be described as follows:

i) an application is analyzed/profiled

ii) an instruction set extension (containing so-called Special Instructions, SIs) is defined

iii) the instruction set extension is synthesized together with the core instruction set

iv) retargetable tools for compilation, instruction set simulation, and so on, are (often automatically) created and application characteristics are analyzed

v) the process might be iterated several times until the design constraints are met

Automatically detecting and generating SIs from the application code (as in [4]) plays a major role in speeding up an application and/or improving its power efficiency. Profiling and pattern matching methods [5, 6] are typically used along with libraries of reusable functions [7] to generate SIs. However, fixing critical design decisions at design time may lead to embedded processors that can hardly react to the often unpredictable behavior of today's complex applications. This not only reduces efficiency but also leads to unsatisfactory behavior with respect to design criteria like performance and power consumption. One means to address this dilemma is reconfigurable computing [8-11], since its resources may be utilized in a time-multiplexed manner (i.e. reconfigured over time). A large body of research has been conducted on interfacing reconfigurable computing fabrics with standard processor cores (e.g. using an embedded FPGA [12-14]).

This paper presents the i-Core, a reconfigurable processor that provides a high degree of adaptivity at the level of the instruction set architecture (through reconfigurable SIs) and the microarchitecture (e.g. reconfigurable caches and branch prediction). The potential performance advantages at both levels are exploited and combined, which allows for a high degree of adaptivity that is especially beneficial in multi-tasking scenarios that vary at run-time.

Paper structure: Section 2 presents state-of-the-art related work on reconfigurable processors. An overview of our i-Core processor is given in Section 3, where we explain how it is integrated into a heterogeneous multi-core system and the kind of adaptivity it provides with respect to SIs and the microarchitecture. Section 4 explains how SIs are modeled and how the programmer can express which SIs are demanded by the application to trigger their reconfiguration. Performance results of applications executing on the i-Core are given in Section 5 and conclusions are drawn in Section 6.

2. Related Work

Diverse approaches for reconfigurable processors have been investigated, particularly within the last decade [8-11]. The Molen Processor couples a reconfigurable coprocessor to a core processor via a dual-port register file and an arbiter for shared memory [15]. The application binary is extended to include instructions that trigger the reconfigurations and control the usage of the reconfigurable coprocessor. The OneChip project [16, 17] uses tightly-coupled Reconfigurable Functional Units (RFUs) to utilize reconfigurable computing in a processor. As their speedup is mainly obtained from streaming applications, they allow their RFUs to access the main memory while the core processor (i.e. the non-reconfigurable part of the processor) continues executing [18]. Both approaches target a single-tasking environment and statically predetermine which SIs shall be reconfigured at which time and to which location on the reconfigurable fabric. This leads to conflicts if multiple tasks compete for the reconfigurable fabric (a situation not addressed by these approaches).

The Warp Processor [19] automatically detects computational kernels while the application executes. Then, custom logic for SIs is generated at run-time through on-chip micro-CAD tools and the binary of the executing program is patched to execute them. This potentially allows adapting to changing multi-tasking scenarios. However, the required online synthesis may incur a non-negligible overhead and therefore the authors concentrate on scenarios where one application executes for a rather long time without significant variation of the execution pattern. In these scenarios, only one online synthesis is required (i.e. when the application starts executing) and thus the initial performance degradation is amortized over time. Adaptation to frequently changing requirements, as typically demanded in a multi-tasking system, is not addressed by this approach.

The Proteus Reconfigurable Processor [20] extends a core processor with a tightly-coupled reconfigurable fabric. It concentrates on Operating System (OS) support with respect to SI opcode management to allow different tasks to share the same SI implementations. Proteus' reconfigurable fabric is divided into multiple Programmable Functional Units (PFUs), where each PFU may be reconfigured to contain one SI (unlike ReconOS [21], where the reconfigurable hardware is deployed to implement entire threads). However, when multiple tasks exhibit dissimilar processing characteristics, a task may not obtain a sufficient number of PFUs to execute all SIs in hardware. Therefore, some SIs will execute in software, resulting in steep performance degradation.

The RISPP processor [22, 23] uses the reconfigurable fabric in a more flexible way by introducing a new concept of SIs in conjunction with a run-time system to support them. Each SI exists in multiple implementation alternatives, ranging from a pure software implementation (i.e. without using the reconfigurable fabric) to various hardware implementations (providing different trade-offs between the amount of required hardware and the achieved performance). The main idea of this concept is to partition SIs into elementary reconfigurable data paths that are connected to implement an SI. A run-time system then dynamically chooses one alternative out of the provided options for SI implementations, depending on run-time application requirements. RISPP focuses on single-tasking scenarios and does not aim to share the reconfigurable fabric among multiple tasks or among user tasks and OS tasks.

KAHRISMA [24, 25] extends the concepts of RISPP by providing a fine-grained reconfigurable fabric along with a coarse-grained reconfigurable fabric that can then be used to implement SIs and to realize pipeline or VLIW processors. Therefore, KAHRISMA supports simultaneous multi-tasking (one task per core), but it does not consider executing multiple tasks per core or adapting the microarchitecture (e.g. cache or branch prediction) of a core.

Altogether, only Proteus explicitly targets multi-tasking systems in the scope of reconfigurable processors that use a fine-grained reconfigurable fabric. However, the concept of PFUs does not provide the flexibility demanded to support multiple tasks efficiently. RISPP and KAHRISMA provide a flexible SI concept, but the challenge of sharing the reconfigurable fabric and the configuration of the microarchitecture among competing tasks is not addressed. The Warp processor potentially provides the highest flexibility, but it comes at the cost of online synthesis, which limits the scenarios in which this flexibility can be used efficiently. Hence, when studying state-of-the-art approaches, the following challenge remains: providing an adaptive reconfigurable processor that can share the reconfigurable fabric efficiently among multiple user tasks while providing an adaptive microarchitecture that can adapt to varying task requirements.

3. i-Core Overview

The i-Core is a reconfigurable processor that provides a run-time reconfigurable instruction set architecture (ISA) along with a run-time reconfigurable microarchitecture. The ISA consists of two parts, the so-called core ISA (cISA) and the Instruction Set Extension (ISE). The cISA is statically available (i.e. implemented with non-reconfigurable hardware) and the ISE represents the task-specific components of the ISA that are realized as reconfigurable Special Instructions (SIs). The i-Core uses a fine-grained reconfigurable fabric (i.e. an embedded FPGA, e.g. [12-14]) to provide

i) task-specific ISEs,

ii) OS-specific ISEs, and

iii) an adaptive microarchitecture that, among other things, allows for supporting and executing both kinds of ISEs efficiently by performing run-time reconfigurations.

This approach exceeds the concept of state-of-the-art ASIPs, as it adds flexibility and additionally enables dynamic run-time adaptation towards the executing application to increase the performance.

The reconfigurable microarchitecture characterizes the concrete processor-internal realization of the i-Core for a given ISA. It refers to the internal process of instruction handling and it is developed with respect to a predefined ISA (SPARC-V8 [26] in our case). In summary, the ISA specifies the instruction set that can be used to program the processor (without specifying how the instructions are implemented) and the microarchitecture specifies its implementation and further ISA-independent components (e.g. caches and branch prediction).

Figure 1 shows how the i-Core is embedded into a heterogeneous loosely-coupled multi-core system, which is partitioned into tiles that consist of processor cores and local shared memory. Each tile is connected to one router that is part of an on-chip network, which connects the tiles to each other and to external memory. Within one tile, zero, one, or multiple i-Core instances are located and connected to the tile-internal communication structure (similar to the other CPUs of the tile). The CPUs within the tile access the shared memory (an application-managed scratchpad) via the local bus. The i-Core uses a dedicated connection to the local memory, using two 128-bit ports to provide a high memory bandwidth that expedites the execution of SIs. When multiple i-Cores are situated within one tile, the access to the local memory and the reconfigurable fabric is shared among them.

[Figure 1 (diagram not reproduced): typical instantiation of a heterogeneous loosely-coupled MPSoC, showing how the i-Cores are embedded into it. Each computation tile contains a mix of CPUs and i-Cores together with a local memory (Mem); a further tile holds a memory controller (Mem Ctl).]

Figure 1: Integration of the i-Core into a heterogeneous multi-core system with on-chip interconnect network

Figure 2 provides an overview of the i-Core-internal adaptation options of the ISE and the microarchitecture. The ISE provides task-specific and system-specific SIs. Whereas the task-specific SIs are targeted towards a certain application or application domain, the system-specific SIs support basic operating system (OS) functionalities that are required to execute tasks and to manage the multi-core system. The OS can (to some degree) be viewed as a set of tasks (e.g. task mapping, task scheduling, etc.) that can be accelerated by SIs. Typically, it is impossible to fulfill all ISE requests due to the limited size of the reconfigurable fabric. The SIs that are not implemented on the reconfigurable fabric at a certain point in time can be executed through an unimplemented instruction trap, as presented in more detail in [27].

SIs are prepared at compile time of the tasks, and the calculations that are performed by an SI are fixed at run time. In contrast, the implementation of a particular SI may change during run-time (details are explained in Section 4.1). These adaptations correspond to ISE-specific adaptations at the microarchitecture level. Figure 2 shows a reconfigurable fabric, in addition to the hardware of the processor pipeline, that is employed to realize an ISE. Depending on the executing tasks and their specific ISE requirements, the reconfigurable fabric is allocated (or, as we call it, invaded) to realize a certain subset of the requested ISEs (that is why we call it an invasive Core, i.e. i-Core). The concepts of invasive computing [28] are used to manage the competing requests of different tasks. In the scope of reconfigurable processors this means that each task specifies the SIs that it uses (i.e. its requests; details are presented in Section 4.2). Additionally, each task provides information about which performance improvement (speedup) can be expected, depending on the size of the reconfigurable fabric that is assigned to it. A run-time system (part of the OS) then decides, for the tasks that compete for the resources, which task obtains which share of the reconfigurable fabric (similar to the approach presented in [29], where the fabric of one task is partitioned among the SIs of that task).
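The decision taken by the run-time system can be illustrated with a small sketch. It assumes that the fabric is divided into equally sized containers and that each task supplies its expected speedup as a list indexed by the number of containers it owns. The greedy rule, the priority weights, and all names and numbers are illustrative assumptions, not the algorithm used by the i-Core run-time system.

```python
from typing import Dict, List


def partition_fabric(curves: Dict[str, List[float]],
                     priorities: Dict[str, float],
                     containers: int) -> Dict[str, int]:
    """Greedily hand out fabric containers one at a time.

    curves[t][k] is the expected speedup of task t when it owns k containers
    (curves[t][0] is the speedup without any fabric, typically 1.0).
    """
    share = {task: 0 for task in curves}
    for _ in range(containers):
        best_task, best_gain = None, 0.0
        for task, curve in curves.items():
            k = share[task]
            if k + 1 >= len(curve):
                continue  # the curve holds no data beyond this point
            # Priority-weighted speedup gained from one more container.
            gain = priorities[task] * (curve[k + 1] - curve[k])
            if gain > best_gain:
                best_task, best_gain = task, gain
        if best_task is None:
            break  # no task benefits from another container
        share[best_task] += 1
    return share


# Hypothetical trade-off curves for two competing tasks.
curves = {
    "encoder": [1.0, 2.0, 2.8, 3.2, 3.3],
    "crypto": [1.0, 1.5, 1.8, 1.9, 1.9],
}
equal = partition_fabric(curves, {"encoder": 1.0, "crypto": 1.0}, containers=4)
print(equal)
```

With equal priorities the encoder receives three of the four containers because its curve rises more steeply; raising the priority of the second task shifts the split towards it.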

[Figure 2 (diagram not reproduced): the Instruction-Set Architecture (ISA) of the i-Core comprises the Core Instruction-Set Architecture (cISA) and the application-/system-specific Instruction-Set Extension (ISE), realized as Special Instructions (SIs). At the microarchitecture level, the diagram shows the processor pipeline (adaptive number of pipeline stages etc.), the cache/scratchpad, adaptive branch prediction etc., and the reconfigurable fabric, connected by control-flow and data-transfer links; "is executed by" arrows lead from the ISA parts to the microarchitecture. The reconfigurable fabric may be used for SIs of user tasks (potentially multiple tasks compete for the reconfigurable fabric), SIs of Operating System tasks, and ISE-independent microarchitectural optimizations.]

Figure 2: The Instruction-Set Architecture and Microarchitecture Adaptations of our i-Core

The highly adaptive nature of modern multi-tasking embedded systems intensifies the potential advantages that come with an adaptive microarchitecture and instruction set architecture. The adaptations of the instruction set comprise flexible task-specific SIs that support run-time adaptations in terms of performance per area (details are given in Section 4.1), depending on the number of tasks that execute on an i-Core at a specific time (and thus the amount of reconfigurable fabric that is available per task). In addition to the ISE, the i-Core also supports adapting its microarchitecture. The microarchitecture adaptations are independent of the instruction set and they comprise:

- adaptive number of pipeline stages

- adaptive branch prediction

- adaptive cache/scratchpad configuration

Even though these optimizations are ISA-independent, they may significantly increase the performance of the executing task (or reduce the power consumption etc.). The number of pipeline stages is altered by combining neighboring stages and bypassing the pipeline registers between them. This reduces the maximal frequency of the processor but it may lead to power savings (reduced number of registers) and may be beneficial in terms of performance for applications where a complex control flow leads to many branch mispredictions. Additionally, techniques like pipeline balancing [30] or pipeline gating [31] can be applied. Depending on a task's requirements, the branch prediction scheme can also be changed dynamically, e.g. by providing different schemes and letting the task decide which one to use. Another example of performance-oriented adaptive design is branch history length adaptation: Juan et al. [32] explore dynamic history-length fitting and develop a method for dynamically selecting a history length that accommodates the current workload.
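The trade-off between pipeline depth and branch mispredictions can be made concrete with a first-order model. The sketch below assumes that every instruction takes one cycle plus a misprediction penalty that grows with pipeline depth; the clock frequencies, penalties, and workload parameters are invented for illustration and are not measurements of the i-Core.

```python
def time_per_instruction_ns(freq_mhz, penalty_cycles,
                            branch_fraction, mispredict_rate):
    """Average time per instruction in a simple in-order pipeline model."""
    cpi = 1.0 + branch_fraction * mispredict_rate * penalty_cycles
    return cpi * 1000.0 / freq_mhz


# Assumed configurations: the merged (shorter) pipeline clocks lower but
# loses fewer cycles on a wrong prediction.
DEEP = {"freq_mhz": 100.0, "penalty_cycles": 5}     # 7-stage
SHALLOW = {"freq_mhz": 90.0, "penalty_cycles": 3}   # 5-stage


def faster_pipeline(branch_fraction, mispredict_rate):
    """Return the configuration with the lower average time per instruction."""
    deep = time_per_instruction_ns(branch_fraction=branch_fraction,
                                   mispredict_rate=mispredict_rate, **DEEP)
    shallow = time_per_instruction_ns(branch_fraction=branch_fraction,
                                      mispredict_rate=mispredict_rate, **SHALLOW)
    return "7-stage" if deep <= shallow else "5-stage"


print(faster_pipeline(branch_fraction=0.25, mispredict_rate=0.05))
print(faster_pipeline(branch_fraction=0.25, mispredict_rate=0.50))
```

Under these assumed numbers the deeper pipeline wins for well-predicted code, while the shorter pipeline wins once half of the branches are mispredicted.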

In addition, the cache can be changed in various ways. For instance, Albonesi [33] proposes to disable cache ways dynamically to reduce dynamic energy dissipation. Kaxiras et al. [34] reduce leakage power by invalidating and turning off cache lines when they hold data that is not likely to be reused. The approaches of [35-37] use an adaptive strategy to adjust the cache line size dynamically to an application. In addition to these approaches, the microarchitecture of the i-Core exploits the availability of the fine-grained reconfigurable fabric to extend the size and associativity of the cache. For example, the size of the cache (e.g. number of cache lines) can be extended (using the logic cells of the reconfigurable fabric as fast memory), further parallel comparators can be realized to increase the associativity of the cache, or additional control bits can be assigned to each cache line for protocol purposes (e.g. error detection/correction schemes). Additionally, the memory of the cache can be reconfigured to be used as a task-managed scratchpad memory.

In summary, instruction set and microarchitecture adaptations target task-specific optimizations. For example, a particular task might benefit from a certain SI (part of the ISA) and a certain branch prediction (part of the microarchitecture). Additionally, a particular task might also benefit from different ISA/microarchitecture implementations at different phases of its execution (e.g. different computational kernels), i.e. the requirements of a single task may change over time. Depending on the tasks that execute at a certain time and their requirements, the adaptations focus on:

- some selected tasks (beneficial for those tasks at the cost of other tasks)

- operating system optimization (beneficial for all tasks)

- a trade-off between both

Determining this trade-off depends on the user priorities of the executing tasks. This large degree of flexibility is an advantage in comparison to state-of-the-art adaptive processors (e.g. RISPP [22, 23] or KAHRISMA [24, 25]), as they focus on accelerating either the tasks or the operating system (but not both) by improving either the instruction set or the microarchitecture (and again, not both).

3.1. Partitioning the Reconfigurable Fabric among Special Instructions and Microarchitecture

The core ISA (cISA) is executed by dedicated hardware at the microarchitecture level. Depending on the requirements of the executing task, the microarchitecture implementation of the cISA can be changed during run-time. For instance, a 5-stage pipeline implementation can be replaced by a 7-stage pipeline implementation that supports a higher clock frequency, as explained in Section 3.

Figure 3 illustrates an example for the different levels of adaptivity, using a task execution scenario in a sequence from a) to d). It shows how the execution pipeline, the cache, and the reconfigurable fabric may be invaded by different tasks (i.e. the resources are reconfigured towards the requirements of the task as explained in Section 3). Part a) of Figure 3 illustrates that the reconfigurable fabric can be used to accelerate OS functionality. This is especially beneficial, as the workload of the OS heavily depends on the behavior of the tasks, that is, on when and how many system calls etc. will be executed. Therefore, providing static accelerators for the OS is not necessarily beneficial. Instead, the hardware may be reconfigured to accelerate other tasks in case the OS does not benefit from it at a certain time, as shown in part b) of the figure.

[Figure 3 (diagram not reproduced): four panels a) to d), each showing the pipeline, the cache or scratchpad, and the contents of the reconfigurable fabric. The legend distinguishes ISA-independent microarchitecture adaptations, ISA-dependent OS-specific adaptations, and ISA-dependent application-specific adaptations. The panel contents and annotations are listed below.]

a) 7-stage pipeline, cache; fabric: OS accelerator. Before a task starts executing, the Operating System needs to decide on which core it shall execute (task mapping and scheduling problem). Dedicated accelerators are loaded to support the corresponding algorithms.

b) 5-stage pipeline, cache; fabric: guaranteed application-specific accelerator 1 and cache extension. The task starts execution and an application-specific accelerator is established. Additionally, parts of the reconfigurable fabric are invaded to extend the capacity/strategy of the cache. As the main computational load is now covered by the accelerator, the pipeline can be reconfigured to a 5-stage pipeline. This lowers its maximal frequency, but it reduces the penalty of wrong branch predictions.

c) 5-stage pipeline, scratchpad; fabric: guaranteed application-specific accelerator 1 and temporary accelerator 2. In addition to the guaranteed accelerator (reserved during invasion), a second accelerator is established temporarily and replaces the cache extension. Due to the reduced cache size, a user-managed scratchpad is more beneficial than a transparent cache, thus the cache logic is reconfigured correspondingly.

d) 5-stage pipeline, scratchpad; fabric: guaranteed application-specific accelerator 1 and guaranteed accelerator for the second i-let. A second task starts execution and demands different accelerators. Therefore, the temporarily established second accelerator for the first task is replaced to realize a new accelerator for the second task, i.e. it invades the available part of the reconfigurable fabric.

Figure 3: An example to demonstrate the adaptivity of the i-Cores, comprising ISA-independent adaptations as well as task-specific and OS-specific adaptations

Microarchitectural components like the cache and the processor pipeline can be adapted to different task requirements. Depending on the time required for performing these reconfigurations, the changes may be performed specifically for the currently executing task or they may be performed for the set of tasks that execute on the i-Core. For instance, reconfiguring a 7-stage pipeline into a 5-stage pipeline only demands a few cycles and thus it can be performed as part of the task switch (in a preemptive multi-tasking system) when changing from one task to another. In contrast, the reconfiguration time of the reconfigurable fabric is typically larger, and changing the configuration as part of the task switch would increase the task switching time significantly. Therefore, to share the reconfigurable fabric among multiple tasks, it needs to be partitioned dynamically, as indicated in parts c) and d) of Figure 3. Consequently, the assignment of which part of the reconfigurable fabric accelerates which task changes dynamically over time. Parts c) and d) show that a task can obtain a guaranteed share of the reconfigurable fabric, but it may use a larger share of it temporarily.

4. Modeling and Using Reconfigurable Special Instructions

4.1. Special Instruction Model

As motivated in Section 3, the reconfigurable fabric is shared among several tasks and thus the ISE of a particular task has to cope with a size of the reconfigurable fabric that is unknown at compile time. The approach of so-called modular SIs allows for providing different trade-offs between the amount of required hardware and the achieved performance by breaking SIs into elementary reconfigurable data paths (DPs) that are connected to implement an SI. It was originally developed in the scope of the RISPP project [22] and has meanwhile been integrated and extended in other projects as well (e.g. KAHRISMA [24]). The basic idea of modular SIs is illustrated in Figure 4 (a description and an example follow) and is based on a hierarchical approach that, among other things, allows representing that

a) an SI may be realized by multiple SI implementations, typically differing in their hardware requirements and their performance (and/or other metrics), and

b) a DP is not dedicated to a specific SI, but it can be used as a part of different SIs instead.

[Figure 4 (diagram not reproduced): a three-level hierarchy. Top level: Special Instructions (SIs) SI A, SI B, and SI C. Middle level: SI implementations A1, A2, A3, and A_cISA for SI A; B1, B2, and B_cISA for SI B; C1, C2, and C_cISA for SI C (an SI can be realized by any of its implementations; the cISA variant is an implementation without hardware acceleration). Bottom level: Data Paths (DPs) DP 1 to DP 6, which are demanded in different quantities (edge labels 1 or 2) by the SI implementations.]

Figure 4: Hierarchical composition of SIs: multiple implementation alternatives exist per SI and demand data paths for realization; Figure based on [22]

Modular SIs are composed of DPs, where typically multiple DPs are combined to implement an SI. Figure 5 shows the Transform DP and the SAV (Sum of Absolute Values) DP to indicate their typical complexity. DPs are created and synthesized at compile time and they are reconfigured at run time. Technically, DPs are the smallest components that are reconfigured in modular SIs. An SI is composed of multiple DPs, as indicated in Figure 4 and illustrated with an example in Figure 5, and an SI implementation incorporates them in different quantities.

Figure 4 shows the hierarchy of SIs, SI implementations, and DPs. At each point in time, a particular SI is implemented by one specific SI implementation. Note that DPs can be used by different implementations of the same SI and even by different SIs. This means that a DP can be shared among different SIs.

Each SI has one special implementation that does not demand any DPs. This means that this SI implementation is not accelerated by hardware. If the SI shall execute but the DPs that are available (i.e. reconfigured to the reconfigurable fabric) are insufficient to implement any of the other implementations, then the processor raises an unimplemented instruction trap and the corresponding trap handler is used to implement the SI's functionality [27]. The trap handler uses the core Instruction Set Architecture (cISA) of the processor. Therefore, this cISA implementation allows bridging the reconfiguration time (in the range of 1 to 10 ms), i.e. the time until the required DPs are reconfigured.
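The relation between SI implementations, loaded DPs, and the trap fallback can be sketched as follows. The sketch assumes that an implementation is described by the number of DP instances it needs per DP type and by a latency in cycles; the data layout, the function names, and all latencies are hypothetical and only serve to illustrate the dispatch rule described above.

```python
from typing import Dict, List, Optional, Tuple

# (DP instances required per DP type, latency in cycles); values are made up.
Implementation = Tuple[Dict[str, int], int]


def select_implementation(implementations: List[Implementation],
                          loaded_dps: Dict[str, int]) -> Optional[Implementation]:
    """Return the fastest hardware implementation whose DP demand is met."""
    feasible = [
        impl for impl in implementations
        if all(loaded_dps.get(dp, 0) >= count for dp, count in impl[0].items())
    ]
    return min(feasible, key=lambda impl: impl[1]) if feasible else None


def execute_si(implementations: List[Implementation],
               loaded_dps: Dict[str, int],
               cisa_latency: int) -> Tuple[str, int]:
    """Return how the SI executes and how many cycles it takes."""
    impl = select_implementation(implementations, loaded_dps)
    if impl is None:
        # No hardware implementation fits: the unimplemented instruction
        # trap runs the SI with core instructions only.
        return ("trap", cisa_latency)
    return ("hardware", impl[1])


# Hypothetical hardware implementations of one SI.
satd_impls = [
    ({"QSub": 1, "Repack": 1, "Transform": 1, "SAV": 1}, 24),
    ({"QSub": 1, "Repack": 1, "Transform": 2, "SAV": 1}, 18),
]
print(execute_si(satd_impls, {}, cisa_latency=300))
print(execute_si(satd_impls, {"QSub": 1, "Repack": 1, "Transform": 1, "SAV": 1}, 300))
```

As more DPs finish reconfiguring, the same call returns progressively faster implementations without any change to the calling code.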

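[Figure 5 (diagram not reproduced): data-flow graph of the SATD SI from INPUT to OUTPUT, built from the DP types QSub, Repack, Transform, and SAV, together with the internal structure of two DPs. The Transform DP maps the inputs X00, X10, X20, X30 to the outputs Y00, Y10, Y20, Y30 using adders and shifts (>> 1, << 1); the control signals DCT, IDCT, and HT select the transform mode. The SAV (Sum of Absolute Values) DP conditionally negates the inputs X1 to X4 and adds them up to the output Y.]

Figure 5: Example for the modular Special Instruction SATD (Sum of Absolute (Hadamard-) Transformed Differences); Figure based on [22, 27]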

Figure 5 shows an example for a modular SI that is composed of four different types of DPs and that implements the Sum of Absolute Hadamard-Transformed Differences (SATD) functionality, as it is used in the Motion Estimation process of an H.264 video encoder. The data-flow graph shown in Figure 5 describes the general SI structure, and each implementation of this SI has to specify how many DP instances of the four utilized DP types (i.e. QSub, Repack, Transform, and SAV) shall be used for implementation. Depending on the number of DPs provided, the SI can execute in a more or less parallel way. When more DPs are available, a faster execution is possible (which corresponds to a different implementation of the same SI). Dynamically changing between these different performance levels of an SI implementation allows reacting to changing application requirements or changing availability of the reconfigurable fabric.
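For readers unfamiliar with SATD, the following software sketch computes it for a 4x4 block, roughly as a core-ISA fallback would. The mapping of the four steps to the DP names (QSub, Repack, Transform, SAV) is an interpretation of those names, not taken from the paper, and any final scaling that a particular encoder applies is omitted.

```python
def hadamard4(v):
    """4-point Hadamard transform using only additions and subtractions."""
    a, b = v[0] + v[2], v[1] + v[3]
    c, d = v[0] - v[2], v[1] - v[3]
    return [a + b, c + d, a - b, c - d]


def satd_4x4(original, predicted):
    """Sum of absolute Hadamard-transformed differences of two 4x4 blocks."""
    # Pixel-wise subtraction (assumed role of QSub).
    diff = [[o - p for o, p in zip(row_o, row_p)]
            for row_o, row_p in zip(original, predicted)]
    # Horizontal transform of every row (assumed role of Transform).
    rows = [hadamard4(row) for row in diff]
    # Transpose so the same transform handles the columns (assumed role of Repack).
    cols = [hadamard4(list(col)) for col in zip(*rows)]
    # Accumulate the magnitudes (assumed role of SAV).
    return sum(abs(x) for col in cols for x in col)


block = [[10, 12, 14, 16]] * 4
shifted = [[value + 1 for value in row] for row in block]
print(satd_4x4(block, block), satd_4x4(shifted, block))
```

The four row transforms are independent of each other, as are the four column transforms, which is why additional Transform DP instances allow a more parallel and therefore faster SI implementation.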

4.2. Programming Interface for using the reconfigurable fabric

A task uses DPs on the reconfigurable fabric without knowledge of low-level details such as partitioning the fabric for different SIs, determining the sequence in which DPs are loaded and where on the fabric they are placed, etc. These steps are handled by the run-time system of the i-Core. Nevertheless, the programmer needs to trigger these steps by issuing system calls (presented in this section). The code fragment in Figure 6 (a high-level excerpt from the main loop of an H.264 video encoder) illustrates the programming model of the i-Core.

Microarchitectural features are adapted using the set_i_Core_parameter system call. It ensures that any preconditions for an i-Core adaptation are met (e.g. emptying the pipeline before changing the pipeline length, invalidating cache lines before modifying cache-management parameters, etc.) and it then performs the adaptations. For example, in Line 3 of Figure 6, the i-Core pipeline length is set to 5 stages and branch prediction is switched to a (2, 2) correlating branch predictor.
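As background, a (2, 2) correlating predictor uses the outcomes of the last two branches to select one of four 2-bit saturating counters per table entry. The sketch below is a textbook-style software model with an assumed table size and indexing scheme; it does not describe the i-Core hardware.

```python
class CorrelatingPredictor:
    """(m, n) predictor: m history bits select one of 2**m n-bit counters."""

    def __init__(self, m=2, n=2, entries=16):
        self.m = m
        self.entries = entries
        self.max_count = (1 << n) - 1
        self.threshold = 1 << (n - 1)   # counter >= threshold predicts "taken"
        self.history = 0                # last m outcomes, newest in bit 0
        # One row per table entry, one counter per history pattern,
        # all starting at "strongly not taken".
        self.table = [[0] * (1 << m) for _ in range(entries)]

    def predict(self, pc):
        return self.table[pc % self.entries][self.history] >= self.threshold

    def update(self, pc, taken):
        row = self.table[pc % self.entries]
        if taken:
            row[self.history] = min(row[self.history] + 1, self.max_count)
        else:
            row[self.history] = max(row[self.history] - 1, 0)
        mask = (1 << self.m) - 1
        self.history = ((self.history << 1) | int(taken)) & mask


def count_mispredictions(predictor, pc, outcomes):
    """Predict, compare, and train on each outcome; return the miss count."""
    misses = 0
    for taken in outcomes:
        if predictor.predict(pc) != taken:
            misses += 1
        predictor.update(pc, taken)
    return misses


predictor = CorrelatingPredictor(m=2, n=2)
pattern = [True, False] * 20            # branch that alternates every time
warmup = count_mispredictions(predictor, 0x40, pattern[:10])
steady = count_mispredictions(predictor, 0x40, pattern[10:])
print(warmup, steady)
```

After a short warm-up the alternating branch is predicted without misses, because each of the two recurring history patterns trains its own counter.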

To request a share of the reconfigurable fabric, the task issues the invade system call (Line 5). Invade selects a share of a resource and grants it to the task. Here, the resource is the entire reconfigurable fabric of the i-Core, a part of which is assigned to the task. The size of the assigned fabric depends on the speedup that the application is expected to exhibit. Generally, the more fabric is available to the task, the higher the speedup, but the expected speedup for a given amount of fabric is task-specific. This relationship between speedup and size of the assigned fabric is explored during offline profiling and passed as the trade_off_curve parameter to the invade system call. During execution of invade, the run-time system uses the trade-off curve to decide which share of the fabric will be granted to the task. In the worst case, the application receives no fabric at all (e.g. because all fabric is occupied by higher-priority tasks); then all SIs need their core ISA implementation (see Section 4.1) for execution. The share of the fabric assigned to an application corresponds to the my_fabric return value in Line 5. Requesting a share of the fabric is typically done before the actual computational kernels start.

Next, the implementations of the SIs that will be used during the next kernel execution (the while loop in the code example, lines 6-15) must be determined. The application programmer does not need to know which SI implementations are available at run time, as long as it is guaranteed that the SI functionality is performed (the SI implementation determines the performance of the SI, but not its functionality). The application informs the run-time system which SIs it will use during the next kernel execution, and the run-time system selects implementations for these SIs. This is accomplished by means of the invade system call again (Line 7). Here, the invaded resource is the application's own share of the reconfigurable fabric acquired earlier, which needs to be partitioned such that SI implementations for the requested SIs (SATD and SAD in the code example) fit onto it [29]. The programmer may also explicitly request specific DPs that shall be loaded into the fabric, and the run-time system will consider these requests when selecting SI implementations for the task. This manual intervention may be used if the SI requirements of a kernel are rather static, i.e. the run-time system does not need to provide adaptivity for its implementation. Additionally, it can be used to limit the search for SI implementations and thus reduce the overhead of the run-time system.
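The partitioning problem described above can be illustrated with a simple greedy heuristic. This is only a sketch under stated assumptions and not the selection algorithm of [29]: each SI implementation is reduced to a pair of required DPCs and cycles per execution, DPs are not shared between SIs, and all cycle counts and execution predictions are invented for the example.

```python
def select_si_implementations(sis, budget):
    """Greedy SI implementation selection (illustrative, hypothetical).

    sis maps an SI name to (expected_executions, implementations), where
    implementations is a list of (dpcs_needed, cycles_per_execution) that
    contains the core ISA fallback as (0, cycles).
    Returns {si_name: (dpcs_needed, cycles_per_execution)}.
    """
    # Start from the implementation that needs the least fabric (core ISA).
    chosen = {name: min(impls, key=lambda impl: impl[0])
              for name, (_, impls) in sis.items()}
    used = 0
    while True:
        best = None  # (saved cycles per extra DPC, SI name, implementation)
        for name, (executions, impls) in sis.items():
            cur_dpcs, cur_cycles = chosen[name]
            for dpcs, cycles in impls:
                extra = dpcs - cur_dpcs
                saved = executions * (cur_cycles - cycles)
                if extra > 0 and saved > 0 and used + extra <= budget:
                    gain = saved / extra
                    if best is None or gain > best[0]:
                        best = (gain, name, (dpcs, cycles))
        if best is None:  # no upgrade fits into the remaining share
            return chosen
        _, name, impl = best
        used += impl[0] - chosen[name][0]
        chosen[name] = impl


# Invented numbers: (expected executions, [(DPCs, cycles per execution), ...])
requested = {
    "SAD":  (1000, [(0, 40), (1, 12), (2, 8)]),
    "SATD": (400,  [(0, 90), (2, 30), (4, 14)]),
}
selection = select_si_implementations(requested, budget=4)
print(selection)
```

With a budget of zero DPCs the heuristic returns the core ISA implementation for every SI, which matches the worst case discussed for the first invade call.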

1. H264_encoder() {
2.   // Set i-Core microarchitecture parameters
3.   set_i_Core_parameter(pipeline_length=5,
         branch_prediction=2_2_correlation_predictor);
4.   // Invade a share of the reconfigurable fabric
5.   my_fabric=invade(resource=reconf_fabric,
         performance=trade_off_curve);
6.   while (frame=videoInput.getNextFrame()) {
7.     SI_implementations=invade(resource=my_fabric,
           SI={SAD, trade_off_curve[SAD],
               execution_prediction[SAD_ME]},
           SI={SATD, trade_off_curve[SATD],
               execution_prediction[SATD_ME]} );
8.     infect(resource=my_fabric, SI_implementations);
9.     motion_estimation(frame, ...);
10.    ...
11.    SI_implementations=invade(resource=my_fabric, ...);
12.    infect(resource=my_fabric, SI_implementations);
13.    encoding_engine(frame, ...);
14.    ...
15.  }
16. }

Figure 6: Pseudo code example for invading the reconfigurable fabric and the microarchitecture

After the run-time system has decided which SI implementations to use, the application can start loading the required DPs by issuing the infect system call (Line 8). DPs are loaded in parallel to the task execution, allowing the application to continue processing without waiting for the DPs to finish loading (which takes a non-negligible amount of time, in the order of milliseconds). This implies that the motion_estimation function in the code example (Line 9) will start executing before the DPs have finished loading. Reconfiguring DPs in parallel to task execution provides a speedup for the following reason: if an SI is executed but not all DPs for the desired implementation are available, the i-Core uses a slower implementation of the same SI that requires only the already loaded DPs (see Section 4.1). As additional DPs are loaded, faster SI implementations become available and are used automatically.
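The gradual upgrade during reconfiguration can be sketched as a lookup over the currently loaded DPs. The function pick_implementation, the DP names dp_a and dp_b, and the cycle counts are hypothetical and serve only to illustrate the fallback rule stated above.

```python
def pick_implementation(implementations, loaded_dps):
    """Return the fastest implementation whose DPs are all loaded.

    implementations is a list of (required_dps, cycles). The core ISA
    version requires no DPs, so at least one candidate is always usable.
    """
    usable = [impl for impl in implementations if impl[0] <= loaded_dps]
    return min(usable, key=lambda impl: impl[1])


# Hypothetical implementations of one SI, from core ISA to fully accelerated.
SATD_IMPLEMENTATIONS = [
    (frozenset(), 90),                 # core ISA, always available
    (frozenset({"dp_a"}), 50),         # partially accelerated
    (frozenset({"dp_a", "dp_b"}), 20), # desired implementation
]

loaded = set()
cycles_over_time = []
for newly_loaded in (None, "dp_a", "dp_b"):
    if newly_loaded is not None:
        loaded.add(newly_loaded)  # one more DP has finished loading
    _, cycles = pick_implementation(SATD_IMPLEMENTATIONS, loaded)
    cycles_over_time.append(cycles)

print(cycles_over_time)
```

Each execution of the SI simply uses the best implementation available at that moment, so the kernel never has to wait for the reconfiguration to complete.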

After completing execution of a particular kernel (e.g. motion_estimation in Line 9), an entirely different set of SIs may be executed on the same share of the fabric that the task obtained during its initial invade call. The fabric must, however, be prepared for execution of the new SIs (invade and infect, lines 11-13).

5. Results

In this section, a first evaluation of the i-Core is given, focusing on the application-specific instruction set extensions (ISEs) for different tasks. The SIs for speeding up the tasks were developed manually to demonstrate the feasibility and the performance benefits of the i-Core. Figure 7 shows the speedup of four different tasks when accelerated by DPs on the reconfigurable fabric in comparison to executing the tasks without SIs. The obtained speedup depends on the size of the reconfigurable fabric, which is expressed by the number of so-called Data Path Containers (DPCs), i.e. regions of the reconfigurable fabric into which a DP can be reconfigured. For the results in Figure 7, each task uses the available number of DPCs on its own, i.e. the reconfigurable fabric is not shared among multiple tasks. Tasks like the CRC calculation require only a few DPs for acceleration. With just one available DPC, a speedup of 2.51x is obtained for CRC. Further DPCs do not lead to further performance benefits for this task. The JPEG decoder approaches its peak performance at 5 DPCs (3.49x speedup); with more than 5 DPCs, only minor additional performance improvements are achieved (up to 3.81x). The image-processing library SUSAN and the H.264 video encoder achieve noticeable performance improvements of more than 18x each. Both tasks consist of multiple kernels that execute repeatedly after each other, i.e. the DPCs are continuously reconfigured to fulfill the tasks' requirements.
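The reported numbers can be put into relation with a little arithmetic. The sketch below assumes that 3.49x is the JPEG decoder speedup at 5 DPCs and that 3.81x is its speedup with the largest fabric; the helper names are illustrative.

```python
def relative_gain(speedup_before: float, speedup_after: float) -> float:
    """Additional improvement when moving from one speedup to a higher one."""
    return speedup_after / speedup_before - 1.0


def time_saved(speedup: float) -> float:
    """Fraction of the original execution time that is saved."""
    return 1.0 - 1.0 / speedup


# JPEG decoder: 3.49x at 5 DPCs versus up to 3.81x with more DPCs.
print(f"JPEG gain beyond 5 DPCs: {relative_gain(3.49, 3.81):.1%}")
# CRC: 2.51x with a single DPC.
print(f"CRC execution time saved: {time_saved(2.51):.1%}")
```

Under this reading, all DPCs beyond the fifth add roughly 9% for the JPEG decoder, whereas the single DPC used by CRC already removes about 60% of its execution time.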

[Figure 7 plot: Application Speedup (vertical axis, 0 to 24) over Size of Reconfigurable Fabric [#DPCs] (horizontal axis, 1 to 25); series: CRC, JPEG Decoder, SUSAN, H.264 Encoder]

Figure 7: Speedup of different tasks (in comparison to execution without SIs) when executing on the i-Core in single-tasking mode (i.e. the entire reconfigurable fabric is available for the task)

Figure 8 shows the speedup of the same tasks as used in Figure 7, but here all tasks are executed at the same time, i.e. they need to share the available reconfigurable fabric (shown as the horizontal axis). Consequently, the performance improvement for a given number of DPCs is lower than the one shown in Figure 7, as not all available DPCs are assigned to one task. Altogether, five tasks execute, as two instances of the H.264 video encoder run at the same time. The figure shows that the characteristics of the performance improvement are similar to the case where each task executes on its own, i.e. when it can utilize the entire reconfigurable fabric rather than sharing it. This demonstrates that it is possible and beneficial to share the reconfigurable fabric among the tasks.

[Figure 8 plot: Application Speedup (vertical axis, 0 to 20) over Size of Reconfigurable Fabric [#DPCs] (horizontal axis, 1 to 25); series: CRC, JPEG Decoder, SUSAN, H.264 Encoder 1, H.264 Encoder 2]

Figure 8: Speedup of different tasks (in comparison to execution without SIs) when executing on the i-Core in multi-tasking mode (i.e. the reconfigurable fabric is shared among all tasks)

6. Conclusion

This paper presented the i-Core concept, a reconfigurable processor that provides very high adaptivity by utilizing a reconfigurable fabric (to implement Special Instructions) and a reconfigurable microarchitecture. The combination of an adaptive instruction set architecture and microarchitecture allows optimizing performance-relevant characteristics of the i-Core to task-specific requirements, which makes the i-Core especially beneficial in multi-tasking scenarios, where different tasks compete for the available resources. We evaluated the i-Core and demonstrated its conceptual advantages when several of these tasks execute together in a multi-tasking environment.

7. Acknowledgement

This work was supported by the German Research Foundation (DFG) as part of the Transregional Collaborative Research Centre "Invasive Computing" (SFB/TR 89).

References

[1] J. Henkel, "Closing the SoC design gap," Computer, vol. 36, no. 9, pp. 119-121, September 2003.

[2] K. Keutzer, S. Malik, and A. R. Newton, "From ASIC to ASIP: The next design discontinuity," in International Conference on Computer Design (ICCD). IEEE Computer Society, September 2002, pp. 84-90.

[3] J. Henkel and S. Parameswaran, Designing Embedded Processors: A Low Power Perspective. Springer Publishing Company, Incorporated, 2007.

[4] N. Clark, H. Zhong, and S. Mahlke, "Processor acceleration through automated instruction set customization," in Proceedings of the International Symposium on Microarchitecture (MICRO), December 2003, pp. 129-140.

[5] K. Atasu, L. Pozzi, and P. Ienne, "Automatic application-specific instruction-set extensions under microarchitectural constraints," in Proceedings of the 40th annual Conference on Design Automation (DAC), June 2003, pp. 256-261.

[6] F. Sun, S. Ravi, A. Raghunathan, and N. K. Jha, "A scalable application-specific processor synthesis methodology," in Proceedings of the International Conference on Computer-Aided Design (ICCAD), November 2003, pp. 283-290.

[7] N. Cheung, J. Henkel, and S. Parameswaran, "Rapid configuration and instruction selection for an ASIP: a case study," in IEEE/ACM Proceedings of Design Automation and Test in Europe (DATE), March 2003, pp. 802-807.

[8] K. Compton and S. Hauck, "Reconfigurable computing: a survey of systems and software," ACM Computing Surveys (CSUR), vol. 34, no. 2, pp. 171-210, June 2002.

[9] F. Barat and R. Lauwereins, "Reconfigurable instruction set processors: A survey," in Proceedings of the 11th IEEE International Workshop on Rapid System Prototyping (RSP), June 2000, pp. 168-173.

[10] S. Vassiliadis and D. Soudris, Fine- and Coarse-Grain Reconfigurable Computing. Springer Publishing Company, Incorporated, 2007.

[11] H. P. Huynh and T. Mitra, "Runtime adaptive extensible embedded processors - a survey," in Proceedings of the 9th International Workshop on Embedded Computer Systems: Architectures, Modeling, and Simulation (SAMOS), July 2009, pp. 215-225.

[12] T. v. Sydow, B. Neumann, H. Blume, and T. G. Noll, "Quantitative analysis of embedded FPGA-architectures for arithmetic," in Proceedings of the IEEE 17th International Conference on Application-specific Systems, Architectures and Processors (ASAP), September 2006, pp. 125-131.

[13] B. Neumann, T. von Sydow, H. Blume, and T. G. Noll, "Design flow for embedded FPGAs based on a flexible architecture template," in Proceedings of the conference on Design, automation and test in Europe (DATE), 2008, pp. 56-61.

[14] M. Huebner, P. Figuli, R. Girardey et al., "A heterogeneous multicore system on chip with run-time reconfigurable virtual FPGA architecture," in Proc. of Reconfigurable Architectures Workshop (RAW), May 2011.

[15] S. Vassiliadis, S. Wong, G. Gaydadjiev et al., "The MOLEN polymorphic processor," IEEE Transactions on Computers (TC), vol. 53, no. 11, pp. 1363-1375, November 2004.

[16] R. Wittig and P. Chow, "OneChip: an FPGA processor with reconfigurable logic," in IEEE Symposium on FPGAs for Custom Computing Machines, April 1996, pp. 126-135.

[17] J. A. Jacob and P. Chow, "Memory interfacing and instruction specification for reconfigurable processors," in Proceedings of the ACM/SIGDA 7th international symposium on Field Programmable Gate Arrays (FPGA), February 1999, pp. 145-154.

[18] J. E. Carrillo and P. Chow, "The effect of reconfigurable units in superscalar processors," in Proceedings of the international symposium on Field Programmable Gate Arrays (FPGA), February 2001, pp. 141-150.

[19] R. Lysecky, G. Stitt, and F. Vahid, "Warp processors," ACM Transactions on Design Automation of Electronic Systems (TODAES), vol. 11, no. 3, pp. 659-681, June 2006.

[20] M. Dales, "Managing a reconfigurable processor in a general purpose workstation environment," in Design, Automation and Test in Europe Conference and Exhibition (DATE), March 2003, pp. 980-985.

[21] E. Lübbers and M. Platzner, "ReconOS: An RTOS supporting hard- and software threads," in International Conference on Field Programmable Logic and Applications (FPL), August 2007, pp. 441-446.

[22] L. Bauer, M. Shafique, S. Kramer, and J. Henkel, "RISPP: Rotating Instruction Set Processing Platform," in Proceedings of the 44th annual Conference on Design Automation (DAC), June 2007, pp. 791-796.

[23] L. Bauer, M. Shafique, and J. Henkel, "Efficient resource utilization for an extensible processor through dynamic instruction set adaptation," IEEE Transactions on Very Large Scale Integration Systems (TVLSI), Special Section on Application-Specific Processors, vol. 16, no. 10, pp. 1295-1308, October 2008.

[24] R. König, L. Bauer, T. Stripf et al., "KAHRISMA: A novel hypermorphic reconfigurable-instruction-set multi-grained-array architecture," in Proceedings of the 13th conference on Design, Automation and Test in Europe (DATE), March 2010, pp. 819-824.

[25] W. Ahmed, M. Shafique, L. Bauer, and J. Henkel, "mRTS: Run-time system for reconfigurable processors with multi-grained instruction-set extensions," in Proceedings of the 14th conference on Design, Automation and Test in Europe (DATE), March 2011, pp. 1554-1559.

[26] SPARC International, Inc., "The SPARC architecture manual, version 8," http://www.sparc.org/specificationsDocuments.html#V8, http://gaisler.com/doc/sparcv8.pdf.

[27] L. Bauer, M. Shafique, and J. Henkel, "A computation- and communication-infrastructure for modular special instructions in a dynamically reconfigurable processor," in 18th International Conference on Field Programmable Logic and Applications (FPL), September 2008, pp. 203-208.

[28] J. Teich, J. Henkel, A. Herkersdorf et al., "Invasive computing: An overview," in Multiprocessor System-on-Chip - Hardware Design and Tool Integration, M. Hübner and J. Becker, Eds. Springer, Berlin, Heidelberg, 2011, pp. 241-268.

[29] L. Bauer, M. Shafique, and J. Henkel, "Run-time instruction set selection in a transmutable embedded processor," in Proceedings of the 45th annual Conference on Design Automation (DAC), June 2008, pp. 56-61.

[30] R. I. Bahar and S. Manne, "Power and energy reduction via pipeline balancing," in Proceedings of the International Symp. on Computer architecture (ISCA), 2001, pp. 218-229.

[31] S. Ghiasi, J. Casmira, and D. Grunwald, "Using IPC variation in workloads with externally specified rates to reduce power consumption," in Workshop on Complexity Effective Design, 2000.

[32] T. Juan, S. Sanjeevan, and J. J. Navarro, "Dynamic history-length fitting: a third level of adaptivity for branch prediction," in Proceedings of the international symposium on Computer architecture (ISCA), 1998, pp. 155-166.

[33] D. H. Albonesi, "Selective cache ways: on-demand cache resource allocation," in Proceedings of the 32nd annual ACM/IEEE international symposium on Microarchitecture (MICRO), 1999, pp. 248-259.

[34] S. Kaxiras, Z. Hu, and M. Martonosi, "Cache decay: exploiting generational behavior to reduce cache leakage power," in Proceedings of the 28th annual international symposium on Computer architecture (ISCA), 2001, pp. 240-251.

[35] A. V. Veidenbaum, W. Tang, R. Gupta et al., "Adapting cache line size to application behavior," in Proceedings of the intl. conference on Supercomputing (ICS), 1999, pp. 145-154.

[36] F. Nowak, R. Buchty, and W. Karl, "A run-time reconfigurable cache architecture," in International Conference on Parallel Computing: Architectures, Algorithms and Applications, 2007, pp. 757-766.

[37] J. Tao, M. Kunze, F. Nowak et al., "Performance advantage of reconfigurable cache design on multicore processor systems," International Journal of Parallel Programming, vol. 36, no. 3, pp. 347-360, 2008.
