Project Report On A Foreground Extraction Algorithm Based On Adaptively


A Foreground Extraction Algorithm Based on Adaptively Adjusted Gaussian Mixture Models

Abstract: Background subtraction is a widely used method for moving object detection in the computer vision field. To cope with highly dynamic and complex environments, mixture models have been proposed. In this paper, a background subtraction method is proposed based on the popular Gaussian Mixture Models technique, and a scheme is put forward to adaptively adjust the number of Gaussian distributions in order to speed up execution. Moreover, an edge-based image is utilized to weaken the effect of illumination changes and shadows of moving objects. The final foreground mask is extracted by the proposed data fusion scheme. Experimental results validate the performance of the proposed algorithm in both computational complexity and segmentation quality.

Keywords: Background subtraction; Gaussian Mixture Model; Motion detection

I. INTRODUCTION

The ability to estimate non-stationary temporal distributions efficiently and accurately is a key element of any robust vision system. Background subtraction is a method typically used to segment moving regions in video sequences by comparing each new frame to a model of the scene background. It has been used successfully for indoor and outdoor applications. Generally speaking, there are two types of background subtraction algorithms: pixel modeling and global modeling. Pixelwise approaches treat each pixel as the output of an independent random process, while global modeling considers spatial correlations. Simple adaptive background differencing has become one of the simplest solutions, since it is sensitive to motion in the background and is not influenced by gradual illumination changes. However, its high sensitivity leads to very poor results against complex backgrounds, especially when periodic noise is present. In real scenes, complex backgrounds such as snowy or windy conditions make the conventional algorithm unfit for practical surveillance systems.

Stauffer and Grimson [2,5] modeled each pixel as a mixture of Gaussians, and P. KaewTraKulPong et al. [6] proposed an online EM algorithm to update the model. Even though 3 to 5 Gaussian distributions are able to model a multi-modal background in difficult situations such as shaking tree branches, clutter and so forth, this kind of pixel-based background modeling is sensitive to noise and illumination change. Much effort has been made to modify the model or integrate

III. GMM BASED ADAPTIVELY ADJUSTED MECHANISM

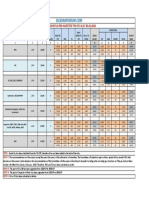

Even though K (3 to 5) Gaussian distributions are capable of modeling a multimodal background, this number of Gaussian distributions imposes a heavy computational load on a surveillance system. If K equals 5, a single 320x240 frame requires 320x240x5 = 384,000 Gaussian distributions, each needing its weight, mean, and variance updated across the three RGB channels during learning. This makes real-time operation difficult to achieve, let alone at the higher resolutions needed today. In fact, not all background pixels change repetitively. For pixels where little repetitive motion occurs, such as the ground, houses and parking lot in the scene of Fig. 1(a), the first (Fig. 1(b)) and second (Fig. 1(c)) highest-weighted Gaussians at each pixel are adequate to model the multiple possibilities for the background. As Fig. 1(d), (e) and (f) show, most of the third, fourth and fifth highest-weighted Gaussians were updated much less, except in the car-passing area, which should be foreground. Based on this analysis, the Adaptively Adjusted Mechanism was proposed to reduce the number of Gaussian distributions that contribute little to the multiple possibilities in background modeling. The analysis and experiments show that a different number of models can be adopted for different pixels. The update of weight, mean and variance in our proposal is based on the online EM algorithm [6] proposed by P. KaewTraKulPong et al., which allows fast convergence to a stable background model for GMM. The main procedure of the adaptive Elimination Mechanism for GMM is as follows:

E-step: As in the online EM algorithm, we begin estimating the Gaussian Mixture Model from expected sufficient statistics; this is called the E-step. Because the complexity of a background pixel is unpredictable, and because the first L frames are very important for the Gaussian models to capture the dominant background component and achieve stable adaptation, we keep the number of Gaussian models, K, fixed for each pixel during the E-step. Experiments also show that this provides a good estimate, which helps to improve the accuracy of the M-step process. For the initialization part,

VI. CONCLUSIONS

This paper presents a new background subtraction scheme based on Gaussian Mixture Models. We proposed an Adaptively Adjusted Mechanism to remove Gaussian distributions that contribute little to background modeling. Through this method, the execution speed for video is improved. Additionally, image segmentation based on edge information has been introduced. By lessening the influence of illumination changes and excluding the shadows of moving objects, the quality of the segmentation mask is enhanced. Experimental results validate the gains in both processing speed and segmentation quality. The proposed algorithm works very well against various complex backgrounds in both outdoor and indoor surveillance systems.
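The idea of removing Gaussian distributions that contribute little to a pixel's background model can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes a single grayscale pixel rather than three RGB channels, uses a simplified running-average update in place of the exact online EM equations of [6], and prunes with a plain weight threshold, which is a hypothetical criterion not taken from the paper. The names `PixelGMM`, `window`, `min_weight`, `match_sigma`, `init_var` and `min_var` are all illustrative.

```python
import numpy as np


class PixelGMM:
    """Scalar-intensity Gaussian mixture for a single pixel (illustrative only)."""

    def __init__(self, k=5, init_var=225.0, min_var=4.0, match_sigma=2.5):
        self.w = np.full(k, 1.0 / k)          # component weights
        self.mu = np.linspace(0.0, 255.0, k)  # means spread over the intensity range
        self.var = np.full(k, init_var)       # component variances
        self.init_var = init_var
        self.min_var = min_var                # variance floor to avoid division by zero
        self.match_sigma = match_sigma        # match threshold in standard deviations
        self.n = 0                            # number of frames seen so far

    def update(self, x, window=50):
        """Fold one new intensity sample x into the mixture."""
        self.n += 1
        # Assumed schedule: rate 1/(n+1) during the first frames, then fixed at 1/window.
        alpha = 1.0 / min(self.n + 1, window)
        dist = np.abs(x - self.mu) / np.sqrt(self.var)
        j = int(np.argmin(dist))
        if dist[j] <= self.match_sigma:
            # Matched: raise the matched weight and decay the others.
            owner = np.zeros_like(self.w)
            owner[j] = 1.0
            self.w += alpha * (owner - self.w)
            rho = min(1.0, alpha / self.w[j])
            diff = x - self.mu[j]
            self.mu[j] += rho * diff
            new_var = self.var[j] + rho * (diff * diff - self.var[j])
            self.var[j] = max(new_var, self.min_var)
        else:
            # No match: recycle the weakest component for the new value.
            j = int(np.argmin(self.w))
            self.w *= 1.0 - alpha
            self.mu[j], self.var[j], self.w[j] = x, self.init_var, alpha
            self.w /= self.w.sum()

    def prune(self, min_weight=0.05):
        """Drop components whose weight fell below min_weight (hypothetical rule)."""
        keep = self.w >= min_weight
        keep[int(np.argmax(self.w))] = True   # always keep the dominant component
        self.w = self.w[keep] / self.w[keep].sum()
        self.mu, self.var = self.mu[keep], self.var[keep]
```

In this sketch, a pixel that sees an unchanging intensity for the learning period ends up with a single component after `prune()`, so later updates touch one Gaussian instead of five. A pixel with repetitive motion would keep more components, which mirrors the observation that different pixels can use different model numbers.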

Histogram-based Foreground Object Extraction for Indoor and Outdoor Scenes

Mandar Kulkarni

IPCV Lab, Electrical Engg. Department

Indian Institute of Technology Madras

Chennai, India

mandareln40@gmail.com

ABSTRACT

Extracting foreground objects is an important task in many video processing and analysis systems. In this paper, we propose a technique for foreground object extraction under a static camera condition. In our approach, the spatial histogram of a single background image is modeled as a Mixture of Gaussians, and this model is updated after every few frames. To extract the foreground, input frames are compared with the current background frame model, and foreground pixels are classified according to intensity differences. To mitigate the errors caused by movement of background objects (e.g., tree leaves in outdoor scenes), we also incorporate optical flow in an efficient manner. We demonstrate the performance of our approach on various indoor and outdoor scenes.

Foreground extraction is an important task in many computer vision applications. In this paper, we propose a method which models the histogram of an initial background frame by a mixture of Gaussians. Generally, a natural background includes large objects such as trees, roads, floors, buildings, walls, etc., each of which contains pixels with similar intensity values, but whose intensities differ considerably from each other. Hence, the histogram of a background frame containing multiple objects is usually multi-modal and can be approximated by a Mixture of Gaussians. The number of Gaussians is determined by the number of objects present in the background. We also update the background histogram model at regular intervals to adapt to illumination variations over time. We use the Expectation Maximization (EM) algorithm to find the maximum likelihood parameters of every Gaussian component. To detect foreground objects, we compare the input frame with the current background histogram model. Pixels showing higher intensity deviations than background pixels are classified as foreground objects. The threshold for foreground classification is computed from the current background model. We also account for the fact that if a classified foreground object remains stationary for a long time, its corresponding pixels are re-classified as background. To improve the results under significant background motion, we also incorporate optical flow efficiently in our framework. We provide various qualitative and quantitative results on indoor and outdoor scenes. An illustration of our approach is shown in Fig. 1. Note the different intensities of the background objects such as the road, building, etc. These differences show up in the multi-modal histogram (Fig. 1(b)), where the blue line indicates the histogram of the background frame and the red line indicates the Gaussian approximation of the histogram. The foreground extraction results for the scene are shown in Figs. 1(d,f) for the corresponding frames in Figs. 1(c,e).
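The histogram-modeling step described above can be sketched as a weighted EM fit of a one-dimensional Gaussian mixture to the intensity histogram of a background frame. This is only an illustration under stated assumptions, not the paper's implementation: the function name `fit_histogram_gmm`, the quantile-based initialization, the initial variance and the variance floor are all hypothetical. The number of components `k` is passed in by the caller, whereas the paper determines it from the number of background objects.

```python
import numpy as np


def fit_histogram_gmm(image, k=2, iters=50):
    """Fit a k-component 1-D Gaussian mixture to the intensity histogram of `image`.

    `image` is expected to hold non-negative integer intensities (e.g. uint8).
    Returns (weights, means, variances), sorted by mean.
    """
    counts = np.bincount(image.ravel().astype(np.int64), minlength=256).astype(float)
    levels = np.arange(counts.size, dtype=float)
    total = counts.sum()

    # Initialization (an assumption): place the means at evenly spaced quantiles.
    cdf = np.cumsum(counts) / total
    quantiles = (np.arange(k) + 0.5) / k
    mu = np.searchsorted(cdf, quantiles).astype(float)
    var = np.full(k, 100.0)
    w = np.full(k, 1.0 / k)

    for _ in range(iters):
        # E-step: responsibility of each component for each intensity level.
        sq = (levels[None, :] - mu[:, None]) ** 2
        pdf = np.exp(-0.5 * sq / var[:, None]) / np.sqrt(2.0 * np.pi * var[:, None])
        joint = w[:, None] * pdf
        resp = joint / np.maximum(joint.sum(axis=0, keepdims=True), 1e-300)

        # M-step: re-estimate parameters, weighting each level by its bin count.
        weighted = resp * counts[None, :]
        nk = np.maximum(weighted.sum(axis=1), 1e-12)
        w = nk / nk.sum()
        mu = (weighted * levels[None, :]).sum(axis=1) / nk
        var = (weighted * (levels[None, :] - mu[:, None]) ** 2).sum(axis=1) / nk
        var = np.maximum(var, 1.0)  # variance floor keeps the densities finite

    order = np.argsort(mu)
    return w[order], mu[order], var[order]
```

Working on the 256-bin histogram instead of individual pixels keeps each EM iteration independent of the image size, which is one reason a histogram model can be refitted at regular intervals at low cost. How the foreground threshold is derived from the fitted model is not specified in this excerpt, so it is left out of the sketch.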
