Artificial Intelligence Unit 2 Solving Problems by Searching

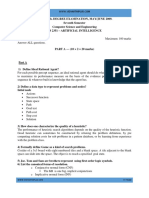

Solving Problems by Searching AI-Unit II

A.Aslesha Lakshmi

Asst. Prof, CSE, VNRVJIET

Artificial intelligence

CHAPTER 3 Solving Problems by Searching

Problem-solving agents decide what to do by finding sequences of actions that lead to desirable states.

Uninformed search algorithms are given no information about the problem other than its definition.

Intelligent agents maximize their performance measure by adopting a goal and aiming at satisfying it.

Goal formulation, based on the current situation and the agent's performance measure, is the first step in problem solving.

Problem formulation is the process of deciding what actions and states to consider given a goal.

An agent with several immediate options of unknown value can decide what to do by first examining different possible sequences of actions that lead to states of known value and then choosing the best sequence. This process of looking for such a sequence is called search.

A search algorithm takes a problem as input and returns a solution in the form of an action sequence. Once a solution is found, the actions it recommends can be carried out. This is called the execution phase.

Problem solving agent:

This agent design assumes that the environment is static, observable, discrete and deterministic.

A problem can be defined by four components:

a) Initial state

b) Possible actions available to the agent, described by a successor function. Given a particular state x, SUCCESSOR-FN(x) returns a set of <action, successor> ordered pairs.

The initial state and successor function together define the state space of the problem (the set of all states reachable from the initial state). The state space forms a graph in which the nodes are states and the arcs between nodes are actions. A path in the state space is a sequence of states connected by a sequence of actions.

c) Goal test: determines whether a given state is a goal state.

d) Path cost: assigns a numeric cost to each path (the sum of the costs of the individual actions along the path). The step cost of taking action a to go from state x to state y is c(x, a, y).

A solution to a problem is a path from the initial state to a goal state. Solution quality is measured by the path cost function, and an optimal solution has the lowest path cost among all solutions.
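The four components can be captured in a small data structure. The following is a minimal sketch in Python, not a definitive implementation: the class name `Problem`, the toy graph `GRAPH`, and its state and action names are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical toy state space: state -> {action: (successor, step cost)}.
GRAPH: Dict[str, Dict[str, Tuple[str, int]]] = {
    "A": {"go-B": ("B", 1), "go-C": ("C", 4)},
    "B": {"go-C": ("C", 2), "go-D": ("D", 5)},
    "C": {"go-D": ("D", 1)},
    "D": {},
}


@dataclass
class Problem:
    initial_state: str
    goal_state: str

    def successor_fn(self, state: str) -> List[Tuple[str, str]]:
        """Return the <action, successor> pairs available in `state`."""
        return [(action, nxt) for action, (nxt, _) in GRAPH[state].items()]

    def goal_test(self, state: str) -> bool:
        return state == self.goal_state

    def step_cost(self, x: str, action: str, y: str) -> int:
        """c(x, a, y): cost of taking `action` in state x to reach y."""
        return GRAPH[x][action][1]

    def path_cost(self, start: str, actions: List[str]) -> int:
        """Sum of the step costs along the path produced by `actions`."""
        total, state = 0, start
        for action in actions:
            nxt = GRAPH[state][action][0]
            total += self.step_cost(state, action, nxt)
            state = nxt
        return total


problem = Problem("A", "D")
direct = problem.path_cost("A", ["go-B", "go-D"])           # 1 + 5
optimal = problem.path_cost("A", ["go-B", "go-C", "go-D"])  # 1 + 2 + 1
```

Both action sequences are solutions in this toy graph, but only the second has the lowest path cost and is therefore optimal.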

Abstraction is the process of removing detail from a representation.

Example problems:

1. Toy problem

Vacuum cleaner world, formulated in terms of: states, initial state, successor function, goal test, path cost.

2. Real world problem

Travelling sales person problem

Searching for solutions:

Search techniques use an explicit search tree that is generated by the initial state and the successor function, which together define the state space. The root of the search tree is a search node corresponding to the initial state. The steps to be followed are:

a) Check whether the current state is a goal state.

b) If it is not a goal state, expand the current state by applying the successor function to it, thereby generating a new set of states.

c) Continue choosing, testing and expanding until either a solution is found or there are no more states to be expanded. The choice of which state to expand is determined by the search strategy.

A node is a bookkeeping data structure with five components:

a) state: the state in the state space to which the node corresponds

b) parent_node: the node in the search tree that generated this node

c) action: the action that was applied to the parent to generate the node

d) path_cost: the cost, denoted by g(n), of the path from the initial state to the node, as indicated by the parent pointers

e) depth: the number of steps along the path from the initial state

The collection of nodes that have been generated but not yet expanded is called the fringe. Each element of the fringe is a leaf node, that is, a node with no successors in the tree.
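The five components map naturally onto a small record type. The sketch below is one possible Python rendering; the helper names `child_node` and `solution` and the example states are hypothetical. It also shows how the parent pointers let the action sequence be recovered once a goal node is reached.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    state: str
    parent: Optional["Node"] = None   # node that generated this node
    action: Optional[str] = None      # action applied to the parent
    path_cost: float = 0.0            # g(n)
    depth: int = 0                    # steps from the initial state


def child_node(parent: Node, action: str, state: str, step_cost: float) -> Node:
    """Build the node reached from `parent` by `action` (hypothetical helper)."""
    return Node(state, parent, action, parent.path_cost + step_cost, parent.depth + 1)


def solution(node: Node) -> List[str]:
    """Follow parent pointers back to the root and return the action sequence."""
    actions = []
    while node.parent is not None:
        actions.append(node.action)
        node = node.parent
    return list(reversed(actions))


root = Node("A")
b = child_node(root, "go-B", "B", 1)
d = child_node(b, "go-D", "D", 5)
plan = solution(d)
```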

Measuring problem solving performance

a) Completeness: is the algorithm guaranteed to find a solution when one exists?

b) Optimality: does the strategy find the optimal solution?

c) Time complexity: how long does it take to find a solution?

d) Space complexity: how much memory is needed to perform the search?

General tree search algorithm:
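A minimal Python sketch of the general scheme is given here, under stated assumptions: the toy state space `GRAPH` and the names `tree_search`, `fifo` and `lifo` are hypothetical. The fringe holds the generated but unexpanded nodes, and the `pop` argument, which decides which node leaves the fringe next, is what encodes the search strategy.

```python
from collections import deque

# Hypothetical toy state space: state -> list of (action, successor).
GRAPH = {
    "A": [("go-B", "B"), ("go-C", "C")],
    "B": [("go-D", "D")],
    "C": [("go-G", "G")],
    "D": [],
    "G": [],
}


def tree_search(initial, goal_test, successors, pop):
    """Generic tree search; `pop` removes the next node from the fringe."""
    fringe = deque([(initial, [])])          # entries: (state, actions so far)
    while fringe:
        state, actions = pop(fringe)         # choose a node
        if goal_test(state):                 # test it
            return actions
        for action, nxt in successors(state):    # expand it
            fringe.append((nxt, actions + [action]))
    return None                              # nothing left to expand: failure


def fifo(fringe):
    return fringe.popleft()    # oldest node first (breadth-first behaviour)


def lifo(fringe):
    return fringe.pop()        # newest node first (depth-first behaviour)


plan = tree_search("A", lambda s: s == "G", lambda s: GRAPH[s], fifo)
no_plan = tree_search("A", lambda s: s == "Z", lambda s: GRAPH[s], lifo)
```

Because this is tree search with no record of visited states, it would loop forever on a state space containing cycles; the toy graph here is acyclic.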

In AI, the graph is represented implicitly by the initial state and successor function and is frequently infinite; complexity is expressed in terms of three quantities:

b: branching factor, or maximum number of successors of any node

d: depth of the shallowest goal node

m: maximum length of any path in the state space

To assess the effectiveness of a search algorithm, consider:

a) Search cost: typically depends on the time complexity, and can also include memory usage

b) Total cost: combines the search cost and the path cost of the solution found

Uninformed search strategies (also called blind search)

These strategies have no additional information about states beyond that provided in the problem definition.

Breadth first search: The root node is expanded first, then all the successors of the root node are expanded, then their successors, and so on.

a) Nodes that are visited first are expanded first, using a FIFO queue.

b) A FIFO queue puts all newly generated successors at the end of the queue, which means that shallow nodes are expanded before deeper nodes.
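The FIFO behaviour can be seen in a short Python sketch. The tree `TREE` and the function name `bfs_expansion_order` are hypothetical; the function records the order in which nodes are taken off the queue until the goal is selected.

```python
from collections import deque

# Hypothetical tree: node -> children, listed left to right.
TREE = {
    "A": ["B", "C"],
    "B": ["D", "E"],
    "C": ["F", "G"],
    "D": [], "E": [], "F": [], "G": [],
}


def bfs_expansion_order(root, goal):
    """Return the order in which nodes leave the FIFO fringe."""
    fringe = deque([root])
    order = []
    while fringe:
        node = fringe.popleft()        # oldest, and hence shallowest, node
        order.append(node)
        if node == goal:
            return order
        fringe.extend(TREE[node])      # new successors go to the back
    return order


order = bfs_expansion_order("A", "F")
```

Every node at depth 1 (B, C) is taken before any node at depth 2 (D, E, F), which is the level-by-level behaviour described above.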

Depth first search: Expands the deepest node in the current fringe of the search tree. The search proceeds immediately to the deepest level of the search tree, where the nodes have no successors. As those nodes are expanded they are dropped from the fringe, and the search then backs up to the next shallowest node that still has unexplored successors. This is implemented with a LIFO queue, also known as a stack.

Backtracking search: a variant of DFS that uses even less memory. Only one successor is generated at a time rather than all successors; each partially expanded node remembers which successor to generate next.

Drawback of DFS: it can get stuck going down a very long (or even infinite) path when a different choice would lead to a solution near the root of the search tree.
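A minimal Python sketch of depth first search with an explicit stack follows. The tree `TREE` and the function name `dfs_expansion_order` are hypothetical. Children are pushed in reverse so that the leftmost child is popped first.

```python
# Hypothetical tree: node -> children, listed left to right.
TREE = {
    "A": ["B", "C"],
    "B": ["D", "E"],
    "C": ["F", "G"],
    "D": [], "E": [], "F": [], "G": [],
}


def dfs_expansion_order(root, goal):
    """Return the order in which nodes leave the LIFO fringe (a stack)."""
    fringe = [root]
    order = []
    while fringe:
        node = fringe.pop()                    # most recently added node
        order.append(node)
        if node == goal:
            return order
        fringe.extend(reversed(TREE[node]))    # leftmost child ends up on top
    return order


order = dfs_expansion_order("A", "F")
```

The whole subtree under B is explored before C is touched, even though the goal F lies under C. On a very deep or infinite left branch the same behaviour is what causes the drawback noted above.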

CHAPTER 4 Informed Search and Exploration

An informed search strategy uses problem-specific knowledge and can therefore find solutions more efficiently.

Best first search: a node is selected for expansion based on an evaluation function f(n). The node with the lowest evaluation is selected for expansion, that is, the node that appears to be best according to the evaluation function. This is implemented via a priority queue.

A key component of best first search algorithms is the heuristic function, denoted by h(n).

h(n) = estimated cost of the cheapest path from node n to a goal node.

There are two ways to use heuristic information:

a) Greedy best first search: tries to expand the node that is closest to the goal. It evaluates nodes by using just the heuristic function: f(n) = h(n)

The algorithm is called greedy because at each step it tries to get as close to the goal as it can.

It resembles DFS in that it prefers to follow a single path all the way to the goal, but will back up when it hits a dead end.

Disadvantage: it is neither optimal nor complete.
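The following Python sketch illustrates greedy best first search on a small example. The graph `GRAPH`, the heuristic table `H` and the function names are hypothetical. The `visited` set is an addition that is not part of the description above; it only prevents the sketch from looping on repeated states.

```python
import heapq

# Hypothetical graph: state -> {successor: step cost}.
GRAPH = {
    "S": {"A": 1, "B": 2},
    "A": {"G": 6},
    "B": {"G": 3},
    "G": {},
}
# Hypothetical heuristic values h(n), estimated cost from n to the goal G.
H = {"S": 3, "A": 2, "B": 3, "G": 0}


def greedy_best_first(start, goal):
    """Always expand the fringe node with the smallest h(n)."""
    fringe = [(H[start], start, [start])]      # priority queue ordered by h
    visited = set()
    while fringe:
        _, state, path = heapq.heappop(fringe)
        if state == goal:
            return path
        if state in visited:
            continue
        visited.add(state)
        for nxt in GRAPH[state]:
            if nxt not in visited:
                heapq.heappush(fringe, (H[nxt], nxt, path + [nxt]))
    return None


def path_cost(path):
    return sum(GRAPH[a][b] for a, b in zip(path, path[1:]))


path = greedy_best_first("S", "G")
cost = path_cost(path)
```

In this example the search goes through A because h(A) < h(B), and returns a path of cost 7, although the path through B costs only 5. This is one way the lack of optimality shows up.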

b) A* search: evaluates nodes by combining g(n) and h(n)

g(n) = cost of the path from the start node to node n

h(n) = estimated cost to get from node n to the goal

f(n) = g(n) + h(n), where f(n) is the estimated cost of the cheapest solution through n.

A* is optimal if h(n) is an admissible heuristic, that is, provided h(n) never overestimates the cost to reach the goal.
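A minimal Python sketch of A* is shown below, ordering the fringe by f(n) = g(n) + h(n). The graph `GRAPH`, the heuristic table `H` and the function name `a_star` are hypothetical; the heuristic values were chosen so that h never overestimates the true remaining cost in this graph. The `best_g` table, which skips paths that are no cheaper than one already found to the same state, is an implementation choice of this sketch.

```python
import heapq

# Hypothetical graph: state -> {successor: step cost}.
GRAPH = {
    "S": {"A": 1, "B": 2},
    "A": {"G": 6},
    "B": {"G": 3},
    "G": {},
}
# Hypothetical admissible heuristic: h(n) <= true cost from n to G.
H = {"S": 3, "A": 2, "B": 3, "G": 0}


def a_star(start, goal):
    """Return (path, cost) of a cheapest path, or None if there is none."""
    fringe = [(H[start], 0, start, [start])]   # entries: (f, g, state, path)
    best_g = {start: 0}
    while fringe:
        f, g, state, path = heapq.heappop(fringe)
        if state == goal:
            return path, g
        if g > best_g.get(state, float("inf")):
            continue                            # a cheaper path was found later
        for nxt, step in GRAPH[state].items():
            new_g = g + step
            if new_g < best_g.get(nxt, float("inf")):
                best_g[nxt] = new_g
                heapq.heappush(fringe, (new_g + H[nxt], new_g, nxt, path + [nxt]))
    return None


result = a_star("S", "G")
```

Here A is expanded first (f = 3), but the goal reached through A has f = 7, so B (f = 5) is expanded next and the cheaper path through B is returned.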

CHAPTER 6 Adversarial Search

In multi-agent environments, an agent has to consider the actions of other agents.

Cooperative environment: taxi driving

Competitive environment: playing chess

Unpredictability in competitive multi-agent environments gives rise to adversarial search problems, known as games. The impact of each agent on the others is significant. In AI, the games usually studied are deterministic, turn-taking, two-player, zero-sum games of perfect information, in which the players' actions alternate and the utility values at the end of the game are equal and opposite.

Pruning allows us to ignore portions of the search tree that make no difference to the final choice.

Heuristic evaluation functions allow us to approximate the true utility of a state without doing a complete search.

Optimal decisions in games

A game can be defined as a kind of search problem with the following components:

a) Initial state

b) Successor function

c) Terminal test

d) Utility function

Given a game tree, the optimal strategy can be determined by examining the minimax value of each node, written as MINIMAX-VALUE(n). The minimax value of a node is the utility (for MAX) of being in the corresponding state, assuming that both players play optimally from there to the end of the game.

MAX prefers to move to a state of maximum value, whereas MIN prefers a state of minimum value.

Minimax algorithm

Computes the minimax decision from the current state. It uses a simple recursive computation of the minimax values of each successor state, directly implementing the defining equations. The recursion proceeds all the way down to the leaves of the tree, and then the minimax values are backed up through the tree as the recursion unwinds.
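The recursion can be sketched in a few lines of Python. This is a minimal illustration, assuming a hypothetical game tree given as nested lists whose leaves are utilities for MAX; the names `minimax_value`, `minimax_decision` and `TREE` are not from the notes.

```python
def minimax_value(node, is_max):
    """Minimax value of `node`; leaves are numbers (utility for MAX)."""
    if isinstance(node, (int, float)):
        return node                                # terminal state
    values = [minimax_value(child, not is_max) for child in node]
    return max(values) if is_max else min(values)  # back values up the tree


def minimax_decision(tree):
    """Index of the move at the root that MAX should choose."""
    values = [minimax_value(child, False) for child in tree]
    return values.index(max(values))


# Hypothetical two-ply game: MAX moves at the root, MIN replies.
TREE = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]

root_value = minimax_value(TREE, True)
best_move = minimax_decision(TREE)
```

The three MIN nodes back up the values 3, 2 and 2, so MAX's best move is the first one, with minimax value 3.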

Optimal decisions in multiplayer games

The idea of minimax can be extended to multiplayer games. First, replace the single value for each node with a vector of values. For terminal states, this vector gives the utility of the state from each player's viewpoint. This can be achieved by having the utility function return a vector of utilities.
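One way to back up such vectors, sketched below in Python under stated assumptions, is to let the player to move at each node pick the successor whose vector is best in that player's own component. The function name `vector_minimax`, the tree `TREE` and the three-player setup are hypothetical.

```python
def vector_minimax(node, player, num_players):
    """Back up utility vectors; leaves are tuples with one entry per player."""
    if isinstance(node, tuple):
        return node                                   # terminal utility vector
    next_player = (player + 1) % num_players
    children = [vector_minimax(c, next_player, num_players) for c in node]
    # The player to move keeps the vector that is best in its own component.
    return max(children, key=lambda vec: vec[player])


# Hypothetical three-player game: player 0 moves at the root, then player 1.
TREE = [
    [(1, 2, 6), (4, 3, 3)],
    [(6, 1, 2), (7, 4, 1)],
]

root_vector = vector_minimax(TREE, 0, 3)
```

Player 1 backs up (4, 3, 3) and (7, 4, 1) at the two inner nodes, and player 0 then chooses the second move because 7 > 4.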

Alpha beta pruning

Computes the correct minimax decision while pruning, which eliminates large parts of the tree from consideration. When applied to a standard minimax tree, it returns the same move as minimax would, but prunes away branches that cannot possibly influence the final decision.

The search is depth-first, and pruning can be applied to trees of any depth.

Alpha beta pruning gets its name from the two parameters that describe bounds on the backed-up values that appear anywhere along the path:

Alpha = the value of the best (highest-value) choice found so far at any choice point along the path for MAX

Beta = the value of the best (lowest-value) choice found so far at any choice point along the path for MIN

Alpha beta search updates the values of alpha and beta as it goes along, and prunes the remaining branches at a node as soon as the value of the current node is known to be worse than the current alpha value (for MAX) or beta value (for MIN).

Disadvantage: alpha beta still has to search all the way to terminal states for at least a portion of the search space.

In games, repeated states occur frequently because of transpositions: different permutations of the move sequence that end up in the same position.
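A minimal Python sketch of alpha beta search on a small tree follows. The nested-list tree `TREE`, the function name `alphabeta` and the `visited` list (used only to show which leaves are actually examined) are hypothetical.

```python
import math


def alphabeta(node, is_max, alpha=-math.inf, beta=math.inf, visited=None):
    """Minimax value of `node`, skipping branches that cannot matter."""
    if isinstance(node, (int, float)):
        if visited is not None:
            visited.append(node)        # record each leaf that is evaluated
        return node
    if is_max:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, False, alpha, beta, visited))
            if value >= beta:           # MIN above would never allow this
                break
            alpha = max(alpha, value)
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, True, alpha, beta, visited))
        if value <= alpha:              # MAX above already has something better
            break
        beta = min(beta, value)
    return value


# Hypothetical two-ply game: MAX moves at the root, MIN replies.
TREE = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]

seen = []
root_value = alphabeta(TREE, True, visited=seen)
```

After the first MIN node returns 3, alpha is 3 at the root. In the second MIN node the first leaf is 2, which is already worse for MAX than 3, so the leaves 4 and 6 are never examined. The root value is the same as plain minimax would give.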

Imperfect Real Time Decisions

The minimax algorithm generates the entire game search space, whereas the alpha beta algorithm allows us to prune large parts of it. Even so, alpha beta still has to search all the way to terminal states for part of the space, which is usually not practical when moves must be made in limited time. Hence programs should cut off the search earlier and apply a heuristic evaluation function to states in the search, effectively turning non-terminal nodes into terminal leaves. This alters minimax or alpha beta in two ways: the utility function is replaced by a heuristic evaluation function called EVAL, which gives an estimate of the position's utility, and the terminal test is replaced by a cut-off test that decides when to apply EVAL.

Evaluation functions:

An evaluation function returns an estimate of the expected utility of the game from a given position. First, the evaluation function should order the terminal states in the same way as the true utility function. Second, the computation must not take too long. Third, for non-terminal states, the evaluation function should be strongly correlated with the actual chances of winning.

Most evaluation functions work by calculating various features of the state. These features, taken together, define various categories or equivalence classes of states: the states in each category have the same values for all the features.

a) Expected value

b) Material value

c) Weighted linear function
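A weighted linear function combines the feature values f1(s), ..., fn(s) of a state s as w1*f1(s) + w2*f2(s) + ... + wn*fn(s). The Python sketch below is illustrative only: the function name, the feature encoding, and the chess material weights (pawn 1, knight 3, bishop 3, rook 5, queen 9) are assumptions and not taken from the notes.

```python
# Assumed material weights for pawn, knight, bishop, rook, queen.
WEIGHTS = [1, 3, 3, 5, 9]


def weighted_linear_eval(features, weights=WEIGHTS):
    """EVAL(s) = w1*f1(s) + w2*f2(s) + ... + wn*fn(s)."""
    return sum(w * f for w, f in zip(weights, features))


# Hypothetical position: each feature is (own piece count - opponent's count).
features = [2, 0, -1, 0, 0]      # two pawns up, one bishop down
score = weighted_linear_eval(features)
```

With these assumed weights the position scores 2*1 + (-1)*3 = -1, that is, slightly worse for the side being evaluated.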

Cutting off search:

Here we modify alpha beta search so that it calls the heuristic EVAL function when it is appropriate to cut off the search:

if CUTOFF-TEST(state, depth) then return EVAL(state)
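The modification can be sketched in Python as follows. This is a minimal illustration under stated assumptions: states are hypothetical dictionaries carrying a precomputed heuristic `estimate` and a list of `children`, the cut-off test is a simple fixed depth limit, and all names (`cutoff_test`, `eval_fn`, `h_alphabeta`, `leaf`, `TREE`) are invented for the example.

```python
import math


def cutoff_test(state, depth, limit):
    """Cut off at the depth limit or when the state has no successors."""
    return depth >= limit or not state["children"]


def eval_fn(state):
    """Heuristic estimate of the state's utility (stored in the toy tree)."""
    return state["estimate"]


def h_alphabeta(state, depth, limit, is_max, alpha=-math.inf, beta=math.inf):
    if cutoff_test(state, depth, limit):
        return eval_fn(state)
    value = -math.inf if is_max else math.inf
    for child in state["children"]:
        v = h_alphabeta(child, depth + 1, limit, not is_max, alpha, beta)
        if is_max:
            value = max(value, v)
            if value >= beta:
                break
            alpha = max(alpha, value)
        else:
            value = min(value, v)
            if value <= alpha:
                break
            beta = min(beta, value)
    return value


def leaf(v):
    return {"estimate": v, "children": []}


# Hypothetical two-ply game with heuristic estimates at the inner nodes.
TREE = {"estimate": 0, "children": [
    {"estimate": 5, "children": [leaf(3), leaf(12)]},
    {"estimate": 4, "children": [leaf(8), leaf(9)]},
]}

shallow = h_alphabeta(TREE, 0, 1, True)   # cut off after one ply
deep = h_alphabeta(TREE, 0, 2, True)      # search both plies
```

With a limit of 1 the search trusts the estimates 5 and 4 and returns 5; with a limit of 2 it reaches the leaves and returns 8. The answer therefore depends on both the depth limit and the quality of EVAL.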
