Contents

Introduction
Digital media formats
The essence of compression
The right tool for the job
Bridging the gaps (media exchange between diverse systems)
Transcoding workflows
Conclusion

Introduction

It's been more than three decades now since digital media emerged from the lab into real-world applications, igniting a communications revolution that continues to play out today. First up was the Compact Disc, featuring compression-free LPCM digital audio that was designed to fully reconstruct the source at playback. With digital video, on the other hand, it was clear from the outset that most recording, storage, and playback situations wouldn't have the data capacity required to describe a series of images with complete accuracy. And so the race was on to develop compression/decompression algorithms (codecs) that could adequately capture a video source while requiring as little data bandwidth as possible.

The intent of this paper is to provide an overview of the transcoding basics you'll need to know in order to make informed decisions about implementing transcoding in your workflow. That involves explaining the elements of the various types of digital media files used for different purposes, how transcoding works upon those elements, what challenges might arise in moving from one format to another, and what workflows might be most effective for transcoding in various common situations.


The Essentials of Video Transcoding

Enabling Acquisition, Interoperability, and Distribution in Digital Media

Telestream

Whitepaper

The good news is that astonishing progress has been made in the ability of codecs to allocate bandwidth effectively to the parts of a signal that are most critical to our perception of the content. Take, for example, the Advanced Audio Coding (AAC) codec popularized by Apple via iTunes. Its fidelity may be debatable for purists, but the fact is that 128 kbps AAC delivers audio quality that is acceptable to a great many people in a great many situations, and it uses only one-eleventh of the bitrate of CD-quality LPCM (16-bit/44.1 kHz stereo, about 1,411 kbps). Even more impressive, the codec used to enable high-definition (1920 x 1080) video broadcast under the ATSC television standard is capable of spectacular image quality at a bitrate of only 18 Mbps, which requires a compression ratio of 55:1 (or more).
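The two ratios quoted above can be checked with a little arithmetic. The sketch below is only an illustration: the paper does not state the frame rate or pixel format behind the 55:1 figure, so 30 frames per second and 16 bits per pixel (8-bit 4:2:2 sampling) are assumptions chosen here, and the function names are hypothetical.

```python
def lpcm_bitrate_bps(sample_rate_hz, bit_depth, channels):
    """Bitrate of uncompressed LPCM audio, in bits per second."""
    return sample_rate_hz * bit_depth * channels


def raw_video_bitrate_bps(width, height, fps, bits_per_pixel):
    """Bitrate of uncompressed video, in bits per second."""
    return width * height * fps * bits_per_pixel


# CD audio: 44.1 kHz, 16-bit, two channels.
cd_bps = lpcm_bitrate_bps(44_100, 16, 2)
audio_ratio = cd_bps / 128_000          # compared with 128 kbps AAC

# Assumed: 30 frames/s and 16 bits per pixel (8-bit 4:2:2).
hd_bps = raw_video_bitrate_bps(1920, 1080, 30, 16)
video_ratio = hd_bps / 18_000_000       # compared with an 18 Mbps broadcast stream

print(f"CD LPCM: {cd_bps / 1000:.1f} kbps, about {audio_ratio:.0f}x the AAC bitrate")
print(f"Raw HD:  {hd_bps / 1e6:.0f} Mbps, about {video_ratio:.0f}:1 compression")
```

Under different assumptions (for example 24 bits per pixel) the video ratio comes out higher, which is consistent with the "or more" in the text.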

With success, of course, comes competition and complication. Codecs make possible the extension of digital content into new realms (the desktop, the smartphone, etc.), each of which has constraints that are best addressed by optimizing a codec's performance for a particular platform in a particular situation. The result is an ever-expanding array of specialized codecs. Some codecs are used for content acquisition, others for editing and post-production, still others for storage and archiving, and still more for delivery to viewers on everything from big-screen TVs to mobile devices. Because content is increasingly used in a multitude of settings, it's become increasingly important to be able to move it efficiently from one digital format to another without degrading its quality. Simply put, that is the purpose of transcoding.

Perhaps the easiest way to realize the importance of transcoding is to imagine a world in which it doesn't exist. Suppose, for example, that you manage production for a local TV news team that has nabbed a bombshell interview. You've got an exclusive on the story only until the following day, and you need to get it on the evening news. Unfortunately, the digital video format of your ENG camera uses a different codec than that of your non-linear editor (NLE). So before you can even begin to edit you'll have to play the raw footage from the camera, decompressing it in real time while simultaneously redigitizing it in a format that your NLE can handle. Now suppose that you have to go through the same painfully slow routine to get the edited version to your broadcast server, and again to make a backup copy for archiving, and again to make a set of files at different bitrates for different devices to stream from your station's website. The inefficiency multiplies as you move through the process.

Transcoding bypasses this tedious, inefficient scenario. It allows programs to be converted from codec to codec faster than real time using standard IT hardware rather than more costly video routers and switches. And it facilitates automation because it avoids manual steps such as routing signals or transporting storage media. Intelligently implemented and managed, transcoding vastly improves throughput while preserving quality.

Digital media formats

The first rule of digital media file formats and codecs is that their number is always growing. A glance back in time will reveal some older formats that have fallen by the wayside, but also shows that there are far more formats in regular use today than there were fifteen or even five years ago. Different formats arise to fill different needs (more on this later), but they all share some basic characteristics.

At the simplest level a video-capable file format is a container or wrapper for component parts of at least three types. One type is video stream data and another is audio stream data; together the elementary streams of these two content types are referred to as the file's essence. The third component is metadata, which is data that is not itself essence but is instead about the essence.

Metadata can be anything from a simple file ID to a set of information about the content, such as the owner (rights holder), the artist, the year of creation, etc. In addition to these three, container formats may also support additional elementary content streams, such as the overlay graphics used in DVD-Video menus and subtitles.
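As a rough mental model, the container described above can be pictured as a small data structure. The class and field names in this sketch are hypothetical and do not correspond to any real file format or library; the point is only to show essence (video and audio streams), metadata, and optional extra streams side by side.

```python
from dataclasses import dataclass, field


@dataclass
class ElementaryStream:
    kind: str                      # "video", "audio", or an extra type such as "subtitle"
    codec: str
    attributes: dict = field(default_factory=dict)


@dataclass
class MediaContainer:
    wrapper: str                   # e.g. "MOV" or "MXF"
    streams: list = field(default_factory=list)
    metadata: dict = field(default_factory=dict)

    def essence(self):
        """Return the video and audio streams, which together form the essence."""
        return [s for s in self.streams if s.kind in ("video", "audio")]


clip = MediaContainer(
    wrapper="MOV",
    streams=[
        ElementaryStream("video", "ProRes", {"frame_size": (1920, 1080)}),
        ElementaryStream("audio", "LPCM", {"sample_rate": 48_000, "channels": 2}),
        ElementaryStream("subtitle", "text"),
    ],
    metadata={"title": "Example clip", "year": 2014},
)

print([s.kind for s in clip.essence()])   # the subtitle stream is not essence
```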

Component parts of a media container file


The following are examples of container file types that are in common use today:

- QuickTime MOV, an Apple-developed cross-platform multimedia format
- MPEG-2 Transport Stream and Program Stream, both developed by the Moving Picture Experts Group, with applications in optical media (DVD-Video, Blu-ray Disc) and digital broadcast (e.g. ATSC)
- WMV and MP4 for online distribution
- GXF (General eXchange Format), developed by Grass Valley and standardized by SMPTE
- LXF, a broadcast server format developed by Harris
- MXF (Material eXchange Format), standardized by SMPTE

Media file formats such as the above differ from one another in a multitude of ways. At the overall file level, these include the structure of the header (identifying information at the start of a file that tells a hardware device or software algorithm the type of data contained in the file), the organization of the component parts within the wrapper file, the way elementary streams are packetized and interleaved, the amount and organization of the file-level metadata, and the support for simultaneous inclusion of more than one audio and/or video stream, such as audio streams for multiple languages or video streams at different bitrates (e.g. for adaptive streaming formats such as Apple HLS or Microsoft Smooth Streaming).

At the essence level, format differences relate to the

technical attributes of the elementary streams. For

video, these include the frame size (resolution) and

shape (aspect ratio), frame rate, color depth, bit rate,

etc. For audio, such attributes include the sample rate,

the bit depth, and the number of channels.

Container file formats also differ greatly in the type and organization of the metadata they support, both at the file level and the stream level. In this context, metadata generally falls into one of two categories:

- Playback metadata is time-synchronized data intended for use by the playback device to correctly render the content to the viewer, such as:
  - captions, including VBI (Line 21) or teletext in SD and VANC in HD;
  - timecode (e.g. VITC);
  - aspect ratio (e.g. AFD);
  - V-Chip settings;
  - copy protection (e.g. CGMS).
- Content metadata describes the content in ways that are relevant to a particular application, workflow, or usage, such as:
  - for Web streaming: title, description, artist, etc.;
  - for television commercials: Ad-ID, Advertiser, Brand, Agency, Title, etc.;
  - for sporting events: event name, team names, athlete names, etc.

File formats are designed with various purposes in

mind, so naturally the metadata schemes used by those

formats are not all the same. A metadata scheme

designed for one purpose may not include the same

attributes as a scheme designed for a different purpose,

which can greatly complicate the task of preserving

metadata in the transcoding process.

The essence of compression

In many cases the most important difference between file formats, and the reason transcoding is required between them, is the codecs they support and the amount of compression applied to the video and audio streams that comprise each format's essence. Those factors are determined by the purpose for which each format was defined. The common video file formats fall into a half-dozen different usage categories, including acquisition, editing, archiving, broadcast servers, distribution, and proxy formats.

To understand why different compression schemes are

used in these different categories we need a quick

refresher about how compression works. In video,

compression schemes are generally based on one or

both of two approaches to reducing the overall amount

of data needed to represent motion pictures:

- Intra-frame compression reduces the data used to store each individual frame.
- Inter-frame compression reduces the data used to store a series of frames.

Both compression approaches start with the assumption that video contains a fair amount of redundancy. Within a given frame, for example, there may be many areas of adjacent pixels whose color and brightness values are identical. Lossless intra-frame compression simply expresses those areas more concisely. Rather than repeat the values for each individual pixel, the values may be stored once along with the number of sequential pixels to which they apply.
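The idea of storing a value once together with a repeat count is commonly called run-length encoding. The sketch below applies it to a single row of made-up pixel values; it is a toy illustration of the principle, not the scheme used by any particular video codec.

```python
def rle_encode(pixels):
    """Collapse runs of identical values into (value, count) pairs."""
    runs = []
    for value in pixels:
        if runs and runs[-1][0] == value:
            runs[-1][1] += 1
        else:
            runs.append([value, 1])
    return [(value, count) for value, count in runs]


def rle_decode(runs):
    """Expand (value, count) pairs back into the original sequence."""
    pixels = []
    for value, count in runs:
        pixels.extend([value] * count)
    return pixels


row = [200, 200, 200, 200, 90, 90, 17]
encoded = rle_encode(row)

print(encoded)                      # [(200, 4), (90, 2), (17, 1)]
print(rle_decode(encoded) == row)   # True: nothing was lost
```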


If greater compression is required, you then move into the realm of lossy compression, meaning that you begin discarding some of the information that you would need in order to reconstruct the original image with perfect accuracy. Adjacent pixels that are similar in color and brightness will be treated as if they are actually identical, so there's more redundancy and therefore less data is required to describe the overall picture. This reduces bandwidth requirements but increases the chance that the viewer will begin to see compression artifacts at playback, such as blockiness rather than smooth color gradients.
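One simple way to treat similar values as identical is to quantize them, that is, to snap each value down to a multiple of some step size. The sketch below uses made-up pixel values and an arbitrary step of 8, and counts runs of identical values before and after. Real codecs quantize transformed data rather than raw pixels, so this shows the trade-off only in miniature.

```python
from itertools import groupby


def quantize(pixels, step):
    """Round each value down to a multiple of `step`, discarding fine detail."""
    return [step * (p // step) for p in pixels]


def run_count(pixels):
    """Number of runs of identical adjacent values (fewer runs compress better)."""
    return sum(1 for _ in groupby(pixels))


row = [100, 101, 99, 102, 140, 141, 139]
coarse = quantize(row, 8)
max_error = max(abs(a - b) for a, b in zip(row, coarse))

print(run_count(row), "runs before,", run_count(coarse), "runs after")
print("largest per-pixel error:", max_error)
```

The quantized row collapses into far fewer runs, but the original values can no longer be recovered exactly, which is what makes the compression lossy.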

Inter-frame compression also assumes redundancy, in this case over time across a group of pictures (GOP) that is typically 12 to 15 frames in length, but may be as short as 3 frames or as long as a few hundred frames. The first frame in each GOP, called the I-frame, is stored in full while the subsequent frames (called B-frames and P-frames) are stored only in terms of how they differ from the I-frame or from each other. The greater the frame-to-frame redundancy, the less data it takes to store the differences. If limited bandwidth requires greater compression, a pixel that is similar from one frame to the next may be treated as if it is identical, thereby increasing redundancy. Inter-frame compression allows a drastic reduction in the overall amount of data, but it also means that at playback the only frame that can be reconstructed independently (without reference to other frames) will be the I-frame.
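The dependency between frames can be sketched with a toy difference coder. This is a deliberate simplification: each frame here is a short list of made-up pixel values, every later frame is stored only as its changes from the previous frame, and the motion prediction and bidirectional (B-frame) references of real codecs are left out.

```python
def encode_gop(frames):
    """Keep frame 0 in full; store each later frame as changes from its predecessor."""
    i_frame = list(frames[0])
    deltas = []
    for prev, cur in zip(frames, frames[1:]):
        changes = [(i, new) for i, (old, new) in enumerate(zip(prev, cur)) if old != new]
        deltas.append(changes)
    return i_frame, deltas


def decode_frame(i_frame, deltas, n):
    """Rebuild frame n by starting at the I-frame and replaying n sets of changes."""
    frame = list(i_frame)
    for changes in deltas[:n]:
        for index, value in changes:
            frame[index] = value
    return frame


frames = [
    [10, 10, 10, 10],
    [10, 10, 12, 10],
    [10, 10, 12, 14],
]
i_frame, deltas = encode_gop(frames)

print(deltas)                             # [[(2, 12)], [(3, 14)]]
print(decode_frame(i_frame, deltas, 2))   # [10, 10, 12, 14]
```

Decoding the last frame requires walking forward from the I-frame, which is why only the I-frame can be reconstructed on its own.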

The right tool for the job

The way that intra-frame and inter-frame compression are used (or not) for the video stream in a given file format has a direct impact on the suitability of that format for various tasks. If you're choosing a format for editing, its video codec must support independent access to every frame, so intra-frame compression is usually the ideal choice. If you're choosing a format for long-term archiving, then independent access to every frame is not a priority, so you can significantly reduce your bitrate and file size by using both intra-frame and inter-frame compression. Distinct but similar considerations apply to audio as well, with differences in supported audio codecs once again affecting suitability for different purposes.

The following are examples of different applications for

which container formats are used, and the types of

codecs that are commonly used to compress essence

in those applications:

- Editing applications use formats such as DV, Apple's ProRes, Avid's DNxHD, and Sony's IMX. These formats typically use only intra-frame compression, resulting in video streams composed entirely of I-frames that can each be decompressed on their own. The quality of the source is maintained and the material is easy to seek and edit on individual frames. But the resulting bitrates are high, ranging up to nearly 150 Mbps. The easiest approach to editing the accompanying audio streams is to leave them as uncompressed LPCM.
- Archiving applications require high quality, and so may be handled using JPEG 2000 or DV video elementary streams within a QuickTime or AVI container. But reducing storage requirements is often a higher priority than maintaining independent access to every frame, so inter-frame compression may be applied as well, typically using long-GOP (15-frame) formats such as MXF-wrapped MPEG-2 at 36 Mbps or higher. Audio streams are often left as PCM or encoded to Dolby E, which uses two channels of 20-bit PCM to store up to eight channels of sound.
- Broadcast server formats are designed for use with proprietary systems, such as the Grass Valley K2, Omneon Spectrum, or Harris Nexio, that serve as the source from which stations pull programs for the feed to the broadcast transmitter or cable signal distribution system. Editability is not a requirement. The video compression employed is typically long-GOP MPEG-2. Audio may or may not be compressed.

Compared to codecs using intra-frame compression only

(top), inter-frame compression (bottom) reduces bitrate

by storing only frame-to-frame difference data for most

frames.


- Distribution is a diverse category covering everything from CableLabs MPEG-2 transport streams for video on demand (VOD) to adaptive streaming formats (Apple HLS, Microsoft Smooth Streaming, Adobe HDS) for new media distribution via desktop or mobile device. A single container may include streams of different bitrates and resolutions (e.g. 320 x 240 or 1280 x 720). H.264 is a common video codec for this application. For audio, common codecs include Dolby Digital (AC-3), DTS, Advanced Audio Coding (AAC), MP3, and Windows Media Audio (WMA).
- Proxy formats are small, highly compressed stand-ins for full-quality source files, allowing fast, easy viewing of material when bandwidth or playback power is limited. A stock footage library, for example, may use proxies to allow prospective customers to browse for suitable scenes, after which they are supplied with a high-resolution, lossless-compressed version of the footage they choose. Similarly, editors can use proxies to quickly find the start and end timecode points of takes that they want to include in their final production, without being in a high-powered editing suite. Since the priority is to make the files small, proxies typically use lossy inter-frame video compression.

Bridging the gaps

With a fuller understanding of file formats and their codecs, the need for media exchange between diverse systems is clear. Production, post production, archiving, and distribution are like islands, each operating based on its own requirements, standards, and practices. Transcoding bridges the gaps between them, enabling media exchange between systems and making possible the vast range of uses to which digital media are now put.

The basic idea of transcoding is quite straightforward: make content from a source in one format playable by a system using a different format. If the desired attributes (e.g. frame size, frame rate, etc.) are the same for both source and destination formats, and the destination container supports the codec used on the source's elementary streams, then it may be possible to re-wrap (direct encode) those streams in the destination format. But in all other cases the source streams must each be transcoded, which means decoding to an uncompressed state, still in the digital domain, while nearly simultaneously re-encoding with a different codec and/or different attributes. The transcoding workflow may also allow streams to be manipulated in other ways while they are in the uncompressed state between decoding and re-encoding. An audio stream, for example, may be adjusted for loudness, or a video stream may be rescaled, color corrected, or letterboxed.
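The choice between re-wrapping and transcoding described above amounts to a simple test. The sketch below is one hypothetical way to express it; the `VideoStream` class and `plan_conversion` function are illustrative names, only frame size and frame rate are compared, and a real system would check many more attributes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VideoStream:
    codec: str
    frame_size: tuple    # (width, height)
    frame_rate: float


def plan_conversion(source, wanted_size, wanted_rate, supported_codecs):
    """Return "rewrap" when the stream can be copied as-is, else "transcode"."""
    attributes_match = (source.frame_size == wanted_size
                        and source.frame_rate == wanted_rate)
    if attributes_match and source.codec in supported_codecs:
        return "rewrap"
    return "transcode"


source = VideoStream("MPEG-2", (1920, 1080), 29.97)

same_codec_ok = plan_conversion(source, (1920, 1080), 29.97, {"MPEG-2", "H.264"})
needs_resize = plan_conversion(source, (640, 360), 29.97, {"MPEG-2", "H.264"})
codec_unsupported = plan_conversion(source, (1920, 1080), 29.97, {"DNxHD"})

print(same_codec_ok, needs_resize, codec_unsupported)
```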

In an ideal world, transcoded material would retain all the media and metadata present in the source. In practice, the extent to which all aspects of the source are effectively preserved may be limited by the capabilities of the destination format. Metadata is particularly problematic in this regard. Content metadata that cannot be mapped from a source attribute to a corresponding destination attribute will be lost because there is nowhere to put it in the destination file.

Transcoding ideally results in the destination fully representing the source (left), but file format incompatibilities mean that some aspects of the source may be left behind (right).
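The metadata problem can be made concrete with a small mapping exercise. In this sketch the source attributes, their values, and the destination field names are all invented for illustration; the function simply separates the attributes that have a destination field from those that do not.

```python
def map_metadata(source_meta, field_map):
    """Split source metadata into fields that carry over and fields that are lost."""
    carried, lost = {}, {}
    for key, value in source_meta.items():
        if key in field_map:
            carried[field_map[key]] = value
        else:
            lost[key] = value
    return carried, lost


# Hypothetical source attributes for a television commercial.
source_meta = {
    "Ad-ID": "EXAMPLE0001",
    "Advertiser": "Example Co",
    "Agency": "Example Agency",
}
# Hypothetical destination format with no field for the agency.
field_map = {"Ad-ID": "id", "Advertiser": "owner"}

carried, lost = map_metadata(source_meta, field_map)
print("carried over:", carried)
print("left behind:", lost)
```

Reporting the unmapped attributes, rather than dropping them silently, at least makes the loss visible to whoever designs the workflow.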


Transcoding workflows

Just as the file formats used for digital media vary greatly depending on their purpose, so too do the workflows used in various segments of the media industry. We'll look at a few here to get a sense of how transcoding is implemented in various situations.

One of the simplest configurations is the integration of transcoding into digital asset management (DAM) applications, where content is stored in a form that differs from the form in which it will eventually be used. A news organization, for example, would typically use a DAM system to store footage that is acquired and broadcast live as events unfold. Some of that footage would later be pulled from the DAM system and transcoded from the DAM-compatible format into an NLE-compatible format so that it can be edited into a regularly scheduled news show. The transcoding element of that asset retrieval process should be automated for transparency to the user, which can be achieved either through a watch-folder system or through software that addresses interfaces supported by the DAM system.

A transcoding system that supports API-level

integration can enable assets stored on a DAM

system to be transparently readied for editing on an

NLE.

A somewhat more complex scenario is post-to-broadcast, where the finished output of an NLE is moved to a broadcast server. The next illustration, for example, shows an Avid NLE system with an ISIS or Unity storage area network (SAN). When post production is complete, including compositing and rendering of effects, the SAN will contain a set of finished video segments in the NLE's working format, in this case DNxHD.

The NLE will create a QuickTime reference file that consists of a series of timecode references to segments of this DNxHD video on the SAN. The QT-Ref file is output to a watch folder, alerting the transcoding system that there's work to do and pointing it to the source material to transcode. The transcoder then accesses the SAN and transcodes the DNxHD material specified in the QT-Ref file to a destination format that is compatible with the broadcast server, in this case to Grass Valley's GXF format for both an HD feed and an SD feed.
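A watch folder can be approximated by scanning a directory and acting on any file not seen before. The sketch below is a minimal, hypothetical illustration using a temporary directory: `submit_job` is a placeholder, not the interface of any real transcoder, and a production system would also need to wait for files to finish copying and to survive restarts.

```python
import os
import tempfile


def scan_watch_folder(folder, seen):
    """Return the files that have appeared in `folder` since the last scan."""
    new_files = sorted(name for name in os.listdir(folder) if name not in seen)
    seen.update(new_files)
    return new_files


def submit_job(path):
    """Placeholder for handing a reference file to a transcoder (hypothetical)."""
    return {"source": path, "status": "queued"}


with tempfile.TemporaryDirectory() as folder:
    seen = set()
    first_scan = scan_watch_folder(folder, seen)      # folder is still empty

    # Simulate the NLE dropping a reference file into the watch folder.
    open(os.path.join(folder, "segment_list.mov"), "w").close()

    second_scan = scan_watch_folder(folder, seen)     # the new file is picked up
    jobs = [submit_job(os.path.join(folder, name)) for name in second_scan]
    third_scan = scan_watch_folder(folder, seen)      # nothing new this time

print(first_scan, second_scan, third_scan)
print(jobs[0]["status"])
```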

Configuration gets even more complex when designing a workflow for broadcast stations or cable networks, where content comes from multiple sources, both local and remote, and may be either prerecorded or live. For maximum efficiency, such systems must integrate seamlessly with a variety of content sources, including in-house NLEs used for production of promos and tags, delivery networks such as Pathfire or Pitch Blue that provide syndicated content, and digital catch servers that ingest commercials from delivery services such as Extreme Reach, Javelin, and Hula MX.

A post-to-broadcast transcoding workflow in which content from the NLE's SAN is converted for use on the broadcast servers of a station or network.

(Diagram labels: Avid Isis/Unity with DNxHD or IMX video, 8 channels of audio, and VBI/VANC; watch folder with QT-Ref; transcoder with XML file and VANC data; Grass Valley SD and HD servers, each receiving GXF with long-GOP MPEG, 8 channels of audio, and VBI.)


As shown above, a multi-input system can maximize efficiency by serving as the hub through which content from the entire range of sources passes on its way to on-air servers as well as to servers for online distribution.

Another complex scenario, one whose importance has increased dramatically in recent years, is content repurposing for new media. In this situation the content is programming that has been created originally for broadcast (terrestrial or cable) and is being prepared for downloading to computer desktops and mobile devices, for example via the iTunes Store. As shown in the next block diagram, a series of steps is involved, including not only changing the resolution, e.g. from HD (1920 x 1080) to iPhone-compatible widescreen (640 x 360), but also things like stitching the between-ad segments into a single file and perhaps adding an appropriate logo in the lower right corner.

The imperative is to complete all of these steps with as little manual intervention as possible, because human labor is expensive and the revenue from repurposed media is incremental and uncertain. The most effective transcoding system for this application will be one that can also handle all of these related tasks within a single, automated environment. That environment should require minimal operator involvement, intelligently routing files based on their media characteristics or metadata while also incorporating quality checking and analysis.
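Routing files by their characteristics can be as simple as a handful of rules. The sketch below is purely illustrative: the rule thresholds, the `passed_qc` flag, and the path names are assumptions made here, not features of any particular product. It also shows the HD-to-mobile rescale arithmetic for the 1920 x 1080 to 640 x 360 conversion mentioned earlier.

```python
def scaled_size(source_size, target_width):
    """Scale to `target_width` while keeping the source aspect ratio."""
    width, height = source_size
    return target_width, round(height * target_width / width)


def route(media):
    """Pick a processing path from a file's characteristics (hypothetical rules)."""
    if not media.get("passed_qc", False):
        return "manual_review"
    _, height = media["frame_size"]
    if height >= 720:
        return "downscale_and_transcode"
    return "transcode_only"


hd_program = {"frame_size": (1920, 1080), "passed_qc": True}
sd_program = {"frame_size": (720, 480), "passed_qc": True}
failed_program = {"frame_size": (1920, 1080), "passed_qc": False}

print(route(hd_program), route(sd_program), route(failed_program))
print(scaled_size((1920, 1080), 640))   # (640, 360)
```

Keeping the rules in one place like this is what lets the rest of the pipeline run without an operator deciding, file by file, what should happen next.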

Regardless of the specifics of the individual situation, the fundamental question facing a designer who is integrating transcoding capabilities is where the transcoders fit into the workflow.

A large centralized transcoding capability can be easier to manage and can generate more consistent results, but it also requires a flexible network that can support multiple workflows for different types of tasks. Multiple dedicated transcode systems, on the other hand, may each individually be simpler, but can present more of a maintenance challenge overall. The greater the volume of work being done, the more likely it is that a centralized system will ultimately prove more efficient.

Conclusion

As we've learned, a fully realized transcoding solution must take into account each of the major components (audio streams, video streams, and both playback and content metadata) of the file formats it supports. It must be automatable so that the overall process can execute reliably with little operator intervention.

A content ingestion system that handles transcoding can serve as an efficient hub for content routing at a station or network.

(Diagram panels: Promos, Local Spots, National Spots, Vantage Spot Inventory, Web Portal, Dub List Tools, Integrations.)


And it must be designed to integrate seamlessly with components handling other tasks (stitching, logo overlays, proxy generation, etc.) that also need to occur at the same point in the workflow, so that content can flow as smoothly as possible from source to destination.

Because codecs and file formats are each tailored for a specific application, their capabilities are inherently distinct, and the features of one don't always port easily to another. As long as these differences persist, there remains the possibility that some aspect of a source will be lost in translation. But it's precisely because of those differences that transcoding is such an indispensable part of today's and tomorrow's production and distribution processes. If the past is any guide, the future holds ever-increasing ways in which we create, edit, store, transmit, and use digital media. Transcoding enables the interoperability that makes that future possible.

About Telestream and Vantage Transcode

Telestream provides world-class live and on-demand digital video tools and workflow solutions that allow consumers and businesses to transform video on the desktop and across the enterprise. Telestream products span the entire digital media lifecycle, including video capture and ingest; live and on-demand encoding and transcoding; playout, delivery, and live streaming; as well as automation and management of the entire workflow.

Media, entertainment, corporations, education, government, content creators, and consumers all rely on Telestream to simplify their digital media workflows. Robust products, reliable customer support, and quick response to evolving needs in complex video environments have gained Telestream a worldwide reputation as the leading provider for automated and transcoding-based digital media workflow solutions.

Telestream Vantage Transcode simplifies file format conversion in today's multi-format, multi-vendor video environments. Video and audio are automatically converted with metadata between all the major SD and HD broadcast server, edit system, streaming server, cable VOD server, web, mobile and handheld file formats. Telestream's complete range of Vantage products takes transcoding workflows to the next level by adding automated decision making and bringing complex process steps together into an automated, easy-to-manage workflow. Vantage enterprise-class system management products provide an even higher level of visibility and control for high-volume video workflows.

To learn more

Visit us on the web at www.telestream.net

or call Telestream at 1.530.470.1300.

The ideal new media workflow integrates transcoding into a unified, automation-intensive environment providing multi-step processing.

(Diagram stages: Ingest; Format/Transcode; Edit & Assemble; Graphic Overlays & Branding; Localization & Compliance; Package Automation; Distribution Outlets.)


www.telestream.net | info@telestream.net | tel +1 530 470 1300

Copyright 2014. Telestream, CaptionMaker, Episode, Flip4Mac, FlipFactory, Flip

Player, Lightspeed, ScreenFlow, Vantage, Wirecast, GraphicsFactory, MetaFlip,

MotionResolve, and Split-and-Stitch are registered trademarks and Pipeline,

MacCaption, e-Captioning, and Switch are trademarks of Telestream, Inc.

All other trademarks are the property of their respective owners. June 2014

You might also like

- Design A Car Was Waste Treatamwent SystemDocument14 pagesDesign A Car Was Waste Treatamwent SystemCarlos A. Galeano A.No ratings yet

- Manual Vehiculo Alarma TRF Premium V2.0. NalDocument16 pagesManual Vehiculo Alarma TRF Premium V2.0. NalCarlos A. Galeano A.100% (1)

- Hexylon Datasheet (En)Document2 pagesHexylon Datasheet (En)Carlos A. Galeano A.No ratings yet

- TT09 - DAY2 - CCTA - Fundamental FTTH Planing and Design PDFDocument126 pagesTT09 - DAY2 - CCTA - Fundamental FTTH Planing and Design PDFCarlos A. Galeano A.No ratings yet

- RF Management: Active Amplifiers & RF SwitchesDocument3 pagesRF Management: Active Amplifiers & RF SwitchesCarlos A. Galeano A.No ratings yet

- Flojet Jabsco RuleDocument11 pagesFlojet Jabsco RuleCarlos A. Galeano A.No ratings yet

- The Bicycle Builders Bible 1980Document365 pagesThe Bicycle Builders Bible 1980Carlos A. Galeano A.100% (1)

- Manual Instalacion Prisma IIDocument124 pagesManual Instalacion Prisma IICarlos A. Galeano A.No ratings yet

- Wp-IPTV Cable White PaperDocument9 pagesWp-IPTV Cable White PaperCarlos A. Galeano A.No ratings yet

- Home Networking WallpaperDocument1 pageHome Networking WallpaperCarlos A. Galeano A.No ratings yet

- Calculos Del Enlace SatelitalDocument5 pagesCalculos Del Enlace SatelitalCarlos A. Galeano A.No ratings yet

- Como Trabaja El LNB DigitalDocument8 pagesComo Trabaja El LNB DigitalCarlos A. Galeano A.No ratings yet

- Defining Omi 2011Document29 pagesDefining Omi 2011Carlos A. Galeano A.No ratings yet

- Coax Temp Attenuation Tech TipDocument3 pagesCoax Temp Attenuation Tech TipCarlos A. Galeano A.No ratings yet

- Documento CNR CiscoDocument17 pagesDocumento CNR CiscoCarlos A. Galeano A.No ratings yet

- Optical Node ConfigurationsDocument8 pagesOptical Node ConfigurationsCarlos A. Galeano A.No ratings yet

- Art of Digital TroubleshootingDocument58 pagesArt of Digital TroubleshootingCarlos A. Galeano A.No ratings yet

- Optical Node ConfigurationsDocument8 pagesOptical Node ConfigurationsCarlos A. Galeano A.No ratings yet

- Data Over Cable Service Interface Specifications Physical Layer SpecificactionDocument171 pagesData Over Cable Service Interface Specifications Physical Layer SpecificactionCarlos A. Galeano A.No ratings yet

- Chips Z 80 Leventhal 2Document124 pagesChips Z 80 Leventhal 2howlerbrNo ratings yet

- DOCSIS QAM Power MeasurementDocument6 pagesDOCSIS QAM Power MeasurementCarlos A. Galeano A.No ratings yet

- Dialog Television User's Manual: CDVB5110DDocument12 pagesDialog Television User's Manual: CDVB5110DThomas1Jessie100% (1)

- Our Old Friend CNR - Communications TechnologyDocument4 pagesOur Old Friend CNR - Communications TechnologyCarlos A. Galeano A.No ratings yet

- Ad-All Ss Pressure GaugeDocument5 pagesAd-All Ss Pressure Gaugepankaj doshiNo ratings yet

- Marketing Information Systems & Market ResearchDocument20 pagesMarketing Information Systems & Market ResearchJalaj Mathur100% (1)

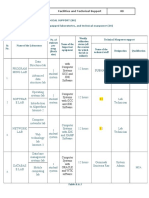

- Criterion 6 Facilities and Technical Support 80: With Computer Systems GCC and JDK SoftwareDocument6 pagesCriterion 6 Facilities and Technical Support 80: With Computer Systems GCC and JDK SoftwareVinaya Babu MNo ratings yet

- Tjibau Cultural CentreDocument21 pagesTjibau Cultural CentrepoojaNo ratings yet

- TWITCH INTERACTIVE, INC. v. JOHN AND JANE DOES 1-100Document18 pagesTWITCH INTERACTIVE, INC. v. JOHN AND JANE DOES 1-100PolygondotcomNo ratings yet

- Selfdrive Autopilot CarsDocument20 pagesSelfdrive Autopilot CarsPavan L ShettyNo ratings yet

- Surdial Service Manual PDFDocument130 pagesSurdial Service Manual PDFHelpmedica IDI100% (1)

- TravelerSafe ReadMe PDFDocument17 pagesTravelerSafe ReadMe PDFnatadevxNo ratings yet

- Doppler NavigationDocument21 pagesDoppler NavigationRe-ownRe-ve100% (3)

- 6.3. Example of Transmission Ratio Assessment 6.3.1. Power-Split Hydro-Mechanical TransmissionDocument2 pages6.3. Example of Transmission Ratio Assessment 6.3.1. Power-Split Hydro-Mechanical TransmissionNita SebastianNo ratings yet

- GB 2626 - 2006 Respiratory Protective Equipment Non-Powered Air-Purifying Particle RespiratorDocument22 pagesGB 2626 - 2006 Respiratory Protective Equipment Non-Powered Air-Purifying Particle RespiratorCastañeda Valeria100% (1)

- Unit 1 Module 2 Air Data InstrumentsDocument37 pagesUnit 1 Module 2 Air Data Instrumentsveenadivyakish100% (1)

- Naruto - NagareboshiDocument2 pagesNaruto - NagareboshiOle HansenNo ratings yet

- EV 10 Best Practice - Implementing Enterprise Vault On VMware (January 2012)Document31 pagesEV 10 Best Practice - Implementing Enterprise Vault On VMware (January 2012)TekkieNo ratings yet

- Behringer FX2000-Product InfoDocument10 pagesBehringer FX2000-Product Infogotti45No ratings yet

- RGNQA Parameters ConsolidatedDocument186 pagesRGNQA Parameters ConsolidatedpendialaNo ratings yet

- Group B04 - MM2 - Pepper Spray Product LaunchDocument34 pagesGroup B04 - MM2 - Pepper Spray Product LaunchBharatSubramonyNo ratings yet

- ASTM D1265-11 Muestreo de Gases Método ManualDocument5 pagesASTM D1265-11 Muestreo de Gases Método ManualDiana Alejandra Castañón IniestraNo ratings yet

- MM LabDocument4 pagesMM LabJstill54No ratings yet

- Understanding ZTPFDocument41 pagesUnderstanding ZTPFsanjivrmenonNo ratings yet

- Evoque Owners Club Manual PDFDocument258 pagesEvoque Owners Club Manual PDFmihai12moveNo ratings yet

- Final Informatics Practices Class XiDocument348 pagesFinal Informatics Practices Class XisanyaNo ratings yet

- Fluid MechDocument2 pagesFluid MechJade Kristine CaleNo ratings yet

- Clean Energy Council Installers Checklist PDFDocument3 pagesClean Energy Council Installers Checklist PDFAndre SNo ratings yet

- Data Loss PreventionDocument24 pagesData Loss PreventionhelmaaroufiNo ratings yet

- Odisha Current Affairs 2019 by AffairsCloudDocument27 pagesOdisha Current Affairs 2019 by AffairsCloudTANVEER AHMEDNo ratings yet

- Sony CFD s100lDocument11 pagesSony CFD s100lGeremias KunohNo ratings yet

- Astm A325mDocument8 pagesAstm A325mChitra Devi100% (1)

- Física Básica II - René CondeDocument210 pagesFísica Básica II - René CondeYoselin Rodriguez C.100% (1)

- 2015 - Catalog - ABB Cable Accessories 12-42 KV - English - CSE-A FamilyDocument12 pages2015 - Catalog - ABB Cable Accessories 12-42 KV - English - CSE-A FamilyAnonymous oKr1c2WNo ratings yet