Uploaded by Varun Kalpurath

The research of intelligent method for blurring image reconstruction

This paper studies restoration methods for foggy blurred images and for images blurred by fast motion. The hue-saturation adjustment method, the Retinex method, and the dark channel prior method are used to restore foggy blurred images. The POCS (projection onto convex sets) method and bicubic interpolation are used to restore fast-motion blurred images. The experimental results show that the dark channel prior method restores foggy degraded images better than the Retinex algorithm and the hue-saturation adjustment method, recovering both color and detail information well. POCS and bicubic-interpolation super-resolution methods can both recover much of the information lost in low-resolution images and are suitable for high-resolution image reconstruction.
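The dark channel prior named above can be illustrated with a minimal NumPy sketch. It follows the commonly published formulation, in which hazy pixels have a bright "dark channel", that is, a high per-patch minimum over the color channels. The function names, the patch size of 15, `omega=0.95`, `t0=0.1`, and the 0.1% brightest-pixel rule for atmospheric light are all illustrative assumptions, not parameters taken from this paper. The input is assumed to be a float RGB image in [0, 1]. The transmission-refinement step (soft matting or guided filtering) is omitted.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(img: np.ndarray, patch: int = 15) -> np.ndarray:
    """Minimum over color channels, then a min-filter over an odd patch size."""
    min_rgb = img.min(axis=2)
    pad = patch // 2
    padded = np.pad(min_rgb, pad, mode="edge")
    # Windows have shape (H, W, patch, patch) when patch is odd.
    return sliding_window_view(padded, (patch, patch)).min(axis=(-2, -1))


def estimate_atmospheric_light(img: np.ndarray, dark: np.ndarray,
                               top_fraction: float = 0.001) -> np.ndarray:
    """Among the haziest pixels (largest dark-channel values), pick the brightest color."""
    n = max(1, int(dark.size * top_fraction))
    idx = np.argsort(dark.ravel())[-n:]
    candidates = img.reshape(-1, 3)[idx]
    return candidates[candidates.sum(axis=1).argmax()]


def dehaze(img: np.ndarray, patch: int = 15, omega: float = 0.95,
           t0: float = 0.1) -> np.ndarray:
    """Recover J from I = J*t + A*(1 - t) using the dark channel prior (no refinement)."""
    img = img.astype(np.float64)
    A = np.maximum(estimate_atmospheric_light(img, dark_channel(img, patch)), 1e-6)
    # Transmission estimate; omega < 1 keeps a little haze for depth perception.
    t = 1.0 - omega * dark_channel(img / A, patch)
    t = np.maximum(t, t0)[..., None]  # lower bound avoids amplifying noise
    return np.clip((img - A) / t + A, 0.0, 1.0)
```

For example, `restored = dehaze(hazy)` returns an array with the same shape as `hazy`.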

Identification of comparative sentences with adjective predicates in patent Chinese-English machine translation

Comparison is a common way to express the relations between different objects, and it exists in almost every language. Chinese patent texts contain many comparative sentences, and in more than 70% of them the predicate is an adjective. In particular, structures like *** (be less than) have rarely been addressed in previous research, and they are a key and difficult point of our work. Identifying these sentences in patent texts is therefore significant and can contribute to patent Chinese-English machine translation. In this work, we apply a method based on semantic rules to analyze and identify comparative sentences with adjective predicates in patent texts. We explore various linguistic features of these sentences, such as comparative keywords and patterns, and build a set of semantic identification rules based on them. Experiments show that our method performs well compared with Google Translate and Baidu Translate, and that it effectively improves the performance of patent machine translation.
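A rule-based identifier of the kind described above can be sketched as an ordered list of keyword and pattern rules. The two rules below are hypothetical examples, not the paper's rule set. The first matches comparative operator words (大于 "greater than", 小于 "less than", 高于 "higher than", 低于 "lower than"). The second matches the "X 比 Y ADJ" pattern, using a negative lookahead to exclude non-comparative words such as 比如 ("for example"), 比较 ("relatively"), and 比例 ("ratio"). The adjective list and the rule names are also assumptions.

```python
import re
from typing import List, Optional, Tuple

# Hypothetical rules for illustration; checked in order, first match wins.
RULES: List[Tuple[str, "re.Pattern[str]"]] = [
    # Comparative operator words.
    ("operator", re.compile(r"(大于|小于|高于|低于)")),
    # "X 比 Y ADJ" within one clause, excluding 比如 / 比较 / 比例.
    ("bi_adjective",
     re.compile(r"比(?!如|较|例)[^，。；]+?(高|低|大|小|长|短|快|慢|多|少|好)")),
]


def identify_comparative(sentence: str) -> Optional[str]:
    """Return the name of the first matching rule, or None if no rule fires."""
    for name, pattern in RULES:
        if pattern.search(sentence):
            return name
    return None
```

Putting the more specific keyword rules ahead of the broader 比-pattern keeps the classification deterministic when both would fire.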

Game-theoretic analysis of advance reservation services

In many services, such as cloud computing, customers have the option to make reservations in advance. However, little is known about the strategic behavior of customers in such systems. In this paper, we use game theory to analyze several models of time-slotted systems in which customers can choose whether or not to make an advance reservation of server resources in future time slots. Since neither the provider nor the customers know in advance how many customers will request service in a given slot, the models are analyzed using Poisson games, with decisions made based on statistical information. The games differ in their payment mechanisms, and the main objective is to find which mechanism yields the highest average profit for the provider. Our analysis shows that the highest profit is achieved when advance reservation fees are charged only to customers that are granted service. Furthermore, in that case, informing customers about the availability of free servers before they decide does not affect the provider's profit.
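The statistical quantity behind "fees charged only to customers that are granted service" can be sketched under simple assumptions. Suppose the number of customers in a slot is Poisson(λ) and each one independently reserves with probability p. By Poisson thinning, the number of reservers is Poisson(λp). With C servers, the expected number of granted reservations is E[min(N, C)]. In the paper, p would be an equilibrium outcome of the Poisson game; this sketch takes p as a given input and does not compute any equilibrium. The function names and the flat fee are hypothetical.

```python
import math


def expected_served(mu: float, servers: int) -> float:
    """E[min(N, servers)] for N ~ Poisson(mu)."""
    pk = math.exp(-mu)  # P(N = 0)
    cdf = 0.0           # running P(N <= k)
    partial = 0.0       # running sum of k * P(N = k) for k < servers
    for k in range(servers):
        partial += k * pk
        cdf += pk
        pk *= mu / (k + 1)  # P(N = k + 1) from P(N = k)
    # Every outcome with N >= servers contributes exactly `servers`.
    return partial + servers * (1.0 - cdf)


def revenue_charging_granted_only(lam: float, p: float, servers: int,
                                  fee: float) -> float:
    """Expected reservation-fee revenue when only granted reservers pay."""
    mu = lam * p  # Poisson thinning: reservers ~ Poisson(lam * p)
    return fee * expected_served(mu, servers)
```

With a single server, `expected_served(mu, 1)` reduces to the probability that at least one customer reserves, 1 - e^(-mu).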

A Scalable Server Architecture for Mobile Presence Services in Social Network Applications (Feb 2013)

Social network applications are becoming increasingly popular on mobile devices. A mobile presence service is an essential component of a social network application because it maintains each mobile user's presence information, such as the current status (online/offline), GPS location, and network address, and continually updates the user's online friends with this information. If presence updates occur frequently, the enormous number of messages distributed by presence servers may lead to a scalability problem in a large-scale mobile presence service. To address this problem, we propose an efficient and scalable server architecture, called PresenceCloud, which enables mobile presence services to support large-scale social network applications. When a mobile user joins a network, PresenceCloud searches for the presence of his/her friends and notifies them of his/her arrival. PresenceCloud organizes presence servers into a quorum-based server-to-server architecture for efficient presence searching. It also leverages a directed search algorithm and a one-hop caching strategy to achieve a small, constant search latency. We analyze the performance of PresenceCloud in terms of search cost and search satisfaction level. The search cost is defined as the total number of messages generated by the presence server when a user arrives, and the search satisfaction level is defined as the time it takes to search for the arriving user's friend list. Simulation results demonstrate that PresenceCloud reduces the search cost without compromising search satisfaction.
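The abstract does not specify which quorum system is used. One common construction is a grid quorum, which we assume here for illustration. The n servers are laid out in a √n × √n grid, and each server's quorum is its own row plus its own column. Any two such quorums intersect, because row a meets column b. This means a presence query sent to one quorum always reaches a server that also belongs to the friend's quorum. The function name and the perfect-square requirement are assumptions of this sketch.

```python
import math
from typing import Set


def grid_quorum(server: int, n_servers: int) -> Set[int]:
    """Row-plus-column quorum of `server` in a sqrt(n) x sqrt(n) grid (assumed layout)."""
    k = math.isqrt(n_servers)
    if k * k != n_servers:
        raise ValueError("this sketch assumes a perfect-square number of servers")
    row, col = divmod(server, k)
    same_row = {row * k + c for c in range(k)}
    same_col = {r * k + col for r in range(k)}
    return same_row | same_col  # size 2k - 1, i.e. O(sqrt(n)) messages per query
```

The O(√n) quorum size is what keeps the per-arrival message count bounded as the number of presence servers grows.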

Output-Oriented Refactoring in PHP-Based Dynamic Web Applications

Refactoring is crucial in the development of traditional programs as well as advanced Web applications. In a dynamic Web application, multiple versions of client code in HTML and JavaScript are dynamically generated from server-side code at run time for different usage scenarios. Toward understanding refactoring for dynamic Web code, we conducted an empirical study on several PHP-based Web applications. We found that Web developers perform a new type of refactoring that is specific to PHP-based dynamic Web code and pertains to the output client-side code. After such a refactoring, the server-side code is more compact and modular, with less embedded and inline client-side HTML/JS code, or it produces more standard-conforming client-side code. However, the client-side code output by the server code before and after the refactoring exhibits the same external behavior. We call this output-oriented refactoring. This finding motivated us to build WebDyn, an automatic tool for dynamicalizing refactorings. When applied to a portion of server-side code (which might contain both PHP and embedded/inline HTML/JS code), WebDyn detects the repeated and varied parts in that code portion and produces dynamic PHP code that generates the same client-side code. Our empirical evaluation on several projects demonstrated WebDyn's accuracy in such automated refactorings.
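The idea of splitting code into repeated and varied parts, then emitting a PHP loop, can be illustrated with a deliberately simplified sketch. It handles only a common prefix and suffix shared by sibling HTML fragments. WebDyn's actual detection algorithm is not described in the abstract, and the helper names `php_quote` and `dynamicalize` are hypothetical. The generated PHP echoes the original fragments, one per line.

```python
import os
from typing import List, Tuple


def php_quote(s: str) -> str:
    """Render s as a single-quoted PHP string literal."""
    return "'" + s.replace("\\", "\\\\").replace("'", "\\'") + "'"


def dynamicalize(fragments: List[str]) -> Tuple[str, List[str], str, str]:
    """Split fragments into repeated prefix/suffix and varied middles; emit a PHP loop."""
    prefix = os.path.commonprefix(fragments)
    rests = [f[len(prefix):] for f in fragments]
    # Take the common suffix of the remainders so it cannot overlap the prefix.
    suffix = os.path.commonprefix([r[::-1] for r in rests])[::-1]
    varied = [r[: len(r) - len(suffix)] for r in rests]
    php = (
        "<?php\n"
        f"$items = array({', '.join(php_quote(v) for v in varied)});\n"
        "foreach ($items as $item) {\n"
        f"    echo {php_quote(prefix)} . $item . {php_quote(suffix)} . \"\\n\";\n"
        "}\n"
        "?>"
    )
    return prefix, varied, suffix, php
```

For `['<li>Home</li>', '<li>About</li>', '<li>Contact</li>']`, the repeated parts are `<li>` and `</li>`, and only the three labels end up in the PHP array.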

Query-Adaptive Image Search With Hash Codes

Scalable image search based on visual similarity has been an active topic of research in recent years. State-of-the-art solutions often use hashing methods to embed high-dimensional image features into Hamming space, where search can be performed in real time based on the Hamming distance of compact hash codes. Unlike traditional metrics (e.g., Euclidean) that offer continuous distances, Hamming distances are discrete integer values. As a consequence, a large number of images often share the same Hamming distance to a query, which considerably hurts search results where fine-grained ranking is important. This paper introduces an approach that enables query-adaptive ranking of returned images that have equal Hamming distances to the query.

This is achieved by first learning, offline, bitwise weights of the hash codes for a diverse set of predefined semantic concept classes. We formulate the weight learning process as a quadratic programming problem that minimizes intra-class distance while preserving the inter-class relationships captured by the original raw image features. Query-adaptive weights are then computed online by evaluating the proximity between a query and the semantic concept classes. With the query-adaptive bitwise weights, returned images can easily be ordered by weighted Hamming distance at a finer-grained hash-code level rather than at the original Hamming-distance level. Experiments on a Flickr image dataset show clear improvements from our proposed approach.
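The ranking step described above can be sketched as a weighted Hamming distance, where each mismatched bit adds its weight instead of 1. Equal-distance ties can then be broken. The per-class weights are taken as given, because the quadratic-programming step that learns them is not reproduced here. The combination rule, a similarity-weighted average of class weight vectors, is our assumption for illustration and may differ from the paper's exact formula. All function names are hypothetical.

```python
import numpy as np


def query_adaptive_weights(class_weights: np.ndarray,
                           similarities: np.ndarray) -> np.ndarray:
    """Combine per-class bit weights (C x B) using query-to-class similarities (C,)."""
    s = np.clip(similarities, 0.0, None)
    total = s.sum()
    if total == 0:
        return class_weights.mean(axis=0)  # fallback: uniform over classes
    return (s[:, None] * class_weights).sum(axis=0) / total


def weighted_hamming(query_bits: np.ndarray, db_bits: np.ndarray,
                     weights: np.ndarray) -> np.ndarray:
    """Sum of bit weights over positions where each database code differs from the query."""
    mismatches = np.not_equal(db_bits, query_bits).astype(np.float64)  # (N, B)
    return mismatches @ weights


def rank(query_bits: np.ndarray, db_bits: np.ndarray,
         weights: np.ndarray) -> np.ndarray:
    """Database indices ordered by increasing weighted Hamming distance."""
    return np.argsort(weighted_hamming(query_bits, db_bits, weights), kind="stable")
```

Codes that tie at plain Hamming distance 1, for example, are separated when the bits they differ on carry different weights.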

Existing System:

While traditional image search engines rely heavily on textual words associated with the images, scalable content-based search is receiving increasing attention. Apart from providing a better image search experience for ordinary Web users, large-scale similar-image search has also been demonstrated to be very helpful for solving a number of hard problems in computer vision and multimedia, such as image categorization.

Proposed System:

In this work, we represent images using the popular bag-of-visual-words (BoW) framework, where local invariant image descriptors (e.g., SIFT) are extracted and quantized based on a set of visual words. The BoW features are then embedded into compact hash codes for efficient search. For this, we consider state-of-the-art techniques including semi-supervised hashing and semantic hashing with deep belief networks. Hashing is preferable to tree-based indexing structures (e.g., kd-tree) because it generally requires much less memory and also works better for high-dimensional samples.

With the hash codes, image similarity can be measured efficiently in Hamming space by the Hamming distance (computed with logical XOR operations), an integer obtained by counting the number of bit positions at which two binary codes differ. In large-scale applications, the dimension of Hamming space is usually set to a small number (e.g., fewer than a hundred bits) to reduce memory cost and avoid low recall.
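The XOR-and-count computation described above takes only a few lines of Python, using integers as packed bit codes. The helper names are hypothetical. `bin(x).count("1")` is used for the population count because it works on every Python 3 version; `int.bit_count()` is equivalent on 3.10 and later. The histogram helper shows how many database codes share each distance, which is the tie problem that query-adaptive ranking addresses.

```python
from collections import Counter
from typing import Iterable, List


def pack_bits(bits: List[int]) -> int:
    """Pack a list of 0/1 values (most significant bit first) into an int."""
    code = 0
    for b in bits:
        code = (code << 1) | (b & 1)
    return code


def hamming(a: int, b: int) -> int:
    """XOR marks differing bit positions; counting the 1s gives the Hamming distance."""
    return bin(a ^ b).count("1")


def distance_histogram(query: int, codes: Iterable[int]) -> Counter:
    """Number of database codes at each Hamming distance from the query."""
    return Counter(hamming(query, c) for c in codes)
```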

Tools Used:

Front End : C#.Net

Local Directional Number Pattern for Face Analysis: Face and Expression Recognition

This paper proposes a novel local feature descriptor, the local directional number pattern (LDN), for face analysis, i.e., face and expression recognition. LDN encodes the directional information of the face's textures (i.e., the texture's structure) in a compact way, producing a more discriminative code than current methods. We compute the structure of each micro-pattern with the aid of a compass mask that extracts directional information, and we encode such information using the prominent direction indices (directional numbers) and sign, which allows us to distinguish among similar structural patterns that have different intensity transitions.

We divide the face into several regions and extract the distribution of the LDN features from them. Then, we concatenate these features into a feature vector and use it as a face descriptor. We perform several experiments in which our descriptor performs consistently under illumination, noise, expression, and time-lapse variations. Moreover, we test our descriptor with different masks to analyze its performance in different face analysis tasks.
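The regional descriptor described above can be sketched as follows: split the map of per-pixel codes into a grid, build one histogram per region, and concatenate the histograms. The 3 × 3 grid, the per-region normalization, and the function name are assumptions of this sketch rather than settings from the paper. The default of 64 bins matches the six-bit LDN code, and the codes are assumed to be integers in [0, 64).

```python
import numpy as np


def regional_histogram_descriptor(codes: np.ndarray, grid=(3, 3),
                                  n_codes: int = 64) -> np.ndarray:
    """Concatenate normalized code histograms from a grid of face regions."""
    h, w = codes.shape
    row_groups = np.array_split(np.arange(h), grid[0])
    col_groups = np.array_split(np.arange(w), grid[1])
    features = []
    for rows in row_groups:
        for cols in col_groups:
            region = codes[np.ix_(rows, cols)]
            hist = np.bincount(region.ravel(), minlength=n_codes).astype(np.float64)
            features.append(hist / max(hist.sum(), 1.0))  # per-region normalization
    return np.concatenate(features)  # length = grid[0] * grid[1] * n_codes
```

Two faces can then be compared by any histogram distance between their descriptors, for example chi-square.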

Existing System:

In the literature, there are many methods for the holistic class, such as Eigenfaces and Fisherfaces, which are built on Principal Component Analysis (PCA); the more recent 2D PCA and Linear Discriminant Analysis are also examples of holistic methods. Although these methods have been studied widely, local descriptors have gained attention because of their robustness to illumination and pose variations. Heisele et al. showed the validity of component-based methods and how they outperform holistic methods. Local-feature methods compute the descriptor from parts of the face and then gather the information into one descriptor. Among these methods are Local Features Analysis, Gabor features, Elastic Bunch Graph Matching, and Local Binary Pattern (LBP).

The last of these extends the LBP feature, originally designed for texture description, to face recognition. LBP achieved better performance than previous methods, so it gained popularity and was studied extensively. Newer methods, such as Local Ternary Pattern (LTP) and Local Directional Pattern (LDiP), tried to overcome the shortcomings of LBP. LDiP encodes the directional information in the neighborhood instead of the intensity. Also, Zhang et al. explored the use of higher-order local derivatives (LDeP) to produce better results than LBP.

Both LDiP and LDeP use information other than intensity to overcome noise and illumination variation problems. However, these methods still suffer under non-monotonic illumination variation, random noise, and changes in pose, age, and expression. Although some methods, like Gradientfaces, have high discrimination power under illumination variation, they still have low recognition capability under expression and age variations. Other methods have explored different features, such as infrared, near-infrared, and phase information, to overcome the illumination problem while maintaining performance under difficult conditions.

Proposed System:

In this paper, we propose a face descriptor, the Local Directional Number Pattern (LDN), for robust face recognition that encodes the structural information and the intensity variations of the face's texture. LDN encodes the structure of a local neighborhood by analyzing its directional information. To do so, we compute the edge responses in the neighborhood in eight different directions with a compass mask. Then, from all the directions, we choose the top positive and top negative directions to produce a meaningful descriptor for different textures with similar structural patterns. This approach allows us to distinguish intensity changes (e.g., from bright to dark and vice versa) in the texture.

Furthermore, our descriptor uses the information of the entire neighborhood, instead of using sparse points for its computation as LBP does. Hence, our approach conveys more information into the code, yet it is more compact, as it is only six bits long. Moreover, we experiment with different masks and mask resolutions to acquire characteristics that may be neglected by a single mask, and we combine them to extend the encoded information. We found that the inclusion of multiple encoding levels improves the detection process.
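The encoding described above can be sketched with the Kirsch compass masks, one well-known compass mask. The eight masks are generated by rotating the border of the east mask one position, or 45°, at a time. For each pixel, the direction with the strongest positive response (i) and the direction with the strongest negative response (j) form the code 8·i + j. This gives 64 values, which is where the six bits come from. The choice of Kirsch masks, the direction index order, and the edge padding are assumptions of this sketch. The masks are applied as correlation, without kernel flipping.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Border positions of a 3x3 mask, walked clockwise from the top-left corner.
_RING = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]
# Kirsch "east" mask [[-3,-3,5],[-3,0,5],[-3,-3,5]] read along that ring.
_EAST = [-3, -3, 5, 5, 5, -3, -3, -3]


def kirsch_masks() -> np.ndarray:
    """Eight 3x3 compass masks; mask d is the east mask's border rotated by d steps."""
    masks = np.zeros((8, 3, 3))
    for d in range(8):
        for pos, value in zip(_RING, np.roll(_EAST, d)):
            masks[d][pos] = value
    return masks


def ldn_codes(gray: np.ndarray) -> np.ndarray:
    """Per-pixel 6-bit code 8 * (top positive direction) + (top negative direction)."""
    padded = np.pad(gray.astype(np.float64), 1, mode="edge")
    windows = sliding_window_view(padded, (3, 3))                     # (H, W, 3, 3)
    responses = np.einsum("hwij,dij->hwd", windows, kirsch_masks())  # (H, W, 8)
    top_positive = responses.argmax(axis=-1)
    top_negative = responses.argmin(axis=-1)
    return 8 * top_positive + top_negative  # values in [0, 63]
```

Each Kirsch mask sums to zero, so flat regions produce all-zero responses and map to code 0 in this sketch. Using several mask sizes, as the text suggests, would mean repeating this step with different masks and concatenating the resulting descriptors.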

Tools Used:

Front End : C#.Net

Back End : SQL Server 2005

ID-Based Cryptography for Secure Cloud Data Storage (php)

This paper addresses the security issues of storing sensitive data in a cloud storage service and the need for users to trust commercial cloud providers. It proposes a cryptographic scheme for cloud storage based on an original use of ID-Based Cryptography. Our solution has several advantages. First, it provides secrecy for encrypted data stored on public servers. Second, it offers controlled data access and sharing among users, so that unauthorized users or untrusted servers cannot access or search over data without the client's authorization.

You might also like

- Created By: Susan JonesDocument246 pagesCreated By: Susan JonesdanitzavgNo ratings yet

- Booklet - Frantic Assembly Beautiful BurnoutDocument10 pagesBooklet - Frantic Assembly Beautiful BurnoutMinnie'xoNo ratings yet

- Kodak Case StudyDocument8 pagesKodak Case StudyRavi MishraNo ratings yet

- CogAT 7 PlanningImplemGd v4.1 PDFDocument112 pagesCogAT 7 PlanningImplemGd v4.1 PDFBahrouniNo ratings yet

- AdvacDocument13 pagesAdvacAmie Jane MirandaNo ratings yet

- Lets Install Cisco ISEDocument8 pagesLets Install Cisco ISESimon GarciaNo ratings yet

- Communication and Globalization Lesson 2Document13 pagesCommunication and Globalization Lesson 2Zetrick Orate0% (1)

- Content-Based Image Retrieval Over The Web Using Query by Sketch and Relevance FeedbackDocument8 pagesContent-Based Image Retrieval Over The Web Using Query by Sketch and Relevance FeedbackApoorva IsloorNo ratings yet

- Quantization of Product Using Collaborative Filtering Based On ClusterDocument9 pagesQuantization of Product Using Collaborative Filtering Based On ClusterIJRASETPublicationsNo ratings yet

- Secure Mining of Association Rules in Horizontally Distributed DatabasesDocument3 pagesSecure Mining of Association Rules in Horizontally Distributed DatabasesvenkatsrmvNo ratings yet

- Online Product QuantizationDocument18 pagesOnline Product QuantizationsatyaNo ratings yet

- All Project Abstract Comp 2017-18Document15 pagesAll Project Abstract Comp 2017-18KevalNo ratings yet

- Research Paper On Image RetrievalDocument7 pagesResearch Paper On Image Retrievalafmbvvkxy100% (1)

- Efficient Cache-Supported Path Planning On RoadsDocument24 pagesEfficient Cache-Supported Path Planning On RoadsvaddeseetharamaiahNo ratings yet

- A Simple Model For Chunk-Scheduling Strategies in P2P StreamingDocument4 pagesA Simple Model For Chunk-Scheduling Strategies in P2P StreamingSri RamNo ratings yet

- Mobile Cloud Computing: First Author, Second Author, Third AuthorDocument5 pagesMobile Cloud Computing: First Author, Second Author, Third Authordevangana1991No ratings yet

- A Literature Survey On Various Approaches On Content Based Image SearchDocument6 pagesA Literature Survey On Various Approaches On Content Based Image SearchijsretNo ratings yet

- Record Matching Over Query Results From Multiple Web DatabasesDocument27 pagesRecord Matching Over Query Results From Multiple Web DatabasesShamir_Blue_8603No ratings yet

- c3 g2 Chapter 3Document3 pagesc3 g2 Chapter 3lolzNo ratings yet

- SurveyDocument3 pagesSurveyDeepa LakshmiNo ratings yet

- Network-Assisted Mobile Computing With Optimal Uplink Query ProcessingDocument11 pagesNetwork-Assisted Mobile Computing With Optimal Uplink Query ProcessingRasa GovindasmayNo ratings yet

- Web Image Re-Ranking Using Query-Specific Semantic SignaturesDocument4 pagesWeb Image Re-Ranking Using Query-Specific Semantic SignaturessangeethaNo ratings yet

- Part Ii: Applications of Gas: Ga and The Internet Genetic Search Based On Multiple Mutation ApproachesDocument31 pagesPart Ii: Applications of Gas: Ga and The Internet Genetic Search Based On Multiple Mutation ApproachesSrikar ChintalaNo ratings yet

- An Efficient and Robust Algorithm For Shape Indexing and RetrievalDocument5 pagesAn Efficient and Robust Algorithm For Shape Indexing and RetrievalShradha PalsaniNo ratings yet

- Topics For WS and Major ProjectDocument3 pagesTopics For WS and Major ProjectkavitanitkNo ratings yet

- Online Handwritten Cursive Word RecognitionDocument40 pagesOnline Handwritten Cursive Word RecognitionkousalyaNo ratings yet

- Efficient and Robust Detection of Duplicate Videos in A DatabaseDocument4 pagesEfficient and Robust Detection of Duplicate Videos in A DatabaseEditor IJRITCCNo ratings yet

- Personalized Search Engine For MobilesDocument3 pagesPersonalized Search Engine For MobilesInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Web Programming Language: Final Project ReportDocument9 pagesWeb Programming Language: Final Project ReportHumagain BasantaNo ratings yet

- PHD Thesis On Content Based Image RetrievalDocument7 pagesPHD Thesis On Content Based Image Retrievallizaschmidnaperville100% (2)

- Web Service CompositionDocument4 pagesWeb Service Compositionbebo_ha2008No ratings yet

- Literature Review On Content Based Image RetrievalDocument8 pagesLiterature Review On Content Based Image RetrievalafdtzwlzdNo ratings yet

- Hidden Web Crawler Research PaperDocument5 pagesHidden Web Crawler Research Paperafnkcjxisddxil100% (1)

- Java Projects On 2013 Ieee PapersDocument7 pagesJava Projects On 2013 Ieee Papersமுத்து குமார்No ratings yet

- Content Based Image Retrieval ThesisDocument8 pagesContent Based Image Retrieval ThesisDon Dooley100% (2)

- Systems Comprising HPS and Software-Based Services (SBS) - Experts Offer Their Skills andDocument42 pagesSystems Comprising HPS and Software-Based Services (SBS) - Experts Offer Their Skills andSumit ChaudhuriNo ratings yet

- Unified Relevant Feedback Frame WorkDocument13 pagesUnified Relevant Feedback Frame Worksudheer1234561No ratings yet

- IEEE 2012 Titles AbstractDocument14 pagesIEEE 2012 Titles AbstractSathiya RajNo ratings yet

- Collaborative Filtering Approach For Big Data Applications in Social NetworksDocument5 pagesCollaborative Filtering Approach For Big Data Applications in Social Networkstp2006sterNo ratings yet

- A Semantic Framework For Personalized Ad Recommendation Based On Advanced Textual AnalysisDocument3 pagesA Semantic Framework For Personalized Ad Recommendation Based On Advanced Textual AnalysisMemoona JavedNo ratings yet

- A Secure and Dynamic Multi-Keyword Ranked Search Scheme Over Encrypted Cloud DataDocument32 pagesA Secure and Dynamic Multi-Keyword Ranked Search Scheme Over Encrypted Cloud Datam.muthu lakshmiNo ratings yet

- Handwritten Text Recognition: Software Requirements SpecificationDocument10 pagesHandwritten Text Recognition: Software Requirements SpecificationGaurav BhadaneNo ratings yet

- Cbir Thesis PDFDocument6 pagesCbir Thesis PDFfc4b5s7r100% (2)

- Ecrime Identification Using Face Matching Based Using PHPDocument18 pagesEcrime Identification Using Face Matching Based Using PHPLogaNathanNo ratings yet

- Major Information SecurityDocument3 pagesMajor Information SecuritymoizNo ratings yet

- Chapter 1Document57 pagesChapter 1Levale XrNo ratings yet

- Shape-Based Retrieval: A Case Study With Trademark Image DatabasesDocument35 pagesShape-Based Retrieval: A Case Study With Trademark Image DatabasesNavjot PalNo ratings yet

- Project FinalDocument59 pagesProject FinalRaghupal reddy GangulaNo ratings yet

- Udemy Documentation 4Document2 pagesUdemy Documentation 4Roberto Mellino LadresNo ratings yet

- Literature Review of Bus Ticket Reservation SystemDocument6 pagesLiterature Review of Bus Ticket Reservation SystemaflsmyebkNo ratings yet

- Web Scale Semantic Product Search With Large Language ModelsDocument12 pagesWeb Scale Semantic Product Search With Large Language ModelsYaron RianyNo ratings yet

- Advance Projects AbstractDocument12 pagesAdvance Projects AbstractSanthiya SriramNo ratings yet

- Schema MatchingDocument3 pagesSchema MatchingSyed ZaheerNo ratings yet

- Dynamic and Optimal Interactive Voice Response System For Automated Service DiscoveryDocument9 pagesDynamic and Optimal Interactive Voice Response System For Automated Service DiscoveryKavitha BathreshNo ratings yet

- Web Image Re-Ranking UsingDocument14 pagesWeb Image Re-Ranking UsingkorsairNo ratings yet

- Multi Server Communication in Distributed Management SystemDocument3 pagesMulti Server Communication in Distributed Management Systemaruna2707No ratings yet

- 5s PDFDocument9 pages5s PDFPraveen KumarNo ratings yet

- A Literature Review of Domain Adaptation With Unlabeled DataDocument4 pagesA Literature Review of Domain Adaptation With Unlabeled DataafmzzulfmzbxetNo ratings yet

- PDC Review2Document23 pagesPDC Review2corote1026No ratings yet

- SPREE: Object Prefetching For Mobile ComputersDocument18 pagesSPREE: Object Prefetching For Mobile Computersmohitsaxena019No ratings yet

- Laporan Tubes (Translate Ver)Document6 pagesLaporan Tubes (Translate Ver)rezaNo ratings yet

- CoextractionfinalreportDocument64 pagesCoextractionfinalreportsangeetha sNo ratings yet

- Hostel Management System Literature Review SampleDocument4 pagesHostel Management System Literature Review SampleaflskfbueNo ratings yet

- The Design and Implementation of E-Commerce Log Analysis System Based On HadoopDocument5 pagesThe Design and Implementation of E-Commerce Log Analysis System Based On HadoopInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- S4: Distributed Stream Computing PlatformDocument8 pagesS4: Distributed Stream Computing PlatformOtávio CarvalhoNo ratings yet

- What Is Cloud Computing?Document55 pagesWhat Is Cloud Computing?Bhanu Prakash YadavNo ratings yet

- Automatic Image Annotation: Enhancing Visual Understanding through Automated TaggingFrom EverandAutomatic Image Annotation: Enhancing Visual Understanding through Automated TaggingNo ratings yet

- CSEC Eng A Summary ExerciseDocument1 pageCSEC Eng A Summary ExerciseArisha NicholsNo ratings yet

- Preliminaries Qualitative PDFDocument9 pagesPreliminaries Qualitative PDFMae NamocNo ratings yet

- Arctic Beacon Forbidden Library - Winkler-The - Thousand - Year - Conspiracy PDFDocument196 pagesArctic Beacon Forbidden Library - Winkler-The - Thousand - Year - Conspiracy PDFJames JohnsonNo ratings yet

- Papadakos PHD 2013Document203 pagesPapadakos PHD 2013Panagiotis PapadakosNo ratings yet

- Stockholm KammarbrassDocument20 pagesStockholm KammarbrassManuel CoitoNo ratings yet

- Summar Training Report HRTC TRAINING REPORTDocument43 pagesSummar Training Report HRTC TRAINING REPORTPankaj ChauhanNo ratings yet

- Prime White Cement vs. Iac Assigned CaseDocument6 pagesPrime White Cement vs. Iac Assigned CaseStephanie Reyes GoNo ratings yet

- Life&WorksofrizalDocument5 pagesLife&WorksofrizalPatriciaNo ratings yet

- D5 PROF. ED in Mastery Learning The DefinitionDocument12 pagesD5 PROF. ED in Mastery Learning The DefinitionMarrah TenorioNo ratings yet

- Acfrogb0i3jalza4d2cm33ab0kjvfqevdmmcia - Kifkmf7zqew8tpk3ef Iav8r9j0ys0ekwrl4a8k7yqd0pqdr9qk1cpmjq Xx5x6kxzc8uq9it Zno Fwdrmyo98jelpvjb-9ahfdekf3cqptDocument1 pageAcfrogb0i3jalza4d2cm33ab0kjvfqevdmmcia - Kifkmf7zqew8tpk3ef Iav8r9j0ys0ekwrl4a8k7yqd0pqdr9qk1cpmjq Xx5x6kxzc8uq9it Zno Fwdrmyo98jelpvjb-9ahfdekf3cqptbbNo ratings yet

- Chapter 8 - FluidDocument26 pagesChapter 8 - FluidMuhammad Aminnur Hasmin B. HasminNo ratings yet

- E 05-03-2022 Power Interruption Schedule FullDocument22 pagesE 05-03-2022 Power Interruption Schedule FullAda Derana100% (2)

- Literature Review On Catfish ProductionDocument5 pagesLiterature Review On Catfish Productionafmzyodduapftb100% (1)

- 2nd Form Sequence of WorkDocument7 pages2nd Form Sequence of WorkEustace DavorenNo ratings yet

- Dr. A. Aziz Bazoune: King Fahd University of Petroleum & MineralsDocument37 pagesDr. A. Aziz Bazoune: King Fahd University of Petroleum & MineralsJoe Jeba RajanNo ratings yet

- Hygiene and HealthDocument2 pagesHygiene and HealthMoodaw SoeNo ratings yet

- Tan vs. Macapagal, 43 SCRADocument6 pagesTan vs. Macapagal, 43 SCRANikkaDoriaNo ratings yet

- Adm Best Practices Guide: Version 2.0 - November 2020Document13 pagesAdm Best Practices Guide: Version 2.0 - November 2020Swazon HossainNo ratings yet

- Accountancy Department: Preliminary Examination in MANACO 1Document3 pagesAccountancy Department: Preliminary Examination in MANACO 1Gracelle Mae Oraller0% (1)

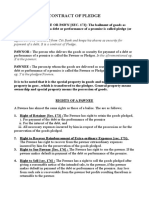

- Contract of PledgeDocument4 pagesContract of Pledgeshreya patilNo ratings yet

- Survey Results Central Zone First LinkDocument807 pagesSurvey Results Central Zone First LinkCrystal Nicca ArellanoNo ratings yet

- Business Administration: Hints TipsDocument11 pagesBusiness Administration: Hints Tipsboca ratonNo ratings yet

- Google Automatically Generates HTML Versions of Documents As We Crawl The WebDocument2 pagesGoogle Automatically Generates HTML Versions of Documents As We Crawl The Websuchi ravaliaNo ratings yet