Introduction to Time Series Analysis. Lecture 5.

Peter Bartlett

www.stat.berkeley.edu/bartlett/courses/153-fall2010

Last lecture:

1. ACF, sample ACF

2. Properties of the sample ACF

3. Convergence in mean square


This lecture:

1. AR(1) as a linear process

2. Causality

3. Invertibility

4. AR(p) models

5. ARMA(p,q) models


AR(1) as a linear process

Let $\{X_t\}$ be the stationary solution to $X_t - \phi X_{t-1} = W_t$, where $W_t \sim WN(0, \sigma^2)$.

If $|\phi| < 1$,

$$X_t = \sum_{j=0}^{\infty} \phi^j W_{t-j}$$

is the unique solution:

This infinite sum converges in mean square, since $|\phi| < 1$ implies $\sum_{j=0}^{\infty} |\phi^j| < \infty$.

It satisfies the AR(1) recurrence.
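As a numerical sanity check of the last claim, the sketch below builds $X_t$ from a truncated version of $\sum_j \phi^j W_{t-j}$ and confirms that $X_t - \phi X_{t-1} - W_t$ is negligible. The Gaussian noise, $\phi = 0.5$, the 60-term truncation and the helper name `truncated_linear_process` are illustrative assumptions, not part of the lecture.

```python
import random

def truncated_linear_process(phi, w, n_terms):
    """X_t ~= sum_{j=0}^{n_terms-1} phi**j * W_{t-j}, using only noise values up to time t."""
    x = []
    for t in range(len(w)):
        terms = min(t + 1, n_terms)  # cannot look before the start of the simulated noise
        x.append(sum(phi ** j * w[t - j] for j in range(terms)))
    return x

random.seed(0)
phi = 0.5
w = [random.gauss(0.0, 1.0) for _ in range(500)]  # simulated white noise with sigma = 1
x = truncated_linear_process(phi, w, n_terms=60)

# Check X_t - phi * X_{t-1} = W_t; the only leftover is the truncation term phi**60 * W_{t-60}.
residuals = [abs(x[t] - phi * x[t - 1] - w[t]) for t in range(1, len(w))]
print(max(residuals))
```

The residual is tiny because $|\phi|^{60}$ is tiny; with $|\phi|$ close to 1 more terms would be needed.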


AR(1) in terms of the back-shift operator

We can write

$$X_t - \phi X_{t-1} = W_t \iff \underbrace{(1 - \phi B)}_{\phi(B)} X_t = W_t \iff \phi(B) X_t = W_t.$$

Recall that $B$ is the back-shift operator: $B X_t = X_{t-1}$.


AR(1) in terms of the back-shift operator

Also, we can write

$$X_t = \sum_{j=0}^{\infty} \phi^j W_{t-j} \iff X_t = \underbrace{\left(\sum_{j=0}^{\infty} \phi^j B^j\right)}_{\pi(B)} W_t \iff X_t = \pi(B) W_t.$$


AR(1) in terms of the back-shift operator

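With these definitions:

$$\pi(B) = \sum_{j=0}^{\infty} \phi^j B^j \quad \text{and} \quad \phi(B) = 1 - \phi B,$$

we can check that $\pi(B) = \phi(B)^{-1}$:

$$\pi(B)\phi(B) = \sum_{j=0}^{\infty} \phi^j B^j (1 - \phi B) = \sum_{j=0}^{\infty} \phi^j B^j - \sum_{j=1}^{\infty} \phi^j B^j = 1.$$

Thus,

$$\phi(B) X_t = W_t \implies \pi(B)\phi(B) X_t = \pi(B) W_t \iff X_t = \pi(B) W_t.$$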
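The telescoping step can be mirrored with finite coefficient lists. In this sketch, `poly_mul` is a hypothetical helper that multiplies polynomials in $B$ stored as `[c0, c1, ...]`. Multiplying the first $n$ coefficients of $\pi(B)$ by $\phi(B) = 1 - \phi B$ leaves $1$ plus a single remainder $-\phi^n B^n$, which vanishes as $n \to \infty$ when $|\phi| < 1$.

```python
def poly_mul(a, b):
    """Multiply two polynomials in B given as coefficient lists [c0, c1, ...]."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out

phi = 0.8
n = 10
pi_trunc = [phi ** j for j in range(n)]  # first n coefficients of pi(B) = sum_j phi^j B^j
phi_poly = [1.0, -phi]                   # phi(B) = 1 - phi B
product = poly_mul(pi_trunc, phi_poly)

# Coefficients 1..n-1 cancel in pairs; only the constant 1 and -phi**n at B^n survive.
print(product)
```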


AR(1) in terms of the back-shift operator

Notice that manipulating operators like $\phi(B)$, $\pi(B)$ is like manipulating polynomials:

$$\frac{1}{1 - \phi z} = 1 + \phi z + \phi^2 z^2 + \phi^3 z^3 + \cdots,$$

provided $|\phi| < 1$ and $|z| \le 1$.
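To see the polynomial analogy numerically, the sketch below compares partial sums of the geometric series with $1/(1 - \phi z)$ at a few points on the unit circle $|z| = 1$. The value $\phi = 0.7$, the chosen angles, the 200-term truncation and the helper name `geometric_partial_sum` are arbitrary illustration choices.

```python
import cmath

def geometric_partial_sum(phi, z, n_terms):
    """Partial sum 1 + phi z + (phi z)^2 + ... + (phi z)^(n_terms - 1)."""
    return sum((phi * z) ** j for j in range(n_terms))

phi = 0.7
errors = []
for angle in (0.0, 1.0, cmath.pi):
    z = cmath.exp(1j * angle)  # a point with |z| = 1
    exact = 1 / (1 - phi * z)
    approx = geometric_partial_sum(phi, z, 200)
    errors.append(abs(exact - approx))

print(errors)
```

Because $|\phi z| = |\phi| < 1$ on the unit circle, the truncation error is of order $|\phi|^{200}$, which is far below floating-point precision.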


AR(1) and Causality

Let $X_t$ be the stationary solution to

$$X_t - \phi X_{t-1} = W_t,$$

where $W_t \sim WN(0, \sigma^2)$.

If $|\phi| < 1$,

$$X_t = \sum_{j=0}^{\infty} \phi^j W_{t-j}.$$

$\phi = 1$?

$\phi = -1$?

$|\phi| > 1$?


AR(1) and Causality

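If $|\phi| > 1$, $\pi(B) W_t$ does not converge.

But we can rearrange

$$X_t = \phi X_{t-1} + W_t \quad \text{as} \quad X_{t-1} = \frac{1}{\phi} X_t - \frac{1}{\phi} W_t,$$

and we can check that the unique stationary solution is

$$X_t = -\sum_{j=1}^{\infty} \phi^{-j} W_{t+j}.$$

But... $X_t$ depends on future values of $W_t$.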
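The claim that $X_t = -\sum_{j \ge 1} \phi^{-j} W_{t+j}$ solves the recurrence can be checked the same way as in the causal case, except that each $X_t$ now needs noise values from the future. In this sketch, $\phi = 2$, Gaussian noise, the 60-term truncation and the helper name `noncausal_x` are assumptions for illustration.

```python
import random

random.seed(1)
phi = 2.0
n, horizon = 300, 60
# Simulate enough extra noise so that every X_t can look `horizon` steps ahead.
w = [random.gauss(0.0, 1.0) for _ in range(n + horizon + 1)]

def noncausal_x(t):
    """Truncated X_t = -sum_{j=1}^{horizon} phi^(-j) W_{t+j}: uses future noise only."""
    return -sum(phi ** (-j) * w[t + j] for j in range(1, horizon + 1))

x = [noncausal_x(t) for t in range(n)]

# Check X_t = phi * X_{t-1} + W_t; the leftover is -phi^(-horizon) * W_{t+horizon}.
residuals = [abs(x[t] - phi * x[t - 1] - w[t]) for t in range(1, n)]
print(max(residuals))
```

The recurrence holds, but computing `x[t]` requires `w[t + 1]`, `w[t + 2]`, ..., which is exactly the non-causality the slide points out.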


Causality

A linear process $\{X_t\}$ is causal (strictly, a causal function of $\{W_t\}$) if there is a

$$\psi(B) = \psi_0 + \psi_1 B + \psi_2 B^2 + \cdots$$

with $\sum_{j=0}^{\infty} |\psi_j| < \infty$ and $X_t = \psi(B) W_t$.


AR(1) and Causality

Causality is a property of $\{X_t\}$ and $\{W_t\}$.

Consider the AR(1) process defined by $\phi(B) X_t = W_t$ (with $\phi(B) = 1 - \phi B$):

$\phi(B) X_t = W_t$ is causal iff $|\phi| < 1$ iff the root $z_1$ of the polynomial $\phi(z) = 1 - \phi z$ satisfies $|z_1| > 1$.


AR(1) and Causality

Consider the AR(1) process $\phi(B) X_t = W_t$ (with $\phi(B) = 1 - \phi B$):

If $|\phi| > 1$, we can define an equivalent causal model,

$$X_t - \phi^{-1} X_{t-1} = \tilde{W}_t,$$

where $\tilde{W}_t$ is a new white noise sequence.
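One way to make "equivalent" concrete is to compare autocovariances. A short calculation (ours, not stated on the slide) gives, for the noncausal solution, $\gamma(h) = \sigma^2 \sum_{j \ge 1} \phi^{-j}\phi^{-(j+|h|)}$. This matches the causal AR(1) with coefficient $\phi^{-1}$ when the new noise variance is $\tilde{\sigma}^2 = \sigma^2/\phi^2$. The sketch checks this numerically; $\phi = 2.5$, $\sigma^2 = 1$ and the function names are illustrative assumptions.

```python
def acvf_noncausal_ar1(phi, sigma2, h, n_terms=200):
    """gamma(h) of X_t = -sum_{j>=1} phi^(-j) W_{t+j} (|phi| > 1), via a truncated sum."""
    h = abs(h)
    return sigma2 * sum(phi ** (-j) * phi ** (-(j + h)) for j in range(1, n_terms))

def acvf_causal_ar1(a, sigma2, h):
    """gamma(h) = sigma2 * a^|h| / (1 - a^2) for a causal AR(1) with coefficient |a| < 1."""
    return sigma2 * a ** abs(h) / (1 - a ** 2)

phi, sigma2 = 2.5, 1.0
pairs = []
for h in range(5):
    g_noncausal = acvf_noncausal_ar1(phi, sigma2, h)
    # Equivalent causal model: coefficient 1/phi, noise variance sigma2 / phi^2.
    g_causal = acvf_causal_ar1(1 / phi, sigma2 / phi ** 2, h)
    pairs.append((g_noncausal, g_causal))
    print(h, g_noncausal, g_causal)
```

Since second-order properties agree, the two models are indistinguishable from means and autocovariances alone, which is why the causal version is the one usually used.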


AR(1) and Causality

Is an MA(1) process causal?


MA(1) and Invertibility

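Define

$$X_t = W_t + \theta W_{t-1} = (1 + \theta B) W_t.$$

If $|\theta| < 1$, we can write

$$(1 + \theta B)^{-1} X_t = W_t \iff (1 - \theta B + \theta^2 B^2 - \theta^3 B^3 + \cdots) X_t = W_t \iff \sum_{j=0}^{\infty} (-\theta)^j X_{t-j} = W_t.$$

That is, we can write $W_t$ as a causal function of $X_t$.

We say that this MA(1) is invertible.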
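The inversion can be tried on simulated data: generate $W_t$, form $X_t = W_t + \theta W_{t-1}$, then recover $W_t$ from current and past $X$ values only. Here $\theta = 0.6$, Gaussian noise, the 80-term truncation, the convention $W_{-1} = 0$ for $X_0$, and the helper name `invert_ma1` are all assumptions of the sketch.

```python
import random

random.seed(2)
theta = 0.6
n, n_terms = 400, 80
w = [random.gauss(0.0, 1.0) for _ in range(n)]
# MA(1): X_t = W_t + theta * W_{t-1}; X_0 assumes W_{-1} = 0 (it is never used below).
x = [w[0]] + [w[t] + theta * w[t - 1] for t in range(1, n)]

def invert_ma1(t):
    """Truncated W_t ~= sum_{j=0}^{n_terms-1} (-theta)^j X_{t-j}: current and past X only."""
    return sum((-theta) ** j * x[t - j] for j in range(n_terms))

# The truncated sum telescopes to W_t - (-theta)^n_terms * W_{t-n_terms}.
errors = [abs(invert_ma1(t) - w[t]) for t in range(n_terms, n)]
print(max(errors))
```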


MA(1) and Invertibility

$$X_t = W_t + \theta W_{t-1}$$

If $|\theta| > 1$, the sum $\sum_{j=0}^{\infty} (-\theta)^j X_{t-j}$ diverges, but we can write

$$W_{t-1} = -\theta^{-1} W_t + \theta^{-1} X_t.$$

Just like the noncausal AR(1), we can show that

$$W_t = -\sum_{j=1}^{\infty} (-\theta)^{-j} X_{t+j}.$$

That is, we can write $W_t$ as a linear function of $X_t$, but it is not causal.

We say that this MA(1) is not invertible.
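The non-invertible case can be checked numerically too: with $|\theta| > 1$ the noise is still recoverable, but only from future values of $X$. The choices $\theta = 2$, Gaussian noise, a 60-term truncation, $W_{-1} = 0$ for $X_0$, and the helper name `recover_from_future` are assumptions for illustration.

```python
import random

random.seed(3)
theta = 2.0
n, n_terms = 300, 60
w = [random.gauss(0.0, 1.0) for _ in range(n + n_terms + 1)]
# MA(1): X_t = W_t + theta * W_{t-1}; X_0 assumes W_{-1} = 0.
x = [w[0]] + [w[t] + theta * w[t - 1] for t in range(1, len(w))]

def recover_from_future(t):
    """Truncated W_t ~= -sum_{j=1}^{n_terms} (-theta)^(-j) X_{t+j}: needs future X values."""
    return -sum((-theta) ** (-j) * x[t + j] for j in range(1, n_terms + 1))

# The truncated sum telescopes to W_t - (-theta)^(-n_terms) * W_{t+n_terms}.
errors = [abs(recover_from_future(t) - w[t]) for t in range(n)]
print(max(errors))
```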


Invertibility

A linear process $\{X_t\}$ is invertible (strictly, an invertible function of $\{W_t\}$) if there is a

$$\pi(B) = \pi_0 + \pi_1 B + \pi_2 B^2 + \cdots$$

with $\sum_{j=0}^{\infty} |\pi_j| < \infty$ and $W_t = \pi(B) X_t$.


MA(1) and Invertibility

Invertibility is a property of $\{X_t\}$ and $\{W_t\}$.

Consider the MA(1) process defined by $X_t = \theta(B) W_t$ (with $\theta(B) = 1 + \theta B$):

$X_t = \theta(B) W_t$ is invertible iff $|\theta| < 1$ iff the root $z_1$ of the polynomial $\theta(z) = 1 + \theta z$ satisfies $|z_1| > 1$.


MA(1) and Invertibility

Consider the MA(1) process $X_t = \theta(B) W_t$ (with $\theta(B) = 1 + \theta B$):

If $|\theta| > 1$, we can define an equivalent invertible model in terms of a new white noise sequence.
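As in the AR(1) case, "equivalent" can be read as "same autocovariance function". A standard calculation (not shown on the slide) gives $\gamma(0) = \sigma^2(1 + \theta^2)$, $\gamma(\pm 1) = \theta\sigma^2$ and $\gamma(h) = 0$ otherwise. Replacing $\theta$ by $1/\theta$ and $\sigma^2$ by $\theta^2\sigma^2$ leaves these unchanged. The sketch checks this for the illustrative values $\theta = 4$, $\sigma^2 = 1$; `acvf_ma1` is a hypothetical helper name.

```python
def acvf_ma1(theta, sigma2, h):
    """Autocovariance of X_t = W_t + theta * W_{t-1} with W_t ~ WN(0, sigma2)."""
    h = abs(h)
    if h == 0:
        return sigma2 * (1 + theta ** 2)
    if h == 1:
        return sigma2 * theta
    return 0.0

theta, sigma2 = 4.0, 1.0  # non-invertible: |theta| > 1
# Compare with the invertible model: coefficient 1/theta, noise variance theta^2 * sigma2.
pairs = [(acvf_ma1(theta, sigma2, h), acvf_ma1(1 / theta, theta ** 2 * sigma2, h)) for h in range(4)]
print(pairs)
```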

Is an AR(1) process invertible?


AR(p): Autoregressive models of order p

An AR(p) process $\{X_t\}$ is a stationary process that satisfies

$$X_t - \phi_1 X_{t-1} - \cdots - \phi_p X_{t-p} = W_t,$$

where $\{W_t\} \sim WN(0, \sigma^2)$.

Equivalently, $\phi(B) X_t = W_t$, where $\phi(B) = 1 - \phi_1 B - \cdots - \phi_p B^p$.


AR(p): Constraints on $\phi$

Recall: For $p = 1$ (AR(1)), $\phi(B) = 1 - \phi_1 B$.

This is an AR(1) model only if there is a stationary solution to $\phi(B) X_t = W_t$, which is equivalent to $|\phi_1| \ne 1$.

This is equivalent to the following condition on $\phi(z) = 1 - \phi_1 z$:

$$\forall z \in \mathbb{R},\ \phi(z) = 0 \implies z \ne \pm 1,$$

equivalently,

$$\forall z \in \mathbb{C},\ \phi(z) = 0 \implies |z| \ne 1,$$

where $\mathbb{C}$ is the set of complex numbers.


AR(p): Constraints on $\phi$

Stationarity: $\forall z \in \mathbb{C},\ \phi(z) = 0 \implies |z| \ne 1$, where $\mathbb{C}$ is the set of complex numbers.

$\phi(z) = 1 - \phi_1 z$ has one root at $z_1 = 1/\phi_1 \in \mathbb{R}$.

But the roots of a degree $p > 1$ polynomial might be complex.

For stationarity, we want the roots of $\phi(z)$ to avoid the unit circle,

$$\{z \in \mathbb{C} : |z| = 1\}.$$


AR(p): Stationarity and causality

Theorem: A (unique) stationary solution to $\phi(B) X_t = W_t$ exists iff

$$\phi(z) = 1 - \phi_1 z - \cdots - \phi_p z^p = 0 \implies |z| \ne 1.$$

This AR(p) process is causal iff

$$\phi(z) = 1 - \phi_1 z - \cdots - \phi_p z^p = 0 \implies |z| > 1.$$
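The theorem turns stationarity and causality into a check on the roots of $\phi(z)$. The sketch below finds those roots in pure Python with the Durand-Kerner iteration. That root finder is our swapped-in choice; a library routine such as a polynomial-roots function would do the same job. The helper names, tolerance and iteration count are assumptions. It then applies the two conditions, using the AR(2) polynomial $1 - 0.5z + 0.6z^2$ that appears later in this lecture as the example.

```python
def poly_roots(coeffs, iters=500):
    """Roots of c0 + c1 z + ... + cp z^p (cp != 0) by Durand-Kerner iteration."""
    p = len(coeffs) - 1
    monic = [c / coeffs[-1] for c in coeffs]  # divide through by the leading coefficient

    def f(z):
        # Horner's rule, starting from the highest-degree coefficient.
        acc = 0j
        for c in reversed(monic):
            acc = acc * z + c
        return acc

    roots = [(0.4 + 0.9j) ** k for k in range(p)]  # distinct complex starting points
    for _ in range(iters):
        updated = []
        for i, r in enumerate(roots):
            denom = 1 + 0j
            for k, s in enumerate(roots):
                if k != i:
                    denom *= r - s
            updated.append(r - f(r) / denom)
        roots = updated
    return roots

def ar_roots(phis):
    """Roots of phi(z) = 1 - phi_1 z - ... - phi_p z^p (assumes phi_p != 0)."""
    return poly_roots([1.0] + [-phi for phi in phis])

def is_stationary_ar(phis, tol=1e-9):
    """A unique stationary solution exists iff no root lies on the unit circle."""
    return all(abs(abs(z) - 1) > tol for z in ar_roots(phis))

def is_causal_ar(phis, tol=1e-9):
    """The AR(p) process is causal iff every root lies strictly outside the unit circle."""
    return all(abs(z) > 1 + tol for z in ar_roots(phis))

# (1 - 0.5B + 0.6B^2) X_t = W_t corresponds to phi_1 = 0.5, phi_2 = -0.6.
print(ar_roots([0.5, -0.6]))
print(is_causal_ar([0.5, -0.6]), is_stationary_ar([2.0]), is_causal_ar([2.0]))
```

For the example, the two complex roots have modulus $\sqrt{1/0.6} \approx 1.29$, so the process is causal. $\phi = 2$ gives a stationary but noncausal AR(1), matching the earlier slides.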


Recall: Causality

A linear process $\{X_t\}$ is causal (strictly, a causal function of $\{W_t\}$) if there is a

$$\psi(B) = \psi_0 + \psi_1 B + \psi_2 B^2 + \cdots$$

with $\sum_{j=0}^{\infty} |\psi_j| < \infty$ and $X_t = \psi(B) W_t$.


AR(p): Roots outside the unit circle implies causal (Details)

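$$\forall z \in \mathbb{C},\ |z| \le 1 \implies \phi(z) \ne 0$$

$$\iff \exists \{\psi_j\},\ \delta > 0,\ \forall |z| \le 1 + \delta,\quad \frac{1}{\phi(z)} = \sum_{j=0}^{\infty} \psi_j z^j.$$

$$\implies \forall |z| \le 1 + \delta,\quad |\psi_j z^j| \to 0,\quad \left(|\psi_j|^{1/j} |z|\right)^j \to 0$$

$$\implies \exists j_0,\ \forall j \ge j_0,\quad |\psi_j|^{1/j} \le \frac{1}{1 + \delta/2}$$

$$\implies \sum_{j=0}^{\infty} |\psi_j| < \infty.$$

So if $|z| \le 1 \implies \phi(z) \ne 0$, then $S_m = \sum_{j=0}^{m} \psi_j B^j W_t$ converges in mean square, so we have a stationary, causal time series $X_t = \phi^{-1}(B) W_t$.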


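Calculating $\psi$ for an AR(p): matching coefficients

Example: $X_t = \psi(B) W_t \iff (1 - 0.5B + 0.6B^2) X_t = W_t$,

so $1 = \psi(B)(1 - 0.5B + 0.6B^2)$

$$\iff 1 = (\psi_0 + \psi_1 B + \psi_2 B^2 + \cdots)(1 - 0.5B + 0.6B^2)$$

$$\iff 1 = \psi_0,\quad 0 = \psi_1 - 0.5\psi_0,\quad 0 = \psi_2 - 0.5\psi_1 + 0.6\psi_0,\quad 0 = \psi_3 - 0.5\psi_2 + 0.6\psi_1,\quad \ldots$$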


Calculating $\psi$ for an AR(p): example

$$1 = \psi_0,\quad 0 = \psi_j\ (j < 0),\quad 0 = \psi_j - 0.5\psi_{j-1} + 0.6\psi_{j-2}$$

$$\iff 1 = \psi_0,\quad 0 = \psi_j\ (j < 0),\quad 0 = \phi(B)\psi_j.$$

We can solve these linear difference equations in several ways:

numerically, or

by guessing the form of a solution and using an inductive proof, or

by using the theory of linear difference equations.
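The first option, solving numerically, amounts to running the recursion $\psi_0 = 1$, $\psi_j = \phi_1\psi_{j-1} + \cdots + \phi_p\psi_{j-p}$ (with $\psi_j = 0$ for $j < 0$) forward. A minimal sketch follows; the function name `ar_psi_weights` is hypothetical, and the example uses the slide's $\phi_1 = 0.5$, $\phi_2 = -0.6$.

```python
def ar_psi_weights(phis, n):
    """First n coefficients psi_0..psi_{n-1} of 1/phi(B), phi(B) = 1 - phi_1 B - ... - phi_p B^p.

    Solves 0 = phi(B) psi_j forward: psi_0 = 1, psi_j = 0 for j < 0,
    psi_j = phi_1 psi_{j-1} + ... + phi_p psi_{j-p} for j >= 1.
    """
    psi = []
    for j in range(n):
        if j == 0:
            psi.append(1.0)
            continue
        total = 0.0
        for k, phi_k in enumerate(phis, start=1):
            if j - k >= 0:  # psi_{j-k} = 0 when j - k < 0
                total += phi_k * psi[j - k]
        psi.append(total)
    return psi

# Example from the slides: (1 - 0.5B + 0.6B^2) X_t = W_t, i.e. phi_1 = 0.5, phi_2 = -0.6.
psi = ar_psi_weights([0.5, -0.6], 10)
print(psi[:4])  # approximately [1.0, 0.5, -0.35, -0.475]
```

Multiplying these $\psi_j$ back by $1 - 0.5B + 0.6B^2$ reproduces $1, 0, 0, \ldots$ up to rounding, which is a quick way to check the recursion.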


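Calculating $\psi$ for an AR(p): general case

$$\phi(B) X_t = W_t \iff X_t = \psi(B) W_t$$

so $1 = \psi(B)\phi(B)$

$$\iff 1 = (\psi_0 + \psi_1 B + \cdots)(1 - \phi_1 B - \cdots - \phi_p B^p)$$

$$\iff 1 = \psi_0,\quad 0 = \psi_1 - \phi_1\psi_0,\quad 0 = \psi_2 - \phi_1\psi_1 - \phi_2\psi_0,\quad \ldots$$

$$\iff 1 = \psi_0,\quad 0 = \psi_j\ (j < 0),\quad 0 = \phi(B)\psi_j.$$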


ARMA(p,q): Autoregressive moving average models

An ARMA(p,q) process $\{X_t\}$ is a stationary process that satisfies

$$X_t - \phi_1 X_{t-1} - \cdots - \phi_p X_{t-p} = W_t + \theta_1 W_{t-1} + \cdots + \theta_q W_{t-q},$$

where $\{W_t\} \sim WN(0, \sigma^2)$.

AR(p) = ARMA(p,0): $\theta(B) = 1$.

MA(q) = ARMA(0,q): $\phi(B) = 1$.


ARMA processes

Can accurately approximate many stationary processes:

For any stationary process with autocovariance $\gamma$, and any $k > 0$, there is an ARMA process $\{X_t\}$ for which

$$\gamma_X(h) = \gamma(h), \quad h = 0, 1, \ldots, k.$$


ARMA(p,q): Autoregressive moving average models

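An ARMA(p,q) process $\{X_t\}$ is a stationary process that satisfies

$$X_t - \phi_1 X_{t-1} - \cdots - \phi_p X_{t-p} = W_t + \theta_1 W_{t-1} + \cdots + \theta_q W_{t-q},$$

where $\{W_t\} \sim WN(0, \sigma^2)$.

Usually, we insist that $\phi_p, \theta_q \ne 0$ and that the polynomials

$$\phi(z) = 1 - \phi_1 z - \cdots - \phi_p z^p, \quad \theta(z) = 1 + \theta_1 z + \cdots + \theta_q z^q$$

have no common factors. This implies it is not a lower order ARMA model.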
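To see why a common factor reduces the order, extend the matching-coefficients idea from the AR(p) slides to $\theta(B) = \phi(B)\psi(B)$. This extension is ours. It assumes the roots of $\phi(z)$ lie outside the unit circle, so that $\psi(B) = \theta(B)/\phi(B)$ is a valid causal expansion. Matching the coefficient of $B^j$ gives $\psi_j = \theta_j + \phi_1\psi_{j-1} + \cdots + \phi_p\psi_{j-p}$, with $\theta_0 = 1$ and $\theta_j = 0$ for $j > q$. With $\phi(z) = 1 - 0.5z$ and $\theta(z) = 1 - 0.5z$, which share a factor, every $\psi_j$ with $j \ge 1$ vanishes, so this "ARMA(1,1)" is just the white noise $X_t = W_t$. The function name `arma_psi_weights` and the numerical values are illustrative assumptions.

```python
def arma_psi_weights(phis, thetas, n):
    """First n coefficients of psi(B) = theta(B) / phi(B) by matching coefficients in
    theta(B) = phi(B) psi(B), with phi(B) = 1 - sum_k phi_k B^k and theta(B) = 1 + sum_k theta_k B^k.
    """
    theta_full = [1.0] + list(thetas)  # theta_0 = 1
    psi = []
    for j in range(n):
        value = theta_full[j] if j < len(theta_full) else 0.0  # theta_j = 0 for j > q
        for k, phi_k in enumerate(phis, start=1):
            if j - k >= 0:
                value += phi_k * psi[j - k]
        psi.append(value)
    return psi

# Common factor: phi(z) = 1 - 0.5 z and theta(z) = 1 - 0.5 z cancel, leaving psi(B) = 1.
psi_common = arma_psi_weights([0.5], [-0.5], 8)
# No common factor: phi(z) = 1 - 0.5 z, theta(z) = 1 + 0.4 z.
psi_reduced = arma_psi_weights([0.5], [0.4], 8)
print(psi_common)
print(psi_reduced[:3])
```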

34

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- List For Concert Band4Document1 pageList For Concert Band4klerinetNo ratings yet

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- PrnaDocument87 pagesPrnaklerinetNo ratings yet

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- List For Concert Band3Document1 pageList For Concert Band3klerinetNo ratings yet

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- Sy. Dance 3, FiestaDocument4 pagesSy. Dance 3, FiestaklerinetNo ratings yet

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- 1Document1 page1klerinetNo ratings yet

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- List For Concert BandDocument1 pageList For Concert BandklerinetNo ratings yet

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Basson - MultiphonicsDocument3 pagesBasson - MultiphonicsLucho50% (2)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Poulenc - Trio For Oboe Bassoon and Piano PDFDocument46 pagesPoulenc - Trio For Oboe Bassoon and Piano PDFklerinetNo ratings yet

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

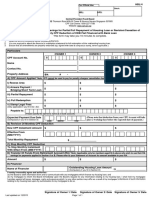

- Form HBL4Document3 pagesForm HBL4klerinetNo ratings yet

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- Armenian Dances I Bsn1Document4 pagesArmenian Dances I Bsn1klerinet100% (1)

- Armenian Dances I Bsn1Document4 pagesArmenian Dances I Bsn1klerinet100% (1)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- BassoonDocument1 pageBassoonklerinetNo ratings yet

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- Tenor and Bass Trombone Position123Document3 pagesTenor and Bass Trombone Position123eduardgeorge122No ratings yet

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Consent Form For Medical InformationDocument1 pageConsent Form For Medical InformationklerinetNo ratings yet

- BassoonDocument1 pageBassoonklerinetNo ratings yet

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Habenarias and Pecteilis CultureDocument3 pagesHabenarias and Pecteilis CultureklerinetNo ratings yet

- Terms and ConditionsDocument1 pageTerms and ConditionsklerinetNo ratings yet

- Cooper ExtractsDocument47 pagesCooper ExtractsklerinetNo ratings yet

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- Application For Change in PolicyDocument6 pagesApplication For Change in PolicyklerinetNo ratings yet

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Chapter 14 NotesDocument3 pagesChapter 14 NotesklerinetNo ratings yet

- Statistical Digital Signal Processing and Modeling: Monson H. HayesDocument6 pagesStatistical Digital Signal Processing and Modeling: Monson H. HayesDebajyoti DattaNo ratings yet

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Forecasting Market Share Using Predicted Values of Competitive BehaviorDocument23 pagesForecasting Market Share Using Predicted Values of Competitive BehaviormaleticjNo ratings yet

- ch8 9 10Document1,155 pagesch8 9 10DavidNo ratings yet

- MFM 5053 Chapter 1Document26 pagesMFM 5053 Chapter 1NivanthaNo ratings yet

- Dynamic Stochastic General Equilibrium (Dsge) Modelling:Theory and PracticeDocument34 pagesDynamic Stochastic General Equilibrium (Dsge) Modelling:Theory and PracticeRSNo ratings yet

- QuantitativeDocument90 pagesQuantitativeSen RinaNo ratings yet

- Partial Autocorrelations: Timotheus Darikwa SSTA031: Time Series AnalysisDocument26 pagesPartial Autocorrelations: Timotheus Darikwa SSTA031: Time Series AnalysisMaggie Kalembo100% (1)

- CAPTAIN Getting StartedDocument29 pagesCAPTAIN Getting StartedmgrubisicNo ratings yet

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- 1997 (Jack Johnston, John Dinardo) Econometric Methods PDFDocument514 pages1997 (Jack Johnston, John Dinardo) Econometric Methods PDFtitan10084% (19)

- Transportation Research Part A: Anna Bottasso, Maurizio Conti, Claudio Ferrari, Alessio TeiDocument12 pagesTransportation Research Part A: Anna Bottasso, Maurizio Conti, Claudio Ferrari, Alessio TeiAnonymous qfU34ZNo ratings yet

- Time Series Analysis With PythonDocument64 pagesTime Series Analysis With Pythonpandey1987No ratings yet

- 2019 05 Exam SRM SyllabusDocument5 pages2019 05 Exam SRM SyllabusSujith GopinathanNo ratings yet

- Time Series Analysis Using e ViewsDocument131 pagesTime Series Analysis Using e ViewsNabeel Mahdi Aljanabi100% (1)

- MA ReinickeDocument50 pagesMA Reinickejalajsingh1987No ratings yet

- On Smooth Transition Autoregressive Family of Models and Their Applications-AsifDocument11 pagesOn Smooth Transition Autoregressive Family of Models and Their Applications-AsifFajri HusnulNo ratings yet

- Lecture Note 4 - Dynamic Models For Stationary DataDocument28 pagesLecture Note 4 - Dynamic Models For Stationary DataFaizus Saquib Chowdhury100% (1)

- Time Series AnalysisDocument7 pagesTime Series AnalysisVAIBHAV KASHYAP U ce16b062No ratings yet

- Autoregressive-Moving Average (ARMA) ModelsDocument34 pagesAutoregressive-Moving Average (ARMA) Modelsflaviorochaavila100% (1)

- Chapter 10: Multicollinearity Chapter 10: Multicollinearity: Iris WangDocument56 pagesChapter 10: Multicollinearity Chapter 10: Multicollinearity: Iris WangОлена БогданюкNo ratings yet

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Panel Unit Root Tests - A Review PDFDocument57 pagesPanel Unit Root Tests - A Review PDFDimitris AnastasiouNo ratings yet

- Time Series Analysis: Applied Econometrics Prof. Dr. Simone MaxandDocument124 pagesTime Series Analysis: Applied Econometrics Prof. Dr. Simone MaxandAmandaNo ratings yet

- Deep Gaussian Processes For Multi-Fidelity ModelingDocument15 pagesDeep Gaussian Processes For Multi-Fidelity ModelingJulianNo ratings yet

- European Journal of Operational ResearchDocument11 pagesEuropean Journal of Operational Researchle100% (1)

- Time Series: "The Art of Forecasting"Document98 pagesTime Series: "The Art of Forecasting"Anonymous iEtUTYPOh3No ratings yet

- Parental Burnout - What Is It, and Why Does It MatterDocument33 pagesParental Burnout - What Is It, and Why Does It MatterDream Factory TVNo ratings yet

- Load Forecasting Techniques and Methodologies A ReviewDocument10 pagesLoad Forecasting Techniques and Methodologies A ReviewBasir Ahmad SafiNo ratings yet

- Stochastic Models of Nigerian Total LivebirthsDocument13 pagesStochastic Models of Nigerian Total LivebirthssardinetaNo ratings yet

- Ee583 - Statistical Signal ProcessingDocument6 pagesEe583 - Statistical Signal ProcessingMayam AyoNo ratings yet

- 3 SpectralEstimationParametricAR MA ARMADocument76 pages3 SpectralEstimationParametricAR MA ARMAKarl Adolphs100% (1)

- Chapter 16: Time-Series ForecastingDocument48 pagesChapter 16: Time-Series ForecastingMohamed MedNo ratings yet