ORTHOGONAL PROJECTIONS AND MATRICES

Uploaded by Douglas Soares

19 Orthogonal projections and orthogonal matrices

19.1 Orthogonal projections

We often want to decompose a given vector, for example, a force, into the sum of two orthogonal vectors.

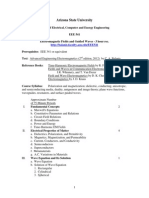

Figure: The pendulum bob makes an angle $\theta$ with the vertical. The magnitude of the force (gravity) acting on the bob is $mg$. The component of the force acting in the direction of motion of the pendulum has magnitude $mg\sin(\theta)$.

Example: Suppose a mass $m$ is at the end of a rigid, massless rod (an ideal pendulum), and the rod makes an angle $\theta$ with the vertical. The force acting on the pendulum is the gravitational force $-mg\,\mathbf{e}_2$. Since the pendulum is rigid, the component of the force parallel to the direction of the rod doesn't do anything (i.e., doesn't cause the pendulum to move). Only the force orthogonal to the rod produces motion.

The magnitude of the force parallel to the pendulum is $mg\cos\theta$; the orthogonal force has the magnitude $mg\sin\theta$. If the pendulum has length $l$, Newton's law ($F = ma$) reads

$$ml\ddot{\theta} = -mg\sin\theta,$$

or

$$\ddot{\theta} + \frac{g}{l}\sin\theta = 0.$$

This is the differential equation for the motion of the pendulum. For small angles, we have, approximately, $\sin\theta \approx \theta$, and the equation can be linearized to give

$$\ddot{\theta} + \omega^2\theta = 0, \quad\text{where } \omega = \sqrt{\frac{g}{l}},$$

which is identical to the equation of the harmonic oscillator.
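To get a feel for how good the approximation $\sin\theta \approx \theta$ is, the following minimal Python sketch computes the relative error of the approximation and the angular frequency $\omega = \sqrt{g/l}$ of the linearized equation. The function names and the sample values ($g = 9.81$, $l = 1$) are illustrative assumptions, not part of the notes.

```python
import math

def small_angle_relative_error(theta):
    """Relative error made by replacing sin(theta) with theta.

    theta is in radians and must not be a multiple of pi.
    """
    return abs(theta - math.sin(theta)) / abs(math.sin(theta))

def linearized_angular_frequency(g, length):
    """omega = sqrt(g / l) for the linearized pendulum equation."""
    return math.sqrt(g / length)

if __name__ == "__main__":
    # Illustrative angles only: the error grows quickly with the amplitude.
    for degrees in (5, 20, 60, 120):
        theta = math.radians(degrees)
        print(degrees, small_angle_relative_error(theta))
    # Assumed sample values: g = 9.81 m/s^2, l = 1 m.
    print(linearized_angular_frequency(9.81, 1.0))
```

For small angles the relative error behaves like $\theta^2/6$, which is why the linearized equation is only trustworthy near the equilibrium position.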


19.2 Algorithm for the decomposition

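Given the fixed vector $\mathbf{w}$, and another vector $\mathbf{v}$, we want to decompose $\mathbf{v}$ as the sum $\mathbf{v} = \mathbf{v}_\parallel + \mathbf{v}_\perp$, where $\mathbf{v}_\parallel$ is parallel to $\mathbf{w}$, and $\mathbf{v}_\perp$ is orthogonal to $\mathbf{w}$. See the figure. Suppose $\theta$ is the angle between $\mathbf{w}$ and $\mathbf{v}$. We assume for the moment that $0 \le \theta \le \pi/2$. Then

$$\|\mathbf{v}_\parallel\| = \|\mathbf{v}\|\cos\theta = \|\mathbf{v}\|\,\frac{\mathbf{v}\cdot\mathbf{w}}{\|\mathbf{v}\|\,\|\mathbf{w}\|} = \frac{\mathbf{v}\cdot\mathbf{w}}{\|\mathbf{w}\|},$$

or

$$\|\mathbf{v}_\parallel\| = \mathbf{v}\cdot(\text{a unit vector in the direction of } \mathbf{w}).$$

Figure: If the angle between $\mathbf{v}$ and $\mathbf{w}$ is $\theta$, then the magnitude of the projection of $\mathbf{v}$ onto $\mathbf{w}$ is $\|\mathbf{v}\|\cos(\theta)$.

And $\mathbf{v}_\parallel$ is this number times a unit vector in the direction of $\mathbf{w}$:

$$\mathbf{v}_\parallel = \frac{\mathbf{v}\cdot\mathbf{w}}{\|\mathbf{w}\|}\,\frac{\mathbf{w}}{\|\mathbf{w}\|} = \left(\frac{\mathbf{v}\cdot\mathbf{w}}{\mathbf{w}\cdot\mathbf{w}}\right)\mathbf{w}.$$

In other words, if $\hat{\mathbf{w}} = (1/\|\mathbf{w}\|)\mathbf{w}$, then $\mathbf{v}_\parallel = (\mathbf{v}\cdot\hat{\mathbf{w}})\hat{\mathbf{w}}$. This is worth remembering.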

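Definition: The vector $\mathbf{v}_\parallel = (\mathbf{v}\cdot\hat{\mathbf{w}})\hat{\mathbf{w}}$ is called the orthogonal projection of $\mathbf{v}$ onto $\mathbf{w}$.

The nonzero vector $\mathbf{w}$ also determines a 1-dimensional subspace, denoted $W$, consisting of all multiples of $\mathbf{w}$, and $\mathbf{v}_\parallel$ is also known as the orthogonal projection of $\mathbf{v}$ onto the subspace $W$.

Since $\mathbf{v} = \mathbf{v}_\parallel + \mathbf{v}_\perp$, once we have $\mathbf{v}_\parallel$, we can solve for $\mathbf{v}_\perp$ algebraically:

$$\mathbf{v}_\perp = \mathbf{v} - \mathbf{v}_\parallel.$$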
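The decomposition described in this section can be sketched in a few lines of Python. This is a minimal illustration, assuming vectors are given as plain lists of numbers; the helper names `dot` and `decompose` are hypothetical and chosen only for this sketch.

```python
def dot(a, b):
    """Dot product of two vectors given as equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))

def decompose(v, w):
    """Return (v_par, v_perp) with v = v_par + v_perp.

    v_par is the orthogonal projection of v onto w, computed as
    ((v . w) / (w . w)) w, and v_perp = v - v_par is orthogonal to w.
    """
    ww = dot(w, w)
    if ww == 0:
        raise ValueError("w must be a nonzero vector")
    coefficient = dot(v, w) / ww
    v_par = [coefficient * wi for wi in w]
    v_perp = [vi - pi for vi, pi in zip(v, v_par)]
    return v_par, v_perp

if __name__ == "__main__":
    # Illustrative vectors, not taken from the notes.
    v_par, v_perp = decompose([2, 3], [1, 1])
    print(v_par, v_perp, dot(v_perp, [1, 1]))
```

Using the form $(\mathbf{v}\cdot\mathbf{w})/(\mathbf{w}\cdot\mathbf{w})$ avoids taking a square root, so the unit vector $\hat{\mathbf{w}}$ never has to be formed explicitly.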

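Example: Let

$$\mathbf{v} = \begin{pmatrix} 1 \\ 1 \\ 2 \end{pmatrix}, \text{ and } \mathbf{w} = \begin{pmatrix} 1 \\ 0 \\ 1 \end{pmatrix}.$$

Then $\|\mathbf{w}\| = \sqrt{2}$, so $\hat{\mathbf{w}} = (1/\sqrt{2})\mathbf{w}$, and

$$(\mathbf{v}\cdot\hat{\mathbf{w}})\hat{\mathbf{w}} = \begin{pmatrix} 3/2 \\ 0 \\ 3/2 \end{pmatrix}.$$

Then

$$\mathbf{v}_\perp = \mathbf{v} - \mathbf{v}_\parallel = \begin{pmatrix} 1 \\ 1 \\ 2 \end{pmatrix} - \begin{pmatrix} 3/2 \\ 0 \\ 3/2 \end{pmatrix} = \begin{pmatrix} -1/2 \\ 1 \\ 1/2 \end{pmatrix},$$

and you can easily check that $\mathbf{v}_\parallel\cdot\mathbf{v}_\perp = 0$.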

Remark: Suppose that, in the above, $\pi/2 < \theta \le \pi$, so the angle is not acute. In this case, $\cos\theta$ is negative, and $\|\mathbf{v}\|\cos\theta$ is not the length of $\mathbf{v}_\parallel$ (since it's negative, it can't be a length). It is interpreted as a signed length, and the correct projection points in the opposite direction from that of $\mathbf{w}$. In other words, the formula is correct, no matter what the value of $\theta$.
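The obtuse case in the remark can be checked numerically. The sketch below is an illustration with made-up vectors; the names `signed_length` and `projection` are assumptions for this example. It shows that $\mathbf{v}\cdot\hat{\mathbf{w}}$ comes out negative and that the projection points opposite to $\mathbf{w}$.

```python
import math

def dot(a, b):
    """Dot product of two vectors given as equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))

def signed_length(v, w):
    """v . w_hat = ||v|| cos(theta); negative when the angle is obtuse."""
    return dot(v, w) / math.sqrt(dot(w, w))

def projection(v, w):
    """Orthogonal projection of v onto the nonzero vector w."""
    coefficient = dot(v, w) / dot(w, w)
    return [coefficient * wi for wi in w]

if __name__ == "__main__":
    # Illustrative vectors: the angle between v and w is obtuse.
    v, w = [-2, 1], [2, 0]
    print(signed_length(v, w))  # negative signed length
    print(projection(v, w))     # points opposite to w
```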

Exercises:

1. Find the orthogonal projection of $\mathbf{v} = (2, 2, 0)^t$ onto $\mathbf{w} = (1, 4, 2)^t$. Find the vector $\mathbf{v}_\perp$.

2. When is $\mathbf{v}_\perp = \mathbf{0}$? When is $\mathbf{v}_\parallel = \mathbf{0}$?

3. This refers to the pendulum figure. Suppose the mass is located at $(x, y) \in \mathbb{R}^2$. Find the unit vector parallel to the direction of the rod, say $\hat{\mathbf{r}}$, and a unit vector orthogonal to $\hat{\mathbf{r}}$, say $\hat{\boldsymbol{\theta}}$, obtained by rotating $\hat{\mathbf{r}}$ counterclockwise through an angle $\pi/2$. Express these orthonormal vectors in terms of the angle $\theta$. And show that $\mathbf{F}\cdot\hat{\boldsymbol{\theta}} = -mg\sin\theta$ as claimed above.

4. (For those with some knowledge of differential equations) Explain (physically) why the linearized pendulum equation is only valid for small angles. (Hint: if you give a real pendulum a large initial velocity, what happens? Is this consistent with the behavior of the harmonic oscillator?)


19.3 Orthogonal matrices

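Suppose we take an orthonormal (o.n.) basis $\{\mathbf{e}_1, \mathbf{e}_2, \ldots, \mathbf{e}_n\}$ of $\mathbb{R}^n$ and form the $n \times n$ matrix $E = (\mathbf{e}_1|\cdots|\mathbf{e}_n)$. Then

$$E^tE = \begin{pmatrix} \mathbf{e}_1^t \\ \mathbf{e}_2^t \\ \vdots \\ \mathbf{e}_n^t \end{pmatrix}(\mathbf{e}_1|\cdots|\mathbf{e}_n) = I_n,$$

because

$$(E^tE)_{ij} = \mathbf{e}_i^t\mathbf{e}_j = \mathbf{e}_i\cdot\mathbf{e}_j = \delta_{ij},$$

where $\delta_{ij}$ are the components of the identity matrix:

$$\delta_{ij} = \begin{cases} 1 & \text{if } i = j \\ 0 & \text{if } i \ne j \end{cases}$$

Since $E^tE = I$, this means that $E^t = E^{-1}$.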

Definition: A square matrix $E$ such that $E^t = E^{-1}$ is called an orthogonal matrix.

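Example:

$$\{\mathbf{e}_1, \mathbf{e}_2\} = \left\{ \begin{pmatrix} 1/\sqrt{2} \\ 1/\sqrt{2} \end{pmatrix}, \begin{pmatrix} 1/\sqrt{2} \\ -1/\sqrt{2} \end{pmatrix} \right\}$$

is an o.n. basis for $\mathbb{R}^2$. The corresponding matrix

$$E = (1/\sqrt{2})\begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix}$$

is easily verified to be orthogonal. Of course the identity matrix is also orthogonal.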
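The condition $E^tE = I$ is easy to test numerically. Here is a minimal Python sketch, assuming matrices are stored as lists of rows; `transpose`, `matmul` and `is_orthogonal` are hypothetical helper names, and the tolerance is an arbitrary choice to absorb floating-point rounding. The matrix `E` below is the one from the example as read here, with columns $(1/\sqrt{2})(1, 1)^t$ and $(1/\sqrt{2})(1, -1)^t$.

```python
import math

def transpose(m):
    """Transpose of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*m)]

def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    b_cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in b_cols]
            for row in a]

def is_orthogonal(e, tol=1e-12):
    """Check E^t E = I entry by entry, up to the tolerance tol."""
    n = len(e)
    if any(len(row) != n for row in e):
        return False  # only square matrices can be orthogonal
    product = matmul(transpose(e), e)
    return all(abs(product[i][j] - (1.0 if i == j else 0.0)) <= tol
               for i in range(n) for j in range(n))

if __name__ == "__main__":
    s = 1 / math.sqrt(2)
    E = [[s, s], [s, -s]]
    print(is_orthogonal(E))
    print(is_orthogonal([[1, 1], [0, 1]]))  # a shear: not orthogonal
```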

Exercises:

- If $E$ is orthogonal, then the columns of $E$ form an o.n. basis of $\mathbb{R}^n$.

- If $E$ is orthogonal, so is $E^t$, so the rows of $E$ also form an o.n. basis.

- (*) If $E$ and $F$ are orthogonal and of the same dimension, then $EF$ is orthogonal.

- (*) If $E$ is orthogonal, then $\det(E) = \pm 1$.

- Let

$$\{\mathbf{e}_1(\theta), \mathbf{e}_2(\theta)\} = \left\{ \begin{pmatrix} \cos\theta \\ \sin\theta \end{pmatrix}, \begin{pmatrix} -\sin\theta \\ \cos\theta \end{pmatrix} \right\}.$$

  Let $R(\theta) = (\mathbf{e}_1(\theta)|\mathbf{e}_2(\theta))$. Show that $R(\theta)R(\varphi) = R(\theta + \varphi)$.

- If $E$ and $F$ are the two orthogonal matrices corresponding to two o.n. bases, then $F = EP$, where $P$ is the change of basis matrix from $E$ to $F$. Show that $P$ is also orthogonal.
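Before attempting the proofs, it can help to sanity-check two of these statements numerically. The sketch below is not a proof; it only tests particular angles. It builds the rotation matrix with columns $(\cos\theta, \sin\theta)^t$ and $(-\sin\theta, \cos\theta)^t$ and compares a product of two rotations with the rotation through the sum of the angles. The helper names and the sample angles are assumptions for illustration.

```python
import math

def rotation(theta):
    """2x2 matrix with columns (cos, sin)^t and (-sin, cos)^t, as rows."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

def matmul2(a, b):
    """Product of two 2x2 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

def max_abs_difference(a, b):
    """Largest entrywise difference between two 2x2 matrices."""
    return max(abs(a[i][j] - b[i][j]) for i in range(2) for j in range(2))

if __name__ == "__main__":
    alpha, beta = 0.3, 1.1  # arbitrary sample angles in radians
    product = matmul2(rotation(alpha), rotation(beta))
    print(max_abs_difference(product, rotation(alpha + beta)))
    print(det2(rotation(alpha)))       # close to +1 for a rotation
    print(det2([[1, 0], [0, -1]]))     # a reflection has determinant -1
```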

19.4 Invariance of the dot product under orthogonal transformations

In the standard basis, the dot product is given by

$$\mathbf{x}\cdot\mathbf{y} = \mathbf{x}^tI\mathbf{y} = \mathbf{x}^t\mathbf{y},$$

since the matrix which represents $\cdot$ is just $I$. Suppose we have another orthonormal basis, $\{\mathbf{e}_1, \ldots, \mathbf{e}_n\}$, and we form the matrix $E = (\mathbf{e}_1|\cdots|\mathbf{e}_n)$. Then $E$ is orthogonal, and it's the change of basis matrix taking us from the standard basis to the new one. We have, as usual,

$$\mathbf{x} = E\mathbf{x}_e, \text{ and } \mathbf{y} = E\mathbf{y}_e.$$

So

$$\mathbf{x}\cdot\mathbf{y} = \mathbf{x}^t\mathbf{y} = (E\mathbf{x}_e)^t(E\mathbf{y}_e) = \mathbf{x}_e^tE^tE\mathbf{y}_e = \mathbf{x}_e^tI\mathbf{y}_e = \mathbf{x}_e^t\mathbf{y}_e.$$

What does this mean? It means that you compute the dot product in any o.n. basis using exactly the same formula that you used in the standard basis.

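Example: Let

$$\mathbf{x} = \begin{pmatrix} 2 \\ -3 \end{pmatrix}, \text{ and } \mathbf{y} = \begin{pmatrix} 3 \\ 1 \end{pmatrix}.$$

So $\mathbf{x}\cdot\mathbf{y} = x_1y_1 + x_2y_2 = (2)(3) + (-3)(1) = 3$. In the o.n. basis

$$\{\mathbf{e}_1, \mathbf{e}_2\} = \left\{ \frac{1}{\sqrt{2}}\begin{pmatrix} 1 \\ 1 \end{pmatrix}, \frac{1}{\sqrt{2}}\begin{pmatrix} 1 \\ -1 \end{pmatrix} \right\},$$

we have

$$x_{e_1} = \mathbf{x}\cdot\mathbf{e}_1 = -1/\sqrt{2}, \qquad x_{e_2} = \mathbf{x}\cdot\mathbf{e}_2 = 5/\sqrt{2},$$

and

$$y_{e_1} = \mathbf{y}\cdot\mathbf{e}_1 = 4/\sqrt{2}, \qquad y_{e_2} = \mathbf{y}\cdot\mathbf{e}_2 = 2/\sqrt{2}.$$

And

$$x_{e_1}y_{e_1} + x_{e_2}y_{e_2} = -4/2 + 10/2 = 3.$$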

This is the same result as we got using the standard basis! This means that, as long as we're operating in an orthonormal basis, we get to use all the same formulas we use in the standard basis. For instance, the length of $\mathbf{x}$ is the square root of the sum of the squares of the components, the cosine of the angle between $\mathbf{x}$ and $\mathbf{y}$ is computed with the same formula as in the standard basis, and so on. We can summarize this by saying that Euclidean geometry is invariant under orthogonal transformations.
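The invariance can be checked numerically for the vectors $\mathbf{x} = (2, -3)^t$ and $\mathbf{y} = (3, 1)^t$ and the o.n. basis $\mathbf{e}_1 = (1/\sqrt{2})(1, 1)^t$, $\mathbf{e}_2 = (1/\sqrt{2})(1, -1)^t$. The following Python sketch computes the coordinates in the new basis as dot products with the basis vectors and compares the two dot products and the two squared lengths. The function name `coordinates` is an assumption for this illustration.

```python
import math

def dot(a, b):
    """Dot product of two vectors given as equal-length sequences."""
    return sum(p * q for p, q in zip(a, b))

def coordinates(vector, basis):
    """Coordinates of vector in an orthonormal basis.

    For an o.n. basis, the i-th coordinate is just vector . e_i.
    """
    return [dot(vector, e) for e in basis]

if __name__ == "__main__":
    s = 1 / math.sqrt(2)
    basis = [[s, s], [s, -s]]
    x, y = [2, -3], [3, 1]
    x_e, y_e = coordinates(x, basis), coordinates(y, basis)
    # Both pairs should agree up to floating-point rounding.
    print(dot(x, y), dot(x_e, y_e))
    print(dot(x, x), dot(x_e, x_e))
```

The shortcut of taking dot products to get coordinates only works because the basis is orthonormal; for a general basis one would have to solve the linear system $E\mathbf{x}_e = \mathbf{x}$.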

Exercise: ** Here's another way to get at the same result. Suppose $A$ is an orthogonal matrix, and $f_A : \mathbb{R}^n \to \mathbb{R}^n$ the corresponding linear transformation. Show that $f_A$ preserves the dot product: $A\mathbf{x}\cdot A\mathbf{y} = \mathbf{x}\cdot\mathbf{y}$ for all vectors $\mathbf{x}$, $\mathbf{y}$. (Hint: use the fact that $\mathbf{x}\cdot\mathbf{y} = \mathbf{x}^t\mathbf{y}$.) Since the dot product is preserved, so are lengths (i.e. $\|A\mathbf{x}\| = \|\mathbf{x}\|$) and so are angles, since these are both defined in terms of the dot product.
