1

Introduction

Apostolos-Paul Refenes, London Business School, UK

POSITIONING OF NEURAL NETWORKS

The recent upturn of neural networks research has been brought about by two

driving forces. First, there is the realisation that neural networks are powerful tools

for modelling and understanding human cognitive behaviour. This has stimulated

research in neuroscience, anatomy, psychology and the biological sciences, whose

primary objective is to investigate the physiological plausibility of current artifi-

cial neural models and to identify new models which will give a more accurate

insight into the functionality of the human brain. Second, there is the realisa-

tion that artificial neural networks have powerful pattern recognition properties

and, in many applications, can outperform contemporary modelling techniques.

This has attracted researchers from a diverse field of applications including signal

processing, medical imaging, economic modelling, financial engineering, and also

researchers from the mathematical, physical and statistical sciences whose interest

lies in the investigation of the computational properties of neural networks. This

cross-fertilisation has provided added impetus in methodological developments but

it has also occasionally led to exaggerated claims and expectations. Anyone even

remotely connected with neural computation will testify that much more is expected

from neural networks than merely a useful addition to the statistician's toolbox.

With this in mind we shall attempt to elucidate the computational properties of

neural networks.

The awareness of the computational properties of any new modelling method-

ology provides an extra degree of freedom in deciding whether or not the use of

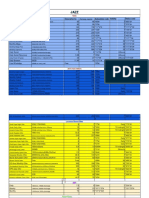

the methodology is desirable for a particular (class of) application. Figure 1.1 uses

a simple taxonomy of problem-solving methodologies to position neural networks

Neural Networks in the Capital Markets. Edited by Apostolos-Paul Refenes.

1995 John Wiley & Sons Ltd

Figure 1.1 Positioning of neural networks. [Diagram. Horizontal axis: complexity. Vertical axis: cognition, from explicit to implicit. Labelled regions: precise understanding of system dynamics (algorithmic inference); observable invariant behaviour (deductive inference); non-observable invariant behaviour (inductive inference). Methods shown: parametric regression, KB expert systems, genetic algorithms, neural networks, nonparametric regression.]

with respect to their established counterparts. The horizontal axis represents the

complexity of the system we are trying to model. The vertical axis represents the

various levels of the modeller's scientific understanding of the system's dynamics.

At the lower end of the spectrum (bottom left-hand corner), there are problems

of relatively low complexity, for which we have a precise understanding

of the mathematics and physics underlying the phenomenon that we are trying

to model. For problems of this nature it is possible to specify the equations of

the system's dynamics and subsequently hardwire (or softwire) the algorithms to

implement the required solution. Methods in this part of the spectrum are known as

algorithmic. Algorithmic methods use strong models. Strong models make strong

assumptions; they are usually expressed in a few equations with no free parameters,

and are clearly the most efficient methodologies to use in the context of model-rich

problems.

Further up the 'cognition' scale there are problems for which we have no precise

understanding of the system dynamics - in the sense that we are not able to

write down the equations of the system. However, if we observe the target system

over time we can detect invariant behaviour with the naked eye, that is to say,

under similar conditions the system behaves in similar ways. This enables the

modeller to specify a set of explicit rules of a general form which describe the

system's dynamics, often in conjunction with an 'expert' in the particular appli-

cation. Typically, these rules are formulated in a top-down fashion which allows

deductive reasoning. Deductive inference systems such as knowledge-based expert

systems also use strong models with many rules and a few free parameters. Statis-

tical inference systems such as linear regression make weaker assumptions in that,

although they make strong assumptions about the nature of the relationship between

the various variables, they allow the system to deduce or compute the coefficients

for these variables from the observed data.

Finally, there are systems in which invariant behaviour is not observable, at

least not with the naked eye. In this territory, researchers have two complementary

tasks: estimating a model from observed data and analysing the properties of the

results generated from the estimated model. Typically, in such data-rich but model-

weak environments, model estimation is done using statistical inference techniques

such as nonparametric regression. Neural networks are essentially statistical devices

for performing inductive inference. From the statistician's point of view neural

networks are analogous to nonparametric, nonlinear regression models. The idea

of nonparametric modelling in the absence of a strong model is by no means new.

Classical autoregressive moving average (ARMA) models are a good example of

'weak' modelling methods. The novelty of neural networks lies in their ability

to combine much more generality with an increasing insight into how to manage

their complexity.

The advantages and disadvantages of parametric versus nonparametric modelling

are well known to statisticians. In principle, strong models (when available) are

preferable to weak nonparametric models. Strong models make strong assumptions

about the properties of the data they are trying to fit. By doing so they build a high

degree of bias into the modelling process. Therefore, any errors that parametric

estimators make in forecasting are almost entirely due to this bias. Weak (nonpara-

metric) models, on the other hand, make no such a priori assumptions but 'let

the data speak for themselves'. In trying to find an appropriate model, nonpara-

metric estimators are exploring a much larger search space of functions to fit to the

observations. This is not always an advantage, as we shall see in later sections.

With nonparametric, nonlinear inductive inference the modeller's second task -

discovering the properties of the results generated from the estimated model -

becomes very important. This is so because for nonlinear systems it is no longer

possible to reconstruct an input signal and an independent transfer function from a

given output. It is, however, possible to investigate the properties of the estimated

model - for example, to quantify the sensitivity of an output to changes in each

of the inputs and to estimate the relative significance of the inputs.
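One simple way to probe an estimated model, sketched here on the assumption that the model is available as a black-box function, is a central finite-difference estimate of the output's sensitivity to each input. The function names and the toy model below are hypothetical and are not taken from the chapter.

```python
from typing import Callable, List, Sequence


def sensitivities(model: Callable[[Sequence[float]], float],
                  x: Sequence[float],
                  eps: float = 1e-5) -> List[float]:
    """Central finite-difference estimate of d(output)/d(input_k) at point x."""
    grads = []
    for k in range(len(x)):
        up = list(x)
        down = list(x)
        up[k] += eps
        down[k] -= eps
        grads.append((model(up) - model(down)) / (2.0 * eps))
    return grads


def toy_model(x: Sequence[float]) -> float:
    # Hypothetical stand-in for an estimated nonlinear model.
    return 3.0 * x[0] + x[1] ** 2


if __name__ == "__main__":
    # Sensitivities of the toy model at the point (1, 2).
    print(sensitivities(toy_model, [1.0, 2.0]))
```

Comparing the magnitudes of these estimates across inputs, evaluated at several representative points, gives a rough indication of the relative significance of the inputs.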

Much of the terminology used in neural computation traces its roots to neuro-

science and psychology. The disciplines of statistics and econometrics use different

terms to refer to similar concepts. For example, inputs are independent variables,

outputs are dependent variables, convergence denotes model fitness in sample,

generalisation denotes out of sample predictions, etc. In the following section we

give a brief overview of the basic concepts underlying neural computation and

explain some of the terminology.

THE NEURON AS A BIOLOGICAL ABSTRACTION AND A

COMPUTING DEVICE

Neural networks attempt to mimic the way in which the human brain processes

information. The basic computing device in the human brain is the neuron. A

neuron consists of a cell body, branching extensions called dendrites for receiving

input, and an axon that carries the neuron's output to the dendrites of other neurons

(see Figure 1.2). This junction between an axon and a dendrite is called a synapse.

A neuron is believed to carry out a simple threshold calculation. It collects signals

at its synapses and sums them. If the combined signal strength exceeds a certain

threshold the neuron sends out its own signal which is a transformation of the

original input signal.

The transformation between the total input signal and the output signal is deter-

mined by a nonlinear function. Typical transformation functions are hard limiters,

sigmoids and pseudolinear functions, as shown in Figure 1.3. Hard limiter functions

produce values in the range {0, 1}, depending on whether the total input of

a unit exceeds the threshold value. This type of function is used in most types of

Figure 1.2 Functional block diagram of a neuron: (a) as a biological abstraction; (b) as a computing device

Figure 1.3 Nonlinear transformation functions: (a) hard limiter; (b) asymmetric sigmoid; (c) pseudolinear

neural learning. Its main advantage is its simplicity, which makes mathematical

proofs relatively simple.

Sigmoid functions are the most widely used in all types of learning. They are

more complex, differentiable, and behave well for many applications. There are

two types of sigmoid function: asymmetric such as the one shown in Figure 1.3,

and symmetric. A typical asymmetric squashing function:

$$x_i = f(a) = \frac{1}{1 + e^{-a}} \tag{1.1}$$

with asymptotes at 0 and 1 as shown in Figure 1.3(b).
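The three transformation functions of Figure 1.3 can be sketched as follows. The hard limiter and the asymmetric sigmoid follow the description in the text; the pseudolinear function is assumed here to be a ramp clipped to [0, 1], which is one common reading and is not confirmed by the chapter. The function names and the threshold parameter are illustrative.

```python
import math


def hard_limiter(a: float, threshold: float = 0.0) -> float:
    """Return 1 if the total input exceeds the threshold, otherwise 0."""
    return 1.0 if a > threshold else 0.0


def sigmoid(a: float) -> float:
    """Asymmetric sigmoid of equation (1.1), with asymptotes at 0 and 1."""
    return 1.0 / (1.0 + math.exp(-a))


def pseudolinear(a: float) -> float:
    """Assumed form: linear between 0 and 1, clipped outside that range."""
    return min(1.0, max(0.0, a))


if __name__ == "__main__":
    for a in (-2.0, 0.0, 0.25, 2.0):
        print(a, hard_limiter(a), sigmoid(a), pseudolinear(a))
```

Only the sigmoid is differentiable everywhere, which is what gradient-based learning procedures rely on.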

Associated with each connection is a weight, and associated with each neuron

is a state (usually implemented as an extra weight). Together these weights and

states represent the distributed data of the network. A neural network is a structure

made up of highly interconnected, primitive neurons. Typically neurons are organ-

ised into layers, with each neuron in one layer having a weighted connection to

each neuron in the next layer. This organisation of neurons and weighted connections

creates a neural network, also known as an artificial neural network system

(ANNS). A neural network system learns by means of appropriately changing

the internal connection strengths. This is called weight adaptation and takes place

during the so-called training phase. In this phase, external input patterns that have

to be associated with specific external output patterns or specific activation patterns

across the network's units are presented to the network (commonly several times).

The set of these external input patterns is called the training set (training sample);

a single input pattern is called a training vector (observation). Furthermore, the

network may receive environmental learning feedback, and this feedback may be

used as additional information in determining the magnitude of the weight changes.

SIMPLE TAXONOMY OF NEURAL NETWORK LEARNING

PROCEDURES

The principal goal of neural learning is to form associations between observed

patterns. There are two variants of the association paradigm: auto-association and

hetero-association. An auto-associative paradigm is one in which a pattern is asso-

ciated with itself (cf. clustering). A hetero-associative paradigm is one in which

two different patterns have to be associated with each other (cf. regression).

Depending on whether a learning procedure uses external signals (environmental

feedback) to help form the association, one can divide the learning procedures into

three classes: procedures implementing supervised learning, associative reinforce-

ment learning, and unsupervised learning.

In supervised learning (learning with a teacher), the environmental feedback

specifies the desired output pattern. Thereby the learning feedback is provided for

each input-output association to be learnt by the network. The implication of this

is that in each input-output pair, the exact difference between desired and actual

output is known. Supervised learning is instruction-orientated. The objective of

this type of learning is to eliminate differences between actual and desired output

patterns by minimising a cost function or maximising an objective function.

In associative reinforcement learning (learning with a critic) the learning feed-

back is a scalar signal called a reinforcement signal which indicates whether or not

the actual and desired patterns coincide. The reinforcement signal may be provided

to the network for each input-output case or for sequences of input-output cases.

Associative reinforcement learning is evaluation-orientated. The objective of this

type of learning is to maximise some function of the reinforcement signal.

In unsupervised learning the network does not receive any environmental feed-

back. Unsupervised learning is self-organisation orientated. The objective of this

type of learning is to capture regularities (clusters) in the stream of input patterns

without receiving any learning feedback.

A secondary, but equally important classification criterion is the type of the

propagation rule, the nonlinear transformation function, and the weight adaptation

rules. Unsupervised learning procedures have no environmental feedback. Supervised

learning procedures use some function of the difference between the actual

outputs and the desired outputs as a measure of how well they are doing during

learning. Associative reinforcement procedures use a scalar value. Weight adapta-

tion is performed after the unit has received a reinforcement signal.

Comprehensive reviews of these procedures and ways of converting one kind of

learning procedure into another can be found in [Hinton87, Lippma87]. In this book

we shall concentrate on the two most commonly used procedures in financial data,

namely supervised learning by error backpropagation, and unsupervised learning

by self-organising feature maps.

SUPERVISED LEARNING: THE ERROR BACKPROPAGATION

ALGORITHM

Supervised learning procedures operate on regular feedforward networks of neurons.

Networks typically consist of many simple neuron-like processing elements grouped

together in layers. Each unit has a state or activity level that is determined by the

input received from the other units in the network (see Figure 1.4). Information

is processed locally in each unit by computing the dot product between its input

vector $o_j$ and its weight vector $w_{ji}$:

$$x_i = \sum_{j=1}^{n} o_j w_{ji} - \theta \tag{1.2}$$

This weighted sum, $x_i$, which is called the total input of unit $i$, is then passed

through a sigmoid squashing function to produce the state of unit $i$, denoted by $o_i$.

The most common squashing functions are the sigmoidal, the hyperbolic tangent

Figure 1.4 Fully interconnected network with one layer of hidden units. [Diagram: an input layer, one hidden layer and an output layer; each unit computes $Y = f(\sum wX - \theta)$; the example output shows actual (a) 0.7 against desired (d) 0.9.]

and the thermodynamic-like ones. All these functions can be included in the same

family. In this book our attention is focused on the family of squashing functions

$F_n = \{ f = f(x, k, T, c) \mid x, k \in \mathbb{R};\ T, c \in \mathbb{R} - \{0\} \}$, which are defined by (1.3):

$$f = k + \frac{c}{1 + e^{Tx}} \tag{1.3}$$

Note that squashing functions vary according to the permissible values of k, c

and T. We shall use these results later to examine the relationship between the

various threshold functions, to explore the various results that we obtained, and to

prove that, in general, symmetric thresholding functions in the family are capable

of producing faster convergence.
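As a hedged sketch of how a unit's total input and the squashing family fit together, the code below computes the weighted sum minus the threshold and passes it through a member of the family k + c / (1 + exp(T x)). The parameter choices for the asymmetric sigmoid (k = 0, T = -1, c = 1) and for the symmetric member equal to the hyperbolic tangent (k = -1, T = -2, c = 2) are worked out here by algebra rather than quoted from the chapter, and all function names are illustrative.

```python
import math
from typing import Callable, Sequence


def squash(x: float, k: float, T: float, c: float) -> float:
    """Member of the squashing family: f = k + c / (1 + exp(T * x))."""
    return k + c / (1.0 + math.exp(T * x))


def asymmetric_sigmoid(x: float) -> float:
    # k = 0, T = -1, c = 1 gives 1 / (1 + exp(-x)), with range (0, 1).
    return squash(x, k=0.0, T=-1.0, c=1.0)


def symmetric_sigmoid(x: float) -> float:
    # k = -1, T = -2, c = 2 gives tanh(x), with range (-1, 1).
    return squash(x, k=-1.0, T=-2.0, c=2.0)


def unit_state(inputs: Sequence[float],
               weights: Sequence[float],
               theta: float,
               f: Callable[[float], float] = asymmetric_sigmoid) -> float:
    """State of a unit: squashed (dot product of inputs and weights, minus threshold)."""
    total_input = sum(o * w for o, w in zip(inputs, weights)) - theta
    return f(total_input)


if __name__ == "__main__":
    print(unit_state([1.0, 0.5], [0.2, -0.4], theta=0.0))
    print(symmetric_sigmoid(0.7), math.tanh(0.7))
```

Varying k, T and c moves between asymmetric and symmetric members of the family without changing the rest of the unit's computation.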

Before training, the weights are initialised with random values. Training the

network to produce a desired output vector $O^{(r)}$ when presented with an input pattern

$I^{(r)}$ involves systematically changing the weights until the network produces the

desired output (within a given tolerance). This is repeated over the entire training

set. In doing so, each connection in the network computes the derivative, with

respect to the connection strength, of a global measure of the error in the perfor-

mance of the network. The connection strength is then adjusted in the direction that

decreases the error. A plausible measure of how poorly the network is performing

with its current set of weights is given by E in (1.4):

$$E = \frac{1}{2} \sum_{j,c} (y_{j,c} - d_{j,c})^2 \tag{1.4}$$

where $y_{j,c}$ is the actual state of the output unit $j$ in input-output case $c$, and $d_{j,c}$

is its desired state.

Learning is thus reduced to a minimisation procedure of the error measure

given in (1.4). This is achieved by repeatedly changing the weights by an amount

proportional to the derivative $\partial E / \partial w$, denoted by $\delta_i$:

$$\Delta w_{ij}(t + 1) = \lambda \delta_i y_{ij} \tag{1.5}$$

The learning rate, $\lambda$ (i.e. the fraction by which the global error is minimised during

each pass) is kept constant at least for the duration of a single pass. In the limit, as $\lambda$

tends to zero and the number of iterations tends to infinity, this learning procedure

is guaranteed to find the set of weights that gives the least mean square (LMS)

error. The value of $\delta_i = \partial E / \partial w$ is computed by differentiating (1.4) and (1.2):

$$\delta_i = (d_{j,c} - y_{j,c}) f'(y_i) \tag{1.6}$$
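As a hedged illustration of (1.5) and (1.6), the sketch below trains a single sigmoid unit with the delta rule. It assumes the common form in which the derivative of the asymmetric sigmoid is y(1 - y) and the weight change is the learning rate times delta times the incoming signal. The data set (logical OR), the zero initial weights (chosen for reproducibility, whereas the text initialises with random values) and all function names are illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple

Case = Tuple[Sequence[float], float]  # (input vector, desired output)


def sigmoid(a: float) -> float:
    return 1.0 / (1.0 + math.exp(-a))


def output(inputs: Sequence[float], weights: Sequence[float], theta: float) -> float:
    """State of the unit: sigmoid of the weighted sum minus the threshold."""
    return sigmoid(sum(w * o for w, o in zip(weights, inputs)) - theta)


def squared_error(cases: Sequence[Case], weights: Sequence[float], theta: float) -> float:
    """Half the sum of squared differences between actual and desired outputs."""
    return 0.5 * sum((output(i, weights, theta) - d) ** 2 for i, d in cases)


def train_unit(cases: Sequence[Case], n_inputs: int,
               rate: float = 0.5, epochs: int = 2000) -> Tuple[List[float], float]:
    weights = [0.0] * n_inputs  # assumption: zeros instead of random values
    theta = 0.0
    for _ in range(epochs):
        for inputs, desired in cases:
            y = output(inputs, weights, theta)
            delta = (desired - y) * y * (1.0 - y)  # error times sigmoid derivative
            weights = [w + rate * delta * o for w, o in zip(weights, inputs)]
            theta -= rate * delta  # the threshold enters the total input with a minus sign
    return weights, theta


OR_CASES: List[Case] = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
                        ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

if __name__ == "__main__":
    w, th = train_unit(OR_CASES, 2)
    print(squared_error(OR_CASES, [0.0, 0.0], 0.0), squared_error(OR_CASES, w, th))
```

Each presentation nudges the weights in the direction that reduces the squared error for that case, so the error after training is lower than at the start.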

The LMS error procedure has a simple geometric interpretation [Hinton87]. We

construct a multi-dimensional 'weight space' that has an axis for each weight

and one extra axis called 'height' that corresponds to the error measure. For each

combination of weights, the network will have a certain error which can be represented

by the height of a point in weight space. These points form a surface called

the 'error surface'. For networks with linear output units and no hidden units, the

error surface always forms a bowl whose horizontal cross-sections are ellipses and

whose vertical cross-sections are parabolas. Since the bowl has only one minimum

(perhaps a complete subspace but nevertheless only one), gradient descent on the

error surface is guaranteed to find it. If the output units have a nonlinear but mono-

tonic transfer function, the bowl is deformed but still has only one minimum, so

gradient descent still works. However, with hidden units, the error surface may

contain many local minima, so it is possible that steepest descent in weight space

will be trapped in poor local minima.
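The single-minimum case can be illustrated numerically. The sketch below assumes a linear unit with two weights, no hidden units and a small made-up data set (all names and numbers are illustrative, not from the chapter). Batch gradient descent on the squared error reaches the same weights from two different starting points.

```python
from typing import List, Sequence, Tuple

Case = Tuple[Sequence[float], float]  # (input vector, desired output)


def lms_descent(cases: Sequence[Case], start: Sequence[float],
                rate: float = 0.1, steps: int = 500) -> List[float]:
    """Batch gradient descent on 0.5 * sum (y - d)^2 for a linear unit y = w . x."""
    w = list(start)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for inputs, desired in cases:
            y = sum(wi * xi for wi, xi in zip(w, inputs))
            for k, xk in enumerate(inputs):
                grad[k] += (y - desired) * xk
        w = [wi - rate * g for wi, g in zip(w, grad)]
    return w


# Hypothetical data generated by d = 2 * x1 - 1 * x2, so the bowl's minimum is at (2, -1).
CASES: List[Case] = [([1.0, 0.0], 2.0), ([0.0, 1.0], -1.0),
                     ([1.0, 1.0], 1.0), ([2.0, 1.0], 3.0)]

if __name__ == "__main__":
    print(lms_descent(CASES, [5.0, -5.0]))
    print(lms_descent(CASES, [-3.0, 4.0]))
```

With hidden units no such guarantee holds: different starting points may end in different local minima.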

UNSUPERVISED LEARNING: SELF-ORGANISING FEATURE MAPS

The most popular unsupervised learning procedure is Kohonen's self-organising

maps [Kohone84]. The algorithm is based on the common belief that many parts

of the human brain operate in a self-organised way. For instance, there are areas

of the cerebral cortex corresponding to the sensory modalities (visual area, audi-

tory area, somatosensory area, etc.) and to various operational areas (speech area,

motor area, etc.). This means that the topographic order in which sensory signals

are received at the sensory organs is the same as the topographic order in which

these signals are obtained on their specific areas; different feature values of the

sensory signals cause different spatial locations of the neural responses. Hence, the

brain realises a topology-preserving mapping from the sensory environment to the

sensory specific area; the brain forms topographically ordered (retinotopic, tono-

topic, somatotopic, etc.) maps. Another central characteristic of the brain maps is

that they organise themselves, that is, there is no teacher guiding their formation.

Although the mechanisms of how these brain maps self-organise in a topology-

preserving form are not definitely known, there is evidence for the assumption that

a special kind of lateral feedback (sometimes referred to as the Mexican-hat-type

interaction) plays an important part in their formation. Due to this neural principle

of lateral feedback, a neuron excites itself and its short-range neighbouring

neurons, and inhibits its middle-range neighbouring neurons.

Figure 1.5 Self-organising feature map network. A mapping is formed from a 3-D

input space onto a 2-D network. The values of the input components, weights and the

unit output are shown by grey scale coding. [Diagram labels: 3-D input weight vector; image of the input vector (the maximally responding unit); 2-D neighbourhood.]

A topological feature map [Kohone84] is a large adaptive system which consists

of a number of processing units in a laminar organisation (see Figure 1.5). To form

a self-organised map, the input data is first coded along a number of features,

forming an input space of N-dimensional vectors. Input items are randomly drawn

from the input distribution and presented to the network one at a time. All units

receive the same input, and each produces an output proportional to the similarity

of the input vector and the unit's parameter vector (which is also called the input

weight vector of the unit). The unit with the maximum response is taken as the

image of the input vector on the map. The parameter vector of this unit and each

unit on its neighbourhood are changed towards the input vector, so that these units

will produce an even stronger response to the same input in the future. The parallelism of

neighbouring vectors is thus increased at each presentation, a process which results

in a global order.

The processing units of the resulting network are sensitive to specific items of

the input space. Topological relations are retained: two input items which are close

in the input space are mapped onto units close in the map. The distribution of

the parameter vectors approximates that of the input vectors. This means that the

most common areas of the input space are represented to a greater detail, that is,

more units are allocated to represent these inputs. The dimensionality of the map

is determined by the definition of the neighbourhood, that is, whether the units are

laid out in a line (1-D) or on a plane (2-D), etc. If dimensionality is reduced in the

mapping, the dimensions of the map do not necessarily stand for any recognisable

features of the input space. The dimensions develop automatically to facilitate the

best discrimination between the input items.

The basic system used by Kohonen is a single layered network whose units form

a one- or two-dimensional array. The mapping from the external input patterns to

the network's activity patterns is realised by correlating the input patterns with the

weights of the units. This leads to a mapping which works in two phases: similarity

matching (clustering of activity, discrimination process, and 'bubble formation'),

and weight adaptation.

Initially the weights of the connections are set to small random values. During

the similarity matching phase, the unit which is most similar to the currently

presented input pattern $x = (x_1, \ldots, x_n)$ at time $t$ is located (discriminated). This

most similar unit is the unit $u_s$ whose weight vector $w_s = (w_{s1}, \ldots, w_{sn})$

meets the condition:

$$\|x(t) - w_s\|_E = \min_i \{\|x(t) - w_i(t)\|_E\} \tag{1.7}$$

where $\|\cdot\|_E$ is the Euclidean distance function. The next step in this phase is to

define a topological neighbourhood $N_s$ of this unit $u_s$; $N_s$ contains all units

within a certain radius centred at $u_s$. This neighbourhood $N_s$ corresponds to the

centre of neural response caused by the actual input pattern $x$ (often referred to as

the activity bubble).

During the second phase, weight adaptation, the input weights of the units

in $N_s$ are changed according to the rule in (1.8):

$$w_i(t + 1) = \begin{cases} w_i(t) + \alpha [x(t) - w_i(t)] & \text{if } i \in N_s \\ w_i(t) & \text{otherwise} \end{cases} \tag{1.8}$$

where $\alpha$ is (in the simplest case) a positive scalar constant. This type of weight

changing simulates the Mexican-hat-type interaction mentioned earlier.
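The two phases can be sketched for a one-dimensional map as follows. This is a minimal illustration under stated assumptions, not Kohonen's implementation: the neighbourhood is taken to be all units whose index lies within a fixed radius of the winner, the adaptation constant (called alpha here) and the radius are held fixed, and every function and parameter name is hypothetical.

```python
import random
from typing import List, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) ** 0.5


def best_matching_unit(x: Sequence[float], weights: Sequence[Sequence[float]]) -> int:
    """Similarity matching: index of the unit whose weight vector is closest to x."""
    return min(range(len(weights)), key=lambda i: euclidean(x, weights[i]))


def adapt(x: Sequence[float], weights: Sequence[Sequence[float]],
          s: int, radius: int, alpha: float) -> List[List[float]]:
    """Weight adaptation: move units within `radius` of unit s towards x."""
    return [
        [wi + alpha * (xi - wi) for wi, xi in zip(w, x)] if abs(i - s) <= radius else list(w)
        for i, w in enumerate(weights)
    ]


def train_som(data: Sequence[Sequence[float]], n_units: int, dim: int,
              radius: int = 1, alpha: float = 0.2,
              steps: int = 1000, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    # Small random initial weights, as described in the text.
    weights = [[rng.uniform(-0.05, 0.05) for _ in range(dim)] for _ in range(n_units)]
    for _ in range(steps):
        x = rng.choice(data)  # inputs are drawn at random, one at a time
        s = best_matching_unit(x, weights)
        weights = adapt(x, weights, s, radius, alpha)
    return weights


if __name__ == "__main__":
    points = [[0.0, 0.0], [0.1, 0.1], [1.0, 1.0], [0.9, 1.1]]
    for w in train_som(points, n_units=4, dim=2):
        print(w)
```

In practice both the radius and the adaptation constant are often decreased over time; that refinement is omitted to keep the sketch close to the simplest case described above.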

NEURAL NETWORKS AS ADDITIVE NONLINEAR, NONPARAMETRIC

REGRESSION MODELS

It is claimed that because of their inductive nature, neural networks can bypass the

step of theory formulation and can infer complex nonlinear relationships between

an asset price and its determinants. Various performance figures are being quoted to

support these claims but there is rarely a comprehensive investigation of the nature

of the relationship that has been captured between asset prices and their determi-

nants. The absence of explicit models makes it difficult to assess the significance

of the estimated model and the possibility that any short-term success is due to

'data mining'.

In this section we formulate neural learning in a framework similar to additive

nonlinear regression [HasTib90]. This formulation provides an explicit representation

of the estimated models and enables us to use a rich collection of analytic and

statistical tools to test the significance of the various parameters in the estimated

neural models. The formulation, when applied to modelling asset returns, can make

use of modern financial economics theory on market dynamics to investigate the

plausibility of the estimated models and to analyse them in order to separate the

nonlinear components of the models which are invariant through time from those

that reflect temporary (and probably unrepeatable) market imperfections.

Consider the family of neural networks with asymmetric sigmoids as the

nonlinear transfer function. For simplicity, we consider networks with two layers of

hidden connections as shown in Figure 1.6 with A and B denoting input variables,

Figure 1.6 Feedforward network with two layers of hidden units. [Diagram: input units A and B, output unit y.]

$y$ denoting the output variable, $\alpha_0, \alpha_1, \beta_0, \beta_1$ the connection weights from the

input units to the hidden layer, and $\gamma_0, \gamma_1$ the connections from the hidden units to the

output unit.

The task of the training procedure is to estimate a function between input and

response vectors. The function is parametrised by the network weights and the

transfer function (see equation (1.1)) and takes the form:

$$y = \frac{1}{1 + e^{-(\gamma_0 v_0 + \gamma_1 v_1)}} \tag{1.9}$$

where $v_0$ and $v_1$ are the outputs of the intermediate hidden units, similarly

parametrised by the weights between the input and hidden layer. Ignoring the

bias components, we have:

$$v_0 = \frac{1}{1 + e^{-(\alpha_0 A + \beta_0 B)}} \quad \text{and} \quad v_1 = \frac{1}{1 + e^{-(\alpha_1 A + \beta_1 B)}} \tag{1.10}$$

Let us illustrate how the task of the learning procedure can be compared to that

of additive nonlinear regression by assuming, without loss of generality, a

network with linear units at the output level:

$$y = \gamma_0 v_0 + \gamma_1 v_1 = \gamma_0 \frac{1}{1 + e^{-(\alpha_0 A + \beta_0 B)}} + \gamma_1 \frac{1}{1 + e^{-(\alpha_1 A + \beta_1 B)}} \tag{1.11}$$

In most financial engineering applications, it is common to apply smoothing trans-

formations to the input and output variables prior to training in order, for example,

to remove the effect of statistical outliers. A commonly used transformation is the

logarithmic operation. Typically, instead of estimating $y = f(A, B)$ one would use

the reversible transformation $\ln(y) = f(\ln(A), \ln(B))$. Using this transformation,

the exponential term can be rewritten as:

$$e^{(\alpha_0 \ln(A) + \beta_0 \ln(B))} = e^{\alpha_0 \ln(A)} e^{\beta_0 \ln(B)} = e^{\ln(A^{\alpha_0})} e^{\ln(B^{\beta_0})} = A^{\alpha_0} B^{\beta_0} \tag{1.12}$$

Using (1.12) it is easy to show that (1.11) can be rewritten as the sum of two

products:

$$\ln(y) = \gamma_0 \frac{A^{\alpha_0} B^{\beta_0}}{A^{\alpha_0} B^{\beta_0} + 1} + \gamma_1 \frac{A^{\alpha_1} B^{\beta_1}}{A^{\alpha_1} B^{\beta_1} + 1} \tag{1.13}$$
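The step from the sigmoid form to the ratio of power products can be checked numerically. The sketch below assumes positive inputs A and B (so that the logarithm is defined) and ignores the bias, as the text does; the function names and the sample values are illustrative.

```python
import math


def hidden_unit_log_inputs(A: float, B: float, alpha: float, beta: float) -> float:
    """Sigmoid hidden unit fed with ln(A) and ln(B), bias ignored."""
    return 1.0 / (1.0 + math.exp(-(alpha * math.log(A) + beta * math.log(B))))


def product_form(A: float, B: float, alpha: float, beta: float) -> float:
    """Equivalent ratio of power products: A^alpha B^beta / (A^alpha B^beta + 1)."""
    p = A ** alpha * B ** beta
    return p / (p + 1.0)


if __name__ == "__main__":
    # Arbitrary sample values; both expressions should agree to rounding error.
    print(hidden_unit_log_inputs(1.7, 0.4, 0.8, -1.3))
    print(product_form(1.7, 0.4, 0.8, -1.3))
```

The agreement follows from multiplying the numerator and denominator of the sigmoid by the power product, since exp(-(alpha ln A + beta ln B)) is its reciprocal.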

Overall we have six parameters $\{\alpha_0, \alpha_1, \beta_0, \beta_1, \gamma_0, \gamma_1\}$, ignoring the constants,

i.e. biases. The task of the learning procedure is to estimate the parameters in a

way that minimises the residual least square error. In the general case for networks

with n hidden units and m input variables (1.13) takes the form:

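$$\ln(y) = \gamma_0 \frac{A^{\alpha_0} B^{\beta_0} \cdots M^{\mu_0}}{A^{\alpha_0} B^{\beta_0} \cdots M^{\mu_0} + 1} + \gamma_1 \frac{A^{\alpha_1} B^{\beta_1} \cdots M^{\mu_1}}{A^{\alpha_1} B^{\beta_1} \cdots M^{\mu_1} + 1} + \cdots + \gamma_n \frac{A^{\alpha_n} B^{\beta_n} \cdots M^{\mu_n}}{A^{\alpha_n} B^{\beta_n} \cdots M^{\mu_n} + 1} \tag{1.14}$$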

Thus neural learning is analogous to searching the function space defined by the

terms of (1.14) and the range of the permissible values for the parameters.
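The general form can be evaluated directly once the exponents and output weights are given. The following sketch assumes positive inputs and one list of exponents per hidden unit; the function name, argument layout and sample numbers are hypothetical. It requires Python 3.8 or later for `math.prod`.

```python
import math
from typing import Sequence


def log_output(inputs: Sequence[float],
               exponents: Sequence[Sequence[float]],
               gammas: Sequence[float]) -> float:
    """Evaluate ln(y) as a sum over hidden units of gamma_h * P_h / (P_h + 1),
    where P_h is the product of the inputs raised to unit h's exponents."""
    total = 0.0
    for gamma, exps in zip(gammas, exponents):
        p = math.prod(x ** e for x, e in zip(inputs, exps))
        total += gamma * p / (p + 1.0)
    return total


if __name__ == "__main__":
    # Two inputs and two hidden units with made-up parameters.
    print(log_output([2.0, 3.0], [[1.0, 0.0], [0.0, 1.0]], [1.0, 2.0]))
```

Estimating the network then amounts to searching over the exponents and the output weights for the combination that minimises the residual squared error.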

This formulation is strikingly similar to that of additive nonlinear

nonparametric regression [ReBeBu94] and it allows us to apply the

analytic and statistical tools that have been developed in the field of additive

nonparametric regression for the class of neural networks of a similar structure.

SUMMARY

Neural networks are essentially statistical devices for inductive inference. Their

strengths (and weaknesses) accrue from the fact that they need no a priori assump-

tions of models and from their capability to infer complex, nonlinear underlying

relationships. Although the future of neural networks as nonlinear estimators in

financial engineering seems to be very promising, developing successful applica-

tions is not a straightforward procedure.

Substantial expertise in both the domain of neural network engineering and

the domain of financial engineering is required. The choice of the variables, their

significance and correlation, normalisation and optimisation of multi-parameter data

sets, etc., are of extreme importance. The application development is usually a time-

consuming process, involving extensive data preprocessing and experimentation

with network engineering parameters.

Research in neural computation ranges from reassuring proofs that neural

networks with sigmoid units can essentially fit any function and its derivative,

to theorems on generalisation ability that can be obtained under very weak

(nonparametric) assumptions. For such models with broad approximation abilities

and few specific assumptions, the distinction between memorisation and

generalisation becomes critical. The awareness of the complex interrelationships

between data preprocessing, fine-tuning of neural learning parameters, and

generalisation is an essential element in successful application development. The

purpose of the next two chapters is to explore the interrelationships among the

numerous network and data engineering parameters and to highlight the importance

of careful choice of the indicators used as network inputs.

2

Neural Network

Design Considerations

Apostolos-Paul Refenes, London Business School, UK

In Chapter 1 we gave a brief introduction to supervised learning together with a

simple geometrical interpretation of machine learning by gradient descent. This

procedure is summarised in Figure 2.1. This chapter defines the main performance

measures for neural networks and discusses the parameters that influence them.

As shown in the bottom left-hand side of Figure 2.1, we evaluate network perfor-

mance as a weighted index of three metrics:

convergence - accuracy of model fitness in-sample;

generalisation - accuracy of model fitness out-of-sample;

stability - variance in prediction accuracy.

The bottom right-hand side of Figure 2.1 lists a number of control mechanisms

that can be used to influence the above performance measures:

the choice of activation function;

the choice of cost function;

network architecture;

gradient descent/ascent control terms;

learning times.

In the following sections we explore ways in which the control mechanisms can

be used to influence (for better or worse) the main performance measures. We start

with a brief definition of the performance measures.


- Neuroevolution: Fundamentals and Applications for Surpassing Human Intelligence with NeuroevolutionFrom EverandNeuroevolution: Fundamentals and Applications for Surpassing Human Intelligence with NeuroevolutionNo ratings yet

- FNAE Cap1Document6 pagesFNAE Cap1JULIANANo ratings yet

- Artificial Neural NetworkDocument10 pagesArtificial Neural NetworkSantosh SharmaNo ratings yet

- Neural NetworksDocument12 pagesNeural NetworksP PNo ratings yet

- Article ANN EstimationDocument7 pagesArticle ANN EstimationMiljan KovacevicNo ratings yet

- Multilayer Neural NetworksDocument20 pagesMultilayer Neural NetworksSaransh VijayvargiyaNo ratings yet

- Neural Network and Fuzzy LogicDocument46 pagesNeural Network and Fuzzy Logicdoc. safe eeNo ratings yet

- Artificial Neural Networks: Fundamentals and Applications for Decoding the Mysteries of Neural ComputationFrom EverandArtificial Neural Networks: Fundamentals and Applications for Decoding the Mysteries of Neural ComputationNo ratings yet

- InTech-Introduction To The Artificial Neural Networks PDFDocument17 pagesInTech-Introduction To The Artificial Neural Networks PDFalexaalexNo ratings yet

- Bio Inspired Computing: Fundamentals and Applications for Biological Inspiration in the Digital WorldFrom EverandBio Inspired Computing: Fundamentals and Applications for Biological Inspiration in the Digital WorldNo ratings yet

- IndexDocument51 pagesIndexsaptakniyogiNo ratings yet

- Dissertation Informatik UmfangDocument7 pagesDissertation Informatik UmfangWebsiteThatWillWriteAPaperForYouOmaha100% (1)

- COM417 Note Aug-21-2022Document25 pagesCOM417 Note Aug-21-2022oseni wunmiNo ratings yet

- Neural NetworksDocument11 pagesNeural Networks16Julie KNo ratings yet

- On Neural Networks and Application: Submitted By: Makrand Ballal Reg No. 1025929 Mca 3 SemDocument14 pagesOn Neural Networks and Application: Submitted By: Makrand Ballal Reg No. 1025929 Mca 3 Semmakku_ballalNo ratings yet

- ShayakDocument6 pagesShayakShayak RayNo ratings yet

- Data Mining TechniquesDocument3 pagesData Mining TechniquesZaryaab AhmedNo ratings yet

- A Visualization Framework For The Analysis of Neuromuscular SimulationsDocument11 pagesA Visualization Framework For The Analysis of Neuromuscular Simulationsyacipo evyushNo ratings yet

- Neural Networks and Statistical ModelsDocument13 pagesNeural Networks and Statistical ModelsIhsan MuharrikNo ratings yet

- Applications ANN Stock MarketDocument13 pagesApplications ANN Stock MarketSwamy KrishnaNo ratings yet

- Multilayer Perceptron: Fundamentals and Applications for Decoding Neural NetworksFrom EverandMultilayer Perceptron: Fundamentals and Applications for Decoding Neural NetworksNo ratings yet

- Fuzzy Logic Based Model For Analysing The Artificial NetworkDocument6 pagesFuzzy Logic Based Model For Analysing The Artificial NetworkJournal of Computer ApplicationsNo ratings yet

- CSE - Soft ComputingDocument70 pagesCSE - Soft ComputingTapasRoutNo ratings yet

- Soft Computing Notes PDFDocument69 pagesSoft Computing Notes PDFSidharth Bastia100% (1)

- Practical On Artificial Neural Networks: Amrender KumarDocument11 pagesPractical On Artificial Neural Networks: Amrender Kumarnawel dounaneNo ratings yet

- Neural Networks and Their Application To Finance: Martin P. Wallace (P D)Document10 pagesNeural Networks and Their Application To Finance: Martin P. Wallace (P D)babaloo11No ratings yet

- (2016) Analysis of Neuronal Spike Trains DeconstructedDocument39 pages(2016) Analysis of Neuronal Spike Trains DeconstructedDiego Soto ChavezNo ratings yet

- Artificial Neural NetworksDocument25 pagesArtificial Neural NetworksManoj Kumar SNo ratings yet

- An Overview of Advances of Pattern Recognition Systems in Computer VisionDocument27 pagesAn Overview of Advances of Pattern Recognition Systems in Computer VisionHoang LMNo ratings yet

- Evolutionary Algorithms and Neural Networks: Theory and ApplicationsFrom EverandEvolutionary Algorithms and Neural Networks: Theory and ApplicationsNo ratings yet

- DL Unit1Document10 pagesDL Unit1Ankit MahapatraNo ratings yet

- Deep-Learning Notes 01Document8 pagesDeep-Learning Notes 01Ankit MahapatraNo ratings yet

- What Is An Artificial Neural Network?Document11 pagesWhat Is An Artificial Neural Network?Lakshmi GanapathyNo ratings yet

- Dynamic Evolving Neuro Fuzzy Systems of PDFDocument10 pagesDynamic Evolving Neuro Fuzzy Systems of PDFSam JacobNo ratings yet

- A Beginners Guide To The Mathematics of Neural NetworksDocument17 pagesA Beginners Guide To The Mathematics of Neural Networksrhycardo5902No ratings yet

- Artificial IntelligenceDocument7 pagesArtificial IntelligenceGandhar MujumdarNo ratings yet

- Article On Neural NetworksDocument5 pagesArticle On Neural NetworksSaad ZahidNo ratings yet

- Artificial Neural Networks and Their Applications: June 2005Document6 pagesArtificial Neural Networks and Their Applications: June 2005sadhana mmNo ratings yet

- Artificial Neural Networks: A Seminar Report OnDocument13 pagesArtificial Neural Networks: A Seminar Report OnShiv KumarNo ratings yet

- Statistical Markov Model Markov Process Dynamic Bayesian Network Markov ModelDocument6 pagesStatistical Markov Model Markov Process Dynamic Bayesian Network Markov ModelJimit GandhiNo ratings yet

- Quality Prediction in Object Oriented System by Using ANN: A Brief SurveyDocument6 pagesQuality Prediction in Object Oriented System by Using ANN: A Brief Surveyeditor_ijarcsseNo ratings yet

- Forex - Nnet Vs RegDocument6 pagesForex - Nnet Vs RegAnshik BansalNo ratings yet

- Seminar Report ANNDocument21 pagesSeminar Report ANNkartik143100% (2)

- Dimmable Bulbs SamplesDocument11 pagesDimmable Bulbs SamplesBOSS BalaNo ratings yet

- Effect of IctDocument10 pagesEffect of IctRVID PhNo ratings yet

- Gastone Petrini: Strutture e Costruzioni Autarchiche Di Legno in Italia e Colonie Caratteri e CriterDocument9 pagesGastone Petrini: Strutture e Costruzioni Autarchiche Di Legno in Italia e Colonie Caratteri e CriterPier Pasquale TrausiNo ratings yet

- Standard Dimensions Grooved and Shouldered Joints AMERICAN - The Right WayDocument2 pagesStandard Dimensions Grooved and Shouldered Joints AMERICAN - The Right WaySopon SrirattanapiboonNo ratings yet

- Mi Account ေက်ာ္နည္းDocument16 pagesMi Account ေက်ာ္နည္းamk91950% (2)

- Dynamic Shear Modulus SoilDocument14 pagesDynamic Shear Modulus SoilMohamed A. El-BadawiNo ratings yet

- An Overview and Framework For PD Backtesting and BenchmarkingDocument16 pagesAn Overview and Framework For PD Backtesting and BenchmarkingCISSE SerigneNo ratings yet

- The Adoption of e Procurement in Tanzani PDFDocument5 pagesThe Adoption of e Procurement in Tanzani PDFDangyi GodSeesNo ratings yet

- Design of AC Chopper Voltage Regulator Based On PIC16F716 MicrocontrollerDocument4 pagesDesign of AC Chopper Voltage Regulator Based On PIC16F716 MicrocontrollerabfstbmsodNo ratings yet

- Certified Vendors As of 9 24 21Document19 pagesCertified Vendors As of 9 24 21Micheal StormNo ratings yet

- Help SIMARIS Project 3.1 enDocument61 pagesHelp SIMARIS Project 3.1 enVictor VignolaNo ratings yet

- AIF User Guide PDFDocument631 pagesAIF User Guide PDFÖzgün Alkın ŞensoyNo ratings yet

- Formula Renault20 Mod00Document68 pagesFormula Renault20 Mod00Scuderia MalatestaNo ratings yet

- Slipform Construction TechniqueDocument6 pagesSlipform Construction TechniqueDivyansh NandwaniNo ratings yet

- Hunger Games Mini Socratic Seminar2012Document4 pagesHunger Games Mini Socratic Seminar2012Cary L. TylerNo ratings yet

- PhotometryDocument2 pagesPhotometryHugo WNo ratings yet

- Yumemiru Danshi Wa Genjitsushugisha Volume 2Document213 pagesYumemiru Danshi Wa Genjitsushugisha Volume 2carldamb138No ratings yet

- Online Dynamic Security Assessment of Wind Integrated Power System UsingDocument9 pagesOnline Dynamic Security Assessment of Wind Integrated Power System UsingRizwan Ul HassanNo ratings yet

- 2014 Abidetal. TheoreticalPerspectiveofCorporateGovernance BulletinofBusinessDocument11 pages2014 Abidetal. TheoreticalPerspectiveofCorporateGovernance BulletinofBusinessOne PlusNo ratings yet

- ITP - Plaster WorkDocument1 pageITP - Plaster Workmahmoud ghanemNo ratings yet

- DAB Submersible PumpsDocument24 pagesDAB Submersible PumpsMohamed MamdouhNo ratings yet

- Jazz PrepaidDocument4 pagesJazz PrepaidHoney BunnyNo ratings yet

- DC Motor: F Bli NewtonDocument35 pagesDC Motor: F Bli NewtonMuhammad TausiqueNo ratings yet

- Methods of Estimation For Building WorksDocument22 pagesMethods of Estimation For Building Worksvara prasadNo ratings yet

- Bug Life Cycle in Software TestingDocument2 pagesBug Life Cycle in Software TestingDhirajNo ratings yet

- Cultural Practices of India Which Is Adopted by ScienceDocument2 pagesCultural Practices of India Which Is Adopted by ScienceLevina Mary binuNo ratings yet

- TIB Bwpluginrestjson 2.1.0 ReadmeDocument2 pagesTIB Bwpluginrestjson 2.1.0 ReadmemarcmariehenriNo ratings yet

- Pre Intermediate Talking ShopDocument4 pagesPre Intermediate Talking ShopSindy LiNo ratings yet

- Video Tutorial: Machine Learning 17CS73Document27 pagesVideo Tutorial: Machine Learning 17CS73Mohammed Danish100% (2)

- Sentence Diagramming:: Prepositional PhrasesDocument2 pagesSentence Diagramming:: Prepositional PhrasesChristylle RomeaNo ratings yet