Hop Field Net

Uploaded by Zheng Ronnie Li


Part VII 1

CSE 5526: Introduction to Neural Networks

Hopfield Network for Associative Memory

The basic task

Store a set of fundamental memories $\{\boldsymbol{\xi}_1, \boldsymbol{\xi}_2, \ldots, \boldsymbol{\xi}_M\}$ so that, when presented a new pattern $\mathbf{x}$, the system outputs one of the stored memories that is most similar to $\mathbf{x}$

Such a system is called content-addressable memory

Architecture

The Hopfield net consists of N McCulloch-Pitts neurons,

recurrently connected among themselves


Definition

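Each neuron is defined as

$$x_i = \varphi(v_i)$$

where

$$v_i = \sum_{j=1}^{N} w_{ij}\, x_j + b_i \quad \text{and} \quad \varphi(v) = \begin{cases} 1 & \text{if } v \ge 0 \\ -1 & \text{otherwise} \end{cases}$$

Without loss of generality, let $b_i = 0$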
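A minimal sketch of the neuron definition, assuming the tie $v = 0$ maps to $+1$ and the bias defaults to zero. The names `phi` and `neuron_output` are illustrative and do not come from the lecture.

```python
def phi(v):
    """Hard-limiting activation: +1 if v >= 0, otherwise -1 (tie convention assumed)."""
    return 1 if v >= 0 else -1


def neuron_output(weights_row, x, bias=0.0):
    """Output of one neuron: phi(sum_j w_ij * x_j + b_i)."""
    v = sum(w * xj for w, xj in zip(weights_row, x)) + bias
    return phi(v)


# A neuron whose two inputs cancel sits exactly at the threshold.
print(neuron_output([0.5, -0.5], [1, 1]))
# The same neuron with the first input flipped has a negative local field.
print(neuron_output([0.5, -0.5], [-1, 1]))
```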

Storage phase

To store $M$ fundamental memories, the Hopfield model uses the outer-product rule, a form of Hebbian learning:

$$w_{ij} = \frac{1}{N} \sum_{\mu=1}^{M} \xi_{\mu,i}\, \xi_{\mu,j}$$

Hence $w_{ij} = w_{ji}$, i.e., $\mathbf{W} = \mathbf{W}^T$, so the weight matrix is symmetric
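The outer-product rule can be sketched in a few lines of plain Python. This is only an illustration: the function name `store` and the two 4-bit example memories are assumptions, and self-connections are kept (no zeroed diagonal), matching the rule as stated here.

```python
def store(memories):
    """Outer-product rule: w[i][j] = (1/N) * sum over memories of xi[i] * xi[j]."""
    n = len(memories[0])
    return [[sum(m[i] * m[j] for m in memories) / n for j in range(n)]
            for i in range(n)]


# Two hypothetical bipolar memories of length N = 4.
memories = [[1, -1, 1, -1], [1, 1, -1, -1]]
W = store(memories)

for row in W:
    print(row)
```

Because each product $\xi_{\mu,i}\,\xi_{\mu,j}$ is unchanged when $i$ and $j$ are swapped, the resulting matrix is symmetric by construction.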

Retrieval phase

The Hopfield model sets the initial state of the net to the

input pattern. It adopts asynchronous (serial) update, which

updates one neuron at a time

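A possible sketch of the retrieval phase with asynchronous updates. The random visiting order, the stopping rule (one full sweep without a change), and the names `store` and `recall` are assumptions made for illustration; the lecture only specifies that one neuron is updated at a time.

```python
import random


def store(memories):
    """Outer-product rule with self-connections kept."""
    n = len(memories[0])
    return [[sum(m[i] * m[j] for m in memories) / n for j in range(n)]
            for i in range(n)]


def recall(W, pattern, max_sweeps=100, seed=0):
    """Start from the input pattern and update one neuron at a time."""
    rng = random.Random(seed)
    x = list(pattern)
    n = len(x)
    for _ in range(max_sweeps):
        changed = False
        order = list(range(n))
        rng.shuffle(order)              # assumed: random serial order
        for i in order:
            v = sum(W[i][j] * x[j] for j in range(n))
            new = 1 if v >= 0 else -1
            if new != x[i]:
                x[i] = new
                changed = True
        if not changed:                 # a full sweep without flips: stable state
            break
    return x


memories = [[1, 1, 1, 1, -1, -1, -1, -1],
            [1, -1, 1, -1, 1, -1, 1, -1]]
W = store(memories)
probe = [-1, 1, 1, 1, -1, -1, -1, -1]   # first memory with its first bit flipped
print(recall(W, probe))
```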

Hamming distance

Hamming distance between two binary/bipolar patterns is

the number of differing bits

Example (see blackboard)

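Since the blackboard example is not reproduced here, a small illustrative computation may help. The function name `hamming` and the two patterns are made up for the example. For bipolar patterns of length $N$, the inner product equals $N - 2d$, where $d$ is the Hamming distance.

```python
def hamming(a, b):
    """Number of positions at which two equal-length patterns differ."""
    return sum(1 for u, v in zip(a, b) if u != v)


a = [1, -1, 1, 1]
b = [1, 1, 1, -1]
d = hamming(a, b)
dot = sum(u * v for u, v in zip(a, b))
print(d, dot)  # for bipolar patterns, dot == len(a) - 2 * d
```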

One memory case

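Let the input $\mathbf{x}$ be the same as the single memory $\boldsymbol{\xi}$

$$v_i = \sum_j w_{ij}\, \xi_j = \frac{1}{N} \sum_j \xi_i\, \xi_j\, \xi_j = \xi_i$$

Therefore the memory is stable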

One memory case (cont.)

Actually for any input pattern $\mathbf{x}$, as long as the Hamming distance between $\mathbf{x}$ and $\boldsymbol{\xi}$ is less than $N/2$, the net converges to $\boldsymbol{\xi}$. Otherwise it converges to $-\boldsymbol{\xi}$

Think about it
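One way to think about it: with a single memory, $v_i = \frac{1}{N}\,\xi_i\,(\boldsymbol{\xi} \cdot \mathbf{x})$, and the overlap $\boldsymbol{\xi} \cdot \mathbf{x} = N - 2d$ is positive exactly when the Hamming distance $d$ is below $N/2$. The sketch below checks this numerically on a hypothetical 7-bit memory. An odd $N$ is chosen so that $d = N/2$ cannot occur, and the helper names are illustrative.

```python
def recall_single(xi, x):
    """One asynchronous sweep for a net storing the single memory xi."""
    n = len(xi)
    x = list(x)
    for i in range(n):
        overlap = sum(a * b for a, b in zip(xi, x))   # xi . x, recomputed after each update
        v = xi[i] * overlap / n                       # local field of neuron i
        x[i] = 1 if v >= 0 else -1
    return x


def flip_first(xi, k):
    """Copy of xi with its first k bits flipped (Hamming distance k from xi)."""
    return [-b if idx < k else b for idx, b in enumerate(xi)]


xi = [1, -1, 1, 1, -1, 1, -1]                  # N = 7, so N/2 = 3.5
print(recall_single(xi, flip_first(xi, 3)))    # distance 3 < 3.5
print(recall_single(xi, flip_first(xi, 4)))    # distance 4 > 3.5
```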

Multiple memories

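The stability (alignment) condition for any memory $\boldsymbol{\xi}_\nu$ is

$$\xi_{\nu,i} = \varphi\Big(\sum_j w_{ij}\, \xi_{\nu,j}\Big)$$

where

$$\sum_j w_{ij}\, \xi_{\nu,j} = \frac{1}{N} \sum_j \sum_{\mu} \xi_{\mu,i}\, \xi_{\mu,j}\, \xi_{\nu,j} = \xi_{\nu,i} + \frac{1}{N} \underbrace{\sum_j \sum_{\mu \neq \nu} \xi_{\mu,i}\, \xi_{\mu,j}\, \xi_{\nu,j}}_{\text{crosstalk}}$$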

Multiple memories (cont.)

If $|\text{crosstalk}| < N$ for every neuron, the memory is stable. In general, the fewer memories are stored, the more likely each one is to be stable

Example 2 in book (see blackboard)
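The crosstalk term can be evaluated directly for a small set of memories. The following is a sketch with made-up 4-bit patterns and illustrative function names, not the book's Example 2. Note that $|\text{crosstalk}| < N$ is a sufficient condition: a larger crosstalk with the same sign as $\xi_{\nu,i}$ would not destabilize the bit.

```python
def crosstalk(memories, nu, i):
    """Sum over j and over mu != nu of xi[mu][i] * xi[mu][j] * xi[nu][j]."""
    n = len(memories[0])
    return sum(memories[mu][i] * memories[mu][j] * memories[nu][j]
               for mu in range(len(memories)) if mu != nu
               for j in range(n))


def is_stable(memories, nu):
    """Sufficient condition: |crosstalk| < N at every neuron of memory nu."""
    n = len(memories[0])
    return all(abs(crosstalk(memories, nu, i)) < n for i in range(n))


memories = [[1, 1, 1, 1], [1, 1, 1, -1]]   # hypothetical, correlated memories
print([crosstalk(memories, 0, i) for i in range(4)])
print(is_stable(memories, 0))
```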

Example (cont.)


Memory capacity

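Define

$$C_{\nu,i} = -\xi_{\nu,i} \sum_j \sum_{\mu \neq \nu} \xi_{\mu,i}\, \xi_{\mu,j}\, \xi_{\nu,j}$$

$C_{\nu,i} < 0$: stable

$0 \le C_{\nu,i} < N$: stable

$C_{\nu,i} > N$: unstable

What if $C_{\nu,i} = N$?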

Memory capacity (cont.)

Consider random memories where each element takes +1 or -1 with equal probability (prob.). We measure

$$P_{\text{error}} = \text{Prob}(C_{\nu,i} > N)$$

To compute capacity $M_{\max}$, decide on an error criterion

For random patterns, $C_{\nu,i}$ is proportional to a sum of $N(M-1)$ random numbers of +1 or -1. For large $NM$, it can be approximated by a Gaussian distribution (central limit theorem) with zero mean and variance $\sigma^2 = NM$

Memory capacity (cont.)

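So

$$\begin{aligned}
P_{\text{error}} &= \frac{1}{\sqrt{2\pi}\,\sigma} \int_{N}^{\infty} \exp\Big(-\frac{x^2}{2\sigma^2}\Big)\, dx \\
&= \frac{1}{2} - \frac{1}{\sqrt{2\pi}\,\sigma} \int_{0}^{N} \exp\Big(-\frac{x^2}{2\sigma^2}\Big)\, dx \\
&= \frac{1}{2} - \frac{1}{\sqrt{2\pi NM}} \int_{0}^{N} \exp\Big(-\frac{x^2}{2NM}\Big)\, dx
\end{aligned}$$

The remaining integral is an error function (with $\sigma^2 = NM$), so that $P_{\text{error}} = \frac{1}{2}\Big[1 - \text{erf}\Big(\sqrt{\frac{N}{2M}}\Big)\Big]$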

Memory capacity (cont.)

Error probability plot

Memory capacity (cont.)

$P_{\text{error}}$    $M_{\max}/N$
0.001      0.105
0.0036     0.138
0.01       0.185
0.05       0.37
0.1        0.61
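The tabulated values can be checked against the Gaussian approximation, under which the single-bit error probability depends only on the ratio $M/N$: $P_{\text{error}} = \frac{1}{2}\big[1 - \text{erf}\big(\sqrt{N/(2M)}\big)\big]$. A short sketch, where the function name `p_error` is illustrative:

```python
import math


def p_error(ratio):
    """Single-bit error probability for M/N = ratio (Gaussian approximation)."""
    return 0.5 * (1.0 - math.erf(math.sqrt(1.0 / (2.0 * ratio))))


# Ratios M_max / N taken from the table above.
for ratio in (0.105, 0.138, 0.185, 0.37, 0.61):
    print(f"M/N = {ratio:<5}  P_error ~ {p_error(ratio):.4f}")
```

The printed probabilities should land close to the left-hand column of the table, up to the rounding used there.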

Memory capacity (cont.)

So $P_{\text{error}} < 0.01 \Rightarrow M_{\max} = 0.185N$, an upper bound

What if 1% flips occur? Avalanche effect?

Real upper bound: $0.138N$ to prevent the avalanche effect

Memory capacity (cont.)

The above analysis is for one bit. If we want perfect retrieval for $\boldsymbol{\xi}_\nu$ with probability of 0.99:

$$(1 - P_{\text{error}})^N > 0.99$$

Approximately $P_{\text{error}} < \frac{0.01}{N}$

For this case $M_{\max} = \frac{N}{2 \log N}$
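To get a feel for how restrictive the perfect-retrieval criterion is, the sketch below compares $N/(2 \log N)$ with the $0.138N$ bound for one network size and checks the approximation $(1 - P_{\text{error}})^N \approx 1 - N P_{\text{error}}$. It assumes the natural logarithm, and the function names and the choice $N = 1000$ are illustrative.

```python
import math


def capacity_perfect_recall(n):
    """M_max = N / (2 ln N): one memory recalled without any bit error (asymptotic)."""
    return n / (2.0 * math.log(n))


def capacity_avalanche_bound(n):
    """M_max = 0.138 N: the bound quoted for avoiding the avalanche effect."""
    return 0.138 * n


n = 1000
print(capacity_perfect_recall(n))     # roughly 72 memories
print(capacity_avalanche_bound(n))    # roughly 138 memories
print((1 - 0.01 / n) ** n)            # stays just above 0.99
```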

Memory capacity (cont.)

But real patterns are not random (they could be encoded) and

the capacity is worse for correlated patterns

At one favorable extreme, if memories are orthogonal

$$\sum_i \xi_{\mu,i}\, \xi_{\nu,i} = 0 \quad \text{for } \mu \neq \nu$$

then $C_{\nu,i} = 0$ and $M_{\max} = N$

This is the maximum number of orthogonal patterns

Memory capacity (cont.)

But in reality a useful system stores a little less; otherwise

the memory is useless as it does not evolve

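A small check of why storing the full $N$ orthogonal patterns is useless: under the outer-product rule (with self-connections kept) the weight matrix becomes the identity, so every state is its own fixed point and nothing evolves. The sketch uses the rows of a 4x4 Hadamard matrix as a hypothetical set of orthogonal memories.

```python
from itertools import product


def store(memories):
    """Outer-product rule with self-connections kept."""
    n = len(memories[0])
    return [[sum(m[i] * m[j] for m in memories) / n for j in range(n)]
            for i in range(n)]


hadamard = [[1, 1, 1, 1],
            [1, -1, 1, -1],
            [1, 1, -1, -1],
            [1, -1, -1, 1]]
W = store(hadamard)
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
print(W == identity)


def is_fixed_point(W, x):
    """True if no neuron would change its value when updated."""
    n = len(x)
    return all((1 if sum(W[i][j] * x[j] for j in range(n)) >= 0 else -1) == x[i]
               for i in range(n))


# All 16 bipolar states are stable, so the net cannot correct any input.
print(all(is_fixed_point(W, list(x)) for x in product([1, -1], repeat=4)))
```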

Coding illustration


Energy function (Lyapunov function)

The existence of an energy (Lyapunov) function for a dynamical system ensures its stability

The energy function for the Hopfield net is

$$E(\mathbf{x}) = -\frac{1}{2} \sum_i \sum_j w_{ij}\, x_i\, x_j$$

Theorem: Given symmetric weights, $w_{ij} = w_{ji}$, the energy function does not increase as the Hopfield net evolves

Energy function (cont.)

Let $x_j'$ be the new value of $x_j$ after an update

If $x_j' = x_j$, $E$ remains the same

Energy function (cont.)

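In the other case, $x_j' = -x_j$ while $x_i' = x_i$ for $i \neq j$, so only the terms involving neuron $j$ change (the $w_{jj}$ term does not, since $x_j'^2 = x_j^2 = 1$):

$$\begin{aligned}
E(\mathbf{x}') - E(\mathbf{x}) &= -\frac{1}{2} \sum_i \sum_k w_{ik}\, x_i'\, x_k' + \frac{1}{2} \sum_i \sum_k w_{ik}\, x_i\, x_k \\
&= -\frac{1}{2} \sum_{i \neq j} (w_{ij} + w_{ji})\, x_i\, x_j' + \frac{1}{2} \sum_{i \neq j} (w_{ij} + w_{ji})\, x_i\, x_j \\
&= 2\, x_j \sum_{i \neq j} w_{ji}\, x_i \qquad \text{since } w_{ij} = w_{ji} \text{ and } x_j' = -x_j \\
&= 2\, x_j\, v_j - 2\, w_{jj} \\
&< 0
\end{aligned}$$

since $x_j$ and $v_j = \sum_i w_{ji}\, x_i$ have different signs when $x_j$ flips (or $v_j = 0$), and $w_{jj} = M/N > 0$ under the outer-product rule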

Energy function (cont.)

Thus, $E(\mathbf{x})$ decreases every time $x_j$ flips. Since $E$ is bounded, the Hopfield net is always stable
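The monotone decrease can be observed numerically. The sketch below records the energy after every flip during asynchronous updates. The two 8-bit memories, the starting state, the random visiting order, and the function names are all assumptions made for illustration.

```python
import random


def store(memories):
    """Outer-product rule with self-connections kept."""
    n = len(memories[0])
    return [[sum(m[i] * m[j] for m in memories) / n for j in range(n)]
            for i in range(n)]


def energy(W, x):
    """E(x) = -1/2 * sum_i sum_j w_ij * x_i * x_j."""
    n = len(x)
    return -0.5 * sum(W[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


def energy_trace(W, x0, sweeps=5, seed=0):
    """Energy of the starting state followed by the energy after each flip."""
    rng = random.Random(seed)
    x = list(x0)
    n = len(x)
    trace = [energy(W, x)]
    for _ in range(sweeps):
        for j in rng.sample(range(n), n):      # assumed: random serial order
            v = sum(W[j][i] * x[i] for i in range(n))
            new = 1 if v >= 0 else -1
            if new != x[j]:
                x[j] = new
                trace.append(energy(W, x))
    return trace


memories = [[1, 1, 1, 1, -1, -1, -1, -1],
            [1, -1, 1, -1, 1, -1, 1, -1]]
W = store(memories)
trace = energy_trace(W, [-1, -1, 1, 1, 1, -1, -1, 1])
print(trace)
```

Each entry after the first corresponds to one flip, so the list should be strictly decreasing.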

Remarks:

Useful concepts from dynamical systems: attractors, basins of

attraction, energy (Lyapunov) surface or landscape


Energy contour map


2-D energy landscape


Memory recall illustration


Remarks (cont.)

Bipolar neurons can be extended to continuous-valued neurons by using a hyperbolic tangent activation function, and discrete update can be extended to continuous-time dynamics (good for analog VLSI implementation)

The concept of energy minimization has been applied to optimization problems (neural optimization)

Spurious states

Not all local minima (stable states) correspond to fundamental memories. Typically, $-\boldsymbol{\xi}_\mu$, linear combinations of an odd number of memories, or other uncorrelated patterns, can be attractors

Such attractors are called spurious states
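Two of these spurious attractors can be exhibited on a toy example. The sketch assumes three mutually orthogonal 8-bit memories (chosen so the arithmetic is easy to follow) and checks that both the negated first memory and the sign of the sum of all three memories are fixed points. Function and variable names are illustrative.

```python
def store(memories):
    """Outer-product rule with self-connections kept."""
    n = len(memories[0])
    return [[sum(m[i] * m[j] for m in memories) / n for j in range(n)]
            for i in range(n)]


def is_fixed_point(W, x):
    """True if no neuron would change its value when updated."""
    n = len(x)
    return all((1 if sum(W[i][j] * x[j] for j in range(n)) >= 0 else -1) == x[i]
               for i in range(n))


memories = [[1, 1, 1, 1, -1, -1, -1, -1],
            [1, -1, 1, -1, 1, -1, 1, -1],
            [1, 1, -1, -1, 1, 1, -1, -1]]
W = store(memories)

negated = [-b for b in memories[0]]
# Mixture state: bitwise majority (sign of the sum) of the three memories.
mixture = [1 if sum(col) >= 0 else -1 for col in zip(*memories)]

print(is_fixed_point(W, negated))
print(mixture, is_fixed_point(W, mixture))
```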

Spurious states (cont.)

Spurious states tend to have smaller basins and occur higher

on the energy surface

local minima for spurious states

Kinds of associative memory

Autoassociative (e.g. Hopfield net)

Heteroassociative: store pairs of <input, output> patterns explicitly

matrix memory

holographic memory

You might also like

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Why File A Ucc1Document10 pagesWhy File A Ucc1kbarn389100% (4)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Flying ColorsDocument100 pagesFlying ColorsAgnieszkaAgayo20% (5)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- Alpha Sexual Power Vol 1Document95 pagesAlpha Sexual Power Vol 1Joel Lopez100% (1)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Hydrogeological Survey and Eia Tor - Karuri BoreholeDocument3 pagesHydrogeological Survey and Eia Tor - Karuri BoreholeMutonga Kitheko100% (1)

- Department of Education: Income Generating ProjectDocument5 pagesDepartment of Education: Income Generating ProjectMary Ann CorpuzNo ratings yet

- Broiler ProductionDocument13 pagesBroiler ProductionAlexa Khrystal Eve Gorgod100% (1)

- HAYAT - CLINIC BrandbookDocument32 pagesHAYAT - CLINIC BrandbookBlankPointNo ratings yet

- GTA IV Simple Native Trainer v6.5 Key Bindings For SingleplayerDocument1 pageGTA IV Simple Native Trainer v6.5 Key Bindings For SingleplayerThanuja DilshanNo ratings yet

- Five Kingdom ClassificationDocument6 pagesFive Kingdom ClassificationRonnith NandyNo ratings yet

- Frequency Response For Control System Analysis - GATE Study Material in PDFDocument7 pagesFrequency Response For Control System Analysis - GATE Study Material in PDFNarendra AgrawalNo ratings yet

- Lesson: The Averys Have Been Living in New York Since The Late NinetiesDocument1 pageLesson: The Averys Have Been Living in New York Since The Late NinetiesLinea SKDNo ratings yet

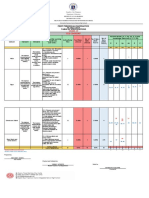

- Revised Final Quarter 1 Tos-Rbt-Sy-2022-2023 Tle-Cookery 10Document6 pagesRevised Final Quarter 1 Tos-Rbt-Sy-2022-2023 Tle-Cookery 10May Ann GuintoNo ratings yet

- Oral ComDocument2 pagesOral ComChristian OwlzNo ratings yet

- Fmicb 10 02876Document11 pagesFmicb 10 02876Angeles SuarezNo ratings yet

- Chap9 PDFDocument144 pagesChap9 PDFSwe Zin Zaw MyintNo ratings yet

- ULANGAN HARIAN Mapel Bahasa InggrisDocument14 pagesULANGAN HARIAN Mapel Bahasa Inggrisfatima zahraNo ratings yet

- Land of PakistanDocument23 pagesLand of PakistanAbdul Samad ShaikhNo ratings yet

- Planning EngineerDocument1 pagePlanning EngineerChijioke ObiNo ratings yet

- Antibiotics MCQsDocument4 pagesAntibiotics MCQsPh Israa KadhimNo ratings yet

- Restaurant Report Card: February 9, 2023Document4 pagesRestaurant Report Card: February 9, 2023KBTXNo ratings yet

- Specification Sheet: Case I Case Ii Operating ConditionsDocument1 pageSpecification Sheet: Case I Case Ii Operating ConditionsKailas NimbalkarNo ratings yet

- Rare Malignant Glomus Tumor of The Esophagus With PulmonaryDocument6 pagesRare Malignant Glomus Tumor of The Esophagus With PulmonaryRobrigo RexNo ratings yet

- Powerwin EngDocument24 pagesPowerwin Engbillwillis66No ratings yet

- A SURVEY OF ENVIRONMENTAL REQUIREMENTS FOR THE MIDGE (Diptera: Tendipedidae)Document15 pagesA SURVEY OF ENVIRONMENTAL REQUIREMENTS FOR THE MIDGE (Diptera: Tendipedidae)Batuhan ElçinNo ratings yet

- DIFFERENTIATING PERFORMANCE TASK FOR DIVERSE LEARNERS (Script)Document2 pagesDIFFERENTIATING PERFORMANCE TASK FOR DIVERSE LEARNERS (Script)Laurice Carmel AgsoyNo ratings yet

- 100 20210811 ICOPH 2021 Abstract BookDocument186 pages100 20210811 ICOPH 2021 Abstract Bookwafiq alibabaNo ratings yet

- Amp DC, OaDocument4 pagesAmp DC, OaFantastic KiaNo ratings yet

- 3M 309 MSDSDocument6 pages3M 309 MSDSLe Tan HoaNo ratings yet

- BIOBASE Vortex Mixer MX-S - MX-F User ManualDocument10 pagesBIOBASE Vortex Mixer MX-S - MX-F User Manualsoporte03No ratings yet

- III.A.1. University of Hawaii at Manoa Cancer Center Report and Business PlanDocument35 pagesIII.A.1. University of Hawaii at Manoa Cancer Center Report and Business Planurindo mars29No ratings yet