UNIDIMENSIONAL SCALING

JAN DE LEEUW

Abstract. This is an entry for The Encyclopedia of Statistics in Behavioral Science, to be published by Wiley in 2005.

Unidimensional scaling is the special one-dimensional case of multidimensional scaling [5]. It is often discussed separately, because the unidimensional case is quite different from the general multidimensional case. It is applied in situations where we have a strong reason to believe there is only one interesting underlying dimension, such as time, ability, or preference. And unidimensional scaling techniques are very different from multidimensional scaling techniques, because they use very different algorithms to minimize their loss functions.

Date: March 27, 2004.
Key words and phrases. fitting distances, multidimensional scaling.

The classical form of unidimensional scaling starts with a symmetric and non-negative matrix $\Delta = \{\delta_{ij}\}$ of dissimilarities and another symmetric and non-negative matrix $W = \{w_{ij}\}$ of weights. Both $W$ and $\Delta$ have a zero diagonal. Unidimensional scaling finds coordinates $x_i$ for $n$ points on the line such that

$$\sigma(x) = \frac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{n} w_{ij}\,(\delta_{ij} - |x_i - x_j|)^2$$

is minimized. Those $n$ coordinates in $x$ define the scale we were looking for.
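As a minimal sketch of this definition, the least squares loss can be evaluated directly from the double sum over all pairs. The function name `stress` and the three-point data below are illustrative assumptions, not part of the original entry.

```python
from typing import Sequence


def stress(x: Sequence[float],
           delta: Sequence[Sequence[float]],
           w: Sequence[Sequence[float]]) -> float:
    """Return 0.5 * sum_ij w_ij * (delta_ij - |x_i - x_j|)**2."""
    n = len(x)
    total = 0.0
    for i in range(n):
        for j in range(n):
            total += w[i][j] * (delta[i][j] - abs(x[i] - x[j])) ** 2
    return 0.5 * total


# Hypothetical dissimilarities generated by the points 0, 1, 3 on a line.
delta = [[0.0, 1.0, 3.0],
         [1.0, 0.0, 2.0],
         [3.0, 2.0, 0.0]]
# Unit off-diagonal weights, zero diagonal.
w = [[0.0, 1.0, 1.0],
     [1.0, 0.0, 1.0],
     [1.0, 1.0, 0.0]]

perfect = stress([0.0, 1.0, 3.0], delta, w)  # distances reproduce delta
worse = stress([0.0, 2.0, 3.0], delta, w)    # middle point misplaced
```

Because both matrices are symmetric, every pair is counted twice in the double sum, which is what the factor one half compensates for.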

To analyze this unidimensional scaling problem in more detail, let us start with the situation in which we know the order of the $x_i$, and we are just looking for their scale values. Now $|x_i - x_j| = s_{ij}(x)(x_i - x_j)$, where $s_{ij}(x) = \mathrm{sign}(x_i - x_j)$. If the order of the $x_i$ is known, then the $s_{ij}(x)$ are known numbers, equal to either $-1$ or $+1$ or $0$, and thus our problem becomes minimization of

$$\sigma(x) = \frac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{n} w_{ij}\,(\delta_{ij} - s_{ij}(x_i - x_j))^2$$

over all $x$ such that $s_{ij}(x_i - x_j) \geq 0$. Assume, without loss of generality, that the weighted sum of squares of the dissimilarities is one, that is, $\frac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{n} w_{ij}\delta_{ij}^2 = 1$. By expanding the sum of squares we see that

$$\sigma(x) = 1 - t'Vt + (x - t)'V(x - t).$$

Here $V$ is the matrix with off-diagonal elements $v_{ij} = -w_{ij}$ and diagonal elements $v_{ii} = \sum_{j=1}^{n} w_{ij}$. Also, $t = V^{+}r$, where $r$ is the vector with elements $r_i = \sum_{j=1}^{n} w_{ij}\delta_{ij}s_{ij}$, and $V^{+}$ is the generalized inverse of $V$. If all the off-diagonal weights are equal to one we simply have $t = r/n$.
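The expansion can be checked term by term. The following sketch uses only the definitions above, and assumes that there are no ties (so $s_{ij}^2 = 1$ for $i \neq j$) and that $VV^{+}r = r$, which holds when the nonzero weights connect all points.

```latex
\begin{align*}
\sigma(x)
 &= \tfrac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{n} w_{ij}\delta_{ij}^2
  - \sum_{i=1}^{n}\sum_{j=1}^{n} w_{ij}\delta_{ij}s_{ij}(x_i - x_j)
  + \tfrac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{n} w_{ij}(x_i - x_j)^2 \\
 &= 1 - 2x'r + x'Vx
  && \text{(since } s_{ij} = -s_{ji}\text{)} \\
 &= 1 - 2x'Vt + x'Vx
  && \text{(since } Vt = VV^{+}r = r\text{)} \\
 &= 1 - t'Vt + (x - t)'V(x - t).
\end{align*}
```

With unit weights, $V = nI - \mathbf{1}\mathbf{1}'$, and because the elements of $r$ sum to zero this gives $V^{+}r = r/n$.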


Thus the unidimensional scaling problem, with a known scale order, requires us to minimize $(x - t)'V(x - t)$ over all $x$ satisfying the order restrictions. This is a monotone regression problem [2], which can be solved quickly and uniquely by simple quadratic programming methods.
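For the special case of unit weights the monotone regression takes a particularly simple form. There $V = nI - \mathbf{1}\mathbf{1}'$, the objective does not change when a constant is added to $x$, and for centered $x$ it reduces to $n\sum_i (x_i - t_i)^2$, an ordinary isotonic regression. The sketch below uses the pool-adjacent-violators algorithm, a standard technique that the entry itself does not name; the function name `pava` and the data are assumptions, and the general weighted case needs a quadratic programming solver instead.

```python
from typing import List, Sequence


def pava(y: Sequence[float]) -> List[float]:
    """Least squares projection of y on the cone x[0] <= x[1] <= ... <= x[-1]."""
    # Each block stores [sum of values, number of values]; its fit is the mean.
    blocks: List[List[float]] = []
    for value in y:
        blocks.append([value, 1])
        # Merge backwards while the previous block mean exceeds the current one.
        while len(blocks) > 1 and (blocks[-2][0] / blocks[-2][1]
                                   > blocks[-1][0] / blocks[-1][1]):
            total, count = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += count
    fitted: List[float] = []
    for total, count in blocks:
        fitted.extend([total / count] * int(count))
    return fitted


# Hypothetical target vector t, listed in the order that defines the cone.
t_in_order = [-1.0, 0.5, 0.0, 0.5]
projection = pava(t_in_order)
```

The projection keeps the mean of its input, so a centered $t$ yields a centered solution. A vector that already satisfies the order restrictions is returned unchanged.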

Now for some geometry. The vectors $x$ satisfying the same set of order constraints form a polyhedral convex cone $K$ in $\mathbb{R}^n$. Think of $K$ as an ice cream cone with its apex at the origin, except for the fact that the ice cream cone is not round, but instead bounded by a finite number of hyperplanes. Since there are $n!$ different possible orderings of $x$, there are $n!$ cones, all with their apex at the origin. The interior of each cone consists of the vectors without ties; intersections of different cones are vectors with at least one tie. Obviously the union of the $n!$ cones is all of $\mathbb{R}^n$.

Thus the unidimensional scaling problem can be solved by solving $n!$ monotone regression problems, one for each of the $n!$ cones [3]. The problem has a solution which is at the same time very simple and prohibitively complicated. The simplicity comes from the $n!$ subproblems, which are easy to solve, and the complications come from the fact that there are simply too many different subproblems. Enumeration of all possible orders is impractical for $n > 10$, although using combinatorial programming techniques makes it possible to find solutions for $n$ as large as 20 [7].
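To get a feeling for how quickly the number of cones grows, the factorials for a few values of $n$ can be computed directly. This is only an illustration of the arithmetic; the chosen values of $n$ are those mentioned in this entry.

```python
import math

# Number of cones (orderings) that a full enumeration would have to visit.
cone_counts = {n: math.factorial(n) for n in (9, 10, 20)}

for n, count in cone_counts.items():
    print(f"n = {n:2d}: {count:,} orderings")
```

Going from 10 to 20 points multiplies the number of orderings by a factor of roughly $6.7 \times 10^{11}$, which is why plain enumeration stops being an option.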


Actually, the subproblems are even simpler than we suggested above. The geometry tells us that we solve the subproblem for cone $K$ by finding the closest vector to $t$ in the cone, or, in other words, by projecting $t$ on the cone. There are three possibilities. Either $t$ is in the interior of its cone, or on the boundary of its cone, or outside its cone. In the first two cases $t$ is equal to its projection; in the third case the projection is on the boundary. The general result in [1] tells us that the loss function $\sigma$ cannot have a local minimum at a point in which there are ties, and thus local minima can only occur in the interior of the cones. This means that we can only have a local minimum if $t$ is in the interior of its cone, and it also means that we actually never have to compute the monotone regression. We just have to verify whether $t$ is in the interior; if it is not, then $\sigma$ does not have a local minimum in this cone.
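The interior check suggests a very direct enumeration scheme for small $n$. The sketch below assumes unit weights, so that $t = r/n$ and $t'Vt = n\,t't$, and it does not rescale the dissimilarities, so the constant 1 in the loss is replaced by half the sum of squared dissimilarities. The function name `local_minima` and the example data are illustrative assumptions.

```python
from itertools import permutations
from typing import List, Sequence, Tuple


def local_minima(delta: Sequence[Sequence[float]]
                 ) -> List[Tuple[float, Tuple[int, ...]]]:
    """Enumerate all orders (unit weights); keep cones with t in their interior."""
    n = len(delta)
    const = 0.5 * sum(delta[i][j] ** 2 for i in range(n) for j in range(n))
    found = []
    for order in permutations(range(n)):  # order[k] = point in position k
        rank = [0] * n
        for position, point in enumerate(order):
            rank[point] = position
        # s_ij = sign(rank_i - rank_j); r_i = sum_j delta_ij * s_ij; t = r / n.
        t = [sum(delta[i][j] * ((rank[i] > rank[j]) - (rank[i] < rank[j]))
                 for j in range(n)) / n
             for i in range(n)]
        # Interior of the cone: t is strictly increasing along the order.
        if all(t[order[k]] < t[order[k + 1]] for k in range(n - 1)):
            loss = const - n * sum(value ** 2 for value in t)
            found.append((loss, order))
    return sorted(found)


# Hypothetical dissimilarities generated by four points on a line.
points = [0.0, 1.0, 3.0, 6.0]
delta = [[abs(a - b) for b in points] for a in points]
minima = local_minima(delta)
best_loss, best_order = minima[0]
```

For this perfectly fitting example the smallest loss is zero, attained at the generating order and at its mirror image. Reversing an order changes the sign of $t$, so local minima always come in such pairs.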

There have been many proposals to solve the combinatorial optimization problem of moving through the $n!$ cones until the global optimum of $\sigma$ has been found. A recent review is [8].

We illustrate the method with a simple example, using the vegetable paired comparison data from [6, page 160]. Paired comparison data are usually given in a matrix $P$ of proportions, indicating how many times stimulus $i$ is preferred over stimulus $j$. $P$ has 0.5 on the diagonal, while corresponding elements $p_{ij}$ and $p_{ji}$ on both sides of the diagonal add up to 1.0. We transform the proportions to dissimilarities by using the probit transformation $z_{ij} = \Phi^{-1}(p_{ij})$ and then defining $\delta_{ij} = |z_{ij}|$. There are 9 vegetables in the experiment, and we evaluate all $9! = 362880$ permutations. Of these cones 14354 or 4% have a local minimum in their interior. This may be a small percentage, but the fact that $\sigma$ has 14354 isolated local minima indicates how complicated the unidimensional scaling problem is. The global minimum is obtained for the order given in Guilford's book, which is Turnips < Cabbage < Beets < Asparagus < Carrots < Spinach < String Beans < Peas < Corn. Since there are no weights in this example, the optimal unidimensional scaling values are the row averages of the matrix with elements $s_{ij}(x)\delta_{ij}$. Except for a single sign change of the smallest element (the Carrots and Spinach comparison), this matrix is identical to the probit matrix $Z$. And because the Thurstone Case V scale values are the row averages of $Z$, they are virtually identical to the unidimensional scaling solution in this case.
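The preprocessing in this example can be sketched in a few lines. The proportions below are hypothetical (they are not Guilford's vegetable data), and the function name `probit_matrix` is an assumption; the standard normal quantile function comes from Python's `statistics.NormalDist`.

```python
from statistics import NormalDist
from typing import List


def probit_matrix(p: List[List[float]]) -> List[List[float]]:
    """z_ij = Phi^{-1}(p_ij), the standard normal quantile of each proportion."""
    inv_cdf = NormalDist().inv_cdf
    return [[inv_cdf(p_ij) for p_ij in row] for row in p]


# Hypothetical proportions for three stimuli.
# P[i][j] is the proportion of times stimulus i is preferred over stimulus j.
P = [[0.5, 0.7, 0.9],
     [0.3, 0.5, 0.8],
     [0.1, 0.2, 0.5]]

Z = probit_matrix(P)
delta = [[abs(z_ij) for z_ij in row] for row in Z]  # dissimilarities
case_v = [sum(row) / len(row) for row in Z]         # row averages of Z
```

Proportions of exactly 0 or 1 have no finite probit, so `inv_cdf` raises an error for them; such cells would need separate treatment before the transformation.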

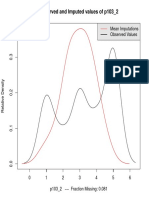

The second example is quite different. It has weights and incomplete information. We take it from a paper by Fisher [4], in which he studies crossover percentages of eight genes on the sex chromosome of Drosophila willistoni. He takes the crossover percentage as a measure of distance, and supposes the number $n_{ij}$ of crossovers in $N_{ij}$ observations is binomial. Although there are eight genes, and thus $\binom{8}{2} = 28$ possible dissimilarities, there are only 15 pairs that are actually observed. Thus 13 of the off-diagonal weights are zero, and the other weights are set to the inverses of the standard errors of the proportions. We investigate all $8! = 40320$ permutations, and we find 78 local minima. The solution given by Fisher, computed by solving linearized likelihood equations, has Reduced < Scute < Peach < Beaded < Rough < Triple < Deformed < Rimmed. This order corresponds with a local minimum of $\sigma$ equal to 40.16. The global minimum is obtained for the permutation that interchanges Reduced and Scute, with value 35.88. In Figure 1 we see the scales for the two local minima, one corresponding with Fisher's order and the other one with the optimal order.

[Figure 1: plot with axes labeled fisher and uds; points labeled reduced, scute, peach, beaded, rough, triple, deformed, rimmed.]

Figure 1. Genes on Chromosome
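The weighting scheme of the second example can be sketched as follows. The sketch assumes that the standard error of a proportion is the usual binomial one, $\sqrt{p(1 - p)/N}$, and that dissimilarities are recorded as proportions rather than percentages; the entry does not spell out either detail. The counts are hypothetical (they are not Fisher's data), and the function name `binomial_weights` is an assumption.

```python
import math
from typing import Dict, List, Tuple


def binomial_weights(counts: Dict[Tuple[int, int], Tuple[int, int]],
                     n_points: int
                     ) -> Tuple[List[List[float]], List[List[float]]]:
    """Return (delta, w) from crossover counts; unobserved pairs get weight zero."""
    delta = [[0.0] * n_points for _ in range(n_points)]
    w = [[0.0] * n_points for _ in range(n_points)]
    for (i, j), (crossovers, observations) in counts.items():
        p = crossovers / observations
        se = math.sqrt(p * (1.0 - p) / observations)  # binomial standard error
        delta[i][j] = delta[j][i] = p
        w[i][j] = w[j][i] = 1.0 / se
    return delta, w


# Hypothetical counts (crossovers, observations) for three genes.
# The pair (0, 2) was never observed and therefore keeps weight zero.
counts = {(0, 1): (10, 100), (1, 2): (50, 200)}
delta, w = binomial_weights(counts, 3)
```

A pair with no crossovers at all would give a standard error of zero and hence a division by zero, so real data would need a guard for that case.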


In this entry we have only discussed least squares metric unidimensional scaling. The first obvious generalization is to replace the least squares loss function, for example by the least absolute value or $\ell_1$ loss function. The second generalization is to look at nonmetric unidimensional scaling. These generalizations have not been studied in much detail, but in both we can continue to use the basic geometry we have discussed. The combinatorial nature of the problem remains intact.

References

[1] J. De Leeuw. Differentiability of Kruskal's Stress at a Local Minimum. Psychometrika, 49:111-113, 1984.

[2] J. De Leeuw. Monotone Regression. In B. Everitt and D. Howell, editors, The Encyclopedia of Statistics in Behavioral Science. Wiley, 2005.

[3] J. De Leeuw and W. J. Heiser. Convergence of Correction Matrix Algorithms for Multidimensional Scaling. In J.C. Lingoes, editor, Geometric representations of relational data, pages 735-752. Mathesis Press, Ann Arbor, Michigan, 1977.

[4] R.A. Fisher. The Systematic Location of Genes by Means of Crossover Observations. American Naturalist, 56:406-411, 1922.

[5] P. Groenen. Multidimensional scaling. In B. Everitt and D. Howell, editors, The Encyclopedia of Statistics in Behavioral Science. Wiley, 2005.

[6] J.P. Guilford. Psychometric Methods. McGraw-Hill, second edition, 1954.

[7] L.J. Hubert and P. Arabie. Unidimensional Scaling and Combinatorial Optimization. In J. De Leeuw, W. Heiser, J. Meulman, and F. Critchley, editors, Multidimensional data analysis, Leiden, The Netherlands, 1986. DSWO-Press.

[8] L.J. Hubert, P. Arabie, and J.J. Meulman. Linear Unidimensional Scaling in the $L_2$-Norm: Basic Optimization Methods Using MATLAB. Journal of Classification, 19:303-328, 2002.

Department of Statistics, University of California, Los Angeles, CA 90095-1554

E-mail address, Jan de Leeuw: deleeuw@stat.ucla.edu

URL, Jan de Leeuw: http://gifi.stat.ucla.edu
