AOA Analysis of Well Known Algorithms

Uploaded by Balaji Jadhav

Analysis of Algorithms

Unit 4 - Analysis of well known Algorithms

Copyright 2004, Infosys Technologies Ltd

ER/CORP/CRS/SE15/003

Version No: 2.0

Analysis of well known Algorithms

Brute Force Algorithms

Greedy Algorithms

Divide and Conquer Algorithms

Decrease and Conquer Algorithms

Dynamic Programming

Analysis of well known Algorithms:

In this chapter we will understand the design methodologies of certain well known algorithms and also analyze the complexities of these algorithms.

Brute Force Algorithms

The brute force approach is a straightforward way to solve a problem. It is based directly on the problem statement and the concepts involved.

The force here refers to the computer and not to the intellect of the problem solver.

It is one of the simplest algorithm design techniques to implement and covers a wide range of problems.

We will analyze the following three algorithms, which fall under the category of brute force algorithm design.

Selection Sort

Bubble Sort

Linear Search

Brute Force Algorithms Selection Sort

Sorting is the technique of rearranging a given array of data into a specific order. When the array contains numeric data the sorting order is either ascending or descending. Similarly, when the array contains non-numeric data the sorting order is lexicographical.

The simple sorting algorithms discussed in this unit start with the given input array (the unsorted array) and after each iteration extend the sorted part of the array by one cell. The algorithm terminates when the size of the sorted part equals the size of the array.

In selection sort, the basic idea is to find the smallest number in the unsorted part of the array and swap it with the leftmost cell of the unsorted part, thereby increasing the sorted part of the array by one more cell.

In these sorting algorithms the entire array is seen as two parts, the sorted part and the unsorted part. Initially the size of the sorted part is zero and the unsorted part is the entire array. The basic aim is to extend the sorted part after each iteration of the algorithm, thereby decreasing the unsorted part. The algorithm terminates when the sorted part is the entire array (in other words, when the size of the unsorted part becomes zero).

Brute Force Algorithms Selection Sort (Contd)

Selection Sort:

To sort the given array a[1..n] in ascending order:

1. Begin

2. For i = 1 to n-1 do

2.1 Set min = i

2.2 For j = i+1 to n do

2.2.1 If (a[j] < a[min]) then set min = j

2.3 If (i ≠ min) then swap a[i] and a[min]

3. End

Let us analyze selection sort. The basic operations here are comparison and swapping. Since we have a nested for loop we need to do an inside-out analysis. Step 2.2.1 does one comparison and this step executes (n - (i+1) + 1) = (n-i) times. Step 2.3 performs 1 swap operation (assuming that the if condition is true each time). So the number of basic operations performed in one iteration of the outer for loop (step 2) is

((n - (i+1) + 1) + 1) = (n-i+1).

From step 2 we can see that i varies from 1 to n-1. So the number of basic operations performed by the outer for loop is:

(n-1+1) + (n-2+1) + (n-3+1) + … + (n-(n-1)+1) = n + (n-1) + (n-2) + … + 2

= (n(n+1))/2 - 1 = (n^2 + n - 2)/2.

Ignoring the slow growing terms, we get the worst case complexity of selection sort as O(n^2).
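
The selection sort steps can be sketched in Python as follows. This is only an illustration, not part of the original course material: the function name selection_sort and the comparison counter are assumptions added here, and Python lists are indexed from 0 whereas the slide uses a[1..n].

```python
def selection_sort(a):
    """Sort the list a in place (ascending) and return (a, comparisons).

    The comparison counter is an illustrative addition so that the
    quadratic growth discussed in the analysis can be observed.
    """
    n = len(a)
    comparisons = 0
    for i in range(n - 1):              # slide step 2, shifted to 0-based
        smallest = i                    # step 2.1 (called "min" on the slide)
        for j in range(i + 1, n):       # step 2.2
            comparisons += 1
            if a[j] < a[smallest]:      # step 2.2.1
                smallest = j
        if smallest != i:               # step 2.3
            a[i], a[smallest] = a[smallest], a[i]
    return a, comparisons
```

For an array of n elements the inner test runs n(n-1)/2 times regardless of the input order, which is why the counter does not depend on how sorted the data already is.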

Brute Force Algorithms Bubble Sort

Bubble sort is yet another sorting technique based on the brute force design.

Bubble sort works by comparing adjacent elements of an array and exchanging them if they are not in order.

After the first iteration (pass) the largest element bubbles up to the last position of the array. After the next iteration the second largest element bubbles up to the second last position, and so on.

Bubble sort is one of the simplest sorting techniques to implement.

Brute Force Algorithms Bubble Sort (Contd)

Bubble Sort:

To sort the given array a[1..n] in ascending order:

1. Begin

2. For i = 1 to n-1 do

2.1 For j = 1 to n-i do

2.1.1 If (a[j+1] < a[j]) then swap a[j] and a[j+1]

3. End

The analysis of bubble sort is given below. The basic operations here again are comparison and swapping.

We have a nested for loop and hence we need to do an inside-out analysis. Step 2.1.1 performs at most 2 operations (one comparison and one swap) and it is repeated ((n-i) - 1) + 1 = (n-i) times in pass i, so that the last pair compared in the first pass is a[n-1] and a[n]. Hence step 2.1 performs at most 2(n-i) operations, where i varies from 1 to n-1 (step 2). So the total number of operations performed by bubble sort is at most:

2((n-1) + (n-2) + (n-3) + … + 1) = 2((n-1)n)/2 = n^2 - n.

Hence the worst case complexity of bubble sort is O(n^2).
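
A minimal Python sketch of bubble sort is given below for illustration. The function name bubble_sort is an assumption, and the loop bounds are shifted to Python's 0-based indexing so that every adjacent pair up to the end of the unsorted part is compared in each pass.

```python
def bubble_sort(a):
    """Sort the list a in place in ascending order and return it."""
    n = len(a)
    for i in range(1, n):               # pass number i = 1 .. n-1
        for j in range(0, n - i):       # 0-based pairs (j, j+1) in the unsorted part
            if a[j + 1] < a[j]:         # adjacent elements out of order
                a[j], a[j + 1] = a[j + 1], a[j]
    return a
```

After pass i the i largest elements occupy their final positions at the end of the list, so each pass can stop one position earlier than the previous one.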

Brute Force Algorithms Linear Search

Searching is a technique by which we find whether a target element is part of the given set of data.

There are different searching techniques, such as linear search and binary search.

Linear search works on the design principle of brute force and it is one of the simplest searching algorithms.

Linear search is also called sequential search, as it compares the successive elements of the given set with the search key.

Brute Force Algorithms Linear Search (Contd)

Linear Search:

To find which element (if any) of the given array a[1..n] equals the target element:

1. Begin

2. For i = 1 to n do

2.1 If (target = a[i]) then End with output as i

3. End with output as none

The analysis of Linear search is given below. The basic operation here is comparison.

Worst Case Analysis: Step 2.1 performs 1 comparison and this step executes n times. So

the worst case complexity of Linear search is O(n).

Average Case Analysis: To perform an average case analysis we work with the assumption that the target is present and that every element in the given array is equally likely to be the target. So the probability that the search element is at any given position i is 1/n. Finding the element at position 1 takes 1 comparison, finding it at position 2 takes 2 comparisons, and so on; finding it at the last position takes n comparisons. Hence the average number of comparisons made by linear search is:

(1/n)(1) + (1/n)(2) + … + (1/n)(n) = (1/n)(1+2+…+n) = (1/n)(n(n+1)/2) = (n+1)/2 = O(n)
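
The following Python sketch illustrates linear search and checks the (n+1)/2 average empirically. The names linear_search and average_comparisons are assumptions made for this example, positions are 0-based, and the average is taken only over targets that are present in the array.

```python
def linear_search(a, target):
    """Return the 0-based index of target in a, or None if it is absent."""
    for i, value in enumerate(a):
        if value == target:
            return i
    return None


def average_comparisons(n):
    """Average number of comparisons over all n equally likely targets.

    Finding the element at 0-based index i costs i + 1 comparisons.
    """
    a = list(range(n))
    total = sum(linear_search(a, t) + 1 for t in a)
    return total / n
```

For example, average_comparisons(9) evaluates to 5.0, which agrees with (n+1)/2 for n = 9.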

Greedy Algorithms

Greedy design technique is primarily used in Optimization problems

The Greedy approach helps in constructing a solution for a problem through a

sequence of steps where each step is considered to be a partial solution. This

partial solution is extended progressively to get the complete solution

The choice of each step in a greedy approach is done based on the following

It must be feasible

It must be locally optimal

It must be irrevocable

Optimization problems are problems in which we would like to find the best of all possible solutions. In other words, we need to find the solution which has the optimal (maximum or minimum) value while satisfying the given constraints.

In the greedy approach each step chosen has to satisfy the constraints given in the problem (feasible). Each step is chosen such that it is the best alternative among all feasible choices that are available at that point (locally optimal). The choice of a step, once made, cannot be changed in subsequent steps (irrevocable).

We analyze the Activity Selection Problem, which can be solved with the greedy approach.

Greedy Algorithms An Activity Selection Problem

An Activity Selection problem is a slight variant of the problem of scheduling a

resource among several competing activities.

Suppose that we have a set S = {1, 2, …, n} of n events that wish to use an auditorium which can be used by only one event at a time. Each event i has a start time s_i and a finish time f_i, where s_i ≤ f_i. An event i, if selected, can be executed anytime on or after s_i and must necessarily end before f_i. Two events i and j are said to be compatible if they do not overlap (meaning s_i ≥ f_j or s_j ≥ f_i). The activity selection problem is to select a maximum subset of compatible activities.

To solve this problem we use the greedy approach

Greedy Algorithms An Activity Selection Problem

(Contd)

An Activity Selection Problem:

To find the maximum subset of mutually compatible activities from a given set of n activities. The given set of activities is represented as two arrays s and f denoting the start times and the finish times respectively:

1. Begin

2. Set A = {1}, j = 1

3. For i = 2 to n do

3.1 If (s_i ≥ f_j) then A = A ∪ {i}, j = i

4. End with output as A

This algorithm works under the assumption that the activities are sorted in increasing order of finish time (array f). The greedy choice in the above algorithm is made in step 3.1, where we select the next activity such that its start time is greater than or equal to the finish time of the activity most recently added to A. Note that it suffices to do only this check. We don't have to compare the start time of the next activity with all the elements of A. This is so because the finish times (array f) are in increasing order. The analysis of the activity selection algorithm is given below.

Worst Case Analysis: The basic operation here is comparison. Step 3.1 performs 1 comparison and this step is repeated (n-1) times. So the worst case complexity of this algorithm is 1 * (n-1) = O(n).
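
The greedy selection described above can be sketched in Python as shown below. The function name select_activities and the sample data in the usage note are assumptions made for illustration; the sketch relies on the same precondition as the slide, namely that the activities are already sorted by finish time, and it uses 0-based indices.

```python
def select_activities(start, finish):
    """Greedy activity selection.

    start[i] and finish[i] describe activity i. The activities are
    assumed to be sorted in increasing order of finish time.
    Returns the 0-based indices of the selected activities.
    """
    if not start:
        return []
    selected = [0]          # the first activity to finish is always taken
    last = 0                # index of the most recently selected activity
    for i in range(1, len(start)):
        if start[i] >= finish[last]:    # compatible with the last choice
            selected.append(i)
            last = i
    return selected
```

With the made-up data start = [1, 3, 0, 5, 3, 5, 6, 8, 8, 2, 12] and finish = [4, 5, 6, 7, 9, 9, 10, 11, 12, 14, 16], the function returns [0, 3, 7, 10]. Only one comparison is made per activity, which matches the O(n) bound.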

Divide and Conquer Algorithms

Divide and Conquer algorithm design works on the principle of dividing the

given problem into smaller sub problems which are similar to the original

problem. The sub problems are ideally of the same size.

These sub problems are solved independently using recursion

The solutions for the sub problems are combined to get the solution for the

original problem

The Divide and Conquer strategy can be viewed as one which has three steps. The first step is called Divide, in which we divide the given problem into smaller sub problems which are similar to the original problem and, ideally, of the same size. The second step is called Conquer, in which we solve these sub problems recursively. The third step is called Combine, in which we combine the solutions of the sub problems to get the solution for the original problem.

As part of the Divide and Conquer algorithm design we will analyze the Quick sort, Merge

sort and the Binary Search algorithms.

Divide and Conquer Algorithms Quick Sort

Quick sort is one of the most powerful sorting algorithms. It works on the Divide and Conquer design principle.

Quick sort works by choosing an element, called the pivot, in the given input array and partitioning the array into three sub arrays such that

The left sub array contains all elements which are less than or equal to the pivot

The middle sub array contains the pivot

The right sub array contains all elements which are greater than or equal to the pivot

Now the two sub arrays, namely the left sub array and the right sub array, are sorted recursively

The basic principle of Quick sort is given above. The partitioning of the given input array is

part of the Divide step. The recursive calls to sort the sub arrays are part of the Conquer

step. Since the sorted sub arrays are already in the right place there is no Combine step for

the Quick sort.

Divide and Conquer Algorithms Quick Sort

(Contd)

Quick Sort:

To sort the given array a[1..n] in ascending order:

1. Begin

2. Set left = 1, right = n

3. If (left < right) then

3.1 Partition a[left..right] such that a[left..p-1] are all less than or equal to a[p] and a[p+1..right] are all greater than or equal to a[p]

3.2 Quick Sort a[left..p-1]

3.3 Quick Sort a[p+1..right]

4. End

The basic operations in Quick sort are comparison and swapping. Note that the Quick Sort algorithm calls itself recursively. Quick Sort is one of the classical examples of recursive algorithms. There are many partition algorithms which identify the pivot and partition the given array. We will see one simple partition algorithm which takes (n-1) comparisons to partition an array of n elements.

Analysis of Quick Sort: Step 3.1 partitions the given array. The partition algorithm which we will see shortly performs (n-1) comparisons to identify the pivot position and rearrange the array such that every element to the left of the pivot is smaller than or equal to the pivot and every element to the right of the pivot is greater than or equal to the pivot. Hence Step 3.1 performs (n-1) operations. Step 3.2 and Step 3.3 are recursive calls to Quick Sort with a smaller problem size.

Divide and Conquer Algorithms Quick Sort

(Contd)

The Best Case and Worst Case Analysis for Quick Sort is given below in the

notes page

To analyze recursive algorithms we need to solve the recurrence relation. Let T(n) denote the total number of basic operations performed by Quick Sort to sort an array of n elements.

Best Case Analysis: In the best case the partition algorithm will split the given array into 2 equal sub arrays, i.e. the pivot turns out to be the median value in the array. Then the left sub array and the right sub array are both of size about n/2. Hence

T(n) = 2T(n/2) + (n-1), if n > 1

T(n) = 0, if n ≤ 1

T(n) = 2(2T(n/4) + ((n/2)-1)) + (n-1) = 2^2 T(n/2^2) + 2n - 3. Simplifying this recurrence in a similar manner we get

T(n) = 2^k T(n/2^k) + kn - (2^k - 1).

The base condition for the recurrence is T(1) = 0. So in this case we have n/2^k = 1, which gives k = log(n) (logarithm to base 2). Substituting for k we get

T(n) = n T(1) + n log(n) - (n-1) = n log(n) - n + 1.

The Best Case Complexity of Quick Sort is O(n log n).

Worst Case Analysis: The worst case for Quick sort can arise when the partition algorithm divides the array in such a way that either the left sub array or the right sub array is empty. In that case the problem size is reduced only from n to n-1. The recurrence relation for the worst case is as follows:

T(n) = T(n-1) + (n-1), if n > 1

T(n) = 0, if n ≤ 1

Simplifying this we get T(n) = (n-1) + (n-2) + … + 2 + 1 = ((n-1)n)/2.

The Worst Case Complexity of Quick Sort is O(n^2).

The Average Case Complexity of Quick Sort is O(n log n).

Remark: The Worst Case for Quick Sort could happen when the pivot element is the smallest (or largest) in each recursive call.

Divide and Conquer Algorithms Quick Sort

(Contd)

Quick Sort Partition Algorithm:

To partition the given array a[1..n] such that every element to the left of the pivot is less than the pivot and every element to the right of the pivot is greater than or equal to the pivot.

1. Begin

2. Set left = 1, right = n, pivot = a[left], p = left

3. For r = left+1 to right do

3.1 If a[r] < pivot then

3.1.1 a[p] = a[r], a[r] = a[p+1], a[p+1] = pivot

3.1.2 Increment p

4. End with output as p

The partition algorithm given is one of the simplest partition algorithms for quick sort. The

basic operation here is comparison.

Worst Case Analysis: Step 3.1 performs 1 comparison and this step is repeated n-1 times.

So the Worst Case Complexity of the above mentioned partition algorithm is O(n).
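
The partition steps and the recursive Quick Sort can be put together in Python roughly as follows. This is a sketch for illustration only: the function names, the default arguments and the 0-based indexing are assumptions, while the body of partition follows steps 2 to 4 of the partition algorithm above.

```python
def partition(a, left, right):
    """Partition a[left..right] around pivot a[left]; return the pivot index."""
    pivot = a[left]
    p = left                            # current position of the pivot
    for r in range(left + 1, right + 1):
        if a[r] < pivot:
            a[p] = a[r]                 # small element takes the pivot's slot
            a[r] = a[p + 1]             # element just after the pivot moves to r
            a[p + 1] = pivot            # pivot shifts one cell to the right
            p += 1
    return p


def quick_sort(a, left=0, right=None):
    """Sort a[left..right] in place in ascending order and return a."""
    if right is None:
        right = len(a) - 1
    if left < right:
        p = partition(a, left, right)   # Divide
        quick_sort(a, left, p - 1)      # Conquer the left sub array
        quick_sort(a, p + 1, right)     # Conquer the right sub array
    return a
```

Because the pivot is always the first element of the range, an already sorted input leaves the left sub array empty in every call, which is the worst case described in the analysis.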

Divide and Conquer Algorithms Merge Sort

Merge sort is yet another sorting algorithm which works on the Divide and

Conquer design principle.

Merge sort works by dividing the given array into two sub arrays of equal size

The sub arrays are sorted independently using recursion

The sorted sub arrays are then merged to get the solution for the original array

The breaking of the given input array into two sub arrays of equal size is part of the Divide

step. The recursive calls to sort the sub arrays are part of the Conquer step. The merging of

the sub arrays to get the solution for the original array is part of the Combine step.

Divide and Conquer Algorithms Merge Sort

(Contd)

Merge Sort:

To sort the given array a[1..n] in ascending order:

1. Begin

2. Set left = 1, right = n

3. If (left < right) then

3.1 Set m = floor((left + right) / 2)

3.2 Merge Sort a[left..m]

3.3 Merge Sort a[m+1..right]

3.4 Merge a[left..m] and a[m+1..right] into an auxiliary array b

3.5 Copy the elements of b to a[left..right]

4. End

Again the basic operation in Merge sort is comparison, together with copying of elements. The Merge Sort algorithm calls itself recursively. Merge Sort divides the array into sub arrays based on the position of the elements, whereas Quick Sort divides the array into sub arrays based on the value of the elements. Merge Sort requires an auxiliary array to do the merging (Combine step). The merging of two sub arrays, which are already sorted, into an auxiliary array can be done in O(n), where n is the total number of elements in both the sub arrays. This is possible because both the sub arrays are sorted.

Worst Case Analysis of Merge Sort: Step 3.2 and Step 3.3 are recursive calls to Merge Sort with a smaller problem size. Step 3.4 is the merging step, which takes O(n) comparisons. We first need to identify the recurrence relation for this algorithm. Let T(n) denote the total number of basic operations performed by Merge Sort to sort an array of n elements.

T(n) = 2T(n/2) + n, if n > 1

T(n) = 0, if n ≤ 1

Unrolling the recurrence k times gives T(n) = 2^k T(n/2^k) + kn; with n/2^k = 1, i.e. k = log(n), this gives T(n) = n log(n) = O(n log n). Hence the Worst Case Complexity of Merge Sort is O(n log n).
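
A short Python sketch of Merge Sort follows. It is an illustration under stated assumptions: the function names merge and merge_sort are made up here, and for brevity the sketch returns a new sorted list instead of copying the auxiliary array b back into a[left..right] as in step 3.5.

```python
def merge(left_part, right_part):
    """Merge two already sorted lists into one sorted list b in O(n)."""
    b = []
    i = j = 0
    while i < len(left_part) and j < len(right_part):
        if left_part[i] <= right_part[j]:
            b.append(left_part[i])
            i += 1
        else:
            b.append(right_part[j])
            j += 1
    b.extend(left_part[i:])     # at most one of these two
    b.extend(right_part[j:])    # slices is non-empty
    return b


def merge_sort(a):
    """Return a new list with the elements of a in ascending order."""
    if len(a) <= 1:
        return list(a)
    m = len(a) // 2                                  # Divide by position
    return merge(merge_sort(a[:m]), merge_sort(a[m:]))  # Conquer, then Combine
```

The split depends only on positions, never on element values, so the recursion depth is about log n for every input; this is why the worst case stays at O(n log n).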

Divide and Conquer Algorithms Binary Search

Binary Search works on the Divide and Conquer Strategy

Binary Search takes a sorted array as the input

It works by comparing the target (search key) with the middle element of the

array and terminates if it is the same, else it divides the array into two sub

arrays and continues the search in left (right) sub array if the target is less

(greater) than the middle element of the array.

Reducing the problem size by half is part of the Divide step in Binary Search and searching

in this reduced problem space is part of the Conquer step in Binary Search. There is no

Combine step in Binary Search.

Divide and Conquer Algorithms Binary Search

(Contd)

Binary Search:

To find which element (if any) of the given array a[1..n] equals the target element:

1. Begin

2. Set left = 1, right = n

3. While (left ≤ right) do

3.1 m = floor((left + right) / 2)

3.2 If (target = a[m]) then End with output as m

3.3 If (target < a[m]) then right = m-1

3.4 If (target > a[m]) then left = m+1

4. End with output as none

The basic operation here is comparison.

Worst Case Analysis: Steps 3.2 to 3.4 each perform 1 comparison, and these steps execute once per iteration of the while loop. Each iteration reduces the problem size by half, i.e. to n/2, n/4, n/8, …. In the worst case the loop continues until the search range has shrunk to a single element, which happens after about log n iterations (at most floor(log n) + 1, with the logarithm taken to base 2). The maximum number of comparisons in Binary Search is therefore about 3 * log n. Hence the worst case complexity of Binary search is O(log n).
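
The binary search loop can be sketched in Python as below. The function name binary_search and the iteration counter are assumptions added for illustration; indices are 0-based and the input list must already be sorted.

```python
def binary_search(a, target):
    """Search the sorted list a for target.

    Returns (index, iterations), where index is None when target is absent.
    The iteration counter is an illustrative addition.
    """
    left, right = 0, len(a) - 1
    iterations = 0
    while left <= right:
        iterations += 1
        m = (left + right) // 2
        if target == a[m]:
            return m, iterations
        if target < a[m]:
            right = m - 1       # continue in the left half
        else:
            left = m + 1        # continue in the right half
    return None, iterations
```

For a 16-element list such as list(range(0, 32, 2)), searching for the absent value 31 takes 5 iterations, which equals floor(log 16) + 1 with the logarithm taken to base 2.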

Decrease and Conquer Algorithms

Decrease and Conquer design algorithm technique exploits the relationship

between a solution for the given instance of the problem and a solution for a

smaller instance of the same problem. Based on this relationship the problem

can be solved using the top-down or bottom-up approach

There are different variants of Decrease and Conquer design strategies

Decrease by a constant

Decrease by a constant factor

Variable size decrease

Many a time we can establish a relationship between the solution for the problem of a given size and the solution of the same problem with a smaller size, for example a^n = a^(n-1) * a. Such a relationship helps us to solve the problem easily. The Decrease and Conquer algorithm design technique is based on exploiting such relationships. The decrease in the problem size can be by a constant in each iteration, as in the case of the power example mentioned above, or it can be a decrease by a constant factor in each iteration, for example a^n = (a^(n/2))^2 (the constant factor here is 2). There can also be a variable size decrease in the problem size, as in the case of Euclid's algorithm to compute the GCD: GCD(a, b) = GCD(b, a mod b).

We will analyze the Insertion Sort which works on the design principle of Decrease and

Conquer.
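
The three variants of Decrease and Conquer mentioned above can be illustrated with short Python functions. The function names are assumptions made for this sketch, n is assumed to be a non-negative integer, and the handling of odd exponents in the halving version (one extra multiplication by a) is an addition that the slide does not spell out.

```python
def power_decrease_by_one(a, n):
    """Decrease by a constant: a^n = a^(n-1) * a, computed bottom-up."""
    result = 1
    for _ in range(n):
        result *= a
    return result


def power_decrease_by_half(a, n):
    """Decrease by a constant factor: a^n = (a^(n/2))^2.

    For odd n the result is multiplied once more by a (assumption
    added here, since n/2 is rounded down).
    """
    if n == 0:
        return 1
    half = power_decrease_by_half(a, n // 2)
    return half * half if n % 2 == 0 else half * half * a


def gcd(a, b):
    """Variable size decrease: GCD(a, b) = GCD(b, a mod b)."""
    while b != 0:
        a, b = b, a % b
    return a
```

The first function performs n multiplications, whereas the second needs only about log n recursive calls, which shows how much the size of the decrease affects the running time.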

Decrease and Conquer Algorithms Insertion Sort

Insertion sort is a sorting algorithm which works on the algorithm design

principle of Decrease and Conquer

Insertion sort works by decreasing the problem size by a constant in each

iteration and exploits the relationship between the solution for the problem of

smaller size and that of the original problem

Insertion Sort is the sorting technique which we normally use in the cards

game

Insertion sort works on the Decrease by One technique. In each iteration the problem size is decreased by one. Suppose a[1..n] is a given array to be sorted. Insertion sort assumes that the smaller problem a[1..n-1] is already sorted and exploits this to put a[n] in place. This is done by scanning a[1..n-1] from the rightmost position, which is n-1, towards the left until a value less than or equal to a[n] is encountered. Once this is found we insert a[n] immediately after that value.

Decrease and Conquer Algorithms Insertion Sort

(Contd)

Insertion Sort:

To sort the given array a[1..n] in ascending order:

1. Begin

2. For i = 2 to n do

2.1 Set v = a[i], j = i - 1

2.2 While (j ≥ 1) and (v < a[j]) do

2.2.1 a[j+1] = a[j], j = j - 1

2.3 a[j+1] = v

3. End

The basic operations here again are comparison and movement of elements. The analysis of Insertion sort is as follows:

Worst Case Analysis: Step 2.2.1 performs 1 element shift and this step is repeated at most ((i-1) - 1 + 1) = (i-1) times. This is true because j starts at i-1 and goes down until it is 1. The while condition makes 2 comparisons per iteration, so the number of comparisons in the while loop is about 2 * (i-1). So the while loop performs at most 3 * (i-1) basic operations. Step 2.3 does 1 more assignment. So the number of operations performed by the statements inside the for loop is at most 3 * (i-1) + 1 (= 3i - 2), where i varies from 2 to n. So the total number of basic operations performed by insertion sort is at most

3(2+3+4+…+n) - 2(n-1) = 3((n(n+1)/2) - 1) - 2(n-1) = O(n^2).

Hence the worst case complexity of Insertion sort is O(n^2).

Average Case Analysis: The statements inside the for loop perform anywhere from 1 to (3i - 2) basic operations. Taking the midpoint of this range as a rough estimate, the average number of basic operations performed by these statements is (3i - 2 + 1)/2 = (3i - 1)/2. Now i varies from 2 to n. So the average number of basic operations performed by Insertion sort is approximately

(1/2)(3(2+3+4+…+n) - (n-1)) = (1/2)(3((n(n+1)/2) - 1) - (n-1)) = O(n^2).

Hence the average case complexity of Insertion sort is also O(n^2).
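
For illustration, insertion sort can be written in Python as follows. The function name insertion_sort is an assumption, and the indices are shifted to 0-based, so the loop condition j ≥ 1 from the slide becomes j >= 0 here.

```python
def insertion_sort(a):
    """Sort the list a in place in ascending order and return it."""
    for i in range(1, len(a)):
        v = a[i]                    # element to insert into the sorted prefix
        j = i - 1
        while j >= 0 and v < a[j]:  # scan left until a value <= v is found
            a[j + 1] = a[j]         # shift the larger element one cell right
            j -= 1
        a[j + 1] = v                # insert v just after that value
    return a
```

On an already sorted input the while loop body never executes, while a reverse sorted input forces the maximum number of shifts in every iteration; this corresponds to the two ends of the range used in the analysis.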

Dynamic Programming

Dynamic Programming is a design principle which is used to solve problems

with overlapping sub problems

It solves the problem by combining the solutions for the sub problems

The difference between Dynamic Programming and Divide and Conquer is that the sub problems in Divide and Conquer are considered to be disjoint and distinct, whereas in Dynamic Programming they are overlapping.

Dynamic Programming first appeared in the context of optimization problems. The term

programming here refers to the tabular method to solve the problem. Fibonacci Series is an

example of a problem which has overlapping sub problems. This can be best solved using

Dynamic Programming when compared to Divide and Conquer strategy.
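
The effect of overlapping sub problems in the Fibonacci series can be seen in the Python sketch below. The function names and the call counter are assumptions made for this illustration, with F(0) = 0 and F(1) = 1 taken as the starting values.

```python
def fib_recursive(n, counter):
    """Plain recursion: the same sub problems are solved again and again.

    counter is a one-element list used to count the number of calls.
    """
    counter[0] += 1
    if n < 2:
        return n
    return fib_recursive(n - 1, counter) + fib_recursive(n - 2, counter)


def fib_table(n):
    """Dynamic Programming: each value is computed once and stored in a table."""
    table = [0, 1] + [0] * max(0, n - 1)
    for i in range(2, n + 1):
        table[i] = table[i - 1] + table[i - 2]
    return table[n]
```

Both functions return 55 for n = 10, but the recursive version makes 177 calls to get there, while the tabular version fills each of its 11 table cells exactly once.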

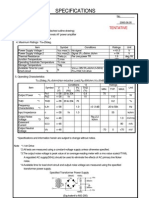

Dynamic Programming Computing a Binomial

Coefficient

The Binomial Coefficient, denoted by C(n,k), is the number of combinations of

k elements from a set of n elements.

The Binomial Coefficient is computed using the following formula

C(n,k) = C(n-1,k-1) + C(n-1,k) for all n > k > 0 and C(n,0) = C(n,n) = 1

Computing the Binomial Coefficient is one of the standard non-optimization problems for which the Dynamic Programming technique is used. The recurrence relation gives us a feel for the overlapping sub problems: C(n-1,k-1) and C(n-1,k) both depend on C(n-2,k-1). To compute C(n,k) we tabulate values of the binomial coefficient as shown in the figure in the following slide. The values in the table are filled using the algorithm which we will introduce shortly.

Dynamic Programming Computing a Binomial

Coefficient (Contd)

           0     1     2     . . .   k-1           k

    0      1

    1      1     1

    2      1     2     1

    .

    k      1                                       1

    .

    n-1    1                         C(n-1,k-1)    C(n-1,k)

    n      1                                       C(n,k)

C(n,k) is computed by filling the above table. We fill row by row from row 0 to n. The

algorithm in the next slide fills the table with the values using Dynamic Programming

technique.

Dynamic Programming Computing a Binomial

Coefficient (Contd)

Computing a Binomial Coefficient:

1. Begin

2. For i = 0 to n do

2.1 For j = 0 to min(i,k) do

2.1.1 If j = 0 or j = i then C[i,j] = 1 else C[i,j] = C[i-1, j-1] + C[i-1, j]

3. Output C[n,k]

4. End

The basic operation in Computing a Binomial Coefficient is addition. Let T(n,k) be the total number of additions done by this algorithm to compute C(n,k).

The entire summation needs to be split into two parts, one covering the triangle comprising the first k+1 rows (refer to the table in the earlier slide) and the other covering the rectangle comprising the remaining (n-k) rows.

It can be shown that T(n,k) = ((k-1)k)/2 + k(n-k) = O(nk).
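
The table-filling algorithm can be sketched in Python as follows. The function name binomial and the additions counter are assumptions added for illustration, and the sketch assumes 0 ≤ k ≤ n. The boundary test uses j == i because C(i,i) = 1.

```python
def binomial(n, k):
    """Compute C(n, k) by filling the table row by row.

    Returns (C(n, k), additions), where additions counts how many
    times the recurrence C(i-1, j-1) + C(i-1, j) was evaluated.
    Assumes 0 <= k <= n.
    """
    C = [[0] * (k + 1) for _ in range(n + 1)]
    additions = 0
    for i in range(n + 1):
        for j in range(min(i, k) + 1):
            if j == 0 or j == i:
                C[i][j] = 1                              # boundary values
            else:
                C[i][j] = C[i - 1][j - 1] + C[i - 1][j]  # recurrence
                additions += 1
    return C[n][k], additions
```

For n = 5 and k = 2 the function returns (10, 7), and 7 agrees with ((k-1)k)/2 + k(n-k) = 1 + 6.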

Summary

Algorithm Design Methodologies

Studied the various algorithm design methodologies: Brute Force, Greedy approach, Divide and Conquer, Decrease and Conquer and Dynamic Programming

Analysis of well known algorithms

Analyzed certain well known algorithms which work on one of the above mentioned design principles


- Opencv TutorialsDocument349 pagesOpencv TutorialsMartin Carames AbenteNo ratings yet

- Algorithm & ComplexitiesDocument28 pagesAlgorithm & ComplexitiesWan Azra Balqis Binti Che Mohd ZainudinNo ratings yet

- Intro Notes on Design and Analysis of AlgorithmsDocument25 pagesIntro Notes on Design and Analysis of AlgorithmsrocksisNo ratings yet

- Design and Analysis of AlgorithmDocument33 pagesDesign and Analysis of AlgorithmajNo ratings yet

- Chapter 2 - Elementary Searching and Sorting AlgorithmsDocument25 pagesChapter 2 - Elementary Searching and Sorting Algorithmsmishamoamanuel574No ratings yet

- K. J. Somaiya College of Engineering, Mumbai-77Document7 pagesK. J. Somaiya College of Engineering, Mumbai-77ARNAB DENo ratings yet

- CSA Lab 4Document6 pagesCSA Lab 4vol damNo ratings yet

- Searching and SortingDocument119 pagesSearching and SortingRajkumar MurugesanNo ratings yet

- Date Symbol Open High Low Close Action Moving AverageDocument3 pagesDate Symbol Open High Low Close Action Moving AverageBalaji JadhavNo ratings yet

- Forex Trading StrategiesDocument30 pagesForex Trading StrategiesKali GallardoNo ratings yet

- Nifty500 CompaniesDocument1 pageNifty500 CompaniesBalaji JadhavNo ratings yet

- Trailing SLDocument1 pageTrailing SLBalaji JadhavNo ratings yet

- Down Up Sell Buy Stop Loss Buy TargetDocument2 pagesDown Up Sell Buy Stop Loss Buy TargetBalaji JadhavNo ratings yet

- IntervieweQuestion SelfDocument4 pagesIntervieweQuestion SelfBalaji JadhavNo ratings yet

- Nifty Jan 18 Futures OrdersDocument1 pageNifty Jan 18 Futures OrdersBalaji JadhavNo ratings yet

- AgsDocument39 pagesAgsBalaji JadhavNo ratings yet

- Risk RewardDocument1 pageRisk RewardBalaji JadhavNo ratings yet

- Forex Trading StrategiesDocument30 pagesForex Trading StrategiesKali GallardoNo ratings yet

- Module2 1Document84 pagesModule2 1Kr Prajapat100% (1)

- Zenqa Question PaperDocument5 pagesZenqa Question PaperBalaji JadhavNo ratings yet

- AOA PreludeDocument6 pagesAOA Prelude9chand3No ratings yet

- AOA Analysis of Well Known AlgorithmsDocument29 pagesAOA Analysis of Well Known AlgorithmsBalaji JadhavNo ratings yet

- Program TestDocument2 pagesProgram TestBalaji JadhavNo ratings yet

- UK Tier1 General Visa Holder Seeks .NET Developer RoleDocument6 pagesUK Tier1 General Visa Holder Seeks .NET Developer RoleBalaji JadhavNo ratings yet

- Fresher Dotnet Resume Model 6 NetDocument2 pagesFresher Dotnet Resume Model 6 NetBalaji JadhavNo ratings yet

- STC Day Trading PlanDocument19 pagesSTC Day Trading Planyraion100% (1)

- Infosys ExperienceDocument2 pagesInfosys ExperienceBalaji JadhavNo ratings yet

- Interview Questions Topic Wise PDFDocument7 pagesInterview Questions Topic Wise PDFBalaji KommaNo ratings yet

- 8M-BIT SERIAL FLASH MEMORY WITH 4KB SECTORS AND DUAL I/O SPIDocument50 pages8M-BIT SERIAL FLASH MEMORY WITH 4KB SECTORS AND DUAL I/O SPImartin sembinelliNo ratings yet

- Poll Day Monitoring SystemDocument40 pagesPoll Day Monitoring Systemravikant143No ratings yet

- SketchUp 2016 HelpDocument175 pagesSketchUp 2016 Helpdimtcho jacksonNo ratings yet

- Network QuestionsDocument4 pagesNetwork QuestionsmicuNo ratings yet

- 1000 Ways To Pack The Bin PDFDocument50 pages1000 Ways To Pack The Bin PDFafsarNo ratings yet

- Final PPT Dab301Document17 pagesFinal PPT Dab301KARISHMA PRADHANNo ratings yet

- FOX505 Product Manual 1KHW001973 Ed02dDocument139 pagesFOX505 Product Manual 1KHW001973 Ed02dSamuel Freire Corrêa100% (1)

- VLOG 4: Subtraction of Decimals (Flow)Document3 pagesVLOG 4: Subtraction of Decimals (Flow)Earl CalingacionNo ratings yet

- FCI ST100 Series Thermal Mass Flow MetersDocument20 pagesFCI ST100 Series Thermal Mass Flow Metersivan8villegas8buschNo ratings yet

- AIAG VDA DFMEA TrainingDocument68 pagesAIAG VDA DFMEA TrainingAtul SURVE100% (2)

- What Is New in PTV Vissim/Viswalk 11Document20 pagesWhat Is New in PTV Vissim/Viswalk 11Billy BrosNo ratings yet

- Bangladesh Army: User Id: LezlvpDocument1 pageBangladesh Army: User Id: LezlvpBulbul AhmedNo ratings yet

- Age CalculationDocument4 pagesAge CalculationMuhammad Hafeez ShaikhNo ratings yet

- NSPFEM2D: A Lightweight 2D Node-Based Smoothed Particle Finite Element Method Code For Modeling Large DeformationDocument16 pagesNSPFEM2D: A Lightweight 2D Node-Based Smoothed Particle Finite Element Method Code For Modeling Large DeformationShu-Gang AiNo ratings yet

- Perkin Elmer Icp Oes Winlab User GuideDocument569 pagesPerkin Elmer Icp Oes Winlab User GuideLuz Contreras50% (2)

- (M8S2-POWERPOINT) - Data Control Language (DCL)Document24 pages(M8S2-POWERPOINT) - Data Control Language (DCL)Yael CabralesNo ratings yet

- Belkin Router Manual PDFDocument120 pagesBelkin Router Manual PDFChrisKelleherNo ratings yet

- Tracking, Track Parcels, Packages, Shipments - DHL Express TrackingDocument2 pagesTracking, Track Parcels, Packages, Shipments - DHL Express TrackingJosue GalindoNo ratings yet

- Hackerrank Phase 1 Functional Programming PDFDocument25 pagesHackerrank Phase 1 Functional Programming PDFPawan NaniNo ratings yet

- Some New Fixed Point Theorems For Generalized Weak Contraction in Partially Ordered Metric SpacesDocument9 pagesSome New Fixed Point Theorems For Generalized Weak Contraction in Partially Ordered Metric SpacesNaveen GulatiNo ratings yet

- Sarvesh PresentationDocument12 pagesSarvesh PresentationSarvesh SoniNo ratings yet

- Business Communication TodayDocument39 pagesBusiness Communication TodaymzNo ratings yet

- AZ 301 StarWarDocument188 pagesAZ 301 StarWarranjeetsingh2908No ratings yet

- List of Console Commands - Dota 2 WikiDocument82 pagesList of Console Commands - Dota 2 WikiOscar Taype Cayllahua50% (2)

- Smart Mirror: A Reflective Interface To Maximize ProductivityDocument6 pagesSmart Mirror: A Reflective Interface To Maximize Productivityعلو الدوريNo ratings yet

- Barbering Course DesignDocument94 pagesBarbering Course DesignNovalyn PaguilaNo ratings yet

- User Manual For PileGroupDocument120 pagesUser Manual For PileGroupmyplaxisNo ratings yet

- Der DirectoryDocument262 pagesDer DirectoryBenNo ratings yet

- What are the cousins of the compilerDocument6 pagesWhat are the cousins of the compilerManinblack ManNo ratings yet