
International Journal of Computer Applications Technology and Research

Volume 3 Issue 9, 592 - 594, 2014

www.ijcat.com 592

An Evaluation of Two-Step Techniques for Positive-Unlabeled Learning in Text Classification

Azam Kaboutari

Computer Department

Islamic Azad University,

Shabestar Branch

Shabestar, Iran

Jamshid Bagherzadeh

Computer Department

Urmia University

Urmia, Iran

Fatemeh Kheradmand

Biochemistry Department

Urmia University of Medical Sciences

Urmia, Iran

Abstract: Positive-unlabeled (PU) learning is a semi-supervised learning problem in which the aim is to build an accurate binary classifier without collecting negative examples for training. The two-step approach is a solution to the PU learning problem that consists of two steps: (1) identifying a set of reliable negative documents, and (2) building a classifier iteratively. In this paper we evaluate five combinations of techniques for the two-step strategy. We found that using the Rocchio method in step 1 and the Expectation-Maximization method in step 2 was the most effective combination in our experiments.

Keywords: PU Learning; positive-unlabeled learning; one-class classification; text classification; partially supervised learning

1. INTRODUCTION

In recent years, the traditional division of machine learning tasks into supervised and unsupervised categories has blurred, and new types of learning problems have arisen from real-world applications. One of these partially supervised learning problems is learning from positive and unlabeled examples, called Positive-Unlabeled learning or PU learning [2]. PU learning assumes two-class classification, but there are no labeled negative examples for training. The training data consist only of a small set of labeled positive examples and a large set of unlabeled examples. In this paper the problem is considered in the context of text classification and Web page classification.

The PU learning problem occurs frequently in Web and text retrieval applications, because the user is often looking for documents related to a particular subject. In such applications, collecting some positive documents from the Web or any other source is relatively easy. Collecting negative training documents, however, requires strenuous effort because (1) negative training examples must uniformly represent the universal set, excluding the positive class, and (2) manually collected negative training documents could be biased by unintentional human prejudice, which could be detrimental to classification accuracy [6]. PU learning removes the need to collect negative training examples manually.

In the PU learning problem, learning is done from a set of positive examples and a collection of unlabeled examples. The unlabeled set is a random sample of the universal set in which the class of each sample is unknown and may be positive or negative. On the Web, random sampling can be done directly from the Internet or from most databases, warehouses, and search engine databases (e.g., DMOZ; see footnote 1).

Two kinds of solutions have been proposed to build PU classifiers: the two-step approach and the direct approach. In this paper, we review some techniques that have been proposed for step 1 and step 2 of the two-step approach and evaluate their performance on a dataset that we collected for identifying diabetes and non-diabetes Web pages. We find that using the Rocchio method in step 1 and the Expectation-Maximization method in step 2 seems particularly promising for PU learning.

Footnote 1: http://www.dmoz.org/

The next section provides an overview of PU learning and describes the PU learning techniques considered in the evaluation. The evaluation is presented in Section 3. The paper concludes with a summary and some proposals for further research in Section 4.

2. POSITIVE-UNLABELED LEARNING

PU learning includes a collection of techniques for training a binary classifier on positive and unlabeled examples only. Traditional binary classifiers for text or Web pages require laborious preprocessing to collect and label positive and negative training examples. In text classification, the labeling is typically performed manually by reading the documents, which is time-consuming and labor-intensive. PU learning does not need full supervision and is therefore able to reduce the labeling effort.

Two sets of examples are available for training in PU learning: the positive set P and an unlabeled set U. The set U contains both positive and negative examples, but the labels of these examples are not specified. The aim is to build an accurate binary classifier without the need to collect negative examples [2].

To build a PU classifier, two kinds of approaches have been proposed: the two-step approach, illustrated in Figure 1, and the direct approach. As its name indicates, the two-step approach has two steps for learning: (1) extracting a subset of documents from the unlabeled set as reliable negatives (RN), and (2) applying a classification algorithm iteratively, building a series of classifiers and then selecting a good one [2].

Two-step approaches include S-EM [3], PEBL [6], Roc-SVM [7] and CR-SVM [8]. Direct approaches such as biased-SVM [4] and Probability Estimation [5] have also been proposed to solve the problem. In this paper, we consider some two-step approaches for review and evaluation.


Figure 1. Two-step approach in PU learning [2].

2.1 Techniques for Step 1

For extracting a subset of documents from the unlabeled set as reliable negatives, five techniques have been proposed:

2.1.1 Spy

In this technique, a small percentage of the positive documents in P are sampled randomly and put in U to act as spies, producing new sets Ps and Us, respectively. The naive Bayesian (NB) algorithm is then run using the set Ps as positive and the set Us as negative. The resulting NB classifier is applied to assign a probabilistic class label Pr(+1|d) to each document d in Us. The probabilistic labels of the spies are used to decide which documents are most likely to be negative. S-EM [3] uses the Spy technique.
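The Spy selection logic can be sketched as follows. This is a minimal illustration under stated assumptions, not the S-EM or LPU code: the spy ratio, the noise level used to set the threshold, and the rule "unlabeled documents scoring below the threshold are reliable negatives" are assumptions in the spirit of [3]. The function names and the `train_and_score` callable are hypothetical, and the toy scorer merely stands in for the NB classifier.

```python
import random

def spy_reliable_negatives(P, U, train_and_score, spy_ratio=0.15, noise=0.05, seed=0):
    """Return (RN, threshold). train_and_score(pos, neg) is a hypothetical
    callable that trains a probabilistic classifier and returns d -> Pr(+1|d)."""
    rng = random.Random(seed)
    n_spies = max(1, int(round(spy_ratio * len(P))))
    spy_idx = set(rng.sample(range(len(P)), n_spies))
    spies = [d for i, d in enumerate(P) if i in spy_idx]
    Ps = [d for i, d in enumerate(P) if i not in spy_idx]   # positives without spies
    Us = list(U) + spies                                    # unlabeled set plus spies

    prob_pos = train_and_score(Ps, Us)

    # Assumed thresholding rule: only a `noise` fraction of spies may fall below t.
    spy_probs = sorted(prob_pos(d) for d in spies)
    t = spy_probs[int(noise * len(spy_probs))]

    # Unlabeled documents that look less positive than the spies become RN.
    RN = [d for d in U if prob_pos(d) < t]
    return RN, t

def toy_train_and_score(pos, neg):
    # Stand-in scorer (NOT naive Bayes): share of a document's words seen in pos.
    pos_vocab = {w for d in pos for w in d}
    return lambda d: sum(w in pos_vocab for w in d) / len(d)

P = [["insulin", "glucose"], ["glucose", "diabetes"],
     ["diabetes", "insulin"], ["insulin", "diabetes", "glucose"]]
U = [["football", "league"], ["diabetes", "insulin"], ["car", "engine"]]

RN, t = spy_reliable_negatives(P, U, toy_train_and_score, spy_ratio=0.25)
print(RN, t)
```

In this toy run the hidden positive document in U scores as high as the spy and is therefore kept out of RN.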

2.1.2 Cosine-Rocchio

This technique first computes the similarity of each unlabeled document in U to the positive documents in P using the cosine measure and extracts a set of potential negatives PN from U. The algorithm then applies the Rocchio classification method to build a classifier f using P and PN. Those documents in U that are classified as negative by f are regarded as the final reliable negatives and stored in the set RN. This method is used in [8].

2.1.3 1DNF

This technique first finds the set W of positive words, that is, words that occur in the positive documents more frequently than in the unlabeled set. Those documents from the unlabeled set that do not contain any positive word in W are then extracted as reliable negatives and used to build the set RN. This method is employed in PEBL [6].
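A minimal sketch of the 1DNF idea is given below. It assumes that "more frequently" is measured as the fraction of documents in each set that contain the word; the function name and the toy documents are hypothetical.

```python
def one_dnf_reliable_negatives(P, U):
    """1DNF sketch. P and U are lists of token lists; returns (W, RN)."""
    def doc_fraction(docs):
        # Fraction of documents in `docs` that contain each word.
        counts = {}
        for d in docs:
            for w in set(d):
                counts[w] = counts.get(w, 0) + 1
        return {w: c / len(docs) for w, c in counts.items()}

    fp, fu = doc_fraction(P), doc_fraction(U)
    # Positive words: relatively more frequent in P than in U.
    W = {w for w, f in fp.items() if f > fu.get(w, 0.0)}
    # Reliable negatives: unlabeled documents containing no positive word.
    RN = [d for d in U if not W.intersection(d)]
    return W, RN

P = [["diabetes", "insulin", "the"], ["diabetes", "glucose", "the"]]
U = [["the", "football"], ["the", "diabetes", "diet"], ["the", "engine"]]

W, RN = one_dnf_reliable_negatives(P, U)
print(sorted(W), RN)
```

Because a single positive word is enough to exclude a document, the resulting RN tends to be small, which is consistent with the remark in Section 2.2 about 1DNF.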

2.1.4 Naive Bayesian

This technique builds an NB classifier using the set P as positive and the set U as negative. The NB classifier is then applied to classify each document in U. Those documents that are classified as negative are denoted by RN [4].
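The following sketch illustrates this step with a small multinomial naive Bayes written from scratch. The Laplace smoothing, the 0.5 decision threshold, and the function names are assumptions made for illustration and are not taken from LPU.

```python
import math
from collections import Counter

def train_nb(pos_docs, neg_docs):
    """Multinomial naive Bayes with Laplace smoothing; returns d -> Pr(+1|d)."""
    vocab = {w for d in pos_docs + neg_docs for w in d}
    total = len(pos_docs) + len(neg_docs)
    model = {}
    for label, docs in (("+", pos_docs), ("-", neg_docs)):
        counts = Counter(w for d in docs for w in d)
        denom = sum(counts.values()) + len(vocab)
        log_prior = math.log(len(docs) / total)
        log_lik = {w: math.log((counts[w] + 1) / denom) for w in vocab}
        model[label] = (log_prior, log_lik)

    def prob_pos(doc):
        scores = {}
        for label, (log_prior, log_lik) in model.items():
            # Words outside the training vocabulary are ignored.
            scores[label] = log_prior + sum(log_lik[w] for w in doc if w in log_lik)
        m = max(scores.values())                 # subtract max for numerical stability
        e = {k: math.exp(v - m) for k, v in scores.items()}
        return e["+"] / (e["+"] + e["-"])
    return prob_pos

def nb_reliable_negatives(P, U):
    # Treat all of U as negative, then keep the documents NB still calls negative.
    prob_pos = train_nb(P, U)
    return [d for d in U if prob_pos(d) < 0.5]

P = [["diabetes", "insulin"], ["diabetes", "glucose"], ["insulin", "glucose"]]
U = [["football", "league"], ["diabetes", "insulin"],
     ["car", "engine"], ["football", "engine"]]

print(nb_reliable_negatives(P, U))
```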

2.1.5 Rocchio

This technique is the same as the previous one, except that NB is replaced with Rocchio. Roc-SVM [7] uses the Rocchio technique.
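A Rocchio-based Step 1 can be sketched as below. The prototype formula with weights alpha = 16 and beta = 4 and the use of length-normalized term-frequency vectors are assumptions based on the common Rocchio formulation, not details given in this paper; all function names are hypothetical.

```python
import math
from collections import Counter

def unit_tf(doc):
    # Term-frequency vector scaled to unit Euclidean length.
    tf = Counter(doc)
    norm = math.sqrt(sum(v * v for v in tf.values()))
    return {w: v / norm for w, v in tf.items()}

def mean_vector(vectors):
    total = Counter()
    for v in vectors:
        for w, x in v.items():
            total[w] += x
    return {w: x / len(vectors) for w, x in total.items()}

def cosine(a, b):
    dot = sum(x * b.get(w, 0.0) for w, x in a.items())
    na = math.sqrt(sum(x * x for x in a.values()))
    nb = math.sqrt(sum(x * x for x in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def rocchio_reliable_negatives(P, U, alpha=16.0, beta=4.0):
    """Build positive and negative prototypes (U is treated as negative) and
    return the documents in U that are closer to the negative prototype."""
    mp = mean_vector([unit_tf(d) for d in P])
    mu = mean_vector([unit_tf(d) for d in U])
    words = set(mp) | set(mu)
    c_pos = {w: alpha * mp.get(w, 0.0) - beta * mu.get(w, 0.0) for w in words}
    c_neg = {w: alpha * mu.get(w, 0.0) - beta * mp.get(w, 0.0) for w in words}
    return [d for d in U
            if cosine(c_pos, unit_tf(d)) <= cosine(c_neg, unit_tf(d))]

P = [["diabetes", "insulin"], ["diabetes", "glucose"], ["insulin", "glucose"]]
U = [["football", "league"], ["diabetes", "insulin"],
     ["car", "engine"], ["football", "engine"]]

print(rocchio_reliable_negatives(P, U))
```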

2.2 Techniques for Step 2

If the set RN contains mostly negative documents and is sufficiently large, a learning algorithm such as SVM applied to P and RN in this step works very well and is able to build a good classifier. Often, however, only a very small set of negative documents is identified in step 1, especially with the 1DNF technique; in that case a learning algorithm is run iteratively until it converges or some stopping criterion is met [2].

For the iterative learning approach, two techniques have been proposed:

2.2.1 EM-NB

This method is the combination of naive Bayesian classification (NB) and the EM algorithm. The Expectation-Maximization (EM) algorithm is an iterative algorithm for maximum likelihood estimation in problems with missing data [1].

The EM algorithm consists of two steps: the Expectation step, which fills in the missing data, and the Maximization step, which estimates the parameters. The estimated parameters lead to the next iteration of the algorithm. EM converges when its parameters stabilize.

In this case the documents in Q (= U - RN) are regarded as having missing class labels. First, an NB classifier f is constructed from the set P as positive and the set RN as negative. EM then runs iteratively: in the Expectation step, it uses f to assign a probabilistic class label to each document in Q; in the Maximization step, a new NB classifier f is learned from P, RN and Q. The classifier f from the last iteration is the result. This method is used in [3].
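The EM-NB loop described above can be sketched as follows. Several choices here are simplifying assumptions rather than details from the paper or from LPU: the labels of P and RN are kept fixed, Laplace smoothing is used, and convergence is tested on the change in the probabilistic labels of Q instead of on the parameters directly. The function names are hypothetical.

```python
import math
from collections import defaultdict

def fit_nb(weighted_docs, vocab):
    """weighted_docs: list of (tokens, Pr(+1|d)). Returns d -> Pr(+1|d)."""
    mass = {"+": 1.0, "-": 1.0}                       # smoothed class mass
    counts = {"+": defaultdict(float), "-": defaultdict(float)}
    for tokens, p in weighted_docs:
        for label, weight in (("+", p), ("-", 1.0 - p)):
            mass[label] += weight
            for w in tokens:
                counts[label][w] += weight            # fractional word counts
    log_prior, log_lik = {}, {}
    for label in ("+", "-"):
        log_prior[label] = math.log(mass[label] / (mass["+"] + mass["-"]))
        denom = sum(counts[label].values()) + len(vocab)
        log_lik[label] = {w: math.log((counts[label][w] + 1.0) / denom)
                          for w in vocab}

    def prob_pos(tokens):
        s = {c: log_prior[c] + sum(log_lik[c][w] for w in tokens if w in vocab)
             for c in ("+", "-")}
        m = max(s.values())
        e_pos, e_neg = math.exp(s["+"] - m), math.exp(s["-"] - m)
        return e_pos / (e_pos + e_neg)
    return prob_pos

def em_nb(P, RN, Q, max_iter=20, tol=1e-4):
    vocab = {w for d in P + RN + Q for w in d}
    fixed = [(d, 1.0) for d in P] + [(d, 0.0) for d in RN]
    f = fit_nb(fixed, vocab)                          # initial classifier from P and RN
    old = None
    for _ in range(max_iter):
        new = [f(d) for d in Q]                       # E-step: label Q probabilistically
        f = fit_nb(fixed + list(zip(Q, new)), vocab)  # M-step: refit on P, RN and Q
        if old is not None and max(
                (abs(a - b) for a, b in zip(new, old)), default=0.0) < tol:
            break
        old = new
    return f

P = [["diabetes", "insulin"], ["diabetes", "glucose"], ["insulin", "glucose"]]
RN = [["football", "league"], ["car", "engine"]]
Q = [["diabetes", "diet"], ["football", "engine"]]

f = em_nb(P, RN, Q)
print(round(f(["insulin", "diabetes"]), 3), round(f(["football", "car"]), 3))
```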

2.2.2 SVM Based

In this method, SVM is run iteratively using P, RN and Q. In each iteration, a new SVM classifier f is constructed from the set P as positive and the set RN as negative, and f is then applied to classify the documents in Q. The documents in Q that are classified as negative are removed from Q and added to RN. The iteration stops when no document in Q is classified as negative. The final classifier is the result. This method, called I-SVM, is used in [6].

In a similar method used in [7] and [4], after the iterative SVM converges, either the first or the last classifier is selected as the final classifier. This method is called SVM-IS.
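The control flow of the iterative scheme (the I-SVM loop) can be sketched independently of the underlying learner. In the sketch below, `train` is a hypothetical callable, and the word-overlap classifier used in the demo is a deliberately simple stand-in, not an SVM. Only the loop over P, RN and Q follows the description above.

```python
from collections import Counter

def iterative_rn_expansion(P, RN, Q, train):
    """train(pos, neg) returns a function d -> True if d is classified positive.
    Returns (final classifier, final RN, remaining Q)."""
    RN, Q = list(RN), list(Q)
    while True:
        f = train(P, RN)                          # classifier from P and current RN
        newly_negative = [d for d in Q if not f(d)]
        if not newly_negative:                    # stop: nothing in Q is negative
            return f, RN, Q
        RN.extend(newly_negative)                 # move negatives from Q to RN
        Q = [d for d in Q if f(d)]

def train_overlap(pos, neg):
    # Stand-in learner (NOT an SVM): compares normalized word overlap per class.
    pos_counts = Counter(w for d in pos for w in d)
    neg_counts = Counter(w for d in neg for w in d)
    n_pos, n_neg = sum(pos_counts.values()), sum(neg_counts.values())

    def is_positive(doc):
        sp = sum(pos_counts[w] for w in doc) / n_pos
        sn = sum(neg_counts[w] for w in doc) / n_neg
        return sp >= sn
    return is_positive

P = [["diabetes", "insulin"], ["diabetes", "glucose"]]
RN = [["football", "league"]]
Q = [["football", "engine"], ["engine", "car"], ["insulin", "glucose"]]

f, RN_final, Q_final = iterative_rn_expansion(P, RN, Q, train_overlap)
print(RN_final, Q_final)
```

In this toy run the document ["engine", "car"] is only recognized as negative in the second iteration, after ["football", "engine"] has been added to RN, which is the behavior the iterative step is meant to provide. Each pass either shrinks Q or stops, so the loop always terminates.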

3. EVALUATION

3.1 Data Set

We take the Internet as the universal set in our experiments. To collect random samples of Web pages as the unlabeled set U, we used DMOZ, a free, open Web directory containing millions of Web pages. To construct an unbiased sample of the Internet, random sampling of a search engine database such as DMOZ is sufficient [6].

We randomly selected 5,700 pages from DMOZ to collect unbiased unlabeled data. We also manually collected 539 Web pages about diabetes as the positive set P to construct a classifier for distinguishing diabetes and non-diabetes Web pages. For evaluating the classifier, we manually collected 2,500 non-diabetes pages and 600 diabetes pages. (We collected negative data only for evaluating the classifier.)

3.2 Performance Measure

Since the F-score is a good performance measure for binary classification, we report the results of our experiments with this measure. The F-score is the harmonic mean of precision and recall. Precision is defined as the number of correct positive predictions divided by the number of positive predictions. Recall is defined as the number of correct positive predictions divided by the number of positive examples.
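As a worked illustration of these definitions, the short sketch below computes precision, recall and the F-score from true and predicted labels. The function name and the label encoding (1 for positive, 0 for negative) are assumptions made for the example.

```python
def precision_recall_f(y_true, y_pred):
    """Labels are 1 (positive) or 0 (negative)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    predicted_pos = sum(1 for p in y_pred if p == 1)
    actual_pos = sum(1 for t in y_true if t == 1)
    precision = tp / predicted_pos if predicted_pos else 0.0
    recall = tp / actual_pos if actual_pos else 0.0
    # Harmonic mean of precision and recall.
    f = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f

y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
print(precision_recall_f(y_true, y_pred))
```

Here two of the three positive predictions are correct (precision 2/3) and two of the four positive examples are found (recall 1/2), giving an F-score of 4/7.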

3.3 Experimental Results

We present the experimental results in this subsection. We extracted features from the plain text of the content of the Web pages and then performed stopword removal, lowercasing and stemming. This yielded a set of about 176,000 words. We used document frequency (DF), one of the simple unsupervised feature selection methods, for vocabulary and vector dimensionality reduction [9].


The document frequency of a word is the number of documents in the training set, in our case P ∪ U, that contain the word. We then create a ranked list of features and return the i highest-ranked features as the selected features, where i is in {200, 400, 600, 1000, 2000, 3000, 5000, 10000}.
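Document-frequency feature selection can be sketched in a few lines. The function name, the alphabetical tie-breaking rule and the toy documents are assumptions made for illustration.

```python
from collections import Counter

def select_by_document_frequency(docs, k):
    """Return the k words that occur in the largest number of documents."""
    df = Counter()
    for d in docs:
        df.update(set(d))            # count each word at most once per document
    # Rank by descending document frequency; ties broken alphabetically (assumed).
    ranked = sorted(df, key=lambda w: (-df[w], w))
    return ranked[:k]

docs = [["diabetes", "insulin", "the"],
        ["the", "football"],
        ["the", "diabetes", "diet", "diet"]]
print(select_by_document_frequency(docs, 3))
```

Note that "diet" appears twice in one document but still has a document frequency of 1.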

As discussed in Section 2, we studied five techniques for Step 1 and three techniques for Step 2 (EM-NB, I-SVM and SVM-IS). Clearly, each technique for the first step can be combined with each technique for the second step. In this paper, we empirically evaluate only the five combinations of Step 1 and Step 2 methods that are available in LPU (see footnote 2), a text learning or classification system that learns from a set of positive documents and a set of unlabeled documents.

These combinations are S-SVM, which is Spy combined with SVM-IS; Roc-SVM, which is Rocchio combined with SVM-IS; Roc-EM, which is Rocchio combined with EM-NB; NB-SVM, which is Naive Bayesian combined with SVM-IS; and NB-EM, which is Naive Bayesian combined with EM-NB.

In our experiments, each document is represented by a vector of the selected features, using a bag-of-words representation and the term frequency (TF) weighting method, in which the value of each feature in a document is the number of times (frequency count) that the feature (word) appears in the document. When running SVM in Step 2, the feature counts are automatically converted to normalized tf-idf values by LPU. The F-scores are shown in Figure 2.
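The TF bag-of-words representation can be illustrated as follows. The function name and the feature list are hypothetical, and the tf-idf conversion performed internally by LPU is not reproduced here.

```python
from collections import Counter

def tf_vector(doc, features):
    """Map a tokenized document to raw term counts over the selected features."""
    counts = Counter(doc)
    return [counts[w] for w in features]   # words outside `features` are dropped

features = ["the", "diabetes", "diet"]
print(tf_vector(["the", "diabetes", "diet", "diet", "car"], features))
```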

Figure 2. Results of LPU using DF feature selection method.

As Figure 2 shows, very poor results are obtained with S-SVM, in which Spy is used in Step 1 and SVM-IS is used in Step 2. Since we obtain better results with the other combinations that use SVM-IS in Step 2, we conclude that Spy is not a good technique for Step 1 in our experiments. Using NB in Step 1 improves the results, and the best results in our experiments are obtained when using the Rocchio technique in Step 1. Figure 2 also shows that using EM-NB instead of SVM-IS in Step 2 can improve the results significantly.

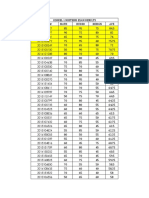

The average F-score for each combination of Step 1 and Step 2 techniques is shown in Table 1. As seen in Table 1 and Figure 2, Roc-EM, in which the Rocchio technique is used in Step 1 and EM-NB is used in Step 2, is the best combination in our experiments.

Footnote 2: http://www.cs.uic.edu/~liub/LPU/LPU-download.html

Table 1. Comparison of the results of the two-step approaches.

| | S-SVM | Roc-SVM | Roc-EM | NB-SVM | NB-EM |
|---|---|---|---|---|---|
| Average F-score | 0.0489 | 0.3191 | 0.9332 | 0.0698 | 0.2713 |

4. CONCLUSIONS

In this paper, we discussed some methods for learning a classifier from positive and unlabeled documents using the two-step strategy. We evaluated the five combinations of Step 1 and Step 2 techniques that are available in the LPU system to compare the performance of each combination, which enables us to draw some important conclusions. Our results show that, in general, the Rocchio technique in Step 1 outperforms the other techniques. We also found that using EM for the second step performs better than SVM. Finally, we observed that the best combination for LPU in our experiments is Roc-EM, which is Rocchio combined with EM-NB.

In future studies, we plan to evaluate other combinations of Step 1 and Step 2 techniques for Positive-Unlabeled learning.

5. REFERENCES

[1] A. Dempster, N. Laird and D. Rubin, Maximum likelihood from incomplete data via the EM algorithm, Journal of the Royal Statistical Society. Series B (Methodological), 1977, 39(1): pp. 1-38.

[2] B. Liu and W. Lee, Partially supervised learning, In Web Data Mining, 2nd ed., Springer Berlin Heidelberg, 2011, pp. 171-208.

[3] B. Liu, W. Lee, P. Yu and X. Li, Partially supervised classification of text documents, In Proceedings of International Conference on Machine Learning (ICML-2002), 2002.

[4] B. Liu, Y. Dai, X. Li, W. Lee and P. Yu, Building text classifiers using positive and unlabeled examples, In Proceedings of IEEE International Conference on Data Mining (ICDM-2003), 2003.

[5] C. Elkan and K. Noto, Learning classifiers from only positive and unlabeled data, In Proceedings of ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD-2008), 2008.

[6] H. Yu, J. Han and K. Chang, PEBL: Web page classification without negative examples, IEEE Transactions on Knowledge and Data Engineering, vol. 16, no. 1, pp. 70-81, Jan. 2004.

[7] X. Li and B. Liu, Learning to classify texts using positive and unlabeled data, In Proceedings of International Joint Conference on Artificial Intelligence (IJCAI-2003), 2003.

[8] X. Li, B. Liu and S. Ng, Negative training data can be harmful to text classification, In Proceedings of Conference on Empirical Methods in Natural Language Processing (EMNLP-2010), 2010.

[9] X. Qi and B. Davison, Web page classification: Features and algorithms, ACM Comput. Surv., 41(2): pp. 1-31, 2009.
